Planning and implementing space missions is a long-term process, which is why we’re already talking about successors to the James Webb Space Telescope, itself a Hubble successor that has yet to be launched. Ashley Baldwin, who tracks telescope technologies deployed on the exoplanet hunt, here looks at the prospects not just for WFIRST (Wide-Field InfraRed Survey Telescope) but a recently proposed High-Definition Survey Telescope (HDST) that could be a major factor in studying exoplanet atmospheres in the 2030s. When he is not pursuing amateur astronomy at a very serious level, Dr. Baldwin serves as a consultant psychiatrist at the 5 Boroughs Partnership NHS Trust (Warrington, UK).

by Ashley Baldwin

“It was the best of times, it was the worst of times…” Dickens apart, the future of exoplanet imaging could be about two telescopes rather than two cities. Consider the James Webb Space Telescope (JWST) and the Wide-Field InfraRed Survey Telescope (WFIRST), which, as we shall see, have the power not just to see a long way but also to determine any big telescope future. JWST, or rather its performance, will determine whether there is even to be a big telescope future. The need for a big telescope, and what it should do, are the subject of increasing debate as the next NASA ten-year roadmap, the Decadal Survey for 2020, approaches.

NASA will form Science Definition “focus” groups from the full range of its astrophysics community to determine the shape of this map. The Exoplanet Program Analysis Group (ExoPAG) is a dedicated group of exoplanetary specialists tasked with soliciting and coordinating community input to NASA’s exoplanet exploration programme through missions like Kepler, the Hubble Space Telescope (HST) and, more recently, Spitzer. They have produced an outline of their vision in response to NASA’s request for ideas, which is addressed here alongside a detailed look at some of its central elements, by way of explaining some of the complex features that exoplanet science requires.

Various members of ExoPAG have been involved in the exoplanet arm of JWST and, most recently, in NASA’s dark energy mission, which, with the adoption of the “free” 2.4m NRO mirror and a coronagraph, is increasingly becoming an ad hoc exoplanet mission too. This mission has also been renamed: Wide-Field InfraRed Survey Telescope (WFIRST), a name that will hopefully go down in history! More about that later.

The Decadal Survey and Beyond

As we build towards the turn of the decade, though, the next Decadal Survey looms. This is effectively a road map of NASA’s plans for the coming decade. Never has a decade been as important for exoplanet science if it is to build on Kepler’s enormous legacy. To date, over 4000 “candidate” planets have been identified and await confirmation by other means, such as the radial velocity technique. Recently twelve new planets have been identified in the habitable zones of their parent stars, all of roughly Earth-like size. Why so many now? New, sophisticated software has been developed to automate the screening of the vast number of signals returned by Kepler. This increases the number of potential targets but, more importantly, is more sensitive to the smaller signals of Earth-sized planets.

So what is next? These days NASA can generally afford one “Flagship” mission per decade, and for the 2020s this will be WFIRST. It is not a dedicated exoplanet mission, but as Kepler and ground-based surveys return increasingly exciting data, its exoplanet role is evolving. For the Decadal Survey, the exoplanet community has been asked to develop mission concepts within the funds still available.

Three “Probe” class concepts (costing up to and above current Discovery mission cost caps but less than flagship-class missions) have been mooted, the first of which is a star-shade to accompany WFIRST. This, if you recall, is an external occulting device that blocks out starlight by sitting between the parent star and the telescope, several tens of thousands of kilometres from the telescope, while allowing through the much dimmer light of any accompanying planets and so making characterisation possible. A recent Probe concept, Exo-S, addressed this very issue and proposed either a small, dedicated 1.1m telescope with its own star-shade, or the addition of a star-shade to a pre-existing mission like WFIRST. At that time, the “add on” option wasn’t deemed possible, as WFIRST was to be placed in a geosynchronous orbit where a star-shade could not function.

The ExoPAG committee has recently produced a consensus statement of intent in response to NASA’s request for guidance on an exoplanet roadmap, to be incorporated into NASA’s overall roadmap for the 2020 Decadal Survey. As noted above, this group consists of a mixture of professionals and amateurs (astrophysicists, geophysicists, astronomers, etc.) who advise on all things exoplanet, including strategy and results. It has been asked to create two science definition teams, representing the two schools of exoplanet thinking, to contribute to the survey.

One suggestion involves placing WFIRST at the star-shade-friendly Sun/Earth Lagrange 2 point (L2), about 1.5 million kilometres (932,000 miles) from Earth on the side facing away from the Sun, where the combined gravity of the Sun and Earth lets a spacecraft orbit the Sun in step with Earth with only modest station-keeping. If it happens, this represents a major policy change from the original geosynchronous orbit, and it is very exciting because, unlike the current exoplanet coronagraph on the telescope, a 34m-diameter star-shade could image Earth-mass planets in the habitable zones of Sun-like stars. More on that below.
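As a rough check on that distance, the L2 point of a small body orbiting a much more massive one lies about one Hill radius beyond the small body. The sketch below assumes rounded values for the Earth-Sun distance and mass ratio, and ignores Earth's orbital eccentricity and higher-order terms, so it is only an approximation.

```python
# Approximate distance of the Sun-Earth L2 point using the Hill-sphere
# approximation r ~ a * (m / (3 M)) ** (1/3), valid when m << M.
AU_KM = 1.495978707e8            # mean Earth-Sun distance, km
EARTH_SUN_MASS_RATIO = 3.003e-6  # Earth mass / Sun mass (rounded)
KM_PER_MILE = 1.609344

def l2_distance_km(a_km: float, mass_ratio: float) -> float:
    """Distance from the smaller body to its outer Lagrange point (L2)."""
    return a_km * (mass_ratio / 3.0) ** (1.0 / 3.0)

r_km = l2_distance_km(AU_KM, EARTH_SUN_MASS_RATIO)
print(f"L2 is about {r_km:,.0f} km ({r_km / KM_PER_MILE:,.0f} miles) from Earth")
```

The result lands close to 1.5 million km, or roughly 930,000 miles.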

WFIRST, at 2.4m, will be limited in how much atmospheric characterisation it can perform, given its relatively small aperture and limited observing time (it is not a dedicated exoplanet mission and still has to do dark energy science). The mission can be expected to locate several thousand planets via conventional transit photometry and microlensing, and possibly even a few new Earth-like planets by combining its results with ESA’s Gaia mission to produce accurate astrometry (precise stellar positions over time, from which planetary masses and orbits can be derived) within 30 light years or so. There has even been a recent suggestion that exoplanet science, or at least the coronagraph, should actually drive the WFIRST mission. That would be a total turnaround if it happens, and a very welcome one.

The second Probe concept is a dedicated transmission spectroscopy telescope. It would be a telescope of around 1.5m with a spectrograph, fine guidance system and mechanical cooler, used to analyse the light of a distant star as it passes through the atmosphere of a transiting exoplanet. There is no image of the planet here, but the spectrum of its atmosphere tells us almost as much as seeing it. The bigger the telescope aperture, the better it can probe smaller planets with thinner atmospheric envelopes. Planets circling M-dwarfs make the best targets, as the planet-to-star size ratio, and hence the signal, is highest for these small stars. The upcoming TESS mission is intended to provide such targets for JWST, although even JWST’s 6.5m aperture will struggle to characterise the atmospheres of all but the largest planets or, if we are lucky, a small number of “super-terrestrial” planets around M-dwarfs. It will be further limited by general astrophysics demand on its time. A Probe telescope would pick up where JWST left off and, although smaller, could compensate by being a dedicated instrument with more observing time.
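To put numbers on why M-dwarf hosts are favoured, the sketch below uses two standard approximations: the transit depth is the fraction of the stellar disc the planet covers, (Rp/Rs)², and the extra absorption from an atmosphere one scale height H thick is roughly 2·Rp·H/Rs². The 8.5 km scale height (roughly Earth's) and the 0.2 solar-radius M dwarf are illustrative assumptions, not figures from the article.

```python
# Transit depth and one-scale-height atmospheric (transmission) signal for an
# Earth-sized planet, comparing a Sun-like host with a small M dwarf.
R_SUN_KM = 695_700.0
R_EARTH_KM = 6_371.0
EARTH_SCALE_HEIGHT_KM = 8.5  # assumed; roughly Earth's atmospheric scale height

def transit_depth(r_planet_km: float, r_star_km: float) -> float:
    """Fraction of starlight blocked by the planet's disc: (Rp/Rs)^2."""
    return (r_planet_km / r_star_km) ** 2

def atmosphere_signal(r_planet_km: float, r_star_km: float, scale_height_km: float) -> float:
    """Approximate extra absorption from an annulus one scale height thick:
    2 * Rp * H / Rs^2 (area of the thin ring divided by pi * Rs^2)."""
    return 2.0 * r_planet_km * scale_height_km / r_star_km ** 2

hosts = [("Sun-like star", R_SUN_KM), ("M dwarf, 0.2 R_sun (assumed)", 0.2 * R_SUN_KM)]
for name, r_star in hosts:
    depth = transit_depth(R_EARTH_KM, r_star)
    atm = atmosphere_signal(R_EARTH_KM, r_star, EARTH_SCALE_HEIGHT_KM)
    print(f"{name}: transit depth {depth * 1e6:.0f} ppm, "
          f"atmospheric signal {atm * 1e6:.2f} ppm")
```

Both quantities scale as 1/Rs², so a star one fifth the Sun's radius boosts the signal about 25-fold, from fractions of a part per million to a few parts per million for the atmosphere.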

The final Probe concept links to WFIRST and Gaia. It would involve a telescope of around 1.5m as part of a mission that, like Gaia, observes many stars on multiple occasions to measure subtle variations in their positions over time, revealing orbiting planets by their gravitational tug on the star. Unlike radial velocity methods, which give only a minimum mass, it can determine true mass and orbital period down to Earth-mass planets around neighbouring stars. A similar concept called NEAT was proposed to ESA but rejected despite being robust; a good summary is available through a Google search.
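The size of the stellar "wobble" such a mission must detect follows from a simple relation: the star's reflex motion in arcseconds is approximately (planet mass / star mass) × (orbit in AU / distance in parsecs). The sketch below uses rounded planet-to-Sun mass ratios and neglects the planet's mass in the denominator, which is a negligible simplification here.

```python
# Astrometric signature of a planet: alpha [arcsec] ~ (m_p / M_star) * (a [AU] / d [pc])
MICROARCSEC_PER_ARCSEC = 1e6
EARTH_SUN = 3.003e-6    # Earth mass in solar masses (rounded)
JUPITER_SUN = 9.546e-4  # Jupiter mass in solar masses (rounded)

def astrometric_signature_uas(m_planet_msun: float, m_star_msun: float,
                              a_au: float, d_pc: float) -> float:
    """Semi-amplitude of the star's reflex motion, in microarcseconds."""
    return (m_planet_msun / m_star_msun) * (a_au / d_pc) * MICROARCSEC_PER_ARCSEC

earth_twin = astrometric_signature_uas(EARTH_SUN, 1.0, 1.0, 10.0)
jupiter_twin = astrometric_signature_uas(JUPITER_SUN, 1.0, 5.2, 10.0)
print(f"Earth twin at 10 pc:   {earth_twin:.2f} microarcsec")
print(f"Jupiter twin at 10 pc: {jupiter_twin:.0f} microarcsec")
```

An Earth twin at 10 parsecs moves its star by only about 0.3 microarcseconds, more than a thousand times less than a Jupiter twin, which is why a dedicated, ultra-precise astrometry mission is needed.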

These parameters are useful in their own right, but more importantly they provide targets for direct imaging telescopes like WFIRST, rather than leaving the telescope to search star systems “blindly” and waste limited time. At present the plan for WFIRST is to image planets already found by radial velocity (RV) to make the most of its search time, but nearby RV planets are largely gas giants and, although important to exoplanetary science, they are not the targets that are going to excite the public or, importantly, Congress.

All of these concepts sit against the backdrop of ESA’s PLATO transit mission and the new generation of ground-based super telescopes, the ELTs (Extremely Large Telescopes). Though limited by atmospheric interference, these will complement space telescopes well, as their huge light-gathering capacity will allow high-resolution spectroscopy of suitable exoplanet targets identified by their space-based peers, especially if also combined with high-quality coronagraphs.

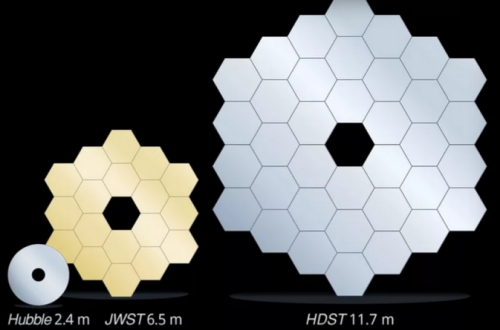

Image: A direct, to-scale comparison between the primary mirrors of the Hubble Space Telescope, James Webb Space Telescope, and the proposed High Definition Space Telescope (HDST). In this concept, the HDST primary is composed of 36 1.7 meter segments. Smaller segments could also be used. An 11 meter class aperture could be made from 54 1.3 meter segments. Credit: C. Godfrey (STScI).

Moving Beyond JWST

So the 2020s have the potential to be hugely exciting. At the same time, though, we are fighting a holding battle to keep exoplanet science at the top of the agenda and to make a successful case for a large telescope in the 2030s. It should be noted that there is still an element within NASA that is unsure what the reaction to the discovery of Earth-like planets would be!

A series of “Probe” class missions will run in parallel with, or before, any flagship mission. No specific plans have been made for a flagship, but an outline review of its necessary requirements has been commissioned by the Association of Universities for Research in Astronomy (AURA) and released under the descriptive title “High Definition Space Telescope” (HDST). A smaller review has produced an outline for a dedicated exoplanet flagship telescope called HabEx. These have been proposed for the end of the next decade but have met resistance as being too close in time to the expensive JWST. NASA can generally afford only one flagship mission per decade, and WFIRST is in effect that flagship (although never publicly announced as such), so any big telescope will have to wait until the 2030s at the earliest. Decadal 2020, and the exoplanet consensus and science definition groups contributing to it, will basically have to play a “holding” role, keeping up the exoplanet case throughout the decade and using evidence from available resources to build a case for a subsequent large HDST.

The issue then becomes the width of the launch vehicle’s payload fairing, or “shroud.” On the first version of the Space Launch System (SLS) this is only about 8.5m. Ideally the shroud should be at least a meter wider than the payload to allow “give” under launch loads, which is especially important for a monolithic mirror, whose best orientation is “face on.” Given the large stresses of launch, lightweight “honeycomb” versions of traditional mirrors cannot be used, and solid versions weigh in at 56 tonnes even before the rest of the telescope is added. For the biggest possible monolithic telescopes at least, we will have to wait for the 10m-plus shroud and heavier lifting ability of the SLS or some other large launcher.

A star-shade on WFIRST via one of these Probe missions seems the best bet for a short-term driver of change. Internal coronagraphs on 2m-class telescopes let too little light through for spectroscopic characterisation of Earth analogues (“eta Earth” targets), but star-shades will, provided their light enters the telescope’s optical train high enough up if, as with WFIRST, the plan is to have both internal and external coronagraphs. A star-shade would also offer a smaller inner working angle, reaching into the habitable zones of later-type stars such as K dwarfs. That’s if WFIRST ends up at L2, though L2 is being talked about more and more.

The astrometry mission would be a dedicated version of the WFIRST/Gaia synergy, saving a great deal of eta Earth search time. It should be doable within Probe funding, as the ESA NEAT mission concept came in under that. NEAT fell through because of its formation-flying element, but after PROBA-3 (a European solar coronagraphy mission that will in effect be the first dedicated “precision” formation-flying mission) that issue should be resolved.

A billion dollars probably buys a decent transmission spectroscopy mission with enough resolution to follow up some of the more promising TESS discoveries. Put these together and that’s a lot of exoplanet science, with a tantalising amount of habitability material, too. WFIRST’s status seems to be rising all the time, and at one recent exoplanet meeting led by Gary Blackwood it was even stated (and highlighted) publicly that the coronagraph should LEAD the mission science. That’s totally at odds with previous statements, which emphasised the opposite.

Other Probe concepts consider high-energy radiation such as X-rays. Though less relevant to exoplanets, they acknowledge that any future telescope will need to look at all facets of the cosmos, not just exoplanets. Indeed, competition for telescope time will become even more intense. Given how faint exoplanet targets are, it must be remembered that collecting adequate photons takes a lot of precious telescope time, especially for small, close-in habitable zone planets.
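Why faint targets eat telescope time can be seen from the photon-noise-limited signal-to-noise ratio: with a planet signal of S detected photons per second and a background of B per second, SNR = S·t / √((S + B)·t), so the exposure needed is t = SNR² (S + B) / S². The sketch below ignores detector dark current and read noise, and the photon rates are hypothetical values chosen for illustration, not figures from the article.

```python
def exposure_time_s(snr_target: float, signal_rate: float, background_rate: float) -> float:
    """Photon-noise-limited exposure time.
    SNR = S t / sqrt((S + B) t)  =>  t = SNR^2 * (S + B) / S^2.
    Ignores dark current and read noise."""
    return snr_target ** 2 * (signal_rate + background_rate) / signal_rate ** 2

# Hypothetical detected rates in photons per second: (planet signal, background)
for s, b in [(1.0, 0.0), (1.0, 1.0), (0.1, 1.0)]:
    t = exposure_time_s(10.0, s, b)
    print(f"S = {s} ph/s, B = {b} ph/s: SNR 10 needs ~{t:,.0f} s ({t / 3600:.2f} h)")
```

Because the required time scales as 1/S² once background dominates, a planet ten times fainter against the same background needs roughly a hundred times longer, which is why each dim target can tie up a telescope for hours or days.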

The ExoPAG consensus represents a compromise between two schools of thought: those who wish to prioritise potentially habitable planets for maximum impact, and those favouring a methodical analysis of all exoplanets and planetary system architectures to build up a detailed picture of what is out there and where our own system fits in. All of these factors are likely to bear on the likelihood of life, and both approaches are robust. I would recommend that readers consult this article and related material and reach their own conclusions.

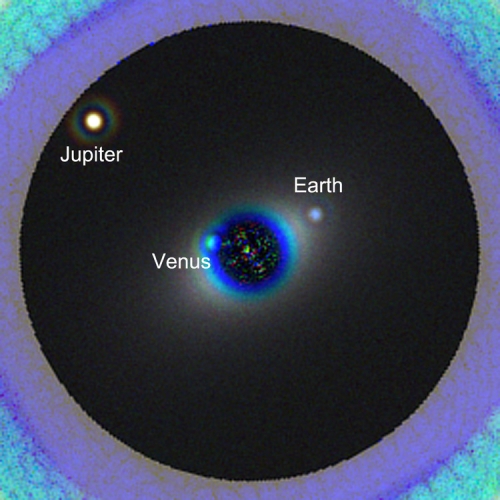

Image: A simulated image of a solar system twin as seen with the proposed High Definition Space Telescope (HDST). The star and its planetary system are shown as they would be seen from a distance of 45 light years. The image here shows the expected data that HDST would produce in a 40-hour exposure in three filters (blue, green, and red). Three planets in this simulated twin solar system – Venus, Earth, and Jupiter – are readily detected. The Earth’s blue color is clearly detected. The color of Venus is distorted slightly because the planet is not seen in the reddest image. The image is based on a state-of-the-art design for a high-performance coronagraph (that blocks out starlight) that is compatible for use with a segmented aperture space telescope. Credit: L. Pueyo, M. N’Diaye (STScI).

Defining a High Definition Space Telescope

What of the next generation of “Super Space Telescope”? The options are all closely related and fall under the broad heading of High Definition Space Telescope (HDST). Such a telescope requires an aperture of at least 10 to 12 metres to have adequate light-gathering ability and resolution to carry out both exoplanet imaging and wider astrophysics, such as viewing extragalactic phenomena like quasars and their associated supermassive black holes. Whatever the specifics, these goals demand extreme stability, with the telescope held steady to picometre ($10^{-12}$ m) levels in order to function.

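The telescope would be diffraction limited at 500nm, right in the middle of the visible spectrum. The diffraction limit is the finest angular resolution, the ability to discern detail, that a telescope of a given aperture can achieve at a given wavelength; a telescope is “diffraction limited” at a wavelength when its optics are good enough to reach that limit. For a circular aperture, angular resolution is approximately $\theta \approx \lambda / D$ radians (more precisely $1.22\,\lambda / D$ for the first dark ring of the diffraction pattern), where $\lambda$ is the wavelength and $D$ the telescope aperture, both in metres. For HDST at 500nm this gives $\theta \approx (500 \times 10^{-9}\,\mathrm{m}) / (12\,\mathrm{m}) \approx 4.2 \times 10^{-8}$ radians, or roughly 9 milliarcseconds.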
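The λ/D relation can be evaluated directly. The sketch below compares idealised filled circular apertures of the sizes discussed here at a visible and a near-infrared wavelength; it gives the theoretical limit only and says nothing about whether a particular telescope's optics actually reach it (JWST, for instance, is not designed to be diffraction limited at 500nm).

```python
import math

RAD_TO_MAS = 180.0 / math.pi * 3600.0 * 1000.0  # radians -> milliarcseconds

def diffraction_limit_mas(wavelength_m: float, aperture_m: float, factor: float = 1.22) -> float:
    """Angular resolution theta ~ factor * lambda / D, in milliarcseconds.
    factor=1.22 is the Rayleigh criterion for a filled circular aperture;
    factor=1.0 gives the simpler lambda/D form."""
    return factor * wavelength_m / aperture_m * RAD_TO_MAS

for name, d in [("Hubble", 2.4), ("JWST", 6.5), ("HDST", 12.0)]:
    for wl_nm in (500, 2000):
        theta = diffraction_limit_mas(wl_nm * 1e-9, d, factor=1.0)
        print(f"{name:6s} D = {d:4.1f} m, lambda = {wl_nm:4d} nm: lambda/D = {theta:6.1f} mas")
```

Quadrupling the wavelength from 500nm to 2000nm quadruples θ, so even a 12m aperture resolves only about as finely in the near infrared as a 3m telescope does in visible light.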

The larger the aperture of a telescope, the more detail it can see at any given wavelength; conversely, the longer the wavelength, the less detail it can see. That holds under the near-perfect conditions of space, as opposed to the constantly moving atmosphere above ground-based telescopes, which will rarely approach the diffraction limit. So HDST will not have the same resolution at infrared wavelengths as at visible ones, which matters because several potential biosignatures appear in spectra at longer wavelengths.

Approaching the diffraction limit is possible on the ground with laser-produced guide stars and modern “deformable mirrors,” or “adaptive optics,” which help compensate for atmospheric turbulence. Deformable primary and especially secondary mirrors will be important in space as well, in order to achieve the incredible stability required for any telescope observing distant, dim exoplanets. This is especially true of coronagraphs, though much less so of star-shades, which could be important in determining which starlight suppression technique to employ.

Additionally, the polishing “finish” of the mirror itself requires incredible precision. As a telescope becomes larger, its mirror must meet ever tighter tolerances relative to the minute wavelengths being worked with. The required finish is expressed as a fraction of a wavelength, the wavefront error (WFE). For HDST this is as low as 1/10 or even 1/20 of the wavelength in question. In its case that is generally visible light around 500nm, so the error must be below 50nm, a tiny margin that illustrates the ultra-high quality of mirror required.

A large 12m HDST would require a WFE of about 1/20 lambda, possibly even lower, which works out at less than 30nm. The telescope would also require a huge gigapixel array of sensors to capture any exoplanet detail: electron-multiplying CCDs (EMCCDs), or their mercury cadmium telluride (HgCdTe) near-infrared equivalents. These would need passive cooling to stop heat in the sensors themselves from producing “dark current,” which creates a false signal and background “noise.”
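One common way to see what a given wavefront error costs in image quality is the Maréchal approximation, which relates the RMS wavefront error σ to the Strehl ratio (peak brightness relative to a perfect optic): S ≈ exp(−(2πσ/λ)²). The Strehl ratio is not mentioned in the article, and the article does not say whether its λ/10 and λ/20 figures are RMS or peak-to-valley; the sketch below assumes they are RMS.

```python
import math

def wfe_budget_nm(wavelength_nm: float, fraction: float) -> float:
    """Wavefront error allowed when the budget is a fraction of the wavelength."""
    return wavelength_nm * fraction

def marechal_strehl(rms_wfe_nm: float, wavelength_nm: float) -> float:
    """Marechal approximation S ~ exp(-(2*pi*sigma/lambda)^2), sigma = RMS WFE.
    Only reasonable for small errors (roughly S > 0.1)."""
    return math.exp(-(2.0 * math.pi * rms_wfe_nm / wavelength_nm) ** 2)

for frac in (1 / 10, 1 / 20):
    wfe = wfe_budget_nm(500.0, frac)
    print(f"lambda/{round(1 / frac)} at 500 nm = {wfe:.0f} nm "
          f"-> Strehl ~ {marechal_strehl(wfe, 500.0):.2f} (if RMS)")
```

Under that assumption, halving the error from 50nm to 25nm lifts the Strehl ratio from roughly 0.67 to roughly 0.91, concentrating far more of the light into the core of the image.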

Such arrays already exist on space telescopes like ESA’s Gaia, and producing larger versions would be one of the easier design requirements. For an ultraviolet-optical-infrared (UVOIR) telescope, an operating temperature of about -100 C would suffice for the sensors, while the telescope itself would be near room temperature.

All of the above is difficult but not impossible even today, and certainly possible in the near future. Conventional materials like ultra-low expansion glass (ULE) can meet this requirement, as, more recently, can silicon carbide composites, which also have a very low coefficient of thermal expansion. This last feature can be crucial given the telescope’s operating temperature range: excessive expansion of a “warm” telescope operating at around 0-20 degrees C could disturb its stability. It was for this reason that silicon carbide was chosen for the structural frame of the astrometry telescope Gaia, where stability was also key to accurately positioning one billion stars.
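The importance of low expansion follows from the linear thermal expansion relation ΔL = α·L·ΔT. The sketch below applies it to a 12m structure warming by just 0.01 K; the coefficient values are rough, assumed magnitudes chosen for illustration (near-zero for a ULE-class glass, a few parts per million per kelvin for ordinary borosilicate glass), not material specifications.

```python
def thermal_change_nm(cte_per_k: float, length_m: float, delta_t_k: float) -> float:
    """Linear thermal expansion dL = alpha * L * dT, returned in nanometres."""
    return cte_per_k * length_m * delta_t_k * 1e9

# Rough, assumed coefficients of thermal expansion near room temperature:
materials = {
    "ULE-class glass (~3e-8 /K, assumed)": 3e-8,
    "borosilicate glass (~3.3e-6 /K, assumed)": 3.3e-6,
}
for name, cte in materials.items():
    dl = thermal_change_nm(cte, length_m=12.0, delta_t_k=0.01)
    print(f"{name}: 12 m warming by 0.01 K changes length by ~{dl:.1f} nm")
```

Even with a near-zero coefficient, a hundredth of a kelvin shifts a 12m structure by nanometres, which is why picometre-level stability also demands tight thermal control, not just clever materials.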

A “warm” operating temperature of around room temperature helps reduce telescope cost significantly, as illustrated by the $8 billion cost of JWST, whose operating temperature of a few tens of kelvin requires a huge deployable sunshield plus, for its mid-infrared instrument, a mechanical cryocooler. Think how sad it was to see the otherwise operational 3.5m ESA Herschel space telescope drift off to oblivion when its supply of liquid helium ran out.

The operating temperature of a telescope and its sensors determines its wavelength-sensitive range, or “bandpass.” For wavelengths longer than about 5 micrometres (5000 nm), the telescope and its sensors require cooling so that their own thermal emission does not swamp the incoming signal. Bandpass is also influenced, generally narrowed considerably, by passing the light through a coronagraph. The longer the wavelength, the greater the cooling required. Passive cooling involves attaching the sensors to a metal plate that radiates heat out to space. This suits a large telescope that requires precision stability, as it has no moving parts that can vibrate. Colder temperatures can be reached with mechanical “cryocoolers,” which can get down to a few kelvin (seriously cold), but at the price of vibration.
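The roughly 5 micrometre cut-off for a warm telescope can be illustrated with Planck's law for blackbody emission. The sketch below treats a 290 K telescope as an ideal blackbody (an assumption; real mirrors have low emissivity) and shows how steeply its own thermal glow rises between 1 and 10 micrometres, peaking near 10 micrometres as Wien's displacement law predicts.

```python
import math

H = 6.62607015e-34        # Planck constant, J s
C = 2.99792458e8          # speed of light, m/s
K_B = 1.380649e-23        # Boltzmann constant, J/K
WIEN_B = 2.897771955e-3   # Wien displacement constant, m K

def planck_radiance(wavelength_m: float, temp_k: float) -> float:
    """Blackbody spectral radiance B_lambda(T), in W sr^-1 m^-3."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * H * C ** 2 / wavelength_m ** 5 / math.expm1(x)

def wien_peak_um(temp_k: float) -> float:
    """Wavelength of peak blackbody emission, in micrometres."""
    return WIEN_B / temp_k * 1e6

T_WARM = 290.0  # an assumed "room temperature" telescope, K
print(f"A {T_WARM:.0f} K blackbody peaks near {wien_peak_um(T_WARM):.1f} um")
for wl_um in (1.0, 2.0, 5.0, 10.0):
    rel = planck_radiance(wl_um * 1e-6, T_WARM) / planck_radiance(10e-6, T_WARM)
    print(f"  {wl_um:4.1f} um: radiance relative to 10 um = {rel:.2e}")
```

The warm telescope's emission at 5 micrometres is tens of thousands of times stronger than at 2 micrometres, so beyond a few micrometres its own heat quickly drowns the faint astronomical signal unless the optics are cooled.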

This was one of the two main reasons why JWST was so expensive. Its mid-infrared instrument must operate just a few kelvin above absolute zero (the lowest possible temperature), which JWST achieves with a large sunshield for passive cooling plus a cryocooler, in order to reach longer infrared wavelengths and look further back in time.

Remember, the further light has travelled since it was emitted, the more it is stretched, or “red-shifted,” by the expansion of the universe, and seeing back as far as possible towards the Big Bang was a big driver for JWST. The problem is that reaching and verifying such low temperatures demanded a complex deployable sunshield and extensive, expensive cryogenic testing, all of which contributed to the telescope’s cost and time overrun.
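The stretching of light by cosmic expansion is captured by the redshift z: an observed wavelength equals the emitted wavelength multiplied by (1 + z). The sketch below applies this to two well-known hydrogen lines (Lyman-alpha at 121.6nm and H-alpha at 656.3nm) at a few example redshifts, showing how visible and ultraviolet light from the early universe ends up in the infrared.

```python
def observed_wavelength_nm(rest_nm: float, z: float) -> float:
    """Cosmological redshift stretches wavelengths by a factor (1 + z)."""
    return rest_nm * (1.0 + z)

LYMAN_ALPHA_NM = 121.6
H_ALPHA_NM = 656.3
for z in (2, 6, 10):
    print(f"z = {z:2d}: Lyman-alpha -> {observed_wavelength_nm(LYMAN_ALPHA_NM, z):7.1f} nm, "
          f"H-alpha -> {observed_wavelength_nm(H_ALPHA_NM, z):7.1f} nm")
```

At z = 10, ultraviolet Lyman-alpha light arrives at about 1.34 micrometres, in the near infrared, while H-alpha is already beyond 2 micrometres by z = 2.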

The other issue with large telescopes is whether they use a single mirror, like Hubble, or are segmented, like the Keck telescopes and JWST. The largest monolithic mirrors that can currently be manufactured are 8.4m in diameter, bigger than JWST’s aperture, and have been perfected in ground telescopes like the LBT and the GMT, whose outer mirrors are off-axis (unobstructed). Off-axis means that the focal plane of the telescope is offset from its aperture, so that a focusing secondary mirror, sensor array, spectrograph or coronagraph doesn’t obstruct the light path, which can otherwise cost up to 20% of the aperture. A big attraction of this design is that an unobstructed 8.4m mirror collects roughly as much light as a 9.2m on-axis mirror, ironically near the minimum requirements of the ideal exoplanet telescope.
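The "8.4m off-axis is roughly a 9.2m on-axis" comparison is an area calculation: an on-axis mirror that loses a fraction f of its area to obstructions must have diameter D/√(1 − f) to match an unobstructed mirror of diameter D. The sketch below assumes obstruction losses of 15% and 20% to bracket the figure in the text.

```python
import math

def equivalent_on_axis_diameter(d_unobstructed_m: float, obstructed_fraction: float) -> float:
    """Diameter of an on-axis mirror that, after losing obstructed_fraction of
    its area, collects as much light as an unobstructed mirror of the given diameter."""
    return d_unobstructed_m / math.sqrt(1.0 - obstructed_fraction)

for loss in (0.15, 0.20):
    d_eq = equivalent_on_axis_diameter(8.4, loss)
    print(f"{loss:.0%} obstruction: 8.4 m off-axis ~ {d_eq:.2f} m on-axis")
```

The answer falls between about 9.1m and 9.4m, consistent with the roughly 9.2m quoted above.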

Given the construction of six such off-axis mirrors for the GMT, this mirror is now almost “mass produced,” and thus very reasonably priced. The off-axis design allows sensor arrays, spectrographs and especially large coronagraphs to sit outside the light path rather than being suspended within the telescope on “spider” supports, which create the familiar “star”-shaped diffraction spikes seen in images from conventional telescope designs. Despite the mirror being cheaper to manufacture and already extensively tested on the ground, the problem is that there are currently no launchers big and powerful enough to lift what would in effect be a 50-tonne-plus telescope into orbit (a lightweight honeycomb design is ruled out by the high “g” and acoustic vibration forces at launch).

In general, a segmented telescope can be “folded” up very efficiently inside a launcher fairing, up to a maximum aperture of about 2.5 times the fairing width. The Delta IV Heavy launcher has a fairing width of about 5.5m, so in theory a segmented telescope of up to 14m could be launched, provided it was below the maximum weight capacity of about 21 tonnes to geosynchronous transfer orbit. So it could be launched tomorrow! It was this novel segmentation that, along with cooling, added to the cost and construction time of JWST, though hopefully, once successfully launched, it will have demonstrated the technology’s readiness and it will be cheaper next time round.
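The two packing rules of thumb in the text, a folded segmented aperture of up to about 2.5 times the fairing width and a face-on monolith needing about a meter of clearance, can be combined in a short sketch. It treats the quoted fairing widths as usable internal diameters, which is a simplifying assumption; real usable envelopes are somewhat smaller.

```python
FOLD_FACTOR = 2.5        # text's rule of thumb: folded aperture up to ~2.5x fairing width
MONOLITH_MARGIN_M = 1.0  # text: shroud should be ~1 m wider than a monolithic mirror
MONOLITH_8_4_M = 8.4     # largest monolithic mirrors currently manufacturable

def max_segmented_aperture_m(fairing_width_m: float) -> float:
    """Largest folded segmented aperture under the 2.5x rule of thumb."""
    return FOLD_FACTOR * fairing_width_m

def max_monolith_m(fairing_width_m: float) -> float:
    """Largest face-on monolithic mirror that leaves the ~1 m margin."""
    return fairing_width_m - MONOLITH_MARGIN_M

for name, width in [("Delta IV Heavy", 5.5), ("SLS, first version", 8.5), ("10 m shroud", 10.0)]:
    fits = max_monolith_m(width) >= MONOLITH_8_4_M
    print(f"{name:18s} {width:4.1f} m fairing: segmented up to ~{max_segmented_aperture_m(width):.1f} m, "
          f"8.4 m monolith fits: {fits}")
```

On these assumptions an 8.4m monolith does not fit the first SLS shroud but does fit a 10m one, while even today's 5.5m fairing could in principle carry a folded aperture of nearly 14m.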

By the time an HDST variant is ready to launch, it is hoped that there will be launchers with the fairing width and power to lift such telescopes, and they will be segmented because at 12m they exceed the monolithic limit. With a wavelength operating range from circa 90nm to 5000nm, they will require passive cooling only, and the segmentation design will already have been tested, both of which will help reduce cost, which will then depend more simply on size and launcher cost. This sort of bandpass, though not as wide as that of a cryogenically cooled telescope, is more than adequate for detecting key biosignatures such as ozone (O3), methane, water vapour and CO2 under suitable conditions and with a good “signal to noise ratio,” the degree to which the required signal stands out from background noise.

Separating Planets from their Stars

Ideally the signal to noise ratio should be better than ten. In terms of instrumentation, all exoplanet scientists will want a large future telescope to have starlight suppression systems that can directly image exoplanets as close to their parent stars as possible, with a contrast of $10^{-10}$ (the planet being ten billion times fainter than its star) in order to view Earth-sized planets in the liquid water “habitable zone.” The more Earth-like planets and biosignatures the better. Some biosignature features in a spectrum can also be produced abiotically, so a larger sample of such signatures strengthens the case for a biological rather than a coincidental non-biological origin.
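Where the $10^{-10}$ figure comes from can be sketched with the standard reflected-light estimate of planet-to-star contrast, C = A_g · (R_p / a)² · Φ, where A_g is the geometric albedo, R_p the planet radius, a the orbital distance and Φ a phase function. The albedo of 0.3 and the Lambertian phase value of 1/π at quadrature (planet half-illuminated) are assumptions chosen for illustration.

```python
import math

AU_KM = 1.495978707e8
R_EARTH_KM = 6371.0

def reflected_contrast(geometric_albedo: float, r_planet_km: float,
                       a_km: float, phase_function: float) -> float:
    """Planet/star flux ratio in reflected light: C = A_g * (R_p / a)^2 * Phi."""
    return geometric_albedo * (r_planet_km / a_km) ** 2 * phase_function

# Assumptions: geometric albedo 0.3, Lambertian phase function at quadrature (1/pi).
contrast = reflected_contrast(0.3, R_EARTH_KM, AU_KM, 1.0 / math.pi)
print(f"Earth twin contrast ~ {contrast:.1e}")
```

The result is a couple of parts in ten billion, which is why starlight suppression reaching about $10^{-10}$ is the benchmark for imaging an Earth twin around a Sun-like star.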

As previously discussed, there are two ways of doing this: internal and external occulting devices. Internal coronagraphs are a series of masks and mirrors that help “shave off” the offending starlight, leaving only the orbiting planets. The race is on to see how close to the star this can be done. NASA’s WFIRST will tantalisingly achieve contrasts between $10^{-9}$ and $10^{-10}$, which shows how far this technology has come since the mission was conceived three years ago, when such levels were pure fantasy.

The inner working angle (IWA), the smallest angular separation from its star at which a planet can be imaged, is measured in milliarcseconds (mas). For WFIRST it is slightly more than 100 mas, equivalent to an orbit between those of Earth and Mars for a solar system viewed from about 10 parsecs. A future HDST coronagraph would hope to get as low as 10 mas, reaching the habitable zones of smaller, cooler (and more common) stars. That said, coronagraphs are an order of magnitude more difficult to design for segmented telescopes than for monolithic ones, and little research has yet gone into this area.

An external occulter, or star-shade, achieves the same goal as a coronagraph, but by sitting far out in front of the telescope, between it and the target star, and casting a shadow that excludes the starlight. The recent Probe-class concept explored the use of a 34m shade with WFIRST at up to 35,000 km from the telescope. Its light throughput is close to 100%, versus a maximum of 20-30% for most coronagraph designs, in an area where photons are at a premium: perhaps just 1 photon per second or less might reach the sensor array from an exoplanet.
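The link between IWA and orbital distance comes from the definition of the parsec: an orbit of 1 AU seen from 1 parsec subtends 1 arcsecond, so separation in milliarcseconds is 1000 × (a in AU) / (d in pc). The third case below, a 0.1 AU habitable-zone orbit around an M dwarf 5 parsecs away, is an illustrative assumption.

```python
def separation_mas(a_au: float, distance_pc: float) -> float:
    """Angular star-planet separation in milliarcseconds.
    By definition of the parsec, 1 AU seen from 1 pc subtends 1 arcsecond."""
    return a_au / distance_pc * 1000.0

cases = [
    ("Earth analogue, star at 10 pc", 1.0, 10.0),
    ("Mars analogue, star at 10 pc", 1.52, 10.0),
    ("0.1 AU habitable-zone orbit, M dwarf at 5 pc (assumed)", 0.1, 5.0),
]
for label, a_au, d_pc in cases:
    sep = separation_mas(a_au, d_pc)
    print(f"{label}: {sep:.0f} mas "
          f"(beyond ~100 mas IWA: {sep > 100}, beyond ~10 mas IWA: {sep > 10})")
```

Close-in habitable zones around small stars sit at a few tens of mas even for nearby systems, well inside a ~100 mas IWA but within reach of a ~10 mas one.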

A quick word on coronagraph types might be useful. Most coronagraphs use a “mask” placed in a focal plane of the telescope that blocks the central starlight while allowing the fainter exoplanet light around it to pass and be imaged. Some starlight will diffract around the mask (especially at longer wavelengths like the infrared), but this can be removed by shaping the entrance pupil or by apodization (reshaping the distribution of light across the pupil), a process that can use a series of mirrors to “shave” off additional starlight until just the planet light is left.

For WFIRST the coronagraph combines a “Lyot” mask and a shaped pupil. This is efficient at blocking starlight to within 100 mas of the star, but at the cost of losing 70-80% of the planet light, as noted above. Such is the current state of the technology ahead of proposals for HDST. The reserve design uses apodization, which has the advantage of removing starlight efficiently without losing planet light; indeed, as much as 95% gets through. This design has not yet been tested to the same degree as WFIRST’s primary coronagraph, though, as the necessary additional mirrors are very hard to manufacture. Its high “throughput” is very appealing where light is so precious, and so the design is likely to see action at a later date. A coronagraph with 95% throughput on an off-axis 8.4m telescope would deliver more light for analysis than an alternative with 20-30% throughput on even a 12m telescope.
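The throughput comparison can be checked by multiplying collecting area by instrument throughput. The sketch below treats both mirrors as filled circles, ignoring any central obstruction and segment gaps, which is a simplification that if anything flatters the segmented 12m telescope.

```python
import math

def effective_area_m2(diameter_m: float, throughput: float) -> float:
    """Collecting area of a filled circular aperture times instrument throughput."""
    return throughput * math.pi * (diameter_m / 2.0) ** 2

off_axis_84 = effective_area_m2(8.4, 0.95)
hdst_12_low = effective_area_m2(12.0, 0.20)
hdst_12_high = effective_area_m2(12.0, 0.30)
print(f"8.4 m at 95% throughput:     {off_axis_84:5.1f} m^2")
print(f"12 m at 20-30% throughput:   {hdst_12_low:5.1f}-{hdst_12_high:.1f} m^2")
```

Roughly 53 square metres of effective area against 23-34 square metres: the smaller mirror with the more efficient coronagraph wins.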

The advantage of a star-shade is that the even more stringent stability requirements of a coronagraph are greatly relaxed, and nearly 100% of the useful light reaches the telescope’s focal plane. No place for waste. Star-shades also allow deeper spectroscopic analysis than coronagraphs. The disadvantage is that a star-shade requires two separate spacecraft in precision “formation flying” to keep its shadow in the right place. The shade must move to a new position every time a new target is selected, taking days or weeks to get there, and its finite propellant supply limits its lifespan to a maximum of about 5 years and perhaps thirty or so premium exoplanet targets. So preliminary exoplanet discovery and target mapping may be done rapidly with a coronagraph, with atmospheric characterisation by spectroscopy done later by a star-shade, with its greater light throughput and spectroscopic range.

The good news is that the recent NASA ExoPAG consensus calls for an additional Probe-class ($1 billion) star-shade mission for WFIRST as well as a coronagraph. This would need the telescope to be at the Sun/Earth L2 point, but would make the mission in effect a technology demonstrator for both types of starlight suppression, saving development costs for any future HDST while imaging up to 30 Earth-like habitable zone planets and locating many more within ten parsecs in combination with Gaia astrometry results.

The drawback is that WFIRST has a monolithic mirror, and coronagraph development to date has focused on this type rather than on the segmented mirrors of larger telescopes. Star-shades are less affected by mirror type or quality, but a 12m telescope (compared with WFIRST’s 2.4m) would only achieve maximum results with a huge 80m shade. Building and launching a 34m shade is no mean feat, but an enormous 80-100m version might even require fabrication in orbit. It would also need to be 160,000-200,000 km from its telescope, making formation flying no easy achievement, especially as star-shade technology can so far be tested only in computer simulations or in downscaled form.
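A first-order feel for these shade sizes and distances comes from simple geometry: the smallest angle at which a star-shade uncovers a planet is roughly the shade's radius divided by its distance from the telescope. This geometric approximation is an assumption for illustration; real designs also depend on petal shape, wavelength and the Fresnel number.

```python
import math

RAD_TO_MAS = 180.0 / math.pi * 3600.0 * 1000.0  # radians -> milliarcseconds

def starshade_iwa_mas(diameter_m: float, separation_km: float) -> float:
    """Geometric inner working angle ~ shade radius / telescope-shade distance."""
    return (diameter_m / 2.0) / (separation_km * 1000.0) * RAD_TO_MAS

for d_m, z_km in [(34, 35_000), (80, 160_000), (100, 200_000)]:
    print(f"{d_m:3d} m shade at {z_km:,} km -> geometric IWA ~ {starshade_iwa_mas(d_m, z_km):.0f} mas")
```

The 34m shade at 35,000 km gives about 100 mas, matching WFIRST's scale, while the larger shades at larger distances push that to roughly 50 mas; shrinking the angle further means making the shade bigger, moving it farther away, or both.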

HDST Beyond Exoplanets

So that’s the exoplanet element. Exciting as such science is, it represents only a small portion of astrophysics as a whole, and any HDST is going to be a costly venture, probably more so than JWST. It will need to have utility across astrophysics, and herein lies the problem. What sort of compromise can be reached among different schools of astrophysics in terms of telescope function and access time? Observing distant exoplanets can take days, and characterising their atmospheres even longer.

Given JWST’s huge cost and time overrun, any Congress will be skeptical of being drawn into a bottomless financial commitment. It is for this reason that attention is increasingly focused on both JWST and WFIRST. The first has absolutely GOT to work, well and for a long time, so that all its faults (as with Hubble, ironically) can be forgotten amid the celebration of its achievements. WFIRST must show how a flagship-level mission can work at a reasonable cost (circa $2.5 billion), and also that all the exoplanet technology required for a future large telescope can work, and work well.

The HABX2 telescope is in effect a variant of HDST whose aperture would be set by available funds, with the widest possible passively cooled sensor bandpass described above and a larger version of WFIRST’s starlight suppression technology. In effect, it is a dedicated exoplanet telescope. It, too, would use a coronagraph or a star-shade.

The overarching terms for all these telescope variants are determined by wavelength; thus the instrument would be referred to as a Large Ultraviolet Optical InfraRed (LUVOIR) telescope, with the specific wavelength range to be determined as necessary. Such a telescope is not a dedicated exoplanet scope and would obviously require suitable hardware. This loose definition is important because there are other telescope types, such as high-energy telescopes looking at X-rays. NASA’s Chandra telescope doesn’t image the highest-energy X-rays emitted by quasars or black holes. Between the wavelengths covered by JWST and by ALMA (the Atacama Large Millimeter/submillimeter Array) lies the far infrared, which can use dedicated telescopes and has not been explored extensively. There are groups of astrophysicists lobbying for all these telescope types.

Here WFIRST is again key. It will locate thousands of planets through conventional transit photometry and microlensing, as well as astrometry. But the planets it images directly via its coronagraph, and better still its star-shade, should, if characterised (with JWST?), speak for themselves. Even if they do not guarantee a dedicated exoplanet HDST, they should at least give NASA and Congress the confidence to back a large space “ELT” with suitable bandpass and starlight suppression hardware, and time to investigate further. The HDST is an outline of what a future space telescope, be it HABX2 or a more generic instrument, might be.
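Transit photometry works because a planet crossing its star blocks a fraction of the starlight roughly equal to the ratio of their disc areas, (Rp/Rs)². A minimal sketch, ignoring limb darkening and using assumed round values for the radii:

```python
# Approximate radii in km (assumed round values).
R_SUN_KM = 695_700
R_EARTH_KM = 6_371
R_JUPITER_KM = 69_911


def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fractional dip in starlight while the planet crosses the stellar disc: (Rp/Rs)^2."""
    return (planet_radius_km / star_radius_km) ** 2


for name, radius_km in [("Earth", R_EARTH_KM), ("Jupiter", R_JUPITER_KM)]:
    depth = transit_depth(radius_km, R_SUN_KM)
    print(f"{name} transiting a Sun-like star: {depth * 1e6:.0f} ppm")
```

An Earth analogue dims a Sun-like star by less than 0.01% (about 84 parts per million), which is why small transiting planets demand such precise photometry.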

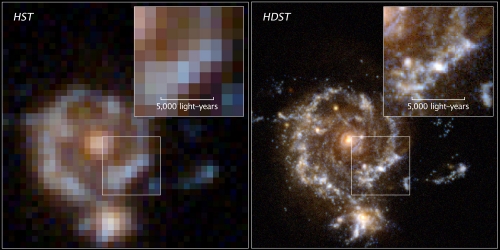

Image: A simulated spiral galaxy as viewed by Hubble and by the proposed High Definition Space Telescope (HDST) at a lookback time of approximately 10 billion years (z = 2). The renderings show a one-hour observation for each space observatory. Hubble detects the bulge and disk, but only the high image quality of HDST resolves the galaxy’s star-forming regions and its dwarf satellite. The zoom shows the inner disk region, where only HDST can resolve the star-forming regions and separate them from the redder, more distributed old stellar population.

Credit: D. Ceverino, C. Moody, G. Snyder, and Z. Levay (STScI).
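The gain in image quality in the simulation follows largely from aperture: for an ideal diffraction-limited telescope the smallest resolvable angle is about 1.22 λ/D. The sketch below compares Hubble’s 2.4m mirror with a 12m HDST at a few assumed wavelengths (UV, visible and near-infrared); it ignores detector sampling and optical imperfections.

```python
import math

MAS_PER_RAD = 180 / math.pi * 3600 * 1000  # milliarcseconds per radian


def rayleigh_limit_mas(wavelength_nm: float, aperture_m: float) -> float:
    """Diffraction-limited resolution 1.22 * lambda / D, in milliarcseconds."""
    return 1.22 * wavelength_nm * 1e-9 / aperture_m * MAS_PER_RAD


for wavelength_nm in (200, 500, 1600):  # assumed example wavelengths
    hubble = rayleigh_limit_mas(wavelength_nm, 2.4)
    hdst = rayleigh_limit_mas(wavelength_nm, 12.0)
    print(f"{wavelength_nm} nm: Hubble {hubble:.0f} mas, HDST {hdst:.0f} mas")
```

At every wavelength the ratio is simply 12/2.4 = 5, so HDST would resolve details five times finer than Hubble, with the UV giving the sharpest images of all.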

Challenges to Overcome

The concern is that although much of its technology will hopefully be proven by the success of JWST and WFIRST, the step up in size itself requires a huge technological advance, not least because of the exquisite accuracy required at every level of its functioning, from observing exoplanets via a star-shade or coronagraph to the design, construction and operation of these devices. A big caveat is that it was exactly this kind of technological uncertainty that contributed to JWST’s time and cost overruns, something both the NASA executive and Congress are aware of. It is highly unlikely that such a telescope will launch before the mid-2030s, even on an optimistic estimate. There has already been pushback from NASA on HDST. What might be more likely is a compromise: one that delivers a LUVOIR telescope, as opposed to an X-ray or far-infrared alternative, but at more reasonable cost and budgeted for over an extended period prior to a 2030s launch.

Congress are keen to drive forward high-profile manned spaceflight. Whatever your thoughts on that, it is likely to lead to the evolution of the SLS and private equivalents like SpaceX launchers. Should these have a fairing of around 10m, it would be possible to launch the largest possible monolithic mirror in an off-axis format, which allows easier and more efficient use of a coronagraph or an intermediate (50m) star-shade, with minimal technology development and at substantially less cost. Such a telescope would not represent such a big technological leap and would be a relatively straightforward design. Negotiation over telescope usage could lead to more time being devoted to exoplanet science (only 15% of JWST observing time is granted for exoplanet use), further compensating for the “descoping” from the 12m HDST ideal. Thus the futures of manned and robotic spaceflight are intertwined.

A final interesting point is the “other”, forgotten NRO telescope. It is identical to its high-profile sibling, and although it has “imperfections” from its manufacture, a NASA executive conceded in a recent interview that it could still be used for space missions. At present logic would have it as a backup for WFIRST. Could it, too, be the centrepiece of an exoplanet mission, one of the Probe concepts perhaps, especially the transit spectroscopy mission, where mirror quality is less important?

As with WFIRST, its large aperture would dramatically increase the potency of any mission compared with a bespoke mirror, delivering a flagship mission at Probe cost. It would be a bonus if, like WFIRST, it were launched next decade: as with Hubble and JWST, a period of overlap with JWST would provide great synergy through the combined light-gathering capacity of the two telescopes, allowing greater spectroscopic characterisation of interesting targets provided by missions like TESS. The JWST workload could also be relieved somewhat, critically extending its active lifespan. This is supposition only at this point. I don’t think NASA are sure what to do with the telescope, though Probe funding could offer a way of using it without diverting additional funds from elsewhere.

When all is said and done, the deciding factors are likely to be JWST and the evidence collected by exoplanet Probe missions. JWST is five years overdue, five billion dollars over budget and laden with 162 moving parts, yet it will be placed some 1.5 million km away, near the Sun-Earth L2 point. It has simply got to work, and work well, if there is to be any chance of other big space telescopes. Be nervous and cross your fingers when it launches in late 2018. Meantime, enjoy TESS and hopefully WFIRST and other Probe missions, which should be more than enough to keep everyone interested even without the arrival of the ground-based ELT reinforcements, whose high-dispersion spectroscopy, combined with their own coronagraphs, may also characterise habitable exoplanets. These planets, and the success of the technology that finds them, will be key to the development of the next big space telescope, if there is to be one.

Capturing public interest will be central to this, and we have seen just how much space science missions can achieve in this regard with the recent high-profile successes of Rosetta and New Horizons. With ongoing innovation and the exoplanet missions of the next decade, this could usher in a golden era of exoplanet science. A final, often forgotten facet of space telescopes, central to HDST use, is observing solar system bodies from Mars out to the Kuiper belt. Given the success of New Horizons, it wouldn’t be a surprise to see a similar future flyby of Uranus, but it gives some idea of the sheer potency of an HDST that it could resolve features as small as 300 km. It could clearly image the icy “plumes” from Europa and Enceladus, especially in the UV, where the shorter wavelength gives its best resolving power, which illustrates the need for an ultraviolet capability on the telescope.
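The diffraction limit, projected onto the distance of a solar system target, gives a feel for the smallest features such a telescope could see. This sketch assumes a 12m aperture, ideal 1.22 λ/D resolution and rough Earth-target distances near opposition; the target list and distances are illustrative assumptions.

```python
AU_KM = 149_597_871  # one astronomical unit in km


def smallest_feature_km(wavelength_nm: float, aperture_m: float, distance_au: float) -> float:
    """Projected size of the 1.22 * lambda / D diffraction limit at the target's distance."""
    theta_rad = 1.22 * wavelength_nm * 1e-9 / aperture_m
    return theta_rad * distance_au * AU_KM


# Rough Earth-target distances near opposition, in AU (assumed round values).
targets = {"Jupiter/Europa": 4.2, "Saturn/Enceladus": 8.5, "Uranus": 18.2, "Kuiper belt": 40.0}
for name, distance_au in targets.items():
    uv = smallest_feature_km(200, 12.0, distance_au)
    visible = smallest_feature_km(550, 12.0, distance_au)
    print(f"{name:17s} UV {uv:5.0f} km, visible {visible:5.0f} km")
```

On these assumptions visible-light resolution is a few tens of kilometres at Jupiter and roughly 300 km in the Kuiper belt, and moving to the UV shrinks every figure by nearly a factor of three.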

By 2030 we are likely to know several tens of thousands of exoplanets, many characterised and even imaged, and who knows, maybe some exciting hints of biosignatures warranting the kind of detailed examination only a large space telescope can deliver.

Plenty to keep Centauri Dreams going for sure and maybe realise our position in the Universe.

——-

Further reading

Dalcanton, Seager et al., “From Cosmic Birth to Living Earths: The Future of UVOIR Space Astronomy.”

Swain, Redfield et al., “HABX2: A 2020 mission concept for a flagship at modest cost.” A white paper response to the Cosmic Origins Program Analysis Group call for Decadal 2020 Science and Mission concepts.

I have puzzled long over the question: what motivation can be offered to politicians to spend that kind of money on something that does not directly and quickly garner large blocs of votes? I cannot imagine any. Thus the work outlined in this article will be done by more centralized governments. China comes to mind.

In 40 hours, Earth moves about 300 times its own diameter. We need to know the host star’s distance, mass, the inclination of the planetary orbits, etc. in order to piece together the image of its planets.

Is this how it works?
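The figure of roughly 300 Earth diameters in 40 hours is easy to check with round numbers for Earth’s mean orbital speed (about 29.8 km/s) and diameter (about 12,742 km); both values are assumed here.

```python
EARTH_ORBITAL_SPEED_KM_S = 29.78  # mean orbital speed (assumed round value)
EARTH_DIAMETER_KM = 12_742        # mean diameter (assumed round value)


def diameters_travelled(hours: float) -> float:
    """How many Earth diameters Earth moves along its orbit in the given time."""
    distance_km = EARTH_ORBITAL_SPEED_KM_S * hours * 3600
    return distance_km / EARTH_DIAMETER_KM


print(f"{diameters_travelled(40):.0f} Earth diameters in 40 hours")
```

This gives a little over 330 diameters, consistent with the rough figure of 300.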

Two questions:

1. If we gain experience with managing two craft, a telescope and a star-shade, will this open up the possibility of using interferometer-linked telescopes to increase the effective diameter and resolution?

2. Is it possible to build automated craft to refill the liquid helium to extend the life of such telescopes? Alternatively, would it make any sense to put the spacecraft in Earth’s shadow, or even farther out in the solar system, to keep the telescope cool with less helium?

The Sun-Earth L2 is about 1,500,000 km from Earth. At L2 the central and orbiting bodies both pull in the same direction, so they don’t cancel each other out. At SEL2 the combined Sun and Earth gravity cancels out inertia in a rotating frame, what we used to call centrifugal force.
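The 1.5 million km figure can be checked with the standard first-order approximation for the distance of L1 or L2 from the smaller body, r ≈ a(m/3M)^(1/3), using assumed round values for the masses and Earth’s orbital radius (the Moon’s mass is ignored):

```python
AU_KM = 149_597_871  # Earth's mean orbital radius, taken as 1 AU
M_SUN_KG = 1.989e30
M_EARTH_KG = 5.972e24


def l2_distance_km(a_km: float, m_planet_kg: float, m_star_kg: float) -> float:
    """First-order estimate of the L1/L2 distance from the smaller body: a * (m / 3M)^(1/3)."""
    return a_km * (m_planet_kg / (3 * m_star_kg)) ** (1 / 3)


print(f"Sun-Earth L2 is roughly {l2_distance_km(AU_KM, M_EARTH_KG, M_SUN_KG):,.0f} km from Earth")
```

This gives about 1.5 million km, which is why JWST’s orbit around L2 is often described as roughly a million miles from Earth.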

@Ashley Baldwin

Thanks for a very informative article on the future of space telescopes; there is much to look forward to.

I am surprised that no binocular scopes have been proposed; although they are more complex to build and deploy, they have the advantage of greatly increasing resolution.

Thanks for the excellent overview.

May I ask what concern an element within NASA has about the reaction to the discovery of Earth-like planets?

Thanks

Great article.

Good catch Hop. I too was scratching my head over that SEL-2 comment, but just kept reading. Thanks for clearing it up.

Great read! A few thoughts and questions.

Could we use objects in our own solar system as a starshade, such as planets and asteroids?

I also remember reading that a mirror needs to be at least 18m in diameter to image an Earth-sized exoplanet. Is that no longer required thanks to improvements in the sensors?

Has there been any thought of building a large-aperture telescope on the Moon? It would provide a more stable surface, allowing better precision, without the risk of losing its reaction wheels.
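There is no single minimum mirror size for imaging an Earth-sized planet; it depends on how far away the target is, the wavelength, and how close to the star a coronagraph can work, usually quoted as a few λ/D. The sketch below assumes an Earth twin 1 AU from a star 10 parsecs away and inner working angles of 2 to 4 λ/D; these choices are illustrative, not any specific mission’s requirements.

```python
import math

MAS_PER_RAD = 180 / math.pi * 3600 * 1000  # milliarcseconds per radian


def planet_separation_mas(orbit_au: float, distance_pc: float) -> float:
    """Angular star-planet separation: 1 AU at 1 pc subtends 1000 mas."""
    return orbit_au / distance_pc * 1000


def min_aperture_m(separation_mas: float, wavelength_nm: float, iwa_lambda_over_d: float) -> float:
    """Aperture needed so a coronagraph with IWA = n * lambda / D reaches the given separation."""
    separation_rad = separation_mas / MAS_PER_RAD
    return iwa_lambda_over_d * wavelength_nm * 1e-9 / separation_rad


sep = planet_separation_mas(1.0, 10.0)  # assumed Earth twin at 10 pc: 100 mas
for wavelength_nm in (550, 1000):
    for n in (2, 3, 4):
        d = min_aperture_m(sep, wavelength_nm, n)
        print(f"{wavelength_nm} nm, IWA {n} lambda/D: D >= {d:.1f} m")
```

On these assumptions the answer ranges from about 2 m to about 8 m; closer targets or smaller inner working angles relax it, while more distant stars and longer wavelengths push it up. Collecting enough planet photons is a separate constraint that also favours larger mirrors.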

As an add-on to Alex’s questions:

1) At some point it might make sense to give each mirror segment its own propulsion and navigation unit and fly them in formation with the detectors. This would permit swapping out of defective mirror segments, dynamic extension of telescope size, and swapping out of detectors for upgrades and changes in mission focus.

2) Could the passive cooling not be extended to the entire telescope by the deployment of a sunshade? This should allow the telescope to remain cool indefinitely, without coolant, power, or vibrations. Equilibrium temperature in the shade should be no more than a handful of Kelvin, or not? A sunshade would be a very thin metal foil just large enough to shade the craft, and could be very lightweight.

Some interesting facts about the JWST

http://webbtelescope.org/webb_telescope/technology_at_the_extremes/quick_facts.php

And webcam views

http://www.jwst.nasa.gov/

Great in-depth analysis. Only one thing I personally miss: what about in-orbit assembly of structures too big or too heavy to launch in one piece?

The ISS will not be there for ever. It would be a tragedy if it were given up just a few years before it could enable the assembly of THE giant telescope that we hardly dare to dream about anymore. The ISS is still the single biggest investment ever made in space, and it was built to assemble things in space. Perhaps it is worth the effort to install ion motors, boost it to a long-term stable orbit, and find a way to “wrap it in plastic” (or fill it with nitrogen gas) as they do with old aircraft carriers?

To Harold Daughety, we motivate politicians to spend on space telescopes by spreading the spending among as many states as possible. The votes will follow. This works for military projects, even though they are much more expensive, and often don’t work or are strategically unnecessary. We can use the same technique in a better cause.

Thanks for this engrossing article and for the links.

I remember feeling foolish for arguing with my high school physics mentor (a truly life-changing teacher) about building giant telescopes to see extrasolar planets… He correctly explained the limits of resolution of visible light.

How I wish we could read this article together. I recall your words, “Always move forward. Never be satisfied.”…Too right Mr. J!

Eniac, a sunshade would probably be multi-layer insulation (MLI). A single layer would reflect sunlight but also pass along a good amount of infra-red to the telescope. A few layers would greatly reduce infrared to the scope.

SEL2 is a great spot for a shade. The Earth, Sun and Moon will all be in the same region of the sky, so the shade could subtend a small solid angle, maybe as little as pi steradians.

That way the telescope could enjoy 3 pi steradians of open sky that’s mostly at 3 kelvin. (2 pi steradians is a hemisphere; the whole sky would be 4 pi steradians.)

That’s going to require quite a large light bucket; It’s not enough to have an aperture large enough for diffraction limited imaging. You also need enough photons to land in it in a reasonable amount of time.

Photons from exoplanet features are going to be arriving at a pretty low rate.
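A rough photon budget shows just how low the rate is. The sketch assumes an Earth twin at 10 pc with a planet/star contrast of 1e-10, a V = 0 reference flux of about 1000 photons/s/cm²/Å near 550 nm, a 1000 Å bandpass and a total throughput of 10%; all of these are round assumptions rather than any mission’s specifications.

```python
import math

# Assumed round numbers for a rough estimate:
V0_PHOTON_FLUX = 1000.0     # photons / s / cm^2 / Angstrom from a V = 0 star near 550 nm
SUN_ABS_MAG_V = 4.83        # Sun's absolute V magnitude (its apparent magnitude at 10 pc)
EARTH_SUN_CONTRAST = 1e-10  # reflected-light Earth/Sun flux ratio in the visible


def apparent_mag(abs_mag: float, distance_pc: float) -> float:
    """Distance modulus: m = M + 5 log10(d / 10 pc)."""
    return abs_mag + 5 * math.log10(distance_pc / 10)


def photon_rate(mag_v: float, aperture_m: float, bandwidth_a: float, throughput: float) -> float:
    """Detected photons per second for an unobstructed circular aperture."""
    area_cm2 = math.pi * (aperture_m * 100 / 2) ** 2
    return V0_PHOTON_FLUX * 10 ** (-0.4 * mag_v) * area_cm2 * bandwidth_a * throughput


# Planet magnitude = star magnitude plus the magnitude difference implied by the contrast.
planet_mag = apparent_mag(SUN_ABS_MAG_V, 10) - 2.5 * math.log10(EARTH_SUN_CONTRAST)
for aperture in (2.4, 4.0, 12.0):
    rate = photon_rate(planet_mag, aperture, bandwidth_a=1000, throughput=0.1)
    print(f"{aperture:4.1f} m: {rate:.3f} photons/s, ~{rate * 3600:.0f} per hour")
```

Even a 12m mirror collects only a few hundred planet photons per hour on these assumptions, and a 2.4m mirror about 25 times fewer, before any spectrum is spread across many wavelength channels.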

Dr. Baldwin, thank you for another in-depth and insightful article on the state of NASA’s exoplanet missions, including WFIRST! I’m always glad to see this kind of public discourse on the missions we build to bring discoveries to all.

I would like to comment, though, on the statement that “There has even been a recent suggestion that exoplanet science or at least the coronagraph actually drives the WFIRST mission.” We have continued to define WFIRST in terms of its full complement of primary mission objectives: dark energy, infrared surveys, exoplanet census, exoplanet coronagraphy, and guest observer science (that last piece being the mode in which Hubble typically is used). Some aspects of the coronagraph influence the mission’s design, but in truth only a small part.

The decision to consider the Sun-Earth L2 as a baseline for WFIRST is motivated by the improvement to each of the science cases above enabled by that location. Being suitable for future star-shade use is a beneficial side effect, and the Science Definition Team for Exo-S is in the process of working out what would need to be incorporated into WFIRST to make it star-shade-ready. NASA hasn’t made a firm commitment to WFIRST yet (although it is being studied as the presumed Decadal Survey mission from 2010, to be launched in the 2020s), and certainly has made no commitment to make it star-shade-ready. Any star-shade mission — and indeed all the other concepts of “probe” or “flagship” scale you mention — will await the 2020 Decadal Survey in order to prioritize what goes forward. A star-shade for WFIRST, if given a high ranking, would likely follow WFIRST to L2 several years later, perhaps after the other surveys were already complete. Under the assumption of a flat budget for NASA Astrophysics, significant funding for a new mission won’t be available until 2025.

I would also like to address the statement that WFIRST “will locate thousands of planets through conventional transition photometry and micro-lensing as well as astrometry”. The centerpiece of WFIRST’s exoplanet science is the microlensing survey, which will detect thousands of planets in a census to complete the work started by Kepler. The microlensing technique will be significantly more sensitive to planets in the habitable zone (roughly Earth-Mars orbits), and can detect planets reliably down to masses only a few times that of the Moon. We will therefore have a substantially more complete picture of what exoplanetary systems are like. A comparatively minor aspect of the exoplanet census science is anticipated to come from transit photometry or astrometry. The coronagraph is envisioned as an experimental instrument for technology demonstration to give us an early sense of what can be achieved with very high contrast imaging and spectroscopy of exoplanets.

Dominic Benford

WFIRST Program Scientist, NASA
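Microlensing is most sensitive to planets whose projected separation from the lens star is close to the star’s Einstein radius. A sketch using the standard point-lens formula θ_E = sqrt(4GM/c² × (D_S − D_L)/(D_L D_S)) and assumed typical Galactic-bulge values (a 0.3 solar-mass lens 4 kpc away and a source star 8 kpc away):

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8          # speed of light, m / s
M_SUN_KG = 1.989e30
PC_M = 3.086e16      # metres per parsec
AU_M = 1.496e11      # metres per AU
MAS_PER_RAD = 180 / math.pi * 3600 * 1000


def einstein_radius(lens_mass_msun: float, lens_dist_pc: float, source_dist_pc: float):
    """Return (angular Einstein radius in mas, physical radius in the lens plane in AU)."""
    d_l = lens_dist_pc * PC_M
    d_s = source_dist_pc * PC_M
    theta = math.sqrt(4 * G * lens_mass_msun * M_SUN_KG / C ** 2 * (d_s - d_l) / (d_l * d_s))
    return theta * MAS_PER_RAD, theta * d_l / AU_M


theta_mas, r_au = einstein_radius(0.3, 4_000, 8_000)  # assumed bulge-lensing geometry
print(f"Einstein radius ~{theta_mas:.2f} mas, ~{r_au:.1f} AU at the lens")
```

The result, about half a milliarcsecond or roughly 2 AU at the lens, is why the technique probes planets at roughly Earth-to-Jupiter distances from their stars.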

I’d also like to take this opportunity to address Alex’s questions.

1. “…will [the star-shade] open up the possibility of using interferometer linked telescopes…?”

It’s a useful step, but free-flying interferometers require something approaching a million times more precise positional knowledge/control of the optical elements to combine light correctly. Blocking light is a relatively coarse and therefore more tractable formation-flying challenge.

2a. “Is it possible to build automated craft to refill the liquid helium to extend the life of such telescopes?”

Yes, in theory, although our telescopes haven’t thus far been designed for this. Transferring liquid helium in space was demonstrated by an experiment in the Space Shuttle (the Superfluid Helium On-Orbit Transfer experiment), which successfully moved the cryogen around in the zero-gravity, vacuum environment.

2b. “Would it make any sense to put the spacecraft in Earth’s shadow, or even farther out in the solar system, to keep the telescope cool with less helium?”

A spacecraft can’t easily operate in Earth’s shadow: there is no stable position fully shadowed, and if you were there, no solar power would be available. For cold missions, in Low-Earth Orbit we often use a polar sun-synchronous orbit that keeps the Sun always on one side, the Earth always below the telescope, and the telescope itself pointed toward cold space. This is the most benign condition and has been used several times, including on the last infrared survey mission I worked on (WISE). To go farther out, the least fuel is used in an Earth-trailing (driftaway) orbit, which remains about 1AU from the Sun but slowly slides back along our path. Spitzer, another of our infrared missions, is in such an orbit. The L2 orbit to be used by Webb is another great place for a cold, thermally stable environment, but we don’t actually go _to_ L2, which is an unstable place, but rather perform some elaborate dance to remain near it.

Finally, to address one of Andrew’s questions:

“Could we use objects in our own solar system as a starshade, such as planets and asteroids?”

Yes, this is done. It’s referred to as occultation, and is how astronomers discovered the rings of Uranus and studied the atmosphere of Pluto, among other things. However, occultations are relatively rare and brief things, and I could well imagine that an event that would permit exoplanet spectroscopy can never happen.

A fascinating and thorough read, I would like to express my thanks to the author for this article.

Personally I am also interested in future developments in membrane telescopes and the DARPA MOIRE project, as well as the recently proposed study of a rainbow space telescope.

http://phys.org/news/2014-04-telescope-tech-membrane-optics-phase.html

http://www.space.com/29677-floating-cloud-space-telescope-glitter-tech.html

Thanks for all the comments. I should add that direct imaging is one of THE great human technological achievements. Just thinking how far away these tiny, non-luminous planets are, to see and characterise them at all is phenomenal. We really do stand at the edge of greatness, as technology that was inconceivable even 20 years ago is being proposed and used. Astronomy is littered with unexpected discoveries and exoplanet science is no exception; despite all the great simulation and observation work so far, stunning findings will no doubt follow as these large space and ground telescopes come online.

Special thanks to Dr Benford for taking the time to reply. A great honour. The coronagraph driving the mission was cited in an exoplanet presentation at JPL in May, as much as a question as a statement, but the very fact that such a comment should slip into the public domain is amazing given the uncertainty surrounding coronagraphs before WFIRST, which has driven the technology on enormously and for which I am certainly very grateful. AURA described coronagraphs as the “work horse” of exoplanet science, but they too are enormously difficult to construct to high precision, with stability and wavefront control absolutely critical and one of the biggest obstacles to overcome, hopefully through the WFIRST technology demonstration. The complex entrance pupil at the centre of the NRO mirror assembly is in many respects like that of a segmented mirror, so this will be of additional assistance for any future HDST if it is segmented.

It might be worth remembering that, thanks to the GMT, the University of Arizona’s Steward Mirror Lab can now construct space-worthy off-axis 8.4 m mirrors at very reasonable cost, with the light-gathering capacity of a 9.2 m on-axis mirror, which is not far short of the AURA 10-12 m proposal. Coronagraphs are much easier to construct for monolithic mirrors, especially if unobstructed.

I think the Large Binocular Telescope will be a one-off. It has incredible viewing quality, up to 90% of the diffraction limit in the near infrared, but its complexity and novel technology often lead to “down time” while glitches are fixed. Compared with Hubble or the VLT, its publication output (a measure of telescope activity) is small. That said, it offers the equivalent of a 12m mirror, and up to 22m as an interferometer, which I think is its best and most important role, as in the long term interferometry will come to play a big part in optical imaging, as it has in radio astronomy. Given the much greater light-gathering capacity of the ELTs, though, I don’t think there will be a drive to build more LBTs.

Meantime it has already played an important role in exoplanet imaging by conducting a survey of “zodiacal light” in nearby star systems that could be imaged by future telescopes. This light is caused by ground-up comet and asteroid dust and could severely impede exoplanet imaging, as it can mimic planets; given the premium on any exoplanet imaging telescope’s time, ruling out stars surrounded by high levels of zodiacal light will be very helpful. To date the solar system seems to have a low level of this light compared with other systems, and its level is defined as one “zodi”. Levels up to several hundred times higher have been found by the LBT in some younger star systems. The “New Worlds Observer” article on arXiv led by Margaret Turnbull is a very educational and easy read on exoplanet imaging, with an excellent graph illustrating how distance and zodiacal light affect discovery and characterisation times for its 4m mirror. I recommend a read.
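The LBT’s “equivalent of a 12m mirror” comes from adding the collecting areas of its two 8.4m mirrors, while its interferometric resolution is set by how far apart the mirrors are. The sketch assumes an edge-to-edge baseline of 22.8 m and a near-infrared wavelength of 2.2 μm; the function names are illustrative.

```python
import math

MAS_PER_RAD = 180 / math.pi * 3600 * 1000  # milliarcseconds per radian


def equivalent_aperture_m(diameters_m):
    """Diameter of one filled mirror with the same total collecting area."""
    return math.sqrt(sum(d ** 2 for d in diameters_m))


def single_dish_limit_mas(wavelength_um: float, aperture_m: float) -> float:
    """Rayleigh criterion 1.22 * lambda / D for a filled circular aperture."""
    return 1.22 * wavelength_um * 1e-6 / aperture_m * MAS_PER_RAD


def fringe_spacing_mas(wavelength_um: float, baseline_m: float) -> float:
    """Interferometric fringe scale lambda / B along the baseline direction."""
    return wavelength_um * 1e-6 / baseline_m * MAS_PER_RAD


LBT_MIRRORS_M = [8.4, 8.4]
LBT_BASELINE_M = 22.8  # assumed edge-to-edge separation of the two mirrors
K_BAND_UM = 2.2        # assumed near-infrared observing wavelength

d_eq = equivalent_aperture_m(LBT_MIRRORS_M)
print(f"Collecting area equivalent to a {d_eq:.1f} m mirror")
print(f"One 8.4 m mirror resolves about {single_dish_limit_mas(K_BAND_UM, 8.4):.0f} mas")
print(f"Interferometric fringes: about {fringe_spacing_mas(K_BAND_UM, LBT_BASELINE_M):.0f} mas")
```

The combined area matches a single mirror of about 11.9 m, and the interferometric fringes are roughly three times finer than what one 8.4m mirror can resolve on its own.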