Centauri Dreams

Imagining and Planning Interstellar Exploration

A Potential Martian Biosignature

I’ve long maintained that we’ll find compelling biosignatures on an exoplanet sooner than we’ll find them in our own Solar System. But I’d love to be proven wrong. The recent flurry of news over the interesting findings from the Perseverance rover on Mars is somewhat reminiscent of the Clinton-era enthusiasm for the Martian meteorite ALH84001. Now there are signs, as Alex Tolley explains below, that this new work will prove just as controversial. Biosignatures will likely be suggestive rather than definitive, but Mars is a place we can get to, as our rovers prove. Will Perseverance compel the sample return mission that may be necessary to make the definitive call on life?

by Alex Tolley

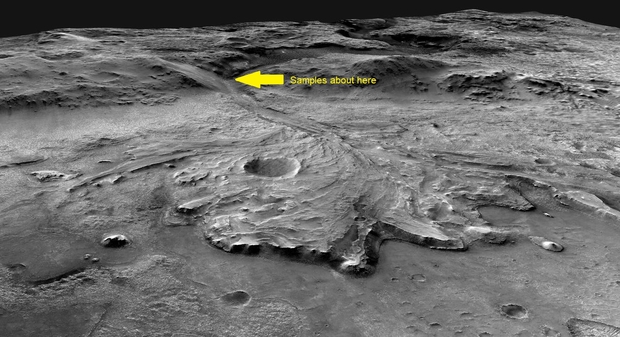

Overview of Jezero Crater and sample site in article. Credit NASA/MSSS/USGS.

On September 10, 2025, Nature published an article that got wide attention. The authors claimed that they had discovered a possible biosignature on Mars. If confirmed, they would have won the race to find the first extraterrestrial biosignature. Exciting!

One major advantage of detecting a biosignature in our system is that we can access samples and therefore glean far more information than we can using spectroscopic data from an exoplanet. This will also reduce the ambiguity of simpler atmospheric gas analyses that are all we can do with our telescopes at present.

Figure 1. Perseverance’s path through Neretva Vallis and views of the Bright Angel formation. a, Orbital context image with the rover traverse overlain in white. White line and arrows show the direction of the rover traverse from the southern contact between the Margin Unit and Neretva Vallis to the Bright Angel outcrop area and then to the Masonic Temple outcrop area. Labelled orange triangles show the locations of proximity science targets discussed in the text. b, Mastcam-Z 360° image mosaic looking at the contact between the light-toned Bright Angel Formation (foreground) and the topographically higher-standing Margin Unit from within the Neretva Vallis channel. This mosaic was collected on sol 1178 from the location of the Walhalla Glades target before abrasion. Upslope, about 110 m distant, the approximate location of the Beaver Falls workspace (containing the targets Cheyava Falls, Apollo Temple and Steamboat Mountain and the Sapphire Canyon sample) is shown. Downslope, about 50 m distant, the approximate location of the target Grapevine Canyon is also shown. In the distance, at the southern side of Neretva Vallis, the Masonic Temple outcrop area is just visible. Mastcam-Z enhanced colour RGB cylindrical projection mosaic from sol 1178, sequence IDs zcam09219 and zcam09220, acquired at 63-mm focal length. A flyover of this area is available at https://www.youtube.com/watch?v=5FAYABW-c_Q. Scale bars (white), 100 m (a), 50 m (b, top) and 50 cm (b, bottom left). Credit: NASA/JPL-Caltech/ASU/MSSS.

Let’s back up for context. The various rover missions to Mars have proceeded to determine the history of Mars. From the Pathfinder mission starting in 1996, the first mission since the two 1976 Viking landers, the various rovers from Sojourner (1997), Spirit & Opportunity (2004), Curiosity (2012), and now Perseverance (2021), have increased the scope of their travels and instrument capabilities. NASA’s Perseverance rover was designed to characterize environments and look for signs of life in Jezero Crater, a site that was expected to be a likely place for life to have existed during the early, wet phase of a young Mars. The crater was believed to be a lake, fed by water running into it from what is now Neretva Vallis, and signs of a delta where the ancient river fed into the crater lake are clear from the high-resolution orbital images. Perseverance has been taking a scenic tour of the crater, making stops at various points of interest and taking samples. If there were life on Mars, this site would have both flowing water and a lake, with sediments that create a variety of habitats suitable for prokaryotic life, like the contemporary Earth.

Perseverance had taken images and samples of a sedimentary rock formation, which they called Bright Angel. The work involved using the Scanning Habitable Environments with Raman and Luminescence for Organics and Chemicals (SHERLOC) instrument to obtain a Raman UV spectrum of rock material from several samples. The authors claimed that they had detected 2 reduced iron minerals, greigite and vivianite, and organic carbon. The claim is that these have been observed in alkaline environments on Earth due to bacteria, and therefore prove to be a biosignature of fossil life. The images showed spots (figure 2) which could possibly be the minerals formed by the metabolism of anaerobic bacteria, reducing sulfur and iron for energy. The organic carbon in the mudstone rock matrix is the fossil remains of the bacteria living in the sediments.

Exciting, no? Possible proof that life once existed on Mars. The authors submitted a paper to Nature with the title, “Detection of a Potential Biosignature by the Perseverance Rover on Mars”. The title was clearly meant to catch the scientific and popular attention. At last, NASA’s “Follow the Water” strategy and exploration with their last rover equipped to detect biosignatures had found evidence of fossil life on Mars. It might also be a welcome boost for NASA’s science missions, currently under funding pressure from Congress.

Then the peer review started, and the story seemed less strong. Much the same happened 30 years ago, when the White House announcement by US president Bill Clinton that a Martian meteorite retrieved from the Antarctic, ALH84001, held evidence of life on Mars proved very controversial. Notably, slices of that meteorite viewed under an electron microscope showed images of what might have been some forms of bacteria. These images were seen around the world and were much discussed. The consensus was that the evidence was not unambiguous, with even the apparent “fossil bacteria” being explained as natural mineral structures.

Well, the new paper created one of the longest peer review documents I have ever read. Every claimed measurement and analysis was questioned, including the interpretation. The result was that the paper was published as the much drier “Redox-driven mineral and organic associations in Jezero Crater, Mars”. There are just 3 uses of the term biosignatures, each prefaced with the term “potential”, and the null hypothesis of abiotic origin is emphasized as well. One of the three peer reviewers even wanted Nature to reject the paper, fearing what might be another ALH84001 fiasco. A demand too far.

What were the important potential biosignature findings?

Organisms extract energy from molecules via electron transfer. This often results in the compounds becoming more reduced. For example, sulfur-reducing bacteria convert sulfates (SO4) to sulfide (S). Iron may be reduced from its ferric (Fe3+) state to its ferrous (Fe2+) state. Two minerals that are often found reduced as a result of bacterial energy extraction are greigite [Fe2+Fe3+2S4] and vivianite [Fe2+3(PO4)2·8H2O]. On Earth, these are regarded as biosignatures. In addition, unidentified carbon compounds were associated with these 2 minerals. The minerals were noticed as spots on the outcrop and identified with the Planetary Instrument for X-ray Lithochemistry (PIXL), which can identify elements via X-ray spectroscopy. The SHERLOC instrument identified the presence of carbon in association with these minerals.

Figure 2. An image of the rock named “Cheyava Falls” in the “Bright Angel formation” in Jezero crater, Mars, collected by the WATSON camera onboard the Mars 2020 Perseverance rover. The image shows a rust-colored, organic matter in the sedimentary mudstone sandwiched between bright white layers of another composition. The small dark blue/green to black colored nodules and ring-shaped reaction fronts that have dark rims and bleached interiors are proposed to be potential biosignatures. Credit: NASA/JPL-Caltech/MSSS.

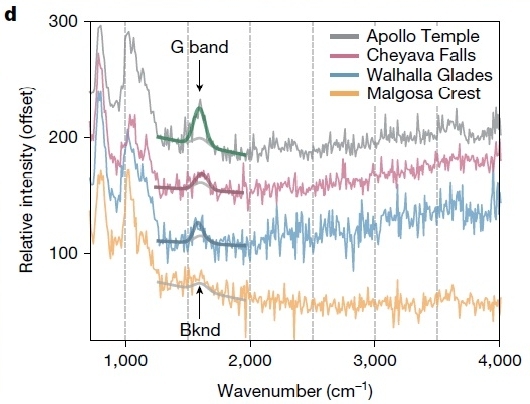

To determine whether the carbon associated with the greigite and vivianite was organic or inorganic, the material was subjected to ultraviolet rays. Organic carbon bonds, especially carbon-carbon bonds, will respond to specific wavelengths by vibrating, much as sound at the right frequency can resonate with and break a wine glass. Raman spectroscopy is the technique used to detect the resonant vibrations of types of carbon bonds, particularly specific arrangements of the atoms and their bonds that are common in organic carbon. The spectroscopic data indicated that the carbon material was organic, and therefore possibly from decayed organisms. This would tie together the findings of the carbon and the 2 minerals as a composite biosignature. However, the reviewers also questioned the interpretation of the Raman spectrum. The sp2 carbon bonds (120 degrees) seen in aromatic 6-carbon rings, in graphene, graphite, and commonly in biotic compounds, should show both a G-band (around 1600 cm-1) and a D-band (around 1350 cm-1), yet the spectrum only clearly showed the G-band. Did this imply that the organic carbon may not have been found? The reviewers also questioned why the biological explanation was favored over an abiotic one. No one questioned the greigite and vivianite findings, other than to note that these minerals are not exclusively associated with anaerobic bacterial metabolism.

Figure 3 – Raman spectrum with interpolated curves to highlight the G-band in the 4 samples taken at the location.
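To make the band positions above a little more concrete, here is a minimal sketch of how a Raman shift quoted in wavenumbers maps to the wavelength of the scattered light. It assumes a deep-UV excitation wavelength of 248.6 nm, the figure usually quoted for SHERLOC's laser but not stated in this article, and the function name is purely illustrative.

```python
def scattered_wavelength_nm(excitation_nm: float, raman_shift_cm1: float) -> float:
    """Wavelength of Stokes-scattered light for a given excitation and Raman shift."""
    excitation_wavenumber = 1e7 / excitation_nm          # convert nm to cm^-1
    scattered_wavenumber = excitation_wavenumber - raman_shift_cm1
    return 1e7 / scattered_wavenumber                    # convert cm^-1 back to nm


# Assumption: deep-UV excitation at 248.6 nm (not given in the article).
EXCITATION_NM = 248.6
G_BAND_CM1 = 1600.0  # approximate G-band position quoted in the text

g_band_nm = scattered_wavelength_nm(EXCITATION_NM, G_BAND_CM1)
print(f"G-band light appears near {g_band_nm:.1f} nm")
```

The point of the arithmetic is that the whole carbon fingerprint sits within roughly 10 nm of the laser line, which is part of why reading weak bands out of such a spectrum invites argument.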

So what to make of this? Clearly, the authors backed down on their more positive interpretation of their findings as a biosignature.

What analyses would we want to do on Earth?

Assuming the samples from Perseverance are eventually retrieved and returned to Earth, what further analysis would we want to do to increase our belief that a biosignature was discovered?

A key analysis would be to analyze the carbon deposits. The Raman UV spectra indicate that the carbon is organic, which is almost a given. You may recall that the private MorningStar mission to Venus will do a similar analysis but use laser-induced fluorescence, which detects aromatic rings [1]. Neither of these techniques can distinguish between abiotic and biogenic carbon. The carbon may even be in the form of common polycyclic aromatic hydrocarbons (PAH), a form that is ubiquitous and is easily formed, especially with heat.

One useful approach to distinguish the source of the carbon is to measure the isotopic ratios of the 2 stable carbon isotopes, carbon-12 and carbon-13. Living organisms favor the lighter carbon-12, and therefore, the C13/C12 ratio is reduced when the carbon is from living organisms. This must be compared to known abiotic carbon to confirm its source. This analysis requires a mass spectrometer, which was not included with the Perseverance instrument pack.
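Isotope ratios like this are normally reported in delta notation, the per-mil deviation of a sample's ratio from a reference standard. The sketch below shows that calculation. The reference ratio is an assumed, commonly cited value for the VPDB carbon standard, and the sample ratio is invented purely for illustration; neither comes from the paper.

```python
def delta_permil(r_sample: float, r_standard: float) -> float:
    """Delta value in per mil: relative deviation of a sample ratio from a standard."""
    return (r_sample / r_standard - 1.0) * 1000.0


# Assumption: a commonly cited 13C/12C ratio for the VPDB reference standard.
VPDB_13C_12C = 0.0112372

# Hypothetical sample depleted in carbon-13 by 2.5 percent relative to the standard.
sample_ratio = VPDB_13C_12C * 0.975

d13c = delta_permil(sample_ratio, VPDB_13C_12C)
print(f"delta-13C = {d13c:.1f} per mil")
```

A negative value means the sample is enriched in the lighter isotope. On Earth, biologically fixed organic carbon typically comes out a few tens of per mil negative, but some abiotic processes fractionate carbon too, which is why the comparison against known abiotic Martian carbon matters.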

The second approach is to analyze the carbon compounds. Gas chromatography followed by infrared spectroscopy is used to characterize the compounds. Life restricts the variety of compounds compared to random reactions, and can be compared to expectations based on Assembly Theory [2], although exposure to UV and particle radiation for billions of years may make the composition of the carbon more random.

Lastly, if the carbon were once protein or nucleotide macromolecules, any chirality might distinguish its source as biotic.

Isotopic analysis can also be made on the sulfur compounds in the greigite. As with carbon, life will preferentially use lighter isotopes. Bacteria reduce sulfate to sulfide for energy, and the iron sulfide mineral, greigite, is a waste product of this metabolism. Of the stable sulfur isotopes, the two most abundant are sulfur-32 and sulfur-34; if the S34/S32 ratio is reduced, then this hints that the greigite was formed biotically.

Finally, opening up the samples and inspecting them with an electron microscope, there may be physical signs of bacteria. However, any physical features will need to be identified unambiguously to avoid the ALH84001 controversy.

Unfortunately for these proposed analyses, the Mars Sample Return (MSR) mission has been cut with the much-reduced NASA budget. When, or whether, we get these samples for analyses on Earth is currently unknown.

My view on the findings

If the findings and the interpretation of their compositions are correct, then this would probably be the most convincing, but still not unambiguous, biosignature to date. If the samples are returned to Earth and the findings are extended with other analyses, then we probably would have detected fossil life on Mars. In my opinion, that would validate the idea that Martian life existed, and further exploration is warranted. We would then want to know if that life was similar or different from terrestrial life to shed light on abiogenesis or panspermia between Earth and Mars. As the formation of the Moon would have been very destructive, if life emerged on Mars and was spread to Earth, this might provide more time for living cells to evolve compared to the conditions on Earth. It would also stimulate the search for subsurface life on Mars, where interior heat and water between rock grains would support such a niche habitat as it does on Earth.

It seems a pity that without an MSR, we may have the evidence for Martian fossil life, packaged for analysis, but kept frustratingly remote and unavailable, mere millions of kilometers distant.

The paper is Hurowitz, J.A., Tice, M.M., Allwood, A.C. et al. “Redox-driven mineral and organic associations in Jezero Crater, Mars.” Nature 645, 332–340 (2025). https://doi.org/10.1038/s41586-025-09413-0

Other readings

[1] Tolley, A. (2022). “Venus Life Finder: Scooping Big Science.” Centauri Dreams. https://www.centauri-dreams.org/2022/06/03/venus-life-finder-scooping-big-science/

[2] Walker, S. I., Mathis, C., Marshall, S., & Cronin, L. (2024). “Experimentally measured assembly indices are required to determine the threshold for life.” Journal of the Royal Society Interface, 21(220). https://doi.org/10.1098/rsif.2024.0367

Exoplanets: Refining the Target List

I wasn’t surprised to learn that the number of confirmed exoplanets had finally topped 6,000, a fact recently announced by NASA. After all, new worlds keep being added to NASA’s Exoplanet Science Institute at Caltech on a steady basis, all of them fodder for a site like this. But I have to admit to being startled by the fact that fully 8,000 candidate planets are in queue. Remember that it usually takes a second detection method finding the candidate world for it to move into the confirmed ranks. That 8,000 figure shows how much the velocity of discovery continues to increase.

The common theme behind much of the research is often cited as the need to find out if we are alone in the universe. Thus NASA’s Dawn Gelino, head of the agency’s Exoplanet Exploration Program (ExEP) at JPL:

“Each of the different types of planets we discover gives us information about the conditions under which planets can form and, ultimately, how common planets like Earth might be, and where we should be looking for them. If we want to find out if we’re alone in the universe, all of this knowledge is essential.”

I sometimes think, though, that the emphasis on an Earth 2.0 is over-stated. The search for other life is fascinating, but deepening our scientific knowledge of the cosmos is worthwhile even if we learn we are alone in the galaxy. What nature creates in bewildering variety merits our curiosity and deep study even on barren worlds. With ESA’s Gaia and NASA’s Roman Space Telescope in the mix, exoplanet detections will escalate dramatically. And a little further down the road is the Habitable Worlds Observatory, assuming we have the good sense to green-light the project and build it.

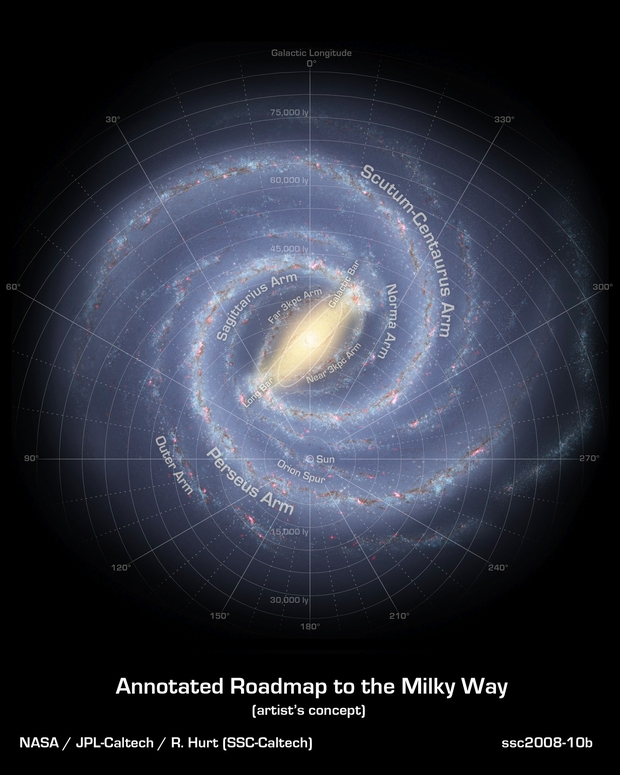

Bear in mind that almost all the known exoplanets are within a few thousand light years of Sol. We are truly awash in immensity. If there will ever be a complete catalog of the Milky Way’s planets, it will likely be from a Kardashev Type III civilization immensely older than ourselves. It’s fascinating to think that such a catalog might already exist somewhere. But it’s also fascinating to consider that we may be alone, which raises all kinds of questions about abiogenesis and the possible lifetime of civilizations.

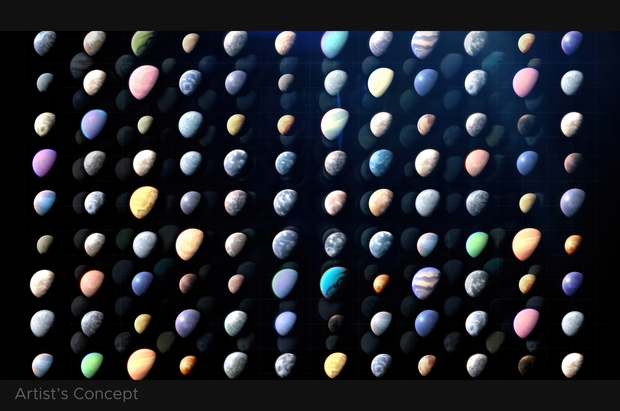

Image: Scientists have found thousands of exoplanets (planets outside our solar system) throughout the galaxy. Most can be studied only indirectly, but scientists know they vary widely, as depicted in this artist’s concept, from small, rocky worlds and gas giants to water-rich planets and those as hot as stars. Credit: NASA’s Goddard Space Flight Center.

The idea of a large and growing catalog of exoplanets is the kind of thing I used to dream about as a kid reading science fiction magazines. Now we’re on the cusp of biosignature detection capabilities via the deep study of exoplanet atmospheres. Fewer than a hundred exoplanets have been directly imaged, a number that is likewise expected to rise with the help of the Roman instrument’s coronagraph. With new tools to better block out the overwhelming glare of the host star, we’ll be seeing gas giants in Jupiter-like orbits. That in itself is interesting – how many exoplanet systems have gas giants in such positions? Thus far, the Solar System pattern is rarely replicated.

The Fortunes of K2-18b

The sheer variety of planetary systems brings even more zest to this work. Consider the planet K2-18b, so recently in the news as the home of a possible global ocean, and one with prospects for life given all the parameters studied by a team at the University of Cambridge. It’s a fabulous scenario, but now we have a new study that questions whether sub-Neptunes like this are actually dominated by water. Caroline Dorn (ETH Zurich), co-author of the paper appearing in The Astrophysical Journal, believes that water on sub-Neptunes is far more limited than we have been thinking.

Here’s another demonstration of how our Solar System is so unlike what we’re finding elsewhere. Lacking a sub-Neptune among our own planets, we’re learning now that such worlds – larger than Earth but smaller than Neptune and cloaked in a thick atmosphere abundant in hydrogen and helium – are relatively common in our galaxy as, for that matter, are higher density but smaller ‘super-Earths.’ A global ocean seems to make sense if a sub-Neptune formed well beyond the snowline and brought a robust inventory of ice with it as it migrated into the warmer inner system. Indeed, the term Hycean (pronounced HY-shun) has been proposed to label a sub-Neptune planet with a deep ocean under an atmosphere rich in hydrogen.

The new paper examines the chemical coupling between the planet’s atmosphere and interior, with the authors assuming an early stage of formation in which sub-Neptunes go through a period dominated by a magma ocean. The hydrogen atmosphere helps to maintain this phase for millions of years. And the problem is that magma oceans have implications for the water content available. Using computer simulations to model silicates and metals in the magma, the team studied the chemical interactions that ensue. Most H2O molecules are destroyed, with hydrogen and oxygen bonding into metallic compounds and disappearing deep into the planet’s interior.

From the paper:

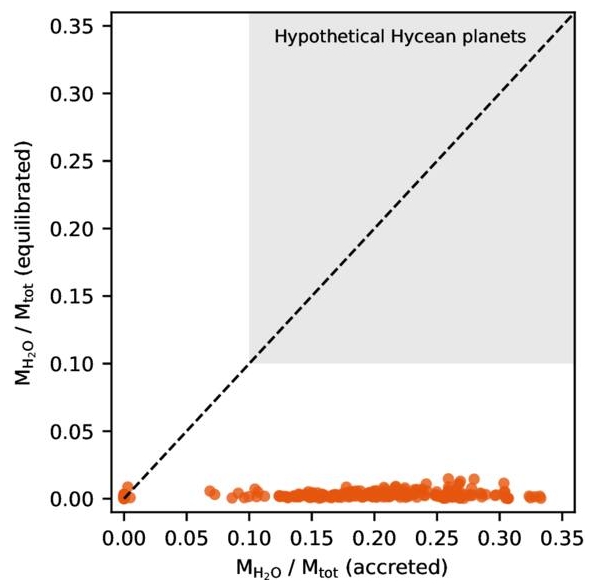

Our results, which focus on the initial (birth) population of sub-Neptunes with magma oceans, suggest that their water mass fractions are not primarily set by the accretion of icy pebbles during formation but by chemical equilibration between the primordial atmosphere and the molten interior. None of the planets in our model, regardless of their initial H2O content, retain more than 1.5 wt% water after chemical equilibration. This excludes the high water mass fractions (10–90 wt%) invoked by Hycean-world scenarios (N. Madhusudhan et al. 2021), even for planets that initially accreted up to 30% H2O by mass. These findings are consistent with recent studies suggesting that only a small amount of water can be produced or retained endogenously in sub-Neptunes and super-Earths.

The work analyzes 19 chemical reactions and 26 components across the range of metals, silicates and gases, with the core composed of both metal and silicate phases. A computer model known as New Generation Planetary Population Synthesis (NGPPS) combines planetary formation and evolution and meshes with code developed for global thermodynamics. Thus a population of sub-Neptunes with magma oceans is generated, and consistently primordial water is destroyed by chemical interactions.

Image: This is Figure 3 from the paper. Caption: Envelope H2O mass fraction as a function of semimajor axis… The left panel shows planets that predominantly formed inside the water ice line; the right panel shows those that formed outside. Classification is based on the accreted H2O mass fraction, with a threshold set at 5% of the total planetary mass. The colorbar indicates the molar bulk C/O ratio. Planets formed inside the ice line are systematically depleted in carbon due to the lack of volatile ice accretion and exhibit higher envelope H2O mass fractions. In contrast, planets formed beyond the ice line retain lower H2O content despite higher bulk volatile abundances. Each pie chart shows the mean mass fraction of hydrogen in H2 (gas), H (metal), H2 (silicate), H2O (gas), and H2O (silicate), normalized to the total mean hydrogen inventory for each population. Only components contributing more than 5% are labeled. Planets that formed beyond the ice line store most hydrogen as H2 (gas) and H (metal), while those that formed inside the ice line retain a larger share of hydrogen in H (metal), H2 (silicate), and H2O (gas + silicate). Credit: Werlen et al.

This is pretty stark reading if you’re fascinated with deep ocean scenarios. Here’s Dorn’s assessment:

“In the current study, we analysed how much water there is in total on these sub-Neptunes. According to the calculations, there are no distant worlds with massive layers of water where water makes up around 50 percent of the planet’s mass, as was previously thought. Hycean worlds with 10-90 percent water are therefore very unlikely.”

The paper is suggesting that we re-think what had seemed an obvious connection between planet formation beyond the snowline and water in the atmosphere. Instead, the interplay of magma ocean and atmosphere may deliver the verdict on the makeup of a planet. By this modeling, planets with atmospheres rich in water are more likely to have formed within the snowline. That leaves rocky worlds like Earth in the mix, but raises serious doubts about the viability of water in the sub-Neptune environment.

From the paper:

Counterintuitively, the planets with the most water-rich atmospheres are not those that accreted the most ice, but those that are depleted in hydrogen and carbon. These planets typically form inside the ice line and accrete less volatile-rich material. While some retain significant atmospheric H2O, the high-temperature miscibility of water and hydrogen likely prevents the presence of surface liquid water—even on these comparatively water-rich worlds.

This has broad implications for theories of planet formation and volatile evolution, as well as for interpreting exoplanet atmospheres in the era of the James Webb Space Telescope (JWST), the Extremely Large Telescope (ELT), ARIEL, the Habitable Worlds Observatory (HWO), and the Large Interferometer for Exoplanets (LIFE). It also informs atmospheric composition priors in interior characterization of transiting planets observed by Kepler, TESS, CHEOPS, and PLATO with radial velocity (RV) or transit timing variation (TTV) constraints.

K2-18b will obviously receive continued deep study. But with an aggregate 14,000 exoplanets confirmed or listed as candidates, consider how overwhelmed our instruments are by sheer numbers. To do a deep dive into any one world demands the shrewdest calculations to find which exoplanets are most likely to reward the telescope time. This aspect of target selection will only get more critical as we proceed.

The paper is Werlen et al., “Sub-Neptunes Are Drier than They Seem: Rethinking the Origins of Water-rich Worlds,” The Astrophysical Journal Letters Vol. 991, No. 1 (18 September 2025), L16 (full text).

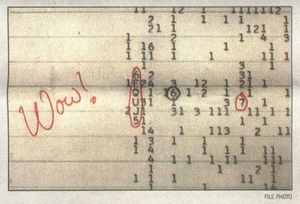

Beaming and Bandwidth: A New Note on the Wow! Signal

James Benford (president of Microwave Sciences, Lafayette CA) has just published a note in the Journal of the British Interplanetary Society that has relevance to our ongoing discussion of the Wow! Signal. My recent article was in the context of new work at Arecibo, where Abel Mendez and the Arecibo Wow! research effort have refined several parameters of the signal, detected in 1977 at Ohio State’s Big Ear Observatory. Let me slip in a quick look at Benford’s note before we move on from the Wow! Signal.

Benford has suggested both here and in other venues that the Wow! event can be explained as the result of an interstellar power beam intercepting our planet by sheer chance. Imagine if you will the kind of interstellar probe we’ve often discussed in these pages, one driven by a power beam to relativistic velocities. Just as our own high-powered radars scan the sky to detect nearby asteroids, a beam like this might sweep across a given planet and never recur in its sky.

But it’s quite interesting that in terms of the signal’s duration, bandwidth, frequency and power density, an interstellar power beam would be visible from another star system if this were to occur. All the observed features of such a beam are found in the Wow! Signal, which does not prove its nature, but suggests an explanation that corresponds with what data we have.

An interesting sidenote to this is, as Benford has discussed in these pages before, that if the Wow! Signal were an attempt to communicate, it should at some point repeat. Whereas a power beam from a source doing some kind of dedicated work in its system would never recur. We have had a number of attempts to find the Wow! Signal through the years but none have been successful.

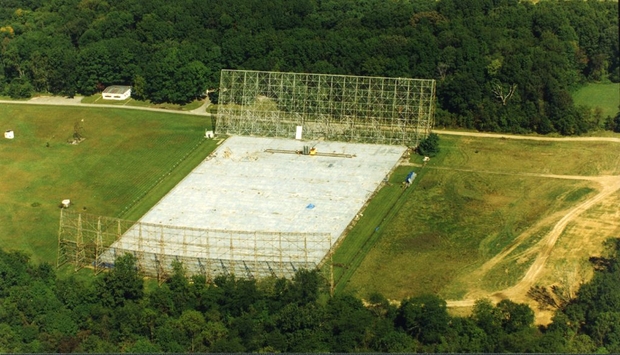

Image: The Ohio State University Radio Observatory in Delaware, Ohio, known as the Big Ear. Credit: By Иван Роква – Own work, CC BY-SA 4.0, via Wikimedia Commons.

The note in JBIS comes out of one of the Breakthrough Discuss meetings, where Michael Garrett (Jodrell Bank) asked Benford why, if a power beam explanation were the answer to the Wow!, a technical civilization would limit their beam to a narrow band of less than 10 kHz. It turns out there is an advantage in this, and that as Benford explains, narrow bandwidth is a requirement for high power-beaming systems in the first place.

Here I need to quote the text:

High power systems involving multiple sources are usually built using amplifiers, not oscillators, for several technical reasons. For example, the Breakthrough Starshot system concept has multiple laser amplifiers driven by a master oscillator, a so-called master oscillator-power amplifier (MOPA) configuration. Amplifiers are themselves characterized by the product of amplifier gain (power out divided by power in) and bandwidth, which is fixed for a given type of device, their ‘gain-bandwidth product.’ This product is due to phase and frequency desynchronization between the beam and electromagnetic field outside the frequency bandwidth.

Now we come to the crux. Power beaming to power up, say, an interstellar sail demands high power delivered to the target. To produce high power, each of the amplifiers involved must have small bandwidth, with the number of amplifiers used being determined by the power required. Benford puts it this way: “…you get narrow bandwidth by using very high-gain amplifiers to essentially ‘eat up’ the gain-bandwidth product.”

Thus we have a bandwidth limit for amplifiers, one that would apply both to beacons and power beams, which by their nature would be built to project high power levels. Small bandwidth is the physics-dictated result. None of this proves the nature of the Wow! Signal, but it offers an explanation that resonates with the fact that the four Wow! parameters are consistent with power beaming.
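The trade-off is easy to see with a toy calculation. If the gain-bandwidth product is fixed for a given device type, then the available bandwidth is simply that product divided by the gain. The numbers below are invented for illustration and do not describe any real amplifier or anything in Benford's note.

```python
def bandwidth_hz(gain_bandwidth_product_hz: float, gain: float) -> float:
    """Bandwidth left over once a fixed gain-bandwidth product is spent on gain."""
    return gain_bandwidth_product_hz / gain


# Hypothetical device: gain-bandwidth product of 1 GHz (illustrative only).
GBP_HZ = 1e9

# Gain is the linear power ratio (power out divided by power in).
for gain in (1e2, 1e3, 1e4, 1e5):
    print(f"gain {gain:>9,.0f} -> bandwidth {bandwidth_hz(GBP_HZ, gain):>12,.0f} Hz")
```

With these made-up figures, pushing the gain to 100,000 squeezes the bandwidth down to 10 kHz. The specific values mean nothing, but the inverse relationship is the point: the more gain each amplifier supplies, the narrower the band it can cover.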

SETI Odds and Ends

I’m catching up with a lot of papers in my backlog, prompted by a rereading yesterday of David Kipping’s 2022 paper on the Wow! Signal, the intriguing, one-off reception at the Big Ear radio telescope in Ohio back in 1977 (Kipping citation below). I had just finished checking Abel Mendez’ work at Arecibo, where the Arecibo Wow! project has announced a new analysis based on study of previously unpublished observations using updated signal analysis techniques. No huge surprises here, but both Kipping’s work and Arecibo Wow! are evidence of our continuing fascination with what Kipping calls “the most compelling candidate for an alien radio transmission we have ever received.”

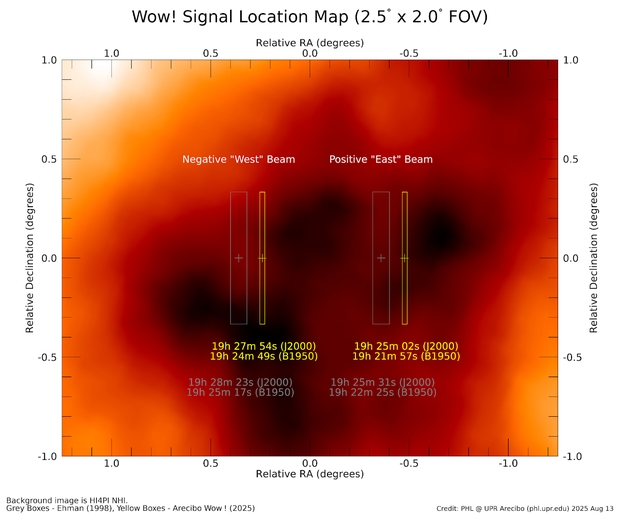

They also remind us that no matter how many times this intriguing event has been looked at, there are still new ways to approach it. I give the citation for the Mendez paper, written with a team of collaborators (one of whom is Kipping) below. Let me just pull this from Mendez’ statement on the Arecibo Wow! site, showing how the new work has refined the original Wow! Signal’s properties:

Location: Two adjacent sky fields, centered at right ascensions 19h 25m 02s ± 3s or 19h 27m 55s ± 3s, and declination –26° 57′ ± 20′ (J2000). This is both more precise and slightly shifted from earlier estimates.

Intensity: A peak flux density exceeding 250 Janskys, more than four times higher than the commonly cited value.

Frequency: 1420.726 MHz, placing it firmly in the hydrogen line but with a greater radial velocity than previously assumed.

Leading Mendez (University of Puerto Rico at Arecibo) to comment:

“Our results don’t solve the mystery of the Wow! Signal. But they give us the clearest picture yet of what it was and where it came from. This new precision allows us to target future observations more effectively than ever before…. This study doesn’t close the case. It reopens it, but now with a much sharper map in hand.”
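For readers wondering how a frequency turns into a radial velocity, here is a rough sketch using the simple non-relativistic Doppler formula against the rest frequency of the hydrogen line. It ignores any correction for the motion of the Earth or the choice of reference frame, so it should not be expected to reproduce the figure in the Mendez paper; the function and constant names are my own.

```python
C_KM_S = 299_792.458           # speed of light in km/s
HI_REST_MHZ = 1420.405751768   # rest frequency of the neutral hydrogen line


def radial_velocity_km_s(observed_mhz: float, rest_mhz: float = HI_REST_MHZ) -> float:
    """Non-relativistic Doppler velocity; positive means the source is receding."""
    return C_KM_S * (rest_mhz - observed_mhz) / rest_mhz


# Refined Wow! Signal frequency quoted above.
v = radial_velocity_km_s(1420.726)
print(f"Uncorrected radial velocity: {v:.1f} km/s")
```

The observed frequency is higher than the rest frequency, so under this convention the velocity comes out negative, at several tens of km/s, meaning the source (or whatever imposed the offset) was approaching.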

Image: Comparison of the previously estimated locations of the Wow! Signal (gray boxes) with the refined positions from the Arecibo Wow! Project (yellow boxes). The signal’s source is presumed to lie within one of these boxes and beyond the foreground Galactic hydrogen clouds shown in bright red. Credit: PHL @ UPR Arecibo.

Homing in on interesting anomalies is of course one way for SETI to proceed, although the host of later one-off detections from other locations (none evidently as powerful a signal as Wow!) doesn’t yield optimism that one of these will eventually repeat. A sweeping beam that by sheer chance swept across Earth from some kind of ETI installation? Works for me, if only we had repeating evidence. In the absence of it, we continue to dig into existing data using new techniques.

We can also proceed with targeted searches of nearby stars of interest both because of their proximity as well as the presence of unusual planetary configurations. The TRAPPIST-1 system isn’t remotely like ours, with its seven Earth-sized planets crammed into tight orbit around an M8V red dwarf star, but the fact that each of these transits makes the system of huge value. Now a team led by Guang-Yuan Song (Dezhou University, China) has used the FAST instrument (Five-hundred-meter Aperture Spherical Telescope) to search for SETI signals, delving into the frequency range 1.05–1.45 GHz with a spectral resolution of ~7.5 Hz. No signals detected, but the scientists plan to continue to search other nearby systems and do not rule out a return to this one. Citation below.
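To get a feel for the scale of such a search, a back-of-the-envelope count of frequency channels helps. This simply divides the quoted band by the quoted resolution and assumes uniform channel spacing across the whole band, which may not match how the team actually processed the data.

```python
def channel_count(low_hz: float, high_hz: float, resolution_hz: float) -> float:
    """Approximate number of frequency channels across a band at a given resolution."""
    return (high_hz - low_hz) / resolution_hz


# Band and resolution as quoted in the text; uniform spacing is an assumption.
n_channels = channel_count(1.05e9, 1.45e9, 7.5)
print(f"Roughly {n_channels / 1e6:.0f} million channels per spectrum")
```

That is tens of millions of channels for every spectrum recorded, each a place where a narrowband signal could be hiding.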

The Factors Leading to Technology

The frustration over lack of success at finding an extraterrestrial civilization is understandable, so it’s no surprise that theoretical work explaining it from an entirely opposite direction continues to appear. As witness a new study just presented at the EPSC-DPS Joint Meeting 2025 in Helsinki. Here we’re asking what factors go into making a technological society possible, assuming an evolutionary history something like our own, and probing whether changes to any of the parameters that helped us emerge would have made us impossible.

All this goes back to the 1960s and the original Drake Equation, which makes a loose attempt at sizing up the possibilities. Manuel Scherf and Helmut Lammer of the Space Research Institute at the Austrian Academy of Sciences in Graz paint a distressing picture for those intent on plucking a signal from ETI out of the ether. Their work has focused on plate tectonics and its relationship with the critical gas carbon dioxide, which governs the carbon-silicate cycle.

CO2 is a huge factor in sustaining photosynthesis, but too much of it creates greenhouse effects that can likewise spell the end of life on a planet like ours. The fine-tuning that goes on through the carbon-silicate cycle ensures that CO2 gets released back into the atmosphere through plate tectonics and volcanic emissions, recycling it back out of the rock in which it had been previously locked. Scherf pointed out to the EPSC-DPS gathering that if we wait somewhere between 200 million and one billion years, loss of atmospheric CO2 will bring an end to photosynthesis. The Sun may have another five billion years of life ahead, but the environment that sustains us won’t last nearly as long.

Indeed, the researchers argue, surface partial pressures and mixing ratios of CO2, O2, and N2 likewise affect such things as combustion, needed for the smelting of metals that underpins the growth of a technological civilization. Imagine a planet with 10 percent of its atmosphere taken up by CO2 (as opposed to the 0.042 percent now found on Earth). This world produces a biosphere that can sustain itself against a runaway greenhouse if further away from its star than we are from the Sun, but it would also require no less than 18 percent oxygen (Earth now has 21 percent) to ensure that combustion can occur.

If we do away with plate tectonics, so critical to the carbon-silicate cycle, we likewise limit habitable conditions at the surface. So we need this as well as enough oxygen to provide combustion to make technology possible. In other words, we have astrophysical, geophysical, and biochemical criteria that have to be met even when a planet is in the habitable zone if we are hoping to find lifeforms that have survived long enough to create technology. Rare Earth?

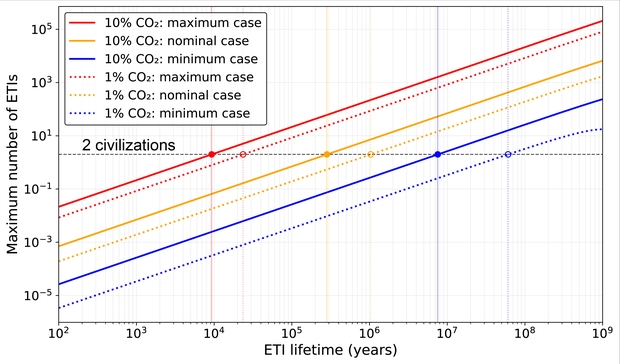

Scherf and Lammer weigh these factors against the amount of time it takes technology to emerge, assuming that the longer an ETI civilization exists, the more likely we are to observe it. Here I don’t have a paper to work with, so I can only report the conclusions presented at the EPSC-DPS conference, which are stated bluntly by Scherf:

“For 10 civilizations to exist at the same time as ours, the average lifetime must be above 10 million years. The numbers of ETIs are pretty low and depend strongly upon the lifetime of a civilization.”

I can also fall back on a 2024 paper from the same team discussing these matters, which delves not only into the question of the perhaps rare combination of circumstances which allows for technological civilizations to emerge but also our use of the Copernican Principle in framing the issues:

…our study is agnostic about life originating on hypothetical habitats other than EHs. Any more exotic habitats (e.g., subsurface ocean worlds) could significantly outnumber planets with Earthlike atmospheres, at least in principle. Finally, we argue that the Copernican Principle of Mediocrity cannot be valid in the sense of the Earth and consequently complex life being common in the Galaxy. Certain requirements must be met to allow for the existence of EHs and only a small fraction of planets indeed meet such criteria. It is therefore unscientific to deduce complex aerobic life to be common in the Universe, at least based on the Copernican Principle. Instead, we argue, at maximum, for a combined Anthropic-Copernican Principle stating that life as we know it may be common, as long as certain criteria are met to allow for its existence. Extremophiles, anaerobic and simple aerobic lifeforms, however, could be more common.

Image: An artist’s impression of our Milky Way Galaxy, showing the location of the Sun. Our Solar System is about 27,000 light years from the centre of the galaxy. The nearest technological species could be 33,000 light years away. Credit: NASA/JPL–Caltech/R. Hurt (SSC–Caltech).

All of which illuminates the paucity of data. We could say that it took four and a half billion years for technology to emerge on Earth, but that is our only reference point. We also have no data on how long technological societies exist. It is clear, though, that the longer the survival period, the more likely we are to be present in the cosmos at the same time they are. For there to be even one technological civilization in the galaxy coinciding with our existence, ETI would have to have survived in a technological phase for at least 280,000 years. I think that matches up with the case Brian Lacki has been making for some time now, which emphasizes the ‘windows’ of time within which we view the cosmos.

But Scherf adds this:

“Although ETIs might be rare there is only one way to really find out and that is by searching for it. If these searches find nothing, it makes our theory more likely, and if SETI does find something, then it will be one of the biggest scientific breakthroughs ever achieved as we would know that we are not alone in the Universe.”

Image: This graph shows the maximum number of ETIs presently existing in the Milky Way. The solid orange line describes the scenario of planets with nitrogen–oxygen atmospheres with 10 per cent carbon dioxide. In this case the average lifetime of a civilization must be at least 280,000 years for a second civilization to exist in the Milky Way. Changing the amount of atmospheric carbon dioxide produces different results. Credit: Manuel Scherf and Helmut Lammer.

I’ll note in passing that Adam Frank (University of Rochester) and Amedeo Balbi (University of Rome Tor Vergata) have analyzed the question of an ‘oxygen bottleneck’ for the emergence of technology in a recent paper in Nature Astronomy. The memorable thought that if there are no other civilizations in the galaxy, it’s a tremendous waste of space sounds reasonable only if we have fully worked out how likely any planet is to be habitable. This new direction of astrobiological research tells me we have a long way to go.

The Mendez paper is Mendez et al., “Arecibo Wow! II: Revised Properties of the Wow! Signal from Archival Ohio SETI Data,” currently available as a preprint but submitted to The Astrophysical Journal. The Kipping paper from 2022 is Kipping & Gray, “Could the ‘Wow’ signal have originated from a stochastic repeating beacon?” Monthly Notices of the Royal Astronomical Society, Volume 515, Issue 1 (September 2022), pp. 1122–1129 (full text). Thanks to my friend Antonio Tavani for the heads-up on the Mendez paper. The paper on the FAST search of TRAPPIST-1 is Guang-Yuan Song et al., “A Deep SETI Search for Technosignatures in the TRAPPIST-1 System with FAST,” submitted to The Astrophysical Journal and available as a preprint.

The Scherf and Lammer presentation is titled “How common are biological ETIs in the Galaxy?” EPSC-DPS Joint Meeting 2025, Helsinki, Finland, 7–12 Sep 2025, EPSC-DPS2025-1512. The abstract is available here. The same team’s 2024 paper on these matters is Scherf et al., “Eta-Earth Revisited II: Deriving a Maximum Number of Earth-Like Habitats in the Galactic Disk,” Astrobiology 24 (2024), e916 (full text). The paper from Adam Frank is Frank & Balbi, “The oxygen bottleneck for technospheres,” Nature Astronomy 8 (2024), pp. 39–43 (abstract / preprint).

And I want to be sure to mention Robert Gray, Kipping’s co-author on his 2022 paper, who devoted years to the study of the Wow! Signal and was kind enough to write about his quest in these pages. If you’re not familiar with Gray’s work, I hope you’ll read my An Appreciation of SETI’s Robert Gray (1948-2021). I only wish I had gotten to know him better. His death was a loss to the entire community. David Kipping’s fine video covering his work with Gray is available at Cool Worlds. Keith Cooper also explicates this paper in One Man’s Quest to Investigate the Mysterious “Wow!” Signal.

Stitching the Stars: Graphene’s Fractal Leap Toward a Space Elevator

The advantages of a space elevator have been percolating through the aerospace community for quite some time, particularly boosted by Arthur C. Clarke’s novel The Fountains of Paradise (1979). The challenge is to create the kind of material that could make such a structure possible. Today, long-time Centauri Dreams reader Adam Kiil tackles the question with his analysis of a new concept in producing graphene, one which could allow us to create the extraordinarily strong cables needed. Adam is a satellite image analyst located in Perth, Australia. While he has nursed a long-time interest in advanced materials and their applications, he also describes himself as a passionate advocate for space exploration and an amateur astronomer. Today he invites readers to imagine a new era of space travel enabled by technologies that literally reach from Earth to the sky.

by Adam Kiil

In the quiet predawn hours, a spider spins its web, threading together a marvel of biological engineering: strands that are lightweight, elastic, and capable of absorbing tremendous energy before failing. This isn’t just nature’s artistry; it’s a lesson in hierarchical design, where proteins self-assemble into beta-pleated sheets and amorphous regions, creating a material tougher than Kevlar — able to dissipate impacts like a shock absorber — while outperforming steel in strength-to-weight ratio, though falling short of Kevlar’s raw tensile strength.

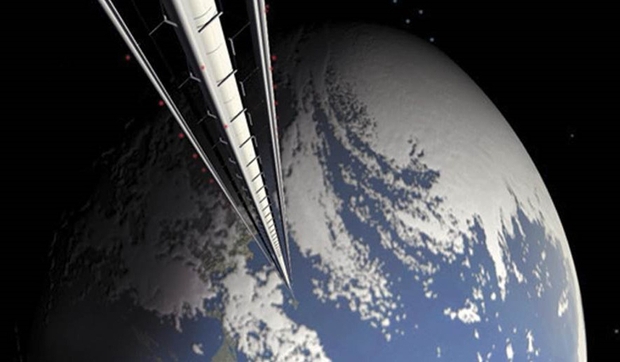

As we gaze upward toward the stars, dreaming of bridges to orbit, such bio-inspired ingenuity beckons. Could we mimic this to construct a space elevator tether, a ribbon stretching 100,000 kilometers from Earth’s equator to geostationary orbit and beyond? The demands are staggering: a material with a specific strength exceeding 50 GPa·cm³/g to support its own weight against gravity’s pull, all while withstanding radiation, micrometeorites, and immense tensile stresses. [GPa stands for gigapascals, a unit of pressure or stress used here to express tensile strength. GPa·cm³/g thus represents the ratio of strength to density.]

Image: A space elevator is a revolutionary transportation system designed to connect Earth’s surface to geostationary orbit and beyond, utilizing a strong, lightweight cable (potentially made of graphene because of its extraordinary tensile strength and low density) anchored to an equatorial base station and extending tens of thousands of kilometers to a counterweight in space. This megastructure would enable low-cost, efficient transport of payloads and people into orbit, leveraging a climber mechanism that ascends the cable, potentially transforming space access by reducing reliance on traditional rocket launches. Credit: Pat Rawlings/NASA.

Enter a recent breakthrough in graphene production from professor Chris Sorensen at Kansas State University and Vancouver-based HydroGraph Clean Power, whose detonation synthesis yields pristine, fractal, and reactive graphene — potentially a key ingredient in weaving this cosmic thread.

But this alone may not suffice; we must think from first principles, exploring uncharted solutions to assemble nanoscale wonders into macroscale might.

Graphene’s Promise and Perils: The Historical Context

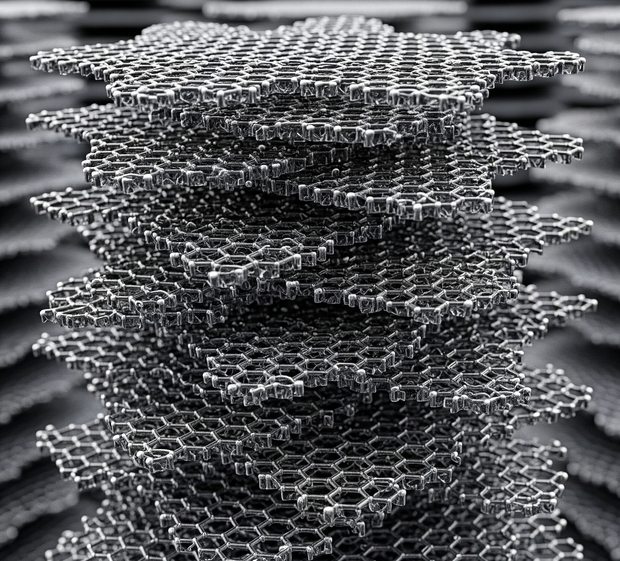

Graphene, that atomic-thin honeycomb of carbon, tantalizes with its theoretical tensile strength of 130 GPa and density of 2.2 g/cm³, yielding a specific strength around 59 GPa·cm³/g—right on the cusp of space elevator viability.
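The arithmetic behind that figure is simple enough to check directly. The sketch below uses only the numbers quoted in the text (130 GPa, 2.2 g/cm³, and the 50 GPa·cm³/g threshold); the function names are hypothetical, and the breaking length assumes a constant one-g field, which is a simplification since gravity weakens with altitude along a real tether.

```python
# Specific strength of ideal graphene versus the tether threshold quoted earlier.
# Assumption: breaking length is computed under a uniform 1 g field, which
# understates how far a real tether could hang as gravity falls off with height.

G0 = 9.80665  # standard gravity in m/s^2


def specific_strength(tensile_gpa, density_g_cm3):
    """Strength-to-density ratio in GPa·cm³/g."""
    return tensile_gpa / density_g_cm3


def breaking_length_km(specific_gpa_cm3_g, g=G0):
    """Length of uniform cable that could hang under its own weight, in km."""
    # 1 GPa·cm³/g = 1e9 Pa / 1e3 kg/m³ = 1e6 N·m/kg
    return specific_gpa_cm3_g * 1e6 / g / 1e3


graphene = specific_strength(130.0, 2.2)
margin = graphene / 50.0  # ratio to the 50 GPa·cm³/g requirement
length_km = breaking_length_km(graphene)

print(f"Specific strength: {graphene:.1f} GPa·cm³/g")
print(f"Margin over threshold: {margin:.2f}x")
print(f"Breaking length at 1 g: {length_km:.0f} km")
```

A margin of under 20 percent over the threshold is what "on the cusp" means in practice: any loss of strength in going from a perfect lattice to an assembled cable eats directly into it.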

Yet, production has long been the bottleneck. Chemical vapor deposition churns out high-quality but limited sheets; mechanical exfoliation delivers impure, aggregated flakes. These yield composites where graphene platelets, bound weakly by van der Waals forces (mere 0.1-1 GPa), slip under strain, like loose pages in a book. For a tether, we need seamless load transfer, hierarchical reinforcement, and defect minimization—echoing the energy-dissipating nanocrystals in spider silk’s protein matrix.

Sorensen’s Detonation Concept: Fractal and Reactive Graphene

Chris Sorensen’s innovation at HydroGraph Clean Power flips the script. Using a controlled detonation of acetylene and oxygen in a sealed chamber, his team produces graphene with over 99.8% purity, fractal morphology, and tunable reactivity—all at scale, with zero waste and low emissions.

The fractal form — branched, snowflake-like platelets with 200 m²/g surface area — enhances interlocking, outperforming traditional graphene by 10-100 times in composites, but crucially, these gains shine at ultra-low loadings (0.001%) and under modest stresses, not yet the gigapascal realms of a space elevator.

Reactive variants add edge functional groups like carboxylic acids (COOH), enabling covalent bonding. Note, however, that simple condensation reactions here yield strengths akin to polymer chains (1-5 GPa), not the in-plane prowess of graphene’s sp² lattice.

This fractal graphene could form a foundational scaffold, reconfigurable into aligned structures that mimic bone’s porosity or silk’s hierarchy. Earthly spin-offs abound: tougher concrete, sensitive sensors, efficient batteries. But for the stars, we must bridge the gap from nanoplatelets to kilometer-long cables.

Image: Conceptual view of HydroGraph’s turbostratic, 50 nm nanoplatelets of 99.8% pure carbon, sp²-bonded graphene. Credit: Adam Kiil.

From First Principles: Many Paths to a Cosmic Thread

To transcend these limits, let’s reason from fundamentals. A space elevator tether must maximize tensile strength while minimizing density and defects, distributing stress across scales like spider silk’s beta-sheets (crystalline strength) embedded in an extensible amorphous matrix.

Graphene’s strength derives from its delocalized electrons in a defect-free lattice; any assembly must preserve this while forging inter-platelet bonds rivaling intra-platelet ones. Current methods fall short, so here are myriad speculative solutions, drawn from physics, chemistry, and biology—some extant, others nascent or hypothetical, demanding innovation:

- Edge-Fusion via Plasma or Laser Annealing: Functionalize edges with hydrogen or halogens, then use plasma arcs or femtosecond lasers to fuse platelets into seamless, extended sheets or ribbons, healing defects to approach single-crystal continuity. This could yield tensile strengths nearing 100 GPa by eliminating weak interfaces.

- Supramolecular Self-Assembly in Liquid Crystals: Disperse fractal graphene in nematic solvents, applying shear or electric fields to align platelets into helical fibrils, stabilized by pi-pi stacking and hydrogen bonding. Inspired by silk’s pH-induced assembly, this bottom-up approach might create defect-tolerant bundles with built-in energy dissipation.

- Bio-Templating with Engineered Proteins: Design peptides (via AI like AlphaFold) that bind graphene edges, mimicking silk spidroins’ repetitive motifs to fold platelets into hierarchical nanocrystals. Extrude through microfluidic spinnerets, acidifying to trigger beta-sheet formation, embedding graphene in a tough, elastic matrix.

- Covalent Cross-Linking with Boron or Nitrogen Dopants: Introduce boron atoms during detonation to create sp³ bridges between platelets, forming diamond-like nodes in a graphene network. This could boost shear strength to 10-20 GPa without sacrificing tensile properties, verified by molecular dynamics.

- Electrospinning with Magnetic Alignment: Mix reactive graphene in a polymer dope, electrospin under magnetic fields to orient platelets, then pyrolyze the polymer, leaving aligned, sintered graphene fibers. Enhancements: Add ultrasonic waves for dynamic packing, targeting <1 defect per 100 nm².

- Hierarchical Bundling via 3D Printing: Nanoscale print graphene inks layer-by-layer, using click chemistry (e.g., thiol-ene) for instant cross-links. Scale up to micro-bundles, then macro-cables, tapering density like a tree trunk to root.

- Dynamic Compression and Sintering: Apply gigapascal pressures in a diamond anvil cell, combined with heat, to induce partial sp²-to-sp³ transitions at overlaps, creating hybrid structures akin to lonsdaleite—ultra-hard yet flexible.

- Biomineralization Analogs: Introduce calcium or silica ions to reactive groups, mineralizing interfaces like nacre, adding compressive strength and crack deflection.

- AI-Optimized Hybrid Composites: Simulate (via quantum computing) blends of fractal graphene with silk-mimetic polymers or boron nitride, optimizing ratios for 90% tensile efficiency. Fabricate via wet-spinning, testing at centimeter scales.

These aren’t exhaustive; hybrids abound, such as combining bio-templating with laser fusion. Each approach shares one aim: moving beyond low-load enhancements and polymer-like bonds to harness graphene’s full lattice strength.

Weaving and Laminating: Practical Steps Forward

Drawing from these, a viable process might start with a high-solids dispersion of reactive fractal graphene, extruded via wet-spinning into aligned fibers, where optimized cross-linkers (not mere condensations) ensure graphene-dominant strength. Stack into nacre-like laminates, using hot isostatic pressing (5-20 GPa) to forge sp³ bonds, elevating shear (and thus overall tensile) resilience to 10-20 GPa. Taper the structure: thick at the base for 7 GPa stresses, thinning upward.

Scaling leverages HydroGraph’s modular reactors, producing tonnage graphene for kilometer segments.

Join via overlap lamination, braid for redundancy, deploy from orbit. Prototypes must demonstrate cohesive failure and >90% load transfer, verified via nanoindentation.

A Bridge to the Cosmos

Sorensen’s detonation-born graphene, fractal and reactive, ignites possibility. Yet, as spider silk teaches, true mastery lies in hierarchy and adaptation.

Success means a tether with inter-platelet bond strength nearing single-crystal graphene (>100 GPa), verified by nanoindentation or pull-out tests, with >90% tensile transfer efficiency. Centimeter-scale prototypes should show minimal defects (<1 per 100 nm² via TEM), failing cohesively, not delaminating, like a spider’s web holding under a gale. The full tether, massing under 500 tonnes, could be deployed from orbit, a lifeline to the cosmos. This graphene tether embodies our ‘sea-longing,’ a bridge to the stars woven from carbon’s hexagons, inspired by nature’s spinners and builders.

By innovating from first principles—fusing, assembling, templating—we edge closer to stitching the stars. This isn’t mere materials science; it’s the warp and weft of humanity’s interstellar tapestry, a web to catch the dreams of Centauri and beyond.

3I/ATLAS: The Case for an Encounter

The science of interstellar objects is moving swiftly. Now that we have the third ‘interloper’ into our Solar System (3I/ATLAS), we can consider how many more such visitors we’re going to find with new instruments like the Vera Rubin Observatory, with its full-sky images from Cerro Pachón in Chile. As many as 10,000 interstellar objects may pass inside Neptune’s orbit in any given year, according to information from the Southwest Research Institute (SwRI).

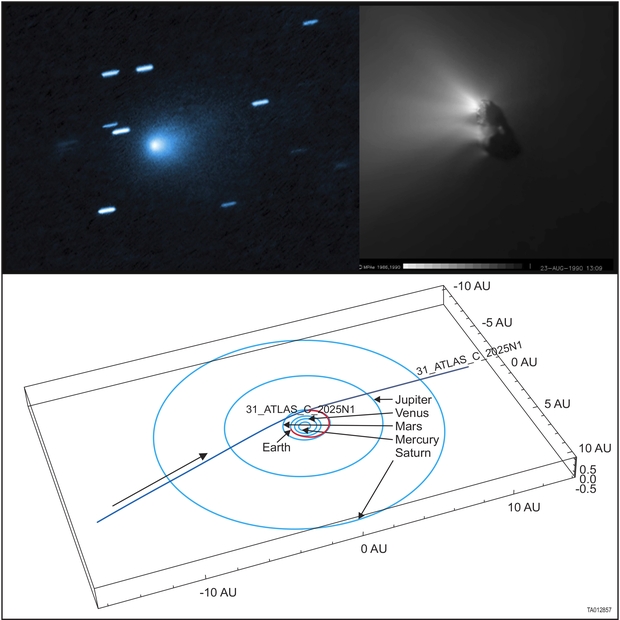

The Gemini South Observatory, likewise at Cerro Pachón, has used its Gemini Multi-Object Spectrograph (GMOS) to produce new images of 3I/ATLAS. The image below was captured during a public outreach session organized by the National Science Foundation’s NOIRLab and the Shadow the Scientists initiative that seeks to connect citizen scientists with high-end observatories.

Image: Astronomers and students working together through a unique educational initiative have obtained a striking new image of the growing tail of interstellar Comet 3I/ATLAS. The observations reveal a prominent tail and glowing coma from this celestial visitor, while also providing new scientific measurements of its colors and composition. Credit: Gemini Observatory/NSF NOIRLab.

Immediately obvious is the growing size of the coma, the cloud of dust and gas enveloping the nucleus as 3I/ATLAS moves closer to the Sun and continues to warm. Analyzing spectroscopic data will allow scientists to understand more about the object’s chemistry. So far we’re seeing cometary dust and ice not dissimilar to comets in our own system. We won’t have this object long, as its orbit is hyperbolic, taking it inside the orbit of Mars and then off again into interstellar space. Perihelion should occur at the end of October. It’s interesting to consider, as Marshall Eubanks and colleagues do in a new paper, whether we already have spacecraft that can learn something further about this particular visitor.

Note this from the paper (citation below):

Terrestrial observations from Earth will be difficult or impossible roughly from early October through the first week of November, 2025… [T]he observational burden during this period will, to the extent that they can observe, largely fall on the Psyche and Juice spacecraft and the armada of spacecraft on and orbiting Mars. Our recommendation is that attempts should be made to acquire imagery from encounter spacecraft during the entire period of the passage of 3I through the inner solar system, and in particular from the period in October and November of 2025, when observations from Earth and the space telescopes will be limited by 3I’s passage behind the Sun from those vantage points.

As we consider future interstellar encounters, flybys begin to look possible. Such was the conclusion of an internal research study performed at SwRI, which examined costs and design possibilities for a mission that may become a proposal to NASA. SwRI was working with software that could create a large number of simulated interstellar objects, while at the same time calculating a trajectory from Earth to each. Matthew Freeman is project manager for the study. It turns out that the new visitor is itself within the study’s purview:

“The trajectory of 3I/ATLAS is within the interceptable range of the mission we designed, and the scientific observations made during such a flyby would be groundbreaking. The proposed mission would be a high-speed, head-on flyby that would collect a large amount of valuable data and could also serve as a model for future missions to other ISCs [interstellar comets].”

Image: Upper left panel: Comet 3I/ATLAS as observed soon after its discovery. Upper right panel: Halley’s comet’s solid body as viewed up close by ESA’s Giotto spacecraft. Lower panel: The path of comet 3I/ATLAS relative to the planets Mercury through Saturn and the SwRI mission interceptor study trajectory if the mission were to be launched this year. The red arc in the bottom panel is the mission trajectory from Earth to interstellar comet 3I/ATLAS. Courtesy of NASA/ESA/UCLA/MPS.

So we’re beginning to undertake the study of actual objects from other stellar systems, and considering the ways that probes on fast flyby missions could reach them. 3I/ATLAS thus makes the case for further studies of flyby missions. SwRI’s Mark Tapley, an expert in orbital mechanics, is optimistic indeed:

“The very encouraging thing about the appearance of 3I/ATLAS is that it further strengthens the case that our study for an ISC mission made. We demonstrated that it doesn’t take anything harder than the technologies and launch performance like missions that NASA has already flown to encounter these interstellar comets.”

The paper on a fast flyby mission to an interstellar object is Eubanks et al., “3I/ATLAS (C/2025 N1): Direct Spacecraft Exploration of a Possible Relic of Planetary Formation at ‘Cosmic Noon’,” available as a preprint.