Gamma ray bursts (GRBs) are much in the news. GRB 090423 turns out to be the most distant explosion ever observed, an event that occurred a scant 630 million years after the Big Bang. We’re detecting the explosion of a star that occurred when the first galaxies were beginning to form. Current thinking is that the earliest stars in the universe were more massive than those that formed later, and astronomers hope to use GRB events to piece together information about them. GRB 090423 was evidently not the death of such a star, but more sensitive equipment such as the Atacama Large Millimeter/submillimeter Array (ALMA), due online within three years, will be able to study more distant GRBs and reveal more about this early epoch.

And then there’s all the fuss about Einstein. The Fermi Gamma-ray Space Telescope, which catalogued more than a thousand discrete gamma-ray sources in its first year of observations, caught a burst in May designated GRB 090510. Evidently the result of a collision between two neutron stars, the burst took place in a galaxy 7.3 billion light years away, yet a pair of photons from the event detected by Fermi’s Large Area Telescope arrived at the detector just nine-tenths of a second apart.

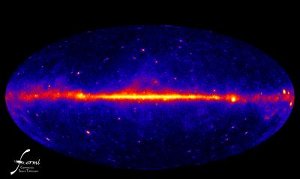

Image: This view of the gamma-ray sky constructed from one year of Fermi LAT observations is the best view of the extreme universe to date. The map shows the rate at which the LAT detects gamma rays with energies above 300 million electron volts — about 120 million times the energy of visible light — from different sky directions. Brighter colors equal higher rates. Credit: NASA/DOE/Fermi LAT Collaboration.
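As a quick check on the caption’s arithmetic, here is a minimal Python sketch. It assumes, for illustration only, that “visible light” means green light at 500 nm; the constant `HC_EV_NM` (hc expressed in eV·nm) and the function name are my own.

```python
# h*c expressed in eV·nm (CODATA value, rounded).
HC_EV_NM = 1239.84198


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of a photon of the given wavelength, E = hc / lambda, in eV."""
    return HC_EV_NM / wavelength_nm


# Assumption: "visible light" is represented by green light at 500 nm.
visible_ev = photon_energy_ev(500.0)  # about 2.48 eV
lat_threshold_ev = 300e6              # the 300 MeV threshold quoted in the caption

ratio = lat_threshold_ev / visible_ev
print(f"{ratio:.2e}")  # prints 1.21e+08
```

Picking red (700 nm) or violet (400 nm) light instead moves the ratio to roughly 170 million or 97 million, so “about 120 million” corresponds to the middle of the visible band.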

These are interesting photons because their energies differed by a factor of a million, yet their arrival times were so close that the difference is probably due solely to the processes involved in the GRB event. Einstein’s special theory of relativity rests on the notion that all forms of electromagnetic radiation travel through space at the same speed, no matter what their energy level. The results seem to confirm this, leading to newspaper headlines heralding ‘Einstein Wins This Round,’ and so on.

And at this point, Peter Michelson (Stanford University, and principal investigator for Fermi’s Large Area Telescope) echoes the headlines:

“This measurement eliminates any approach to a new theory of gravity that predicts a strong energy dependent change in the speed of light. To one part in 100 million billion, these two photons traveled at the same speed. Einstein still rules.”
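Michelson’s “one part in 100 million billion” can be reproduced to order of magnitude with a back-of-the-envelope calculation. The Python sketch below treats the 7.3 billion light years as a simple light-travel distance and ignores cosmic expansion (both simplifying assumptions on my part), and it charges the entire 0.9 s gap to a speed difference, which makes the bound conservative.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year

distance_ly = 7.3e9  # distance quoted in the post, treated as a light-travel distance
delta_t_s = 0.9      # arrival-time separation quoted in the post

# Light-travel time over the path, ignoring cosmic expansion (an assumption).
travel_time_s = distance_ly * SECONDS_PER_YEAR

# If the two photons' speeds differed by a small fraction f, their arrival
# times would differ by roughly f * travel_time, so f <= delta_t / travel_time.
max_fractional_speed_difference = delta_t_s / travel_time_s
print(f"{max_fractional_speed_difference:.1e}")  # prints 3.9e-18
```

The result, about 4 parts in 10¹⁸, sits comfortably inside the one-part-in-10¹⁷ figure quoted above.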

All of this matters because we’re trying to work out a theory of quantum gravity, one that helps us understand the structure of spacetime itself. The special theory of relativity holds that the energy or wavelength of light has no effect on its speed through a vacuum. But quantum theory implies that, at scales trillions of times smaller than an electron, spacetime should be discontinuous. Think of it as frothy or foamy.

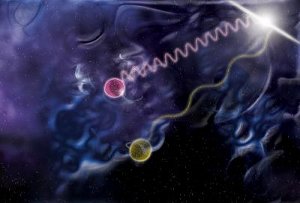

In that case, we would expect that shorter wavelength light (at higher energies) would be slowed compared to light at longer wavelengths [but see Erik Anderson’s comment below, which argues that the prediction runs the other way, with greater delays for lower-energy photons]. So the theories imply, but they have been untestable because we haven’t had the tools to tease out information at the Planck length and beyond.

Image: In this illustration, one photon (purple) carries a million times the energy of another (yellow). Some theorists predict travel delays for higher-energy photons, which interact more strongly with the proposed frothy nature of space-time. Yet Fermi data on two photons from a gamma-ray burst fail to show this effect, eliminating some approaches to a new theory of gravity. Credit: NASA/Sonoma State University/Aurore Simonnet.

So does special relativity fail at the quantum level? The Fermi instrument has given us the chance to use astronomical data to test these ideas. The 0.9 second difference between the arrival times of the higher- and lower-energy gamma rays tells us that quantum effects that slow light in proportion to its energy do not show up at least until we get down to about eight-tenths of the Planck length (10⁻³³ centimeters).
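The “eight-tenths of the Planck length” figure can be sketched as follows. Assume the delay grows linearly with photon energy, Δt = (ΔE / E_QG)(1/H₀)∫₀^z (1+z′) dz′ / √(Ω_m(1+z′)³ + Ω_Λ), the form commonly used for time-of-flight tests in an expanding universe. The inputs not stated in the post are my assumptions: a redshift of 0.903 for GRB 090510, a high-energy photon of about 31 GeV (so ΔE ≈ 31 GeV, since the other photon is a million times less energetic), and 2009-era cosmological parameters. A bound E_QG > k·E_Planck translates into a length scale below the Planck length divided by k.

```python
import math

# Assumed flat Lambda-CDM parameters, roughly those current in 2009.
H0_KM_S_MPC = 71.0
OMEGA_M, OMEGA_L = 0.27, 0.73
KM_PER_MPC = 3.0857e19
PLANCK_ENERGY_GEV = 1.2209e19  # non-reduced Planck energy


def lag_integral(z: float, steps: int = 1000) -> float:
    """Simpson's-rule estimate of the integral from 0 to z of
    (1 + z') / sqrt(OMEGA_M * (1 + z')**3 + OMEGA_L) dz'.
    `steps` must be even."""
    def f(x: float) -> float:
        return (1 + x) / math.sqrt(OMEGA_M * (1 + x) ** 3 + OMEGA_L)

    h = z / steps
    total = f(0.0) + f(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(i * h)
    return total * h / 3


def min_qg_energy_gev(delta_e_gev: float, delta_t_s: float, z: float) -> float:
    """Lower bound on E_QG for a delay linear in energy:
    delta_t = (delta_E / E_QG) * (1 / H0) * lag_integral(z)."""
    hubble_time_s = KM_PER_MPC / H0_KM_S_MPC  # 1 / H0 in seconds
    return delta_e_gev * hubble_time_s * lag_integral(z) / delta_t_s


# Assumed inputs: z = 0.903 and a ~31 GeV photon; delta_t is the post's 0.9 s.
e_qg = min_qg_energy_gev(delta_e_gev=31.0, delta_t_s=0.9, z=0.903)
ratio = e_qg / PLANCK_ENERGY_GEV
print(f"E_QG > {ratio:.2f} E_Planck; length scale < {1 / ratio:.2f} Planck lengths")
```

With these inputs the bound comes out a little above 1.2 E_Planck, i.e. a length scale of roughly 0.8 Planck lengths, in line with the figure quoted above. The sketch bounds only the size of the effect, not its sign, so it says nothing about which photon should arrive first.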

That’s useful information indeed, but we still need a way to place Einsteinian gravity into a theory that handles all four fundamental forces, unlike the Standard Model, which accounts for three. So what GRB 090510 gives us is early evidence about the structure of spacetime, of the sort that future Fermi data can help to refine. Einstein confirmed? At this level, yes, but pushing deeper into the quantum depths via GRB study may help us see, in ways that laboratory experiments cannot show, how gravity at the quantum level fits into the fabric of the universe.

The paper is Abdo et al., “A limit on the variation of the speed of light arising from quantum gravity effects,” Nature advance online publication 28 October 2009. The paper on GRB 090423 is Chandra et al., “Discovery of Radio Afterglow from the Most Distant Cosmic Explosion,” submitted to Astrophysical Journal Letters and available online.

Wonder what it means if space-time isn’t roiling with virtual wormholes?

Friedwardt Winterberg has his own theory about all this, based on an “aether” filled with Planckons of positive and negative mass-energy: they nearly cancel, varying only locally in their average distribution. Perhaps that’s a better theory of the quantum vacuum?

I’ve been following this idea (that high-energy photons may slightly exceed lightspeed) for several years now, ever since I first read about it in Lee Smolin’s book “Three Roads to Quantum Gravity,” in his Jan. ’04 Scientific American cover story “Atoms of Space and Time,” and in João Magueijo’s book “Faster Than the Speed of Light.” I’ve always respected the boldness of the proposal, a respect that harks back to Kuhn’s idea that “the price of scientific progress is the risk of being wrong.”

Yet, for some time now, I’ve been anticipating that the Fermi (née GLAST) experiment would indeed turn up a null result. I take this result as a clue that there is a misstep in the logic of Loop Quantum Gravity. Questions I would like to see asked are: Is the conception of spacetime as a fabric of “spin-networks” really compatible with LQG’s cardinal principle of background independence? Is the depiction of photon paths that evolve instant-by-instant (so that they may ‘accumulate’ discrete ‘jumps’) really compatible with QED’s cardinal principle telling us that quantum states are only realized upon the outcome of actual measurements?

What I certainly would NOT like to see happen is a collective shift in rhetoric that repeatedly falls back on the attitude that experimental results merely place an “upper limit” on the anticipated effect. This is the tried-and-tired mode of string theorists, who “string us along” decade after decade by saying that bigger and bigger supercollider experiments merely push up the lower limit on the mass of the hypothesized superparticles they are looking for. That parlor game can be played forever. When the risk of being wrong is circumspectly avoided, science stagnates.

I’m inclined to think that the Standard Model is fatally flawed. QCD, for example, can’t make sense of atoms and molecules (specifically, the equations remain unsolved at the level of atomic nuclei). Its lack of background independence and its inability to predict the masses of its particles are its biggest problems, though.

So many university positions, journal editorships, and grant dollars depend on the current orthodoxy remaining current that it’s no surprise we need a scientific revolution now and then.

Perhaps the non-existence of the Higgs particle will get things back on track.

Unfortunately for you, that’s what the experimental result tells us. It’s a null result: since no experiment is perfect, it cannot be concluded that there is no effect there. Strictly speaking, this result is consistent with the null hypothesis that there is no energy-dependent variation in the speed of light arising from quantum gravity, but it cannot exclude the possibility of smaller variations.

Same goes for more well-known physics: the experimental evidence only gives a limit on, say, the deviation of the electrostatic force’s falloff with distance from a perfect inverse-square law. The fact that the theory states the relationship is an inverse-square law has no bearing on whether the actual law is one: our theories must respect the universe, not the other way round.

The reliance on experimental data to test the validity of a model is one of the things that distinguishes physics from mathematics. There is no substitute for actually going and doing the experiment, making the observations. It seems to me fairly clear that the problem with getting a working theory of quantum gravity is lack of experimental data: at present the Standard Model works too well in the places where it can be compared with experiment. Same goes for general relativity.

You can do all the theory work you want on string theory, loop quantum gravity, whatever other quantum gravity theory takes your fancy, but without the experiments, it is just mathematics.

Einstein’s theory of general relativity was “just mathematics” for quite a long time, with only the scantest experimental evidence. It was convincing far more for its elegant unification of Newton’s gravity with special relativity than for accounting for any unexplained observations. The situation is similar today, where we have two extraordinarily powerful theories that do not fit together. Just coming up with a valid and elegant unification would be worth the trouble. Experimental verification, if possible, would be the icing on the cake.

Andy wrote:

Agreed. I’ve been reading some interesting predictions of Schiller’s speculative unification theory, based on “featureless strands”:

http://www.motionmountain.net/research/index.html

Among his predictions based on his theory: “No quantum gravity effect will ever be observed – not counting the cosmological constant and the masses of the elementary particles.”

Cheers, Paul.

Andy,

I believe it was Leibniz who first surmised that any finite series of observations can accommodate an infinite number of theoretical constructs. Surely you must concede that there is more to scientific advancement than freshening up stale theories with the embalming fluid of new parameters to fit every new observation. In practice, theories are discarded all the time – even with non-empirical justification, e.g., per “Occam’s Razor.”

I agree with your assessment that experimental data are crucial. But I don’t know how well you appreciate how deeply the business of gathering and interpreting data is mired in preconceptions. Hence, the irony of the quantum gravity problem is that the requisite observations are already well-documented (e.g., Doppler spectrography and telemetry of galaxies, stars, Pioneer spacecraft, and possibly Mars). What remains to be demonstrated is the recognition of what has actually been observed. All the conundrums imposed by dark energy, dark matter, modified gravity, etc., are eliminated by the realization that the velocities of celestial-borne objects inferred from Doppler spectrography and telemetry are misstated.

There is a sense in which the GRB predictions formulated under the LQG regime were on the right track. Astronomical scales are indeed the most promising realm in which to look for unmodeled quantum gravity effects. And just as the Fermi/GLAST satellite was recruited to look for them in this decade, so too will ESA’s Gaia satellite be recruited in the next. Gaia will improve the precision of stellar astrometry by a factor of 50 to 200. There will be no wiggle room for the scenario I’ve outlined above. It will live or die, there and then, by the judgment of Gaia.

Cheers,

– Erik

BTW, I’ve since noticed that NASA’s article, imagery, and animation on this topic got one detail appallingly backwards: the LQG prediction was that the high-energy photons would arrive first, not the opposite. Paul’s write-up above repeats the mistake.

Erik Anderson writes:

Good grief. Thanks for calling that to my attention — I’ll insert a note in the original text!

Paul, now you have it almost right. However, neither photon is supposed to be “delayed.” Both are supposed to go faster than lightspeed. The boost is slight: it is proportional to the Planck length divided by the photon wavelength (a photon with a theoretical wavelength equal to the Planck length would go at 2x lightspeed). Don’t feel too bad; everybody else seems to be reporting it wrong.
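To make the commenter’s description concrete, here is a minimal Python sketch of the fractional speed excess (v − c)/c = l_P/λ, with the proportionality constant set to 1 because that is what the “Planck-length photon goes at 2x lightspeed” example implies. The 31 GeV photon energy is an illustrative assumption, not a figure from this thread, and the function names are hypothetical.

```python
# Constants (CODATA values, rounded).
PLANCK_LENGTH_M = 1.616255e-35  # Planck length l_P in metres
HC_EV_M = 1.23984198e-6         # h*c in eV·m


def wavelength_m(energy_ev: float) -> float:
    """Photon wavelength lambda = h*c / E, in metres."""
    return HC_EV_M / energy_ev


def fractional_boost(wave_m: float) -> float:
    """(v - c) / c = l_P / lambda, the commenter's description with the
    proportionality constant assumed to be 1."""
    return PLANCK_LENGTH_M / wave_m


def speed_over_c(wave_m: float) -> float:
    """v / c under the same assumption."""
    return 1.0 + fractional_boost(wave_m)


print(speed_over_c(PLANCK_LENGTH_M))  # prints 2.0, the Planck-wavelength limiting case

# Assumed energies: ~31 GeV for the high-energy photon, a million times less for the other.
high = fractional_boost(wavelength_m(31e9))
low = fractional_boost(wavelength_m(31e3))
print(f"high: {high:.1e}, low: {low:.1e}")  # prints high: 4.0e-19, low: 4.0e-25
```

The excess is computed directly rather than as `speed_over_c(...) - 1.0`, because a number like 4 × 10⁻¹⁹ is far below double-precision resolution near 1 and would round away to zero.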

Erik, it’s certainly outside of my expertise, so I appreciate the clarification. Surprised that the NASA materials were this far off the mark, though.

I regret admitting this, but NASA’s carelessness may be an indication of just how poorly the original theory inspiring this test was ever regarded. :-(

Friday, October 30, 2009

Too Far to Be Seen

from the Palomar Skies blog by Scott Kardel

Sometimes it is pretty exciting when you look for something and don’t see it. Last April there was a gamma-ray burst (GRB 090423) detected by NASA’s SWIFT satellite. One of the first ground-based telescopes to look for the visible light afterglow was the automated 60-inch telescope at Palomar. The 60-inch was imaging the source within three minutes of the satellite’s detection of the GRB. The result? The 60-inch didn’t see it.

Full article here:

http://palomarskies.blogspot.com/2009/10/too-far-to-be-seen.html

Maybe someone can help me out: if Lorentz invariance extends into the Planck regime, does it follow that discrete space-time structures, if they exist, would have to be smaller than the Planck length? If so, doesn’t this undermine the motivation for the whole project?

However there was experimental evidence for parts of the model that were missing: when Einstein published the field equations he could show that they led to orbital precession, an effect that had been noted and led to searches for the planet Vulcan. The difference is that we currently have two theories that work extremely well in the regimes in which they can be tested, but are mutually incompatible. The current problem in physics thus arises from theoretical problems rather than detected experimental effects.

Experiment is not the icing on the cake, it is the cake. And probably a significant proportion of the marzipan too.

Indeed, one could postulate a law of gravitation that held except for two electrons in the core of Alpha Centauri A for a period of five nanoseconds in the year 1484 BCE. This is, however, completely untestable and thus not in the domain of science. Sure, we go for the laws that fit the evidence and can be generalised to new predictions, ideally with the minimum of excess baggage (like, say, the exclusion of said electrons). However, I did not come in demanding that we avoid discussing what the results can actually tell us.

Of course there is this, but there is a reason for the preconceptions being preconceptions: that is, they have been shown to work pretty well. So when you come out with something along the lines of…

it seems that you have disregarded the question of just what is doing the additional gravitational lensing observed in galaxy clusters. Guess we can handwave that one away, eh? It might make the mathematics look worse. I believe Feynman had a pithy quote about the relationship between physics and mathematics.

> However there was experimental evidence for parts of the model that were missing: when Einstein published the field equations he could show that they led to orbital precession, an effect that had been noted and led to searches for the planet Vulcan.

That was the scant evidence I was referring to. Hardly enough to validate the theory, only a general indication that there is something not understood. In fact, if I recall correctly, it was later found that most of the precession in question is explained by finite size effects, rather than general relativity.

> The difference is that we currently have two theories that work extremely well in the regimes in which they can be tested, but are mutually incompatible.

I don’t think this is a difference. This sentence quite accurately describes the relationship between special relativity and Newton’s theory of gravitation, and at the time the incompatibilities were mainly theoretical, not experimental.

> Experiment is not the icing on the cake, it is the cake. And probably a significant proportion of the marzipan too.

True, if you can get it. However, modern physics is peculiar as a science in that it is dominated by theory. You are more likely to find experimenters validating theory than theoreticians trying to explain unpredicted observations. Yes, both are needed, but, depending on the science and the times, sometimes one group is well ahead of the other.

Hi Andy,

Another reason that preconceptions become preconceptions is that whatever defies them is downplayed as a minor problem rather than recognized as an intractable one.

I appreciate your critique of my rhetoric. Lensing profiles do count as a major cosmic conundrum — especially in the case of low surface brightness galaxies, for which the mass distributions derived from orbital dynamics and the mass distributions derived from lensing profiles always end up mismatched (one of those annoying “minor” problems, you see).

Of course, should the entire existence of CDM halos and MONDian accelerations indeed be refuted 8 short years from now, the mystery of the steep lensing profiles may seem to be that much more exacerbated. But it turns out that the same quantum gravity principle responsible for distorted Doppler shifts also steepens the perceived lensing angles of gravitationally deflected photons. This is why we see, in the fabulous Bullet Cluster, that the lensing is strongest where the luminous mass is — not because collisionless CDM particles followed the cluster to the other side.

I looked up the Feynman quote. Duly noted.

And look what a mess happens if the theorists are allowed to reign rampant without any checks on them by the experimentalists ;)

Furthermore, don’t let the aggressive PR machines behind particle physics and cosmology convince you that they make up the whole of modern physics. There are many other disciplines, such as materials science, that are doing very healthily in the experiments department.

My related hypothesis about this event is that, for some gamma-ray bursts from type II supernovae, depending on where in the galaxy they are relative to our line of sight, the full spectrum of EM radiation arriving at the galaxy’s perimeter can be split, depending on the angle, into different frequencies going in different directions, similar to the prism effect. The gamma rays would therefore have taken a slightly longer path to get here.

Proof of this hypothesis would be that, in those cases where this difference in arrival time is observed, the supernova’s location within the galaxy would be blurred in a linear rather than a spherical manner. This linear blurring would be observable only at intermediate distances, such as a redshift of 0.5. At shorter distances the arrival-time difference should be smaller, and for more distant supernovae the blurring may not be observable at all, since the galaxy itself would not be observable and any blur would still appear point-like because of the distance involved. Accordingly, there should be no event in which the high-frequency radiation arrives first. This hypothesis would also predict that the supernova’s position within its galaxy matters more than its distance. For some supernovae at similar distances, low- and high-frequency radiation should arrive at the same time, so there should be only a correlation between arrival-time difference and distance, not a consistent relationship.

I think it is much more the lack of an elegant theory than a lack of experiments that is causing the current sad-looking state of affairs. I am willing to bet that the situation was no better, historically, before General Relativity came along.

Maybe there is no elegant Grand Unified Theory to be found. However, if there is, and if it is found, it will be recognized with or without experimental evidence. Experimenters will then race to confirm (likely) or refute (unlikely) its predictions, just as has happened with GR. Only, this time it will be much harder to come up with testable predictions, and to test them.

You are right that there are much more experimentally dominated fields in physics. Materials science, though, falls mostly into the category of applied physics, where the aim is to exploit theory rather than challenge it.

A good example of experimentally led progress in (non-applied) physics is high temperature superconductors, where unexpected new physics showed up in experiments before being predicted. However, I think this mode of discovery is not very common anymore, in any branch of physics.

According to Granot, these results “strongly disfavour” quantum-gravity theories in which the speed of light varies linearly with photon energy, which might include string theory or loop quantum gravity.

Nonlinear quantum mechanics; the best candidates are Singh and Elze (they have to be relativistic too).

from Elze:

Is there a relativistic nonlinear generalization of quantum mechanics?

http://arxiv.org/PS_cache/arxiv/pdf/0704/0704.2559v1.pdf

from Singh:

Nonlinear quantum mechanics, the superposition principle, and the quantum measurement problem.

http://arxiv.org/PS_cache/arxiv/pdf/0912/0912.2845v2.pdf

Zloshchastiev. RULED OUT.

Svetlichny. RULED OUT.

“At the Planck scale, nonlinear effects may be of the same order of magnitude as linear ones”

>Zloshchastiev. RULED OUT.

Not so fast, dear – in the very last paragraph of:

http://arxiv.org/abs/0906.4282v4

it is explained why Fermi data don’t rule out LogQM.

Sorry :)

The farthest GRB yet:

http://www.universetoday.com/85967/gamma-ray-burst-090429b-far-out/

Here is what I am wondering about: why is this counted as a “win for SR”? Compared with loop quantum gravity, it clearly shows no dependence of c on wavelength (or places an upper limit on it, depending on where you sit). But 19th-century ether theories also predict that the medium introduces no such dependence of c.