Is the universe at the deepest level grainy? In other words, if you keep drilling down to smaller and smaller scales, do you reach a point where spacetime is, like the grains of sand on a beach, found in discrete units? It’s an interesting thought in light of recent observations by ESA’s Integral gamma-ray observatory, but before we get to Integral, I want to ponder the spacetime notion a bit further, using Brian Greene’s superb new book The Hidden Reality as my guide. Because how spacetime is put together has obvious implications for our philosophy of science.

Consider how we measure things, and the fact that we have to break phenomena into discrete units to make sense of them. Here’s Greene’s explanation:

For the laws of physics to be computable, or even limit computable, the traditional reliance on real numbers would have to be abandoned. This would apply not just to space and time, usually described using coordinates whose values can range over the real numbers, but also for all other mathematical ingredients the laws use. The strength of an electromagnetic field, for example, could not vary over real numbers, but only over a discrete set of values. Similarly for the probability that the electron is here or there.

By ‘limit computable,’ Greene refers to ‘functions for which there is a finite algorithm that evaluates them to ever greater precision.’ He goes on to invoke the work of computer scientist Jürgen Schmidhuber:

Schmidhuber has emphasized that all calculations that physicists have ever carried out have involved the manipulation of discrete symbols (written on paper, on a blackboard, or input to a computer). And so, even though this body of scientific work has always been viewed as involving the real numbers, in practice it doesn’t. Similarly for all quantities ever measured. No device has infinite accuracy and so our measurements always involve discrete numerical outputs. In that sense, all the successes of physics can be read as successes for a digital paradigm. Perhaps, then, the true laws themselves are, in fact, computable (or limit computable).
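To make ‘limit computable’ a little more concrete, here is a minimal sketch of my own (it is not from Greene or Schmidhuber) that treats the square root of 2 as a stand-in real number. The function names are hypothetical. A finite procedure using only integers can deliver as many digits as we ask for, yet it never hands us the full real number:

```python
from math import isqrt


def sqrt2_scaled(digits: int) -> int:
    """Return floor(sqrt(2) * 10**digits) using integer arithmetic only."""
    # isqrt gives the exact integer square root, so no floating point is involved.
    return isqrt(2 * 10 ** (2 * digits))


def sqrt2_decimal(digits: int) -> str:
    """Format the truncated expansion of sqrt(2) with the requested decimals."""
    text = str(sqrt2_scaled(digits))
    return text if digits == 0 else text[0] + "." + text[1:]


# Each call is a finite computation on discrete symbols; asking for more
# digits refines the answer but never completes the infinite expansion.
for n in (5, 10, 20):
    print(n, sqrt2_decimal(n))
```

Whether nature itself works this way is exactly the open question; the sketch only shows that every number we actually write down is of this finite kind.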

If computable mathematical functions are those that can be successfully evaluated by a computer using a finite set of discrete instructions, the question then becomes whether our universe is truly describable at the deepest levels using such functions. Can we reach an answer that is more than an extremely close approximation? If the answer is yes, then we move toward a view of physical reality where the continuum plays no role. But let Greene explain the implications:

Discreteness, the core of the computational paradigm, should prevail. Space surely seems continuous, but we’ve only probed it down to a billionth of a billionth of a meter. It’s possible that with more refined probes we will one day establish that space is fundamentally discrete; for now, the question is open. A similar limited understanding applies to intervals of time. The discoveries [discussed in Greene’s Chapter 9] which yield information capacity of one bit per Planck area in any region of space, constitute a major step in the direction of discreteness. But the issue of how far the digital paradigm can be taken remains far from settled.

And Greene goes on, in this remarkable study of parallel universes as conceived by physics today, to say that his own guess is that we will find the universe is fundamentally discrete.

All of this gets me to the Integral observatory. Because underlying what Greene is talking about is the fact that the General Theory of Relativity describes properties of gravity under the assumption that space is smooth and continuous, not discrete. Quantum theory, on the other hand, suggests that space is grainy at the smallest scales, and it’s the unification, and reconciliation, of these ideas that is wrapped up in the continuing search for a theory of ‘quantum gravity.’

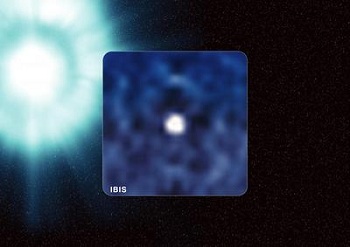

New results from Integral can’t give us a final answer, but they do put limits on the size of the quantum ‘grains’ of space, showing us that they must be smaller than at least some theories of quantum gravity suggest. All of this comes courtesy of a gamma-ray burst — specifically, GRB 041219A, which took place on the 19th of December back in 2004. This was an extremely bright GRB (among the top one percent of GRB detections), and it was bright enough that Integral was able to measure the polarization of its gamma rays. The so-called ‘graininess’ of space should affect how gamma rays travel, changing their polarization (the direction in which they oscillate).

In fact, high-energy gamma rays should show marked differences in polarization from their lower-energy counterparts. Yet studying the difference in polarization between the two types of gamma rays, Philippe Laurent of CEA Saclay and his collaborators found no differences in polarization to the accuracy limits of the data. Theories have suggested that the quantum nature of space should become apparent at the Planck scale: 10^-35 of a meter. But the Integral observations are 10,000 times more accurate than any previous measurements and show that if quantum graininess exists, it must occur at a scale of 10^-48 m or smaller.
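For a sense of the gap between those two scales, here is a rough back-of-the-envelope check. It assumes the standard definition of the Planck length, sqrt(ħG/c³), and CODATA values for the constants; neither appears in the ESA material, and the variable names are mine:

```python
import math

# Assumed inputs: CODATA 2018 values in SI units.
HBAR = 1.054571817e-34  # reduced Planck constant, J s
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0       # speed of light, m/s

planck_length = math.sqrt(HBAR * G / C**3)  # roughly 1.6e-35 m
integral_limit = 1e-48                      # upper bound on grain size quoted above, m

# How many powers of ten separate the Planck length from the new limit?
orders_below = math.log10(planck_length / integral_limit)

print(f"Planck length: {planck_length:.3e} m")
print(f"Integral limit sits about {orders_below:.1f} orders of magnitude below it")
```

The result is about thirteen orders of magnitude, which is the figure to keep in mind when reading the limit.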

“Fundamental physics is a less obvious application for the gamma-ray observatory, Integral,” notes Christoph Winkler, ESA’s Integral Project Scientist. “Nevertheless, it has allowed us to take a big step forward in investigating the nature of space itself.”

Indeed. Philippe Laurent is already talking about the significance of the result in helping us rule out some versions of string theory and some loop quantum gravity ideas. But many possibilities remain. The new findings that will come from further study will not displace earlier laws so much as extend them into new realms. In this sense past discoveries are rarely irrelevant, and it is a tribute to the coherency of physics that new perspectives so often incorporate earlier thinking. Thus Newton is not displaced by Einstein but becomes part of a more comprehensive synthesis.

Image: Integral’s IBIS instrument captured the gamma-ray burst (GRB) of 19 December 2004 that Philippe Laurent and colleagues have now analysed in detail. It was so bright that Integral could also measure its polarisation, allowing Laurent and colleagues to look for differences in the signal from different energies. The GRB shown here, on 25 November 2002, was the first captured using such a powerful gamma-ray camera as Integral’s. When they occur, GRBs shine as brightly as hundreds of galaxies each containing billions of stars. Credits: ESA/SPI Team/ECF.

Greene points out in The Hidden Reality that Isaac Newton himself never thought the laws he discovered were the only truths we would need. He saw a universe far richer than those his laws implied, and his statement on the fact is always worth quoting:

“I do not know what I may appear to the world, but to myself I seem to have only been a boy playing on the seashore, diverting myself in now and then finding a smoother pebble or prettier shell than ordinary, whilst the great ocean of truth lay before me all undiscovered.”

The impressive Integral results offer us data that may one day help in the unification of quantum theory and gravity. Thus the distant collapse of a massive star into a neutron star or a black hole during a supernova, the apparent cause of most GRBs, feeds new material to theoreticians and spurs the effort to probe even deeper. In the midst of this intellectual ferment, I strongly recommend Greene’s The Hidden Reality as a way to track recent cosmological thinking. I’ve spent weeks over this one, savoring the language, making abundant notes. Don’t miss it.

For more, see Laurent et al., “Constraints on Lorentz Invariance Violation using INTEGRAL/IBIS observations of GRB041219A,” Physical Review D, Vol. 83, issue 12 (28 June, 2011). Abstract available.

Not to wax too poetic, but I find it spectacularly wondrous that an almost inconceivably powerful burst of energy from such an enormous object such a vast distance away could tell us about the literally smallest aspect of existence. It’s really incredible how one end of the scale can inform us about the other end. The universe is such an amazing place, and for little bits of lukewarm carbon we’re pretty damned smart at figuring it out.

Tulse is spot on. Symmetry indeed! And, delightfully, we have so far to go in figuring this all out. Enough to keep enthusiastic amateurs such as myself coming back for more for a long time. And I wholeheartedly recommend Professor Greene’s work to all. His joy and passion are infectious.

Apologies to lovers of elegant theories, but science is observational. In 2003 Hubble observations showed no evidence of quanta of Planck time. Now Integral says no evidence of quanta of Planck length – or even 13 orders of magnitude below! Two testable predictions refuted by observations.

Einstein is 100% confirmed by observation. String theory is 0% confirmed. The obvious conclusion is that string theory is “not even wrong”. It is time to come to grips with the fact that string theorists have been on the wrong track for decades. Time to send Michio Kaku et al out to pasture and start anew.

In other news, the rumour I have heard is that not only did the Tevatron not find the Higgs, but nothing has been seen at the LHC so far either. Kaku (who tried to stop the launch of Cassini!) says non-discovery of the Higgs would be a disaster. Au contraire. I agree with Hawking (who bet $100 in 2000 that the Higgs would not be found), that refutation of the Standard Model would be “very interesting”. The half century of the intellectual tyranny of the Standard Model has got us nowhere.

Time to move on. We need another Einstein. Hopefully he or she has already been born.

PS: Peter Woit’s book, “Not Even Wrong”, is really intended for young physicists more than the general public, and is a tough read. But it is well worthwhile to go to the Amazon page for this book and read the EXCELLENT customer review, “Why postmodernists love string theory”, May 2, 2011 by Dennis Littrel. Littrel unmasks the extent to which string theory has become a self-contained religion or ideology similar to the postmodernism found in humanities departments.

I was a teenager when I attended some Feynman lectures, and I adored the integrity of the man, so lacking in the then postmodern (post rational) academic world. So I repeat his quote here, “String theorists make excuses, not predictions.”

Finally, Woit’s “Not Even Wrong” blog covered the recent Strings 2011 conference in Uppsala:

“In Jeff Harvey’s summary of the conference, he notes that many people have remarked that there hasn’t been much string theory at the conference. About the landscape, his comment is that “personally I think it’s unlikely to be possible to do science this way.” He describes the situation of string theory unification as like the Monty Python parrot “No, he’s not dead, he’s resting.” while expressing some hope that a miracle will occur at the LHC or in the study of string vacua, reviving the parrot.

That the summary speaker at the main conference for a field would compare the state of the main public motivation for the field as similar to that of the parrot in the Monty Python sketch is pretty remarkable. In the sketch, the whole joke is the parrot’s seller’s unwillingness, no matter what, to admit that what he was selling was a dead parrot. It’s a good analogy, but surprising that Harvey would use it.”

Joy,

very nicely put. The amazing thing is that 13 orders of magnitude are labeled as “Discreteness, the core of the computational paradigm, should prevail” and “the issue of how far the digital paradigm can be taken remains far from settled”. Creating concepts which will not be testable in a rigorous manner (that is in a lab, with controlled conditions, subject to repeated verification), presenting them as “theories”, writing books, etc. is really cheap.

For a very long time theoretical physics has gone way out of line with all available experimental observations, near term future experimental work and long term future experimental work. The reason for this is a self-serving desire to justify one’s existence. By labeling wild and untestable hypotheses as “theories”, e.g. “string theories”, those guys are doing a big disservice to the whole scientific community. There will come a day when people not remotely connected with particle physics will have to do the explaining for those guys and try to prove we are not all charlatans.

Part 1: And here we have it again, “the true laws themselves” (Jürgen Schmidhuber)

This is the quasi-religious belief that “out there” there is not only a world of stones, plants, animals, stars, planets, light etc., but that, in addition to the physical laws invented by physicists in a sophisticated cultural process called “the science of physics”, there are *natural* laws in the world (some see “our” physical laws as an approximation to the “true laws”).

What would physicists do? They would design physical experiments with the purpose of showing whether this additional hypothesis, say, “natural laws exist as separate entities among all the other things of the world”, is supported by observations (“additional” means “additional to the physical theories or laws we have already”).

As long as such experiments have not been executed, or if they have been executed and failed to show the existence of “natural laws” as separate entities of the world, then the physicists would not assume the hypothesis to be true, and they would *not* *use* it in further considerations.

Now then, the current state is what? No “true laws” out there.

The world is out there, and we invented the physical laws, and that’s enough for doing physics.

Part 2: “… all calculations that physicists have ever carried out … even though this … has always been viewed as involving the real numbers, in practice it doesn’t. ” (Jürgen Schmidhuber)

When I make calculations regarding an electromagnetic circuit using Maxwell’s laws — I learned this at university — the real number Pi is involved. In Practice!

Here certain laws of physics are computable, and at least one real number is involved.

Bottom lines:

Do not abandon the reliance on real numbers in physics!

And: “superb new book”? I’m not quite with you.

Hi Folks;

I thought I’d add my two cents to this discussion.

Perhaps space is quantized at both the Planck Levels and at a level below 10^-48 meters simultaneously. Which level becomes manifest or not may depend on the phenomena being investigated.

Some phenomena like black holes may see the Planck length as the smallest diameter or perhaps the smallest radius that a black hole can have. Diameters or perhaps radii that are smaller would result in the black hole not forming because the black hole would decay at a rate faster than the Planck Time Unit.

The Planck Power is equal to c^5/G and would normally be the highest possible power output in well evolved 4-D space time, at least for time periods where a Planck Power laser would shine for a time longer than the temporal light cone of its width. This is because the Planck Power is equal to one Planck energy unit per Planck Time Unit, or the time it takes the light to extend one Planck Length Unit. Higher powered beams operated for a time period equal to or greater than the Planck Time Unit would imply the formation of a black hole and the resulting collapse of the beam under its own gravitation. I can think of huge nuclear explosive devices of galactic scale that would be detonated in a pretimed segment wise manner that would temporarily best the Planck Power by many orders of magnitude but that is a different story altogether.
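The relation stated above, that the Planck power is one Planck energy per Planck time and comes out to the fifth power of c divided by G, can be checked numerically. This is only a sketch: it assumes the usual definitions of the Planck energy and Planck time and CODATA constants, none of which are spelled out in the comment, and the variable names are illustrative:

```python
import math

# Assumed inputs: CODATA 2018 values in SI units.
HBAR = 1.054571817e-34  # reduced Planck constant, J s
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0       # speed of light, m/s

planck_energy = math.sqrt(HBAR * C**5 / G)  # joules
planck_time = math.sqrt(HBAR * G / C**5)    # seconds
planck_power = C**5 / G                     # watts; note that hbar has cancelled

print(f"Planck energy / Planck time = {planck_energy / planck_time:.4e} W")
print(f"c^5 / G                     = {planck_power:.4e} W")
```

Both lines agree at roughly 3.6e52 watts, and the cancellation of ħ shows that this particular Planck unit contains nothing quantum at all.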

So Planck Length and Time Units have an elegance but as the new observations show, they are not the end-all answer to the structure of the fabric of space time.

It would be very strange indeed if space could be quantized at two or more widely spaced scales, but heck we already have the wave particle duality of bosons and fermions so what the heck, perhaps this concept needs to be investigated more thoroughly.

Quantization is not a new concept in physics. To the extent that “discreteness” overturns any philosophies of science, it did so in the 1920s.

“Joy July 5, 2011 at 17:15

Apologies to lovers of elegant theories, but science is observational. In 2003 Hubble observations showed no evidence of quanta of Planck time. Now Integral says no evidence of quanta of Planck length – or even 13 orders of magnitude below! Two testable predictions refuted by observations. Einstein is 100% confirmed by observation. String theory is 0% confirmed.”

I’m very much with Joy on this, but I have to stop for a moment and just confess that this result floors me. I honestly do not know what to make of it. If there is “no evidence of quanta of Planck length . . . 13 orders of magnitude below,” something is, put it this way, being “seriously overlooked.” I’ve long had somewhat more confidence in GTR than QM (I just think GTR is more elegant, prettier if you will, more mathematically sound), and my fondness for the grand continuums found in mathematics makes Einstein’s great theory more aesthetically appealing. So I am just thrilled with this discovery, a double-rainbow shock. What does it mean?

Note: I loathe, despise, and excoriate string theories, Higgs bosons, various dark stuffs (though I admit I may have to yield on the dark business in some form at some point) and the annoying silliness (and other words starting with “s”) that Mr. Kaku promulgates. I once put down one of his books. I couldn’t pick it up again.

I would like to add that tying the “graininess” of quantum mechanics to an integral world model is plain wrong. Quantum mechanics is based just as much on real numbers as any other physics, indeed it uses complex numbers, which are a good deal further from integral than “mere” real numbers.

Thus, as far as I can see, there is neither evidence nor a theoretical need for a “digital” universe. The only reason it keeps coming up is that, admittedly, the notion is somewhat appealing, especially for those with a computing bent…

Pi is an irrational, transcendental number which is non-repeating and no finite number or equation can ever be equal to its value. There is no way you could have used the real number…only an approximation, not the actual value of Pi.

I am somewhat surprised and concerned that evidence for graininess in both space and time has not shown up at the Planck level nor orders of magnitude well below it. If I understand this correctly then something is very wrong either with the observations or more troubling with our theories of physics. We need to remember that the eternal now in which we are living is NOT a special time. Past geniuses have promulgated well reasoned theories that fell when new observations of nature showed them to be poor approximations. I think we’re onto a similar revolution this century. It’s likely that string theory and lots of trendy tenure producing work will hit the dustbin of history. It will take a preponderance of evidence for this to occur as well as time, and the established careerists won’t go easily.

Bobby Coggins: “There is no way you could have used the real number…only an approximation, not the actual value of Pi.”

I *use* the number Pi by (e.g.) incorporating the symbol “Pi” in some formulas, such as exp( phi * Pi * i ), where phi is a real number, and i is the well known imaginary unit. In this case, which has to do with the calculation of electromagnetic circuits, Pi is very well used with its, as you call it, “actual” value. As examples, exp( 2 * Pi * i ) is exactly equal to the real number 1, and exp( Pi * i ) is exactly equal to the real number -1, which would not be the case if it were only possible to use an approximation.

Many analytical considerations with Pi inside formulas do not use approximations but the exact number. I hope you see, that there are several ways to “use” a number. And, by the way, it’s a little bit problematic talking about a number and … er … “its value”.

I will not continue this discussion, because, again, something drifts into being off topic.

There seems to be some confusion on the use of real numbers in physics. Yes, I can use pi, exactly, in an equation and manipulate it using algebra, etc. It is also true that I must fail in any attempt to write pi out in full in our common, human-friendly number representation system (3.14159…), since the expansion never terminates or repeats; an algorithm can only deliver it to some finite number of digits.
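The two ways of "using" pi can be seen side by side in a few lines. This is just an illustration with Python's standard library, where `math.pi` is a 64-bit floating-point approximation and not pi itself; on paper, the identity exp(i·pi) = -1 is exact:

```python
import cmath
import math

# On paper exp(i*pi) is exactly -1. With a finite approximation of pi,
# a tiny imaginary residue is left over.
z = cmath.exp(1j * math.pi)
residual = abs(z - (-1))

# The same happens for a full turn, which on paper is exactly 1.
full_turn = cmath.exp(2j * math.pi)

print("exp(i*pi)   computed:", z)
print("distance from -1    :", residual)
print("exp(2*i*pi) computed:", full_turn)
```

The residue is around one part in ten quadrillion, which is the footprint of the approximation and not a flaw in the algebra.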

Then there’s physics. Physics, it is said, though perhaps mainly in regard to continuum or classical physics, is all PDEs (partial differential equations). Apart from the simplest ones, in general a PDE has no exact (analytical) solutions. That’s why we get so excited when a Schwarzschild or a Kerr finds such a solution in general relativity, or even the well-known ellipse solution to Newton’s equations for a special type of two-body system.

Therefore we must rely on numerical solutions to differential equations, which proceed step-wise to evolve a system over (usually) time. Obviously any real numbers must be approximations. Usually however, it is not the ability to represent pi or its ilk exactly that is the problem (we can calculate them to any desired precision beforehand), but the necessity to make the steps in the algorithm small enough, and accurate enough, that the cumulative errors are both understood and low enough in magnitude to not damage the wanted result.
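A toy version of that trade-off, offered as a sketch only: it swaps in the simplest possible case, an ordinary differential equation dy/dt = y solved with the forward Euler method, where the exact answer at t = 1 is known to be e. Real PDE solvers are far more elaborate, and the function name here is made up for the example:

```python
import math


def euler_growth(steps: int, t_end: float = 1.0) -> float:
    """Integrate dy/dt = y from y(0) = 1 to t_end with forward Euler."""
    dt = t_end / steps
    y = 1.0
    for _ in range(steps):
        y += dt * y  # one step: advance y using the slope at the current point
    return y


exact = math.e  # known analytical solution at t = 1

# Cumulative error shrinks as the step count grows (that is, as dt shrinks).
errors = {n: abs(euler_growth(n) - exact) for n in (10, 100, 1000, 10000)}

for n, err in errors.items():
    print(f"steps={n:>6}  error={err:.2e}")
```

Each tenfold increase in the number of steps cuts the error by roughly a factor of ten, so the error is understood and can be pushed as low as the computing budget allows.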

There is no philosophical problem here, just lots of work. Usually for a computer.

Whew.

I suppose this new observation suggests that we are not, after all, living in a computer simulation run through grant support by some alien junior faculty member who’s trying for tenure. Or if we are, we at least now know that the grant was good enough to buy a very, very, very high resolution simulation so that we don’t notice any underlying graininess.

It does however look bad for my solution to the Fermi Paradox, which said they were already “here” and we were, in fact, just being simulated by the aliens.

“…we at least now know that the grant was good enough to buy a very, very, very high resolution simulation so that we don’t notice any underlying graininess. ”

Just try to avoid thinking any really deep, profound thoughts or you may cause a floating-point exception. That would really mess things up for the rest of us.

Computer!, end program!

This article and the comments that follow remind me of a topic that should be very important to science: its definition. To me this is the real mistake that has led to the bizarre episode within science where string theory and multiverses were allowed to entangle and strangle the once shining fields of cosmology and particle physics.

Only recently have I attempted to follow the philosophy of science, and I find it difficult to convey my degree of shock in finding that many have thought of it as just any attempted naturalistic explanation of our universe.

By the above definition most science from the beginning of civilisation to now has been of detriment to mankind. The most obvious example is in medicine. The human body is so complex that virtually any extreme action on it is detrimental. Death or injury should soon follow unless we choose the better alternative of sacrificing to the gods. Even with the aid of medicine developed by what I would define as science, when doctors make calls (in desperation) based on hope rather than established statistical outcomes, they tend to do harm.

Taking science as naturalistic explanations explains why some have a knee-jerk reaction against it and others devote their lives to explanation of our universe that can never be tested.

Worse still is that definition desired by a prominent creationist friend of mine: the quest for true explanations to the world around us. If instead all thought of science as the search for predictive answers, can you imagine how much further the world of humanity would have advanced by now, and how few could hold anything against it!

“Just try to avoid thinking any really deep, profound thoughts or you may cause a floating-point exception. That would really mess things up for the rest of us.”

Ron, freakily I often think the exact same thing. If you or any other readers here really think you almost have proof that our universe is simulated, best stop cogitating on it.

@Joy That was a great Feynman quote and one I hadn’t seen before, thanks!

One can judge the quality of a science blog by the inverse of the respect given Kaku (or Tyson or the Very Bad Astronomer, for that matter). By that metric, this is indeed a very good blog.

Sorry Joy, they discovered the Higgs boson. Furthermore, experiments based on observation are now being done that may show that the speed of light is not constant. It depends on where one is in spacetime. These studies mentioned by Green et al now indicate that photons of different energies exist such that up to a five second delay can be observed from the same gamma-ray burst. I have nothing but respect for Einstein, but the speed of light may vary.