A paper in the December 24 issue of Physical Review Letters goes to work on the finding of supposed faster-than-light neutrinos by the OPERA experiment. The FTL story has been popping up ever since OPERA — a collaboration between the Laboratori Nazionali del Gran Sasso (LNGS) in Gran Sasso, Italy and the CERN physics laboratory in Geneva — reported last September that neutrinos from CERN had arrived at Gran Sasso’s underground facilities 60 nanoseconds sooner than they would have been expected to arrive if travelling at the speed of light.
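To get a sense of what 60 nanoseconds means, note that the fractional speed excess is essentially the early arrival divided by the flight time. The sketch below assumes a round-number CERN to Gran Sasso baseline of about 730 km (a figure not given in this post) and a straight-line path. It yields (v - c)/c of roughly 2.5 × 10⁻⁵, or about 0.0025%.

```python
# Speed of light in vacuum, m/s (exact SI value).
C = 299_792_458.0

def fractional_speed_excess(baseline_m: float, early_s: float) -> float:
    """Return (v - c)/c for a particle arriving `early_s` seconds before light
    would over a straight baseline of `baseline_m` metres."""
    light_time = baseline_m / C           # time light needs for the baseline
    particle_time = light_time - early_s  # the particle's (shorter) flight time
    return light_time / particle_time - 1.0  # v/c - 1 = t_light / t_particle - 1

# Assumed round-number baseline of about 730 km (CERN to Gran Sasso).
excess = fractional_speed_excess(730e3, 60e-9)
print(f"(v - c)/c ≈ {excess:.2e}  ({excess * 100:.4f}%)")
```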

The resultant explosion of interest was understandable. Because neutrinos are now thought to have a non-zero mass, an FTL neutrino would be in direct violation of the theory of special relativity, which says that no object with mass can attain the speed of light. Now Ramanath Cowsik (Washington University, St. Louis) and collaborators have examined whether an FTL result was possible. The neutrinos in the experiment came from particle collisions that produced a stream of pions, which are unstable and decay into muons and neutrinos.

What Cowsik and team wanted to know was whether pion decays could produce superluminal neutrinos, assuming the conservation of energy and momentum. The result:

“We’ve shown in this paper that if the neutrino that comes out of a pion decay were going faster than the speed of light, the pion lifetime would get longer, and the neutrino would carry a smaller fraction of the energy shared by the neutrino and the muon,” Cowsik says. “What’s more, these difficulties would only increase as the pion energy increases. So we are saying that in the present framework of physics, superluminal neutrinos would be difficult to produce.”
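For orientation, here is the ordinary, subluminal two-body kinematics of π⁺ → μ⁺ ν_μ, which serves as the baseline for any superluminal comparison. This sketch does not reproduce Cowsik's superluminal calculation. It assumes a massless neutrino moving at exactly c and uses rounded PDG masses. In that standard case the neutrino carries about 30 MeV in the pion rest frame and, for a fast pion, at most about 43% of the pion's energy in the lab.

```python
# Charged-particle masses in MeV/c^2 (rounded PDG values, assumed here).
M_PION = 139.570
M_MUON = 105.658

def neutrino_rest_frame_energy(m_pi: float, m_mu: float) -> float:
    """Neutrino energy in the pion rest frame for pi -> mu + nu,
    treating the neutrino as massless and moving at exactly c."""
    return (m_pi**2 - m_mu**2) / (2.0 * m_pi)

def max_lab_energy_fraction(m_pi: float, m_mu: float) -> float:
    """Largest share of an ultra-relativistic pion's energy the neutrino can
    take in the lab (forward emission): 1 - (m_mu / m_pi)^2."""
    return 1.0 - (m_mu / m_pi) ** 2

e_star = neutrino_rest_frame_energy(M_PION, M_MUON)
frac = max_lab_energy_fraction(M_PION, M_MUON)
print(f"E*_nu ≈ {e_star:.2f} MeV; neutrino takes at most {frac:.1%} of E_pi")
```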

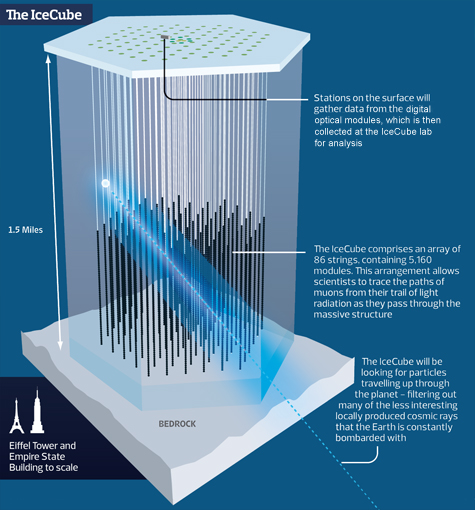

This news release from Washington University gives more details, pointing out that an important check on the OPERA results is the Antarctic neutrino observatory called IceCube, which detects neutrinos from a very different source than CERN. Cosmic rays striking Earth's atmosphere produce neutrinos, and IceCube has recorded some with energies 10,000 times higher than those of the neutrinos in the OPERA experiment. The IceCube results show that the high-energy pions that decay into these neutrinos generate neutrinos that come close to the speed of light but do not surpass it. This is backed up by conservation of energy and momentum calculations showing that the lifetimes of these pions would be too long for them to decay into superluminal neutrinos. The tantalizing OPERA results look more than ever in doubt.

Image: The IceCube experiment in Antarctica provides an experimental check on Cowsik’s theoretical calculations. According to Cowsik, neutrinos with extremely high energies should show up at IceCube only if superluminal neutrinos are an impossibility. Because IceCube is seeing high-energy neutrinos, there must be something wrong with the observation of superluminal neutrinos. Credit: ICE.WUSTL.EDU/Pete Guest.
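For scale, ordinary special-relativistic time dilation already stretches a pion's lab-frame lifetime in proportion to its energy. The sketch below computes the mean decay length βγcτ for two hypothetical pion energies: 20 GeV, and 10,000 times that (echoing the IceCube factor above). It uses only standard kinematics and does not model the extra lengthening Cowsik describes for the superluminal case.

```python
import math

M_PION_GEV = 0.13957   # charged-pion mass, GeV/c^2
C_TAU_M = 7.8045       # c times the charged-pion mean lifetime (about 26 ns), metres

def pion_decay_length(energy_gev: float) -> float:
    """Mean lab-frame decay length beta*gamma*c*tau of a charged pion."""
    gamma = energy_gev / M_PION_GEV
    beta_gamma = math.sqrt(gamma**2 - 1.0)  # equals p / (m c)
    return beta_gamma * C_TAU_M

# Hypothetical pion energies: 20 GeV and 10,000 times more.
for e in (20.0, 200_000.0):
    print(f"E = {e:>9.0f} GeV -> mean decay length ≈ {pion_decay_length(e):,.0f} m")
```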

As we continue to home in on what happened in the OPERA experiment, it’s heartening to see how many physicists are praising the OPERA team for their methods. Cowsik himself notes that the OPERA scientists worked for months searching for possible errors and, when they found none, published in an attempt to involve the physics community in solving the conundrum. Since then, Andrew Cohen and Sheldon Glashow have shown (in Physical Review Letters) that if superluminal neutrinos existed, they would radiate energy in the form of electron-positron pairs.

“We are saying that, given physics as we know it today, it should be hard to produce any neutrinos with superluminal velocities, and Cohen and Glashow are saying that even if you did, they’d quickly radiate away their energy and slow down,” Cowsik says.
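A back-of-envelope version of the Cohen and Glashow threshold runs as follows. If a neutrino obeys E = v·p with v slightly above c, then E² - p²c² ≈ δE², where δ = v²/c² - 1. That gives it an effective invariant mass of about √δ·E, and pair emission ν → ν e⁺ e⁻ opens up roughly once this exceeds the pair mass 2mₑ. The sketch assumes the threshold estimate E_th ≈ 2mₑc²/√δ and an OPERA-scale (v - c)/c of 2.5 × 10⁻⁵. It lands at roughly 140 to 150 MeV, far below the OPERA beam energies.

```python
import math

M_ELECTRON_MEV = 0.51099895  # electron rest energy, MeV

def pair_threshold_mev(delta: float) -> float:
    """Approximate neutrino energy above which nu -> nu + e+ + e- is allowed,
    E_th ≈ 2 m_e c^2 / sqrt(delta), with delta = v^2/c^2 - 1 (assumed form)."""
    return 2.0 * M_ELECTRON_MEV / math.sqrt(delta)

# delta ≈ 2 (v - c)/c for small excesses; 2.5e-5 is an assumed OPERA-scale value.
delta = 2 * 2.5e-5
threshold = pair_threshold_mev(delta)
print(f"pair-emission threshold ≈ {threshold:.0f} MeV")
```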

The paper is Cowsik et al., “Superluminal Neutrinos at OPERA Confront Pion Decay Kinematics,” Physical Review Letters 107, 251801 (2011). Abstract available. The Cohen/Glashow paper is “Pair Creation Constrains Superluminal Neutrino Propagation,” Physical Review Letters 107, 181803 (2011), with abstract available.

That is then the second experiment to contradict the OPERA results.

The Cohen and Glashow calculation was tested this fall by the ICARUS experiment, located next door to OPERA at Gran Sasso.

http://arxiv.org/abs/1110.3763

A curious question to ask is … just how much can one violate Special Relativity and still have any large elementary particle accelerator work?

Not much if at all!

I don’t know how many people are aware of how much Relativistic Engineering went into the design of the Large Hadron Collider (just to pick one example).

It’s kind of a strange phrase to say … ‘Relativistic Engineering’ … one thinks of interstellar flight! Yet it’s already a part of our world: the GPS system would not be usable, nor would particle accelerators even work, if engineers had not mastered special and general relativity.
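The GPS remark can be made concrete with the standard textbook estimate of relativistic drift for a satellite clock. The constants below are approximate values assumed here. The orbit is taken as circular and Earth's rotation is ignored, so the results are first-order estimates: roughly 7 µs/day slow from special relativity, roughly 46 µs/day fast from general relativity, and about 38 µs/day net.

```python
import math

C = 299_792_458.0          # speed of light, m/s
GM_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6          # mean Earth radius, m (ground clock; rotation ignored)
R_GPS = 2.656e7            # approximate GPS orbital radius, m (assumed circular)
SECONDS_PER_DAY = 86_400.0

v_sat = math.sqrt(GM_EARTH / R_GPS)  # circular-orbit speed, m/s

# Special relativity: the moving satellite clock runs slow by about v^2 / (2 c^2).
sr_us_per_day = -(v_sat**2) / (2 * C**2) * SECONDS_PER_DAY * 1e6
# General relativity: the higher clock runs fast by about GM/c^2 * (1/R_earth - 1/r).
gr_us_per_day = GM_EARTH / C**2 * (1 / R_EARTH - 1 / R_GPS) * SECONDS_PER_DAY * 1e6
net_us_per_day = sr_us_per_day + gr_us_per_day
# Left uncorrected, the clock drift turns into a ranging error of c * dt.
range_error_km_per_day = C * net_us_per_day * 1e-6 / 1e3

print(f"SR {sr_us_per_day:.1f} us/day, GR +{gr_us_per_day:.1f} us/day, "
      f"net {net_us_per_day:.1f} us/day ≈ {range_error_km_per_day:.1f} km/day")
```

A net drift of that size corresponds to a ranging error of roughly 11 to 12 km per day, which is why the correction has to be engineered into the system.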

I was so hoping they would be proven correct and that it traveled FTL. I’m ready for a warp drive engine. lol

Many people think that clock synchronization is the problem in measuring the speed of neutrinos in the OPERA experiment.

A paper currently submitted for publication shows that this 60 ns discrepancy is consistent with the relativistic Shapiro effect.

The title of this article is:

Additional delay in the Common View GPS Time Transfert, and the consequence for the Measurement of the neutrino velocity with the OPERA detector in the CNGS beam

You can read this article at: http://www.new-atomic-physics.com

A C E

This is typical. A *different* experiment, with assumptions based on the Standard Model, is done with a different result, along with the *assumption* that if the OPERA results were real, this (different) experiment MUST show the same result. In other words, they are more willing to believe and use the Standard Model to evaluate new experimental results than to believe the data.

Basically what happens now is anyone who does repeat the OPERA results will be suspect also and ignored and perhaps the scientists close to the OPERA data and methods will go to their graves convinced they were right unless they feel they must recant to further their careers.

This is exactly how dogma slows down science. It’s all about saving the Standard Model.

“A curious question to ask is … just how much can one violate Special Relativity and still have any large elementary particle accelerator work?

Not much if at all!”

Built into this question is the assumption that SR can never be overturned because we know how to use its principles to design machines now. That is not very convincing logic to me. Any theory that overturns SR will still look a lot like SR in some energy scales.

I hate to be harsh, but the Cowsik paper is still trying to disprove the experimental observation theoretically, and an ugly observation trumps a cool theory every day. I think that measuring pion lifetimes is not very relevant, since the original calculations have to be pretty flaky. FTL neutrinos have imaginary mass, or at least their mass cannot be calculated under SR; hence how much momentum they have is also not calculable under SR (but it may be observed).
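The "imaginary mass" point follows from the standard energy-momentum relation if one keeps it and simply lets v exceed c. With pc = (v/c)E, the quantity m²c⁴ = E² - p²c² turns negative. This sketch assumes a 17 GeV neutrino (an assumed representative beam energy) and the OPERA-scale excess of 2.5 × 10⁻⁵. It only illustrates the sign and rough size, not any particular tachyon theory.

```python
import cmath

def mass_squared_gev2(energy_gev: float, speed_over_c: float) -> float:
    """m^2 c^4 = E^2 - p^2 c^2 with p c = (v/c) E; negative whenever v > c."""
    return energy_gev**2 * (1.0 - speed_over_c**2)

# Assumed values: a 17 GeV neutrino moving 2.5e-5 faster than light.
m2 = mass_squared_gev2(17.0, 1.0 + 2.5e-5)
m = cmath.sqrt(m2)  # a purely imaginary number when m2 < 0
print(f"m^2 c^4 ≈ {m2:.5f} GeV^2, m c^2 ≈ {m.imag * 1e3:.0f}i MeV")
```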

SR is NOT wrong, even if there are FTL neutrinos (which unfortunately I still rather doubt, though it is FUN to imagine!), in the same sense that Newton did a fine job of calculating the orbits of (most of) the planets. If there are FTL neutrinos, we need some new physics. These guys are just trying to put new wine in old skins, which will burst under the pressure.

On the practical side, no experiment like this will disprove the OPERA experiment to the community; only a better experimental result can falsify it at this point.

I suppose that if they HAD found long-lived pions, that would have been cited as evidence in support, but not having found them falsifies nothing except their own (unsubstantiated) calculations. I suppose it was worth a shot, but the net they cast came up empty; does that mean there are no fish in the sea?

“the second experiment to contradict the OPERA results.”

Um, no, certain results _analyzed with existing theory_ contradict the OPERA results. In other words, certain assumptions made by existing theory contradict OPERA. The jury is still out on replicating the OPERA experiment itself.

“how much Relativistic Engineering went into the design of the Large Hadron Collider.”

Yes, and much engineering using Newton’s laws went into the machines and structures of the industrial revolution. That didn’t mean we couldn’t improve on those laws.

James

Yes, because 0.0025% faster than the speed of light means that we can create a warp drive. Even if it is true, it does not mean we can fly to the stars.

Both the Cohen & Glashow and Cowsik papers confuse me. If the current state of the art in physics holds that FTL neutrinos are impossible, how can we use current physics to accurately predict what the consequences of FTL neutrinos would be? To me, that seems like using arithmetic to predict what dividing by zero would look like.

Pardon me, but are they trying to disprove an experimental result that contradicts current theory with…current theory? Seems a little redundant, doesn’t it?

A. A. Jackson states “That is then the second experiment to contradict the OPERA results,” then he cites an experiment looking for the equivalent of Cherenkov radiation. That creates a problem in my understanding, as I will outline below, and I would be grateful if someone could “put me right”.

It is my understanding that if a stable tachyonic particle emits Cherenkov radiation it will keep increasing in speed forever, and since an increase in speed leads to higher levels of radiation, this creates a contradiction that, if memory serves me correctly, was famously outlined by Martin Gardner. If this is the case, we already know that FTL neutrinos can’t produce this sort of radiation. So why are we looking at its absence as evidence against FTL neutrinos?

It should be noted that the machinery for doing the OPERA, ICARUS and IceCube experiments was all put into place and the measurements taken using the Standard Model for the engineering (which incorporates SR).

So the burden of proof would be on those challenging the results to come up with some physics model different than the Standard Model that one could use to build the machinery used to make the experiments. It can’t be too different or one could not have done the original experiments in the first place.

Right now CERN is trying to validate the Standard Model; hints of the Higgs boson were presented just this month. By the by, there is not only a correlation at roughly 125 GeV for the Higgs from ATLAS and CMS, but also from Fermilab’s Tevatron before it was shut down.

Next year will tell the final tale.

(Though, as of now, not seeing supersymmetry so far is a big mystery.)

The best things to come out of particle physics seem to be anything but what they are TRYING to do. The present FTL-whatever might, if real, be the most important item, but except for that, what exactly has been gained in this 40-year effort that can in any way be said to be proportional to the enormous effort and expense invested? Basic research should be funded according to cost-effectiveness, like anything else. Particle physics has been gobbling up funds that could have been used to greater effect elsewhere.

A. A. Jackson: “It should be noted that the machinery for doing the OPERA, ICARUS and IceCube experiments was all put into place and the measurements taken using the Standard Model for the engineering (which incorporates SR).”

Yes, and the engineering for the Michelson-Morley experiment was based on Newton’s laws. And your point is?

“So the burden of proof would be on those challenging the results.”

There is indeed a burden of proof on those challenging the results of the OPERA experiment to show that it is in error, especially by trying to replicate that particular experiment and showing that it leads to different results, not merely by reiterating the obvious, that it contradicts current theory. There is also a burden of proof on those who think the OPERA results were correct: to replicate it. There is no burden of proof on theory either way. Proof here is an issue of experiments and replication.

If OPERA is replicated, the current theory obviously has to be revised. If not, not. The burden of theory is not one of “proof”, it is of properly describing the experimental reality.

Tachyons may well exist. There might also be forms of matter for which gravity is a repulsive force. (Bob Forward loved such speculations) I am truly agnostic about all of that.

But neutrinos are ordinary leptons, with non-zero mass, which have been observed to be subluminal in a variety of systems at a variety of energies. The source of systemic error in the OPERA result will eventually be found.

It is strange how months seem to go with themes. For the second time in December in the comments on these pages I feel that I have detected the influence of the paradigm approach to science creeping towards the strangulation of progress in the field. Thus, like nick, I take issue with Jackson’s “the burden of proof would be on those challenging the results to come up with some physics model different than the Standard Model that one could use to build the machinery used to make the experiments”

Another comments section theme for the month on this site is the Copernican Principle, and I’m wondering if many realise that his new model where planets moved on circular orbits around the Sun fitted the data far worse than the epicycle model that was the “standard model” of his day. Copernicus knew that his was much less predictive, but he still felt that its simplicity and reduced need to place Earth in a privileged position made up for this. Thus he must have felt that it was okay to postulate a model in parallel to the existing model that, when further worked by greater minds than his own, may, or may not, replace the existing model. And an even greater point of interest in the difference from today’s prevailing mindset, those greater minds than his own must have taken up this challenge rather than taken to griping about it.

“Nick: Yes, and the engineering for the Michelson-Morley experiment was based on Newton’s laws. And your point is?”

If the LHC had been built using Newtonian physics, it would have cost maybe 10 thousand dollars instead of billions… plus it could not have done the correct physics.

Just one point to make.

I think the lowest-hanging fruit has always been the likely problem.

1) An overestimation of distance, regardless of their claims of accuracy.

2) Imprecise coordination between the sending and receiving timers.

3) Both could be at fault.

The most likely culprit was the GPS system that determined both distance and timing coordination. An error of only 20 meters in a 450-mile distance could explain the difference between the speed of light and that of the neutrinos.

A. A. Jackson, I seriously don’t understand your point. Would you be so kind as to explain?
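The 20-meter figure is easy to check: light covers about 18 m in 60 ns, so a distance overestimate of that size would account for the whole effect. This sketch uses the comment's 450-mile baseline and assumes, for illustration, that the entire error sits in the distance rather than in the timing.

```python
C = 299_792_458.0          # speed of light in vacuum, m/s
METRES_PER_MILE = 1609.344

timing_offset_s = 60e-9                      # the reported early arrival
distance_equivalent_m = C * timing_offset_s  # distance light covers in that time
baseline_m = 450 * METRES_PER_MILE           # the comment's 450-mile figure

print(f"60 ns of light travel ≈ {distance_equivalent_m:.1f} m "
      f"out of a {baseline_m / 1e3:.0f} km baseline")
```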

For me, what you say, looks like this: Building the LHC based on Newton’s physics would have been much less expensive but completely useless. I don’t know what point you want to make with this.

@Duncan Ivry

Someone had said the Michelson-Morley experiment was engineered with Newtonian mechanics; actually, Newton plus Maxwell’s electrodynamics would be more precise.

I was pointing out that one must use special relativity to engineer the LHC, and the ATLAS and CMS detectors are designed using the Standard Model, else no useful data would be generated by the experiment.

That is the point.

It looks as if we are in agreement.

Oops Corrected Version, the previous version had exponent symbolic notational issues.

Hi Folks and Happy New Year!

I would love a confirmation of superluminal neutrino results, but I might not be too upset if the results are validly rebutted, for the following reasons.

We in the modern, science-fiction-infused culture of 21st-century Earth have all heard of sci-fi and government conspiracy theories of time travel, and space heads such as myself are generally aware of the pros and cons of theories of backward time travel, or of future and backward time travel. According to many versions of these still-speculative theories, the past and perhaps the future really exist as backward and forward temporal extensions from the present.

However, theoretically, space, and thus time, are quantized at the level of the Planck length and the Planck time. These units are, respectively, $\ell_P = \sqrt{\hbar G / c^3}$ and $t_P = \sqrt{\hbar G / c^5}$, or about $1.6 \times 10^{-35}$ meters and $5.39 \times 10^{-44}$ seconds. Recent astronomical observations tentatively and very indirectly suggest a length quantization of less than about $10^{-48}$ meters.
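The two Planck-unit formulas can be evaluated directly. This sketch assumes CODATA 2018 values for ħ, G and c, reproduces the quoted 1.6 × 10⁻³⁵ m and 5.39 × 10⁻⁴⁴ s, and confirms that the Planck time is just the Planck length divided by c.

```python
import math

# CODATA 2018 values (assumed here).
HBAR = 1.054571817e-34  # reduced Planck constant, J s
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0       # speed of light, m/s

planck_length = math.sqrt(HBAR * G / C**3)  # metres
planck_time = math.sqrt(HBAR * G / C**5)    # seconds

print(f"l_P ≈ {planck_length:.3e} m, t_P ≈ {planck_time:.3e} s")
```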

Thus, the extension of an object or an energy state into the past or into the future for a distance greater than the Planck time might be discretized and therefore temporally composite with respect to the background.

Now entire assemblages of atoms, and the atoms’ constituent particles and fields, may have a backward and forward temporal extension that is smeared out to greater continuity with respect to the past and future, simply because each atom, or each discrete quantum of mass and energy composing a large microscopic body or a macroscopic body, may proverbially march temporally to its own Planck-time ruler. Quite simply put, the ticks for each atom or quantized mass-energy unit are likely not all aligned with one another. The general concept here is analogous to two or more parallel rulers placed side by side in contact with each other, displaced from each other by less than one tick of the ruler.

Thus, the larger the system, the more ambiguity there is in a quantum Planck Time tick that would reference the temporal extension of the system or by which the system could be uniformly temporally measured.

The degree of smearing, Smear, is likely to be at least m-fold for a system with m fundamental quantized particles. In reality, the system’s degree of smearing is likely to be closer to that defined as follows:

If N is the total number of particles or states, the degree of smearing is equal to:

$$\mathrm{Smear} = N = n_i \, \frac{\sum_j e^{-U_j/(k_B T)}}{e^{-U_i/(k_B T)}}$$

where the sum runs over all levels $j$, $n_i$ is the number of particles occupying level $i$ or the number of feasible microstates corresponding to macrostate $i$, $U_i$ is the energy of level $i$, $T$ is the temperature, and $k_B$ is the Boltzmann constant. This expression applies to systems described by Boltzmann distributions.
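The expression for N is the Boltzmann distribution n_i = N e^{-U_i/(k_B T)} / Σ_j e^{-U_j/(k_B T)} solved for N. The sketch below checks that inversion on a made-up three-level system. The energies, temperature and particle count are hypothetical, and the check says nothing about the "smearing" interpretation itself.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def boltzmann_populations(n_total, energies_j, temperature_k):
    """Occupation n_i = N * exp(-U_i/(k_B T)) / Z for each level."""
    beta = 1.0 / (K_B * temperature_k)
    weights = [math.exp(-beta * u) for u in energies_j]
    z = sum(weights)  # partition function Z = sum_j exp(-U_j/(k_B T))
    return [n_total * w / z for w in weights]

def total_from_level(n_i, u_i, energies_j, temperature_k):
    """Solve the same distribution for N: N = n_i * Z / exp(-U_i/(k_B T))."""
    beta = 1.0 / (K_B * temperature_k)
    z = sum(math.exp(-beta * u) for u in energies_j)
    return n_i * z / math.exp(-beta * u_i)

# Hypothetical three-level system at 300 K, levels spaced by k_B * T.
T = 300.0
levels = [0.0, K_B * T, 2 * K_B * T]
pops = boltzmann_populations(1000.0, levels, T)
recovered = [total_from_level(n, u, levels, T) for n, u in zip(pops, levels)]
print(pops, recovered)
```

Every level returns the same total, so the formula does not depend on which level i is used.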

Alternatively, $\mathrm{Smear} = \Omega = e^{S/k_B}$,

where $S$ is the system entropy and $\Omega$ is the number of microstates corresponding to the observed macrostate.

For systems that can be defined by a compound or collective wave function, perhaps the smallest unit of time for the system is, conservatively, the Planck time divided by $N$:

$$\frac{\sqrt{\hbar G / c^5}}{N} = \frac{\sqrt{\hbar G / c^5}}{n_i \sum_j e^{-U_j/(k_B T)} \big/ e^{-U_i/(k_B T)}},$$

or, more generally,

$$\frac{\sqrt{\hbar G / c^5}}{\Omega} = \frac{\sqrt{\hbar G / c^5}}{e^{S/k_B}}.$$
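Evaluating this time unit naively fails, because Ω = e^{S/k_B} overflows a floating-point number for any macroscopic entropy. The following minimal sketch assumes a hypothetical entropy of 1 J/K and works in base-10 logarithms instead. The result is a time of about 10 to the power of minus 3 × 10²² seconds.

```python
import math

K_B = 1.380649e-23          # Boltzmann constant, J/K
PLANCK_TIME = 5.391247e-44  # Planck time, s (CODATA value, assumed here)

def log10_planck_time_over_omega(entropy_j_per_k: float) -> float:
    """log10(t_P / Omega) with Omega = exp(S / k_B), evaluated in log space
    because exp(S / k_B) overflows a float for macroscopic entropies."""
    log10_omega = (entropy_j_per_k / K_B) / math.log(10)
    return math.log10(PLANCK_TIME) - log10_omega

# Hypothetical entropy of 1 J/K; math.exp(1.0 / K_B) would raise OverflowError.
print(log10_planck_time_over_omega(1.0))
```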

The beginning of all cosmic temporal rulers may have occurred at the very instant of the big bang, after which quantum fluctuations caused the entire set of rulers, one for each energy state, to become mismatched. For closed universes destined for re-collapse, the very ends of the rulers will line up at the same tick once again. Such an initial and final line-up may imply an indistinguishability of the birth of our universe from its death, should it be closed and destined for re-collapse. Should the universe re-inflate or rebound, the time-dependent distribution of microstates will likely change due to quantum-statistical randomness or semi-randomness; however, once again, there may be no distinction between the final collapse and re-expansion and the second collapse. The process might continue forever, with all births and all collapses being indistinguishable in terms of boundary conditions. Should we learn how to travel at stupendously high gamma factors, and to cloak our spacecraft from the external universe, then upon closely approaching the end state of our universe we should be able to tunnel or travel into any number of future versions of our cyclical big bang, provided our universe is a cyclical, closed one.

If our universe is infinitely large, say infinite in the sense of the least transfinite ordinal, which I will refer to as omega ($\omega$), in terms of number of microstates, then the number of possible universe versions is at least $N = \omega = n_i \sum_j e^{-U_j/(k_B T)} / e^{-U_i/(k_B T)}$ as a Boltzmann approximation and, more generally, $\Omega = \omega = e^{S/k_B}$.

If our universe is infinitely large, say infinite in the sense of the least transfinite aleph number, $\aleph_0$, which is the number of integers, in terms of number of microstates, then the number of possible universe versions is at least $N = \aleph_0 = n_i \sum_j e^{-U_j/(k_B T)} / e^{-U_i/(k_B T)}$ as a Boltzmann approximation and, more generally, $\Omega = \aleph_0 = e^{S/k_B}$.

If our universe is infinitely large, say infinite in the sense of the second least transfinite aleph number, $\aleph_1$, which is the number of real numbers according to the continuum hypothesis, in terms of number of microstates, then the number of possible universe versions is at least $N = \aleph_1 = n_i \sum_j e^{-U_j/(k_B T)} / e^{-U_i/(k_B T)}$ as a Boltzmann approximation and, more generally, $\Omega = \aleph_1 = e^{S/k_B}$.

If our universe is infinitely large, say infinite in the sense of the third least transfinite aleph number, $\aleph_2$, in terms of number of microstates, then the number of possible universe versions is at least $N = \aleph_2 = n_i \sum_j e^{-U_j/(k_B T)} / e^{-U_i/(k_B T)}$ as a Boltzmann approximation and, more generally, $\Omega = \aleph_2 = e^{S/k_B}$.

One can proceed with similar conjectures with ever higher transfinite aleph numbers, such as $\aleph_i$ for $i = 3, 4, 5, \ldots$ ad infinitum.

For eternally open universes, such a past-future boundary indistinguishability would not apply, but such a universe would expand forever, perhaps implying innumerable possible future phase changes, all of the additional entropy, order and disorder that would result, and untold numbers of possible life forms. One thing is for sure: achieving ever higher relativistic gamma factors permits us to travel ever further into the future of our universe from our present era, even if nature censors superluminal energy and signal propagation in the traditional sense of the word.

The notion of a set of temporal rulers initially perfectly aligned kind of smacks of Newtonian universality, however, perhaps a true contextual background is some form of hybrid between Newtonianism and what I will refer to as Einsteinism.

Regards;

Jim

….Neutrinos have the ability to vibrate between past, present and future along their displacement…

See:

http://la-theory.blogspot.com/2011/12/neutrinos-spazioni-and-velocity.html

Many have come up with possible explanations for the ftl neutrinos, and I see more attempts here. What puzzles me though, is that no one even attempts to explain the raw SN1987a data, and seem content just using a cropped subset of that data that has seemed amenable to more conventional interpretation. I don’t feel that it should fall to me to point out the bloody obvious to particle physicists, but five hours before the main neutrino burst hit us 5 neutrinos were recorded over 7 seconds by the Mont Blanc detector. That detector can also record direction, and the direction of that burst also located it within the Large Magellanic Cloud. It was as if a very narrow and far more powerful beam of neutrinos hit part of the Earth 5 hours before the main bunch.

Everyone at the time seemed to acknowledge that its statistical significance seemed very high, but it was eventually dismissed as an incredible anomaly due to the inability of theory to account for it. Such data selection is fine for most purposes, but it means that the cropped data set is now invalid for the purpose of dismissing any result that would imply “new physics”.

@Henry

Depending on the instant at which it is “photographed” by the measurement system, the neutrino could appear early or late compared to c, but it will always stay in a neighborhood of c without ever straying too far.

It also means that the time difference between the neutrino and light is not proportional to the distance.

After writing my hypothesis, I read these notes by an Italian scientist, Giovanni Fiorentini:

“But the glass is half empty,” Fiorentini continued, “because the neutrino bunches are very compact when they leave and should be compact when they arrive, too; instead it is as if some of them took a little longer and others a little less, as if there were some scattering. This probably reflects the fact that the time resolution of the detector is not one nanosecond, that is, it is a bit worse than expected, although not to such an extent as to affect the result: we can say that the test went 70 percent well, but not 100 percent.”

In short, the result is so dramatic that it will need checks and cross-checks before being fully accepted. It will be necessary to try to reconcile it with previous measurements, such as those related to the supernova that exploded in 1987 in the Large Magellanic Cloud, at a distance of 150,000 light years from us. On that occasion, the neutrinos arrived together with the light, that is, within a few hours of it, which means that their speed was equal to that of light to within a few parts per billion.
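The parts-per-billion figure follows from simple arithmetic. This sketch assumes a 3-hour window as a reading of "a few hours" and uses the quoted 150,000 light years. For comparison, it also computes how early neutrinos would have arrived had they carried an OPERA-scale excess of 2.5 × 10⁻⁵. That comparison assumes the excess is energy-independent, which is a real caveat, since supernova neutrinos have energies around a thousand times lower than OPERA's.

```python
HOURS_PER_YEAR = 365.25 * 24

distance_ly = 150_000   # distance quoted above, light years
window_hours = 3.0      # assumed reading of "a few hours"
opera_excess = 2.5e-5   # OPERA-scale (v - c)/c, assumed energy-independent

travel_hours = distance_ly * HOURS_PER_YEAR  # light travel time, hours
speed_bound = window_hours / travel_hours    # |v - c|/c allowed by the window
early_years = distance_ly * opera_excess     # first-order head start, years

print(f"|v - c|/c ≲ {speed_bound:.1e}; "
      f"OPERA-like neutrinos would lead by ~{early_years:.2f} years")
```

Instead of a few hours, the head start would have been a few years, which is the usual tension with the OPERA number.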

http://www.lescienze.it/news/2011/11/18/news/neutrini_superluminali_commento-675984/

The Theory of Relativity leads to tachyons. They are moving pieces of space, so they have inertial masses/volumes. This means that the Higgs mechanism is useless. Just the inertial masses/volumes and their energies are the fundamental physical quantities. The phase transitions of the perfect gas composed of tachyons lead to the Einstein spacetime. The ground state of this spacetime consists of non-rotating-spin neutrino-antineutrino pairs. It is very difficult to detect such binary systems. The neutrino-antineutrino pairs are the carriers of photons, and c is the natural speed of the neutrino-antineutrino pairs in the gas composed of tachyons; i.e., the Theory of Relativity and the Einstein formula E = mc^2 are correct because they do not concern free neutrinos. The experimental data and chaos theory (especially the Feigenbaum constant 4.669…) lead to an atom-like structure of baryons, so we can solve all the unsolved problems of the Standard Model in the low-energy regime. Such a theory shows that the mass of neutrinos is invariant, so in special interactions they can be superluminal but non-relativistic particles. Superluminal neutrinos appear when muons (MINOS), relativistic pions (OPERA) or W bosons (supernova SN 1987A) decay INSIDE baryons. There are three methods to calculate the superluminal speeds of neutrinos. The results obtained are consistent with the experimental data and observational facts.

The atom-like structure of baryons also leads to the latest LHC experimental data. Three weak signals/maxima/peaks appear at the masses 105 GeV, 118 GeV and 140 GeV, associated with the orbits, plus a slightly higher peak at 127-128 GeV for the Schwarzschild surface for the strong interactions. There should also be a peak at 815 GeV = 0.815 TeV.

Future experimental data will confirm that the Higgs boson(s) do not exist, whereas free neutrinos produced inside baryons are superluminal and non-relativistic particles. Before 1900 wave theories dominated, then quantum physics, whereas now (2012) is the time for theories that start from a gas composed of tachyons. Such theories solve hundreds of unsolved basic problems within today’s mainstream theories.

The assumption in some of these cases seems to be that, if neutrinos arrive ahead of light, they must have been traveling at a physical velocity through space faster than light. But perhaps some mechanism we’re unaware of can cause a one time displacement in time or space when the neutrinos are created, such that the “starting line” for the neutrinos is different than for the light. In which case you’d get the same discrepancy in arrival time for different distances, and no Cerenkov radiation.

Similar to the way tunneling photons appear to arrive faster than they should if no obstacle were in the way, not because they’re traveling faster over the entire distance of the experiment, but because the distance of the barrier tunneled through was simply skipped altogether, rather than traveled through.

I don’t think you can really refute an experimental result, save with more experiments.

For want of a good connection, the warp drive was lost (for now):

http://whyevolutionistrue.wordpress.com/2012/02/22/whoops-faster-than-light-neutrinos-dont-exist-after-all/

http://blogs.discovermagazine.com/cosmicvariance/2012/02/22/neutrinos-and-cables/

Neutrinos and Cables

by Sean Carroll from Cosmic Variance blog

February 22, 2012

I’m a little torn about this: the Twitter machine and other social mediums have blown up about this story at Science Express, which claims that the faster-than-light neutrino result from the OPERA collaboration has been explained as a simple glitch:

According to sources familiar with the experiment, the 60 nanoseconds discrepancy appears to come from a bad connection between a fiber optic cable that connects to the GPS receiver used to correct the timing of the neutrinos’ flight and an electronic card in a computer. After tightening the connection and then measuring the time it takes data to travel the length of the fiber, researchers found that the data arrive 60 nanoseconds earlier than assumed. Since this time is subtracted from the overall time of flight, it appears to explain the early arrival of the neutrinos. New data, however, will be needed to confirm this hypothesis.

I suppose it’s possible. But man, that would make the experimenters look really bad. And the sourcing in the article is just about as weak as it could be: “according to sources familiar with the experiment” is as far as it goes. (What is this, politics?)

So it’s my duty to pass it along, but I would tend to reserve judgment until a better-sourced account comes along. Not that there’s much chance that neutrinos are actually moving faster than light; that was always one of the less-likely explanations for the result. But this isn’t how we usually learn about experimental goofs.

Update from Sid in the comments: here’s a slightly-better-sourced story:

http://www.washingtonpost.com/world/europe/european-researchers-find-flaw-in-experiment-that-measured-faster-than-light-particles/2012/02/22/gIQApmdoTR_print.html