Long-time Centauri Dreams readers know I love the idea of ‘deep time,’ an interest that cosmology provokes on a regular basis these days. Avi Loeb’s new work at Harvard tweaks these chords nicely as the theorist examines what we know and when we won’t be able to study it any longer. For an accelerating universe means that galaxies are moving outside our light horizon, to become forever unknown to us. Using tools like the Wilkinson Microwave Anisotropy Probe, we’ve been able to learn how density perturbations in the early universe, thought to have been caused by quantum fluctuations writ large by a period of cosmic inflation, emerged as the structures we see today. But are there limits to cosmological surveys?

Start with that period of inflation after the Big Bang, which would have boosted the scale of things by more than 26 orders of magnitude, helping to account for the fact that the cosmic microwave background (CMB) appears so uniform in all directions. We can tease out the irregularities that have grown into galaxies today. Loeb refers to these density perturbations as a ‘Rosetta stone’ that has unlocked our understanding of the largest cosmological parameters.

Even so, he concludes in his new paper that our ability to understand the earliest period of the universe deteriorates over time. In fact, the optimum time to study the cosmos turns out to have been more than 13 billion years ago, with information being lost along the way in the eras since. From the paper:

… the most accurate statistical constraints on the primordial density perturbations are accessible at z ~ 10, when the age of the Universe was a few percent of its current value (i.e., hundreds of Myr after the Big Bang). The best tool for tracing the matter distribution at this epoch involves intensity mapping of the 21-cm line of atomic hydrogen… Although the present time (a = 1) is still adequate for retrieving cosmological information with sub-percent precision, the prospects for precision cosmology will deteriorate considerably within a few Hubble times into the future.

The era Loeb picks as optimum for observation is just 500 million years after the Big Bang, when cosmic perturbations were still emerging in the form of the first stars and galaxies. Hubble time, as defined by the standard cosmological model, is 13.8 billion years, giving us a sense of the scale he is working with. Today we can use 21-centimeter surveys to map the early distribution of matter, but such observations will be impossible in the far future. We have to reckon with the fact that dark energy is still an open question — does it evolve with time? Loeb’s work assumes a universe with a true cosmological constant, but he notes that the ultimate loss of information will occur whether or not an accelerated expansion varies as the universe ages.
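To see how a redshift of about 10 lines up with "hundreds of Myr after the Big Bang," here is a minimal sketch using the closed-form age of a flat universe containing only matter and a cosmological constant. The parameter values (H0 = 70 km/s/Mpc, matter fraction 0.3) and the function names are illustrative assumptions, not numbers taken from Loeb's paper, and radiation is neglected.

```python
import math

# Assumed, illustrative parameters (not taken from the paper):
H0_KM_S_MPC = 70.0          # Hubble constant
OMEGA_M = 0.3               # matter density fraction
OMEGA_L = 1.0 - OMEGA_M     # cosmological constant fraction (flat universe)

KM_PER_MPC = 3.0857e19
SECONDS_PER_GYR = 3.1557e16


def hubble_time_gyr(h0=H0_KM_S_MPC):
    """Return 1/H0 expressed in billions of years."""
    return KM_PER_MPC / h0 / SECONDS_PER_GYR


def age_at_redshift_gyr(z):
    """Cosmic age at redshift z for flat matter + Lambda, radiation ignored."""
    x = math.sqrt(OMEGA_L / OMEGA_M) * (1.0 + z) ** -1.5
    return 2.0 / (3.0 * math.sqrt(OMEGA_L)) * math.asinh(x) * hubble_time_gyr()


if __name__ == "__main__":
    t_now = age_at_redshift_gyr(0.0)
    t_z10 = age_at_redshift_gyr(10.0)
    print(f"age today:   {t_now:.2f} Gyr")
    print(f"age at z=10: {t_z10:.2f} Gyr ({100 * t_z10 / t_now:.1f}% of today)")
```

Under these assumptions the age at z = 10 comes out a little under half a billion years, a few percent of the present age. Different parameter choices shift the numbers slightly but not the overall picture.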

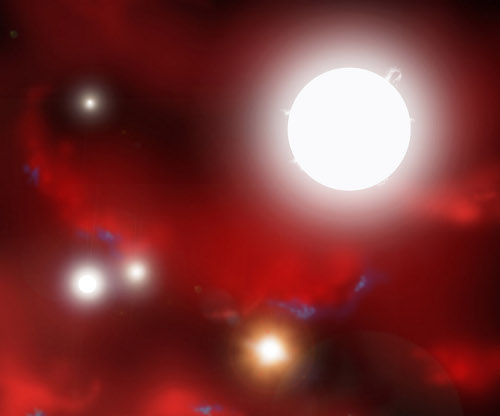

Image: New research finds that the ideal time to study the cosmos was more than 13 billion years ago, just about 500 million years after the Big Bang – the era (shown in this artist’s conception) when the first stars and galaxies began to form. Since information about the early universe is lost when the first galaxies are made, the best time to view cosmic perturbations is right when stars began to form. Modern observers can still access this nascent era from a distance by using surveys designed to detect 21-cm radio emission from hydrogen gas at those early times. Credit: Harvard-Smithsonian Center for Astrophysics.

This work takes us into deep time indeed, with Loeb’s calculations showing that beyond 100 Hubble times (a trillion years in the future), the wavelength of the CMB and other extragalactic photons will be stretched by a factor of 10^29 or more, making current cosmological sources unobservable:

While the amount of information available now from observations of our cosmological past at z ~ 10 is limited by systematic uncertainties that could potentially be circumvented through technological advances, the loss of information in our future is unavoidable as long as cosmic acceleration will persist.
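The scale of that stretching can be checked roughly with the scale factor of a flat universe dominated by matter and a cosmological constant. This is only a sketch under assumed parameters (matter fraction 0.3, and "Hubble time" taken to mean 1/H0). It is not Loeb's own calculation, and the result is sensitive to both choices.

```python
import math

# Assumed, illustrative parameters (not taken from the paper):
OMEGA_M = 0.3
OMEGA_L = 1.0 - OMEGA_M


def scale_factor(t):
    """Scale factor a(t), with t measured in units of 1/H0 since the Big Bang.

    Valid for flat matter + Lambda; normalised so that a = 1 today.
    """
    arg = 1.5 * math.sqrt(OMEGA_L) * t
    return (OMEGA_M / OMEGA_L) ** (1.0 / 3.0) * math.sinh(arg) ** (2.0 / 3.0)


def age_today():
    """Present cosmic age in units of 1/H0."""
    return 2.0 / (3.0 * math.sqrt(OMEGA_L)) * math.asinh(math.sqrt(OMEGA_L / OMEGA_M))


def stretch_factor(hubble_times_ahead):
    """Factor by which wavelengths emitted today are stretched in the future."""
    t0 = age_today()
    return scale_factor(t0 + hubble_times_ahead) / scale_factor(t0)


if __name__ == "__main__":
    orders = math.log10(stretch_factor(100.0))
    print(f"stretch after 100 Hubble times: about 10^{orders:.0f}")
```

With these assumed values the stretch after 100 Hubble times comfortably exceeds the factor of 10^29 that Loeb gives as a lower bound, because the expansion becomes exponential once the cosmological constant dominates.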

It’s always interesting to speculate about what any observers in this remote future would make of the universe without the ability to recover evidence of the early cosmological perturbations. Clearly our observations have a fundamental limit as a function of cosmic time, and even our existing data, which Loeb sees as far from optimal, will one day be unrecoverable from new observations. If there are future advances that would somehow surmount these limitations to open up the universe’s past, we can only speculate on what they might be, and how they would be developed by a civilization living in this unimaginably remote future cosmos.

The paper is Loeb, “The Optimal Cosmic Epoch for Precision Cosmology,” accepted for publication in the Journal of Cosmology and AstroParticle Physics (preprint).

Ha! This is a lot like archaeology. The greater the time depth, the more likely it is that intervening events have scrambled the archaeological record, making it hard to make precise determinations about prehistory.

Interesting that cosmologists can precisely quantify some limits to their knowledge and how these limits evolve over time.

I wonder if there’s some deep connection to this kind of observation and the nature of time itself and entropy. The remote past becomes something more and more loosely constrained and determined as historical evidence becomes scrambled. Perhaps the far far future will look more “timeless” because it will be impossible to determine origins as all historical evidence gets erased via entropy. In that sense, from the perspective of people in the very distant future, the past and the future start to look identical.

No worries. The notion that cosmic expansion is accelerating will be debunked with JWST.

@Erik Anderson I don’t know if JWST will debunk cosmic acceleration. That’s partly based on the observation of hundreds of type 1a supernovae; to change or overturn that you would need to show that type 1a magnitudes are NOT constant, or they vary widely according to metallicity (or age).

Better data on the BAO (Baryon Acoustic Oscillations) may narrow the constraints on dark energy, but won’t eliminate it.

The cosmological theories that are changing, and will change drastically, are those of Dark Matter – there just isn’t enough and the theories trying to explain its distribution are often Middle-Ages ridiculous. TeVeS or another similar relativistic version of MOND that modifies gravity at very low accelerations will probably eliminate the need for most (not all) of the Dark Matter required.

Another theory (or set of theories) that is/are not looking healthy is String Theory. It’s just not testable. If it can’t be tested or falsified, then it’s Philosophy or Math, not Physics.

The assumption is that each future civilization’s acquisition of information is constrained to a point in time, with no knowledge handed down by prior civilizations. If this is not true, then future civilizations will be able to comprehend their universe based on information transferred to them from the past.

Now planning for that deep time information transfer would be an interesting project, making the “clock of the long now” like a children’s exercise in comparison.

@Alex

You gotta work to overcome entropy. Digital preservation is never just “preservation” (bits rot quickly in most storage media), rather data preservation requires active curation. It can’t be interrupted.

It will be hard to communicate much knowledge to the far future. So I think deep time will erase most of our knowledge and our data will be lost to noise, unless we’re successful at continuously capturing energy to fuel data curation.

Sobering.

Alex, the essence of science is repeatability. If that repeatability is lost in some distant future, the knowledge our descendants will have will be closer to religion than science.

Separately, along the same lines as Eric’s thoughts, what are the implications of this on the conservation of information assumption? Is the information Loeb is talking about truly lost from the universe? Or is it just spread out?

When I saw today’s post title my brain clicked: budget cuts?! Oh the humanity!

@Eric Why should information preservation be in digital form, a form so subject to entropy? In our modern infatuation with everything subatomic we’ve forgotten the large scale. I’ve seen brass sheets hanging outdoors at an Indian temple carrying information as fresh and whole as when they were made thousands of years ago. No reason why that large form wouldn’t last a great deal longer.

@FrankH I believe in the consistency of standard candles. I do not believe in the redshift-age relationship given by LCDM cosmology. The hundreds of SN1A observations do not go deep enough into the universe to expose the problem.

I predict that JWST will find highly evolved galaxies at astonishingly high z values, which will raise the question of how they could evolve so quickly after the Big Bang. We are already seeing the beginnings of a problem near the edge of the visible universe with discoveries of compact galaxies believed to be only several hundred million years old. JWST observations should make the LCDM chronology even more implausible, helping us to expose the theoretical error upon which dark energy subsists.

@ Eric

Sobering indeed.

I note that living organisms have preserved key features of core metabolic processes over billions of years of evolution, so we may have a potential model of how we can store data over long periods.

Massive redundancy of slowly rotting data would also enable reconstruction until the rot was complete, at least increasing the replication intervals.

It is easy to preserve digital data indefinitely, just make it redundant, protect it with hash codes and replicate it often. Self- or otherwise.

Also, if and when our descendants venture out into the galaxy, there will be no way that they could all disappear. Thus, there will always be someone or something around to safeguard, manage, organize, and interpret the accumulated knowledge of all of humankind (whether still human or not).

Therefore, in a trillion years, if you want to get the 2012 copy of Wikipedia, you will likely be able to download it from your local data repository in a few microseconds…. Of course, to read it, you’d have to take classes (or downloads?) in the ancient languages, first.

It’s very hard to imagine any kind of civilization lasting long enough to have to consider this kind of thing. As we progress we exponentially invent more ways of killing ourselves and everything else.

Stan. The Voyager spaceprobes are carrying an early form of data storage by way of metal plates and digital formats advertising their progenitors; as well as exhibiting the structural technology of their construction. Not quite ‘large scale’ as you suggest but designed to last (hopefully) for thousands of years.

Eric

“You gotta work to overcome entropy. Digital preservation is never just “preservation” (bits rot quickly in most storage media), rather data preservation requires active curation. It can’t be interrupted.

It will be hard to communicate much knowledge to the far future. So I think deep time will erase most of our knowledge and our data will be lost to noise, unless we’re successful at continuously capturing energy to fuel data curation.

Sobering.”

By using redundancy (storing the same information in two/more places) you can decrease the probability of losing information to arbitrarily low values.

Preserving intact information until the stars themselves turn into iron is far less challenging than you make it out to be.
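The redundancy argument can be put in numbers. A minimal sketch, assuming each copy fails independently with the same probability during one replication interval (the independence assumption is doing most of the work here, and the function names are made up for illustration):

```python
def loss_probability(p_single: float, copies: int) -> float:
    """Chance that all `copies` independent replicas fail in one interval."""
    if not 0.0 < p_single < 1.0:
        raise ValueError("p_single must be strictly between 0 and 1")
    if copies < 1:
        raise ValueError("need at least one copy")
    return p_single ** copies


def copies_needed(p_single: float, target: float) -> int:
    """Smallest number of copies whose joint loss probability is <= target."""
    if target <= 0.0:
        raise ValueError("target must be positive")
    copies = 1
    while loss_probability(p_single, copies) > target:
        copies += 1
    return copies


if __name__ == "__main__":
    # Even a coin-flip medium gets below one-in-a-thousand with ten copies.
    print(copies_needed(0.5, 0.001))
```

The loss probability falls geometrically with the number of copies, which is the sense in which it can be made arbitrarily low. Correlated failures, such as all copies sharing one site or one power source, would break the independence assumption and make the real risk higher than this estimate.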

@FrankH – Agree

String theory is the Norwegian Blue Parrot that John Cleese bought. According to Woit’s blog at Columbia, no string theorists hired (in the US) so far this year. Good riddance! However, I am sure that string practitioners can find work in the fishing industry, as they are master baiters. Or perhaps they can seek employment elsewhere in the multiverse, in a brane where their “theories” are testable.

As for dark matter, it is looking increasingly unwell, but a bit premature to pronounce dead yet. None of the MOND variants are ready for prime time either.

On the other hand, accelerating cosmic expansion is an increasingly well-supported observation.

Every deep space probe should be equipped with information about humanity and our world, both for any ETI recipients and our descendants who move into the wider Milky Way galaxy.

Such information packages should also be buried under the surface of relatively stable worlds in our Sol system. If and when our species decides to expand into space, this precious and probably rare if not lost knowledge will be their reward.

As for preserving aspects of ourselves on the planet Earth, here you go:

http://listverse.com/2009/04/27/top-10-incredible-time-capsules/

http://weburbanist.com/2010/01/03/saving-time-ten-trippy-time-capsules/

And here is another reason – in addition to terrestrial erosion – why our civilization’s information needs to be protected up in space for now:

http://www.oglethorpe.edu/about_us/crypt_of_civilization/most_wanted_time_capsules.asp

@Joy

I see another Woit reader!

I’m not particularly wed to MOND, it just seems that theories that modify gravity at low accelerations do a better job of explaining observations than theories with huge, pervasive amounts of magical dark matter. There’s no doubt that there’s plentiful amounts of dark matter (probably mostly in neutrinos) but not 80+% of the matter in the Universe, and certainly not with the magical powers (or self awareness) required for it to match observations.

I agree that accelerating cosmic expansion is here to stay; while one set of data may have large error bars, other unrelated data still produces similar results.

String Theory – On second thought, let’s not mention String Theory. It is a silly place.

Deep time, will there be anything left to study, or anyone to study it?

Either the “big rip” will tear atoms themselves apart or all star formation will have stopped eons ago. Just a dark universe composed of burned-out stars and black holes.

Who or what would be there to study anything?

Martin Alfredsson:

Depends on how deep. If a few trillion years is deep enough for you, it appears there will be plenty of active red dwarfs left at that time to sustain our descendants, in whatever form they may exist. If there will be anything left to study, I don’t know. I suppose after millions of years of study, you may actually run out of things that have not been studied, yet. Or not. Hmmm…

Long before that time, really advanced intelligences will have either found or made their own “baby” universes and jumped into them, avoiding this one – assuming they cannot control the Universe itself by then.

http://arxiv.org/abs/1110.3998

http://www.orionsarm.com/eg-article/4609cb6a051a4

“control the universe” is pretty much a contradiction in terms. We are a product of the universe, and that table cannot be turned.

Eniac said on May 25, 2012 at 23:35:

““control the universe” is pretty much a contradiction in terms. We are a product of the universe, and that table cannot be turned.”

I am a product of my mother and father, but every now and then I was able to get them to do what I wanted. :^)

http://arstechnica.com/tech-policy/2011/06/internet-archive-backs-up-digital-books-on-paper/

Internet Archive starts backing up digital books on paper

The Internet Archive is turning to a tried-and-true medium for backing up …

by Jon Stokes – June 13 2011, 7:09pm EDT

If you want real long-term backups of digitized ebooks, then look no further than dead tree. At least, that’s the consensus of the Internet Archive project, which has announced an incredibly ambitious plan to store one physical copy of every published book in the world.

“Internet Archive is building a physical archive for the long term preservation of one copy of every book, record, and movie we are able to attract or acquire… The goal is to preserve one copy of every published work,” writes IA’s Brewster Kahle in a lengthy blog post about the plan.

Kahle cites a number of reasons for wanting to preserve physical copies of works that are being digitized; for instance, a dispute could arise about the fidelity of the digital version, and only access to a copy of the original would resolve it. Kahle also told Kevin Kelly that we’ll eventually want to rescan these books at an even higher DPI, so the digital copies will be waiting when we do.

Another reason for keeping a physical copy, and one that Kahle doesn’t mention, is that the problem of long-term digital storage still isn’t completely solved. The cloud as a large-scale storage medium has only recently emerged, and it’s definitely not perfect as a long-term archival medium. Digital archivists have long pointed out that given a sufficient length of time, data loss is a problem even for highly redundant, highly available, distributed storage systems. Apart from the well-known phenomenon of bit rot, bits can get flipped for any number of reasons as they traverse a network and multiple software stacks, and ECC and other failsafes don’t catch 100 percent of these errors. So on a long enough time horizon with a large-enough, complex-enough system, these undetected, flipped bits will begin to accumulate.

Realistically, Kahle and Co. expect to preserve 10 million books, out of an estimated 100 million published. These will be packed into climate-controlled storage containers, and stored in a facility in Richmond, CA that opens this month.

Kahle describes the details of the physical preservation as follows:

•Books are cataloged, and have acid free paper inserts with information about the book and its location,

•Boxes store approximately 40 books with labeling on the outside,

•Pallets hold 24 boxes each,

•Modified 40′ shipping containers are used as secure and individually controllable environments of 50 or 60 degrees Fahrenheit and 30% relative humidity,

•Buildings contain shipping containers and environmental systems,

•Non-profit organizations own and protect the property and its contents.
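For a sense of scale, the packing figures in the list can be combined with the 10-million-book goal mentioned earlier. This is a back-of-the-envelope sketch only: it treats "approximately 40" books per box as exactly 40, and it stops at pallets because the article does not say how many pallets fit in a container.

```python
BOOKS_PER_BOX = 40          # "approximately 40" in the article, taken as exact
BOXES_PER_PALLET = 24
TARGET_BOOKS = 10_000_000   # the realistic goal quoted in the article


def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up, avoiding floating-point error."""
    return -(-a // b)


def pallets_needed(books: int,
                   books_per_box: int = BOOKS_PER_BOX,
                   boxes_per_pallet: int = BOXES_PER_PALLET) -> int:
    """Number of pallets required to hold `books`, filling boxes first."""
    boxes = ceil_div(books, books_per_box)
    return ceil_div(boxes, boxes_per_pallet)


if __name__ == "__main__":
    print(pallets_needed(TARGET_BOOKS))
```

At 960 books per pallet, 10 million books work out to 250,000 boxes on a little over ten thousand pallets.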

In order to reach its goal of collecting one of every book ever made, the Internet Archive is soliciting contributions of books from everyone from libraries to individual collectors.

Preserving the original copies is definitely a good idea. However, long after these physical records have turned to dust, you will still be able to download their images from the Internet, likely from multiple scannings in various stages of decay. That would make a great time lapse movie!

Could the Large Hadron Collider Discover the Particle Underlying Both Mass and Cosmic Inflation?

If the LHC discovers the Higgs boson or other theoretical particles, their existence could help explain inflation, one of the universe’s great mysteries

By Charles Q. Choi | June 29, 2012 |

Within a sliver of a second after it was born, our universe expanded staggeringly in size, by a factor of at least 10^26. That’s what most cosmologists maintain, although it remains a mystery as to what might have begun and ended this wild expansion. Now scientists are increasingly wondering if the most powerful particle collider in history, the Large Hadron Collider (LHC) in Europe, could shed light on this mysterious growth, called inflation, by catching a glimpse of the particle behind it. It could be that the main target of the collider’s current experiments, the Higgs boson, which is thought to endow all matter with mass, could also be this inflationary agent.

During inflation, spacetime is thought to have swelled in volume at an accelerating rate, from about a quadrillionth the size of an atom to the size of a dime. This rapid expansion would help explain why the cosmos today is as extraordinarily uniform as it is, with only very tiny variations in the distribution of matter and energy. The expansion would also help explain why the universe on a large scale appears geometrically flat, meaning that the fabric of space is not curved in a way that bends the paths of light beams and objects traveling within it.

The particle or field behind inflation, referred to as the “inflaton,” is thought to possess a very unusual property: it generates a repulsive gravitational field. To cause space to inflate as profoundly and temporarily as it did, the field’s energy throughout space must have varied in strength over time, from very high to very low, with inflation ending once the energy sunk low enough, according to theoretical physicists.

Much remains unknown about inflation, and some prominent critics of the idea wonder if it happened at all. Scientists have looked at the cosmic microwave background radiation—the afterglow of the big bang—to rule out some inflationary scenarios. “But it cannot tell us much about the nature of the inflaton itself,” says particle cosmologist Anupam Mazumdar at Lancaster University in England, such as its mass or the specific ways it might interact with other particles.

A number of research teams have suggested competing ideas about how the LHC might discover the inflaton. Skeptics think it highly unlikely that any earthly particle collider could shed light on inflation, because the uppermost energy densities one could imagine with inflation would be about 10^50 times above the LHC’s capabilities. However, because inflation varied with strength over time, scientists have argued the LHC may have at least enough energy to re-create inflation’s final stages.

It could be that the principal particle ongoing collider runs aim to detect, the Higgs boson, could also underlie inflation.

“The idea of the Higgs driving inflation can only take place if the Higgs’s mass lies within a particular interval, the kind which the LHC can see,” says theoretical physicist Mikhail Shaposhnikov at the École Polytechnique Fédérale de Lausanne in Switzerland. Indeed, evidence of the Higgs boson was reported at the LHC in December at a mass of about 125 billion electron volts, roughly the mass of 125 hydrogen atoms.

Also intriguing: the Higgs as well as the inflaton are thought to have varied with strength over time. In fact, the inventor of inflation theory, cosmologist Alan Guth at the Massachusetts Institute of Technology, originally assumed inflation was driven by the Higgs field of a conjectured grand unified theory.

Full article here:

http://www.scientificamerican.com/article.cfm?id=could-lhc-discover-higgs-boson

What Did Edgar Allan Poe Know About Cosmology? Nothing. But He Was Right.

http://www.nytimes.com/2002/11/02/books/think-tank-what-did-poe-know-about-cosmology-nothing-but-he-was-right.html?pagewanted=all&src=pm