Having looked at the Z-pinch work in Huntsville yesterday, we’ve been kicking around the question of fusion for propulsion and when it made its first appearance in science fiction. The question is still open in the comments section and I haven’t been able to pin down anything in the World War II era, though there is plenty of material to be sifted through. In any case, as I mentioned in the comments yesterday, Hans Bethe was deep into fusion studies in the late 1930s, and I would bet somewhere in the immediate postwar issues of John Campbell’s Astounding we’ll track down the first mention of fusion driving a spacecraft.

While that enjoyable research goes on, the fusion question continues to entice and frustrate anyone interested in pushing a space vehicle. The first breakthrough is clearly going to be right here on Earth, because we’ve been working on making fusion into a power production tool for a long time, the leading candidates for ignition being magnetic confinement fusion (MCF) and inertial confinement fusion (ICF). The former uses magnetic fields to trap and control charged particles within a low-density plasma, while ICF uses laser beams to irradiate a fuel capsule and trap a high-density plasma over a period of nanoseconds. To be commercially viable, you have to get a ratio of power-out to power-in somewhere around 10, much higher than breakeven.
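To make the gain figure concrete, here is a rough sketch of the ratio usually called Q: fusion power out divided by heating power in. The threshold of about 10 comes from the paragraph above; the function names and the sample power levels are hypothetical, chosen only for illustration and not drawn from any real machine.

```python
"""Fusion gain Q: fusion power out divided by heating power in.

Sketch only: the ~10 threshold for commercial viability is the rough figure
quoted in the post; the sample powers below are hypothetical.
"""

BREAKEVEN_Q = 1.0
COMMERCIAL_Q = 10.0  # rough figure quoted in the post


def gain(p_fusion_mw: float, p_heating_mw: float) -> float:
    """Return Q = P_fusion / P_heating (both in MW)."""
    if p_heating_mw <= 0:
        raise ValueError("heating power must be positive")
    return p_fusion_mw / p_heating_mw


def classify(q: float) -> str:
    """Place a gain value relative to breakeven and the ~10 commercial mark."""
    if q < BREAKEVEN_Q:
        return "below breakeven"
    if q < COMMERCIAL_Q:
        return "past breakeven, short of commercial"
    return "commercially interesting"


if __name__ == "__main__":
    # Hypothetical (fusion MW, heating MW) pairs, not real machine data.
    for p_out, p_in in [(10.0, 50.0), (50.0, 50.0), (500.0, 50.0)]:
        q = gain(p_out, p_in)
        print(f"Q = {q:5.1f}: {classify(q)}")
```

Breakeven (Q = 1) only means the plasma returns the heating power it was given; the gap between 1 and 10 is where conversion losses and plant overhead have to be paid for.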

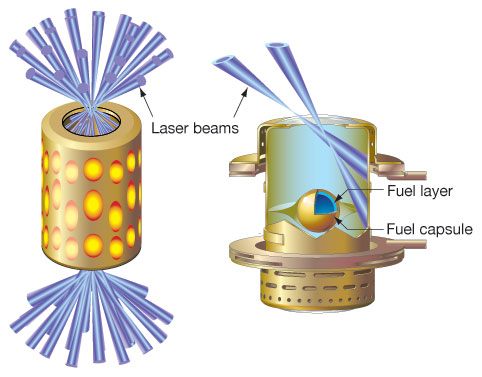

Image: The National Ignition Facility at Lawrence Livermore National Laboratory focuses the energy of 192 laser beams on a target in an attempt to achieve inertial confinement fusion. The energy is directed inside a gold cylinder called a hohlraum, which is about the size of a dime. A tiny capsule inside the hohlraum contains atoms of deuterium (hydrogen with one neutron) and tritium (hydrogen with two neutrons) that fuel the ignition process. Credit: National Ignition Facility.

Kelvin Long gets into all this in his book Deep Space Propulsion: A Roadmap to Interstellar Flight (Springer, 2012), and in fact among the books in my library on propulsion concepts, it’s Long’s that spends the most time with fusion in the near-term. The far-term possibilities open up widely when we start talking about ideas like the Bussard ramjet, in which a vehicle moving at a substantial fraction of lightspeed can activate a fusion reaction in the interstellar hydrogen it has accumulated in a huge forward-facing scoop (this assumes we can overcome enormous problems of drag). But you can see why Long is interested — he’s the founding father of Project Icarus, which seeks to redesign the Project Daedalus starship concept created by the British Interplanetary Society in the 1970s.

Seen in the light of current fusion efforts, Daedalus is a reminder of how massive a fusion starship might have to be. This was a vehicle with an initial mass of 54,000 tonnes, of which 50,000 tonnes was fuel and 500 tonnes scientific payload. The Daedalus concept was to use inertial confinement techniques with pellets of deuterium mixed with helium-3 that would be ignited in the reaction chamber by electron beams. With 250 pellet detonations per second, you get a plasma that can only be managed by a magnetic nozzle, and a staged rocket whose first stage burn lasts two years, while the second stage burns for another 1.8 years. Friedwardt Winterberg’s work was a major stimulus, for it was Winterberg who was able to couple inertial confinement fusion into a drive design that the Daedalus team found feasible.
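The Daedalus numbers are easier to grasp with a little arithmetic. This sketch uses only the figures quoted above (250 detonations per second, a two-year first-stage burn, 54,000 t initial mass, 50,000 t fuel, 500 t payload) and assumes a constant pulse rate and a 365.25-day year; the function name is just for illustration.

```python
"""Back-of-envelope scale of the Daedalus first-stage burn.

Assumes a constant pulse rate and a 365.25-day year. All mass and rate
figures are the ones quoted in the post.
"""

SECONDS_PER_YEAR = 365.25 * 24 * 3600

PULSE_RATE_HZ = 250
FIRST_STAGE_BURN_YEARS = 2.0

INITIAL_MASS_T = 54_000
FUEL_MASS_T = 50_000
PAYLOAD_MASS_T = 500


def pellets_fired(rate_hz: float, years: float) -> float:
    """Total detonations at a constant rate over the given burn time."""
    return rate_hz * years * SECONDS_PER_YEAR


if __name__ == "__main__":
    n = pellets_fired(PULSE_RATE_HZ, FIRST_STAGE_BURN_YEARS)
    print(f"first-stage detonations: {n:.2e}")
    # Whatever is neither fuel nor payload: structure, engines, tanks, etc.
    other = INITIAL_MASS_T - FUEL_MASS_T - PAYLOAD_MASS_T
    print(f"non-fuel, non-payload mass: {other:,} t")
    print(f"fuel fraction of initial mass: {FUEL_MASS_T / INITIAL_MASS_T:.1%}")
```

Roughly sixteen billion pellets in the first stage alone, each of which has to be injected, hit and ignited reliably, gives a sense of why engineering reliability looms as large as the physics.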

I should mention that the choice of deuterium and helium-3 was one of the constraints of trying to turn fusion concepts into something that would work in the space environment. Deuterium and tritium are commonly used in fusion work here on Earth, but that reaction produces abundant high-energy neutrons, a serious issue given that any manned spacecraft would have to carry adequate shielding for its crew. Shielding means a more massive ship and corresponding cuts to allowable payload. Deuterium and helium-3, on the other hand, produce about one-hundredth the amount of neutrons of deuterium/tritium, and even better, the output of this reaction, made up of charged particles, is far more manipulable with a magnetic nozzle. If, that is, we can get the reaction to light up.
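Why a magnetic nozzle favors deuterium/helium-3 shows up in how each reaction splits its energy. This sketch uses the standard product energies in MeV (D-T gives a 3.5 MeV alpha and a 14.1 MeV neutron; D-He3 gives a 3.6 MeV alpha and a 14.7 MeV proton). It covers only the primary reactions: in a real D-He3 burn, D-D side reactions still produce some neutrons, which is why the figure above is "about one-hundredth" rather than zero.

```python
"""Share of fusion energy carried by charged particles vs. neutrons.

Product energies are standard textbook values in MeV. Only the primary
reactions are modeled; D-D side reactions are ignored.
"""

REACTIONS = {
    # reaction: {product: kinetic energy in MeV}
    "D-T": {"He-4": 3.5, "n": 14.1},
    "D-He3": {"He-4": 3.6, "p": 14.7},
}


def charged_fraction(products: dict[str, float]) -> float:
    """Fraction of released energy carried by charged products (not 'n')."""
    total = sum(products.values())
    charged = sum(e for name, e in products.items() if name != "n")
    return charged / total


if __name__ == "__main__":
    for name, products in REACTIONS.items():
        total = sum(products.values())
        print(f"{name}: {total:.1f} MeV, "
              f"{charged_fraction(products):.0%} in charged particles")
```

Only charged particles feel a magnetic field, so roughly four-fifths of D-T energy escapes as neutrons that a nozzle cannot steer, while D-He3 puts nearly all of its energy where the magnets can act on it.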

It’s important to note the antecedents to Daedalus, especially the work of Dwain Spencer at the Jet Propulsion Laboratory. As far back as 1966, Spencer had outlined his own thoughts on a fusion engine that would burn deuterium and helium-3 in a paper called “Fusion Propulsion for Interstellar Missions,” a copy of which seems to be lost in the wilds of my office — in any case, I can’t put my hands on it this morning. Suffice it to say that Spencer’s engine used a combustion chamber ringed with superconducting magnetic coils to confine the plasma in a design that he thought could be pushed to 60 percent of the speed of light at maximum velocity.

Spencer envisaged a 5-stage craft that would decelerate into the Alpha Centauri system for operations there. Rod Hyde (Lawrence Livermore Laboratory) also worked with deuterium and helium-3 and adapted the ICF idea using frozen pellets that would be exploded by lasers, hundreds per second, with the thrust being shaped by magnetic coils. You can see that the Daedalus team built on existing work and extended it in their massive starship design. It will be fascinating to see how Project Icarus modifies and extends fusion in their work.

The Z-pinch methods we looked at yesterday are a different way to ignite fusion in which isotopes of hydrogen are compressed by pumping an electrical current into the plasma, thus generating a magnetic field that creates the so-called ‘pinch.’ Jason Cassibry, who is working on these matters at the University of Alabama at Huntsville, is one of those preparing to work with the Decade Module 2 pulsed-power unit that has been delivered to nearby Redstone Arsenal. Let me quote what the recent article in Popular Mechanics said about his thruster idea:

> Cassibry says the acceleration of such a thruster wouldn’t pin an astronaut to the back of his seat. During shuttle liftoff, rocket boosters generate a thrust of about 32 million newtons. In contrast, the pulsed-fusion system would generate an estimated 10,000 newtons of thrust. But rocket fuel burns out quickly while pulsed-fusion systems could keep going at a “slow” but steady 24 miles per second. That’s about five times faster than a shuttle drifting around in Earth orbit.
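The comparisons in that passage are easy to check. The thrust figures and the 24 mi/s speed come from the quote; the ~7.7 km/s low-Earth-orbit speed is a standard approximate value, and the 100-tonne vehicle mass is purely hypothetical, picked only to show what 10,000 newtons feels like.

```python
"""Sanity-check the numbers in the Popular Mechanics passage.

Thrust figures and the 24 mi/s speed are from the quote. LEO speed is an
approximate standard value; the vehicle mass is hypothetical.
"""

KM_PER_MILE = 1.609344
G0 = 9.80665  # standard gravity, m/s^2

SHUTTLE_LIFTOFF_THRUST_N = 32e6      # from the quote
PULSED_FUSION_THRUST_N = 1.0e4       # from the quote
PULSED_FUSION_SPEED_MI_S = 24        # from the quote
LEO_SPEED_KM_S = 7.7                 # approximate low-Earth-orbit speed
VEHICLE_MASS_KG = 100_000            # hypothetical 100-tonne spacecraft


def mi_s_to_km_s(speed_mi_s: float) -> float:
    """Convert miles per second to kilometres per second."""
    return speed_mi_s * KM_PER_MILE


def acceleration_g(thrust_n: float, mass_kg: float) -> float:
    """Acceleration in units of standard gravity (F = m * a)."""
    return thrust_n / mass_kg / G0


if __name__ == "__main__":
    v = mi_s_to_km_s(PULSED_FUSION_SPEED_MI_S)
    print(f"24 mi/s = {v:.1f} km/s, about {v / LEO_SPEED_KM_S:.1f}x LEO speed")
    ratio = SHUTTLE_LIFTOFF_THRUST_N / PULSED_FUSION_THRUST_N
    print(f"shuttle liftoff thrust / pulsed-fusion thrust: {ratio:,.0f}")
    a = acceleration_g(PULSED_FUSION_THRUST_N, VEHICLE_MASS_KG)
    print(f"100 t craft at 10 kN: {a:.3f} g")
```

A hundredth of a g would indeed not pin anyone to a seat, but sustained for months it adds up to the kind of velocity chemical rockets cannot reach.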

A slow but steady burn like this would be a wonderful accomplishment if it can be delivered in a package light enough to prove adaptable for spaceflight. Given all the background we’ve just examined — and the continuing research into both MCF and ICF for uses here on Earth — I’m glad to see the Decade Module 2 available in Huntsville, even though it may be some time before it can be assembled and the needed work properly funded. Because while we’ve developed theoretical engine designs using fusion, we need to proceed with the kind of laboratory experiments that can move the ball forward. The Z-pinch machine in Huntsville will be a useful step.
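The ‘pinch’ described earlier comes from the current's own magnetic field. By Ampère's law, a current I along a cylindrical column of radius r produces an azimuthal field B = μ0·I/(2πr) at its surface, which squeezes the column with a magnetic pressure B²/(2μ0). The sketch below assumes a hypothetical 1 MA current; it is not a Decade Module 2 specification, and the function names are illustrative.

```python
"""Magnetic pressure at the surface of a Z-pinch current column.

B = mu0 * I / (2 * pi * r) at the column edge (Ampere's law), and the
inward magnetic pressure is B**2 / (2 * mu0). The current and radii are
hypothetical illustration values.
"""

import math

MU0 = 4e-7 * math.pi  # vacuum permeability, T*m/A (classical value)


def surface_field(current_a: float, radius_m: float) -> float:
    """Azimuthal field (tesla) at the edge of a cylindrical current column."""
    return MU0 * current_a / (2 * math.pi * radius_m)


def magnetic_pressure(b_tesla: float) -> float:
    """Magnetic pressure in pascals."""
    return b_tesla ** 2 / (2 * MU0)


if __name__ == "__main__":
    current = 1.0e6  # 1 MA, hypothetical
    for radius in (1e-2, 1e-3):  # 1 cm, then compressed to 1 mm
        b = surface_field(current, radius)
        p = magnetic_pressure(b)
        print(f"r = {radius * 1e3:4.1f} mm: B = {b:6.1f} T, "
              f"P = {p:.2e} Pa ({p / 101_325:.1e} atm)")
```

Because the pressure scales as 1/r², shrinking the column tenfold raises the squeeze a hundredfold, which is the runaway compression a pinch relies on.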

I did find this online paper by Spencer from 1963:

JPL Technical Report 32-397

“Fusion Propulsion Requirements for an Interstellar Probe”

http://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19630011444_1963011444.pdf

Check out the References at the very end of this paper for even earlier papers on fusion propulsion from Spencer and others.

I love the fact that when this paper was published, the United States had just completed its first manned Mercury mission that lasted an entire day in orbit and not one NASA space probe had yet made it to the Moon in a functioning capacity. However, they did have better luck with exploring Venus with Mariner 2 just months earlier.

Also:

JPL Technical Report 32-233

“Feasibility of Interstellar Travel” by Spencer and Jaffe from 1964.

http://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19640006154_1964006154.pdf

I also found this paper online:

VISTA – A Vehicle for Interplanetary Space Transport Application Powered by Inertial Confinement Fusion, by C. D. Orth, May 16, 2003 (LLNL UCRL-TR-110500)

https://e-reports-ext.llnl.gov/pdf/318478.pdf

Also:

“An Interstellar Precursor Mission”

JPL Publication 77-70 – October 30, 1977

http://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19780013230_1978013230.pdf

And…

“Frontiers in Propulsion Research: Laser, Matter-Antimatter, Excited Helium, Energy Exchange Thermonuclear Fusion”

NASA Technical Memorandum 33-722

http://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19750014301_1975014301.pdf

Who was the first person to think about using a magnetic field to do this?

Carl Sagan did suggest using one to deflect particles in the 1980s, but not to pull a craft forward.

Who first proposed using a magnetic field to pull a spacecraft forward? Or, as with the Sun, blasting out charged particles that push against and burst through its magnetic field?

My gut feeling is that fusion as a power source will turn up in SF later than you expect. I would not be too surprised if it shows up after the Ivy Mike test in 1952.

As I recall, what SF authors used before fusion entered the public imagination was either fission or total conversion.

There are a number of lines of fusion research, so it remains in the mix of possibilities. But from the First Mission Principle perspective, huge buildings to fire numerous lasers and massive tokamaks don’t seem like probable candidates for the first interstellar propulsion engines. My current bet is still on some form of beamed propulsion.

@James Davis Nicoll

Could be.

A quick look at my references does not tell me who really first proposed fusion for propulsion.

Shepherd and A. V. Cleaver published “The Atomic Rocket” in JBIS in 1948, and it was republished in Realities of Space Travel: Selected Papers of the British Interplanetary Society (1957).

Fusion propulsion, in terms of energetics, was proposed there, but I can’t verify whether they mentioned it in 1948.

It’s interesting that, during the Manhattan Project, Oppenheimer kept a restless Teller occupied by assigning him development of a hydrogen bomb. That idea may have occurred to Bethe first, but between 1940 and 1945 every high-powered physicist at Los Alamos must have thought of it.

We know Stan Ulam thought up Orion, probably before 1950. Ulam also published internal memos about nuclear propulsion; the dates on those are in the 1950s, but when he first thought about them, I don’t know.

I have vague references that RAND had in-house work on fusion propulsion; I don’t know if it was ever published.

From 1950 onward, lots of fusion propulsion ideas were published.

I recall reading in one of Feynman’s popular books (I forget which, it was years ago) that after the war and the Manhattan Project, Oppenheimer had the team write patents on just about every conceivable application of nuclear power. It went something like: pick any existing machine (boat, car, toaster, furnace, …), add nuclear power, write a patent application. They may have patented nuclear propulsion for spacecraft before giving it any serious thought.

Only after several centuries of solar-system settlement by ever-larger O’Neill habitats will there be the knowledge base for such huge fusion machines to be trustworthy for centuries of unattended or occasionally attended operation. Only after spacers far outnumber Earthers will such machines be built.

The enormous opportunity cost of so much fusion fuel will be affordable only by a civilization tens of thousands of times wealthier than today’s Earth, a civilization that extracts cubic miles per day of Helium 3 from Uranus and routinely deals with hundreds to thousands of kps delta-vee, a civilization with fusion-powered mega-habitats entering the Kuiper Belt.

It’s nothing short of ludicrous to envisage any organization of Earthers ever being able to field a fusion starship, considering that its fuel load could power the entire Earth for…what, decades?

A modest sail ship and a single large space-based laser–at least that’s financially feasible for Earthers within a century, but never an Icarus.
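The "power the entire Earth for decades" guess can be checked. Only the 50,000-tonne fuel figure comes from the Daedalus description in the post; everything else here is an assumption: a 1:1 D/He-3 mix (by number) burned completely via D + He-3 → He-4 + p at 18.3 MeV, which no real engine would achieve, and world primary energy use of roughly 5.5×10²⁰ J per year, an approximate early-2010s value.

```python
"""How long could Daedalus-scale fusion fuel power the world?

Assumptions (only the 50,000 t figure comes from the post):
  * every D and He-3 nucleus fuses via D + He-3 -> He-4 + p (18.3 MeV),
    i.e. a 1:1 mix by number burned completely;
  * world primary energy use of ~5.5e20 J per year (rough early-2010s value).
"""

MEV_TO_J = 1.602176634e-13
AMU_TO_KG = 1.66053906660e-27

Q_DHE3_MEV = 18.3
MASS_D_AMU = 2.014102
MASS_HE3_AMU = 3.016029

FUEL_MASS_KG = 50_000 * 1_000          # 50,000 tonnes
WORLD_ENERGY_J_PER_YEAR = 5.5e20       # approximate, assumption


def specific_energy_j_per_kg() -> float:
    """Energy released per kg of a 1:1 D/He-3 mix, assuming complete burn."""
    energy_per_reaction = Q_DHE3_MEV * MEV_TO_J
    mass_per_reaction = (MASS_D_AMU + MASS_HE3_AMU) * AMU_TO_KG
    return energy_per_reaction / mass_per_reaction


def years_of_world_energy(fuel_kg: float) -> float:
    """Years of world primary energy use the fuel could supply."""
    return fuel_kg * specific_energy_j_per_kg() / WORLD_ENERGY_J_PER_YEAR


if __name__ == "__main__":
    print(f"specific energy: {specific_energy_j_per_kg():.2e} J/kg")
    print(f"years of world energy use: {years_of_world_energy(FUEL_MASS_KG):.0f}")
```

Under these generous assumptions the answer lands at roughly three decades, so "decades" is about right as an upper bound.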

It is always nice to see an informative post on fusion propulsion! Thank you, Paul Gilster, for keeping this small flame alive for the public. I just have two comments:

– Fusion propulsion is always linked to fusion power plants: the idea is that if we can build a power plant on Earth, we could build a fusion engine in space, a kind of two-step approach. My opinion is that the requirements are so different that the major concepts studied on Earth, like tokamaks or laser inertial fusion, could never be applied in space, and trying to adapt them would make no sense. A fusion power plant has to produce electricity and be radiation-safe. That’s it. It can be as heavy as you want and take as much room as you need; there is no problem. A fusion propulsion system has a completely different goal: to expel particles at high velocity in one direction (this formulation covers both the case of high thrust and that of high Isp). You will tell me it could also be used as an intermediate power generator to feed an electric engine, but that would increase mass and complexity and end up as an engineering nightmare; we just want to dream. Therefore what we need is not the energy produced by fusion but its by-product: the transformation of this energy into momentum. In a tokamak we get plenty of these fast particles; the problem is that they are produced axisymmetrically, so they are of no use for propulsion. But just imagine if we had a concept to produce them in a given direction!

In addition, the environment is completely different on Earth and in space. How could the space environment help in confining the plasma or triggering the fusion reactions? All this is to say that the most common approach is bottom-up: you take existing Earth concepts and try to apply them in space. It would be interesting to see whether there are also top-down approaches, where you look at the requirements and the environment and try to take advantage of them to develop a concept. Perhaps you have a reference in mind?

– The second point concerns the future experiment in Huntsville: it’s great that they go beyond theoretical thinking. Experiments not only validate concepts but also help in finding new ones; technical problems are a good source of inspiration.

For those who have dug into this, what would be affordable next-step increments of research that address key issues and unknowns? I’m not talking about large-scale testing, but tasks at the level of graduate work. For example, which parts have the least fidelity of understanding (or the largest uncertainty span)? Is it the achievable level of magnetic containment? Or dealing with the waste heat? Or control fidelity for stable operation? What is the weakest link in the chain of technologies needed to bring such devices on line?

If any of you can come up with a laundry list of graduate-level tasks, I’d appreciate having those on hand.

Thanks,

Marc

> The enormous opportunity cost of so much fusion fuel

Good point, Bill. There is also the opportunity cost of spending that much money, whereas most people will probably have other priorities. In particular, because they are similar in people’s minds, how much exploration and development would that amount of money achieve if spent in the solar system? Also, compare the benefit that an Icarus mission to a probably dead solar system would give over the results of a far cheaper large telescope, 43-100 years after launch. So we need either a far cheaper approach, such as beamed propulsion largely using anticipated in-space infrastructure, or a different rationale, such as the survival of the human species.

How long has a fusion reaction been sustained?

I see they want to carry 500 tons of instruments. That is a lot; with much smaller electronics we could get away with far fewer tons of instruments and a much smaller spacecraft.

Fusion power plants based on MCF or ICF would be extremely large and have low power densities compared to fission plants of similar electrical output. Also, it is questionable whether anything but D-T fusion can ever be made workable with those kinds of technologies. We haven’t even reached breakeven in a D-T fusion reactor, so it is premature to speak about fusing D-He3 in such machines, which is orders of magnitude more difficult.

I’m a layman and know relatively little about the physics involved, but from reading about fusion research in general I came to the conclusion that neither ICF nor MCF will result in a workable high-performance space propulsion system. Unless heterodox approaches like the Polywell lead to success, I fear that antimatter-initiated ICF will be the only option to make fusion pulse propulsion work, since using antimatter allows you to do away with heavy ion or laser beams and the necessity to recoup fusion energy to drive those beams. An antimatter-initiated ICF system could run off stored antimatter and electricity from a simple fission reactor, so it might be an option even if net electrical power generation from fusion remains science fiction.

To Michael: actually, sustaining a fusion reaction is pretty straightforward: inject MW of power into a plasma through RF waves or beams of fast particles and you can trigger reactions as long as you want. The problem is to have a self-sustaining reaction, which is characterized by meeting the Lawson criterion (http://en.wikipedia.org/wiki/Lawson_criterion): you have to maximize the product of density, temperature and energy confinement time. The problem is that you cannot increase the density as much as you want without exciting instabilities that disrupt the confinement of the plasma. And the confinement time depends on understanding the transport of energy and particles from the center of the plasma, where fusion reactions take place, to the edge, where they are lost. It was a relatively recent discovery that turbulence could explain a big part of the losses, and major efforts are dedicated to its analysis.

Success in reaching the Lawson criterion is measured by the amount of auxiliary power you need, through the famous Q factor (the ratio of the fusion power generated to the injected power): the purpose is to need no external power at all to sustain the fusion reactions. This goal has never been attained: the NIF will try this year, ITER in 10 years. The best result up to now was on the JET tokamak, which in 1997 produced fusion power equal to roughly two-thirds of the auxiliary heating (Q ≈ 0.67).
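The Lawson condition described above is often written as a "triple product" threshold. This sketch uses the commonly quoted D-T ignition value of roughly 3×10²¹ keV·s/m³, which applies near the optimum temperature of about 10-20 keV; the plasma parameters and function names are hypothetical illustrations, not data from JET, NIF or ITER.

```python
"""Compare a plasma's triple product with the D-T ignition threshold.

Uses the commonly quoted approximate D-T ignition condition
    n * T * tau_E >= ~3e21 keV * s / m^3
near the optimum temperature of roughly 10-20 keV. Parameters below are
hypothetical.
"""

DT_IGNITION_TRIPLE_PRODUCT = 3e21  # keV * s / m^3, approximate


def triple_product(density_m3: float, temp_kev: float, tau_e_s: float) -> float:
    """n * T * tau_E in keV * s / m^3."""
    return density_m3 * temp_kev * tau_e_s


def ignition_margin(density_m3: float, temp_kev: float, tau_e_s: float) -> float:
    """Ratio to the ignition threshold; values >= 1 meet the criterion."""
    return triple_product(density_m3, temp_kev, tau_e_s) / DT_IGNITION_TRIPLE_PRODUCT


if __name__ == "__main__":
    # Density cannot be raised freely without triggering instabilities,
    # so these hypothetical cases vary only the energy confinement time.
    cases = {
        "short confinement": (1e20, 15.0, 0.5),
        "long confinement": (1e20, 15.0, 3.0),
    }
    for label, (n, t, tau) in cases.items():
        print(f"{label}: margin = {ignition_margin(n, t, tau):.2f}")
```

Holding density and temperature fixed, the same plasma goes from a quarter of the threshold to comfortably above it purely through better energy confinement, which is why transport and turbulence research matter so much.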

To M. G. Millis: does your question concern the production of fusion energy on earth or directly fusion propulsion?

In both cases it is a very broad question, and it even depends on the type of facility (tokamak, stellarator, laser inertial). I can write a quick list without many details, just enough to give an overview of the various topics:

Physics:

– understanding why the plasma shows different types of confinement depending on the injected power, the current profile inside the plasma, and the shape of the magnetic field in it, and characterizing these different modes of confinement.

– analysis of the many types of instabilities (ideal or resistive MHD, particle-related, impurity-related) and the associated limitations on the confinement of the plasma.

– analysis of the transport of particles and energy inside the plasma and the effect of turbulence, to find the right knobs to turn to control it and improve the confinement.

– analysis of interactions between the plasma and its environment

– interactions between plasma and waves (either for RF heating or for laser ignition)

Technology:

– superconducting coils

– tritium inventory and shielding of fast ions

– thermal resistance of materials or cooling strategies (nitrogen cushion)

– control of plasma disruptions (sudden losses of confinement)

– continuous production of energy (most devices except the stellarator work in pulses).

This is the quick overview; it is not very detailed, especially if you need topics for graduate students. But if one field attracts your interest, I can give you more detailed information.

@ljk

Thanks for those JPL linked documents, I had lost my hard copy of those.

Have you seen this one?

http://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19760065935_1976065935.pdf

I think George Dyson has something about von Braun’s interest in nuclear pulse propulsion in his book.

You are welcome A. A. Jackson, and no I had not seen this one before. But now I know where some of the diagrams and artwork I had seen separately in the past came from.

Orion may or may not work as a star vessel, but it could have done (and still could do) wonders around our celestial neighborhood. And unlike many other plans, it is feasible and it can be made now.

What about radiation accumulation, especially when launching from Earth and then from apogee in low Earth orbit? However, once it has left Earth’s confines, slinging to, say, Mars, I think Orion would work great, and it is an easy way to get up to speed. On the way home, just don’t take the same route, because you will be riding the radiation cloud all the way home.

Fusion nuclear propulsion will open vast horizons to the future of mankind. I think electrostatic acceleration is much more energy-efficient than magnetic compression and lasers. With an electrostatic fusion machine, it is possible to fuse advanced fusion fuels (helium-3 and p-B11) and recycle unburned fuels, which could open a revolutionary path to interstellar travel. http://www.youtube.com/watch?v=ro5-QYqqxzM

http://philosophyofscienceportal.blogspot.com/2012/09/deceased-john-killeen.html

Wednesday, September 5, 2012

Deceased — John Killeen

John Killeen

1925 to August 15th, 2012

“IN MEMORIAM: Professor Emeritus John Killeen, pioneer in fusion, computational physics”

September 5th, 2012

UCDAVIS

Professor Emeritus John Killeen knew fusion science as a pioneer in the field, from around the time of the then-secret Project Sherwood at UC’s Livermore lab. And he knew the power of computers from their very inception, using practically every generation since in practical scientific applications.

He melded his expertise into a renowned career at UC Davis, as one of the first instructors in the Department of Applied Science, and at the Lawrence Livermore National Laboratory, where he was the founding director of the Controlled Thermonuclear Research Computer Center and the Plasma Physics Research Institute.

Killeen, a member of the American Association for the Advancement of Science and former principal editor of the Journal of Computational Physics, became an emeritus after suffering a stroke in 1989. He died Aug. 15 of cardiac arrest at Chaparral House in Berkeley, at the age of 87.

He joined the College of Engineering shortly after its establishment nearly 50 years ago, as a lecturer in mathematical science in the Department of Applied Science, founded by the famed nuclear physicist Edward Teller in 1963. The department operated in Davis and Livermore.

“John’s colleagues remember him as a strong advocate of campus-laboratory collaboration, which has become more common today in great part because of his efforts,” Enrique Lavernia, dean of the College of Engineering, wrote in an email to college faculty and staff.

Killeen was appointed to a professorship in 1968, serving part time while also working as a scientist at the Livermore lab. He was later appointed as an associate dean, in charge of graduate students and research in the College of Engineering.

“John delivered lectures that were lucid, rigorous and elegant,” former graduate student David V. Anderson said in 1992 during the International Workshop on the Theory of Fusion Plasmas, in Varenna, Italy.

Killeen also went to Italy, honored during the workshop there for his contributions to computational physics in magnetic fusion research.

The differential analyzer

He attended UC Berkeley, earning a bachelor’s degree in physics (1949), and a master’s (1951) and doctorate (1955) in mathematics, the latter two while working at the Lawrence Berkeley lab. His introduction to computing came during his time as a Ph.D. candidate, when he worked on the differential analyzer, a forerunner of the modern digital computer.

He left Berkeley for a stint as a mathematician at Bell Telephone Laboratories in New Jersey, followed by a year as a research associate at the Atomic Energy Commission Computing Facility (today known as the Courant Institute of Mathematical Sciences) at New York University.

“John was a pioneer in our national fusion program,” said Lavernia, noting that Killeen’s association with the program began in 1956 during his time as a post-doctoral fellow at the Courant Institute.

Killeen returned to California in 1957 to join Project Sherwood.

The government partially declassified this research in controlled thermonuclear reaction in 1958 in time for the second U.N. International Conference on the Peaceful Uses of Atomic Energy. Killeen co-authored a paper for that conference, the first of his more than 90 publications, including the book Controlled Fusion.

The 1960s brought what Anderson called “a veritable garden of experiments” to Livermore: pinches, mirror devices, stellarators, levitrons and the Astron, among others — and Killeen developed numerical models of these experiments, to solve on the latest computers. (His daughter Kate, who is not a physicist, knew of “Astron” only as the name of the Killeen family’s dog!)

Meeting the need for large-scale computing

In 1973, John Killeen participated on a committee that identified the need for large-scale computing in the magnetic fusion program. Subsequently, he led the Controlled Thermonuclear Research Computer Center from its opening in 1974 until his retirement. (The center has undergone two name changes: in 1977, to the National Magnetic Fusion Energy Computer Center, and, in 1990, to the National Energy Research Supercomputer Center.)

“The center, under John, pioneered in time-sharing, parallel algorithms and network supercomputing — long before its rivals,” Anderson said. “Its successes have been imitated by many other scientific computing facilities, including the National Science Foundation Computer Centers in the United States.”

In 1996, the National Energy Research Supercomputer Center dedicated a new Cray J90 shared-memory multiprocessor to Killeen — in accordance with the center’s traditional way of honoring important contributors to mathematics and science.

The center’s executive committee made the decision on behalf of the Energy Research Supercomputer Users Group, as a way to publicly acknowledge Killeen’s contributions “to the development of supercomputing as a fully functional tool in our scientific and computing endeavors.”

Teacher, doer, user’s advocate

“But this is not all. He has also been a teacher and doer. It is particularly important to this user group that he has steadfastly served as the user’s advocate ‘at the top.’”

In 1980, Killeen received the Department of Energy’s Distinguished Associate Award, the department’s highest honor, in recognition of his contributions to the magnetic fusion energy program.

Killeen engaged in extensive international collaboration and was on extended assignment at the United Kingdom Atomic Energy Authority’s Culham Laboratory (known today as the Culham Centre for Fusion Energy) in 1962-63 and again in 1971.

He was an avid fan of Cal athletics, and he kept up with a sport of his own: swimming, as a member of the Strawberry Canyon Aquatic Masters in Berkeley, and as a competitor in races in the ocean, in San Francisco Bay and in high mountain lakes.

Killeen is survived by his wife, Marjorie, also of Berkeley; and five children, Michael of Davis, Sean of Kentfield, Jack of Santa Rosa, Joan of Berkeley and Kate of Sacramento (all UC Berkeley graduates); and 10 grandchildren and two great-grandchildren. He was preceded in death by a daughter, Ann.

“First NERSC Director John Killeen Dies at 87”

by

Jon Bashor

August 24th, 2012

National Energy Research Scientific Computing Center

John Killeen, the founding director of what is now known as the National Energy Research Scientific Computing Center (NERSC), died August 15, 2012 at age 87. Killeen led the Center from 1974 until 1990, when he retired. The Department of Energy conferred its highest honor, the Distinguished Associate Award, on Killeen in 1980 in recognition of his outstanding contribution to the magnetic fusion energy program.

Initially known as the Controlled Thermonuclear Research Computer Center when established in 1974 at Lawrence Livermore National Laboratory, the center was renamed the Magnetic Fusion Energy Computer Center in 1976. To reflect its broadened mission support of other science domains, the center was rechristened the National Energy Research Supercomputing Center in 1990.

When the center moved from LLNL to Berkeley Lab in 1996, one of the new Cray J-90 supercomputers was named in honor of the founding director.

Born in 1925 in Guam to a Navy family, he entered the U.S. Naval Academy at Annapolis, Maryland at age 18. Following World War II, he earned a B.A. in physics at the University of California, Berkeley. There, while living at International House, he met his wife of 62 years, Marjorie. He continued his studies at U.C.B. and was awarded a M.A. and Ph.D., both in mathematics.

His long association with the Lawrence Livermore National Lab began in 1957 when he worked on the then-secret U.S. fusion effort called Project Sherwood. In 1958, this controlled thermonuclear reaction research was declassified in time for the Second United Nations International Conference on the Peaceful Uses of Atomic Energy and for which Killeen coauthored a paper, the first of 90 publications throughout his career.

Killeen taught mathematical science at the University of California, Davis and was an original faculty member for the Department of Applied Science, founded in 1963 by Edward Teller. He was later appointed associate dean of the U.C.D. College of Engineering. As first director of the Plasma Physics Research Institute, he promoted collaborative work in plasma physics between universities and Lawrence Livermore National Laboratory.

Project Sherwood [Wikipedia]

“So Far Unfruitful, Fusion Project Faces a Frugal Congress”

by William J. Broad

September 29, 2012

The New York Times

For more than 50 years, physicists have been eager to achieve controlled fusion, an elusive goal that could potentially offer a boundless and inexpensive source of energy.

To do so, American scientists have built a giant laser, now the size of a football stadium, that takes target practice on specks of fuel smaller than peppercorns. The device, operating since 2009, has so far cost taxpayers more than $5 billion, making it one of the most expensive federally financed science projects ever. But so far, it has not worked.

Unfortunately, the due date is Sunday, the last day of the fiscal year. And Congress, which would need to allocate more money to keep the project alive, is going to want some explanations.

“We didn’t achieve the goal,” said Donald L. Cook, an official at the National Nuclear Security Administration who oversees the laser project. Rather than predicting when it might succeed, he added in an interview, “we’re going to settle into a serious investigation” of what caused the unforeseen snags.

The failure could have broad repercussions not only for the big laser, which is based at the Lawrence Livermore National Laboratory in California, but also for federally financed science projects in general.

On one hand, the laser’s defenders point out, hard science is by definition risky, and no serious progress is possible without occasional failures. On the other, federal science initiatives seldom disappoint on such a gargantuan scale, and the setback comes in an era of tough fiscal choices and skepticism about science among some lawmakers. The laser team will have to produce a report for Congress about what might have gone wrong and how to fix it if given more time.

“The question is whether you continue to pour money into it or start over,” said Stephen Bodner, a former director of a rival laser effort at the Naval Research Laboratory in Washington. “I think they’re in real trouble and that continuing the funding at the current level makes no sense.”

China is studying the program’s mistakes, Dr. Bodner added, perhaps with a goal of building an improved machine.

“It’s kind of an amazing device,” said William Happer, a physicist at Princeton University who directed federal energy research for the first President George Bush. “Still, it’s not science if you don’t fail now and then. But you do have to have some wins.”

Full article here:

http://philosophyofscienceportal.blogspot.com/2012/10/thats-right-nadinethey-better-start.html

Let me begin with a historical remark regarding the 1963 paper by D. F. Spencer. I am grateful for the reference, which gave me for the first time the opportunity to read his paper. He may have overlooked my rather long 1957 paper published in Astronautica Acta IV, 235 (1958), in which I discussed fission gas core reactors, but also magnetic confinement fusion reactors for propulsion, in addition to other concepts. Figure 4 of this paper displays a fusion engine very similar to the one in Figure 3 of the paper by Spencer. My paper was published in German before I came to the USA, and for that reason did not get much attention. All my later papers were in English.

I strongly believe that at the moment minifusion bomb propulsion with ion beam ignition has the best chance for success.

Barring an unexpected breakthrough in physics, I think the best chance for reaching relativistic velocities is the matter-antimatter GeV laser propulsion concept, which I published in the December 2012 issue of Acta Astronautica. It would also solve the problem of destructive impacts on the spacecraft by interstellar dust grains at relativistic velocities: periodically directing the laser beam in the forward direction makes a grain-free tunnel in space, as I explained in my lecture at the 2012 Advanced Space Propulsion Workshop. The antimatter could be produced with solar energy on the storm-free surface of the planet Mercury in robotic factories.

As for escaping the death of the Sun in a few billion years, or the Earth’s moving out of the Goldilocks zone in about 100 million years, we still have plenty of time to enjoy the unique beauty of planet Earth.