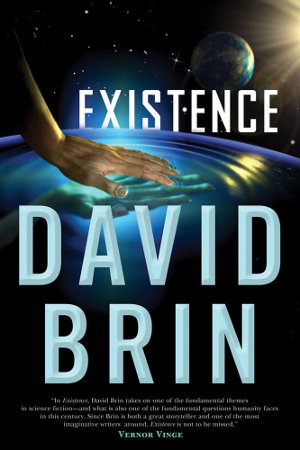

As we’ve had increasing reason to speculate, travel to the stars may not involve biological life forms but robotics and artificial intelligence. David Brin’s new novel Existence (Tor, 2012) cartwheels through many an interstellar travel scenario — including a biological option involving building the colonists upon arrival out of preserved genetic materials — but the real fascination is in a post-biological solution. I don’t want to give anything away in this superb novel because you’re going to want to read it yourself, but suffice it to say that uploading a consciousness to an extremely small spacecraft is one very viable possibility.

So imagine a crystalline ovoid just a few feet long in which an intelligence can survive, uploaded from the original and, as far as it perceives, a continuation of that original consciousness. One of the ingenious things about this kind of spacecraft in Brin’s novel is that its occupants can make themselves large enough to observe and interact with the outside universe through the walls of their vessel, or small enough for quantum effects to take place, lending the enterprise an air of magic as they ‘conjure’ up habitats of choice and create scientific instruments on the fly. You may recall that Robert Freitas envisioned uploaded consciousness as a solution to the propulsion problem, with a large crew embedded in something the size of a sewing needle.

Brin’s interstellar solutions, of which there are many in this book, all adhere strictly to Einstein’s relativity, and anyone who has plumbed the possibilities of lightsails, fusion engines and antimatter will find much to enjoy here, with nary a warp-drive in sight. The big Fermi question looms over everything as we’re forced to consider how it might be answered, with first one and then many proposed solutions discarded as extraterrestrials are finally contacted, their presence evidently constant through much of the history of the Solar System. Brin’s between-chapter discussions will remind Centauri Dreams readers of many of the conversations we’ve had here on SETI and the repercussions of contact.

Into the Crystal

At the same time that I was finishing up Existence, I happened to be paging through the proceedings of NASA’s 1997 Breakthrough Propulsion Physics Workshop, which was held in Cleveland. I had read Frank Tipler’s paper “Ultrarelativistic Rockets and the Ultimate Fate of the Universe” so long ago that I had forgotten a key premise that relates directly to Brin’s book. Tipler makes the case that interstellar flight will take place with payloads weighing less than a single kilogram because only ‘virtual’ humans will ever be sent on such a journey.

Thinking of Brin’s ideas, I read the paper again with interest:

Recall that nanotechnology allows us to code one bit per atom in the 100 gram payload, so the memory of the payload would [be] sufficient to hold the simulations of as many as 10^4 individual human equivalent personalities at 10^20 bits per personality. This is the population of a fair sized town, as large as the population of ‘space arks’ that have been proposed in the past for interstellar colonization. Sending simulations — virtual human equivalent personalities — rather than real world people has another advantage besides reducing the mass ratio of the spacecraft: one can obtain the effect of relativistic time dilation without the necessity of high γ by simply slowing down the rate at which the spacecraft computer runs the simulation of the 10^4 human equivalent personalities on board.
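Tipler’s storage figures can be checked with a few lines of arithmetic. What follows is a back-of-envelope sketch, not Tipler’s own calculation: the passage does not say what the payload is made of, so the code assumes (hypothetically) a pure-carbon payload. Lighter atoms would give more bits per gram, heavier atoms fewer.

```python
# Back-of-envelope check of Tipler's payload storage numbers.
# ASSUMPTION: the 100 g payload is pure carbon (Tipler does not specify).

AVOGADRO = 6.02214076e23       # atoms per mole (exact SI definition)
PAYLOAD_GRAMS = 100.0          # Tipler's payload mass
MOLAR_MASS_G_PER_MOL = 12.011  # carbon; hypothetical choice of material
BITS_PER_ATOM = 1              # Tipler: "one bit per atom"
BITS_PER_PERSONALITY = 1e20    # Tipler's estimate per human-equivalent mind


def payload_bits(grams: float, molar_mass: float, bits_per_atom: int = 1) -> float:
    """Total storage if every atom in the payload holds bits_per_atom bits."""
    atoms = grams / molar_mass * AVOGADRO
    return atoms * bits_per_atom


def personalities(total_bits: float, bits_each: float) -> float:
    """How many personalities of bits_each bits fit in total_bits."""
    return total_bits / bits_each


bits = payload_bits(PAYLOAD_GRAMS, MOLAR_MASS_G_PER_MOL, BITS_PER_ATOM)
crew = personalities(bits, BITS_PER_PERSONALITY)
print(f"bits in payload:      {bits:.2e}")
print(f"personalities stored: {crew:.2e}")
```

Under the carbon assumption this comes to roughly 5 × 10^24 bits, or about 5 × 10^4 personalities at 10^20 bits each, so Tipler’s figure of 10^4 sits comfortably within the budget.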

Carl Sagan explored the possibilities of time dilation through relativistic ramjets, noting that fast enough flight would allow a human crew to survive a journey all the way to the galactic core within a single lifetime (with tens of thousands of years passing on the Earth left behind). Tipler’s uploaded beings simply slow the clock speed at will to adjust their perceived time, making arbitrarily long journeys possible with the same ‘crew.’ The same applies to acceleration: the acceleration the crew experiences could be set to simulate 1 g or less, even if the spacecraft were accelerating in a way that would pulverize a biological crew.
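The contrast between Sagan’s relativistic crew and Tipler’s slowed-down simulation can be made concrete. The sketch below uses the standard special-relativistic formulas for a ship under constant proper acceleration, accelerating for the first half of the trip and decelerating for the second, and then computes the clock-rate factor a simulation would need to compress a slow voyage into the same subjective duration. The roughly 26,000 light-year distance to the galactic core, the 1 g acceleration and the 0.1c cruise speed are illustrative assumptions, not figures from Sagan or Tipler.

```python
import math

# Units: years and light-years, so c = 1.
C = 1.0
# 1 g expressed in ly/yr^2: 9.80665 m/s^2 * (Julian year in s) / (c in m/s).
G_LY_PER_YR2 = 9.80665 * 31_557_600 / 299_792_458  # about 1.03


def accel_decel_trip(distance_ly: float, a: float = G_LY_PER_YR2) -> tuple[float, float]:
    """Accelerate at a for half the distance, decelerate at a for the rest.

    Returns (ship proper time, Earth-frame time), both in years.
    Uses x = (c^2/a)(cosh(a*tau/c) - 1) and t = (c/a) sinh(a*tau/c).
    """
    half = distance_ly / 2.0
    tau_half = (C / a) * math.acosh(1.0 + a * half / C**2)
    t_half = (C / a) * math.sinh(a * tau_half / C)
    return 2.0 * tau_half, 2.0 * t_half


def clock_rate_for(subjective_years: float, wall_clock_years: float) -> float:
    """Simulation speed (1.0 = real time) that makes wall_clock_years
    feel like subjective_years to the simulated crew."""
    return subjective_years / wall_clock_years


# Sagan-style crew: 1 g all the way to the galactic core (ASSUMED ~26,000 ly).
tau, t_earth = accel_decel_trip(26_000)
print(f"ship time {tau:.1f} yr, Earth time {t_earth:,.0f} yr")

# Tipler-style crew: a slow 0.1c probe (ASSUMED speed), gamma close to 1.
slow_trip_years = 26_000 / 0.1
rate = clock_rate_for(tau, slow_trip_years)
print(f"0.1c trip takes {slow_trip_years:,.0f} yr; "
      f"run the simulation at {rate:.1e} x real time")
```

With these assumptions the accelerating crew ages about 20 years while some 26,000 years pass on Earth; the slow probe would need its simulation throttled to roughly 1/13,000 of real time for its crew to experience a similar span. The slow-probe figure ignores the acceleration phases, a simplification made for clarity.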

Tipler is insistent about the virtues of this kind of travel:

Since there is no difference between an emulation and the machine emulated, I predict that no real human will ever traverse interstellar space. Humans will eventually go to the stars, but they will go as emulations; they will go as virtual machines, not as real machines.

Multiplicity and Enigma

Brin’s universe is a good deal less doctrinaire. He’s reluctant to assume a single outcome to questions like these, and indeed the beauty of Existence is the fact that the novel explores many different solutions to interstellar flight, including the dreaded ‘berserker’ concept in which intelligent machines roam widely, seeking to destroy biological life-forms wherever found. We tend to think in terms of a single ‘contact’ with extraterrestrial civilization, probably through a SETI signal of some kind, but Brin dishes up a cluster of scenarios, each of which raises as many questions as the last and demands a wildly creative human response.

Consider Brin’s character Tor Povlov, a journalist terribly wounded by fire who creates a new life for herself online. Here she ponders what had once been known as the ‘Great Silence’:

Like some kind of billion-year plant, it seems that each living world develops a flower — a civilization that makes seeds to spew across the universe, before the flower dies. The seeds might be called ‘self-replicating space probes that use local resources to make more copies of themselves’ — though not as John Von Neumann pictured such things. Not even close.

In those crystal space-viruses, Von Neumann’s logic has been twisted by nature. We dwell in a universe that’s both filled with ‘messages’ and a deathly stillness.

Or so it seemed.

Only then, on a desperate mission to the asteroids, we found evidence that the truth is… complicated.

Complicated indeed. Read the book to find out more. Along the way you’ll encounter our old friend Claudio Maccone and his notions of exploiting the Sun’s gravitational lens, as well as Christopher Rose and Gregory Wright, who made the case for sending not messages but artifacts (‘messages in a bottle’) to make contact with other civilizations. It’s hard to think of a SETI or Fermi concept that’s not fodder for Brin’s novel, which in my view is a tour de force, the best of his many books. As for me, the notion of an infinitely adjustable existence aboard a tiny starship, one in which intelligence creates its own habitats on the fly and surmounts time and distance by adjusting the simulation, continues to haunt my thoughts.

Summer Evenings Aboard the Starship

For some reason Ray Bradbury keeps coming back to me. Imagine traveling to the stars while living in Green Town, Illinois, the site of Bradbury’s Dandelion Wine. If Clarke was right that a sufficiently advanced technology would be perceived as magic, then this is surely an example, as one of Brin’s characters muses while confronting a different kind of futuristic virtual reality:

Wizards in the past were charlatans. All of them. We spent centuries fighting superstition, applying science, democracy, and reason, coming to terms with objective reality… and subjectivity gets to win after all! Mystics and fantasy fans only had their arrow of time turned around. Now is the era when charms and mojo-incantations work, wielding servant devices hidden in the walls…

As if responding to Ika’s shouted spell, the hallway seemed to dim around Gerald. The gentle curve of the gravity wheel transformed into a hilly slope, as smooth metal assumed the textures of rough-hewn stone. Plastiform doorways seemed more like recessed hollows in the trunks of giant trees.

Splendid stuff, with a vivid cast of characters and an unexpected twist of humor. Are technological civilizations invariably doomed? If we make contact with extraterrestrial intelligence, how will we know if its emissaries are telling the truth? And why would they not? Is the human race evolutionarily changing into something that can survive? My copy of Existence (I read it on a Kindle) is full of underlinings and bookmarks, and my suspicion is that we’ll be returning to many of its ideas here in the near future.

Interesting indeed… A possible further plot line: one such group, having made the step into some artificial post-biological form, might one day need (or simply wish) to connect back into some more ‘conventional’ biological form of life, in whole or in part. The plot might involve the ‘mining’ of such resources from relatively primitive worlds, with some interesting possibilities arising, perhaps even ‘fishing quotas’ to ensure the sustainability of wildlife stocks…

There is work being done now on brain simulators, but the size of the machine needed, and the power consumed, are enormous. I don’t have any links offhand, but I read of an effort by IBM to build a brain simulator and, as I recall, they were using megawatts of power and a machine that filled large rooms. The complexity of the brain being simulated was similar to that of an insect or maybe a cat, depending on who you ask.

What I took away from this was a sense of amazement at how the human brain manages to do what it does on only a few watts of power, in a package that fits inside a person’s head.

Of course our technology is bound to improve, but we are very, very far away from simulating thousands of people in a few grams of material. Interstellar space flight requires assuming various engineering “miracles” and this is no different. Yet we might be as far away from the kind of brain simulations you mention here as we are from warp drive.

Charlie Stross uses the same clock cycle idea for his humanoid robots to travel around the solar system in “Saturn’s Children”. I suspect the same approach may be used in his upcoming “Neptune’s Brood” for interstellar flight.

Since there is no difference between an emulation and the machine emulated

This is very controversial. Rejecting it doesn’t mean that intelligence cannot be emulated, just that an emulation may not feel like being the original. An emulated uploaded human may just be a “zombie”. This is something we may find out sooner than expected. The Blue Brain project is targeting human brain emulation, so we may get hints about this within the project time frame, possibly in as little as a decade.

Now if we can create human intelligence by emulation/uploading, then that intelligence can be clocked to run very fast and integrated with any number of tools to appear hyper intelligent. And of course, as Brin’s novel suggests, replicated many times.

This scenario for interstellar migration was also used as the background for Jim Hogan’s novel “Voyage from Yesteryear”, published in 1982. It’s generally referred to as “embryo space colonization” and has been used as a plot device in SF since the early 80s. Alastair Reynolds’ “Revelation Space” novels also refer to the first interstellar colonies being established by this approach. Believe me, Brin is not the first SF writer to have come up with this idea.

The rationale makes sense. Developments in semiconductors, and more recently, biotechnology have occurred at a much faster rate (e.g. Moore’s Law and Carlson’s Curves) than advances in physics and space technology. Extrapolate 50-100 years out while at the same time assuming no breakthroughs in propulsion physics, and it is reasonable to assume that something like Tipler’s proposal is the only way for us to get to the stars.
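The extrapolation in this comment can be made explicit. The sketch below assumes, purely for illustration, that computing capability keeps doubling every two years, a common paraphrase of Moore’s Law; a fixed doubling time is an idealization, and neither the rate nor its persistence over a century comes from the comment itself.

```python
import math


def growth_factor(years: float, doubling_time_years: float) -> float:
    """Improvement factor after `years` of steady exponential growth
    with the given doubling time."""
    return 2.0 ** (years / doubling_time_years)


DOUBLING_TIME_YEARS = 2.0  # ASSUMPTION: illustrative Moore's-Law-style rate

for horizon in (50, 100):
    factor = growth_factor(horizon, DOUBLING_TIME_YEARS)
    print(f"{horizon} years: x{factor:.2e} (about 10^{math.log10(factor):.1f})")
```

On those assumptions, 50 years buys roughly a 3 × 10^7 improvement and 100 years roughly 10^15, which is the scale of gap the comment sets against the slower progress of propulsion physics.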

The main difference between what Tipler believes possible (and described in Brin’s novel) and what is likely to be the case is that we will probably be able to scan people’s brains and store them INACTIVELY in computer memory. However, I do not believe it will be possible for “people” to live actively as pure software entities. Upon arrival in the target solar system, the self-replicating manufacturing will create the industrial infrastructure, then reanimate the “downloaded” people by growing new bodies for them, with the brains grown to the specification of the downloaded individuals. This may be possible in, say, the 23rd century.

Barring any breakthroughs in propulsion science, those of us in the milieu since the late 80s have always assumed this would be the only way we will go to the stars.

While Brin is quite clever with his plot (no spoilers) if minds could be downloaded into recreated bodies, then we are firmly back in Fermi Paradox territory.

What I find interesting is that recreating life from information may be much easier. Venter has proved that totally synthetic DNA can be transplanted into a bacterial cell. It is only a matter of time before we work out the recipe to replicate/grow a cell from just information, which means that seeding the galaxy/universe with life would be “easy”. Either the libraries go with the replicator technology, or the appropriate recipes for the target are beamed to the replicator. The question of whether bacteria can survive the rigors of interstellar travel in rocks ejected by bombardment becomes moot.

What I would be interested in seeing is how minimal the empty cell needs to be. Can it be a simple lipid membrane with just the key DNA transcription and translation machinery in place, plus enough ATP and fuel to get started? The DNA provides the necessary proteins to bootstrap up the rest of the cell’s structure.

I must confess myself disappointed that “transhumanism” and in particular “mind-uploading” have been so frequently mentioned on this blog. At first glance, it looks like a good idea: get rid of the biological crew and replace them with robots controlled by an AI, thus lowering the mass of the craft. Of course we want to go ourselves, so researchers conjure up the idea of sending “uploads” that are digital copies of a living human mind. The general idea is to copy the human mind onto something more durable than a biological brain, be it an indestructible container of metal and silicon or a crystalline ovoid.

The problem is that we can’t separate our mind from the biological processes that create it in the first place. A specific mind is an emergent property of its specific brain, nothing more and nothing less. Unless the transfer of the mind retains the brain, there will be no continuity of consciousness. When a computer uploads some information, the original is always retained and a copy is created. Even if we could do the same for a simulation of the brain, the original will remain in his or her body, and the “upload” will be a copy that rapidly diverges from the original.

If by uploading we mean recreation of a specific mind by a physical process rather than a simulation that passes the Turing test, what I just mentioned pretty much takes care of the idea of “uploading” yourself to gain immortality. You can never transfer yourself to a silicon container, crystalline ovoid, or sewing-needle sized computer ship. If non-destructive uploading is ever possible, it will create an autonomous copy that just thinks it is you, not a continuity of your consciousness.

This is not even delving into what recreating a human mind in silico would entail. Somehow, we would have to map every neural connection in a brain and simulate those connections in a computer simulation. Even if we could do this, recreating a human mind without a body is really problematic. Without ping-backs from the body, the simulated brain will be driven insane by a high-octane equivalent of phantom limb pain.

Biologist Athena Andreadis discusses these issues far better than I can at these links: here and here.

If you are going to write about topics related to interstellar travel, get your biology right!! Never once have I seen anyone raise any of the major issues with mind uploading on this blog. Ultimately, it most likely isn’t feasible to place a simulation of a human mind in a physical substrate so different from the one the original existed in, and such a process certainly won’t offer the original immortality.

Thanks Paul for the kind words. I am a huge fan of the efforts that you help to push here at Centauri Dreams. My novel certainly explores many of the same avenues.

Folks might enjoy the lavish and beautiful 3-minute preview-trailer filled with fresh-painted images by the great Patrick Farley tinyurl.com/exist-trailer

Anthony Mugan points out that there have been precedents in fiction and nonfiction for some of the ideas Paul described from EXISTENCE. Of course there are, and I refer to many of them scrupulously. But they are extended and explored onward. That is how science progresses; why should it not be true of science fiction?

Let’s grow up and get out there.

With cordial regards,

David Brin

http://www.davidbrin.com

blog: http://davidbrin.blogspot.com/

twitter: http://twitter.com/DavidBrin1

This is a recurring theme in SF: Poul Anderson’s “Harvest of Stars” proposed recording human brains and cloning the bodies once they had arrived at their destination.

And as always there is “The Ship Who Sang” by Anne McCaffrey.

This begins to ask the question “What is a Human?”

@Chris Phoenix

Athena Andreadis is quite simply wrong. A good treatment of the issue is “The Mind’s I” by Hofstadter and Dennett (pub. 1982).

I say that Andreadis is wrong, because I think she gets confused by what we mean by the conscious “I” and her adherence to what she thinks is the relevant biology.

Let me redo some thought experiments.

1. The continuity of consciousness. Every night you lose consciousness when you sleep. Yet every morning you awake to apparently the same “I”. (Same with being in a coma, or under anesthesia, or knocked unconscious).

Suppose during sleep you were instantly replicated (destructive in her terms). You wake up. Does it matter if the original, the copy or neither was destroyed? No. Both will awake with the perception of being “I”.

2. We accept that the atoms of your brain are being constantly turned over. Suppose that all the atoms instantly changed at the same time. Would your mind disappear with them? No. So “destructive replication” has no effect. If you disagree, then you would have to predict that at some point between slow and instantaneous atom replacement that you lose continuity and become someone else. If it was discovered that subatomic particles making up the atoms were constantly being recreated so that the substance of atoms was constantly changing, you wouldn’t suddenly doubt that your conscious continuity was lost with it. Nor would you doubt it if that recreation resulted in your body being recreated in a slightly different position in space.

3. How much damage can the brain sustain before the continuous “I” disappears? There are countless cases of people with various forms of induced brain trauma that hugely impacts their cognition, in some cases so drastically that friends and family do not recognize them as being “the same person”. Is there even one case where that person feels they are not the same “I” as before the trauma? (The only possibility I can think of is amnesiacs, and that suggests that memory is key. But then what would the impact be of having your memories replaced with false memories?) Read Jill Bolte’s story of her (incomplete) recovery from her aneurysm. At no point in the sometimes strange experiences and perceptions she relates does she ever lose her continuity as “Jill Bolte”. She is a different person, but she recognizes that and doesn’t feel newly created with false memories or a prior existence.

The only real question in my mind is what level of fidelity mind uploading requires. Do you need a physical brain that, albeit synthetic, still retains the neural structure of the original? Does just the state (and state changes) of the synthetic neurons have to be replicated? Can the states be completely virtualized in software? Can thoughts be abstracted as a software program and still retain the sense of consciousness? (People generally don’t think simulations are the same as the real thing, but they may be crossing “reality boundaries” rather than considering what is happening in the simulation.)

I haven’t a clue about the fidelity required. But I do know that your brain changes as you develop, that you can suffer acute brain trauma, you can lose and regain consciousness, and through it all you retain a sense of continuous identity. Consciousness may, however, be a concocted illusion, but that is another issue.

But suppose I am mistaken and that mind uploading will not buy you a technological rapture. You still die. But the uploaded mind will simulate, more or less, a human mind, as long as it also has a simulated body and environment. That still allows for some very interesting options for spreading humanity to the stars.


Many aspects of Brin’s Existence reminded me of other science fiction novels that I have recently enjoyed. Charles Stross’ Accelerando comes to mind.

Stross’ book deals with a ‘soda can’ sized supercomputer fired off into the interstellar medium, carrying the mind uploads of its crew.

Unlike Tipler’s concept discussed by #Abelard above, the crew on Stross’ soda can are able to continue their existence in real time in a simulation running on the computronium of the payload.

It turns out that mind uploading means they are personality branches of their original selves, which becomes an issue once they return to the solar system and encounter their doppelgangers, who have lived out totally different lives and choices while they were gone.

Even without contacting extraterrestrials, the next 200 years promises to be a pretty wild place in the vicinity of Sol.

@Christopher – We still don’t know if it is feasible to harvest enough energy to run, say, an Alcubierre drive. There are loads of problems standing in the way of the central preoccupation of this blog, mind uploading being just one of many.

Besides, I think if we take a lesser example to start with, say, simulating all of the neural connections of a dragonfly, the problem starts to look more manageable. From there it is just several orders of magnitude more complicated to get to a meerkat, and further on for a human. Computers in the 1960s such as the PDP-1 were orders of magnitude slower than the devices we regularly toss in the garbage nowadays when we upgrade our mobile phone contracts. On the other hand, our chemical rockets still look and perform much like they did in the 1960s.

@ Alex those are interesting examples, but I am not sure about number 2. I sure wouldn’t be first in line to volunteer to have all of the atoms in my head destroyed simultaneously. Even if they promised to put new atoms back in place.

@Alex Tolley

Interesting assessment, given that Athena Andreadis conducts basic research in molecular neurobiology, focusing on mechanisms of mental retardation and dementia. She knows more about brains than do computer scientists whose knowledge of neurons stops at “they’re grey and squishy and nasty!!”. You have entirely missed my point, perhaps willfully.

First off, continuity of consciousness is not being awake, it is maintaining personal identity. During sleep, my brain continues to function, neurons continue to fire, I keep a sense of self through dreams; I am still me, albeit in a state of sleep. Nothing destructive happens to the neural connections in my brain, no “skip” that could be considered analogous to mind uploading. Your first thought experiment proves nothing.

If I were destructively scanned and uploaded during my sleep, that simulation would not be me, whatever it thinks. You could relatively easily prove this by making two copies off of that one destructive scan. Both would have their own separate existence from each other and would diverge from each other. The original mind associated with my brain will be gone, and I will never wake up.

All the subatomic gymnastics you apply in your thought experiments don’t actually occur, and if they did the brain would probably be severely disrupted. Your argument is similar to the argument that replacing the brain would not be a threat to identity because we routinely change cells throughout our lives, but that is not true of the nerve cells in the brain.

When we are born, we start with as many neurons as we are ever going to have. What changes are the neuronal processes (axons and dendrites) and their contacts with each other and other cells (synapses). These tiny processes are what make our individual personalities, our ability to learn, our sense of identity. Different brain functions are organized in localized clusters that vary from person to person, and removal of these centers will cause irreversible deficits unless it occurs in very early infancy. This is why people suffer cognitive disability and transient or permanent personality changes after brain trauma, as Andreadis mentions in her article “Ghost in the Shell”. Brain trauma is not analogous to mind uploading, as the brain is still there, even if parts of it have been damaged.

I don’t think it will make any difference whether the simulation is an actual physical structure that resembles the brain or a computer simulation; either way, you get a simulated mind, and either way it is a separate copy that begins to diverge from the original (or any other copies) at the moment of its creation.

Exactamundo. If a means of creating a “mind simulation” were available, many people probably would choose to have a copy of themselves left behind after they die. Conceivably, this could be used to create a digital crew for interstellar travel. However, there are still problems even if you assume the technology to do this will exist in the future, the big one being the lack of sensory input from the body driving the simulated mind insane. Even if we can create perfect simulations of an intelligent mind, could a human mind, wired as it is for a human body, remain sane separate from a human body? Perhaps the feedback from a body could be simulated for it, as you mentioned. Perhaps we would skip the human mind entirely and just create a suitable AI.

My objections to “mind uploading” are not that it is discussed here, but that no one mentions the intrinsic impossibility of gaining immortality through “mind uploading” or discusses any of the difficulties with creating a “mind simulation”. Everyone seems to assume that the biological function of the brain can be separated from the substrate of the human body with no trouble at all, and that we can all skip off to computer Nirvana, which just ain’t gonna happen.

> One bit per atom…10^4 individuals

Something like this is the most cost-effective way to send intelligent beings across interstellar space. Anything more massive (umm… like a worldship?) would not make sense from an efficiency standpoint. So, when technology reaches this point, this approach would make the most sense. Actually, sending nano-replicators first and then beaming the genetic data later could be more efficient still.

But the key problem here is the assumption of “when nanotechnology reaches this point”. By then, it will have passed the point where nanotechnology may have made self-replicating weapons possible. We can’t overstate the destructive potential of self-replicating technology whose only limit is the atmosphere. So intelligence might never achieve nanotech interstellar travel because it routinely destroys itself before reaching that point, hence the Great Silence. The solution would be to send individuals earlier, in a technologically less sophisticated form, and quite possibly more slowly. So, to say that “no real humans will traverse interstellar space” is to say that they will not reach the safety of another star system until after they may have developed existential technology. I would hope that we will have more foresight than that.

How long will Einstein reign as the accepted authority on faster-than-light travel? Perhaps it is time to investigate Star Trek’s ‘Q’ again. We will eventually learn to voyage whole through the quantum foam, or we are doomed as a biological race, doomed by emotion to destroy one another. Are you suggesting we become monsters to travel to the stars? We’ve come too far to forsake humanity now. JDS

I have not read David Brin’s novel, but it sure sounds close to Tipler. I have those Proceedings too.

Tipler did not want FTL pursued because it would consume the cosmos too fast and interfere with the resurrection… that is what he is really getting at.

Consciousness isn’t so much uploaded as reconstructed, or brought back to life as proposed in late Jewish and early Christian theology. Tipler argues that if life continues forever, the emulation or re-emulation of the entire universe is inevitable.

He explicitly argues this in The Physics of Christianity. David Deutsch agreed with it except for the Christian spin. Tipler reviewed Penrose’s The Emperor’s New Mind in Physics World. His objection to Penrose’s non-physical view of consciousness was that it messed up the resurrection. I recall smiling: physics had become theology again.

I really don’t want to get into an argument with Athena and her followers. I am an evolutionary biologist. I admit I don’t know what consciousness is, but it’s real (google “What Is It Like to Be a Bat?”). I can’t give you any evolutionary reason why it should exist or why it does.

Which brings me back to… actually agreeing with Athena. We won’t be downloading consciousness for a long time, if ever.

But we can and should send very small and very functional probes to the stars… if we want to… and right now that is our biggest problem.

Christopher Phoenix:

You might argue that “what it thinks” is the ultimate arbiter of whether or not a personality is replicated successfully. Who are you to tell the replicate that it isn’t what it thinks it is? Are you going to try and argue it out of being itself?

This is true, but far from proving any point of yours.

Two entities, both claiming to be “I”, wake up. Sure, there is a third (and an infinity of potential others) who don’t, but who is counting?

If by “The original mind” you mean your personality at a certain point in time, yes, that will be gone. Just like all the other moments in your past existence are now irretrievably gone. The “I” is not any of those, it is the line that connects them through time. What you are saying is this line cannot branch, and you have absolutely no evidence for this curious claim.

If you had read Alex more carefully, you would have noticed that he said “atoms” not cells. And in this he is correct: Your current brain has practically none of the atoms in it that it was made of just one year ago. I do not think you have successfully dealt with this inescapable point of his.

I take it that everyone on this web site disbelieves in the John Stewart Bell theory of non-local travel… right? Or what’s the problem? Am I the only one living outside the box Einstein, Podolsky, and Rosen created?

I am of the opinion that simulating brains, as many here assume is the problem to solve, is entirely the wrong approach. What we really want to simulate is thoughts and memories, emotions and personality. The defining fields in AI will be psychology and psychiatry, along with neuroscience and computer science.

In a manner of speaking, we already solved part of the problem: Language enables us to transfer all of these things (thoughts, memories, emotions and personality) between individuals and onto paper. Our libraries are full of them. How far does this go towards an actual simulated mind that can successfully argue to be “human”, or to be the continuation of a specific person? I have no idea.

But I do have a hunch that language goes further than most of us think towards replicating the human mind. Language is the natural “access port” to our minds.

++ for Christopher Phoenix & Athena Andreadis

I abhor arguments from authority, but FWIW, this former biologist and MD is also disappointed that “transhumanism” and in particular “mind-uploading” have been so frequently mentioned on this blog.

OTOH, GO MARS ONE!!! Sending 4 people on a one-way trip (a colonisation or suicide mission for science) to Mars in 2023 with SpaceX hardware is an idea whose time has come.

@ Chris Phoenix

Interesting assessment, given that Athena Andreadis conducts basic research in molecular neurobiology, focusing on mechanisms of mental retardation and dementia. She knows more about brains than do computer scientists whose knowledge of neurons stops at “they’re grey and squishy and nasty!!”.

This is an argument from authority. I would question whether molecular neurobiology is the correct level of abstraction to understand how the brain works. How about a chemist working on neurotransmitters, or a physicist on quantum effects in neurons? It probably isn’t a coincidence that the scientists who have the most expertise in this area are working at higher levels of abstraction than the biologists.

First off, continuity of consciousness is not being awake, it is maintaining personal identity. During sleep, my brain continues to function, neurons continue to fire, I keep a sense of self through dreams- I am still me, albeit in a state of sleep. Nothing destructive happens to the neural connections in my brain, no “skip” that could be considered analogous to mind uploading. Your first thought experiment proves nothing.

And between REM phases, when you are not conscious, how do you maintain personal identity? What about epilepsy? Or electroshock? And you have completely ignored the effects of trauma damaging the brain.

If I were destructively scanned and uploaded during my sleep, that simulation would not be me, whatever it thinks. You could relatively easily prove this by making two copies from that one destructive scan. Both would have their own separate existence from each other and would diverge from each other. The original mind associated with my brain will be gone, and I will never wake up.

You have the same mistaken idea expressed by Athena. What would happen is that you wake up with each copy of you thinking you are the person who went to sleep. In your previous paragraph you state that your continuity of personality is maintained by the neural firing in your brain. Therefore an exact duplicate would retain that personality. Obviously there is now more than one copy. Each thinks it is “I”, and of course each diverges over time. Each retains the same memories and experiences of the pre-split person. Is your misapprehension that you think you would somehow simultaneously inhabit multiple bodies? In the conscious duplication thought experiment, where one body is destroyed, that personality experiences death. But the same personality, in the other body, perceives that it has survived.

All the subatomic gymnastics you apply in your thought experiments don’t actually occur, and if they did the brain would probably be severely disrupted. Your argument is similar to the argument that replacing the brain would not be a threat to identity because we routinely change cells throughout our lives, but that is not true of the nerve cells in the brain.

Are you saying that the atoms in your brain are not slowly replaced? Are you saying that your neurons never die? Are you saying there is no brain plasticity? Your argument is just incrementalism: slow changes are OK, fast changes are not. But if you believe that, then there has to be some point at which you get qualitative effects on personality continuity. But I have already shown that major brain trauma does not cause that. So personality continuity does not depend on relatively unchanging brain structure. Furthermore, we can increase atom turnover quite easily by increasing metabolic rate or by introducing a flow of water that will rapidly replace the existing water in the brain. So here is a prediction. Add a flow of water to the brain so that the water in the brain is rapidly replaced with the new inflowing water. As long as the brain is not disrupted by physical effects, the complete replacement of the brain’s existing water molecules will have no effect on personality continuity.

When we are born, we start with as many neurons as we are ever going to have.

Incorrect. See for example: http://www.jneurosci.org/content/22/3/612.full

What changes are the neuronal processes (axons and dendrites) and contacts with each other and other cells (synapses). These tiny processes are what make our individual personalities, our ability to learn, our sense of identity. Different brain functions are organized in localized clusters that vary from person to person, and removal of these centers will cause irreversible deficits unless it occurs in very early infancy. This is why people suffer cognitive disability and transient or permanent personality changes after brain trauma, as Andreadis mentions in her article “Ghost in the Shell”. Brain trauma is not analogous to mind uploading, as the brain is still there, even if parts of it have been damaged.

That last sentence is incoherent. If the conscious “I” is located in some distinct brain structure, then we would have found an example where this structure was damaged, removing the identity in the “cartesian theater”. That person would apparently think they were freshly created after that trauma. Reference such cases.

I don’t think it will make any difference whether the simulation is an actual physical structure that resembles the brain or a computer simulation- either way, you get a simulated mind, and either way it is a separate copy from the original that begins to diverge from the original (or any other copies) at the moment of its creation.

You are just repeating the “If my body is duplicated, I must simultaneously reside in both of them. That cannot be” fallacy.

If a means of creating a “mind simulation” were available, many people probably would choose to have a copy of themselves left behind after they die. Conceivably, this could be used to create a digital crew for interstellar travel. However, there are still problems even if you assume the technology to do this will exist in the future, the big one being the lack of sensory input from the body driving the simulated mind insane. Even if we can create perfect simulations of an intelligent mind, could a human mind, wired as it is for a human body, remain sane separate from a human body? Perhaps the feedback from a body could be simulated for it, as you mentioned. Perhaps we would skip the human mind entirely and just create a suitable AI.

No-one is talking about brains in vats. A simulated mind would be given a simulated body and environment. One advantage of a human mind is that we can be reasonably sure of how it will behave. This is not something we can know with an AI. Probably we will have both, with AIs starting as tools and eventually becoming AGIs.

My objections to “mind uploading” are not that it is discussed here, but that no one mentions the intrinsic impossibility of gaining immortality through “mind uploading” or discusses any of the difficulties with creating a “mind simulation”. Everyone seems to assume that the biological function of the brain can be separated from the substrate of the human body with no trouble at all, and that we can all skip off to computer Nirvana, which just ain’t gonna happen.

You are just arguing against the religion of “Rapture of the Nerds”. I happen to agree with you here, but that is not a technological argument. Obviously we do not have the technology, or indeed the approach, for mind uploading. But what I object to is using fuzzy thinking about what minds are to argue a priori that mind uploading is impossible, particularly given the amount of prior thinking that has gone into this issue by practitioners of many different disciplines.

I would like to add a few small points to the discussion:

– If it ever becomes possible to fully simulate a human brain, it would also be possible to accompany that with a simulation of all stimuli the brain would receive in a human body. The simulated individual might not even be able to tell the difference between reality and simulation.

– I don’t expect mind uploading ever to become a means to preserve *yourself* forever. Instead, I consider it likely that at some point in the future, when (and if) it becomes possible, people will start uploading themselves instead of having children. Their virtual copies will be their children, not themselves.

– I would not expect such virtual humans to remain human forever. Unless it is unknown to them and they live in a Matrix-style simulation where they are left unaware of the true nature of their existence, some of them would sooner or later embrace the possibilities that come with a mind running entirely in a synthetic substrate. For example, upon reaching a distant planet, why should they want to be put back into artificially produced or vat-grown bodies? They might just as well connect themselves to a wide variety of robotic bodies, probably via remote operation (so they can do hazardous tasks without putting their now-synthetic brains at risk). If mind uploading ever becomes possible, the entities it would spawn would most likely change into something completely different from us normal human beings.

@David Brin

I think it might have been other commentators who were discussing the history of the various ideas in SF rather than myself; I wouldn’t have the level of expertise to comment, to be honest. I was just letting my imagination run away with a possible extension of the plot line, and wasn’t aware whether anyone had ever actually written anything along those lines.

On a more general thought, the discussion around consciousness gets us into very complicated territory. This really is the ‘hard problem’. I’ve recently been reading Kelly and Kelly, ‘Irreducible Mind’, in connection with some other areas of personal research. Can’t say I am entirely convinced by the argument they present, which does seem to rely to some extent on a form of the ‘god of the gaps’ argument with current models to argue in favour of a different model of consciousness than the current mainstream view. They certainly raise some pretty challenging data and perspectives, though, which I suspect I will be pondering for some time!

“… uploading a consciousness to an extremely small spacecraft”. This scenario was deeply (and brilliantly) explored by Greg Egan in his novel ‘Diaspora’ (1997).

How do you know that, right now, you are not an uploaded intelligence in a soda can? :)

@Christopher Phoenix

Not all biologists have that opinion.

http://ultraphyte.com/2011/12/08/identity-does-it-exist/

I always wonder what the antecedents are for this mode of interstellar travel.

(‘Cryogenic suspension’ has a long long history in science fiction.)

Is there anything that preceded Fred Hoyle’s A FOR ANDROMEDA ?

(I am supposing that John Elliot’s contribution was teleplay construction.)

It’s hard to believe that this first appeared as a TV story on the BBC in 1961; for a long time I did not know this and knew only the novel that appeared in 1962. I was blown away by the concept, which had to be Hoyle’s, though the novel as narrative drama is lackluster, not as well put together as The Black Cloud (1957). (The Black Cloud did not have as odd an entity as Lem’s transcendent alien in Solaris (1961), but it was a concept of an alien entity that I had not seen before; probably it had an ancestor too?) (I always wondered if Carl Sagan read A for Andromeda, since there are echoes of its communication method in Contact.)

Speaking of ‘holographic’ copies of possible intelligent beings, does anything precede Rendezvous with Rama (1972)? It was my first encounter with the idea, but where did Clarke get it? (After Childhood’s End, Rama (the first novel) is my second-favorite Clarke novel, yes, even before The City and the Stars.) Oddly, it’s really better than 2001 at examining a …sort of…. ‘transcendent’ advanced civilization; that seems to be because Kubrick’s film narrative trumps Clarke’s prose. Not that the Ramans are more sublime than the Monolith Makers but the whole Rama world is so indifferent and mysterious, that is so delicious.

Clarke did it again with The Songs of Distant Earth, but that was 1986; the idea must have been in the air for some time by then.

Why would we want to emulate a human mind for a starship “brain”, let alone upload one? Human minds are primarily designed for dealing with existence on Earth. Our desires and goals are not all that different from those of most other animals on this planet; just watch any reality show or look at what sells best to see what I mean.

True awareness and appreciation of the wider Cosmos and space exploration are still mainly intellectual concepts. Most humans remain more interested in eating, fighting, and mating than colonizing Mars or seeing what’s going on at Alpha Centauri.

We are both dazzled and distracted by our very recent technological abilities and knowledge (and the severe lack of other examples of higher intelligence species) into thinking that humans are the best vessels for exploring the stars. We need a system for our first star probes that focuses on the main purposes of sending a craft to another solar system: to collect data on that system and return it to those who built it, plus being able to avoid dangers known and unknown and repair itself when necessary.

The AI for such a vessel and plan does not need all the extra evolutionary baggage that would come with a current human mind, one that has largely confined itself to one world and satisfying base needs. If meeting these requirements also does not require an intelligence level that is “aware”, then so much the better and easier for our interstellar mission.

I know this is not quite the same in terms of levels of sophistication, but the two Voyager probes did not need to be aware or require AI that had to act and function like a human brain for a successful space mission. Yes, they had help from humans based on Earth, but the Voyagers were also programmed to operate in the event they did not hear from their distant creators. The same should be extrapolated for our first probes to Alpha Centauri.

A. A. Jackson said on September 5, 2012 at 9:59:

“Not that the Ramans are more sublime than the Monolith Makers but the whole Rama world is so indifferent and mysterious, that is so delicious.”

LJK replies:

In addition to sharing your view on the kinds of fictional ETI that are more interesting than most others, they also tie into the main reasons why I think we have not found any real intelligent extraterrestrials yet: a combination of the vast interstellar and intergalactic distances involved, their innate alienness, and the high likelihood that they, just like humans, are rather preoccupied with their own immediate matters.

By the way, I could not get through the sequels to Rendezvous with Rama, which I felt were unnecessary as it is. They took away the whole point Clarke envisioned for the Ramans, which is the one you already made. The sequels to 2001 were also probably unnecessary, but at least they were readable, entertaining, and even thought provoking in places.

However, I thought it was rather absurd that the Monolith ETI in 3001 would want to destroy humanity because they were shown our violent nature via centuries-old broadcasts.

Since the whole point of these advanced aliens was starting and nurturing intelligent life wherever they found it in the galaxy, surely they must have encountered a few examples where an evolving species goes through periods of primitive aggression before they either destroy themselves or grow up enough to join the more civilized members of the Milky Way. Besides, humanity would be no serious threat to beings who could remotely do things like turn Jupiter into a star.

I think Clarke was reaching here to add some drama to 3001. That fictional humanity of a thousand years hence had become rather stable to the point that even Frank Poole noted how few individuals he encountered had what we might call in-depth character.

I think Clarke wrote himself into a corner with this future society and had to force the Monolith ETI into becoming the bad guys as it were. I also think Clarke wasn’t nearly imaginative enough in portraying what our distant children might be like and what kind of toys they might possess by then, but that is another matter.

Hard SF pays attention to theoretical plausibility, when someone puts a plausible theory under “consciousness uploading”, I’ll treat it as something worth discussing. Until then, it’s just magic.

Joy:

I also abhor arguments from authority. I blanch before the combined power of two (no doubt excellent, former or not) biologists both claiming to have such certain knowledge about this subject. A subject that most everyone else rightfully approaches with wild speculation, or at best rigorous thought experiments. My only consolation is that it is not too difficult to find biologists of even higher authority than Athena who do not share her opinion. Phewww….

Personally, on this subject I would take the opinion of a philosopher or a psychoanalyst over that of a biologist any day, no matter how good they are at cloning neuroreceptor genes. But that’s just me….

I suppose the rather simple answer to that is that sending a human mind is the next best thing to going yourself. I think you have the purposes mixed up here, a bit. A human mind would not go because it is suitable to support the mission. Rather, the mission would be designed for the purpose of transporting the human mind.

LarryD wrote:

[Hard SF pays attention to theoretical plausibility, when someone puts a plausible theory under “consciousness uploading”, I’ll treat it as something worth discussing. Until then, it’s just magic.]

Indeed. Even at this point, where its plausibility (assuming that it *ever* becomes plausible) is far, far away, the whole notion of “consciousness uploading” and creating “human emulants” leaves me…cold. Like human cloning (which is possible today, or soon could be), just because something could be done does not mean that it -should- be done.

@Alex Tolley

Okay, no arguments from authority. Then you have to stop making strawman attacks, Alex. I never said brains didn’t change, and I explicitly stated that the changing neural connections in the brain are what determine our minds. You conflated my arguments with a grossly exaggerated version that you find easier to attack, and that is not an honest way of debating.

You haven’t offered a shred of evidence for your statement that the atoms in our brains are constantly replaced. I would like to see a peer-reviewed publication on this. Even if atoms in the brain are slowly replaced, the neurons in your brain remain as long as you live, so my arguments stand. By the way, if I am ever alone in a neuroscience laboratory with you, Alex, I’ll be reaching for my phaser holster…

No. Simply put, no method of mind uploading can provide continuity of existence for the original mind, only create copies. If some method of uploading exists and someone makes copies of me, then those copies obviously do coexist with and will begin to diverge from each other and the original me immediately.

As for the “skip” you insist lurks between wakefulness and the land of nod, neural activity continues in the brain between wakefulness and REM sleep; the “skip” doesn’t exist!! Sleep is nothing like being “uploaded” in any way, shape, or form. Neither are other sorts of neural trauma.

Neurogenesis in the adult brain is old news, and it only occurs in two rather discrete regions of the brain. From “Neurogenesis in the Adult Brain” by Fred H. Gage, published in The Journal of Neuroscience:

I rest my case. Apart from some novel neurogenesis in a few discrete areas of the brain, the rest of the neurons in your brain are the same ones you started out with.

You don’t seem to understand that a human mind is an emergent property of the biological activity within its specific brain, and that you can’t transfer your mind onto a computer like a computer program. At no point is the human brain a blank slate waiting to be programmed- a human brain is wired up and functioning from the beginning, and your mind develops as your brain develops. Your mind is not inserted into an empty brain like software programs in an empty computer. Biological software is inseparable from biological “wetware”.

The only way you could ever copy a human mind onto a computer would be to create an incredibly complex computer simulation of the original biological brain, one so complex and faithful to the original that it could exhibit self-awareness and thought. It is plain to anyone that this “simulation” is only a copy of the original. The mind associated with the original brain remains associated with that brain, and will eventually experience death. The simulation will lead an independent existence, diverging from the original with each passing day.

Whenever anyone copies a file from one computer to another, the original file remains intact on the sending computer. With a simulation of a human mind, the analogy is closer to scanning a sculpture to produce a digital model of that sculpture on a computer. After the scanning, the original sculpture exists. Even if the scan was for some reason destructive, the digital file would obviously not be the original sculpture. If we scan a brain at high enough resolution to create a perfect simulation, the original mind associated with the biological activity of the original brain remains, just as the sculpture remains. The newly created computer simulation is only a duplicate, and one that will change from the original with time. This is no way to buy yourself immortality.
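To make the file-copying analogy concrete, here is a toy Python sketch (the file names and contents are purely illustrative, not anything from the thread): copying reads the source and writes a new file, leaving the source in place, and once the two files receive different writes they no longer match. It says nothing about consciousness; it only shows the copy-then-diverge pattern that both sides of this exchange accept.

```python
import os
import shutil
import tempfile

# Toy illustration only: a text file stands in for a scanned "pattern".
with tempfile.TemporaryDirectory() as workdir:
    original = os.path.join(workdir, "original.txt")
    duplicate = os.path.join(workdir, "duplicate.txt")

    with open(original, "w") as f:
        f.write("memories up to the scan\n")

    # Copying creates a second file; the source is left untouched.
    shutil.copyfile(original, duplicate)
    source_still_exists = os.path.exists(original)

    # From here on, each file receives its own "experiences".
    with open(original, "a") as f:
        f.write("stayed home\n")
    with open(duplicate, "a") as f:
        f.write("launched to Alpha Centauri\n")

    with open(original) as f:
        original_text = f.read()
    with open(duplicate) as f:
        duplicate_text = f.read()

print(source_still_exists)              # True
print(original_text == duplicate_text)  # False
```

Both files share the same history up to the copy and differ only in what was appended afterwards.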

To be fair to the concept of “mind simulations”, several SF writers have dealt with the concept fairly accurately. I’ve read several stories in which simulated copies of a human’s mind exist, surviving separately from the original, diverging as they have their own experiences throughout the solar system and beyond. For now, however, the concept of “mind simulation” remains SF, and for much the same reasons as the equally science-fictional Star Trek “transporter” remains fiction. We don’t know how to non-destructively scan someone’s brain with enough accuracy to create a simulation of it, and the computing power required to simulate a human brain down to the last synapse must be enormous.

Eniac said on September 5, 2012 at 23:04:

“[LJK] Why would we want to emulate a human mind for a starship “brain”, let alone upload one? Human minds are primarily designed for dealing with existence on Earth…”

“I suppose the rather simple answer to that is that sending a human mind is the next best thing to going yourself. I think you have the purposes mixed up here, a bit. A human mind would not go because it is suitable to support the mission. Rather, the mission would be designed for the purpose of transporting the human mind.”

LJK replies:

While I would certainly enjoy exploring another world in person in one form or another (or at least I think I would), the primary goal of a deep space mission is to study the target planet or moon or comet and return as much useful scientific data as possible.

Having the virtual experience of walking on the sands of Mars for personal pleasure is for the time when we can afford such luxuries (of course there is that simulated trip to the Red Planet at Disneyworld – do not go on the Orange version of the ride unless you enjoy feeling nauseous afterwards).

If a human brain is the best way to get the most science out of a space mission where humans cannot be physically present, then fine. But if it is not (and I have already argued in this thread why that may be the case), then we go with the technology setup that works best for that primary scientific goal.

Curiosity was not built for 2.5 billion dollars just so we could pretend to be astronauts on Mars. There had better be a truckload of real science data from that mission, including and especially knowing if life could or does exist on that planet.

To be blunt, if you want to know what it is like to have the icy crust of Enceladus crunching beneath your boots, go walk around a field of snow after a winter sleet storm. Except for having to wear a spacesuit, I bet it won’t be terribly different and you have the bonus of running back into your house for a nice warm bowl of soup and a roaring fireplace to sit in front of. I am much more interested in learning about that Saturnian moon and the rest of the Universe than playing astronaut.

@Christopher Phoenix

You haven’t offered a shred of evidence for your statement that the atoms in our brains are constantly replaced. I would like to see a peer-reviewed publication on this. Even if atoms in the brain are slowly replaced, the neurons in your brain remain as long as you live, so my arguments stand.

Pick up a textbook on metabolism? Think about this for a moment. If, for example, the structural proteins in a cell were made just once and never replaced, then the genes for those proteins would be shut down. They are not. How could all those radio-labeled studies work if the label was not incorporated into the structure of cells? And of course the water in the cells is constantly being replaced by blood flow. So let’s dispose of this red herring and agree that the relevant structure is not atoms. If that is the case, then complete replacement of the atoms on any time scale, while preserving the structure of the neurons, will not disrupt the mind. Since we can replace the atoms, preserving the neural structure, we can also replicate them, making an exact copy. As the mind is an emergent property of the wetware, we should also get a similar copy of the associated mind.

Biological software is inseparable from biological “wetware”.

You correctly reject dualism. You state that mind is an emergent property of the wetware. I agree with that too. I’ve just explained that atoms are not relevant, which you have [sort of] agreed with. I think where you go wrong is in not understanding the nature of the copy.

So let’s back up and consider simpler systems from a behaviorist POV, excluding self-awareness. Let’s replicate the 300-celled brain of C. elegans. That should work, even if we just clone it. We probably have to train it, but in principle, treating it like a black box, we should be able to generate the same I/O responses. This results in a new worm that is behaviorally indistinguishable from the original. At this point I claim nothing more than behavioral copying. If you agree that is possible, then we should be able to do the same with parts of the brain, for example pieces of the visual cortex. So as long as we just replace components that do not involve self-awareness, we should be OK. Indeed, this is just the sort of thing we are trying to do medically with stem cell implants.

So we should be in agreement that a duplicate can be created, which acts in a behaviorally identical way to the original (although this will diverge as the histories change).

So the fundamental issue is the self awareness experience of the copy. Let’s try to rethink your sculpture thought experiment. Suppose that you replicated Michelangelo’s David so that it is, atom for atom, identical. Indistinguishable from the original by any test. If I ask you to choose which would you want, like most people you would say “the original”. But why? Usually when I ask this question, I get vague ideas about true provenance, that it has experienced “history”, in other words mystical qualities. Yet any material impact of history is encapsulated in the atoms in the statue. What if we make the duplicate with 50% of the original atoms, so we have 2 statues, each with half the original atoms?

Back to self-awareness. If we agree that we can behaviorally copy a brain or person, and we also agree that mind is an emergent phenomenon of the wetware, then even a copied human mind must be conscious, unless you want to revert to some sort of dualism or mystical attribute of mind (which I assume you do not). Therefore, at the moment of replication, both the original and the copy will have the same mental state and both have the same “I”. As they draw on the same memories, the same body, and even the same environment initially, they both feel contiguous with their pasts. Both feel that they are the original and the other is the copy. Indeed, there would be no way to decide which is which, especially if we used the 50% original atoms trick during replication. So the idea that “you” eventually die but the copy lives is just a failure to understand that “you” are now 2 people, one of whom lives and the other dies. The living copy has all your memories up until the duplication and can lay as good a claim to being the “real you” as the other.

Ok, so this doesn’t help with immortality, unless you want to reinstantiate the copy every few decades. This isn’t what I want as immortality.

I think the transhumanists (I may be wrong) expect to upload their minds into computers, which is very different from duplication. Can this be done? Well, if we go back to behaviorism, we know that we can implement the I/O responses in a number of different ways. The black box can be neural cells, an artificial neural network, a cellular automaton, even a tinker toy. So in principle, we can build a zombie with any Turing machine. [Just to be clear, a zombie is a person who acts just like a real person, but there is no self-awareness or consciousness.] The big question is whether the consciousness simulation has the same qualia as a living brain. And how would we recognize it? This is the same problem as recognizing whether other beings are conscious or not. We cannot measure subjective experience, only proxies for it. If Kurzweil were to live long enough to try uploading (extremely unlikely), how would we know he succeeded? The simulation could be a great avatar and respond just like him, but we wouldn’t know if it had the subjective experiences of the man. Mind you, since we are only communicating via text, how do I know you aren’t an advanced bot and vice versa?
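The behaviorist point, that identical input/output responses can be realized by very different machinery, can be shown with a deliberately tiny Python toy. Both "boxes" below are hypothetical stand-ins that compute XOR: one is a hand-wired lookup table, the other two layers of threshold units (a crude artificial neural network). An exhaustive I/O test cannot tell them apart, which is all the sketch claims; it is silent on the qualia question the comment raises.

```python
from itertools import product

# Substrate A (hypothetical): a literal lookup table, wired by hand.
XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

def box_table(a: int, b: int) -> int:
    return XOR_TABLE[(a, b)]

# Substrate B (hypothetical): two layers of threshold "neurons".
def step(x: float) -> int:
    return 1 if x > 0 else 0

def box_network(a: int, b: int) -> int:
    h_or = step(a + b - 0.5)          # fires if either input fires
    h_and = step(a + b - 1.5)         # fires only if both inputs fire
    return step(h_or - h_and - 0.5)   # "OR but not AND"

# Behavioral test: compare the two boxes on every possible input.
inputs = list(product([0, 1], repeat=2))
same_behavior = all(box_table(a, b) == box_network(a, b) for a, b in inputs)
print(same_behavior)  # True
```

Swapping in any other mechanism with the same table of responses would pass the same test, which is exactly why behavioral testing alone cannot settle whether a copy has subjective experience.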

But I think we will have a far better understanding of these questions long before the first of our interstellar probes reaches the nearest star.

Alex: “The big question is whether the consciousness simulation has the same qualia as living brain.”

Use the duck test: if it looks like a duck, walks like a duck and talks like a duck, it’s a duck. To claim anything else is folly (Note: I realize that’s not what you are arguing!). After all, I can accuse any human, not just some purported copy, of being a zombie since it is impossible to inspect its internal states. (This has been the fodder for endless discussions by philosophers.)

Try the following question on for size (in case you aren’t already familiar with it). You murder someone, then either make a copy of your body (and therefore your self) or an equivalent copy to a non-biological substrate. The original is then destroyed (killed). When the police arrive you show them the dead original. Should they arrest the biological or machine version of you?

In any case, all of this is very interesting though IMO not terribly useful. This human fear of death should not lead us to waste too much time pursuing some version of continuity. I think it is more useful that our effort should go to understanding the mind so that we can build a suitable machine substrate/body (AI or robot) and then teach it like we would any child. It’s almost certainly easier, though still far beyond our present reach.

@Alex Tolley

I agree. Clearly the atoms in the brain are replaced during metabolism, and I should have realized that from the beginning, but I was too distracted by the notion of someone replacing all the water in my brain. :-) However, the relevant structures of the brain, the neurons themselves, remain intact throughout your whole life. The mind is an emergent property of the biological activity of neurons firing in the structure of the brain, and the neurons are what is important, not water molecules. As long as our neurons are preserved, our mind is preserved, but if the neurons are destroyed, the mind associated with that brain, our “I”, is destroyed.

My entire position is based on rejecting mind/body dualism, and stating that the mind is an emergent property of biological activity in our brains. I specifically reject the notion of a non-physical “spirit”, or as the Vulcans call it, the “katra”. I don’t think you understand what my point is, exactly. I’m only worried about whether “mind uploading” can offer continuity of existence to the original mind, which it can’t.

Going back to my sculpture thought experiment, I demonstrated that scanning and creating a digital model of an object, or even replicating it, does not transfer but instead copies the original sculpture. It is the same with the simulation of the brain: the original remains, and the simulation is a copy. The mind associated with the original brain remains in that brain, and will eventually die. The post-copy simulation may think it is the original, but for the actual original, there is no awakening in the “crystalline ovoid”. How could there be, when the physical brain from which that mind emerges is copied rather than transferred?

Avoiding any philosophical arguments over the nature of identity, we can both agree that having our brains scanned and simulated will provide no continuity of existence for the mind (our mind) associated with our biological brain. There goes “mind uploading”, if by mind uploading we mean transferring our minds to a more durable form by a physical process rather than creating a simulation that can pass the Turing test. This is exactly what Athena Andreadis says about “mind uploading”, and if you reject mind/brain dualism, you agree with her basic arguments.

You can also question whether the simulated brain would actually be self-aware, or just provide the right answers to the right questions, but you can do this with the person who makes your coffee at Starbucks as well. Whether or not Kurzweil’s simulation would actually be a self-aware being, we’d be certain that the man himself never woke up on a computer. As I said, mind simulation does not offer continuity of existence to the original mind associated with the original brain.

All this is ignoring the technical challenges of scanning someone’s brain accurately enough to gain all the data needed to create a simulation, or the amount of computing power required to create such a simulation. If it is difficult enough, creating true “mind simulations” may prove as unreachable as creating a Star Trek transporter.

What will happen to humanity after we upload our brains?

Annalee Newitz and George Dvorsky

Some futurists and science fiction writers predict that centuries from now, humans will be able to upload their minds into computers. These “uploads” could exist in a virtual reality world created by software, or be downloaded back into other bodies — biological or robotic.

But what will we do after we’ve become uploads? That’s a matter of debate. And we’re going to debate it.

Annalee: University of Oxford futurist Nick Bostrom is a fairly passionate believer in the possibility of upgrades. He suggests that eventually our ultra-smart uploaded minds will figure out how to convert all matter in the galaxy (or even the universe) to a technological substrate that hosts our romping brains. And the plots of several science fiction tales, from Iain M. Banks’ Culture series to Battlestar Galactica, rely on the idea that minds can be uploaded and transferred into new bodies.

My question is, what should we do once we’ve uploaded our brains? Because I think there’s a real ethical difference between eating all matter in the universe to create our happy brain farm, and using uploads as a kind of storage device while we’re between bodies (maybe while we’re traveling in space).

George: This is a very challenging question to answer, as it’s a kind of ‘what do we want to be when we grow up?’ sort of question. It’s made all the more difficult by virtue of our attempt to predict the needs and values of uploaded humans, or what will really be posthumans at this point. A rather safe assumption, however, is that uploaded minds will be augmented to a considerable degree and capable of substantially more than us run-of-the-mill humans. It’s very likely, therefore, that an uploaded civilization will have an insatiable need for computational power. They may feel that, in order for individuals and society to develop and engage in advanced life, they will have to continue to expand their capacities by converting more and more inert material into so-called computronium. Venturing out into space may truly be the only way to extend the frontier, so to speak, even if it’s a digital one.

Full article here:

http://io9.com/5940969/what-will-happen-to-humanity-after-we-upload-our-brains

Alex:

It does help, though. You would probably make nightly backups, with instructions to activate the latest copy if the “current” one were lost for some reason. In the worst case, you would lose one day of experience. Hardly a calamity; it has been known to happen to normal, un-uploaded individuals who have had a few drinks too many….

Christopher:

We would be certain? By what evidence? He himself certainly would disagree. Whether you call him “Kurzweil” or “The mind simulation derived from the late Kurzweil” does not make a bit of difference. He will have awoken on a computer, and he will insist on being “the” Kurzweil. He will go on writing in his name, and I am pretty sure he will hate you for calling him by the other term.

So, what really is the difference, and does it matter?

What do you think of the 9-year-old “I” vs. the 29-year-old version of “I”? They’re certainly not the same neural state. Which one was actually me? I didn’t think to ask this question a minute ago. Does that disqualify the me of one minute ago from being an “I”? What if you cut out the connections I made to ask this question, resetting me to one minute ago?

I don’t think it matters. The only thing that matters is that I think I’m me, at the moment. If there were two of us, we’d both be right, divergences and all, regardless of how many original atoms were where or in what state. That’s basically the definition of a copy. The emergent state would be copied as well, on the other side of the room, etc. (Just picture getting cut in half while in a coma, then each half getting rebuilt, and both waking up.)

Why is continuity of consciousness even relevant to the preservation of personal identity? The real definitions of consciousness (the ones nobody ever seems to use in these debates) are:

1. The state of being awake and aware of one’s surroundings.

2. The awareness or perception of something by a person.

People routinely go in and out of these states, and we don’t mourn the deaths of their “originals”. If by consciousness you mean all brain activity, well, again, why is the instantaneous state relevant to personal identity?

The KESM allows imaging of the entire mouse brain in 100 hours[1]. The speed of the algorithms to extract data from electron micrographs depends on computational capabilities and their efficiency, but I don’t know how long that would take. As for simulation, it has been done before[2].

The sensory and motor neurons can be connected to virtual I/O[3].

And who is saying anything about immortality? The uploads will still be mortal, they can be destroyed by accident or violence or decay.

[1] http://wiki.transhumani.com/index.php?title=Whole_Brain_Emulation#KESM

[2] http://wiki.transhumani.com/index.php?title=Whole_Brain_Emulation#Large-Scale_Emulations_So_Far

[3] http://www.fhi.ox.ac.uk/reports/2008-3.pdf page 74

@Chris Phoenix

The mind associated with the original brain remains in that brain, and will eventually die. The post-copy simulation may think it is the original, but for the actual original, there is no awakening in the “crystalline ovoid”.

So maybe we are just talking at cross purposes? What I tried to show is that we should not attach ourselves to the “original”. The duplicate is as much the same person as the original. I tried to make that clearer by altering the conditions of the duplication experiment. If I start with “Alex”, then get “Alex A” and “Alex B”, both of whom initially think they are the original “Alex”, what difference does it make if either is killed? The survivor will still think it is “Alex”.

Beyond that, as I have said, simulation and uploading to new substrates remain open questions, especially as regards consciousness and identity. I probably agree with you more here.

@Ron S

Use the duck test: if it looks like a duck, walks like a duck and talks like a duck, it’s a duck. To claim anything else is folly ….. After all, I can accuse any human, not just some purported copy, of being a zombie since it is impossible to inspect its internal states. (This has been the fodder for endless discussions by philosophers.)

It seems to trouble philosophers more than cognitive scientists and computer scientists. It is almost like a form of dualism, although they deny it when pressed. My 2 cents is that consciousness is an illusion based on “agents” processing the output of other agents. We can certainly get ourselves into a sort of zombie state when driving, but return to full awareness at our destination. Meditation may be similar, although I have no first-hand experience of it. If that model is correct, then consciousness should emerge irrespective of the substrate. But this is pure speculation.

BTW, I read that there are now Turing tests for conscious agents in video games. So we are definitely getting closer to duck-typing consciousness in our machines.

@Eniac

Have you ever scanned a document? A scanned document is not mystically taken into the computer. A computer file is created with the image of the scanned document, but the document itself remains on the scanner’s tray. It is exactly the same if you are scanning a brain to create a computer simulation of it: the original brain remains outside the computer, and a simulation of the brain’s activity is created within the computer. As your mind is an emergent property of the biological activity in your particular brain, how could your mind wake up on the computer when your brain was not (and cannot be) transferred by the process of scanning and simulation? It cannot; you yourself will remain in your body. Whatever the simulation thinks, it is a copy of your brain, not your original brain.

Unless you are a mind-body dualist, which I assume you are not, it is clear that scanning and simulating someone’s brain will not provide any continuity of existence to the original. You can only create copies this way. You seem to assume that I am making assumptions about the simulation. I am not. In fact, I assume that the simulation is a perfect copy of the original. I am pointing out that no matter how perfect a copy you create, it is simply impossible to transfer your mind to a computer in a way that preserves your continuity of existence.

Why does this matter? Once the scanning process is finished, you will get out of the scanning device and walk away. You won’t wake up in a “crystalline ovoid”. This seems to me to be a very important difference. If I get into a rocket ship and fly to Saturn, I go there myself. If I have myself scanned so a simulation of my brain can be created and sent on a space probe to Saturn, I never get to go to Saturn; I stay on Earth! No astronaut would ever accept staying on Earth while a simulation of their brain goes on a first-time space flight.

@Eudoxia

Arguing over the definition of the phrase I used proves nothing. When I used the term “continuity of consciousness”, what I meant is better expressed as “continuity of existence”, that is, whether your personal identity is preserved by the process of mind scanning and simulation. As I explained above to Eniac, creating a simulation of someone’s mind will not transfer their original mind to the computer. Their continuity of existence is not preserved; in fact, if the scanning is non-destructive, they will get up out of the scanning chair and walk away.

The Knife-Edge Scanning Microscope can reconstruct maps of 3D cellular structures, but nowhere is it said that the KESM can actually gather the data needed to simulate a living mouse brain. KESM is simply a novel method of microscopy for studying the structure of the brain. You neglected to mention that the mouse brain must be embedded in plastic and cut into slices for the KESM to scan it.

I’m not volunteering. You can read all about the KESM here; that is where the quote above came from.

Generally, “mind simulation” is often discussed as a method of life extension. If we could escape into a more durable silicon shell, we would escape aging, mortality, and eventual death, at least according to transhumanists. The problem is that the simulation will be a copy of you, not you, even assuming simulations are ever possible at all. It is a poor method of life extension that does not extend your life, but instead makes a somewhat more durable simulation, possibly even destroying your brain in the process.

@Alex Tolley

You should remain attached to the original Alex; you are him!! It matters a lot to the person going through the process of scanning whether or not the process preserves their continuity of existence, especially if the scanning process is destructive. If the process isn’t destructive, and we end up with a simulation of Alex and the original Alex in the same room together, it is plain to the meanest intelligence that the simulation is a copy of Alex, not the original.