Yesterday’s thoughts on self-repairing chips, as demonstrated by recent work at Caltech, inevitably called Project Daedalus to mind. The span between the creation of the Daedalus design in the 1970s and today covers the development of the personal computer and the emergence of global networking, so it’s understandable that the way we view autonomy has changed. Self-repair is also a reminder that a re-design like Project Icarus is a good way to move the ball forward. Imagine a series of design iterations each about 35 years apart, each upgrading the original with current technology, until a working craft is feasible.

My copy of the Project Daedalus Final Report is spread all over my desk this morning, the result of a marathon copying session at a nearby university library many years ago. These days you can skip the copy machine and buy directly from the British Interplanetary Society, where a new edition that includes a post-project review by Alan Bond and Tony Martin is available. The key paper on robotic repair is T. J. Grant’s “Project Daedalus: The Need for Onboard Repair.”

Staying Functional Until Mission’s End

Grant runs through the entire computer system, including the idea of ‘wardens,’ conceived as a subsystem of the network that maintains the ship under a strategy of self-test and repair. You’ll recall that Daedalus, despite its size, was an unmanned mission, so all issues that arose during its fifty-year journey would have to be handled by onboard systems. The wardens carried a variety of tools and manipulators, and it’s interesting to see that they were also designed to be an active part of the mission’s science, conducting experiments thousands of kilometers away from the vehicle, where contamination from the ship’s fusion drive would not be a factor.

Even so, I’d hate to chance one of the two Daedalus wardens in that role given their importance to the success of the mission. Each would weigh about five tonnes, with access to extensive repair facilities along with replacement and spare parts. Replacing parts, however, is not the best overall strategy, as it requires a huge increase in mass — up to 739 tonnes, in Grant’s calculations! So the Daedalus report settled on a strategy of repair instead of replacement wherever possible, with full onboard facilities to ensure that components could be recovered and returned to duty. Here again the need for autonomy is paramount.

In a second paper, “Project Daedalus: The Computers,” Grant outlines the wardens’ job:

…the wardens’ tasks would involve much adaptive learning throughout the complete mission. For example, the wardens may have to learn how to gain access to a component which has never failed before, they may have to diagnose a rare type of defect, or they may have to devise a new repair procedure to recover the defective component. Even when the failure mode of a particular, unreliable component is well known, any one specific failure may have special features or involve unusual complications; simple failures are rare.

Running through the options in the context of a ship-wide computing infrastructure, Grant recommends that the wardens be given full autonomy, although the main ship computer would still have the ability to override their actions if needed. The image is of mobile robotic repair units in constant motion, adjusting, tweaking and repairing failed parts as needed. Grant again:

…a development in Daedalus’s software may be best implemented in conjunction with a change in the starship’s hardware… In practice, the modification process will be recursive. For example the discovery of a crack in a structural member might be initially repaired by welding a strengthening plate over the weakened part. However, the plate might restrict clearance between the cracked members and other parts, so denying the wardens access to unreliable LRUs (Line Replacement Units) beyond the member. Daedalus’s computer system must be capable of assessing the likely consequences of its intended actions. It must be able to choose an alternative access path to the LRUs (requiring a suitable change in its software), or to choose an alternative method of repairing the crack, or some acceptable combination.
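
Grant’s welded-plate example is, at heart, a re-planning problem: before committing to a repair, check whether every LRU the wardens might need is still reachable, and if the usual route is blocked, find another. What follows is a minimal sketch in Python, assuming the ship can be modelled as a graph of bays joined by passages. The layout, the node names and the helpers `find_access_path` and `repair_is_acceptable` are all hypothetical illustrations, not anything taken from the Daedalus report.

```python
from collections import deque

# Hypothetical ship layout: bays joined by passages (undirected adjacency list).
SHIP = {
    "warden_bay": ["truss_A", "truss_B"],
    "truss_A": ["warden_bay", "lru_rack"],
    "truss_B": ["warden_bay", "tank_ring"],
    "tank_ring": ["truss_B", "lru_rack"],
    "lru_rack": ["truss_A", "tank_ring"],
}

def find_access_path(graph, start, target, blocked=frozenset()):
    """Breadth-first search for the route with the fewest passages from start
    to target, ignoring any passage listed in `blocked` (frozenset pairs)."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for nxt in graph.get(node, ()):
            if nxt in seen or frozenset((node, nxt)) in blocked:
                continue
            seen.add(nxt)
            queue.append(path + [nxt])
    return None  # target unreachable with these passages blocked

def repair_is_acceptable(graph, start, lru_nodes, new_blocks):
    """Assess a proposed repair: accept it only if every LRU stays reachable."""
    return all(find_access_path(graph, start, lru, new_blocks) for lru in lru_nodes)

# A strengthening plate welded onto truss_A closes its passage to the LRU rack.
plate = {frozenset(("truss_A", "lru_rack"))}
print(find_access_path(SHIP, "warden_bay", "lru_rack"))         # route via truss_A
print(find_access_path(SHIP, "warden_bay", "lru_rack", plate))  # detour via truss_B, tank_ring
print(repair_is_acceptable(SHIP, "warden_bay", ["lru_rack"], plate))
```

If the detour were blocked as well, `repair_is_acceptable` would return False and the planner would have to consider a different repair method, loosely mirroring the alternatives Grant lists.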

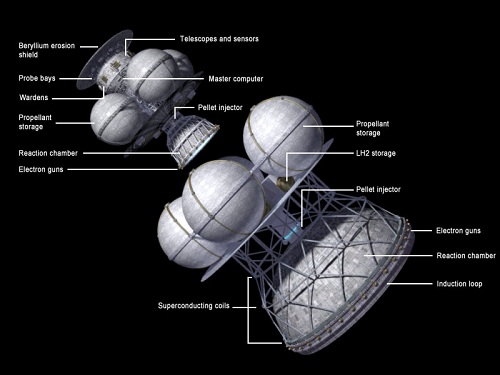

Image: Project Daedalus was the first detailed study of an interstellar probe, and the first serious attempt to study the vexing issue of onboard autonomy and repair. Credit: Adrian Mann.

The Probe Beyond Daedalus

Robert Freitas would follow up Daedalus with his own study of a probe called REPRO, a gigantic Daedalus capable of self-reproduction from resources it found in destination planetary systems. Another major difference between the two concepts was that REPRO was capable of deceleration, whereas Daedalus was a flyby probe. Freitas stretched the warden concept out into thirteen different species of robots who would serve as chemists, metallurgists, fabricators, assemblers, repairmen and miners. Each would have a role to play in the creation of a new probe as self-replication allowed our robotic emissaries to spread into the galaxy.

Freitas would later move past REPRO into the world of the tiny as he envisioned nanotechnology going to work on interstellar voyages, and indeed, the promise of nanotech to manipulate individual atoms and molecules could eventually be a game-changer when it comes to self-repair. After all, we’d like to move past the relatively inflexible design of the warden into a system that adapts to circumstances in ways closer to biological evolution. So-called ‘genetic algorithms,’ which test different solutions to a problem by generating variations on candidate solutions (or, in genetic programming, on their own code) and then breeding them through successive generations of mutation and selection, are also steps in this direction.
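
To make the genetic-algorithm idea concrete, here is a minimal sketch in Python of the textbook loop: keep the fitter half, recombine, mutate, repeat. The bit-string genome and the `trial_score` fitness function are hypothetical stand-ins for "a candidate repair procedure" and "how well a simulated repair trial went"; nothing here comes from the Daedalus or REPRO studies.

```python
import random

def evolve(fitness, genome_len, pop_size=30, generations=60, mutation_rate=0.02, seed=1):
    """Minimal genetic algorithm over bit-string genomes: truncation selection,
    one-point crossover and bit-flip mutation. Returns the fittest genome found."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(genome_len)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 2]  # the fitter half survives unchanged (elitism)
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, genome_len)  # one-point crossover
            child = a[:cut] + b[cut:]
            # Flip each bit independently with probability mutation_rate.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        pop = parents + children
    return max(pop, key=fitness)

# Hypothetical stand-in for a simulated repair trial: reward genomes that
# match a known-good setting bit for bit.
TARGET = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1, 1, 0, 1, 0]

def trial_score(genome):
    return sum(g == t for g, t in zip(genome, TARGET))

best = evolve(trial_score, len(TARGET))
print(trial_score(best), "of", len(TARGET))
```

Because the fitter half is carried over unchanged, the best score never gets worse from one generation to the next; a real onboard system would replace `trial_score` with something far more expensive to evaluate.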

One thing is for sure: We have to assume failures along the way as we journey to another star. Grant sets a goal of 99.99% of all components aboard Daedalus being able to survive to the end of the mission. This was basically the goal of the Apollo missions, and one of those missions did even better, suffering only two defects, equivalent to a 99.9999% component survival rate. Even so, given the need for repair facilities and wardens onboard to fix failing parts, Grant’s figures show that a mass of spare components amounting to some 20 tonnes needs to be factored into the design.
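
A 99.99% survival rate sounds comfortable until it is multiplied by the number of parts on board. A short Python sketch of the arithmetic, assuming failures are independent; the 100,000-component count for Daedalus is a hypothetical round number, not a figure from Grant’s paper, while the Apollo estimate simply combines the two numbers quoted above.

```python
def expected_failures(n_components, survival):
    """Expected number of components lost over the mission."""
    return n_components * (1.0 - survival)

def prob_no_failures(n_components, survival):
    """Chance that every component survives, assuming independent failures."""
    return survival ** n_components

def implied_components(defects, survival):
    """Component count implied by a defect tally and a quoted survival rate."""
    return defects / (1.0 - survival)

# Apollo: two defects at 99.9999% survival implies roughly two million parts.
print(round(implied_components(2, 0.999999)))

# Daedalus at Grant's 99.99% target, with a HYPOTHETICAL 100,000 components.
N = 100_000
print(expected_failures(N, 0.9999))  # about 10 failures expected
print(prob_no_failures(N, 0.9999))   # about 4.5e-05: a failure-free flight is very unlikely
```

Even a handful of expected failures makes a flight with no failures at all improbable, which is the quantitative case for carrying wardens and spares.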

It will be fascinating to see how Project Icarus manages the repair question. After all, Daedalus was set up as an exercise to determine whether a star mission was feasible using current or foreseeable science and technology. With the rapid pace of digital change, how far can we see ahead? If we’re aiming at about 35 years, do we assume breakthroughs in nanotechnology and materials science that will make self-healing components a standard part of space missions? Couple them with advances in artificial intelligence and the successor to Daedalus would be smaller and far more nimble than the original, a worthwhile goal for today’s starship design.

The two papers by T. J. Grant are “Project Daedalus: The Need for Onboard Repair,” Project Daedalus Final Report (1978), pp. S172-S179; and “Project Daedalus: The Computers,” Project Daedalus Final Report (1978), pp. S130-S142.

Here is a brief film from 2009 showing a rather different concept of a Warden from the original 1970s BIS plan:

http://spaceart1.ning.com/video/daedalus-warden-test

According to the 1978 book, the Wardens are supposed to possess semi-intelligent AI systems. What if one of them decides it no longer wants to be a mere repairman and caretaker? Especially when it realizes that once the primary mission is complete, Daedalus and its entourage will barrel on into the galaxy with no destination or purpose other than to drift through interstellar space until the ship dies from massive system failure. Carrying a giant fusion reactor out of control, no less.

I have also wondered how the full Artilect that will no doubt be needed to run Daedalus itself (will the ship be its body?) will feel about spending decades in the void only to fly through the target star system in a matter of hours, with little else to do and nowhere else to go after that.

Can we make an Artilect that is intelligent but not aware? Or is both needed? If scientists find a way to suppress an Artilect’s consciousness, basically turning it into a smart tool to serve human needs and then discarding it when the primary goal has been achieved, is that ethical?

The original BIS authors did not address this. Granted, Icarus is planned to stay in the target star system, but if it has an Artilect mind that is aware, will it want to stay there indefinitely?

There are so many issues in interstellar exploration that have yet to be fully explored.

Even with the Apollo program’s high standards, Apollo 1 was lost on the pad and Apollo 13 suffered a crippling explosion that forced the crew to abandon the lunar landing.

With all of the breakthroughs in genetics, I wonder how many functions of the starship (or the wardens themselves) can be provided by self-replicating, self-checking systems similar to DNA? Or asked another way: How many functions of DNA replication and gene expression can be applied to create or repair structure, systems, or circuits necessary to keep the starship viable?

For example, a genetic system that “eats” the old part, and then secretes the new part. Or on another level that builds structure a molecule at a time and gets the raw materials from the damaged structure or other relatively non-essential component of the starship (or even matter encountered along the way).

One issue that comes to mind is the delicacy of life, but there are microbes that thrive on radiation of one form or another. Why not design one to live on the radiation created by the starship’s propulsion?

No need for it to even be self-aware. A starfish will grow back severed limbs if necessary, but has no capability or need to ponder its own existence.

With printer technology, things get a lot easier when you can re-cycle failed components and rapidly create new ones.

Rather than two five-tonne wardens, perhaps a swarm of co-operating, hand-sized ‘spiders’, with larger ‘bots to shift and wrangle massive items and smaller ‘ants’ to access those hard-to-reach places (and nanotech as it becomes available).

Well, if you can move such a huge probe to interstellar distances, surely future technologies will allow much smaller probes to travel much faster than Daedalus. If we want to explore solar systems out to 500 LY to find the BEST possible colony site, I would envision a vast beehive of 100 g modular subunits, with redundancies, that assemble a probe out of themselves. If sent to F, G and K type targets, we would have an excellent chance of a great find.

For the cost in capital and energy, you could send thousands of mini-probes, which would allow for redundancy, since some subunits would not survive the relativistic journey. It is far easier to accelerate a probe subunit from the Moon than to try to propel Daedalus.
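
The redundancy argument can be put in numbers if we assume each subunit survives independently with the same probability, which turns it into a binomial tail. A minimal Python sketch; the requirement of 10 working subunits and the 80% per-unit survival are hypothetical figures chosen only for illustration. Correlated losses (one dust impact, or a shared design flaw) would break the independence assumption and make the real picture worse.

```python
from math import comb

def prob_at_least(k, n, p):
    """Probability that at least k of n independent subunits survive, each
    with survival probability p (binomial tail). Exact for modest n; for very
    large n the float conversion of comb() can overflow."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

# Hypothetical: a probe needs 10 working subunits, each with an 80% chance of
# surviving the trip. How much does sending extras help?
for n in (10, 12, 15, 20):
    print(n, round(prob_at_least(10, n, 0.8), 3))
```

With no spares the mission succeeds only about 11% of the time in this toy case, while doubling the number of subunits pushes the success probability close to 1.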

P.S. The biggest nightmare of all is finding that planets like the Earth exist almost exclusively around M dwarf stars (if by ‘like Earth’ we mean liquid water and a similar atmospheric density), and that other types of stars host only bloated Re-3 to Re-7 type planets. It would certainly go a long way toward explaining the Fermi paradox.

Rob Flores: yes, a nightmare indeed — but only for those planet-bound cultures that prove themselves incapable of adapting to space-based civilisation.

Stephen

Alas, this is not so. The envisioned nuclear engines do not scale down, and the destructive effect of oncoming interstellar gas scales inversely with size.

“-the destructive effect of oncoming interstellar gas scales inversely with size.”

Have to project a beam ahead of the starship to push the atoms out of the way. That is the most common solution in fiction. Not deflector shields but a deflector beam.

Alas, any such system, were it practical at all, would also scale badly to smaller size. Available power scales with size cubed (volume of the craft), required power with size squared (cross sectional area to be protected). Large is better than small, quite generally, for interstellar travel.
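
The cube-versus-square argument can be made concrete: whatever the constants, the ratio of available to required power grows linearly with the craft’s size. A sketch in Python; the proportionality constants `k_avail` and `k_req` are placeholders, so only the comparison between sizes means anything.

```python
def power_margin(size, k_avail=1.0, k_req=1.0):
    """Ratio of available to required shielding power for a craft of linear
    size `size`. k_avail and k_req are hypothetical proportionality constants."""
    available = k_avail * size**3  # scales with volume
    required = k_req * size**2     # scales with frontal cross-section
    return available / required

for size in (0.5, 1, 10, 100):
    print(size, power_margin(size))
```

Halving the craft halves the margin, which is why shrinking the probe makes active shielding harder rather than easier.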

It gets worse when magnetic fields are involved. Small magnets are weaker than large ones, and if they are for deflection of charged particles (whether for radiation, rocket exhaust, or power beams) there is a double whammy in that particles spend less time in smaller fields.
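
The ‘double whammy’ follows from the small-angle deflection of a charged particle crossing a field region of length L, which is roughly L divided by the particle’s gyroradius p/(qB), so the deflection goes as B times L. A Python sketch for a proton; it assumes a uniform field, and the idea that a smaller magnet has a proportionally weaker field is an illustrative scaling, not a magnet design rule. The 0.12 c speed is roughly the Daedalus design cruise velocity.

```python
import math

C = 299_792_458.0              # speed of light, m/s
PROTON_MASS = 1.67262192e-27   # kg
E_CHARGE = 1.602176634e-19     # C

def gyroradius(b_tesla, beta, mass=PROTON_MASS, charge=E_CHARGE):
    """Radius of the circle a charged particle follows in a uniform field B."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    momentum = gamma * mass * beta * C
    return momentum / (charge * b_tesla)

def deflection_angle(b_tesla, field_length_m, beta):
    """Small-angle deflection (radians) after crossing a uniform field region
    of length L; valid only while L is much smaller than the gyroradius."""
    return field_length_m / gyroradius(b_tesla, beta)

beta = 0.12  # illustrative cruise speed
print(gyroradius(1.0, beta))  # about 0.38 m for a proton in a 1 T field
big = deflection_angle(0.01, 2.0, beta)
small = deflection_angle(0.005, 1.0, beta)  # half the field strength, half the length
print(big / small)  # about 4: halving both B and L cuts the deflection fourfold
```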

FYI, laser beams by themselves do not push atoms away. They may be tuned to ionize, but you’d still need magnetic or electric fields to do the actual pushing. Such fields must be strong and large enough to make a good dent in the particle trajectory, which prevents miniaturization very effectively.

In the end, considering the mass of the required lasers and magnets, it is likely a few feet of ice or carbon or tungsten or whatever in front of the craft will be much more effective in dealing with interstellar gas. In any case, to be worth the trouble, the rest of the ship will have to be quite large.
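
A rough sense of the load a passive shield must absorb, and why it favours large craft: the energy arriving per square metre of frontal area depends only on gas density and speed, so the shield’s mass grows with area while the craft’s mass grows with volume. A Python sketch; the density of 0.1 hydrogen atoms per cm³ is an assumed round figure for the local interstellar medium, dust grains are ignored, and the cube-shaped craft with a fixed-thickness shield is purely hypothetical.

```python
import math

C = 299_792_458.0
PROTON_REST_ENERGY_J = 1.67262192e-27 * C**2  # about 938 MeV

def gas_power_per_m2(n_per_cm3, beta):
    """Kinetic power per square metre of frontal area delivered by oncoming
    hydrogen atoms of number density n (per cm^3) at speed beta * c."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    energy_per_atom = (gamma - 1.0) * PROTON_REST_ENERGY_J  # J, in the ship's frame
    flux = n_per_cm3 * 1e6 * beta * C                       # atoms per m^2 per s
    return flux * energy_per_atom

def shield_mass_fraction(size_m, shield_kg_per_m2, craft_kg_per_m3):
    """Shield mass over craft mass for a hypothetical cube of side size_m whose
    front face carries a shield of fixed areal density."""
    return (shield_kg_per_m2 * size_m**2) / (craft_kg_per_m3 * size_m**3)

print(gas_power_per_m2(0.1, 0.12))  # roughly 4 W/m^2, sustained for decades
print(shield_mass_fraction(10, 100, 1000), shield_mass_fraction(100, 100, 1000))
```

A craft ten times larger pays one tenth the fractional mass penalty for the same shield, which is the sense in which the problem scales inversely with size.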

“-it is likely a few feet of ice or carbon or tungsten or whatever in front of the craft will be much more effective in dealing with interstellar gas.”

How about microwaves?

Microwaves are even less effective than light in moving atoms out of the way. You can convince yourself by looking for wind being generated by laser or microwave beams on Earth. You won’t find any, I think.
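
For a sense of scale, the total push a beam can deliver is set by the momentum it carries: P/c if the target absorbs it, and at most 2P/c if the target reflects it straight back. A quick Python check, with a 1 MW beam as an arbitrary example; this bounds the total force on whatever the beam hits and says nothing about how that force is shared among individual atoms.

```python
C = 299_792_458.0  # m/s

def beam_force(power_w, reflected=False):
    """Force (N) a beam of the given power exerts on a target that absorbs it,
    or twice that if the target reflects it straight back (the maximum)."""
    return (2.0 if reflected else 1.0) * power_w / C

print(beam_force(1e6))                  # about 3.3e-3 N from a megawatt beam
print(beam_force(1e6, reflected=True))  # about 6.7e-3 N at most
```

A few millinewtons per megawatt is why nobody notices a wind downstream of a powerful laser or microwave transmitter.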

Well, there is always the Valkyrie method of projecting anti-matter particles ahead of the ship as a shield.

http://www.charlespellegrino.com/propulsion.htm

Anti-matter particles? I think you mean the liquid droplet shield Charles Pellegrino is advocating. If you include pumps and reservoirs, this is not going to be superior to a solid block of tungsten or carbon. And some of those droplets will inevitably be lost, which is a no-no in an interstellar ship.