If our exoplanet hunters eventually discover an Earth-class planet in the habitable zone of its star — a world, moreover, with interesting biosignatures — interest in sending a robotic probe and perhaps a human follow-up mission would be intense. In fact, I’m always surprised to get press questions whenever an interesting exoplanet is found, asking what it would take to get there. The interest is gratifying but I always find myself having to describe just how tough a challenge a robotic interstellar mission would be, much less a crewed one.

But we should keep thinking along these lines because the odds are that exoplanetary science may well uncover a truly Earth-like world long before we are in any position to make the journey. I would expect public fascination with such a discovery to be strong. Dana Andrews (Andrews Space, now retired) has been pondering these matters and recently forwarded a paper he presented at the International Astronautical Congress meeting in Toronto in early October. It’s an intriguing survey of what could be done in the near-term.

How we define ‘near-term’ is of course the key here. Is fusion near-term or not? Let’s think about this in the context of the mission Andrews proposes. He’s looking at a minimum transit speed of 2 percent of the speed of light (fifty years per light year), making for transit times in the neighborhood of hundreds of years, depending on destination. A key requirement is the ability to decelerate and rendezvous with the destination planet using a magnetic sail (magsail) that can be built using high-temperature superconductors. Andrews also assumes 20-30 years of research and development before construction actually begins.
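To make the "fifty years per light year" figure concrete, here is a minimal sketch of the cruise-time arithmetic. It assumes a constant cruise speed and ignores the boost and braking phases, and the distances in the loop are illustrative values of my own, not targets named in the paper.

```python
# Cruise-only transit time at a constant fraction of light speed.
# Assumption: boost and magsail braking phases are ignored, so real
# trip times would be longer than these figures.

def years_per_light_year(beta: float) -> float:
    """Years needed to cover one light year at speed beta * c."""
    if not 0.0 < beta <= 1.0:
        raise ValueError("beta must be in (0, 1]")
    return 1.0 / beta


def cruise_time_years(distance_ly: float, beta: float = 0.02) -> float:
    """Cruise time in years for a trip of distance_ly light years."""
    return distance_ly * years_per_light_year(beta)


if __name__ == "__main__":
    # Illustrative distances in light years (not taken from the paper).
    for distance_ly in (4.25, 10.0, 20.0):
        years = cruise_time_years(distance_ly)
        print(f"{distance_ly:5.2f} ly at 0.02c: about {years:.0f} years")
```

Even the nearest stars come out at a couple of centuries of cruise time, which is why the design is a generation ship.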

The R&D alone takes us out to, say, 2040, but there are other factors we have to look at. As Andrews also notes, we have to assume that a space-based infrastructure sufficient to begin active asteroid mining will be in place, for it would be needed to build any of the systems we can imagine to make a starship. He cites a thirty-five year timeframe for getting these needed systems operational. Some of the R&D could presumably be underway even as this infrastructure is being built, but we also have to take into account what we know about the destination. Remember, it’s the Earth-like world discovery that sets all this in motion.

The ability to detect not just biosignatures but data of the kind needed for a human mission to an Earth-class planet may take twenty years or more to develop, and I think even that is a highly conservative estimate. For naturally we’re not going to launch a mission unless we have not just a hint of a biosignature but solid data about the world to which we are committing the mission. That might mean instruments like a starshade and perhaps interferometric techniques by a flotilla of observatories to pull up information about the planet’s surface, its continents and seas, and its compatibility with Earth-like life.

I’d say that backs us off at least to the mid-2030s just for the beginning of exoplanet analysis of the destination, after which the planet is declared suitable and mission planning can begin. What technologies, then, might be available to us to begin interstellar R&D for a specific starship mission in, say, 2045, when we may conceivably have such detailed data, aiming at a 2070 departure if all goes well? Andrews doubts that high specific impulse, low power density fusion rockets will be available within the century, if then, and thinks that antimatter, if it ever becomes viable for interstellar propulsion, will follow fusion. That leaves us with a number of interesting alternatives that the paper goes on to analyze.

An Interstellar Point of Departure

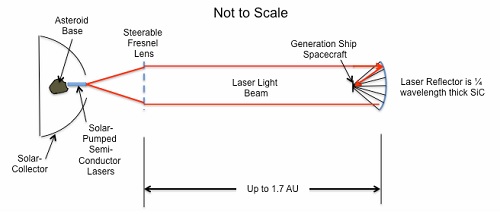

Andrews develops an interesting take on using space-based lasers, working in combination with four-grid ion thrusters using hydrogen propellant and optimized for a boost period of thirty-two days. The specific impulse is 316,000 seconds. The lasers are mounted on a small asteroid (one or two kilometers) and take advantage of a 500-meter Fresnel lens that directs their beam to a laser reflector on the spacecraft. Let me take this directly out of the paper:

An actively steered 500 m diameter Fresnel lens (risk scale 3) directs the beam to the laser reflector on the Generation Ship Spacecraft, where it is focused onto hydrogen-cooled solar panels (risk factor 1), using a light-pressure supported ¼ wave Silicon Carbide reflector (risk factor 2.5). The light conversion panels operate at thousands of volts at multiple suns (risk factor 2) to allow direct-drive of four-grid Ion thrusters using hydrogen propellant and optimized for short life (30 days) and very low weight (risk factor 3). The four-grid thrusters provide a Specific Impulse (Isp) of 316,000 seconds operating at 50,000 volts using hydrogen. The triple point liquid hydrogen propellant is stored in the habitat torus during boost (the crew rides in the landing pods for the duration of the 32 day boost period), so there is no mass for propellant tanks. After acceleration the crew warms up the insulated torus, fills it with air, and moves in.
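The quoted specific impulse and boost period can be turned into rough performance numbers. The sketch below is only a sanity check under my own assumptions: the classical (non-relativistic) rocket equation, a target speed of 2 percent of c, all of the velocity change supplied by hydrogen expelled at the quoted Isp, and a 32-day boost. The function names are hypothetical, and the paper's own mass budget may be built differently.

```python
import math

G0 = 9.80665            # standard gravity, m/s^2
C = 299_792_458.0       # speed of light, m/s
SECONDS_PER_DAY = 86_400.0


def exhaust_velocity(isp_seconds: float) -> float:
    """Effective exhaust velocity in m/s for a given specific impulse."""
    return isp_seconds * G0


def mass_ratio(delta_v: float, v_exhaust: float) -> float:
    """Initial-to-final mass ratio from the classical rocket equation."""
    return math.exp(delta_v / v_exhaust)


def mean_acceleration(delta_v: float, boost_days: float) -> float:
    """Average acceleration in m/s^2 over the boost period."""
    return delta_v / (boost_days * SECONDS_PER_DAY)


# Figures quoted in the text: Isp of 316,000 s, 2 percent of c, 32-day boost.
v_e = exhaust_velocity(316_000.0)
delta_v = 0.02 * C
ratio = mass_ratio(delta_v, v_e)
accel_g = mean_acceleration(delta_v, 32.0) / G0

print(f"exhaust velocity: {v_e / C:.4f} c")
print(f"mass ratio (initial/final): {ratio:.1f}")
print(f"mean boost acceleration: {accel_g:.2f} g")
```

Under these assumptions the exhaust velocity is about 1 percent of c, the mass ratio comes out near 7, and the average boost acceleration is a little over 0.2 g.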

Image: The ‘point of departure’ design with associated infrastructure. Credit: Dana Andrews.

The ‘risk factors’ mentioned above refer to a ranking of relative risk for the development of various technologies that Andrews introduces early in the paper. Low Earth Orbit tourism, for example, ranks as a risk factor of 1, with a development time of 10 years. Faster than light transport ranks as a risk factor of 10, with a development time (if ever) of over 1000 years. Low risk factor elements within the needed time-frame include asteroid-based mining and possible colonies, gigawatt-level beamed power, thorium fluoride nuclear space power and (this is critical for everything that follows) fully closed-cycle biological life support systems.

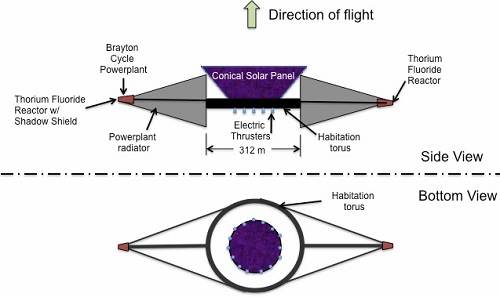

Image: Candidate generation ship configuration. Credit: Dana Andrews.

The habitation torus is assumed to be 312 meters in radius, rotating at two revolutions per minute to provide artificial gravity for the crew. The paper assumes a crew of 250, selected to provide differing skillsets and large enough to prevent problems of inbreeding — remember, we’re talking about a generation ship. The craft uses two thorium fluoride liquid reactors to provide power, acting as breeder reactors to reprocess fuel during the mission. Andrews comments:

The challenge to the spacecraft designer is to include everything needed within the 4000 mT allocated for end of thrust… This design closes but there is very little margin. For instance, there is only 15% replacement air and only 200 kg of survival equipment per person. All generation ships here use the same basic habitat and power systems.
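The torus figures given above (312 meters in radius, two revolutions per minute) can be checked against the usual spin-gravity relation a = ω²r. This is a minimal sketch with hypothetical function names. Taken at face value, the two quoted numbers give roughly 1.4 g at the rim, so the paper may be using a different radius, spin rate or reference deck than the summary suggests; treat the result as a check on the arithmetic, not a statement about the design.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2


def angular_rate(rpm: float) -> float:
    """Convert revolutions per minute to radians per second."""
    return rpm * 2.0 * math.pi / 60.0


def spin_gravity_g(radius_m: float, rpm: float) -> float:
    """Centripetal acceleration at the rim, in units of g."""
    omega = angular_rate(rpm)
    return omega ** 2 * radius_m / G0


def radius_for_one_g(rpm: float) -> float:
    """Radius in meters that gives 1 g at the stated spin rate."""
    return G0 / angular_rate(rpm) ** 2


def rpm_for_one_g(radius_m: float) -> float:
    """Spin rate in rpm that gives 1 g at the stated radius."""
    return math.sqrt(G0 / radius_m) * 60.0 / (2.0 * math.pi)


print(f"312 m at 2 rpm: {spin_gravity_g(312.0, 2.0):.2f} g")
print(f"radius for 1 g at 2 rpm: {radius_for_one_g(2.0):.0f} m")
print(f"spin rate for 1 g at 312 m: {rpm_for_one_g(312.0):.2f} rpm")
```

For comparison, 1 g at two revolutions per minute needs a radius of about 224 meters, and a 312-meter radius reaches 1 g at about 1.7 revolutions per minute.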

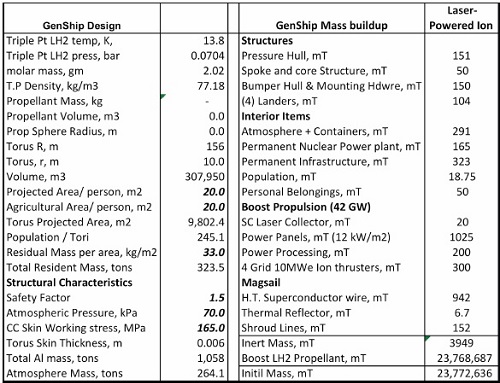

Image: Laser-powered ion propulsion generation ship description. Credit: Dana Andrews.

By Andrews’ calculations, the magsail needed for deceleration takes 73 years to slow the ship from 2 percent of c. It is deployed at approximately 17,000 AU from a star like the Sun, with the goal of entering orbit around the star at 3 AU, after which the magsail can be used to maneuver within the system. Electric sails seem to work for operating inside the stellar wind, but Andrews notes that their mass scales linearly with drag, whereas a magsail’s mass scales as the square root of the drag desired. The magsail is thus the lighter option for this mission, envisioned here as a 6,000-kilometer sail using high-temperature superconductors, a technology the author believes will fit the proposed chronology.
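A few averages follow directly from the deceleration figures above. The sketch below uses only the quoted numbers (2 percent of c, 73 years, 17,000 AU) plus standard constants; the function names are hypothetical and the scaling helpers simply restate the linear and square-root relations mentioned in the text, with an arbitrary reference point.

```python
import math

C = 299_792_458.0            # speed of light, m/s
AU = 1.495978707e11          # astronomical unit, m
YEAR = 365.25 * 86_400.0     # Julian year, s


def mean_deceleration(v0: float, years: float) -> float:
    """Average deceleration in m/s^2 when shedding v0 over the given time."""
    return v0 / (years * YEAR)


def mean_speed_fraction(distance_au: float, years: float) -> float:
    """Average speed over the braking leg, as a fraction of c."""
    return distance_au * AU / (years * YEAR) / C


def uniform_braking_distance_au(v0: float, years: float) -> float:
    """Distance covered if the deceleration were constant (v0 * t / 2)."""
    return 0.5 * v0 * years * YEAR / AU


def electric_sail_mass_scale(drag_factor: float) -> float:
    """Relative mass when drag is multiplied by drag_factor (linear)."""
    return drag_factor


def magsail_mass_scale(drag_factor: float) -> float:
    """Relative mass when drag is multiplied by drag_factor (square root)."""
    return math.sqrt(drag_factor)


v0 = 0.02 * C
print(f"mean deceleration: {mean_deceleration(v0, 73.0):.2e} m/s^2")
print(f"mean speed over 17,000 AU: {mean_speed_fraction(17_000.0, 73.0):.4f} c")
print(f"distance if braking were uniform: "
      f"{uniform_braking_distance_au(v0, 73.0):,.0f} AU")
print(f"4x drag: electric sail mass x{electric_sail_mass_scale(4.0):.0f}, "
      f"magsail mass x{magsail_mass_scale(4.0):.0f}")
```

The average speed over the braking leg works out to well under half the initial speed, and uniform braking would need far more than 17,000 AU, so the quoted figures imply that most of the speed is shed early in the deceleration. That reading is my inference from the arithmetic, not a claim taken from the paper.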

The propulsion methods outlined here are what Andrews calls a “straw man point of departure (PoD) design,” with other near-term propulsion possibilities enumerated. Tomorrow I’ll take a look at these alternatives, all of them relatively low on the paper’s risk scale. The paper is Andrews, “Defining a Near-Term Interstellar Colony Ship,” presented at the IAC’s Toronto meeting and now being submitted to Acta Astronautica.

@NS – I am not clear what makes this so hard to understand. A number of commentators has explained the situation correctly. There would be 2 independent “you’s”. One would stay at home while the other would go traveling. There is no connection between two, other than each shares exactly the same experiences up to the point of replication. Perhaps it is better to forget the concept of “original” and “copy” and just think of each as equal “copies”. Unique continuity of consciousness is broken. I appreciate that is a novel situation in human experience, but that is what would happen.

AFAICS, destructive mind transfer would be like Star Trek beaming, and most people don’t seem to have much problem with that. (For a more interesting issue with that, watch the movie “The Prestige”.) But the moment we change the conditions and allow non-destructive mind transfer, and especially allow the mind to inhabit a different body, there seems to be a major resistance to that concept being possible and a reversion to the objection “but the original ‘me’ hasn’t traveled”. To me it is as if one accepts that the mind is created by the wetware of the brain, but when faced with brain/mind replication reverts to a mind/brain dualism that allows for a “unique soul” that contains the “me”.

Is it easier to accept mind copies if one posits a constantly created multiverse where infinite copies of you are constantly being made but you have no way to communicate with them? Because if you could, the effect would just be the same as mind transfer from any individual’s perspective, and there is no privileged version that is the “original”.

The science fiction story about making copies of a man via teleportation to examine a deadly alien maze on the lunar surface is titled Rogue Moon by author Algis Budrys, published in 1960.

http://www.omphalosbookreviews.com/index.php/reviews/info/190

NS:

I fail to see the contradiction, here. Yes, the replica would have a separate consciousness. Yes, the replica would be a continuation of their original subjective consciousness. As will be the original. Where’s the problem?

Maybe NS is implicitly postulating an indivisibility of the mind, an essence that cannot be duplicated, in principle. That would be the dualist’s point of view, I suppose. Not a notion I can subscribe to.

Alex Tolley, you and I understand. My point is that the vast majority of people discussing this issue (other than on this forum) don’t have the same understanding. Based on what I’ve read they seem to expect a consciousness transfer to a new body, rather than just a mental clone of themselves with their old life going on. They are anticipating something like personal immortality. As I assume you do, I think this is an extremely unlikely (and at best unverifiable) result.

NS:

This is not an either-or alternative, the “rather” is misplaced. As I believe most of us here understand, both can be true at the same time.

Thanks for so many interesting comments. I have to say up front I’m enjoying this debate – and nothing in any of the responses offends me, or makes me angry. I feel strongly about the issues of human rights and that includes the ‘rights of humans’ in a post-human era. I think these need to be recognised and protected, or the development of post-humans is a step back, not a step forward. To really be ‘progress’ post-humans should be able to bring humans with them in terms of advances in society and also developments like interstellar travel – without trampling on the rights of humans to their own beliefs or principles.

But I balance those concerns with the need to make progress. In that sense I agree with Eniac that the technology needs to be developed for the ethical issues to be tackled. That is the way that it has happened with previous scientific leaps – nuclear energy, computers, genetic engineering, etc – and that is the way it will continue in the future. The tricky period is when the technology is new, and open to abuse, until established principles and protocols are in place.

Let me be clear, I have no disagreement with the notion of being post-human. I too am tempted by the prospect of significantly longer or indefinitely longer lifespans through artificial enhancements – the sort of thing that Peter Hamilton talks about so well in his novels. Uploading human consciousness is a more challenging prospect because the discussion is also vague, and I don’t think we are dealing with the philosophical or metaphysical issues at all. But I’m a human writing in 2014 – not a post human observing hundreds of years hence, and I have no idea just how the future will unfold. Time…and science…will tell.

In terms of starships – I guess that deep down I’m old fashioned. I like the idea of physically going in a starship to a nearby star system. I find the concept of being left behind whilst some sort of uploaded avatar or a robot version of me goes, a bit disappointing. I want to see new planets with my own eyes from the observation deck whilst the ship is in low orbit. I want to step on the surface in my own body, kneel down, and pick up a handful of soil. That to me is my preferred vision of interstellar travel. That means fast starships – able to get to an appreciable fraction of light speed. That won’t happen in my lifetime, ironically unless the advocates of post-humans make some fairly radical progress. Catch 22.

@ljk suggests that humans who want to hold onto their beliefs including religion in a post-human era, are somehow akin to primitive tribes relating to modern western civilisation today. I disagree with that comparison, and I see no reason why 23rd Century or 24th Century humans cannot coexist seamlessly with 23rd or 24th Century post-humans. The fact is that humans of the future will still be highly intelligent, educated, tech-savvy, and so what if they don’t have an uploaded consciousness in an android body? To me the choice should be up to the individual – be a post-human, or choose not to be – but as with the principles that we fight so strongly to protect today – equality in race, gender, religion, ethnicity, and sexual preference – the principle of equality in rights and freedoms between human and post human should also be something we fight for. If we don’t then I think we undo all the progress that we have made as a species in past generations. We may be ‘technologically superior’ as post-humans, but are we ‘better’?

@Alex,

You said in an above post to another participant – “I am not clear what makes this so hard to understand. A number of commentators has explained the situation correctly. There would be 2 independent “you’s”. One would stay at home while the other would go traveling.”

That’s the problem – I don’t get to go.

The reason I think this is a problem is because of what you say next – “There is no connection between two, other than each shares exactly the same experiences up to the point of replication. Perhaps it is better to forget the concept of “original” and “copy” and just think of each as equal “copies”. Unique continuity of consciousness is broken. I appreciate that is a novel situation in human experience, but that is what would happen.”

So as soon as replication or upload occurs, that new ‘me’ is a new person with no link to me. So I sit and watch him or her get sent out to another star, and I’m stuck back on Earth. I don’t get to go. I don’t see new worlds, or explore new star systems. I don’t get to experience interstellar travel. I don’t even have any further contact with that individual – I go back to my humdrum life here on Earth and wonder what might have been. If the problems suggested for future societies mount up in coming years, that life is very bleak – think climate change, resource depletion, over population, growth of extremism, increasing gaps between wealthy and poor. The typical dystopian future that science fiction is favouring at the moment.

So basically, I don’t even have the chance to go – I’m denied that because the approach to interstellar travel excludes normal humans. It’s only for post-humans. They get to go to a better world and a better future (we presume), and get to spread through the galaxy. Humans are left behind in a stagnating society (the movie ‘Elysium’ comes to mind).

Hardly seems fair or just. Most people I think would feel the same way, and that sort of divided, and unfair approach would encounter resistance from the outset.

Of course, that future may not be so dystopian. We may have this fantastic future – a techno-utopia, like that portrayed in SF shows such as Star Trek, absent wars, poverty, disease, or natural disasters. But those shows also show normal humans travelling through the universe – admittedly the creators are taking liberties with the physics.

Frankly, I think that the human race should not be seeking to embrace a ‘two speed’ civilisation, and I think all humans who want to go to other worlds should have the chance, irrespective of whether they are post human or human. The alternative sounds very much like the Indian caste system, which is so appallingly medieval and out of touch with modern values.

So that brings me back to my original point. Investing the time, the effort and the knowledge to build fast starships that can take normal humans to other star systems within a reasonable time frame (I’d argue under 30 years for nearby stars) and making that a goal for the next 100-200 years, should be how we do it. Technologically it may be easier just to allow post-humans to go, but it’s not a better option, because as all my posts have emphasised, there is more to interstellar travel than the technology, the science and the engineering. Sometimes the harder road is the better one.

@Malcolm Davis

Consider, you would like to do that…in a volcano or on the ocean floor. These places you cannot go without being surrounded by technology that separates you from that soil. Yet you can do both these things by teleoperating a robot with a tactile sense. Similarly, you cannot do this on any body in the solar system without a spacesuit, or even at all on Venus or any of the gas giants. If you’ve ever put on a current technology spacesuit glove, you know that there is nothing to feel. And yet, place your mind in a machine with sensory feedback and you could in principle feel that soil as though you were on Earth using your bare hands.

Riding a bicycle lets you feel the environment more than driving a car. Snorkeling or SCUBA gets you closer than riding in a submarine. Hang gliding feels closer to flying than being in an aircraft. Immersive VR is better than looking at a screen. I suggest that an artificial body will be better than wearing a spacesuit, and also a lot more versatile. I can imagine in the next decade or two that you will be able to do this for the moon, renting a fully sensored artificial body to explore the moon. The only weirdness will be the 2.5 second feedback delay, so don’t go too fast. Martian astronauts will do the same from orbit or safely buried in a base on Phobos (and they could in principle run a number of machine bodies on the surface, jumping to the viewpoint of each).
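The feedback delay mentioned here is just the round-trip light time. A minimal sketch, using approximate distances that I am supplying as assumptions (a mean Earth-Moon distance of about 384,400 km and a Phobos altitude of roughly 6,000 km above the Martian surface), and ignoring any processing or relay latency:

```python
C_KM_PER_S = 299_792.458  # speed of light, km/s


def round_trip_delay_s(distance_km: float) -> float:
    """Round-trip signal time in seconds over a one-way distance in km."""
    return 2.0 * distance_km / C_KM_PER_S


# Approximate one-way distances in km (assumed values, not from the thread).
EARTH_MOON_KM = 384_400.0
PHOBOS_TO_MARS_SURFACE_KM = 6_000.0

moon_delay = round_trip_delay_s(EARTH_MOON_KM)
phobos_delay = round_trip_delay_s(PHOBOS_TO_MARS_SURFACE_KM)

print(f"Earth to Moon and back: {moon_delay:.2f} s")
print(f"Phobos to Mars surface and back: {phobos_delay * 1000:.0f} ms")
```

The lunar figure lands close to the 2.5 seconds quoted, while an operator on Phobos would see a delay of only a few hundredths of a second.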

If you can appreciate that the above is possible, even likely, it should be clear that we already are rapidly developing the technology that will allow full VR and sensory feedback. Explore the moon from Earth, Mars from Phobos base, Titan from a Saturnian orbit. With mind uploading, one of your mind copies will explore these bodies without your meat body being nearby. If memories can be implanted, the meat body “you” could even experience those moments, as well as the uploaded “you”.

If that makes any sense for exploring the solar system, then it makes far more sense for interstellar travel where time and distance mean that your uploaded mind will most likely not return to share the wonders. Travel would be cheap as only the information content of a mind need travel, and time would not be a constraint as your consciousness would not operate until implanted in a body at the target. Even sweeter, once at the target star, you could move your mind perceptually instantaneously to any other body in the system to explore using the same techniques.

From the point of view of what “works best”, I would suggest that this is going to be the cheapest way to go, thus happening far earlier than big star ships. It will also allow for a far wider variety of target destinations than a human cargo star ship will allow.

Finally, it may well be that mind uploading is still very difficult compared to synthetic machine minds based on the technology we can develop. In which case it will be the machine minds that explore the solar system and then the galaxy, not post humans.

Malcolm Davis said on October 15, 2014 at 21:54:

“@ljk suggests that humans who want to hold onto their beliefs including religion in a post-human era, are somehow akin to primitive tribes relating to modern western civilisation today. I disagree with that comparison, and I see no reason why 23rd Century or 24th Century humans cannot coexist seamlessly with 23rd or 24th Century post-humans. The fact is that humans of the future will still be highly intelligent, educated, tech-savvy, and so what if they don’t have an uploaded consciousness in an android body?”

I want to think that even an advanced post-human who doesn’t look or act like a “baseline” human will still recognize its evolutionary predecessor as a fellow intelligent being and treat it if not as an actual equal then at least as a colleague and certainly not a pet.

But who really knows? Look at our class structure across many current human societies: A few differences in a person, not all of them even physical attributes, and one class often treats another as subhuman. Just look at the caste structure in India, where millions of people are called Untouchables and are virtually slaves and commodities in that society.

Now imagine a post-human who can out think and out perform a baseline human, and may even be physically stronger depending upon what kind of body they may be encased in. How often does one hear that we should look to human history to see how an advanced ETI might treat humans during an encounter, just as Western societies treated the seemingly less sophisticated natives of distant lands centuries ago?

This may not even be a case of one species looking down upon another: Post-humans may just not be able to relate to baselines and vice versa. Current humans have a rather narrowly defined set of abilities when it comes to dealing with things and people outside their rather limited environments. A super smart, super fast post-human who can also survive and thrive in places that will kill a baseline in seconds may have little to say to, or do with, a baseline, simply because of their very different natures. They might even leave Earth to the baselines to explore the rest of the galaxy, being far better able to do so than we who are adapted to one particular planet.

Then Malcolm said:

“We may be ‘technologically superior’ as post-humans, but are we ‘better’?”

Define better. Define ethics. Now apply it to beings who will be virtually alien to current humanity.

@Malcolm Davis

I think you are still not getting it. You appear to be invoking the original “me”. It is just as valid to state that “you” go star traveling and the other copy stays home (too bad). One of the copies goes, but there is no privileged viewpoint that seems to be implied by your statement. Would you be happier if we destructively copied the original so that only the star traveling copy existed (like ST transporters)? If you think that the “original” dies and the copy is a new person that “you” do not inhabit, then I think you are still trapped in dual brain thinking.

I think that this problem is even deeper. Think of the classic idea of replicating the Mona Lisa painting down to the atomic level. People still say they want the “original”, even though both are the same. Making 2 copies and destroying the original seems to break that choice, although the result is exactly the same. There just seems to be this sense that people have that an object that has existed in the world is different in some way from a perfect copy. It is almost like the weird homeopathic idea that water can retain the “memory” of compounds that were once in it, but subsequently removed.

Malcolm:

But, you do.

You leave behind your meat-bound alter ego and go exploring the universe, at light-speed, with any suitable body high technology can come up with. If you do not forget your regular backups, you can do this forever.

True, you also stay behind and envy your newly liberated alter ego, but you can alleviate the pain with the knowledge that that is really you out there, having fun, even if you can’t.

I think you share with NS a certain mind-block that does not allow you to accept that both of these things would happen to you, assuming mind uploading can be done.

Alex Tolley and Eniac, consider it this way:

If there was a harmless way to scan your brain and create a replica of it and your mind (in whatever body) then afterwards you (in the subjective sense, your identity, your “I-ness”) would still be alive in your original body. Your life would go on as before. The replica, however much it resembled you, would be a separate being, with presumably its own sense of identity or “I-ness”.

Now consider the same brain scan process, except that in the end your “original” brain is destroyed. Would you still be (in the above sense) alive? I would say no. The “you” that in the first scenario continued its life is gone. Your replica is alive but it has its own life that the original you does not experience. You have not transferred your essential identity to the replica. You are dead.

ljk said

“The science fiction story about making copies of a man via teleportation to examine a deadly alien maze on the lunar surface is titled Rogue Moon by author Algis Budrys, published in 1960….”

THANK YOU ever so much ljk for tracking that down :D

@NS – please see my comment to Malcolm. I think you are saying the same thing he is, which I disagree with.

If you get in a replicator so that the result is 2 of you, what is the subjective experience of each? One “me” thinks that nothing has happened, while the other “me” thinks that something has happened (travel, star flight, etc). You can change the conditions a little, but the main idea is that there is no need to lock your identity to the “original”.

Another analogy might be the afterlife. Most religions assume that the “soul” migrates so that the continuity of “I-ness” is maintained. But this needn’t be so, it could well be that a mind copy is the one that wakes up in the afterlife. It is the migration that is required because of this difficulty in understanding that copies should work fine. We humans seem to have a lot of difficulty accepting that our consciousness will disappear with death and I wonder if there is a link between this and the difficulty in accepting the idea that consciousness can be duplicated.

There are all sorts of thought experiments that can be done to help visualize this duplication effect and help understand what it is like, but until we can actually do it and even prove it, then I fear we will be forced to replicate this conversation.

I’m with Alex and Eniac here. The Mona Lisa argument holds well here I think. From a quantum mechanical PoV, all electrons, protons etc are identical. There is no difference between this electron and that electron. Swap out a single electron from that pigment molecule with another electron and to all intents and purposes the picture would be the same. Yes we humans ‘feel’ as if there is a difference between the Mona Lisa and a truly identical copy but technically there isn’t.

Replace ‘Mona Lisa’ and ‘pigment’, with ‘brain’ and ‘neuron’ (or connectome, synapse etc). The unique entity that ‘you’ call ‘your consciousness’ (the bit that makes you unlike any other individual and lets us say ‘me’ knowing that there is only one ‘me’ in existence) is an emergent property of the precise arrangement of cells, connectivity, electrical and chemical signals that comprise the brain, in particular the neo-cortex. If we could ignore Heisenberg and copy every last electron in place then that copy would be conscious and it would think it was ‘you’ and it’d be correct. After all, when I reboot my PC running Windows Vista it never changes to running Ubuntu… ever. In fact, thanks to the exact arrangements of charge in all the relevant chips it will come back exactly as before. Make an identical copy with every one and zero in the correct place and I will have two identical PCs… the spreadsheets (for example) on each one will be the same, no difference.

So, make as many identical copies as you like and each one would awaken with the full set of memories, experiences, thoughts and identity that were there in the original at the time of taking the scan, including the memories of undergoing the scan. Each copy awakes ‘knowing’ it was the original. Basically, ‘you’ awaken every time. If two copies converse, they will both be convinced they are the originals and heated arguments would ensue.

It is only our unique consciousness that gets in the way here as we are not evolved to handle the seeming contradiction that there is another ‘me’ because it is so counter-intuitive and hasn’t happened yet. There can be no ‘linked’ communication between the two copies as both are individual brains but they are both ‘you’. The differences set in immediately after awaking/copying as from that point any stimuli would be different for each copy so the two versions of you would diverge more and more, but at the moment of awaking, both would be convinced they were the one copied.

Without downloading memories from one of the copies to the other so the stay-at-home could experience the travelling, maybe the fairest way for Malcolm and his copy would be to draw lots after the copying to see who gets aboard the interstellar mission and who stays. But take solace in knowing that the one who does travel is ‘you’ in every sense and ‘he’ will be happy, in fact, just as happy as the other one had ‘he’ been chosen instead.

@Mark – nicely put.

In regards to the discussion on advanced beings who have not lost their hostile attributes for various reasons, see here:

http://projectrho.com/public_html/rocket/aliens.php#blindsight

@NS

“You have not transferred your essential identity to the replica. You are dead.”

If I may… yet the ‘replica’ will utter more than a sigh of relief when they hear the news that it was the ‘other one’ that just got zapped, and not the (real) one that remembers its childhood dreams of wanting to be the first person ever copied and who also remembers walking into the clinic hours before falling asleep for the copying process. The ‘Alice’ that survives is still the real Alice, 100%.

The implication is that it is wrong to adhere to the concept of ‘essential identity’ once you make a copy, as with two ‘Alices’ there is no essential identity… both Alices are their own respective ‘real’ Alices. Before, there was a unique instance of a person. Then there are two instances of the same person who differ more and more as time goes by but only in terms of any memories and experiences acquired after copying-day… the rest of the brain, memories and identity however are identical and each instance retains its identity. The fact is that if one instance dies off, it’s only one version of recent memories that is lost, and the identity that you would identify with as the ‘original’ always carries on, no matter how many times.

Our problem seems to be coming to terms with the difference between instances of the original being just instances of the same identity, each of which is as real as the original… and having each copy be a different person to that of the original who are trapped in their material bodies and who are all individuals with different minds.

If one version of you dies then the you that you identify with as being you carries on. I think our brains have difficulty accepting the fact that there can be two you’s at the same time. However, as we are discussing this in 2014 I find it unappealing to think that my material body might be the one destroyed after my copy was up and about and talking. Psychologically he would represent another unique individual to my evolution-centred brain and I to him no doubt… but we would still be this identity called ‘me’ and the identity born as me would carry on. But survival instinct would surely kick in once my body’s fate was revealed and this brain’s me knew its time was up. It’s easier on Star Trek when the original is destroyed just before the copy materialises as then there is always just the one instance of you with a continuous flow of experiences through time, just like today. Each instance or copy would have to be classed as unique ‘yous’ with their own diverging memories… and have the rights of individuals so that no ‘unique versions of you’ were lost. If one dies then that version ends its existence, those extra memories are lost (if not backed up first), but all the other yous carry on.

@Mark Zambelli

He’s kinda “out there” to the academic physics community, but I think you’ll enjoy reading up on Nick Herbert’s Quantum Tantra ideology and his speculation of “rapprochement”. I don’t fully understand much of what he’s hypothesizing, but it’s interesting, nonetheless, and seems relevant to the above debate. He similarly argues that a psychic extension of consciousness will be enabled through ‘quantum-mediated telepathy’, whereby Heisenbergian potentia evolves into a merging of mind stuff, unable of currently understood communications between related parties, in a way, becoming unified as a fully realizable subject/object essence–minds conjoined as superimposed matter. (http://southerncrossreview.org/16/herbert.essay.htm)

The refutation of his 1982 paper “FLASH—A superluminal communicator based upon a new kind of quantum measurement” (http://rd.springer.com/article/10.1007%2FBF00729622) led to the discovery of the No-cloning theorem, and he continues to work towards the realization of the possibilities of nonlocal communication and animistic substantiation–

http://arxiv.org/abs/1409.5098

http://quantumtantra.blogspot.com/2014/09/detour-john.html

http://arxiv.org/abs/quant-ph/0205076

http://en.wikipedia.org/wiki/No-cloning_theorem

NS:

Here’s the crux: Separate, yes. Its own, yes. But despite all that, still you in every sense of the word. Not just resembling, but really you. That is practically the definition of a successful mind upload.

In opposition to the No-cloning theorem stands the possibility of time travel, which would be bad news for quantum cryptography, but good news for quantum cloning. The implications are discussed here:

pop description: Time warp: Researchers show possibility of cloning quantum information from the past

http://phys.org/news/2013-12-warp-possibility-cloning-quantum.html

preprint: http://arxiv.org/abs/1306.1795

pop description: Time Travel Simulated by Australian Physicists

http://thespeaker.co/time-travel-simulated-australian-physicists/

article: http://www.nature.com/ncomms/2014/140619/ncomms5145/full/ncomms5145.html

Closed timelike curves would appear to be the white horse of our near-term physical problems, but very unsettling to imagine experiencing a world where macroscopic time warping happens right in front of your eyes and is commonplace.

http://en.wikipedia.org/wiki/Quantum_cloning

“…still you in every sense of the word.”

No, a mental clone of me, no more me than a whole-body clone made from a skin cell would be me. My identity is not based on a certain neurological or genetic pattern, but on being a particular instance of those patterns. That instance maintains its own identity no matter how many times it is copied. Each of the copies is similarly unique no matter how much it resembles the others. And each (including me) experiences an independent life that it cannot escape from other than by death.

The real issue for mind uploading is not whether a replica works (Eniac, Mark and I all agree it would work just fine) but rather if it can be made to work via other substrates. Does a digital simulation (code running on a computer) also work, or is the argument that simulations are inadequate? I tend to think that simulations will work, but I am uncertain this is true.

If we use the spreadsheet analogy, does a spreadsheet coded in another computer language and perhaps running on a different computer (e.g. Mac OSX vs PC Windows) still remain the same spreadsheet in essence? That computers are Turing machines suggests that the substrate and operating mechanism are irrelevant.

If we can encode minds in software and substrates that are not human wetware brains, then the future possibilities for post human beings open up very rapidly. It solves or bypasses many of the issues we discuss regularly about the growth of space civilization. Running a robot on a distant world like Titan just becomes a job for the locally uploaded person to control it. Almost a day job. Machines to make bodies for uploaded minds become key, and uploaded minds in these bodies can stay to build a civilization or simply do tourism or temporary work before returning to Earth. Transport of minds is almost trivial – beaming at light speed to receivers, or very fast transport as tiny, high density information stores. Bodies are made locally, so no transport involved. No life support needed, or perhaps some minimal support to allow some functions that don’t work in vacuum. Individuals would not die, so immortality is possible. Accidents just require rebooting from a stored copy.

It should be obvious that if we cannot do this with human minds, we will be able to do this with artificial ones. They will be direct descendants of the software that we use to help run the Mars rovers – beaming software upgrades and patches as needed. As the AI component increases to make the rovers more autonomous, at some point they will likely become sentient and then the basis of space civilization, just as the early Asimov robot stories intimated.

@NS – Your body replaces the atoms it is made of on a constant basis, so that you are not the same physical being that you were when you were younger. Are you the same person as you were last year, 10 years ago? In essence this is very slow replication. It is the continuity that convinces you that you are the same “I”. Every night you sleep and for a number of periods during sleep, you are completely unconscious. Your “I” boots up just fine each morning. Are you the same person? Is the illusion maintained by having the same body? Anesthesia also robs you of consciousness. Same effect. When you are dreaming, with some brain functions disabled, are you the same person? What if you woke up from unconsciousness and found yourself in a different place. Would you worry that you were a different person? Probably not. But what if you woke up in an apparently different body? Then what? What if that body was orbiting alpha Centauri? What if you were told that you had been replicated?

The slow replacement experiment. Suppose each neuron and its synapses were replaced by a functionally identical artificial neuron. This is done slowly, say over a year. When each artificial neuron is working identically to the real neuron, the real one is destroyed, leaving the artificial one to take over. Are you still the same person? If yes, then your mind has been transferred.

The point of this is to try to indicate that subjective experience is important to understanding the replication issue. It is the subjective “I” that counts, not the substrate. As has been explained by others, the various copies of “I” all think they are the original. They are all you, but disconnected. Just like waking up from sleep, they all think they are the person who went to sleep. How are you going to distinguish between them?

During this discussion I’ve never denied that the replicas would work, only that they would be the same human beings as the ones that provided the templates for them. That said I do question whether human consciousness is a computational process that can run on different substrates or if it’s something that only a human brain (or an artificial equivalent) can produce, but I’d say the jury is out on that. I don’t doubt that we can eventually build semi-autonomous machines for space exploration. I wouldn’t want them to be fully autonomous — the Borg or a Butlerian Jihad would be unpleasant situations to deal with.

ljk: “My identity is not based on a certain neurological or genetic pattern, but on being a particular instance of those patterns.”

Nicely said.

Alex: Instead of a spreadsheet, take a novel. Is it the same whether printed on paper, or downloaded to an iPad? Spoken as an audio book? Made into a movie? Except, probably, for the last one, we would all agree that each of these (physically vastly different) are equally valid copies of the novel. In fact, a novel does not really have an “original”, it is all copies. I count myself among those who think a mind is not unlike a novel, except that, currently, the only physical substrate it exists on is a brain. When we say uploading is possible, what we are saying is that the essence of a mind can be copied onto a different substrate.

The way I see it, such substrates will be as different from a brain as an iPad is different from a stack of paper. I even consider it plausible that the very same iPad can instantiate a mind, given the right software.

NS:

Each one, though, will know the answers to questions that only you could know. Each one, when asked, will insist they are you. Are you calling them liars? Is one of them telling the truth? What if the original used a Star Trek transporter on the way home from the lab? Would he/she be lying, then, too?

@Alex

I would hope all above arguments can be validated as possibilities, but can any of it be accurately labeled as ‘trivial’?–the problem lies in adequately protecting one’s mind(s) across varying transportation channels. The risks involved with transmitting consciousness, due to the nature of the “technology” involved, could become much greater than coming as you are in our current form, especially if someone like Herbert above is onto something when speculating subjective sentience as not limited only to the biological realm of physical systems. Our mind is confined to (and to a certain extent “protected” by) our biological person, and it is limiting, narrow thinking to not just get rid of the confinement altogether and just exist as the pure (or compound) consciousness as (informally) imagined by Herbert, if possible. I believe such a philosophy derives itself from the potential of superposition if subject and object attain traversability between mutable and immutable collapse, which would also prove useful in computational memoization, variantly to biological memorization when and if ego/subjective awareness is lost during such a process–meaning, an artificially conscious/counterfactually definite state may provide compensation in such an event without having to be perfectly reversible in a thermodynamical sense.

If you, for some ‘aesthetic’ reason (other than the “protection” it may provide), like living in confinement (inside a machine or a biological system) or change your mind after the counterfactual objection, then you could simply transmigrate into, or invade a system to host your consciousness (which, hypothetically, should be a possible feat in all objects originating from the source of this and other related universes). Ultimately, such capability enables the most freedom, considering risk. If the host dies, or attempts to somehow thwart your subjugation, you just leave and travel to a new host or animate a new object.

If you want to control multiple minds, simultaneously, it may just make more sense to inflate your single original consciousness, perhaps to 10^133 m^3 and merge with everything, imposing your subjection on the whole universe, right?

@Eniac – I tend to agree with your point about different substrates, but I don’t feel as comfortable with that assertion as I do with perfect replication. I hope this is correct because it should make mind uploading a lot easier.

@Joëlle B – I have read “Elemental Mind” by Herbert but I wasn’t convinced by his arguments. Having said that, I do think that mind should run on different substrates if we accept computations can be Turing complete.

Now whether I could attain God hood, even Stapledon didn’t go that far. However I do think that we could extend our minds greatly, much like our tools do that for us today, but on a vastly larger scale. I’ve read that our brains might have reached growth limits, so new substrates might allow expansion and huge increases in functionality. I think we can already get a sense of that when we immerse ourselves in computational tools that give us new abilities, e.g. basic AI.

Unlike NS, I am convinced that human consciousness is a computational process that could easily run on a computer, maybe even on one no more powerful than the one in your pocket. Like him, though, I am skeptical as to how much of the process that runs in our brains can actually be extracted, in practice. There are really two related issues here: 1) Can we produce an artificial mind at all (AI), and 2) can we make it sufficiently similar to an existing mind to preserve subjective identity (uploading). It is not clear to me if either is possible, or even in which order they might be most easily achieved.

What we may have at first may be an AI that is modeled on an existing personality, but not faithful enough to feel like a continuation of self. Like after a severe brain injury and partial amnesia, but with increased instead of decreased performance. Something that is more like us than our children, but not enough so to be us.

To be fair it was Mark Zambelli above who first used the word “instance” in this discussion, which I borrowed without credit.

To Eniac, no, the copies aren’t lying from their own perspectives unless they have some reason to know they could be (e.g. having memories of being in a different type of body than they currently are). But are you so sure they’ll always think they’re me? What if like the replicants in “Blade Runner” they decide they’ve been the victims of a cruel hoax, implanted with false memories of things they never experienced directly? As for the Star Trek transportee, he wouldn’t be lying because as far as he can tell he is the original. But he’s not. The original is dead and he is a replica.

@Alex

No period of sleep, nor anaesthesia renders you unconscious in a strictly physical sense. The brain still registers stimuli as normal, you just experience those stimuli in different ways, [with the drugs/molecules] tricking your brain to lack sensation. However, memory loss (amnesia) and death can occur in both states (via such external to internal ‘mind’ influence) and would seem to point to a physically, even mathematically manipulative manifestation of consciousness, contra a metaphysical one if we consider the current inability to test what consciousness may be before being alive as a human being or after being dead as one, which I think ‘rapprochement’ aims at making irrelevant.

&@NS

Likewise, for a Turing machine model of the brain, computability may present a problem per the halting theorem and Gödel’s incompleteness theorems in such a situation (cf. Penrose and Hameroff, and Barrow), esp. one in which Automated reduction plays a large role, even those of Higher-order theorem proving. A computer is a completely algorithmic, deterministic, finitely stringed and expressed system, which lacks intuitionistic logic–this is priority #1 for creating a self-aware AI as a human would consider herself as self-aware. [although, uploading human minds as computer processors may remedy this? But why put it in one/as one if you can get it out of its confinement? Why not take it somewhere else and do something else with it?] Although, if the AI (or computerized mind or some other combination) transcends human self-awareness, it is no longer human, but something else–post or evolved. (re: http://arxiv.org/pdf/1409.0813v2.pdf & http://arxiv.org/pdf/1312.4456v1.pdf)

Such a question boils down to: can a computer ascertain mathematical truth?–i.e. How can artificial intelligence compute information beyond mathematical computability (how can it reason) in the face of decision problems [‘Entscheidungsproblem’ cf. Hilbert, Church-Turing]?

Whether or not mathematics is based in intuitionism or formalism is important–(the ultimate objectivity of either is unknowable without some form of craziness like ‘rapprochement’); Alan Turing took a hybridized view with a systemic, logical approach, further elaborated on by the likes of Seth Lloyd, Fotini Markopoulou (for the purpose of reaching unity between classical and quantum systems for the sake of cosmology and computation), and Max Tegmark’s ‘CUH’ (Computable Universe Hypothesis ref: http://arxiv.org/pdf/0704.0646v2.pdf).

Also, Alex, our macromolecular genetic information maintains our physical forms in repair, though it is very probable for our physical organ to completely be damaged and mistakenly repair us in a way that we are no longer recognizable as we were, even causing us to die; think mutations, rapid cell growth/death, and self-invasive diseases, including those detrimental to the brain which may cause one to lose memory or even cause total dissociation from self and stimuli.

Such is why I think if consciousness is separable and navigable, absent of substrata confined to media, redesigning it into a superimposable potentia would probably be the best bet.

“Now whether I could attain God hood, even Stapledon didn’t go that far.”

If our consciousness exists from a mathematical source of an infinite, eternal inflationary universe model, the Godhood you would experience would be more akin to the one of “Architect” in ‘The Matrix’, or some other form of Titanomachy, seeing as how the same eventuality that enabled you to impose your subjection upon all superposed objects would allow for the eventual, final collapse of your superimposed compound state at the hand of an immutated consciousness, proceeding to measure you [self-locate] into an observable eigenvalue [classical state]. Additionally, a time travelling mind could arbitrarily delete you through P-CTCs (make you into a blank state), and/or a priori, a posterior manifest as clones through D-CTCs (this could also inversely be used as a means to inflate your ego across spacetime, possibly?). In such a universe, all information has already been computed, therefore paradox doesn’t exist–only eventual evolution and the final state of the: Ascendant–the unique inverse relation state between creator (objective mathematical source subjector) and created (mathematical source interacting with subjected spacetime [codomain]) on an identity map. @Eniac & NS; The beginning (or self-evident realization) of a rapproched clone war.

You will have become a participant in a sort of Samudra manthan, prime-program cycle to one-up the infinity of selves, self-(re)locating and expropriating subjection!

http://en.wikipedia.org/wiki/Cosmological_interpretation_of_quantum_mechanics

http://en.wikipedia.org/wiki/Samudra_manthan

http://en.wikipedia.org/wiki/Identity_function

http://matrix.wikia.com/wiki/Prime_Program

NS:

True, in a physical sense. In practice, though, nothing was lost, so it would be inappropriate to speak of death. The replica is the original for any practical purpose. Most importantly, the replica will feel to be the same person subjectively, and make that claim with full conviction.

When it comes to identity of the mind, I believe that subjective experience is the most authoritative answer, before “practical purposes”, and much before the strict physical notion of sameness you seem to be applying.

Moving from the Star Trek transporter thought experiment to more realistic uploading/AI, we lose any pretense of physical “sameness”. Concerning the mind, however, I think the exact same reasoning above applies. If the replica claims to be the same person with true conviction, that claim must be accepted on its own authority. Similarly to Descartes’ cogito, ergo sum, which postulates that one’s mind exists, on its own authority.

The transporter is just a special-case replicator that kills the original and produces one copy at a time. It’s certainly better for the replica in the transporter scenario, because the one person who might dispute the replica’s claim is dead, and there aren’t any other replicas to bother with either.

A modified version of the scenario may illustrate the true situation. Dr. X comes to me and says “NS, we need a brave [!] guy like you to test our new transporter. It’ll dissolve you and then re-materialize you in a different location, and won’t hurt a bit. Step in here.” I do what he says, he closes the door, and a minute later I hear a voice saying “It worked! He’s right where we wanted him to be”. The door opens and Dr. X stares at me in surprise. “Hmm, I guess the dissolve process doesn’t work, but for any practical purpose the replica is you, and he’ll back up my story. We don’t need you anymore.” My last memory is Dr. X pointing a gun at my head.

There’s no essential difference between this scenario and the one Eniac proposed. The fact that Dr. X murdered me because the transporter didn’t is irrelevant. That a replica of me is still alive somewhere does me no good at all. I’ve lost everything.

@Eniac & NS

Where are you guys getting the information about the Star Trek transporter killing you to make a newer version? The science behind the transporter involved the Heisenberg compensator, which used the exact material (albeit energized into a pattern buffered ‘matter stream’) of the single subject (and in some instances its immediate surroundings) being relocated from one point in space to another, via a subspace domain channel, protected and preserved inside an ‘annular confinement beam’. http://en.memory-alpha.org/wiki/Transporter

You [it] are the same stuff you were before you were transported, with or without sentience and whether alive or dead. Therefore, if Dr. X x’d you out, that’s tick-tack-toe!

Now, if you guys are talking about the Replicator, the technology used for that was similar to the transporter, but it wouldn’t necessitate any replacement (or even interaction) of the original you, since it could take basically any matter and (re)materialize it into whatever it had been programmed to replicate. http://en.memory-alpha.org/wiki/Replicator

@Eniac said on October 19, 2014 at 21:09

“If the replica claims to be the same person with true conviction, that claim must be accepted on its own authority. Similarly to Descartes’ cogito, ergo sum, which postulates that one’s mind exists, on its own authority.”

In “reality”, that philosophy may or may not hold depending on what a mind is. If a mind can be objectified, then that mind certainly does exist on its own authority; but if, and to what degree another objectified subject influences that mind can make that postulation shaky.

[JUST A FICTITIOUS EXAMPLE]

Ex.) Subjective Objection: When you were born, your mother named you Eniac. By your mother’s authority you are Eniac: a human, American national. Later in life, you develop multiple personality (disorder); you are not the Eniac of your mother’s authority, but ‘Dick’, ‘Tom’ and ‘Pamela’–even your association with Eniac has dissolved; the three other persons have completely rejected any notion of your previous self.

Not only that, but Dick, Tom and Pamela are baboons. Thus, not only the nominative authority of your mother has been usurped, but also (subjectively) the “genetic” authority which asserts your humanity.

Your mother, being unable to protect herself from these three personalities who have usurped her authority and exert Papioninic behavior, institutionalizes you in a local hospital.

There, those observing you proceed to administer varying drugs and therapy to recover any latent sensibilities as subjected to ‘orderly’ or ‘accepted’ human social behavior.

The treatment works to a certain degree–Eniac is recovered, but still believes himself to be a baboon, even unto death. But Dick, Tom and Pamela are gone.

Whose authority brought Eniac back? Was it Eniac’s preternatural human mind on its own authority–or a combination of differing authorities acting synergistically?

Could it not be argued that the mother, observers, drugs, baboons, and Eniac were all acting as one mind?

More importantly, how would mathematics attempt to semantically compute the above situation? Would we have to ignore ethical implications, as well?

Joelle brings up split personality disorders (DID, dissociative identity disorder), where one personality can split into two or more, each an independent “mind”, sharing a common body. To me, this is further evidence that “engineering” of the mind may not be as hard as most of us think. That the mind is quite malleable and tractable, and that AI may arise from psychology as it graduates to an engineering science, rather than from neuro- or computer science.

It is an integral feature of the human mind that it incorporates within it dozens, if not hundreds, of little sub-minds, each of them modeling a relative, friend, boss, subordinate, celebrity, or other person that is important to us. We use these models to divine what others are thinking, and to help us manipulate them to act in our favor. It can be argued that this is indeed the most important function of the mind, that the perception and manipulation of the non-human environment is trivial in comparison. Autism can be seen as a lack of this critical human ability.

It is my (naive, as a non-psychologist) assumption that DID can result if one of these models becomes oversized, starting to rival the containing mind, and in extreme cases taking over control of the body, relegating the original person to sub-mind status.

As I recall, in the original Star Trek TV series the transporter worked by changing matter into energy, “beaming” the energy to the destination, and then changing the energy back into matter in precisely the same pattern as it originally was.

However, in one of the early stories or novelizations Scotty disputes that explanation. He says that turning that much matter into energy would cause a huge explosion, and that the transporter actually works by scanning the original, recreating the exact same pattern at the destination, and then dissolving the original. This is more or less the version of it that Eniac and I were discussing. IIRC one of the characters in the story objects that this means a person undergoing the transporter process would die, which everybody in the story sort of shrugs off but which I think is what would actually happen.

@Joëlle B I don’t think we have to go that far. In real life we do have split brain individuals (the only connection between hemispheres is cut to prevent total epileptic seizures). While externally we appear to still observe a single mind, in reality we must have 2 minds, only interacting through the motor system, if at all. What happens if we just transport the mind in one hemisphere, destructively or non-destructively?

Regarding your example, we know minds change, potentially quite significantly with “mental illness” and can return. Multiple personality disorder is an extreme case, although lesser problems such as bi-polar disorder can elicit quite strong personality changes. Other physical brain damage, e.g. strokes, can also alter a mind (cf. Jill Bolte Taylor’s description of her recovery from a stroke). Comatose patients may have no sign of brain cortical activity, yet can recover in some cases, which suggests to me a consciousness reboot. In all these examples, there are issues of what is meant by the conscious “I”, where might it reside, what the subjective response is in these cases and whether “rebooting” can occur in different bodies and substrates.

If we take the split brain example, transplanting each 1/2 into different bodies, what is the experience of each half and does each part contain a part of the original “I”? If it does, then we can imagine reducing the brain to ever smaller pieces and moving them to another body. Or copying them and moving them. Or copying and randomly moving half the copies, and so on.

With transcranial magnetic stimulation, we might even be able to temporarily disable parts of the brain to test ideas of what consciousness and identity is, both subjectively and from an observer’s viewpoint.

Wow, turn my back for five minutes… ;)

@Joëlle B… thanks for all those links. I’ll give them a peruse for sure. It’ll be interesting to see what the links pertaining to Herbert say.

@NS… no credit needed but thank you anyway :D

@ljk… thanks for the link about “very advanced technology is indistinguishable from nature”. I’ve always had a problem with seeing the Kardashev scale as anything other than just a loose guide… and that link goes some way to suggesting why we see no evidence of any type IIIs in the (admittedly small) region we can probe.

After NS’s hypothetical Dr. X, I thought I’d provide a link to John Weldon’s “To Be” animation. Sorry if you’ve seen it but it’s perfect for our discussion here… https://www.youtube.com/watch?v=pdxucpPq6Lc&app=desktop .

Joëlle, I think that while the specifics may differ, the mention of Star Trek transporters here seems to still be relevant. While Kirk’s ‘matter’ is disassembled and then sent through the emitters to the destination for reassembly, for the several seconds between, Kirk is most definitely destroyed and isn’t alive (going further, in the episode ‘Relics’, Scotty (or rather Scotty’s pattern) has been cycling in the buffer for 80 years. I think it’s fair to say Scotty was not actually alive for those decades, only that his pattern remained coherent enough for reconstitution).

The analogy still stands I hope. The replicators are to my mind (and NS’s) specialized transporters that use stored patterns rather than using on-the-fly patterns (the matter can be provided via E=mc^2 from surplus energy). Maybe it would be better to bundle ST transporters into the same category as Niven’s ‘teleportation booths’, Brundle’s ‘teleport pods’ from ‘The Fly’ or even Dahl’s ‘Wonka-vision’ as these rely on information-sending rather than ST’s ‘matter stream’. BUT, even in the ST world there is the episode ‘Second Chances’ (I mentioned previously) where a second, redundant confinement beam is used to boost Riker’s ‘pattern’… it gets reflected to the surface where a second instance of Riker materializes only to spend the next 8 years thinking the beam-out failed after all. Thought provoking.

I can’t agree with NS though on the subject of our consciousness being incapable of copying/uploading as I’m still in the camp that thinks our minds are an emergent feature of our brains (connections and electrical polarisations etc) and should therefore be uploadable. I think that our brains can be simulated and that an ideal process would lead to ‘minds’ that are indistinguishable from the biological original in the important areas. I admit this is uncharted territory as yet, so all opinions are valid.

As for the ‘spreadsheet’ being the same on different substrates… hmmm. The information content should be identical but can it be if it’s a different OS? Maybe those type of differences lead to subtle changes that might manifest in an uploaded mind as some change to the personality itself?

(Sorry, I used a backslash by mistake after the first italicised ‘nature’ above so the rest of my spiel should’ve been in normal format, oops)

The question raised about personality disorders puzzles me somewhat but only when I can’t see past that term disorder. Would this be akin to just a malfunction in the hardware and if so, would it ever be possible to retrieve the original Eniac? (Sorry Eniac). If trauma or illness changes the brain slightly so that it leads to depression, say, yet doesn’t have a major impact on the personality and has no impact at all on identity, then this may be telling us something about how tenacious this mental projection we call ‘us’ really is. That it continues essentially unchanged despite subtle differences in the hardware / activity I find profound. Up the damage and we see drastic changes to the personality, as was the case for my grandad after his stroke. The hardware can still generate a personality of sorts but clearly different to what emerged before the damage. In theory, I think it would be possible to repair the damage perfectly and recover the original, though by perfectly I mean restore every neuron and its number of connections to the previous ‘map’. If new pathways regrow by themselves then the original map will change and the personality that is generated will change with it.

If the personality disorder is caused by an imbalance in brain chemistry as in depression or anxiety then drug therapy can, and does, restore the personality to what it was before. My quandary then lies in how much damage or change to the hardware the brain can suffer and still be able to generate the ‘you’ that was there originally.

I have a ridiculous thought-experiment. Sum up the number of neurons in a human brain and factor in the total number of connections possible (oversimplifying here, sorry), e.g. let’s say ten per neuron. Now imagine a brain for every possible combination. Does each brain generate a unique personality or do the brains that differ by only a few(?) connections generate the same personality? If the connections between neurons generate memories then lots of brains will have the same memories with only small differences here and there and I dare say these brains will essentially be generating the same identity/personality. While there may no longer be much space left in our Hubble Volume, does this imply there are, and only ever can be, a finite number of human personalities? Creating more brains that are different involves changing the number of neurons… increase (or decrease) the neurons and you no longer have a modern-day human brain. Extreme changes should generate minds that we would perceive as alien. Our imaginations would seem to be disconnected from actual physical patterns of neurons and rely on electrical activity passing through connected areas… the same group of neurons therefore generating a seemingly dizzying array of imaginings with some of these thoughts being laid down as connections or strengthened pathways that we would call a memory to be accessed later and thus adding to our ‘identities’.

It seems perfectly reasonable to be able to simulate the complexity of a human brain and once the complexity reaches critical then human consciousness should materialise. This will have to come in stages of research with simpler simulations providing aspects of us, eg senses, spatial awareness etc first and building to the whole mind later. Bring in AI and the advances there and I can imagine the evolution of an artificial human mind coming from a concerted effort where these two fields meet.

Fun.

That example with Scotty and Kirk would not contradict the scientific continuity within the Star Trek universe or my comment, since even the inventor of the transporter technology refutes the idea you are arguing during a similar incident, in which Quinn Erickson’s pattern has previously been suspended in subspace after a failed ‘subquantum’ transporter experiment. What I deduce from the information combined [original research absent of canon explanation], is that the subspace domain acts as a medium for a sort of quantum creation process; no matter how many ‘yous’ are created, the physical laws of the Star Trek Universe allow for a unique path integral formulation enabling a type of rapprochement as envisioned above in my reply to Alex. Also, again, whether you are alive or dead doesn’t matter.

And the Second Chances episode is interesting, due to the distortion field from the planet interacting with the second containment beam being reflected from the interference–this, however, does not affect which Riker is the original (as both of them are), though at the end of the episode, one Riker nominatively subjects authority over the differing personality, becoming two distinct persons: Thomas; William.

Hypothetically, in any case, each instance should be second quantizable and invertible, if the physical laws permit it. :)

@Eniac & Alex

I agree with those notions, although the arguments above would suggest to me that the “I”, wherever it may exist, without the influence of whatever enables it after being confined to whatever substrate, would imply an unpredictable animation of the end result. If you beam your own mind, or a copy of it somewhere else, your mind may secede into a preternatural state, collecting an entirely different evolution of experiences, even foreign to that in which you had before you were aware of any identity of ‘self’. I would hope that it’s possible, but NS may be right.

Our mind may be an emergent feature of our brain, but who you are is an emergent feature of your environment and those other authorities, or minds influencing and shaping your perceptions. A newborn human child has basic emergent properties of a mind, but such a mind has been designed (or specialized) over billions of years to react in a subjective manner, as Eniac above has argued. What is the progenitorial mind of this ‘hive mind’?–I think that is a more important question to answer in finding out really how tractable the concept really is.

There are arguments for and against how malleable the brain is, often labeled as “plasticity”, which I believe was touched on in an earlier CD post referencing a paper on theory of mind, indicating mysterious, or yet to be understood results from a historical perspective of biochemist Dr. Donald Forsdyke: https://centauri-dreams.org/?p=30837&cpage=1#comment-119087

I am also done commenting on this post for now, it seems to be going on a fast track to speculative oblivion.

One final observation in reply to Mark,

I personally don’t think it’s ethically sound to impose your will on another person simply because they are seemingly out of sync with the rest of society, unless they voluntarily seek help; and even then the help must be administered on said person’s own terms, which institutions often fail to prove reliable in that regard. Malfunction is too harsh of a word and would limit the rich forms of creativity that people with varying personalities are capable of. To me, an AI with an infinite amount of personalities would be an interesting venture to analyze.

If you want to be a baboon and genuinely believe you are, I don’t believe anyone has the right to try and change your mind unless you allow them to, Mark. People have the right to defend themselves if a person’s behavior endangers them, but to restrict someone’s freedoms after they have sought help is counterintuitive and disgusting, to me. The question can also be asked, what role does mental diversity play in evolution? As the Tegmark paper on friendly AI suggests, how much do we gain or lose by formalizing certain aspects of ourselves?

Mark Zambelli:

Yes, certainly, in the same sense as there can ever only be a finite number of different books, all eventually typed by enough monkeys on enough typewriters in a large enough room. It is the very large size of this number that makes it practically indistinguishable from infinity.
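To put rough numbers on "finite but practically indistinguishable from infinity", here is a minimal Python sketch of the thought experiment. It assumes a commonly quoted figure of about 86 billion neurons, takes Mark's oversimplified ten connections per neuron, and counts only wiring diagrams (each neuron picks ten distinct targets among the others). The book length and alphabet size are likewise hypothetical illustration values, not anything from the discussion. Because the counts themselves are far too large to store, the code works with their base-10 logarithms, i.e. roughly the number of digits needed to write them down.

```python
import math

# Assumed figures for illustration only.
NEURONS = 86_000_000_000      # commonly quoted estimate for a human brain
LINKS_PER_NEURON = 10         # Mark's deliberately oversimplified value
BOOK_CHARACTERS = 500_000     # hypothetical book length
ALPHABET_SIZE = 27            # hypothetical: 26 letters plus a space


def log10_binomial(n: int, k: int) -> float:
    """Return log10 of 'n choose k' without building the huge number."""
    numerator = sum(math.log10(n - i) for i in range(k))
    return numerator - math.log10(math.factorial(k))


def log10_wiring_diagrams(neurons: int, links: int) -> float:
    """log10 of the number of ways every neuron can pick `links` targets."""
    return neurons * log10_binomial(neurons - 1, links)


def log10_books(characters: int, alphabet: int) -> float:
    """log10 of the number of distinct texts of a fixed length."""
    return characters * math.log10(alphabet)


if __name__ == "__main__":
    brains = log10_wiring_diagrams(NEURONS, LINKS_PER_NEURON)
    books = log10_books(BOOK_CHARACTERS, ALPHABET_SIZE)
    print(f"possible wiring diagrams: about 10^{brains:.3g}")
    print(f"possible books:           about 10^{books:.3g}")
```

Under these assumptions the number of possible books has several hundred thousand digits, while the number of wiring diagrams has trillions of digits. Both are finite, and how many distinct personalities that second number corresponds to is exactly the open question raised above.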

Mark Zambelli makes an excellent case about the malleability of the mind and the conceptual problems we are already having with the definition of the mind’s I given mental disorders and brain injury. Uploading will create some more such problems, but it is not really a gigantic leap as some would have it. Also, of course, as I have said many times, this resilience of the mind makes it clear that, unlike air travel, uploading will not have to be near 100% perfect to be practical.

Thanks Joëlle regarding your Star Trek comments… the amount of thought that goes into some of the ‘science’ in the many versions of the show is one of the reasons I have always been a follower of the show (do the terms ‘trekkie’ or ‘trekker’ still have meaning as I suppose I’m one of them?).

And I generally agree with you on the grey-area mental health issues you raised above. It is an abhorrent thought that we would ever impose any ‘treatments’ on someone who is capable of asking for help but chooses not to, and the bone-of-contention for me would only lie in the area where that person’s safety, or more importantly the safety of others, is concerned… then we rely on comparing that individual’s mental situation against some ‘standard’ that has settled in place over the development of our societies (I know that is fraught with danger and susceptible to abuse). I agree that we have probable cause for lamenting the possible losses over the years from instances of medicine reducing the diversity of creativity by ‘curing’ (essentially modifying someone’s mind to be closer to a socially acceptable ‘norm’) patients. When the health issues are very extreme then we have to consider safety….?

As for babies having identities, I doubt it. The newborn brain is very much incomplete with a furious amount of connections and growth occurring during the first four years or so. Various psychological tests (e.g. the mirror test) show that self awareness doesn’t kick in until about the second year and a ‘theory of mind’ doesn’t happen until about year five. I think that the newborn brain is primed for becoming a human but that essential quality of Identity or ‘me-ness’ isn’t there at the start but emerges in short order with increasing complexity and then evolves through nurture and experience-of-existence to shape the person involved. Maybe that is the way towards AI; allow any neural-nets (or whatever) to parallel our own development and see if we can ‘grow’ an AI that becomes much more than a sum of its parts.

I also agree that the discussion is winding down and will yield any floor left but I wanted to mention some musings on the ‘spreadsheet’ example above. Provided the data content in both versions of the same spreadsheet remains identical despite them being on different machines/OSs/code then doesn’t this (oversimplified example) imply that two different substrates, eg brain and computer sim, or two differently coded sims, can generate the same information content and might be applicable to having a duplicated personality running in two different mediums?

Fascinating.

Hi All

Alex Tolley said:

Your body replaces the atoms it is made of on a constant basis, so that you are not the same physical being that you were when you were younger. Are you the same person as you were last year, 10 years ago?

This isn’t true for large parts of the brain. Carbon-dating recently allowed anatomists to age different parts of the body – the neocortical neurons range from almost as old as the person’s calendar age to the end of neurogenesis at about age 2. After that the neurons are stable against all the to-and-fro of molecular activity. The hippocampus – the buffer for short-term memory becoming long-term memory – does gain new neurons, but it’s hard to argue that it’s the source of personal identity. It might even be replaceable by prostheses, as at least one team is attempting to develop. The only other part which shows post-infancy neurogenesis is the olfactory bulb. The rest of the brain is “neurostable” – what changes are the neuroglia and the degree of connection between neurons.

In light of such data, I’d be very reluctant to imagine that neurons in the brain can be replaced willy-nilly with no effect on personal identity.

@Mark

The emergent properties of a baby’s (human) mind include its development; my comment was meant to be congruent with Eniac’s idea of the subjective integration of varying “divined” personalities and ejective incorporation of environment and genetics. “Me”, for humanity, doesn’t include a single object; so, where should we look to find objectivity of mindfulness if it is a conglomeration of so many different awarenesses, experiences and observers?

@Adam

“After that the neurons are stable against all the to-and-fro of molecular activity.”

What do you mean by the above statement?–it would appear to be a slight misrepresentation of the research, if you are referring to http://www.pnas.org/content/103/33/12564.long & http://www.sciencedirect.com/science/article/pii/S0092867413005333 ?

They can be stable, naturally and during the aging process [if nothing is happening at the <1% error margin discussed in the paper], but there's definitely room for technical artifacts to influence or change that stability (i.e. neurogenesis, protection and modulation induced via the CB system), as well as naturally occurring disruptions (i.e. neurodegenerative disease vs the former). As Alex mentioned, transcranial magnetic stimulation could delve deeper into what exactly is happening here, since destabilization [damage] of these parts of the brain is associated with anterograde amnesia, semantic dementia and Alzheimer's, all conditions which can affect 'identity' and who you were 10 years before neurodegeneration began.

But still, while you may not mentally be the person you were before, regaining ‘yourself’ may not be out of the question. Therefore in light of such data, I’d just assume we need a lot more data, before we throw in the towel.

I also wouldn’t be surprised if other receptors have modulating, protective or regulatory effects, yet to be discovered, that could help give us insight into manipulating neurogenesis and hopefully fight disease.