Our speculations about advanced civilizations invariably invoke Nikolai Kardashev’s scale, on which a Type III civilization is the most advanced, using the energy output of its entire galaxy. Given the age of our universe, a Type III has seemingly had time to emerge somewhere, yet a recent extensive survey shows no signs of one. All of this leads Keith Cooper to consider possible reasons for the absence, including societies that use their energies in ways other than we are imagining and cultures whose greatest interest is less in stars than in their galaxy’s black holes. Keith is an old friend of Centauri Dreams, and he and I have conducted published dialogues on interstellar issues in the past (look for these to begin again). A freelance science journalist and contributing editor to Astronomy Now, Keith offers ideas in the essay below that help to illuminate the new forms of SETI now emerging as we try to puzzle out the enigma of Kardashev Type III.

By Keith Cooper

It’s not often that SETI turns up a result that can be considered far-reaching, but the initial results from the Glimpsing Heat from Alien Technologies (G-HAT, or ‘Ĝ’ for short) survey, which Paul wrote about in April (see G-HAT: Searching for Kardashev Type III), fit the bill. Using publicly available data from NASA’s Wide-field Infrared Survey Explorer (WISE), astronomers have searched 100,000 galaxies for anomalous infrared emission that could indicate heat emitted by vast energy collectors, and their consumers, encircling myriad stars.

The idea is that as a civilisation grows more technologically advanced, its hunger for energy increases. Civilisations could build Dyson spheres to capture all the energy from their star; as they spread to other stars, they may build Dyson spheres around them too. After perhaps a few million years, they would have spread amongst all the stars in their galaxy, building Dyson spheres around every one of them. The Dyson spheres warm up and re-radiate the energy they collect as waste heat at mid-infrared wavelengths. Consequently, a galaxy that has been completely filled with intelligent, technological life should look dramatically different, its light shifted away from the optical and towards the infrared.
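Why the mid-infrared? Wien’s displacement law gives the wavelength at which a blackbody of temperature T radiates most strongly. The sketch below compares the Sun with a Dyson shell that is assumed, for illustration only, to run at roughly room temperature (300 K); real designs could be hotter or cooler.

```python
# Wien's displacement law: a blackbody at temperature T peaks at b / T.
WIEN_B_M_K = 2.897771955e-3  # Wien's displacement constant, metre-kelvins


def peak_wavelength_microns(temp_k: float) -> float:
    """Return the peak emission wavelength (in microns) of a blackbody at temp_k."""
    return WIEN_B_M_K / temp_k * 1e6


# The Sun's photosphere (~5772 K) versus an assumed ~300 K Dyson shell.
for label, temp in [("Sun", 5772.0), ("Dyson shell (assumed 300 K)", 300.0)]:
    print(f"{label}: peak near {peak_wavelength_microns(temp):.2f} microns")
```

Starlight peaking at about half a micron is re-emitted near 10 microns, which is why a waste-heat search looks in the mid-infrared.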

Yet the search of 100,000 galaxies has not turned up a single galaxy with the signature of a civilisation harvesting the energy of an entire galaxy of stars. Such a civilisation would be a Kardashev Type III, referring to the scale developed by Soviet astrophysicist Nikolai Kardashev to measure a civilisation’s energy usage. He based his scale on the Milky Way, so a Type III civilisation resident in our own Galaxy would have a total output of 10^36 watts; an analogous civilisation in another galaxy might have a higher or lower energy output, because galaxies differ in their numbers of stars, but for the purpose of this article we’ll describe them as Type III too.

Going down the scale, there are Type II civilisations, which harness the energy of a single star; for the Sun this would be about 10^26 watts, though for other stars it will vary. A Type I civilisation, meanwhile, is able to collect all the energy available to it on its home planet, which for Earth is about 10^16 watts. Carl Sagan further developed the scale, adding gradations between the types. Human civilisation comes in at just 0.7 on the Kardashev–Sagan scale.
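Sagan’s gradations come from a logarithmic interpolation, K = (log10 P − 6) / 10 with P in watts, which reproduces the benchmark powers above. The figure used for present-day humanity (about 10^13 W) is an order-of-magnitude assumption in this sketch.

```python
import math


def kardashev_rating(power_watts: float) -> float:
    """Sagan's interpolation of the Kardashev scale: K = (log10 P - 6) / 10, P in watts."""
    return (math.log10(power_watts) - 6) / 10


# The benchmark powers quoted in the text map onto Types I, II and III.
for label, power in [("Type I", 1e16), ("Type II", 1e26), ("Type III", 1e36)]:
    print(label, round(kardashev_rating(power), 2))

# Assumption: present-day humanity uses on the order of 1e13 W.
print("Humanity", round(kardashev_rating(1e13), 2))
```

Because the scale is logarithmic, each whole step is a factor of ten billion in power.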

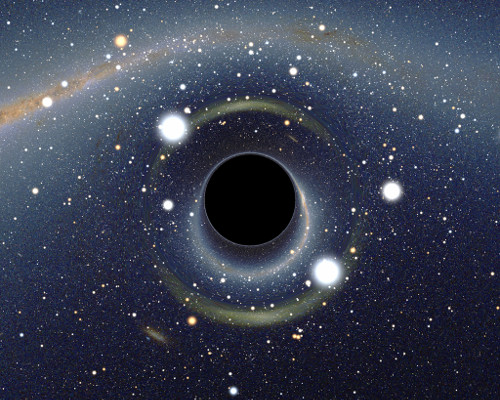

Image: A Kardashev Type III civilization would be able to exploit the energy of all the stars in its galaxy.

The point of all this is that the G-HAT result throws a spanner in the works by finding no Type III civilisations anywhere. It demands that we look again at the Kardashev scale and the assumptions that it makes.

Indeed, at first glance it may seem like bad news for SETI. After all, the Universe is very old, as are the galaxies that inhabit it. There should have been plenty of time for a civilisation, or more than one civilisation, to colonise and collect the energy from every star they come across in their galaxy, so why haven’t they?

There are a couple of reasons why the apparent absence of Type III civilisations might not be bad news for SETI. First, although there may be no Type III civilisations out there, Jason Wright of Penn State University, who founded the G-HAT project, says we shouldn’t yet discount civilisations below that level.

“This search would have only found the most extreme case of advanced civilisation, one that had spread throughout its entire galaxy and was capturing and harnessing one hundred percent of the starlight for its own purpose,” he told me when asked about G-HAT’s findings. “Kardashev 3.0 is the most extreme possible case, but there could still be a Kardashev 2.9, where only ten percent of the starlight is being used, or 2.8 where only one percent of the starlight is being used. So we’ve ruled out 3.0, but we’ve not even gotten down to 2.9 yet, much less something smaller like 2.5, that could be very hard [to detect].”
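Wright’s tenths of a type follow from the logarithmic form of Sagan’s scale. Writing the power in use as a fraction $f$ of the $10^{36}$ W taken here as the Type III benchmark (a sketch using the article’s round numbers):

$$
K = \frac{\log_{10} P - 6}{10}, \qquad P = f \times 10^{36}\,\mathrm{W}
\;\;\Longrightarrow\;\;
K = 3 + \frac{\log_{10} f}{10}
$$

So $f = 0.1$ gives $K = 2.9$, $f = 0.01$ gives $K = 2.8$, and $K = 2.5$ corresponds to $f = 10^{-5}$: each tenth of a step down the scale means ten times less starlight being harnessed.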

So far, the G-HAT analysis has found no galaxies with an infrared emission signature suggesting that more than 85 percent of the starlight is being converted into thermal radiation. Fifty galaxies in the survey did stand out as having more than 50 percent of their starlight transformed into infrared emission, and follow-up work on these is the next step. To confuse matters, however, natural phenomena can mimic this infrared emission, chiefly interstellar dust. Starburst galaxies, which are experiencing an intense bout of star formation, produce substantial amounts of dust. This dust absorbs starlight, heats up, and re-emits at mid-infrared wavelengths. The fifty galaxies with high infrared emission are quite possibly starburst galaxies (one of them, Arp 220, certainly is).

Image: Messier 82 (top of image), seen here with the spiral Messier 81, is a starburst galaxy, meaning it is currently forming stars at an exceptionally high rate. This huge burst of activity was caused by its close encounter with Messier 81, whose gravitational influence caused gas near the center of Messier 82 to rapidly compress. This compression triggered an explosion of star formation, concentrated near the core. The intense radiation from all of the newly formed massive stars creates a galactic “superwind” that is blowing massive amounts of gas and dust out perpendicular to the plane of the galaxy. This ejected material (seen as the orange/yellow areas extending up and down) is made mostly of polycyclic aromatic hydrocarbons, which are common products of combustion here on Earth. Messier 82 is nicknamed the Cigar Galaxy, so this material can literally be thought of as the smoke from the cigar. Credit: NASA/JPL-Caltech/UCLA.

However, the analysis has not yet looked at galaxy type. “That would be an excellent next step, to separate out the galaxies that have a lot of dust and which we would expect to be giving out a lot of heat, from the ones that have hardly any dust and shouldn’t be giving out any mid-infrared radiation at all,” says Wright. He is referring here in particular to largely dust-free elliptical galaxies: if one were found to have infrared emission that was modest compared with a starburst galaxy but high for an elliptical, it might signal something unusual.

It would seem then that there could still be life in these galaxies, life that could be technological, star-faring and energy consuming – we’ve barely scratched the surface. And yet, one pertinent question still remains unanswered: where are all the Type III civilisations?

The G-HAT results tell us that Type III civilisations do not exist (or, at best, have a frequency of less than one Type III civilisation per 100,000 galaxies). This is why I suggested at the top of this article that this result is far-reaching – we now know something that we didn’t know before, namely that civilisations do not seem to reach Type III status. This, though, is the second reason why the result is not necessarily bad for SETI. Think of it this way: the Kardashev scale has become part of the SETI furniture since it was first proposed in 1964. The G-HAT result forces us to question our assumptions about the Kardashev scale and broaden our thinking about extraterrestrial civilisations to encompass other ideas.
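Zero detections set an upper limit on how common Type III civilisations can be, rather than pinning their frequency at exactly zero. A minimal sketch, assuming detections follow Poisson statistics and that the survey would have caught a galaxy-spanning Type III in every galaxy it examined (an idealisation), gives the statistician’s “rule of three”:

```python
import math


def zero_detection_upper_limit(n_surveyed: int, confidence: float = 0.95) -> float:
    """Upper limit on the per-galaxy frequency after 0 detections in n_surveyed galaxies.

    For a Poisson process the chance of seeing nothing when mu events are
    expected is exp(-mu); solving exp(-mu) = 1 - confidence gives the limit on mu.
    Assumes every Type III in the sample would have been detected.
    """
    mu_limit = -math.log(1 - confidence)
    return mu_limit / n_surveyed


limit = zero_detection_upper_limit(100_000)
print(f"95% upper limit: about {limit * 100_000:.1f} per 100,000 galaxies")
```

At 95 percent confidence the data allow up to roughly three Type III civilisations per 100,000 galaxies, which is still vanishingly rare.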

Of course, any model has assumptions inherent in it. So let’s assume that technological extraterrestrial civilisations do exist in the Universe and that they are far older than we are (dictated by the fact that the Universe is very old, and there has been plenty of time for civilisations to have gotten well ahead of us before there was even life on Earth); these seem fairly safe assumptions for this kind of discussion. Somewhere along the line they are falling off the Kardashev trajectory. Why?

I want to flag up three possibilities. They may not be the only possibilities. We’ll discount for now the notion that civilisations could destroy themselves – once they become interstellar the task of destroying themselves becomes inordinately more difficult, so for our purposes we’ll assume they at least reach the stage of interstellar flight. On what alternate trajectories away from the Type III destination could their evolution take them?

1. They fail to colonise all the stars

This hypothesis would to an extent fit with the G-HAT observations – extraterrestrial civilisations haven’t built Dyson spheres around 100 percent of the stars in any of 100,000 galaxies, but the result leaves room for them to have done so around a smaller percentage of stars. Perhaps the best reasoning as to why an advanced civilisation possessing the ability for interstellar travel would fail to colonise an entire galaxy is Geoffrey Landis’ percolation theory.

Landis makes the assumption that interstellar travel is short haul only. We might be able to make direct flights to alpha Centauri or epsilon Eridani, but anything much beyond that, moving at just a small fraction of the speed of light – let’s say between 5 and 10 percent – is going to take far too long. So instead, civilisations will hop across the cosmos via the stepping stones of the colonies they set up along the way. For example, imagine three worldships leaving the Solar System for pastures new: let’s say alpha Centauri, epsilon Eridani and Barnard’s Star, all of which are relatively nearby. They set up colonies there, begin building Dyson spheres and perhaps, after a few centuries, those colonies are ready to send out their own pilgrims to new stars further afield, which then found new colonies and, after a few centuries, they too head out on voyages of colonisation, and so on. Over the millennia, humankind’s reach gradually telescopes outwards.
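A back-of-the-envelope check shows why, at 5 to 10 percent of light speed, hops have to stay short: even the nearest targets take decades to centuries. Distances are approximate, and the sketch ignores acceleration, deceleration and relativistic effects (all small at these speeds).

```python
# Approximate distances from the Sun, in light years.
DISTANCES_LY = {
    "Alpha Centauri": 4.37,
    "Barnard's Star": 5.96,
    "Epsilon Eridani": 10.5,
}


def cruise_years(distance_ly: float, fraction_of_c: float) -> float:
    """Travel time at constant speed, ignoring acceleration, deceleration and
    relativistic effects (which are small below about 10% of light speed)."""
    return distance_ly / fraction_of_c


for star, d in DISTANCES_LY.items():
    slow, fast = cruise_years(d, 0.05), cruise_years(d, 0.10)
    print(f"{star}: {fast:.0f} to {slow:.0f} years")
```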

What Landis realised was that not all colonies will seed daughter colonies. The drive to go further will not exist in every colony; cut off from their mother world, Earth, by time and space, they build their own cultures and their own histories, and face their own, perhaps unique, challenges. Some will be content not to explore further. Others may destroy themselves, or exhaust their resources before they can build a Dyson sphere. In some cases, there may be no worlds in nearby systems suitable for colonisation. The consequence of any of these possibilities is that some colonies will become dead ends and will fail to colonise further.

To model this, Landis assigns each newly colonised system a probability of going on to found colonies of its own. If that probability is above a critical value (the percolation threshold), colonisation can keep spreading; if it is below the threshold, every lineage of colonies eventually stalls. Eventually, all colonies may result in dead ends, ultimately limiting the extent to which that species colonises the galaxy it exists in. Even if there is one line of colonisation that does continue for a time, there will be voids all around it, left empty by the dead-end colonies. A civilisation would struggle to reach Type III status in this fashion.
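A toy version of the percolation idea can be run on a grid. This is a minimal sketch, not Landis’s own model: it uses a two-dimensional square grid of star systems rather than a realistic three-dimensional distribution of stars, and the function name and parameters are illustrative. Every system reached by colonists is settled; with probability p the new colony goes on to send ships to its four neighbours, otherwise it is a dead end.

```python
import random
from collections import deque
from typing import Optional


def colonise(n: int, p: float, seed: Optional[int] = None) -> int:
    """Toy percolation model of galactic colonisation on an n x n grid of star systems.

    The home system sits at the centre and always sends out ships. Every system
    reached by a colony ship is settled; with probability p the new colony later
    sends ships to its four neighbours, otherwise it is a dead end.
    Returns the number of settled systems.
    """
    rng = random.Random(seed)
    home = (n // 2, n // 2)
    settled = {home}
    expanding = deque([home])  # colonies that will go on to found daughters
    while expanding:
        x, y = expanding.popleft()
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nx, ny = x + dx, y + dy
            if 0 <= nx < n and 0 <= ny < n and (nx, ny) not in settled:
                settled.add((nx, ny))
                if rng.random() < p:  # this colony goes on to colonise
                    expanding.append((nx, ny))
    return len(settled)


for p in (0.3, 0.5, 0.7):
    print(f"p = {p}: {colonise(101, p, seed=42)} of {101 * 101} systems settled")
```

Runs with p well below about 0.59 (the site-percolation threshold for a square grid) typically stall after settling a small patch, while runs well above it usually spread across much of the grid yet still leave pockets of unsettled systems behind the dead-end colonies.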

Landis’ percolation theory is not without its critics. Robin Hanson of the University of California, Berkeley, points to economics and argues that the only way to survive would be to keep up with a colonising wave because the wave would consume all the resources, leaving little of value behind it, a kind of ‘burning of the cosmic commons’ as Hanson describes it. Jason Wright is also critical, arguing that the proper motion of stars would eventually allow active colonies to spread to other stars. For what it’s worth, Landis agrees that the percolation model is not without its problems.

Landis counters that the motion of stars is slow, at least compared to the lifetimes of civilisations in human history, although Wright points out that all a colony then has to do is wait for one of its neighbours to die off before moving in. Landis is unperturbed by the critics, however.
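To put rough numbers on stellar drift, assume (for illustration only) that neighbouring stars move relative to one another at about 20 km/s, a typical order of magnitude in the solar neighbourhood. The sketch estimates how long that motion takes to shift a star by a few light years.

```python
KM_PER_LIGHT_YEAR = 9.4607e12
SECONDS_PER_YEAR = 3.156e7


def drift_years(distance_ly: float, relative_speed_km_s: float) -> float:
    """Years for a star moving at relative_speed_km_s to cover distance_ly."""
    return distance_ly * KM_PER_LIGHT_YEAR / relative_speed_km_s / SECONDS_PER_YEAR


# Assumed relative speed of 20 km/s; the 5 ly distance is illustrative.
print(f"{drift_years(5.0, 20.0):,.0f} years")
```

Tens of thousands of years is long compared with recorded human history, which is Landis’s point, but very short compared with the billions of years the Galaxy has existed, which is Wright’s.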

“A lot of people have commented saying they don’t think it is a sophisticated enough model and that they think it needs more work, and that’s fair,” he told me during an interview in 2013. “I just worry that a model that has too much sophistication into which you are putting data that has no validation is hard to really justify.”

Perhaps, then, percolation theory as it stands isn’t the best solution, but it may be a good starting point for considering alternative ways in which civilisations could migrate through a galaxy.

2. Their energy requirements are low

Another alternative may be that they never really begin to climb the Kardashev ladder at all, which could lead to two outcomes.

Serbian astrophysicist Milan Ćirković has described civilisations that are driven by optimisation rather than expansion. The optimisation is focused primarily on computation (Jason Wright suspects that Type II and Type III civilisations would use large amounts of their energy for computing, which produces heat). An optimised society would not need to colonise other stars and capture their energy, because it would lack the population or computing demands that would otherwise soak up vast amounts of energy.

“An optimised society is intrinsically less likely to be observed because most of the things that we tend to associate with advanced technology and advanced societies actually consist of waste energy and the waste of resources,” Ćirković, referring to the Kardashev scale, told me in an interview around five years ago.

An optimised society need not be limited to one planetary system – they may still wish to explore, sending out probes to all corners of their galaxy, but colonising star systems to harvest their energy and resources is not on their list of ‘to do’ things. Rather than building galactic empires, optimised civilisations could be like the ancient Greek city states, which would send out scouts just to explore, says Ćirković.

Jason Wright acknowledges that a galaxy-spanning civilisation need not be a Type III civilisation; it could still be possible to colonise a galaxy without having to build Dyson spheres around every star. Such galaxy-spanning civilisations could be very hard to detect. However, if an advanced civilisation has had time to colonise a galaxy, why would they not build all those Dyson spheres? The distances involved would mean that colonies, or clusters of close colonies, would develop their own societies relatively independently of the others. Some may choose to become optimised, others may be expansionist and energy-hungry, but the result would be a galaxy-spanning civilisation that does not use all the energy of that galaxy.

3. Black holes are more interesting

I confess, I’m rather taken with this idea. It could still be wrong, but it strikes me as being more purposeful than percolating slowly and somewhat randomly through a galaxy, and more ambitious than an optimised city state.

Suppose Kardashev is right, and Milan Ćirković is wrong, and that civilisations actively seek energy. So let’s imagine that a civilisation reaches Type II status, after which it heads for the stars, perhaps even building Dyson spheres around some of them. Estimates suggest that there could be as many as 100 million stellar mass black holes in our Galaxy. Some of them remain dark, while a few are lit up in X-ray binary systems, feeding off a companion star. Sooner or later a star-faring civilisation is going to bump into a black hole. What then?

Image: Simulated view of a black hole in front of the Large Magellanic Cloud. The ratio between the black hole Schwarzschild radius and the observer distance to it is 1:9. Of note is the gravitational lensing effect known as an Einstein ring, which produces a set of two fairly bright and large but highly distorted images of the Cloud as compared to its actual angular size. Credit: Alain r (Own work) [CC BY-SA 2.5], via Wikimedia Commons.

Black holes seem to hold a special fascination for physicists: they create the most extreme gravitational conditions in the Universe, making them a great place for thought experiments. Numerous physicists including John Wheeler, Roger Penrose, William Unruh and Princeton’s Adam Brown have all speculated on methods by which, in principle, it might be possible to draw energy from a black hole. And my, so much energy! Paul Davies in his book The Eerie Silence suggests that a spinning black hole could power our present human levels of energy consumption for at least a trillion trillion years, long after the stars have gone out.

There are numerous options for deriving energy from black holes. Hawking radiation is not the best option, because it leaks out at a trickle, has a very low temperature and is difficult to bottle. Small black holes, which evaporate relatively quickly, would be more efficient for this, but they would not last long. Hawking radiation would make the perfect waste disposal system, though: drop your rubbish into the black hole, wait a little while and get energy back out as Hawking radiation.
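How low is “very low temperature”? The standard Hawking temperature of a non-rotating black hole, T = ħc³/(8πGMk_B), is inversely proportional to its mass. The two masses below are illustrative choices.

```python
import math

# Physical constants (SI units, CODATA values).
HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 2.99792458e8         # speed of light, m/s
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23       # Boltzmann constant, J/K
M_SUN = 1.989e30         # solar mass, kg


def hawking_temperature(mass_kg: float) -> float:
    """Hawking temperature T = hbar c^3 / (8 pi G M k_B) of a non-rotating black hole."""
    return HBAR * C**3 / (8 * math.pi * G * mass_kg * K_B)


print(f"1 solar mass: {hawking_temperature(M_SUN):.1e} K")
print(f"1e12 kg:      {hawking_temperature(1e12):.1e} K")
```

A stellar-mass black hole is tens of millions of times colder than the 2.7 K cosmic microwave background, so today it absorbs more radiation than it emits; only far smaller holes are hot enough to be useful, and they evaporate correspondingly faster.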

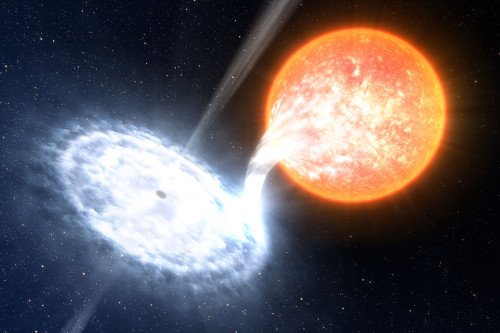

Then there is the energy radiated by the hot plasma in an accretion disc around a black hole, which is often funneled away in a magnetically collimated jet. This could be created artificially – perhaps by sending a steady stream of asteroids and comets, perhaps even planets and stars themselves using Shkadov thrusters (giant mirrors larger than a star, which act as immense solar sails, the mirror’s huge gravity pulling the star along with it) to nudge the star towards the black hole. Alternatively, there are instances in nature whereby a star naturally exists next to a black hole – the aforementioned X-ray binaries (though in many X-ray binaries the compact object is a neutron star rather than a black hole). Jason Wright suggests that the energy efficiency of such a system would be 10 percent, making it the most efficient sustainable method of converting mass to energy.
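The appeal of accretion shows up in energy per kilogram, E = ηmc². This sketch takes η ≈ 0.007 for fusing hydrogen into helium (a standard textbook figure) and the 10 percent accretion efficiency quoted above.

```python
C = 2.99792458e8  # speed of light, m/s


def energy_per_kg(efficiency: float) -> float:
    """Energy released (joules) per kilogram processed at a given mass-to-energy efficiency."""
    return efficiency * C**2


fusion = energy_per_kg(0.007)    # hydrogen -> helium fusion, ~0.7% of rest mass
accretion = energy_per_kg(0.10)  # accretion onto a black hole, as quoted above
print(f"fusion:    {fusion:.1e} J/kg")
print(f"accretion: {accretion:.1e} J/kg ({accretion / fusion:.0f}x fusion)")
```

Kilogram for kilogram, feeding a black hole releases roughly fourteen times more energy than burning the same hydrogen in a star.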

Then there is the rotational energy of a spinning black hole. To illustrate the concept, in their book Gravitation, Charles Misner, Kip Thorne and John Wheeler imagined a kind of cosmic dump truck swooping down through a black hole’s ergosphere – a region just outside a rotating black hole where an observer is forced to rotate with the black hole, but from which it is still possible to escape, taking energy from the black hole along with it. The dump trucks, each packing a million tonnes of rubbish, take a particular trajectory through the ergosphere and tip out their industrial waste into the black hole. The dump trucks recoil from the ejection of the rubbish and are catapulted back the way they came, stealing away some of the black hole’s rotational energy in the process. Because the rubbish is dropped onto a trajectory that carries negative energy as measured by a distant observer, swallowing it actually lowers the black hole’s mass-energy, so the truck can leave with more energy than it started with; the difference is paid for out of the hole’s spin. To put this in terms of the amount of energy available, up to 29 percent of the mass of the black hole is expressed in terms of its rotational energy, according to Paul Davies – this is leagues above the one percent of a star’s mass that is radiated away over a stellar lifetime.
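The 29 percent figure can be recovered from the irreducible mass of a rotating (Kerr) black hole. For dimensionless spin a (0 for no rotation, 1 for the maximum), the fraction of mass-energy that can in principle be extracted is 1 − √((1 + √(1 − a²))/2). The sketch simply evaluates that standard formula for a few spins.

```python
import math


def extractable_fraction(spin: float) -> float:
    """Fraction of a Kerr black hole's mass-energy stored as extractable rotational energy.

    Uses M_irr / M = sqrt((1 + sqrt(1 - a^2)) / 2), with 0 <= a <= 1.
    """
    if not 0.0 <= spin <= 1.0:
        raise ValueError("spin must lie between 0 and 1")
    irreducible = math.sqrt((1 + math.sqrt(1 - spin**2)) / 2)
    return 1 - irreducible


for a in (0.0, 0.5, 0.9, 1.0):
    print(f"a = {a}: {extractable_fraction(a):.1%}")
```

Only a maximally spinning hole reaches about 29 percent; one spinning at half the maximum rate stores about 3 percent.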

Image: Artist’s impression of a black hole and a normal star separated by a few million kilometres. That’s less than 10 percent of the distance between Mercury and our Sun. Because the two objects are so close to each other, a stream of matter spills from the normal star toward the black hole and forms a disc of hot gas around it. As matter collides in this so-called accretion disc, it heats up to millions of degrees. Near the black hole, intense magnetic fields in the disc accelerate some of this hot gas into tight jets that flow in opposite directions away from the black hole. Credit: ESO/L. Calçada.

The difference between collecting energy from stars and latching onto black holes is that you can do more with black holes than simply generating power, and it is these extra factors that could make them more attractive than colonising the stars. For one, black holes could potentially make the most powerful computers in the Universe. A computer’s computational power is a function of both its computational efficiency and its mass. Black holes have great mass, but computational efficiency? That would take a bit of organising. The trick is to use Hawking radiation, which is formed of pairs of virtual particles that appear close to the black hole’s event horizon.
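One way to make “computational power scales with mass” concrete is the bound Seth Lloyd used in his analysis of the ultimate limits of computation: by the Margolus–Levitin theorem, a system with energy E can perform at most 2E/(πħ) elementary operations per second. Taking E = mc² gives a ceiling per kilogram. This is an idealised upper limit, not necessarily the scheme the essay has in mind.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J s
C = 2.99792458e8        # speed of light, m/s
M_SUN = 1.989e30        # solar mass, kg


def max_ops_per_second(mass_kg: float) -> float:
    """Margolus-Levitin ceiling on operations per second for energy E = m c^2."""
    energy = mass_kg * C**2
    return 2 * energy / (math.pi * HBAR)


print(f"1 kg:         {max_ops_per_second(1.0):.1e} ops/s")
print(f"1 solar mass: {max_ops_per_second(M_SUN):.1e} ops/s")
```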

For each pair, one particle heads inwards towards the black hole’s singularity, while the other quantum tunnels its way through the event horizon and escapes. However, both particles are forever connected via quantum entanglement. Now, send matter into the black hole – perhaps the waste on the dump trucks – in a specific fashion to ‘program’ the black hole, and it will interact with the infalling Hawking radiation particles. This carefully fine-tuned interaction will then change the state of the outgoing Hawking radiation particle via entanglement, producing an ‘output’. Of course, all the Hawking radiation would have to be gathered, sorted through for the relevant bits of data and processed using knowledge of quantum gravity, a theory that remains stubbornly beyond our reach for the time being.

Then there is the possibility, proposed by Sir Roger Penrose, that black holes are the birth-sites of new universes; an advanced civilisation may choose somehow to enter one of these universes through a black hole, thereby disappearing from our Universe.

Pressing black holes into service could be within reach of an advanced civilisation; black holes provide astoundingly attractive destinations for intelligence. Clément Vidal, in his book The Beginning and the End, points out that there is a surprising over-abundance of X-ray binaries within three or four light years of the galactic centre. Could these be advanced civilisations, clustered around their stellar-mass black holes, migrating towards the supermassive black hole at the centre of our Milky Way galaxy?

Perhaps. The stars still have their attraction, but as the G-HAT result shows, we need to start looking for alternatives to Type III civilisations. These are just three ideas – your own ideas may well be better!

If you look at the distance between black holes (~1000 ly), they are quite rare. If you calculated the mass of material available for hydrogen/helium burning within a cube a thousand light years on a side, there would be much more energy available, even taking into consideration the inefficiency of the fusion process compared with the gravitational approach. The fusion process may not be efficient for energy, but we get heavier elements, allowing much more useful materials to be made. In my opinion a neutron star would be better than a black hole: dump hydrogen/helium onto its surface and flash.

Aliens in control of the region around a black hole or neutron star for that matter would have an incredible weapon and transport system able to reach between stars and even galaxies.

I think some of the commenters who speak of civilizations plateauing at some energy far below a Type III or even Type II civilization are underestimating how easy expansion of energy production would become for a civilization that had developed “Von Neumann machines”, i.e. self-replicating robots (or self-replicating robot factory complexes). Once that’s possible, you can send just a single one of them to some location with all the needed natural resources (a planet, moon, or asteroid belt) and their numbers will grow exponentially without any further effort on your part, so that within not too long your civilization can have a vast number of robots ready to work on any massive project you like (like converting some significant fraction of the matter in the solar system into a Dyson swarm, constructing massive space-based lasers to push along a fleet of sail-based interstellar probes, etc.).
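The exponential-growth point is easy to quantify: a population that doubles each generation needs only log2(N) generations to grow from one machine to N. The one-year doubling time below is a hypothetical figure chosen purely for illustration.

```python
import math


def doublings_needed(target_count: float) -> int:
    """Generations of doubling needed for one replicator to reach target_count copies."""
    return math.ceil(math.log2(target_count))


DOUBLING_TIME_YEARS = 1.0  # hypothetical replication time
n = doublings_needed(1e12)
print(f"{n} doublings, about {n * DOUBLING_TIME_YEARS:.0f} years")
```

Forty generations turn a single seed into a trillion machines, which is why self-replication changes the economics of megaprojects.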

As for the black hole answer to why we don’t see evidence of other civilizations, I think there’s a flaw in that–if black holes were so valuable in terms of energy or computation or unknown quantum gravity effects, wouldn’t an interstellar civilization with self-replicating machinery want to convert a lot of the stars in the galaxy into black holes, perhaps by using Shkadov thrusters (another large-scale engineering project that would become a lot easier with self-replicating machines) to crash together multiple stars at a single place and time? Why would they settle for only the black holes already in existence, which make up a relatively small proportion of the mass of all the bodies in the Milky Way (not counting interstellar dust/gas and dark matter)?

I still think the simplest answer is just that no technological civilizations have arisen in our past light cone. Lest this seems unlikely given all the exoplanets we’ve seen, just consider the point emphasized by the authors of “Rare Earth”: if there are a bunch of independent unlikely factors in a planetary system that are each needed to have a planet suitable for the long-term evolution of multicellular life (and the authors give many plausible candidates for such factors), then the probabilities must be multiplied, which can quickly lead to multicellular life being astronomically unlikely even if the individual probabilities aren’t that low. For example, just 8 factors which each have an individual probability of 1 in 1000 of occurring in a given star system would lead to a total probability of 1 in 10^24, and there are only thought to be about 10^22 – 10^24 stars in the observable universe. The same goes for the possibility of multiple “hard steps” in the evolution of intelligent life as suggested by Robin Hanson, where each step is somewhat unlikely even if all the previous steps have occurred already (candidates could be the origin of life, the evolution of complex error-correcting systems in genetic replication, the evolution of eukaryote-like cells with more permeable membranes, the evolution of sexual reproduction, the evolution of multicellular life forms with a body plan that will later allow them to grow to large size on land, and finally the evolution of intelligent life).
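The comment’s arithmetic is a product of independent probabilities. This sketch reproduces it; the eight factors at 1 in 1000 each are the commenter’s illustrative values, not measured ones.

```python
import math

factor_probabilities = [1e-3] * 8  # eight independent factors, 1 in 1000 each (illustrative)
p_all = math.prod(factor_probabilities)
print(f"chance per star system: {p_all:.0e}")

# Expected number of suitable systems for the quoted range of star counts.
for n_stars in (1e22, 1e24):
    print(f"{n_stars:.0e} stars -> {n_stars * p_all:.2g} expected systems")
```

Even across the whole observable universe, the expected count comes out between about 0.01 and 1.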

Let’s talk about an analogy with the ocean. Algae think “We can spread all over, and fill the ocean, blocking out the Sun.” Except they don’t, since the oceans are dominated by multicellular life – fish eat the algae, keeping the oceans clear of too many algae (but not eating all of them, or the fish would die off).

Maybe the oceans were choked with rafts of algae billions of years ago, before multicellular predators evolved. Then fish came along, and the new order of clear oceans came about.

What if 10 or 11 billion years ago, the first intelligent species filled their galaxies with Dyson spheres. Then a couple of things happened:

1.) Type 2 civilizations proved to be mortal, and not invulnerable, and too close to the “hardscrabble resource wall” to last more than a few tens of thousands of years.

2.) Due to the concentrated energy and information in the Dysons…predators evolved. Some arose from failing Dyson civilizations heading into dark ages and falling back to collections of longer-lasting Type 1 civilizations on a few of the star’s remaining planets. They sent out raiders…

Or parasites. Something that fed on (or raided, like the Barbarians sacking Rome) the Dysons, and kept them in check.

3.) Other replicators, including new waves of primitive ones launched by primitive civilizations like us, got sucked into the developing galactic ecologies (see Robert Charles Wilson’s Spin series, and also “Burning Paradise”), that influenced the evolution of galaxies in subtle ways.

4.) Part of a healthy galactic ecology is new civilizations evolving as stale billion year old ones die off. That’s why we have been left (seemingly) alone, and allowed to develop.

5.) The “Fish” that nibble on the “Algae Blooms” of Dysons might be hard to detect, so they can sneak up on the Dysons when they’re hungry. They usually leave smaller civs alone, the better to let them grow into bigger, tastier Type II’s.

The question of how much chance, and how much natural progression, is the key divide between the “life everywhere” and “life nowhere” camps. If the life everywhere crowd is right, we should know within a decade or so. We’ve established that life was possible for an extended time on Mars. I guess that’s why NASA’s chief scientist has gone out on a limb and predicted we will find it. If it’s everywhere it should be there.

@Larry Kennedy: The problem is, does anyone who proposes to resolve the Fermi paradox by saying there are no other civilizations in our galaxy (and perhaps none in other observable galaxies) actually advocate the idea of “life nowhere”? The authors of Rare Earth, Ward and Brownlee, propose that single-celled, bacteria-like life might be very common throughout the universe, but complex, multicellular life (of the kind needed to evolve an intelligent technological species) astronomically rare, arguing that the sort of long-term stable environment needed for the latter might require all sorts of unlikely elements in the planet and planetary system where life arises. Likewise, Robin Hanson’s argument about multiple “hard steps” in the evolution of intelligent life is totally compatible with the idea that the origin of life is not one of the hard steps–as he says in this paper, “If Earth’s biosphere had to make several hard transitions before creating intelligent life, then the biosphere’s first transition would have had to occur early in Earth’s history, regardless of how hard or easy that first transition was. That is, regardless of how hard it is to originate life, we should expect life to have appeared early relative to when intelligent life appeared”.

Hanson’s paper is worth reading; he points to an interesting consequence of the hypothesis of multiple “hard steps” (a consequence originally noted by Brandon Carter)–for statistical reasons, it seems that even if the probabilities of hard steps are fairly different from one another, we should expect that on any planets where all the steps were successfully achieved, they are likely to have been achieved at approximately equal time-intervals (see the discussion of lock-picking starting on p. 4). And this leads to a prediction of sorts (also originally made by Brandon Carter)–whatever the length T of these intervals, we should expect the last step to typically be achieved within about T of the time when the planet becomes uninhabitable (for complex life at least). It’s plausible that the two most recent hard steps would be the evolution of intelligent life and the origin of multicellular life (or perhaps the origin of a particular group of multicellular animals with a body plan that made them pre-adapted to achieving large sizes on land, which is true of the chordates but arguably not true of any other animal phyla), giving a time interval T of around 500 – 800 million years. And, as it turns out, the window for complex life has been predicted to close in about that time (for reasons having nothing to do with the ‘hard steps’ argument), despite the fact that the Earth will be around much longer, due to a process in which the increasing brightness of the Sun increases the rate of a rock weathering process that traps atmospheric carbon dioxide in rocks. Models indicate this will cause a long-term decrease in atmospheric CO2 that will make photosynthesis for multicellular plants impossible in between 500 million and 1 billion years. This is discussed for example in this paper, and Ward and Brownlee’s book The Life and Death of Planet Earth says on p. 109 that other researchers have come to the same conclusion.
So this is an interesting case when one of the “predictions” of the theory of multiple hard steps in the evolution of intelligent life is independently predicted by other models, and it’s a prediction which might have seemed unlikely on first glance, given that the Earth and Sun will last another 5 billion years or so.
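Carter’s equal-spacing result can be checked with a quick Monte Carlo sketch. The modelling choices here are assumptions for illustration, not Hanson’s or Carter’s exact formulation: each hard step is an exponential waiting time whose mean is much longer than the habitable window, the steps happen in sequence, and we keep only the runs (“planets”) where every step finishes inside the window. The function name `conditional_step_durations` and the chosen means are hypothetical.

```python
import random


def conditional_step_durations(mean_times, window, trials=300_000, seed=1):
    """Average duration of each hard step on planets where all steps finished in time.

    Assumed model: step i is an exponential waiting time with mean mean_times[i],
    and the steps happen one after another. Only runs whose total time is below
    `window` (the habitable lifetime) are kept. Returns (averages, successes),
    where averages has one entry per step plus a final entry for the time left
    over between the last step and the end of the window.
    """
    rng = random.Random(seed)
    n = len(mean_times)
    totals = [0.0] * (n + 1)
    successes = 0
    for _ in range(trials):
        durations = [rng.expovariate(1.0 / tau) for tau in mean_times]
        elapsed = sum(durations)
        if elapsed < window:  # every step completed: a planet with observers
            successes += 1
            for i, d in enumerate(durations):
                totals[i] += d
            totals[n] += window - elapsed  # time remaining after the last step
    if successes == 0:
        raise ValueError("no successful runs; increase trials")
    return [t / successes for t in totals], successes


# Two hard steps whose expected times differ tenfold (4 and 40 windows).
averages, successes = conditional_step_durations([4.0, 40.0], window=1.0)
print(successes, [round(a, 2) for a in averages])
```

Despite the tenfold difference in difficulty, both step durations and the leftover time should each come out near one third of the window, which is the pattern the argument predicts: the last step lands roughly one interval before the window closes.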

@Jesse M.

Yes, I think this is actually a fairly widespread concept.

To me, the most convincing is the realization that most of the proposed “hard steps” in evolution really aren’t. For example, multicellular life evolved independently, many times. Not a hard step, then, it can’t be. Evolution has a way of overcoming “hard steps” like rising water overcomes dams.

There is only one plausible, really hard step: The origin of life. Before life, there was no evolution to push things along, only random chance. Hanson’s argument, which you cite: “If Earth’s biosphere had to make several hard transitions before creating intelligent life, then the biosphere’s first transition would have had to occur early in Earth’s history, regardless of how hard or easy that first transition was” just clinches the deal, as it negates the often heard argument: “abiogenesis must be easy because it appeared so early”. As Hanson says, abiogenesis, no matter how hard, has to have occurred early, simply to allow evolution enough time to get where we are. Therefore, it happening early says absolutely nothing about how hard it is.

So, to me, the most likely step to be so incredibly hard as to exclude life from the universe is, slam dunk, the formation of the first living thing. This remains Occam’s answer to the Fermi question until we actually find evidence of an independent abiogenesis.

@ Jesse M.

As I said in an earlier conversation on this site, given the present feeble data available I do lean toward the idea that we are alone in this galaxy. I was just reinforcing that the whole argument revolves primarily around our guesses about how much or how little random chance is involved. My second point was aimed mostly at the very prevalent “life everywhere” crowd. As I said, if life arises everywhere it has a chance, then it should have arisen on Mars.

I’ve been looking over the Hanson paper. I tend to agree with the general premise. As much as I admired Sagan and the Cosmos series, I always thought him wildly over-optimistic about nearly every term of the Drake equation. I’m not sure what happened with the last term.

I have noticed the following in the aforementioned paper:

“For example, our current observation that high intelligence arose recently on Earth is far from random. Since no one on Earth would be wondering about the origin of life if Earth did not contain creatures nearly as intelligent as ourselves…”

Intelligent life would be wondering this at an equivalent point in its evolution, whether at one billion or 10 billion years; thus, for this discussion, the timing is random. Any non-randomness depends on his other, separate arguments.

@Eniac: Yes, I think this is actually a fairly widespread concept.

Just as a possibility, or are there any prominent thinkers who consider this the most likely resolution of the Fermi paradox? I had thought that with the evidence pushing back the origin of life closer and closer to the earliest possible time (when the Earth’s surface was no longer molten), and the evidence of fairly simple biomolecules capable of self-replication (see here), and all the research on plausible paths to the first self-replicator like the RNA world hypothesis, that most astrobiologists were disinclined to see the origin of life as likely to involve any huge improbabilities.

To me, the most convincing is the realization that most of the proposed “hard steps” in evolution really aren’t. For example, multicellular life evolved independently, many times.

Yes, for example plants and animals represent independent origins of multicellular life, but that’s why I suggested earlier that the hard step might not be multicellularity itself but rather the origin of an animal phylum pre-adapted to colonizing the land and growing to large size there–there are various physiological reasons that none of the invertebrate phyla would likely be able to (for example, the exoskeletons and book lungs of land-dwelling arthropods don’t scale up well, and cephalopods have copper-based blood that doesn’t transport oxygen nearly as efficiently as the iron-based blood of vertebrates, which probably explains why they never came on land like the fish).

There are plenty of other significant steps that, as far as we know, only happened once. For example, all organisms share the same basic DNA machinery for DNA replication and the generation of proteins, which is much more complicated than the self-replicating RNA strands thought to have preceded it (there are some interesting speculations that this machinery originated in viruses, see here). The evolution of oxygenic photosynthesis is thought to have only happened once, and the paper “The habitat and nature of early life” by Nisbet and Sleep, included in the collection of papers in this file, suggests it may just be a matter of fortuitous coincidence that oxygenic photosynthesis depends on an enzyme called Rubisco which is actually significantly less efficient than other possible enzymes a genetic engineer might come up with, because a too-efficient form of photosynthesis would remove too much CO2 from the atmosphere and prevent the carbon cycle as we know it from existing. Quoting from the paper:

“Where CO2 is in excess, as in the air, Rubisco [87] preferentially selects ¹²C. For 3.5 Gyr, this isotopic signature in organic carbon, and the reciprocal signature in inorganic carbonate, has recorded Rubisco’s role in oxygenic photosynthesis as the main link between atmospheric and biomass carbon [32]. But Rubisco itself may long predate oxygenic photosynthesis, as many non-photosynthetic microaerobic and aerobic bacteria use it. Unlike the many enzymes whose efficiency has been so honed by the aeons as to approach 100% (for example, catalase), Rubisco works either as carboxylase or oxygenase in photosynthesis and photorespiration [88]. This apparent ‘inefficiency’, capable of undoing the work of the photosynthetic process, is paradoxical, yet fundamental to the function of the carbon cycle in the biosphere. Without it, the amount of CO2 in the air would probably be much lower.

“It is possible that Rubisco is not subject to evolutionary pressures because it has a monopoly. The qwerty keyboard, which is the main present link between humanity and the silicon chip, may be a parallel: legend is that qwerty was designed to slow typists’ fingers so that the arms of early mechanical typewriters would not jam. It is among the worst, not the best, of layouts, and only minor evolution occurred (English has Y where German has Z). Perhaps the same applies to Rubisco: if so, genetic engineering to improve Rubisco might lead to a productivity runaway that removes all atmospheric CO2.”

Another seemingly unique event that probably occurred at least a billion years after the first cells (and perhaps more like 2 billion years after) was the origin of eukaryotic cells which have a different structure than the prokaryotes that were around before them, which allows eukaryotes to contain more genetic information (multiple chromosomes instead of the single circular chromosome seen in most prokaryotes), allows them to have multiple specialized organelles like chloroplasts and mitochondria, and allows them to have a more permeable cell membrane that permits more communication between cells (probably necessary for the later evolution of sexual reproduction, and also for the type of inter-cellular communication needed for multicellular organisms with complex internal differentiation). After the evolution of eukaryote-like cells with permeable membranes, it’s possible that the acquisition of some organelle, such as the mitochondria which allow for aerobic respiration, was another hard step. At least in the case of mitochondria, the most favored hypothesis is that this occurred via “endosymbiosis” in which a eukaryote absorbed a prokaryote and evolution drove the arrangement to become mutually beneficial and permanent; this also seems to have been a unique event, as the paper here notes that “genome sequencing shows that the mitochondrial genome (and therefore mitochondria per se) arose only once in evolution”. Finally, another event thought to be unique is the form of sexual reproduction in eukaryotes involving meiosis, and this paper says “Comparative evidence suggests that meiosis appeared early in eukaryotic cell history (RAMESH et al. 2005; SCHURKO and LOGSDON 2008), and its high degree of similarity in different taxonomic groups suggests that it arose only once (HAMILTON 1999; RAMESH et al. 2005)”.

Reply to Eniac, continued:

Not a hard step, then, it can’t be. Evolution has a way of overcoming “hard steps” like rising water overcomes dams.

Well, the evidence suggests life on Earth has gone through long periods of relative stasis, broken up by periodic innovations which change the biosphere significantly, like the origin of eukaryotes after a long period of only prokaryotic cells. This could be because evolution just naturally tends to overcome hard steps, but this evidence is equally compatible with the theory that it’s actually tremendously unlikely for evolution to get out of each stasis period by overcoming a new hard step, and the only reason we see this repeatedly happening on Earth is the anthropic principle–on any planet where one of these hard steps is not fortuitously overcome before its sun expands enough to make the planet inhospitable for life, there won’t be any intelligent observers to study their past! So even if the vast majority of planets that evolve prokaryotic cells don’t evolve eukaryotic cells before the sun expands, or the vast majority of planets with eukaryotic cells don’t evolve a form of sexual reproduction where one copy of each gene is inherited from each parent, perhaps intelligent life will only be found on those extremely rare planets where these (and other) hard steps did all manage to occur in the time required.

There is only one plausible, really hard step: The origin of life.

I think a good argument against the origin of life being the sole hard step is the point Brandon Carter makes in his 1983 paper “The anthropic principle and its implications for biological evolution” (Robin Hanson’s paper is largely just elaborating the ideas of this older paper). Carter points out that it actually seems like a rather remarkable coincidence that the time between the origin of life and the appearance of intelligent life (around 3.5-4 billion years) is of about the same order of magnitude as the period in which the Sun’s output is such that the Earth could be potentially habitable for life (Carter estimated this as around 10 billion years, but for reasons I explained in my previous comment it’s probably more like 4.5-5 billion years)–there’s no a priori reason to think these completely different physical processes (one involving biochemistry/evolution and one involving nuclear fusion in the sun) should be within even, say, 3 orders of magnitude, or 6 or 9.

Now, imagine that we belonged to some super-civilization that could create a large number of habitable planets and supply them with stable external conditions indefinitely (giving them some sort of artificial sun which will never change size or radiation output), and we seeded each with life and observed the mean time for such a world to evolve intelligence. Suppose the mean time under these ideal conditions turned out to be much greater than the real lifetime of the Sun (whether because intelligent life itself was a ‘hard step’, and/or because there were one or more needed hard steps between the origin of life and the origin of intelligence); let’s say 3 orders of magnitude greater, or 10 trillion years. In this case, one would expect that in the real world the vast majority of planets where life began would not produce intelligent life before their sun became too hot. But on the small subset of planets that did manage it (and we should expect to find ourselves on a member of this subset, via the weak anthropic principle), in most cases the total time to evolve intelligent life would be within an order of magnitude of the maximum time available. On the other hand, if we did the experiment of giving life on multiple planets an indefinite time to evolve, and found that the mean time to evolve intelligence was one or more orders of magnitude less than the lifetime of the Sun (say 1 billion years, or 100 million years), then in the real world, on the majority of planets where intelligent life evolved, one would expect the time between the origin of life and the origin of intelligence to be of about this size. There still might be some planets where it happened to take much longer, close to the full lifetime of the planet, but this would be unlikely on any given world, and there’d be no basis in the anthropic principle to expect that this was true of Earth.

Finally, if we did the experiment and found the mean time to evolve intelligence on these artificially stable planets just happened to match the viable lifetime of ordinary real-world planets, this would just be a strange coincidence. So of the three options, it seems like the only one that doesn’t require very improbable events (that can’t be explained in terms of the anthropic principle) or bizarre coincidences in timescales is the first option, and this option requires at least one hard step after the origin of life.
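The first two of these cases can be made concrete with a deliberately simplified sketch: treat the whole stretch from the origin of life to intelligence as a single exponential waiting time with mean `tau`, and ask for its average length on planets where it finished inside a habitable window. The single-step simplification and the 5-billion-year window (the upper end of the 4.5-5 billion year estimate given earlier in this comment) are assumptions; with several hard steps in sequence, the conditional total shifts even closer to the full window.

```python
import math


def conditional_mean_time(tau, window):
    """Mean waiting time of an exponential process with mean `tau`,
    given that it finished before `window` (a truncated exponential).

    E[t | t < W] = tau - W * exp(-W/tau) / (1 - exp(-W/tau))
    """
    x = window / tau
    # -expm1(-x) computes 1 - exp(-x) accurately even when x is tiny
    return tau - window * math.exp(-x) / (-math.expm1(-x))


WINDOW = 5.0  # habitable window in billions of years (assumed)
for tau in (0.1, 1.0, 5.0, 50.0, 5000.0):
    print(f"mean time {tau:>7} Gyr -> observed on successful planets: "
          f"{conditional_mean_time(tau, WINDOW):.2f} Gyr")
```

When `tau` is far below the window, the observed time simply tracks `tau`; when `tau` is far above it, the observed time pins to about half the window no matter how large `tau` is. That insensitivity is the anthropic selection effect the argument relies on.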

It would be surprising to me if some hard steps didn’t exist. I’m not sure I follow the CO2 conversion argument though. The idea that a more efficient mechanism would somehow run down the level of CO2 to some arbitrarily low level without any balancing feedback seems to contradict the rest of nature.

Mmmh, I thought there were, but I can’t find them right now. Anyone?

This is correct. However, evolution provides a plausible explanation of why living systems would overcome barriers: Replication and natural selection combine to provide a relentless drive towards greater and greater fitness. Failure disappears, success is quickly established, leading to a steady progression of successes.

No such plausibility exists for abiogenesis. All the handwaving that is being done trying to understand it is very far from actually providing a plausible path spanning the enormous gulf between a mix of random chemicals and a replicating autotroph.

I am not sure I followed this entire argument completely, but it seems to me that, following Hanson’s argument, an incredibly hard abiogenesis followed by a merely slightly hard rest of evolution would explain the observed timing perfectly well. Let me try to put it simply:

Let us assume that there was only one really hard step (abiogenesis, for example), and that all the rest would follow easily. Looking back over 4.5 billion years, we know that this step has occurred with 100% probability during this time. The most conservative assumption we can make is a constant probability of occurrence (ignoring that the probability might well have been higher early on for geophysical reasons). With this assumption, the chance that abiogenesis would occur in the first 1 billion years is about 1/4.5, or 22%. This is a decent probability (at 5% I would begin to wonder, being somewhat fluent in statistics), so the hypothesis of a single, incredibly hard step holds up just fine.
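The 1/4.5 figure follows from the constant-probability assumption. For a constant-rate (Poisson) process, the probability that the event fell in the first `early` years, given that it happened somewhere in the `window`, is (1 - e^(-r·early)) / (1 - e^(-r·window)), which tends to early/window as the rate r becomes tiny. A minimal check, using the 10^-40 per planet per year figure from the next paragraph as the rate (the function name is hypothetical):

```python
import math


def prob_early(rate_per_gyr, early_gyr=1.0, window_gyr=4.5):
    """P(event in the first `early_gyr` | it occurred within `window_gyr`)
    for a process with a constant, positive rate (events per billion years)."""
    # expm1(-x) = exp(-x) - 1; the minus signs cancel in the ratio
    return math.expm1(-rate_per_gyr * early_gyr) / math.expm1(-rate_per_gyr * window_gyr)


tiny_rate = 1e-40 * 1e9  # 10^-40 per planet per year, expressed per Gyr
print(round(prob_early(tiny_rate), 3))  # 0.222
print(round(prob_early(1.0), 3))        # a fast process is strongly front-loaded
```

For a very hard step the answer no longer depends on the rate at all, which is why the early appearance of life cannot by itself tell us how hard abiogenesis was.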

By incredibly hard I mean truly astronomically unlikely. Something like 10^-40 per planet per year. So improbable that our existence would require a preposterously large universe.

Wait a minute, the universe is in fact preposterously large. Coincidence? Perhaps….

This so-called ‘inefficiency’ is caused by the large concentration difference between O2 and CO2. CO2 is present in air only in trace amounts, at a concentration hundreds of times lower than that of oxygen. Rubisco has to be highly specific to work against this concentration difference. The reason it manages this only barely is simple: if it could do better, there would be less CO2, and that in turn would cause it to do badly again. In other words, Rubisco, no matter how ingeniously designed, will always operate at the concentration where it is just barely effective.

The assertion that a chemical engineer could design a better Rubisco is dubious, and I would like to see some real justification for it. Rubisco has gotten really good at what it does, and removed practically all the CO2 from the atmosphere.

The balancing feedback is the concentration difference between CO2 and O2. Imagine you were tasked with collecting black marbles from a large tank of red and black marbles. If the marbles are half black and half red, that would be easy. If the ratio were a thousand red marbles to each black one, it would be a lot more difficult. As you get better doing the task, the number of black marbles decreases, and it becomes even harder. No matter how good you get, in the long run you will be making very little progress.
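The marble analogy can be played out in a short simulation. This is an illustrative sketch only: it models the collector as drawing marbles uniformly at random from a tank with a fixed stock (no new black marbles are ever added), which is a simplification and not a model of Rubisco kinetics or of atmospheric CO2. The function name and all the numbers are hypothetical.

```python
import random


def pick_black_marbles(black, red, picks_per_round, rounds, seed=0):
    """Toy simulation of the marble analogy (illustrative only).

    Each round the collector draws `picks_per_round` marbles at random from the
    tank (`red` must be positive). Black marbles are kept out; red ones go back.
    Returns the number of black marbles removed in each round.
    """
    rng = random.Random(seed)
    removed_per_round = []
    for _ in range(rounds):
        removed = 0
        for _ in range(picks_per_round):
            if rng.random() < black / (black + red):  # drew a black marble
                black -= 1
                removed += 1
        removed_per_round.append(removed)
    return removed_per_round


# A thousand red marbles for every black one, as in the analogy.
history = pick_black_marbles(black=100, red=100_000, picks_per_round=10_000, rounds=30)
print(history[:3], history[-3:])
```

The first rounds remove roughly a tenth of the remaining black marbles each; by the last rounds only a handful are left to find, so progress per round dwindles. Raising `picks_per_round` (a “better” collector) only reaches that low-yield regime sooner.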

This is the situation that Rubisco is in.

@Jesse M., to me, only meiosis is a hard step to explain after abiogenesis, as the advantages of Muller’s ratchet are only really noticeable in small populations with complex genomes, and sexual reproduction should, in theory, be very effective at inhibiting the transformation of unicellular life to multicellular life (though, somehow, this second problem doesn’t look to be borne out in practice on Earth).

Also, I feel that it is best not to conflate the evolutionary problems of separate lineages with the evolutionary problems of entire ecosystems (as with RuBisCO). Most ecosystem problems have never been solved, and this ignorance fuels the whole Gaia hypothesis debate.

I am also interested in your claim that the “book lungs of land-dwelling arthropods don’t scale up well”. I have seen such analyses of the insect’s tracheal system, and know of the extreme modifications of it made in the largest living insects (such as Goliath beetle), but never seen this same claim made in peer reviewed literature or reviews for the book lung system of arachnids. I am wondering if this is solid or merely the guess of one expert.

To continue with two more points Jesse.

Yes, it has been repeatedly said that exoskeletons do not scale up well, but this now looks wrong. It is currently believed that a prime reason dinosaurs grew larger than mammals was that hollow bones can bear more weight per unit mass than solid ones. That is why the twin towers of the World Trade Center were supported on the outside rather than on a central column, as would be the naive guess. Take heart, though: you can claim moulting as an alternative problem in arthropods.

Also of note is, even in the absence of ‘hard steps’, the early appearance of life on Earth can only be used as evidence for high f(l) if the time period still now left for intelligent life to emerge is much less than the gap from the Late Heavy Bombardment until the first signs of life. Thus your 0.5-1 billion years left is okay, but if the actual figure is more like 100 million years then this evidence is invalid (once again reminding you that this analysis is for the assumption that the only hard step is abiogenesis).

I feel that if that 0.5-1 billion year figure is correct, this also rather militates against the possibility that there will turn out to be any other hard steps at all, and that order of magnitude coincidence can be explained in other ways, such as biogeochemical cycles being important to modifying the environment to one conducive to higher life, or the reduction of solar activity as our sun ages allowing a more stable climate.

@Rob Henry:

“to me, only meiosis is a hard step to explain after abiogenesis, as the advantages of Muller’s ratchet are only really noticeable in small populations with complex genomes”

Is your argument that the step is likely to be hard just because the selective advantage is small? Are you discounting the possibility that some steps might be intrinsically hard even if they offered a significant selective advantage, for reasons like the set of possible mutations that would lead to an adaptation being very small compared to mutations needed to build other kinds of adaptations? There certainly seems to be some strong circumstantial evidence that the evolution of eukaryote-like cells from prokaryote-like ones was a hard step, just given that prokaryotes were around for 1-2 billion years (and most estimates of the age of eukaryotes I’ve seen suggest it was closer to 2) before eukaryotes arose. And since the rate a given group of organisms can evolve is determined to a significant degree by the period of a generation, in a way this is an even more surprisingly long period than it might appear–the table here suggests many prokaryotes have a generation time of 0.5 – 2 hours, and let’s say small vertebrates (which make up the majority of all vertebrates) typically have a generation time of 0.5 – 2 years, about 10,000 times larger, so a billion years of evolution for prokaryotes is more like 10 trillion years of evolution for vertebrates. And of course the prokaryote population is also much larger than the vertebrate population. So the fact that the distinctive features of eukaryotes (particularly those that are probably a prerequisite to multicellular life, such as permeable cell membranes which allow better intercellular communication, and much larger genomes) took such a huge number of generations to evolve in such a large population seems to provide some intuitive support for the idea that the evolution of these features involved at least one hard step.
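The generation-count comparison is simple arithmetic. A minimal sketch, using the rough generation times quoted above (0.5 to 2 hours for prokaryotes, 0.5 to 2 years for small vertebrates) and, as an assumption, ignoring population size entirely; the function name is hypothetical:

```python
HOURS_PER_YEAR = 365.25 * 24  # 8766 hours


def equivalent_years(years, gen_hours_fast, gen_hours_slow):
    """Years a slower-breeding lineage needs to pass through as many
    generations as a faster one does in `years` (generation count only)."""
    generations = years * HOURS_PER_YEAR / gen_hours_fast
    return generations * gen_hours_slow / HOURS_PER_YEAR


# Central case: 1-hour prokaryote generations vs 1-year vertebrate generations.
central = equivalent_years(1e9, 1.0, HOURS_PER_YEAR)
# Extremes of the quoted ranges.
low = equivalent_years(1e9, 2.0, 0.5 * HOURS_PER_YEAR)
high = equivalent_years(1e9, 0.5, 2.0 * HOURS_PER_YEAR)
print(f"{central:.2e} years (range {low:.1e} to {high:.1e})")
```

The central case gives close to 9 trillion vertebrate-years per billion prokaryote-years, consistent with the order-of-magnitude figure of 10 trillion; the quoted ranges span roughly 2 to 35 trillion.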

“I am also interested in your claim that the ‘book lungs of land-dwelling arthropods don’t scale up well’. I have seen such analyses of the insect’s tracheal system, and know of the extreme modifications of it made in the largest living insects (such as Goliath beetle), but never seen this same claim made in peer reviewed literature or reviews for the book lung system of arachnids. I am wondering if this is solid or merely the guess of one expert.”

Aside from just analyzing the efficiency of book lungs, there is some strong historical evidence of this: the only periods in which we see insects much larger than today’s in the fossil record are periods when we know the oxygen levels in the atmosphere were also much higher than today (the Carboniferous and the first part of the Permian both featured high oxygen and very large insects). Anyway, I’m pretty sure I’ve seen this claim repeated in many articles and a number of books, but googling around for a peer-reviewed paper, I found this one which looked at the amount of volume that beetles of different sizes have to devote to their tracheal system, and finds evidence of a trend that would place an upper limit on how large they could get without an increase in atmospheric oxygen.

“Yes, it has been repeatedly said that exoskeletons do not scale up well but this now looks wrong. It is currently believed that a prime reason that dinosaurs grew larger than mammals was that hollow bones can take more weight per mass than solid ones. ”

The argument I’ve seen is that it’s not the hollowness per se that’s a problem, but rather the relative weakness of the limb joints. The section titled “Why don’t creatures with exoskeletons get very large?” in this article suggests the two main issues are that they don’t have wide upper surfaces to distribute the weight above them as vertebrate joints do, and they also don’t have living tissue between the joints to act as a cushion.

“Also of note is, even in the absence of ‘hard steps’, the early appearance of life on Earth can only be used as evidence for high f(l) if the time period still now left for intelligent life to emerge is much less than the gap from the Late Heavy Bombardment until the first signs of life.”

Yes, I agree. The Hanson/Carter statistical argument suggests that even if the probability of the origin of life is very tiny, the time between when the Earth first became habitable and the time when life originated would be expected to be similar to the time between the last hard step being achieved and the time the Earth becomes uninhabitable (or at least uninhabitable to the form of life that might pass the last hard step). So if the Earth is going to become uninhabitable possibly as soon as 500 million years from now, and if the evolution of intelligence was a hard step, then unless we can show that life originated significantly less than 500 million years after conditions on Earth became conducive to it, the relatively “early” origin of life can’t be taken as evidence that it’s easy.

On the other hand, if you say the evolution of intelligence was not a hard step, but rather some much earlier one like meiosis, as you suggest, then that increases the predicted step size: meiosis evolved at least 1.2 billion years ago, so if that was the last hard step, that would imply an expected step size of about 1.5 billion years. So if life originated significantly less than 1.5 billion years after the Earth became habitable (as it likely did), that might lead you to conclude it’s likely an easy step. Then again, the argument does not suggest the steps have to be exactly equally spaced; maybe it’s not too improbable for one gap between steps to be, say, 1/4 the length of another. I don’t understand the details of the statistical argument well enough to figure out the chances here.

“I feel that if that 0.5-1 billion year figure is correct, this also rather militates against the possibility that there will turn out to be any other hard steps at all, and that order of magnitude coincidence can be explained in other ways, such as biogeochemical cycles being important to modifying the environment to one conducive to higher life”

It seems to me that this just shifts the coincidence rather than actually making it appear non-coincidental. A priori, why should we expect the length of time needed for biogeochemical cycles to modify the environment to be of the same order of magnitude as the time needed for the sun to run out of fuel for nuclear reactions? Suppose someone came up with some entirely new laws of physics, ran a universe-sized simulation based on these laws, and found some broad similarities: star-like objects whose internal reactions send out energy-carrying waves, planet-like objects that orbit the former, and life on these planets that uses the waves to power some sort of chemistry-like reactions that allow for self-replication and metabolism, and also gradually modify the planet’s surface. Would you have any reason to expect these two types of processes to have a similar time scale, even before you knew the details of the simulated physics, or the size of the planet’s surface relative to the fundamental molecule-like units undergoing chemistry-like reactions?

@Eniac:

“However, evolution provides a plausible explanation of why living systems would overcome barriers: Replication and natural selection combine to provide a relentless drive towards greater and greater fitness. Failure disappears, success is quickly established, leading to a steady progression of successes.”

I don’t think this is a good description of how evolution works at all times. Just think in terms of the evolutionary biologist’s notion of the “fitness landscape”, a hypothetical true map (which we can only approximate with empirical studies) giving the fitness of every possible genome (or every possible variant of a given gene, if we’re just talking about the fitness of a single gene). The expectation among biologists is that this landscape would contain plenty of local optima, and the sort of relentless progress toward increasing fitness that you describe only happens when a population is on a sloped part of the fitness landscape. Once it settles around a local optimum where most small changes would lead to a decrease in fitness, evolution has no inherent long-range vision that makes it seek out greater and greater local optima. This would explain the evolutionary pattern seen in the fossil record, which seems more consistent with some kind of “punctuated equilibrium” (changes happening either because of a shift in the fitness landscape corresponding to a changed environment, or some type of ‘macromutation’ that takes some members of the population to a different region of the fitness landscape; see the discussion here) than with the “gradualist” notion of continual steady improvement.

And see my previous comment to Rob Henry about the huge time gap between the first prokaryote-like cells and the first eukaryote-like cells: if you see eukaryotes as some kind of increase in fitness over prokaryotes, and you see evolution constantly pushing in whatever direction will increase fitness, why do you think this took so long? Depending on how you define fitness, it’s not clear to me that eukaryotes are in any meaningful sense more fit than prokaryotes, since they reproduce more slowly and there are fewer of them on Earth (and ‘fitness’ is normally defined in terms of the probability of reproducing in one’s environment); they just occupy different niches. Perhaps it’s only meaningful to compare fitness within a single species, or within a single niche; I’m not sure what evolutionary biologists would say about this.

“I am not sure I followed this entire argument completely, but it seems to me that, following Hanson’s argument, an incredibly hard abiogenesis followed by a merely slightly hard rest of evolution would explain the observed timing perfectly well.”

The argument I was referring to was one specific to Brandon Carter’s paper; I don’t remember Hanson discussing it. And it’s not about the timing of abiogenesis, which is what your response addresses; rather, it’s about the time between abiogenesis and the evolution of intelligence. This time span is of the same order of magnitude as the time span between the origin of the Sun and its running out of fuel, and although that may seem natural to us because we’re used to it, given the huge range of time scales involved in different physical processes in nature, Carter argues this really deserves to be seen as a major “coincidence”. This coincidence could be easily explained if we assume that the average expected time between abiogenesis and intelligent life would be much longer if we looked at a large population of Earth-like planets with artificial Suns that would give them consistent radiation indefinitely, so that the fact it took less time on our planet can be explained in terms of the anthropic principle, but otherwise there seems to be no explanation. If you disagree, could you address the hypothetical scenario of the simulated universe with new laws of physics that I brought up in the last paragraph of my reply to Rob Henry?

“The assertion that a chemical engineer could design a better Rubisco is dubious, and I would like to see some real justification for it. Rubisco has gotten really good at what it does, and removed practically all the CO2 from the atmosphere.”

You may well be right about this. The paper “The habitat and nature of early life” by Nisbet and Sleep, which this claim comes from, doesn’t offer any citations for the comment about Rubisco’s inefficiency and the suggestion that it might be a “QWERTY enzyme”, so it may just be an idle speculation by the authors. I quoted it mainly because I thought it was an interesting idea for a possible hard step that I hadn’t seen before, and one that was unlike other proposed hard steps in that it wasn’t about the complexity of some new adaptation, but rather about its long-term effects on the global ecosystem. But in a way we’d better hope the authors are wrong, because if it’s actually true that someone could genetically engineer a significantly more efficient alternative, there would be a danger of someone doing so and releasing it into the environment!

Thanks for that reference, Jesse M.; it adds to my knowledge of how poorly the insect tracheal system scales. However, the tracheal respiratory system is very different from the book lungs seen in spiders and scorpions.

As to the exoskeletons, some fossil sea scorpions were larger than modern humans.

http://en.wikipedia.org/wiki/Jaekelopterus

The greatest problem with meiosis is that it has many, many immediate costs, and its most obvious benefits only come 10,000-100,000 generations later.

And to continue on the theme that evolution is a tricky subject, let’s address that eukaryote leap by seeing whether it is necessary. What follows is a modified copy of a comment I made earlier on these pages about bacterial multicellularity…

Do not underrate bacterial multicellularity.

Firstly, many modern cyanobacteria display one terminally differentiated cell type (the heterocyst). This feature is in advance of even some metazoan lines (the most advanced of all the branches of eukaryotic multicellular life), especially sponges, whose cells are all pluripotent. Stromatolites had far more specialised cell types, though we can only guess whether they derive from one species or from a community of several.

Secondly, myxobacteria are very similar in all superficial respects to slime moulds, and they have larger genomes than most unicellular eukaryotes, as if they were the more advanced form.

Thirdly, the prokaryotes we see today have been modified by purifying selection, which may reflect the habitats typically left to them AFTER they were outcompeted by eukaryotes for the slower-growing complex niches (except that of the slime moulds). This would have forced them into places where the higher growth rate always wins.

Fourthly, have you ever heard the arguments that the eukaryote nucleus has many archaic features not seen in bacteria or archaea? Have you ever asked yourself how primitive features could be retained until the eukaryote origin in the face of a billion years of purifying selection? It is almost as if some ‘slow and complex wins’ niches have always existed, isn’t it?

Jesse, there is an independent way of testing the biogeochemical timing argument. If Mars had early life (perhaps seeded from Earth, or vice versa, by lithopanspermia), its lack of tectonic activity should have resulted in a faster build-up of O2 and a faster run-down of greenhouse CO2. If life’s development is bound to these cycles, we would confidently expect fossil higher forms there, despite the order-of-magnitude difference in the time each planet had under suitable conditions.

Jesse M.

As you surmised, 1 billion instead of 1.5 billion reduces the significance from 0.5 to 0.33. Statistically speaking, 1 billion (or even 0.5 billion) is not significantly different from 1.5 billion in this context.

One possibility that Carter mentions, I believe, is that geological conditions had to change before eukaryotes actually had an advantage, perhaps having to do with oxygen accumulation.

No other explanation is needed, because this is it. As I said, I think abiogenesis is so astronomically unlikely that your hypothetical “population of planets” would have to comprise the entire universe to produce just a single example of life.

Hypothetical? Maybe not ….

As for the evolution argument, the word I used “relentless” does not imply “steady” or “goal oriented”. A rising tide of water is not steady, it will rupture dams on occasion, and it has no goal. Nevertheless, if the water keeps coming (i.e. mutation keeps happening) it will eventually cover everything.

So, to me it makes no difference whether there is steady progress or “punctuated equilibrium” or whatever. What is important is that successful innovations (mutations) are replicated and maintained for long times, and are available for new innovations to develop on top of them. Without replication, there is no memory, and the possibility for advances in complexity is extremely limited. It is like the water being a trickle that immediately disappears into the ground.

Think of a board game, Chutes and Ladders perhaps. With evolution, each time you throw the dice you get to advance (or drop, occasionally), and you’ll make it eventually to the goal. Without evolution, it would be like having to go back to Start before each throw of the dice, which would drastically limit your chance to advance.
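To put rough numbers on the board-game analogy, here is a minimal sketch under simplifying assumptions that are not in the original comment: each throw “succeeds” with a fixed probability p and moves you one square, the goal is n squares away, and “without evolution” means that any failed throw sends you back to Start. The function names are hypothetical. With memory, the expected number of throws grows only linearly in n (n/p). Without memory you need n successes in a row, and the expected wait grows exponentially in n.

```python
import random


def expected_throws_with_memory(n_steps: int, p: float) -> float:
    """Progress is kept (replication acts as memory): each success moves one
    square forward and a failure just wastes the throw. The total wait is a sum
    of n_steps geometric waits, each with mean 1/p."""
    return n_steps / p


def expected_throws_without_memory(n_steps: int, p: float) -> float:
    """Any failure sends the player back to Start, so the goal needs n_steps
    consecutive successes. Expected waiting time for a run of n successes in
    independent trials: (1 - p**n) / ((1 - p) * p**n)."""
    q = p ** n_steps
    return (1 - q) / ((1 - p) * q)


def simulate(n_steps: int, p: float, memory: bool, rng: random.Random) -> int:
    """Play one game and return the number of throws needed to reach the goal."""
    position, throws = 0, 0
    while position < n_steps:
        throws += 1
        if rng.random() < p:
            position += 1
        elif not memory:
            position = 0  # no replication: all progress is lost
    return throws


if __name__ == "__main__":
    rng = random.Random(42)
    n, p, trials = 10, 0.5, 1000
    for memory in (True, False):
        mean = sum(simulate(n, p, memory, rng) for _ in range(trials)) / trials
        print(f"memory={memory}: simulated mean {mean:.1f} throws")
    print("analytic, with memory:   ", expected_throws_with_memory(n, p))     # 20.0
    print("analytic, without memory:", expected_throws_without_memory(n, p))  # 2046.0
```

With just ten squares and even odds, keeping your gains cuts the expected game from about two thousand throws to twenty. The gap widens exponentially as the goal moves further away, which is the point about replication as memory.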

“I think abiogenesis is so astronomically unlikely that your hypothetical ‘population of planets’ would have to comprise the entire universe to produce just a single example of life.”

I don’t know how we can make any meaningful statement about the likelihood of abiogenesis since we have only the vaguest guesses about how it works. As I pointed out before, pronouncements of life everywhere and life nowhere else would be on equally shaky footing.

Larry Kennedy, we have at least three independent reasons to believe that abiogenesis is an improbable event.

1. Darwin’s theory of evolution is the basis for modern biology, yet it is often badly misrepresented. For two thousand years the natural philosophers of biology were divided between those who thought that lines of creatures evolved into higher forms with time, and those who did not. Darwin broke the impasse with a stunning new idea: that absolutely NO new biological function evolved except by a slight advantageous modification of a pre-existing one. This solved many problems, but its greatest cost was destroying the very idea of spontaneous generation.

2. As all life derives from a common ancestor (as we would predict from Darwin), we would expect that ancestor to be very simple, yet it turns out to be massively complex, with highly conserved macromolecules. We could posit that a very late form out-competed all others, but more complex forms can seldom displace simpler ones, and the long fossil record of cyanobacteria also makes this unlikely.

3. Von Neumann studied the minimum requirements for non-trivial self-replication in both real-world designs and mathematical systems. He, and everyone who came after him, found those requirements almost impossibly complex. Von Neumann designed a universe just for that purpose and proved that a self-replicator could be built in it, but even in that system the construction was so complex that he could not give a complete design.

So evolutionary theory, biochemistry, and mathematics all point to a common conclusion: abiogenesis, in theory, should be highly unlikely.

@Rob Henry

I certainly believe your arguments are good ones, but the past teaches us that great arguments can often be made about things we simply don’t understand, and I certainly think this subject is one of those. On the other hand, it is very believable that you will turn out to be right. It keeps circling back to the same question: is a huge amount of luck involved, or are we once again, as so often before, missing something?

The fact remains that life appeared quickly on Earth, almost as soon as possible once the Late Heavy Bombardment was over, and the bombardment itself was probably pretty much incompatible with life.

At the same time, the universe looks lifeless, even though there are billions of Earth-like planets out there, many of them billions of years older than ours.

However, there is a possible resolution of this paradox.

The probability of spontaneous abiogenesis may be arbitrarily low while the expected time needed for it to occur is small, provided we condition on the event that we are here considering this very question. The conditional probability of abiogenesis, given that event, is certainly high (that is, 1). See the anthropic principle.

Let’s suppose, for the sake of argument, that the chance of spontaneous abiogenesis on an Earth-like planet is one in ten to the millionth power in any million-year interval, that is, non-zero but negligible. The universe is then almost certainly expected to be lifeless.

However, let’s also suppose that at the tail end of the Late Heavy Bombardment this chance briefly increased a trillionfold, for a hundred million years or so, and then fell back to its previous value. In this case the universe is still expected to be completely sterile, but in the unlikely event that it is not, life should arise only once, on a single planet, and as early as possible. That’s what we see.
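The arithmetic behind this scenario can be checked in log space, because numbers like 10^-1,000,000 underflow ordinary floating point. The following is a minimal sketch. The per-million-year chance, the trillionfold boost and the 100-million-year window come from the comment. The 10-billion-year quiet baseline and the count of 10^22 Earth-like planets are hypothetical values added only for comparison. It uses the approximation P(at least once in k intervals) ≈ k·p, which is essentially exact when k·p is tiny.

```python
import math

# From the comment: base chance per Myr, trillionfold boost, ~100 Myr boost window.
BASE_LOG10_PER_MYR = -1_000_000  # log10 of the chance per million-year interval
BOOST_LOG10 = 12                 # "a trillionfold" increase during the boost
BOOST_MYR = 100                  # boost lasts ~100 million years
# Hypothetical comparison values, not from the comment:
QUIET_MYR = 10_000               # 10 billion years at the baseline rate
PLANETS_LOG10 = 22               # 10^22 Earth-like planets in the universe


def log10_p_at_least_once(log10_p: float, intervals: float) -> float:
    """log10 of P(at least one origin) over `intervals` independent intervals,
    using P ~= intervals * p (valid because intervals * p << 1 here)."""
    return log10_p + math.log10(intervals)


def log10_boosted() -> float:
    """Per-planet chance of an origin during the boosted window."""
    return log10_p_at_least_once(BASE_LOG10_PER_MYR + BOOST_LOG10, BOOST_MYR)


def log10_quiet() -> float:
    """Per-planet chance of an origin during the hypothetical quiet baseline."""
    return log10_p_at_least_once(BASE_LOG10_PER_MYR, QUIET_MYR)


def p_boosted_given_life() -> float:
    """P(origin fell in the boosted window | life arose on this planet at all)."""
    ratio = 10.0 ** (log10_quiet() - log10_boosted())  # quiet / boosted odds
    return 1.0 / (1.0 + ratio)


if __name__ == "__main__":
    print(f"per planet, boosted window: 10^{log10_boosted():.0f}")  # 10^-999986
    print(f"per planet, quiet 10 Gyr:   10^{log10_quiet():.0f}")    # 10^-999996
    print(f"expected origins, universe: 10^{log10_boosted() + PLANETS_LOG10:.0f}")
    print(f"P(boosted window | life) = {p_boosted_given_life():.12f}")
```

Under these assumptions the universe is still overwhelmingly likely to be sterile, since even 10^22 planets give an expected 10^-999964 origins. Yet if life does arise anywhere, the odds are about ten billion to one that it arose in the brief boosted window. That matches the comment’s claim that life should appear once, and early.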

We can even learn something important from this lesson. As the probability of spontaneous abiogenesis is so low, we will not be able to replicate it in the lab. However, it is still a testable hypothesis that bombardment by comets at a declining intensity increases that probability tremendously relative to the no-bombardment case.

The conditions to be replicated in the lab are neither lightning, nor UV radiation, nor hydrothermal vents or anything like that, but high speed impacts, which probably bring in simple organic compounds and provide ample stirring along with free energy in the form of shock waves and sharp spatio-temporal temperature gradients.

The signs to watch for are the occurrence of much improved, although still broken, autocatalytic networks, which fail to generate life but make a huge leap in that direction compared to any other environment.

So it is testable. Anyone?

I do love testable. To me the first step is simply to prove we can force life beginning from simple chemistry in any fashion whatsoever. That would give us something real to talk about. You then wait 5 years while everyone piles on, to either show alternate ways to do it or to illustrate a reasonable natural path.

Then you would actually be ready to talk about probabilities.