If they did nothing else for us, space missions might be worth the cost purely for their role in tuning up human ingenuity. Think of rescues like Galileo, where the Jupiter-bound mission lost the use of its high-gain antenna and experienced numerous data recorder issues, yet still managed to return priceless data. Mariner 10 overcame gyroscope problems by using its solar panels for attitude control, as controllers tapped into the momentum imparted by sunlight.

Overcoming obstacles is part of the game, and teasing out additional science through extended missions taps into the same creativity. Now we have news of how successful yet another mission repurposing has been, through results from K2, the Kepler ‘Second Light’ mission that grew out of problems with the spacecraft's critical reaction wheels. K2 was proposed in November 2013 and approved by NASA in May of the following year.

Kepler needed its reaction wheels to hold it steady, but like Mariner 10, the wounded craft had a useful resource: the light from the Sun. By orienting the spacecraft so that the pressure of sunlight is balanced across it, controllers let photon momentum stand in for the lost wheel, working alongside the two reaction wheels that still function. As K2, the spacecraft has to switch its field of view roughly every 80 days, but these methods, along with refinements to the onboard software, have brought new life to the mission. K2, we quickly learned, was still in the exoplanet game, detecting a super-Earth candidate (HIP 116454b) in late 2014 in engineering data taken during the run-up to full observations.
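To get a feel for how gentle the push from sunlight is, here is a rough Python estimate of solar radiation pressure and the torque it produces when the centre of pressure is offset from the centre of mass. The 1361 W/m² irradiance at 1 AU is a standard approximate value; the flat-plate model is a simplification, and the area and lever arm in the example are hypothetical placeholders, not Kepler's actual dimensions.

```python
# Rough solar radiation pressure estimate (flat-plate model; assumptions noted).
SOLAR_IRRADIANCE_1AU = 1361.0  # W/m^2, approximate total solar irradiance at 1 AU
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def radiation_pressure(distance_au: float = 1.0, reflectivity: float = 0.0) -> float:
    """Pressure in N/m^2 on a flat surface facing the Sun head-on.

    reflectivity = 0 models a perfect absorber, 1 a perfect mirror
    (a reflected photon delivers twice the momentum of an absorbed one).
    """
    flux = SOLAR_IRRADIANCE_1AU / distance_au**2  # inverse-square falloff
    return (1.0 + reflectivity) * flux / SPEED_OF_LIGHT


def solar_torque(area_m2: float, lever_arm_m: float,
                 distance_au: float = 1.0, reflectivity: float = 0.0) -> float:
    """Torque in N*m when the centre of pressure sits lever_arm_m from the centre of mass."""
    force = radiation_pressure(distance_au, reflectivity) * area_m2
    return force * lever_arm_m


if __name__ == "__main__":
    # Hypothetical values for illustration only, not Kepler's real geometry.
    print(f"pressure at 1 AU: {radiation_pressure():.2e} N/m^2")
    print(f"torque, 10 m^2 with 0.1 m offset: {solar_torque(10.0, 0.1):.2e} N*m")
    print(f"torque, balanced (zero offset): {solar_torque(10.0, 0.0):.2e} N*m")
```

A zero lever arm gives zero torque, which is the idea behind the balanced pointing: with sunlight symmetric about the axis the failed wheel used to control, the remaining wheels only have to correct small residual drifts.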

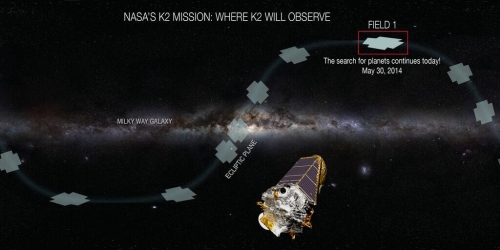

Image: NASA’s K2 mission uses the Kepler exoplanet-hunter telescope and reorients it so that it points along the Solar System’s plane. This mission has quickly proven itself with a series of exoplanet finds. Credit: NASA.

Now we learn that K2 has, in its first year of observing, identified more than 100 confirmed exoplanets, including 28 systems with at least two planets and 14 with at least three; the spacecraft has also identified more than 200 unconfirmed planet candidates. Some of the multi-planet systems are described in a new paper from Evan Sinukoff (University of Hawaii at Manoa). Drawn from Campaigns 1 and 2 of the K2 mission, the paper offers a catalog of ten multi-planet systems composed of 24 planets. Six of these systems have two known planets and four have three, and most of the planets are smaller than Neptune.

In general, the K2 planets orbit hotter stars than earlier Kepler discoveries. These are also stars that are closer to Earth than the original Kepler field, making K2 exoplanets useful for study in the near future through missions like the James Webb Space Telescope. From the Sinukoff paper:

Kepler planet catalogs (Borucki et al. 2011; Batalha et al. 2013; Burke et al. 2014; Rowe et al. 2015; Mullally et al. 2015) spawned numerous statistical studies on planet occurrence, the distribution of planet sizes, and the diversity of system architectures. These studies deepened our understanding of planet formation and evolution. Continuing in this pursuit, K2 planet catalogs will provide a wealth of planets around bright stars that are particularly favorable for studying planet compositions—perhaps the best link to their formation histories.

As this article in Nature points out, K2 has already had quite a run. Among its highlights: a system of three super-Earths orbiting a single star (EPIC 201367065, 150 light years away in the constellation Leo) and the discovery of the disintegrating remnants of a planetary system around a white dwarf (WD 1145+017, 570 light years away in Virgo).

Coming up in the spring, K2 begins a three-month period in which it switches from the transit method to gravitational microlensing. Here a foreground star passing in front of a more distant one acts as a lens, briefly brightening the background star; a planet orbiting the lensing star betrays itself as a short additional blip in that brightening. We'll keep a close eye on this effort, which will be coordinated with telescopes on the ground. The K2 team believes the campaign will identify between 85 and 120 planets in its short run.
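The smooth part of a microlensing event has a standard closed form for a single point lens and point source (the Paczyński light curve): the magnification is A(u) = (u² + 2) / (u√(u² + 4)), where u is the lens-source separation in units of the Einstein radius. The Python sketch below computes it. The event parameters are hypothetical, and a planet's signature, the brief extra deviation on top of this curve, needs a binary-lens model that this sketch does not attempt.

```python
import math


def magnification(u: float) -> float:
    """Point-source, point-lens (Paczynski) magnification at separation u (Einstein radii)."""
    return (u * u + 2.0) / (u * math.sqrt(u * u + 4.0))


def light_curve(times, t0: float, t_e: float, u0: float):
    """Magnification at each time for a lens moving in a straight line past the source.

    t0:  time of closest approach
    t_e: Einstein-radius crossing time (same units as times)
    u0:  minimum lens-source separation in Einstein radii (must be > 0)
    """
    curve = []
    for t in times:
        tau = (t - t0) / t_e
        u = math.sqrt(u0 * u0 + tau * tau)
        curve.append(magnification(u))
    return curve


if __name__ == "__main__":
    # Hypothetical single-lens event: peak at day 0, 20-day Einstein time, u0 = 0.1.
    days = list(range(-40, 41, 10))
    for day, amp in zip(days, light_curve(days, t0=0.0, t_e=20.0, u0=0.1)):
        print(f"day {day:+3d}: magnification {amp:.2f}")
```

The curve is symmetric about t0 and tends to 1 (no brightening) far from closest approach; a planetary companion would show up as a short, asymmetric spike riding on it.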

The Sinukoff paper is “Ten Multi-planet Systems from K2 Campaigns 1 & 2 and the Masses of Two Hot Super-Earths,” submitted to The Astrophysical Journal (preprint). For more on EPIC 201367065 and habitability questions, see Andrew LePage’s Habitable Planet Reality Check: Kepler’s New K2 Finds.

A summary of the initial analysis of data from the first four “campaigns” of Kepler’s K2 mission can be found here:

http://www.drewexmachina.com/2015/11/29/the-first-year-of-keplers-k2-mission/

Maybe there’s a way to engineer interplanetary probes and space telescopes so that they can handle, and constructively adapt to, unexpected internal and external changes in their environment? Since they routinely function for decades, perhaps this opens the door to a new design philosophy. We could design century probes: add one after another in orbit or on the surface of planet P, each able to cooperate with its older neighbors to build a long-term presence spanning several technology generations. If a person is fired or a company makes losses, there are often many ways to correct that in Earth's diverse and reactive economy. Maybe spacecraft can somehow be designed to be resiliently adaptive too, and maybe the more of them the better.

Kepler K2 seems to be even better than continuing the original Kepler mission! At least to some of the astronomical community: parts of the exoplanet community (those interested in nearby exoplanets), asteroseismologists and more. Just 150 years ago you could become a noted astronomer by discovering a single asteroid. But when Kepler K2 observes in the ecliptic, its view is littered with asteroids, comets and KBOs, which only disturb the stellar light curves when they pass through the point spread functions of the real targets. The field of view is too small to determine their orbits or distances, so they are just waste data. There’s just too much of this “pest” in the Solar System :-D

https://www.youtube.com/watch?v=AT-KVFGEqMA

Reminds me of the cosmic ray astronomer who complained that all of his astronomy colleagues systematically erase all of the data which he is interested in, because they consider it “bloody noise”!

Greg Laughlin has an article on the Systemic blog about the relative importance of transits and radial velocities in exoplanet detection: RVs definitely seem to have declined in importance as regards finding new systems.

From Andrew LePage’s article linked in the first comment, I see that Campaign 2 included several globular clusters in the field of view, including Messier 4, which is one of the nearest globular clusters and (so far) the only globular cluster known to host a planet. I wonder if any transiting planets will turn up in these clusters.

Pushing the frontier of what’s possible may allow us to see the planets in nearby binary star systems like Alpha Centauri: http://www.space.com/31565-hunting-exoplanets-alpha-centauri-binary-stars.html

Way to go K2. The shorter observation periods and microlensing observations actually illustrate a potential dichotomy that has divided the exoplanet community in the past and not helped previous, bigger initiatives. Is exoplanet science about ALL exoplanets: building up a detailed picture of their formation, their nature and even their star systems’ architecture, all of which look increasingly important in determining their fate, including (indeed especially) whether they may end up habitable, and where we sit in the hugely bigger picture? That means building towards the ultimate goal gradually and methodically. It’s already incredible how, with limited resources, innovation can give us so much detail about bodies that have only occasionally been seen and have only been known for twenty years. If missions like the current K2 and Gaia, and those yet to come such as PLATO, TESS and WFIRST, continue alongside ground-based surveys, by the end of the next decade we will likely know 70,000 or more exoplanets. Unbelievable but likely, and with graphs to boot telling us all about trends and detail.

Or is it to “go for gold” and try to find an Earth twin in the habitable zone with an atmosphere packed with biosignatures? Life! It’s a difficult question, as both approaches have their merits and strong advocates, and it’s a question the community will have to resolve, since united they stand. The big UVOIR telescope that we all crave will be in the next Decadal Survey, as will exoplanets. But there are other very plausible telescopes there, of a very different nature and with entirely different goals. So how near the top exoplanets sit depends on everyone involved agreeing on a cohesive strategy.

A few of these sightings will require confirmation, so hopefully Kepler will survive long enough to return to the Campaign 1 and 2 fields and beyond for a second look, to try for planets in longer orbits.

The idea of 70,000 known exoplanets boggles my mind. But then, so does the current number. Thanks for this update!

Belikov and Bendek have indeed created a software programme, Multi-Star Wavefront Control, to enhance the “post-processing” element of imaging binary systems like Alpha Centauri with a coronagraph. Unfortunately their proposal to use it, covered in a recent article I wrote for the site, is only at a low level of “maturity”, as NASA calls it (development, to the rest of us): TRL 3, Technology Readiness Level 3 on a scale that tops out at 9. To have a chance of getting the $140 million their idea needed to become reality, it would need to reach 6 or 7. Their proposal only looked at one system, and putting all your eggs in one basket, even a good basket, for $140 million was deemed too risky. WFIRST-AFTA is using the nine years until its launch to develop this very technology to the same level, and we should get images, and detail, of planets in other systems. The technology involved is near miraculous and will be one of the great technological achievements when complete. The problem is being allowed adequate time to use it, as there basically aren’t enough telescopes to go round. The ones there are awesome and getting better, as is the necessary software (it’s always a software problem!), as Belikov and Bendek show, so it’s got to be quality over quantity.

There are so many star systems that are completely unexplored as yet. I feel like a starving man on a cold winter’s day looking through a shop window at a big, fat, juicy cake.

Kepler is obviously a robust design. Launch a clone after upgrading the reaction wheels, on a SpaceX booster for half the price of the first, and aim it at a different section of the sky.

The ACEsat is a beautiful telescope, but look at what Damian Peach can do with a Celestron 14″ (35 cm) Schmidt-Cassegrain: http://www.damianpeach.com/

Now, for $140 million you could build 1,000 Earth-based ACE telescopes ($140,000 each), and at prime locations an 18″ (45 cm) should be able to match a space-based system. You can easily upgrade them and swap in different instruments, plus many astronomers would have easy online access to them. The unobstructed design is the best for planetary observing, and at high altitudes the PIAA coronagraph should work just as well. How about a dozen just to test the concept? I have a spare 20″ (50 cm) mirror!!!

70,000 is a conservative number, too. If Gaia is extended (and its original PI, Mike Perryman, now at Princeton and the author of the fine “Exoplanet Handbook”, thinks it can carry on for nearly ten years), it will find 70,000 on its own! Everyone sees WFIRST as a direct imaging mission, but its wide-field dark energy work will account for about 5,000 planets via Kepler-style transit photometry, to say nothing of the thousands more via microlensing. Another very exciting possibility if Gaia is extended is that its observations of nearer star systems (30 light years or so) can be combined with those of WFIRST to give sub-microarcsecond astrometric precision, sufficient to find planets down to Earth mass.
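Why sub-microarcsecond precision is the relevant regime can be checked with the standard astrometric signature formula: a planet of mass M_p orbiting a star of mass M_* at a AU displaces the star by roughly α ≈ (M_p/M_*)(a/d) arcseconds when the system is d parsecs away. The Python sketch below evaluates it for an Earth twin around a Sun-like star at 30 light years, the distance mentioned above. The constants are rounded, and the formula assumes the planet is much less massive than the star.

```python
# Astrometric signature of a planet (assumes planet mass << star mass).
EARTH_SUN_MASS_RATIO = 3.003e-6    # approximate Earth mass / Sun mass
PARSECS_PER_LIGHT_YEAR = 0.306601  # 1 pc is about 3.2616 light years


def astrometric_signature_uas(mass_ratio: float, a_au: float, distance_pc: float) -> float:
    """Amplitude of the star's reflex wobble in microarcseconds.

    alpha[arcsec] = (M_planet / M_star) * a[AU] / d[pc]
    """
    return mass_ratio * a_au / distance_pc * 1e6


if __name__ == "__main__":
    d_pc = 30 * PARSECS_PER_LIGHT_YEAR
    alpha = astrometric_signature_uas(EARTH_SUN_MASS_RATIO, 1.0, d_pc)
    print(f"Earth twin at 30 ly ({d_pc:.1f} pc): {alpha:.2f} microarcseconds")
```

The result comes out at about a third of a microarcsecond, which is why Earth-mass detections by astrometry need precision well below one microarcsecond.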

WFIRST is a hell of a mission, and there are still high hopes of using it with a starshade too, for another year of direct imaging in addition to the coronagraph, which is being pushed to the highest level of performance as we speak after receiving much more funding than expected in the recent budget. It’s certainly being built to last, too, in a simple modular design that should allow servicing.

That said, that is 70,000 planets minimum in fifteen years, yet no dedicated telescope to characterise them other than bits of time on JWST, clever adaptations of Spitzer and Hubble, and WFIRST via direct imaging. A few dozen planets characterised at best. The ESA ARIEL transit spectroscopy telescope concept has been shortlisted (not guaranteed) for a 2029 launch and can characterise planets down to super-Earths, and there is a MIDEX (NASA Medium-class Explorer) funding round starting next autumn which should attract a transit spectroscopy bid too (NASA’s exoplanet committee has a special interest group whose task is to develop an Explorer bid for just this event). It’s just a question of how far this smaller fund can be made to stretch. The good news is that this round is unusually large, at $250 million (compared with the usual $200 million), and the mission will launch by 2023 at the latest.

There was a previous unsuccessful MIDEX bid, FINESSE, and a similarly unsuccessful ESA bid, EChO. Both were very unlucky not to be selected (they were presented at a time when the drive was to discover as many planets as possible rather than to characterise their atmospheres) and are worth reading about (just search their names on Google; EChO has a good write-up on arXiv), as any future telescope of this kind will likely combine the two quite straightforward designs. With 70,000 planets we need more than one dedicated telescope. Even if we get two, neither will be big enough to characterise the thin atmosphere of an Earth-like planet, though a super-Earth around a nearby M dwarf might be within reach (Wolf 1061c if we get really lucky!). But at least we will hopefully begin to see a significant amount of characterisation next decade, with the much bigger JWST helping by targeting the most exciting discoveries, from TESS in particular.

Interesting what you are saying about ACEsat and smaller apertures, Mike. Go small and cheap is now the ethos. JPL/MIT, under Prof. Seager, are due to launch a very understated 6 cm, 6U CubeSat transit photometry telescope, ASTERIA, later this year. It’s small, but it has taken five years to get the prototype to operational level, and they’ve finally succeeded. It’s a watershed moment, as these things cost buttons compared with even ACEsat, and the ultimate plan is to have a fleet of 30 cm aperture telescopes, each dedicated to an individual star system for two years. The breakthrough came with the recent rapid development of the CMOS (complementary metal-oxide-semiconductor) sensor, until recently the poor cousin of CCDs but now very much on the up, not least because of its radiation tolerance, low power requirements and small size (it needs little ancillary electronics), and because it needs no expensive cooling.

The e2v company, which makes the state-of-the-art EMCCD sensors for WFIRST, has also developed a CMOS sensor for the JANUS camera on ESA’s JUICE mission, given that camera’s operation in Jupiter’s harsh radiation environment. It’s a sensor very much on the up. Stanford University has also been developing mini starshades for CubeSats. Combine that with your 30 cm LEO CubeSat telescope, with maybe more than one scope per star, and the characterisation problems will be over, and exoplanet science can be done as part of a PhD project! Watch ASTERIA closely: it’s the beginning of something very big (yet cheap). It will be pushed out of the ISS in the autumn.

EricSECTW: Kepler is indeed robust, and so is ACEsat, with SIX reaction wheels supplemented by micro-thrusters. Bendek and Belikov gave a good presentation, available on YouTube, at the SETI Institute in December on imaging Alpha Centauri. What cost them was not precision imaging but not developing their software algorithm far enough. The whole mission depended on something that hadn’t been tested enough, and it was sadly rejected. For a software issue! ($140 million isn’t JWST’s $8.5 billion, perhaps, but it’s still a lot of money.)

Unfortunately, when it comes to precision imaging down to picometre precision, the problem isn’t so much robustness or redundancy as vibration. Reaction wheels cause it, and at a contrast ratio of 10¹¹ that is a big problem: indeed the biggest problem of all when it comes to direct imaging, and what is currently holding it back. Even minor vibrations caused by heat conduction in the mirror matter at this level. That’s why the rather cumbersome and complex starshade idea is still being pursued: there are real concerns that the kind of precise, vibration-free imaging required for Earth-mass planets may not be possible with coronagraphs, which impose stringent requirements on mirror smoothness and stability. Starshades ease this a lot, though in return you need accurate formation flying of self-propelled “space flowers” fifty-plus metres across, several tens of thousands of kilometres away from the telescope, for weeks at a time. It’s a major technological effort to get this far, and it will be a great one when eventually successful.
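The reason the shade must fly so far away is largely geometric: seen from the telescope, it has to cover the star while leaving a planet just beyond its edge exposed. A first-order estimate of the inner working angle is simply the shade’s angular radius. The Python sketch below uses a 50 m shade at 50,000 km as illustrative numbers, loosely in line with the figures above rather than any specific mission design, and it ignores diffraction, which in practice drives the petal shape and the required distance.

```python
import math

ARCSEC_PER_RADIAN = 180.0 / math.pi * 3600.0


def starshade_iwa_arcsec(shade_diameter_m: float, separation_m: float) -> float:
    """Geometric inner working angle: the shade's angular radius seen from the telescope."""
    return math.atan((shade_diameter_m / 2.0) / separation_m) * ARCSEC_PER_RADIAN


def planet_separation_arcsec(orbit_au: float, distance_pc: float) -> float:
    """Star-planet angular separation; 1 AU seen from 1 pc subtends 1 arcsec by definition."""
    return orbit_au / distance_pc


if __name__ == "__main__":
    # Illustrative numbers only: a 50 m shade flying 50,000 km from the telescope.
    iwa = starshade_iwa_arcsec(50.0, 50_000e3)
    sep = planet_separation_arcsec(1.0, 10.0)  # Earth-like orbit around a star 10 pc away
    print(f"inner working angle: {iwa:.3f} arcsec")
    print(f"planet separation:   {sep:.3f} arcsec")
    print("planet outside shade edge" if sep > iwa else "planet hidden by shade")
```

With these numbers the shade’s edge (about 0.10 arcsec) sits right at the separation of an Earth analog 10 pc away, which shows why shade size and flight distance have to be chosen together: a larger separation shrinks the angle but makes formation flying harder.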

As amazing as K2 is, we need to all remember it’s just one more reaction wheel failure away from extinction. We should treasure every successful campaign Kepler completes as if it were its last.


How about an array of ACE telescopes set up like the Giant Magellan Telescope, or similar to the Dragonfly Telephoto Array used for ultra-low surface brightness imaging? All combined with deformable mirrors: just how deep could these go when put in the world’s finest locations for a stable atmosphere? Think of mountain-top observatories located above frequently occurring temperature inversion layers, where the prevailing winds have crossed many miles of ocean. Sites such as these (La Palma, Tenerife, Hawaii, Paranal etc.) frequently enjoy superb seeing much of the year (with seeing as good as 0.11 arcseconds measured at times) thanks to laminar flow off the ocean. Sea-level locations on shorelines where the prevailing winds have crossed many miles of ocean (Florida, the Caribbean islands, the Canary Islands etc.) can be almost as good, and very consistent and stable conditions generally prevail there. Another major factor is generally unvarying weather patterns, dominated by large anticyclones (high-pressure systems). Areas outside these large high-pressure systems have more variable weather and are more prone to variable atmospheric stability. I have been lucky here in Bohol, Philippines, with the northeasterly winds off the ocean giving superb seeing through my 12.5″ (32 cm) telescope.

Other, less well-known locations where excellent stability prevails include the island of Madeira’s highest point (Encumeada Alta, 1800 m), where seeing is better than 1 arcsecond 50% of the time. At Mount Maidanak (Uzbekistan, 2600 m) the median seeing observed from 1996 to 2000 was just 0.69 arcseconds, making it a site almost as good as Paranal and La Palma.

Yes, I am definitely looking forward to ASTERIA and its results. My first telescope was a 2.5″ (6 cm) Sears refractor made in Japan, given to me by my father back in 1966. Instruments have improved so much in the last 50 years, and I hope to see, before too long, either our first contact or at least details of nearby planets. My favorite book was Intelligent Life in the Universe by Shklovskii and Sagan, especially the part about what has been the greatest leap in humanity’s abilities: the photo-engraving of microcircuits. Now we have CUDA GPUs that can handle these tasks for an advanced Earth-based ACE.

Dear Mike, you’re not far wrong. Ignas Snellen of Leiden University (and sometime Centauri Dreams author) hopes to develop large arrays of “flux collectors”: telescopes without sufficient finish to image directly, which they don’t need, as ultimately all exoplanet routes lead to spectroscopic characterisation. Flux collectors need far less polishing and cost much less than conventional imaging telescopes, so huge arrays of them can collect masses of precious photons at a favourable site like the Atacama Desert, and the light can then undergo high-resolution spectroscopic analysis.

After Mauna Kea, and allowing for all the imaging requirements you allude to, La Palma has the next best viewing in the Northern Hemisphere, and with all the political problems dogging Hawaiian sites at present, it may gain in importance.

In terms of direct imaging from the ground, the most potent equipment will end up on the E-ELT, with EPICS (adaptive optics, polarimeters and coronagraphs in tandem) for visible light and METIS for the mid-infrared. METIS may even be a first-generation instrument, but EPICS isn’t likely to be available until 2030. There isn’t enough processing power to run it at present!

Going back to ACEsat, it’s the Nyquist Multi-Star Wavefront Control system that is all-important for the future. It allows binary systems like the uniquely close Alpha Centauri stars to be both imaged and subjected to high-resolution spectroscopy from the visible to the mid-infrared, where many important biosignatures (O3 and CH4 especially) appear. Any habitable-zone planets there are close enough that EPICS and METIS could characterise them, if the algorithm can mature enough to get onto WFIRST first for discovery and then onto the E-ELT for characterisation. (It certainly hasn’t been considered yet, nor will it be for some time, but if all goes well Alpha Centauri is too good to ignore, so if there is any way to image it I’m sure it will be pursued, though the ultimate decision makers currently have far more pressing issues to occupy them.) Early days. The important thing is to get the algorithm up to scratch. It’s software, so it can be uploaded at any point, even after launch. So we will have to see. It certainly needs improving from a measly TRL 3 to somewhere near 6 or 7 to be considered for imaging use at some point, hopefully on WFIRST, if its coronagraphs can in turn be improved by that critical one order of magnitude in contrast to see terrestrial planets in the habitable zones of nearby stars at least. (Development on both is proceeding at breakneck pace and is ahead of schedule, and the science team is very motivated to image terrestrial planets if at all possible, with Alpha Centauri the obvious choice, but ONLY if the multi-star algorithm can be made to work reliably on rather bigger telescopes than ACEsat.) I’m an optimist, though, and from my dealings with everyone involved in these projects, they are all incredibly motivated and, critically, innovative too, so who knows what can be achieved in even ten years. Moore’s law alone says big things will happen with computers, and they run algorithms.

In the meantime I’m greedy, and with an unusually large $250 million MIDEX round coming up in the autumn, I hope to see a transit spectroscopy telescope bid submitted so we can get cracking on characterising lots of K2 and TESS targets.