Frank Wilczek has used the neologism ‘quintelligence’ to refer to the kind of sentience that might grow out of artificial intelligence and neural networks using genetic algorithms. I seem to remember running across Wilczek’s term in one of Paul Davies’ books, though I can’t remember which. In any case, Davies has speculated himself about what such intelligences might look like, located in interstellar space and exploiting ultracool temperatures.

A SETI target? If so, how would we spot such a civilization?

Wilczek is someone I listen to carefully. Now at MIT, he’s a mathematician and theoretical physicist who was awarded the Nobel Prize in Physics in 2004, along with David Gross and David Politzer, for work on the strong interaction. He’s also the author of several books explicating modern physics to lay readers. I’ve read his The Lightness of Being: Mass, Ether, and the Unification of Forces (Basic Books, 2008) and found it densely packed but rewarding. I haven’t yet tackled 2015’s A Beautiful Question: Finding Nature’s Deep Design.

Perhaps you saw Wilczek’s recent piece in The Wall Street Journal, sent my way by Michael Michaud. Here we find the scientist going at the Fermi question that we have tackled so many times in these pages, always coming back to the issue that we have a sample of one when it comes to life in the universe, much less technological society, and our sample is right here on Earth. For the record, Wilczek doesn’t buy the idea that life is unusual; in fact, he states not only that he thinks life is common, but also makes the case for advanced civilizations:

Generalized intelligence, that produces technology, took a lot longer to develop, however, and the road from amoebas to hominids is littered with evolutionary accidents. So maybe we’re our galaxy’s only example. Maybe. But since evolution has supported many wild and sophisticated experiments, and because the experiment of intelligence brings spectacular adaptive success, I suspect the opposite. Plenty of older technological civilizations are out there.

Civilizations may, of course, develop and then, in Wilczek’s phrase, ‘flame out,’ just as Edward Gibbon would describe the fall of Rome as “the natural and inevitable effect of immoderate greatness. . . . The stupendous fabric yielded to the pressure of its own weight.” We can pile evidence onto that one, from the British and Spanish empires to the decline of numerous societies like the Aztec and the Mayan. Catastrophe is always a possible human outcome.

But is it an outcome for non-human technological societies? Wilczek doubts that, preferring to hark back to the idea with which we opened. The most advanced quantum computation — quintelligence — he believes, works best where it is cold and dark. And a civilization based on what we would today call artificial intelligence may be one that basically wants to be left alone.

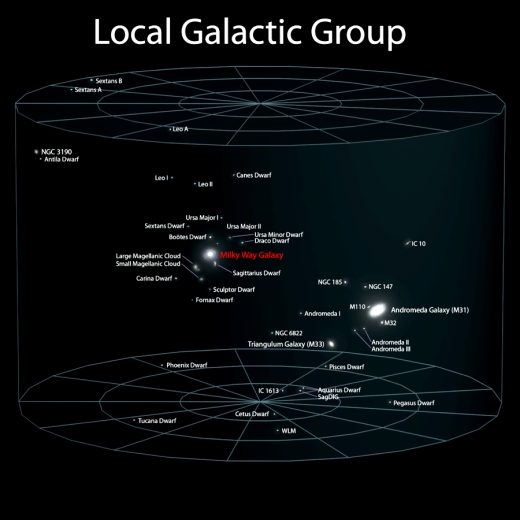

Image: The Local Group of galaxies. Is the most likely place for advanced civilization to be found in the immensities between stars and galaxies? Credit: Andrew Z. Colvin.

All this is, like all Fermi question talk, no more than speculation, but it’s interesting speculation, for Wilczek goes on to discuss the notion that one outcome for a hyper-advanced civilization may be to embrace the small. After all, the speed of light is a limit to communications, and effective computation involves communications that are affected by that limit. The implication: Fast AI thinking works best when it occurs in relatively small spaces. Thus:

Consider a computer operating at a speed of 10 gigahertz, which is not far from what you can buy today. In the time between its computational steps, light can travel just over an inch. Accordingly, powerful thinking entities that obey the laws of physics, and which need to exchange up-to-date information, can’t be spaced much farther apart than that. Thinkers at the vanguard of a hyper-advanced technology, striving to be both quick-witted and coherent, would keep that technology small.
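The arithmetic in that passage is easy to check. Here is a minimal sketch in Python, assuming only the defined value of the speed of light in vacuum and the 10 gigahertz clock rate from the quote; the function name `light_travel_per_cycle` is my own label, not anything from Wilczek's article.

```python
# Speed of light in vacuum, metres per second (exact by SI definition).
C_M_PER_S = 299_792_458
# One inch in metres (exact by definition).
INCH_M = 0.0254


def light_travel_per_cycle(freq_hz: float) -> float:
    """Distance in metres that light covers during one clock period."""
    return C_M_PER_S / freq_hz


# Clock rate taken from the quoted passage: 10 gigahertz.
distance_m = light_travel_per_cycle(10e9)
print(f"{distance_m * 100:.2f} cm per cycle")
print(f"{distance_m / INCH_M:.2f} inches per cycle")
```

The result is about 3 centimeters, or roughly 1.18 inches, which agrees with the "just over an inch" in the quote. Signals in real hardware move slower than light in vacuum, so this is an upper bound on the spacing.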

A civilization based, then, on information processing would achieve its highest gains by going small in search of the highest levels of speed and integration. We’re now back out in the interstellar wastelands, which may in this scenario actually contain advanced and utterly inconspicuous intelligences. As I mentioned earlier, it’s hard to see how SETI finds these.

Unstated in Wilczek’s article is a different issue. Let’s concede the possibility of all but invisible hyper-intelligence elsewhere in the cosmos. We don’t know how long it would take to develop such a civilization, moving presumably out of its initial biological state into the realm of computation and AI. Surely along the way, there would still be societies in biological form leaving detectable traces of themselves. Or should we assume that the Singularity really is near enough that even a culture like ours may be succeeded by AI in the cosmic blink of an eye?

I don’t want to get into a discussion on the prevalence of ETI, given that it is too often inextricably linked to wishful thinking. However, when considering the “hyperintelligent,” I find it much more enthralling to ponder what computational (cerebral) priorities they might have.

Yeah, too much talk, let’s develop interstellar travel and explore!

Yes, let’s get to it! We need more advances in materials science and energy generation. We get it: computers get faster and smaller on a regular basis, reliably providing us with new gadgets, but we need similar advances in other technological arenas! As Peter Thiel said upon pulling an iPhone out of his pocket at a tech conference: “I don’t consider this to be a technological breakthrough. Compare this with the Apollo space program.”

I can’t agree more! And I’m not alone: http://buzzaldrin.com/images/MITtechrev_web.jpg

While brains should be compact, that doesn’t mean the body needs to be. If quantum computers show promise for AI, then this c limit only applies to other mechanisms, not to thinking.

We might also think of civilizations being visible by their size. Think of each dust-like individual as part of a large dust cloud that could be detected. Unless the individuals can avoid thermodynamics, their thought will require energy and so the cloud will radiate at a higher temperature than would an inert dust cloud.

If civilizations are curious, they will travel, and there is no reason why they wouldn’t use large vessels, even planet-sized ones, to do so.

Finally, all that thinking and no action? I don’t buy the idea of thinking in bottles. Civilizations will alter their environment by their actions, even if subtle.

Since biology would always precede AI, there will always be biological intelligence out there. How long it lasts in that form should possibly be another variable in the Drake equation, shouldn’t it? Biological species can’t miniaturize themselves and so there should be some finite number of them in our galaxy. But time and distance are surely the key variables. If life is very common we should have a chance of finding something we would think of as sentient, but it may take a very long time in terms of the human lifespan. We should keep looking but shouldn’t get depressed if we don’t find it in what we think of as a reasonable length of time.

“And a civilization based on what we would today call artificial intelligence may be one that basically wants to be left alone.”

A civilization is not a single agent. Gah, why do brilliant scientists have to be so stupid about social science? A civilization is composed of a group of individuals, each with their own priorities. Try to imagine our civilization at the point when we can build Von Neumann probes that set up shop in every star system of the Milky Way. 99.999% of humans don’t see the value of building such a thing, but that leaves thousands who do. So it’s gonna happen. And that kind of thinking, so easy to apply to us, must be applied to alien civilizations as well. This handwavy assumption that a civilization has one monolithic set of priorities is indefensible and must stop.

Yeah, maybe hanging out in cold parts of the Milky Way is somehow optimal for computing-based civilization. But that civilization is bound to have individuals who get fed up with their neighbors and decide to use the untapped energy from all those stars. I mean, why waste it? You could do a lot of computing with all that starlight and planetary material. It just takes *one individual* to decide to go for it, and a mere million years later, the entire galaxy is paved over.

Nobody, not even the most brutal dictator, can stop every individual from this sort of escape.

Sending Von Neumann nano-machines to somebody’s home star system is a very good excuse for starting an interstellar war. As for those dark horses poking into other species’ problems, the late Iain Banks had the “slap drone” to stop that kind of bad behavior.

That’s just what I’m talking about. Think through what you’re proposing. First, you assume that a subtle argument that persuades you (aliens might not like a visit from our technology) will persuade 100% of all members of a civilization, forever. Do you have the slightest reason to find this defensible? Honestly, it doesn’t even persuade me. You could just put a clearly marked off-switch (metaphorically) on each drone, so locals have a choice about whether it does its thing in their neighborhood. Like I said in the parent post: it’s not enough to convince 99.999% of everyone.

Second: the slap drone. OK, I’m granting that you could make one work. Could you make it work infallibly? If somebody were talking about making slap drones for real, the first thing I would think is: Crap, I better move to some place where I’m out of the range of those fucking slap drones. I wouldn’t be alone. That splintering would defeat the purpose pretty quickly. Imagine Obama proposing slap drones for America. How do you think this would be received? How about Trump? And that’s just America – one fairly docile human jurisdiction. Of course, I don’t know what aliens are like. Maybe they’re oppression-loving super-conformists, but I have no reason to think this. For all I know, they’re just as likely to see us as oppression-loving super-conformists. Probably there’s a range, and given no further data, we should assume that we’re in the fat part of the bell curve. And that’s yet another reason to never ever be satisfied with a rationale of the form “Aliens must have all decided to x.” For all values of x, humans have never all decided to x.

The State Of The Art deals with this problem partially. Anyway, no good old US citizen has ever entered North Korea bringing an AK-47 to hunt bears; a certain organization in the US, or the North Koreans themselves, would stop this.

The balance between individual freedom and the possibility of interstellar war or the survival of the species is obvious. Any Earth sympathizer who wanted to give our civilization wormhole tech or a blueprint of Q-AGI would be either stopped or eliminated. Total individual freedom only exists in VR, not in reality.

If an advanced ETI – heck, not even one much more advanced than 21st Century humanity – entered our Sol system to utilize its resources and decided to either take us out of the picture or at least keep us from interfering with their plans, they would find little in the way of effective resistance from the natives occupying Sol 3.

Attach rocket motors with guidance computers to selected space rocks and aim them at our major population centers. Or drop a few in the oceans and let the resulting giant waves wipe out all the surrounding coastline communities in one or two shots. This is if you want to cripple the humans without destroying all of Earth.

Despite decades of talk, we still have no effective NEO deflection/destruction plans in place: Note how we still read of new planetoids heading past our planet being detected just weeks or less in advance, with no plans to stop or remove them in case something somehow went very wrong.

If you want to get rid of humanity and don’t care about the other flora and fauna of Earth – you just want the minerals and other inorganic resources – aim a single starship at our planet moving at relativistic speeds and let the resulting kinetic energy of the impact sterilize the entire surface of life.

Or genetically engineer a deadly virus that takes out only humans and wait.

There are many options for an ETI that wants Earth and the Sol system and doesn’t want to share. Our weapons and monitoring systems are designed to respectively attack and look for terrestrial enemies, not alien invaders who have all the advantages of the ultimate high ground.

The alien invaders of science fiction are generally stupid in their tactics because if they went with the methods described above, it would be a very short war and the “plucky” humans would be plucked.

If I understand David H’s point correctly, even if a faction did do one of those things, why would another faction try to mitigate it? When we wage war on other countries, organizations like MSF and the Red Cross step in to mitigate the harm. The UN may try to intercede and broker a peace. And so on.

I think it depends on the rarity of abiogenesis and the evolution of human-type consciousness. If it is rare enough, then a new emergence may be uniquely valuable and worth protecting. Imagine how easy it would be to protect childhood from exploitation or existential threat if there were only one child? Childhood just has to be valuable enough. I think an ancient people would be fascinated by us, a glimpse into their perhaps lost past. A synthetic, computational people who are the product of biological people may be the least likely to show themselves, fearing how their contact may shape the synthetic, computational people we produce or become.

It strikes me that many ideations for ETI resemble old men waging conquests and when we fail to find evidence of these old men conquering the heavens we doubt anyone is there.

Von Neumann probes or similar approaches such as sentinel probes may actually allow or promote peaceful interstellar relations because they would allow neighbors to observe each other and collect intelligence concerning motivations and capabilities. However that does not imply that a civilization capable of Von Neumann probes would seek formal relations with every or any other civilization. The value of maintaining formal relations would be a much better predictor than the capability of maintaining formal relations. For a civilization of super intelligent, immortal, synthetic people; there may be little to no value or very specific but difficult to realize value in short lived biologic people.

I agree that a civilization comprised of individuals shouldn’t, can’t be approached as a monolithic personality. However, we also can’t assume that, for any given time frame, all individual personalities or motivations will be represented. As an extreme example of this, personalities motivated by self harm or suicide will be minimally represented. If there is any competition for resources, personalities motivated towards goals that are relatively less productive will be minimally represented. Less intelligent, less rational, less capable personalities will be eclipsed or eliminated by their more intelligent, rational, and capable peers.

I think the qualities of the behavior or goal are a better predictor of how commonly the behavior or goal will be represented. What is the cost of the behavior, what is the possible RoI, are there more productive behaviors? For instance, if the ultimate environment for an AI is a Matrioshka Brain, then we should expect to find lots of them. However, if it isn’t, we should expect to find none or only a few. Yes, a personality may decide it wants to be slower thinking and less capable and build a Matrioshka Brain, but that slower thinking, less capable personality will never displace its faster, more capable peers.

“It just takes *one individual* to decide to go for it, and a mere million years later, the entire galaxy is paved over.”

This statement describes a galaxy that represents the monolithic will of one personality, the very thing you argue can’t be expected.

Could we even consider hyper capable individuals a filter, as an explanation for the supposed silence? If technological civilizations are comprised of individuals, we should expect the capabilities of the individual to progress proportionally with the capabilities of the civilization. Democratization of future technology would allow many people to build pathogens that could eliminate humanity. Perhaps only civilizations that eliminate the psychopath, sociopath or deficiently rational survive.

Our biology comes with three apparent imperatives: survival, growth (increase in biomass) and replication. Other imperatives could be subliminal. Presuming the same imperatives universally operant among all biologies, how much can they be extended to post-biological intelligences?

Much of the form and course taken by such intelligences will depend on the operation of their imperatives. To the extent that imperatives can be programmed, can evolve as programs and/or can be overcome as programs, they will make additional variables contributing to the form and course of those intelligences.

I think that probably the most common form of AI will be that of biological species that have substituted their biological “neurons” with artificial ones, for reasons of safety, velocity of thought, etc. Once you start developing such technology (and we are already doing it) there is a strong evolutionary pressure to use it.

I don’t think anyone or anything will want to hang around in intergalactic space. There is nothing there but very cold, widely spaced atoms and molecules. We get the same thing in interstellar space which is much nearer to useful materials for survival for life or AI.

I am biased against AI becoming smarter than us. AI does not have a creative mind which generates ideas. When an AI starts having epiphanies, flashes of insight or a scientific intuition let me know. I’d like to ask it some questions to answer some unknowns. What about thought experiments?

Intergalactic space may surprise us in terms of what is there. Astronomers used to think interplanetary space was pretty dull too. Oceanographers used to think the bottoms of the oceans were essentially lifeless. Then both parties started directly exploring those realms and surprise! I know the regions between galaxies are a wee bit harder to get to at the moment, but still.

Does AI have to be “smart” (conscious, aware) to still be effective? Give it enough sophistication and we may not be able to tell the difference. Already computer programs are good enough to pass the Turing Test or at least do well enough and I am not aware of them being actually “aware.”

Read it and weep for humanity…

Human Or Machine: Can You Tell Who Wrote These Poems? : All …

https://www.npr.org/sections/…/human-or-machine-can-you-tell-who-wrote-these-poems

Jun 27, 2016 – Can a computer write a sonnet that’s indistinguishable from what a human can produce? … NPR’s Robert Siegel, host of All Things Considered, served as one of the judges of the competition. Each judge was asked to read 10 sonnets and decide whether they were written by man or machine.

“AI does not have a creative mind which generates ideas…”

Yet. ;)

The interstellar gas is mostly H and He, with 1.5 percent heavier elements and one percent dust (per Wikipedia).

Some people are going to be real disappointed when we finally meet an alien race and they turn out to just be basic humanoids with faults and problems of their own. Even worse, they may actually look up to us!

Actually they will probably be thrilled because then they can cosplay Star Trek and Star Wars for real.

Face it, humans are not suited to extended space travel; too big, variable psychology, require very narrow range of temperature and pressure, radiation readily kills us, reproduce in a few digits, need complex sustenance that we must digest, and we don’t live long. This results in large ships that will struggle to achieve even 0.1c, and we cannot fathom an effort that, say, would take many, many generations to achieve.

Flip all these for something more suited to space travel: you’d want to be very small, long lived, capable of long dormant periods, tolerant of wide range of temperature and pressure, either tolerate radiation or be able to produce rapidly to outpace its effects, reproduce asexually, capable of mutating when environment requires, function even when entirely alone, get sustenance from more basic “food” maybe even from multiple chemical or photochemical sources. Perhaps a supercomplex microorganism or something one order of magnitude below the smallest insect.

I agree that humans don’t travel well, but it turns out that we’re pretty easy to assemble just about anywhere in the neighborhood of a star. You just need some data, a lab with year 2100 tech, and a few pounds of the universe’s most common elements.

Who is going to raise those kids? What if they grow up and decide they didn’t want to do this for a lifetime occupation?

Arthur C. Clarke advocated this (that minimal starships carrying just the “seeds” be sent to other stars, with the babies being artificially conceived twenty years before arrival and brought up by cybernetic nurses that would teach them their inheritance and destiny when they were capable of understanding it) in “The Promise of Space.” He added–regarding the morality of such actions–that “What to one era might be a cold-blooded sacrifice might to another be a great and glorious adventure.” Maybe so, but I wouldn’t care to live in the latter sort of society…

Unless we can perfect androids to look and act just like us, baseline humans will require other humans to be taken care of and raised to adulthood.

With all due respect to Clarke, his failure to expand upon what these “cybernetic nurses” will be like is no more helpful than a typical science fiction story that involves FTL propulsion but fails to provide the details on how such a thing can be built and function.

If we want to get to the stars, especially in person, we need the details and the research. Vague generalities pushed off to some ambiguous future no longer cut it. Otherwise this will all remain fiction.

While humans are not monkeys, we know that monkeys can be “raised” by artificial means, just needing a warm, soft proxy for a mother. I see no technological reason why the first generation of humans could not be raised that way. After that, they will have real mothers if needed.

Now that artificial wombs are being demonstrated, we are well on our way to solving the maturation of embryos at the target destination. It is the getting there that remains out of reach.

To ljk and Alex Tolley (regarding Clarke’s “seeds-carrying starship” idea): The only situation I can see in which doing such a drastic thing would be acceptable would be if some predictable, certain, and otherwise-impossible-to-escape catastrophe was going to extinguish all life in the solar system. (In his novel “The Songs of Distant Earth,” the Sun going nova was that catastrophe [until the apparent “deep neutrino deficiency” of the Sun was figured out some years ago, this *was* considered a possible–although thankfully, not too likely–future outcome].) Also:

It will be a long time–if ever–until android surrogate mothers (and fathers…) effective enough to rear children to adulthood become a reality. If such automatons ever became necessary on *Earth* our world will be in truly dire straits, and:

Even if such “parentbots” existed now, denying children real, living parents or guardians, even adoptive or foster ones, would be unethical (exceptions might be: temporarily monitoring very ill babies if many pediatric nurses at a hospital were out sick, monitoring children at a busy orphanage, etc.). The artificial womb (that link is very helpful–Clarke even postulated such a device in his discussion of seedships back then!) sounds like a wonderful invention; it could be a boon to children-desiring couples who, due to illness or accident, couldn’t “do it the old-fashioned way,” but:

Other than for that, or as a last-ditch effort to ensure the survival of humanity elsewhere (or to at least create a chance for that) if Earth’s ‘time was up,’ it would be unethical and unkind to send out such seedships. If (a big “if”), however, human beings ever colonize the stars willingly, inventions like the artificial womb could enable living parents to have any (reasonable) number of children at once, which could help to bolster a colony’s population more quickly than the old-fashioned way would (who knows–a future jealousy among interstellar colonist children might be “real-womb envy…” :-) ).

This scenario also assumes that people sent out on an interstellar colonizing mission – and if this also happens to be done via a multigenerational starship – would automatically want to have children. And that these children born en route, growing up to learn that it won’t be them colonizing a new planet, would want to be little better than links in a chain they would never get to actually enjoy or benefit from.

Not sure how many sociologists and related professional types are here, but I know that most older science fiction with these scenarios was written by men who just assumed, based on their cultural and period upbringing, that men and women would have and obey traditional roles of wife, husband, mother, and father.

One of my favorite stories of the genre, Robert Heinlein’s Orphans of the Sky, had the women aboard that Worldship act like and be treated as little better than breeding animals. This included physical beatings to keep them in line. None of them were even modest characters after all that.

Amazing how they could have the most fantastic technologies or mind-bending locales, but men and women would behave like it is 1925, or earlier.

Sixty years after Sputnik 1 and we have yet to have one single lunar or planetary colony, or even a serious long-term stay in Earth orbit – and what we are learning from the ones who are up there six months to one year is showing some alarming physiological signs. This should not be surprising, seeing as no one sent into space so far has been enhanced in any way to truly live in the microgravity environment of space.

Yet these same experts both literary and scientific/engineering assume they know how a centuries-long and ultimately permanent manned interstellar colony voyage will function. Lots of focus on the technology, not so much on the generations of humans who will have to endure it.

And now we consider sending robot ships with unborn humans to the stars and assume that magic future technology will develop and raise these children to functioning adulthood to create and raise future generations on some alien world. Am I the only one who sees some rather major issues here?

I consider the whole idea of generational ships already outdated. We are on the verge of a revolution in medicine. Aging will be basically cured in probably 20-25 years. And anyway the number of children per woman worldwide will fall below replacement rate probably before that, maybe in only 10 years (it’s just below 2.5 now and replacement rate is 2.3 with current mortality rates). So all this discussion is quite outdated.

Do you have sources for these claims? As with fusion power, I have been hearing those 20 to 25 years from now claims about magical immortality drugs for the last fifty years.

As for the population issue, I have read just the opposite as of recent years: That instead of plateauing around 2050 as the so-called experts once claimed, now the human population is rising ever upward, with 9 billion humans on Earth by 2050 and over 10 billion by 2100.

So I hope you don’t mind if I hold off on stamping the ideas I posted above as outdated for a while, for the reasons I just stated.

A further comment on immortality: Just as with the psychological states of the generations of humans living aboard a long-duration manned interstellar mission, how many have considered the consequences of living virtually forever – especially for a species that averages a mere 80 years of life and has only been around as a civilized species for about five thousand years?

This is like the dream of wanting to live on a tropical island indefinitely. Sure, it sounds nice and is for a while, but what happens when you have endless days of sun, sand, and relaxation? And will everyone get to live forever, or just a selected few like the super rich, who get most everything else already? And if everyone could live forever, what will that do to our already overpopulated society?

You think people who can live indefinitely will want to be stuck on a starship for centuries? Maybe they will immerse themselves in virtual reality, right? Will they still want to complete the original mission if their fantasized worlds are more compelling – and feel just as real?

Yet another idea blithely thrown out there without thinking of all the parameters and consequences for those who might actually have to endure them one day.

> humans are not suited to extended space travel; too big, variable psychology,

cryonics

> require very narrow range of temperature and pressure,

genetic engineering, cyborgs

> radiation readily kills us,

shielding, WILT ( https://www.ncbi.nlm.nih.gov/pubmed/15970505 )

> reproduce in a few digits,

we don’t need such fast reproduction

> need complex sustenance that we must digest,

cyborgs

> and we don’t live long

cyborgs, SENS ( https://www.youtube.com/watch?v=pL3DW6-xzLc )

Nothing’s said here about energy sources. Could be nuclear but if it’s very long term you run into the half-life problem. Thermonuclear should be available to such a civilization and likely they could get many centuries out of an ice planet but for hundreds of thousands/millions of years maybe they’d occasionally need to venture over to a nearby source of mass. If they want to be as invisible as possible that would rule out some of the more exciting options. Perhaps a small long-lived star inside nested Dyson spheres?

You are HUGELY underestimating energy resources. For example, even if all the energy we use today came from uranium, there would be enough affordable uranium in Earth’s oceans for at least 5 billion years. And we have even more thorium, deuterium, …

Even a small and inconspicuous AI would need energy. Unless it has compact devices that can harness the zero-point energy of the void in intergalactic space in an extremely efficient manner that does not emit a lot of waste heat, it would need to stay not too far from luminous objects such as stars.

I am more in favour of an artificial civilisation living close to hypermassive black holes in the centre of galaxies, like in G. Benford’s Galactic Center saga. That would make sense, as these are very energetic objects, and time dilation and radiation around those monsters would allow for interesting computing strategies: https://phys.org/news/2017-11-black-holes-spacetime-quantum.html

In addition, the spaces between galaxies are farthest away from supermassive black holes, GRBs, supernovas, and other nasties that tend to go bang in the night.

If you have the prospect of immortality, you are probably going to be more selective in choosing your company.

And thinking long term, you might not want to hang around raging wildfires where most of the available H and He fuel supply is being rapidly squandered. Maybe brown dwarfs or gaseous rogue planets might make better long-term residences.

Quoting Nelson Bridwell on February 22, 2018, 6:10:

“Maybe brown dwarfs or gaseous rogue planets might make better long-term residences.”

Yet another compelling reason to aim our instruments of SETI less often at yellow dwarf suns and more often at celestial objects once considered inhospitable to life. Especially since the astronomical community is finally starting to get on board with futuristic technological civilization concepts that science fiction authors have been bandying about for decades.

Computation requires power. While interstellar space is great if you want cold and isolation, it’s rather lacking in handy power sources.

So a computational society would likely have infrastructure around stars to generate high quality power, and beam it out to sites in interstellar space, where it would be used for computation, and then result in waste heat in the form of long wave IR.

So, three points for detection: Power conversion equipment near stars, power beams, and waste heat.

Cryo planets like Pluto (sorry, not playing around with the fad of pretending it’s not a planet) would also be good sites, because they’ll provide high quality heat sinks.

Globular star clusters may provide the available resources plus the relative closeness and their very ancient ages that would be ideal for Artilects. In Omega Centauri’s case, it may be the remnant of a galaxy “consumed” by the Milky Way, so OC may have brought its own ETI with it.

Robert Bradbury wrote a paper on how ETI at Kardashev Type II and III level civilizations might use globular star clusters for resources due to the relative closeness of so many star systems. The same concept may work for open clusters, too.

“Globular Clusters and Astroengineering” (July, 2001)

I cannot find the paper I mention online as it seems Bradbury’s archives disappeared shortly after his death, a major loss if they are truly gone. I hope someone will correct me on this.

Related articles from this blog:

https://centauri-dreams.org/2014/01/15/cluster-planets-what-they-tell-us/

https://centauri-dreams.org/2016/01/06/globular-clusters-home-to-intelligent-life/

Have you tried the wayback machine?

Yes I did, but I appreciate the suggestion. I tried numerous parameters but nothing came up. Hopefully someone somewhere has saved his writings.

At least this important paper he wrote is still online:

http://www.gwern.net/docs/ai/1999-bradbury-matrioshkabrains.pdf

I have long been fascinated with the idea of Dyson Spheres/Shells/Swarms, Matrioshka Brains, and Jupiter Brains.

I became especially fascinated circa 1999 when Robert Bradbury and others took the concept way beyond Freeman Dyson’s original plan of millions of separate enclosed habitats encircling a star to capture all its radiating energy housing future humans and other organic life forms.

In their visions, Dysons were not merely structures for housing organics and giving them lots of extra land to run around on, they were beings unto themselves! Massive “brains” with far more computing power than anything humanity could come up with even if they could all work together (now there is a science fiction concept that seems more fictiony than ever these days).

You may think what you will about the concept, but what I like about it is that if nothing else it helped to break the hoary old paradigm of aliens as just variations of humans – and giving such creatures extra heads or tentacles for appendages was hardly a creative breakthrough.

Food for thought:

http://www.gwern.net/docs/ai/1999-bradbury-matrioshkabrains.pdf

https://www.spiedigitallibrary.org/conference-proceedings-of-spie/4273/1/Dyson-shells-a-retrospective/10.1117/12.435373.short?SSO=1

http://aleph.se/andart2/space/what-is-the-natural-timescale-for-making-a-dyson-shell/

http://www.sentientdevelopments.com/2011/03/remembering-robert-bradbury.html

http://www.orionsarm.com/eg-article/4845fbe091a18

http://www.orionsarm.com/eg-article/462d9ab0d7178

If you want ETI Artilects working best on small scales as Wilczek champions, then you need to get really small to be most effective:

https://centauri-dreams.org/2014/02/12/seti-at-the-particle-level/

Maybe SETI should be using electron microscopes instead of telescopes.

Massive automated solar power infrastructure in space to fuel the production of antimatter could be the key to fueling an interstellar civilization. Charles Pellegrino talked about this concept in his sci-fi novels, in which the surface of Mercury was covered in solar panels whose collected energy fueled the production of copious amounts of antimatter.

This sort of civilization / intelligence would not be subject to the IR bounds on “Dyson spheres” (even if they did form Dyson spheres), as those searches are sensitive to waste heat radiated at around 200 K, not waste heat radiated below 20 K.
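To put rough numbers on the 200 K versus 20 K point, here is a minimal sketch using Wien's displacement law for an ideal blackbody. Treating the waste-heat radiator as a perfect blackbody is an assumption made for illustration, and the function name is hypothetical.

```python
# Wien's displacement law: the wavelength at which an ideal blackbody's
# emission peaks is inversely proportional to its temperature.
# Assumption: the waste-heat radiator behaves like a perfect blackbody.
WIEN_B_M_K = 2.897771955e-3  # Wien displacement constant, in metre-kelvin


def peak_wavelength_microns(temperature_k: float) -> float:
    """Return the peak blackbody emission wavelength in micrometres."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return WIEN_B_M_K / temperature_k * 1e6


if __name__ == "__main__":
    for t in (200.0, 20.0):
        print(f"{t:5.0f} K -> peak near {peak_wavelength_microns(t):.1f} microns")
```

Under that assumption the emission peak moves from the mid-infrared (roughly 14 microns at 200 K) to the far-infrared (roughly 145 microns at 20 K), which is one way to see why a search tuned to the warmer case could miss the colder one.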

Peter Watts’s novel Echopraxia (sequel to Blindsight, both well worth reading) was the place I first heard of the spider genus Portia. Its prey is other spiders, which could eat Portia if it makes a mistake. It appears to be capable of planning and improvising relatively complex hunting strategies despite having a brain of only 600,000 neurons. The tradeoff is that their thinking is a lot slower than ours.

Still, in interstellar space, travel and communications take a long time, and resources are costly to transport. In my opinion, this makes hyperfast AI the wrong approach. Instead, make the tradeoff between time and resource requirements, and have intelligence which could take its time between the stars to develop a strategy for what to do when it reaches the target (regarding us with envious eyes, slowly and surely drawing plans against us, or something).

If a being can exist for millions of years and have a truly cosmic perspective – the exact opposite of most humans stuck on Earth – then they may be in no rush about most things, particularly communications.

We should also at least consider the idea of whole galaxies as living entities. If they “talk” to each other and do not use some form of FTL communications method, then their two-way “chats” will take hundreds of thousands to millions of years to complete. Seeing as our Milky Way galaxy is about 10 billion years old, it may not find such long talks a problem.

There was a science fiction story I once read where two human scientists in a future interstellar and multispecies society were speculating on the subject of galaxies as living beings. One considered the possibility that the growing electromagnetic communications between all their civilized star systems might be perceived as an irritating noise to the Milky Way – and that the slowly approaching Andromeda galaxy was responding to its plea for help in resolving this “medical” issue.

Leave anthropomorphism aside. What is the physics / chemistry behind the FePx? What does Mike Russell’s thought, “The purpose of life is to hydrogenate carbon dioxide,” “mean” on an interstellar scale as far as Life, Consciousness, Intelligence, and Technology are concerned? Strikes me that life must be common.

What do Dawkins’ “selfish genes” have to “say” about shifting their substrates to metal? Strikes me that’s not a good idea.

At some point in history, hunter / gatherers became “civilizations”, someone in Sumeria invented the wheel, and Kubrick made a movie about it all. Strikes me that we may be near unique.

Separation + Silence = Aloneness is the simplest FePX explanation by far.

Going small is also one of the ways to avoid detection. A kg of mass that radiates at a mere 1W, and which is buried in a comet, is going to be very hard to find. There are potentially a trillion comets associated with the solar system. For all we know there could be a billion civilizations out there, just light weeks away.
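As a rough illustration of how faint such a source would be, here is a minimal sketch of the inverse-square law for a 1 W emitter. It assumes the source radiates equally in all directions and ignores any shielding by the comet; the one light-week distance and the function names are illustrative choices, not figures from the comment.

```python
import math

C_M_PER_S = 299_792_458.0          # speed of light, metres per second
SECONDS_PER_WEEK = 7 * 24 * 3600   # seconds in one week


def light_weeks_to_metres(weeks: float) -> float:
    """Convert a distance in light-weeks to metres."""
    return weeks * SECONDS_PER_WEEK * C_M_PER_S


def flux_w_per_m2(power_w: float, distance_m: float) -> float:
    """Flux from an isotropic source: power spread over a sphere of radius d."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return power_w / (4.0 * math.pi * distance_m ** 2)


if __name__ == "__main__":
    d = light_weeks_to_metres(1.0)
    print(f"1 light-week is about {d:.3e} m")
    print(f"Flux from a 1 W source at that distance: {flux_w_per_m2(1.0, d):.2e} W/m^2")
```

Under these assumptions the flux at one light-week is on the order of 10^-30 W per square metre, and it falls by a factor of four each time the distance doubles.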

If a species wants to ensure it survives, it could make a few hundred billion probes, each with a simulation of their civilization, and scatter them around. Send a hundred billion to comets within the Milky Way galaxy, with the rest aimed for intergalactic space.

While people within such a simulation could live a long, long time subjectively, I do not see them being immortal – there’s still the issue of memory storage. Unless, of course, they wiped the memory and started over every once in a while.

Black holes can be used to perform calculations. If all the mass in the universe were crushed into a single black hole, it could perform around 10^229 calculations before it evaporated. Imagine if that’s what we are – calculations in a black hole that has not yet evaporated. That the universe is expanding could be seen as the ability of the black hole to compute faster and faster as it evaporated.

Over forty years ago, the British Interplanetary Society (BIS) came up with the starprobe concept called Daedalus. The robotic vessel they envisioned was a monster fusion-powered probe weighing 54,000 tons and dwarfing a Saturn V rocket.

By comparison, the current Breakthrough Starshot design of the Breakthrough Initiatives effort is considering a swarm of very small but highly sophisticated probes called StarChips pushed by powerful laser beams to spread themselves throughout a target star system and report back on the alien realm they have encountered.

Your concept of billions of nanoprobes being seeded among comets and other celestial objects could indeed be the way we are heading as we slowly get better at planning real interstellar missions.

…’Here we find the scientist going at the Fermi question that we have tackled so many times in these pages, always coming back to the issue that we have a sample of one when it comes to life in the universe, much less technological society, and our sample is right here on Earth.’…

It would be nice to have a sample size of two… wouldn’t that stir the pot!

What fascinates me personally, and what is also unfortunately the hardest thing to answer, is the motivation of these ETIs. From that we could infer whether they would like to live on the galactic rim or near the center.

What would they do with all that computation? Simulate?

If they are curious they might just archive human progression instead of destroying it.

But what if there is a cornucopia of different types – some gregarious, some quiet? How then could we explain the universal silence?

Maybe it’s death by a thousand cuts? To live on geological timescales, civilizations would need to surmount each existential threat (meteor, nano goo, biological terrorism by one aberrant individual). The ones that survive may occasionally go to the nearest star or five, but there is no vibrant galactic single society? (How can you have a conversation across a thousand light years?)

I find the Fermi Paradox to be one of life’s most fascinating conundrums and that, together with the very high quality of comments here, is why CD is on the top of my reading list!

Kamal, your comment about a civilization occasionally going “to the nearest star or five” resonates with something that Arthur C. Clarke’s 1986 novel (it was originally a short story) “The Songs of Distant Earth” conveys. (Before the Sun goes “mildly” nova–but enough to destroy the inner planets–an interstellar “seedship” exodus occurs, with humanity thereafter being spread out among a few stars. The story concerns the visit to the colony planet Thalassa by the hibernation Starship Magellan [in need of more ice for her erosion shield, having 72 more light-years to go to her destination, a planet called Sagan Two], whose crew witnessed the destruction as they departed.) Now:

The picture in the novel (and its predecessor short story) is that of a fractured and thinly-spread humanity, whose far-flung members no longer have the social “critical mass” to achieve great things. The Thalassans (who call themselves Lassans), relatively few in number, live a carefree, “island paradise” lifestyle and have whatever technology–not primitive by any means–came with their ancestors’ seedship, but they haven’t even launched communications or weather satellites, much less explored their planet’s two moons or the rest of their solar system, and:

Even the crew of the Magellan admit that no one–except three still-hibernating scientists, or so they were told–understands the science behind how their ship’s quantum drive works. They and the Lassans also talk about how little the various new outposts of humanity know about each other’s status or accomplishments, due to the interstellar distances (even before Thalassa’s Arecibo-type interstellar communications dish was wrecked by a volcanic eruption). If humanity ever actually does something like this (regardless of the circumstances that cause it to occur, whether negative or positive), I can’t help but wonder if–barring the discovery of faster-than-light (or *effectively* FTL) methods of travel and/or communication–the portrayal of interstellar humanity in the novel is the best we could hope for?

Nice summary of that important aspect of Clarke’s novel. This highlights another trope about interstellar exploration and colonization that too often is automatically assumed will have future generations claiming new alien worlds with their original mission goals intact.

They will then branch off to yet another star system for the same reasons, all the while remaining just as advanced and knowledgeable as past generations, or even becoming more so, as they move onward and outward to eventually colonize the whole Milky Way galaxy in a mere few million years, according to some estimates.

This assumption has often been used as a reason why ETI do not exist, because if even one of them did they would have spread through the entire galaxy long ago – and we would have noticed or not even be here, of course.

This idea also assumes that not only would an ETI want to even do this, but that every star system would be of equal importance and interest to them to want to settle in. Or that humanity will do the same without pause for millions of years or more.

Suppose a future human mission either finds or makes an alien world that is comparable to a tropical paradise. Will the situation cause these “explorers” to want to plant their feet on yet another alien world, or will they instead find it easier to put their feet up in a lounge chair and sip mai tais on the beach?

This is the kind of possibility that raises the chances that if anyone or anything will be spreading out among the stars, it will be some form of Artilects rather than strictly organic beings like humanity.

http://astrobiology.com/2018/02/possible-photometric-signatures-of-moderately-advanced-civilizations-the-clarke-exobelt.html

Possible Photometric Signatures of Moderately Advanced Civilizations: The Clarke Exobelt

Press Release – Source: astro-ph.EP

Posted February 22, 2018 9:22 PM

This paper puts forward a possible new indicator for the presence of moderately advanced civilizations on transiting exoplanets.

The idea is to examine the region of space around a planet where potential geostationary or geosynchronous satellites would orbit (hereafter, the Clarke exobelt).

Civilizations with a high density of devices and/or space junk in that region, but otherwise similar to ours in terms of space technology (our working definition of “moderately advanced”), may leave a noticeable imprint on the light curve of the parent star. The main contribution to such signature comes from the exobelt edge, where its opacity is maximum due to geometrical projection.

Numerical simulations have been conducted for a variety of possible scenarios. In some cases, a Clarke exobelt with a fractional face-on opacity of ~1E-4 would be easily observable with existing instrumentation. Simulations of Clarke exobelts and natural rings are used to quantify how they can be distinguished by their light curve.

Hector Socas-Navarro

(Submitted on 21 Feb 2018)

Comments: Accepted for publication in ApJ

Subjects: Earth and Planetary Astrophysics (astro-ph.EP); Solar and Stellar Astrophysics (astro-ph.SR)

Cite as: arXiv:1802.07723 [astro-ph.EP] (or arXiv:1802.07723v1 [astro-ph.EP] for this version)

Submission history

From: Hector Socas-Navarro

[v1] Wed, 21 Feb 2018 17:05:28 GMT (2211kb,D)

https://arxiv.org/abs/1802.07723

Astrobiology
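For a sense of where such a belt would sit, here is a minimal sketch that computes the radius of a synchronous circular orbit from Kepler's third law. The Earth mass and sidereal day used below are illustrative inputs, not values taken from the paper, and the function name is hypothetical.

```python
import math

G = 6.67430e-11  # gravitational constant, m^3 kg^-1 s^-2


def synchronous_orbit_radius_m(planet_mass_kg: float, rotation_period_s: float) -> float:
    """Radius of a circular orbit whose period matches the planet's rotation.

    From Kepler's third law: r^3 = G * M * T^2 / (4 * pi^2).
    """
    if planet_mass_kg <= 0 or rotation_period_s <= 0:
        raise ValueError("mass and period must be positive")
    return (G * planet_mass_kg * rotation_period_s ** 2 / (4.0 * math.pi ** 2)) ** (1.0 / 3.0)


if __name__ == "__main__":
    # Illustrative Earth values (assumptions, not from the paper).
    EARTH_MASS_KG = 5.972e24
    EARTH_SIDEREAL_DAY_S = 86164.1
    r_km = synchronous_orbit_radius_m(EARTH_MASS_KG, EARTH_SIDEREAL_DAY_S) / 1000.0
    print(f"Earth's Clarke belt sits roughly {r_km:,.0f} km from the planet's centre")
```

For Earth this gives a little over 42,000 km from the planet's centre. A planet with a different mass or rotation rate would have its belt at a different radius, following the same formula.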

Wow… thanks for the link Larry. I love this idea.

I highly recommend to everyone this online work by Robert A. Freitas, Jr., titled Xenology: An Introduction to the Scientific Study of Extraterrestrial Life, Intelligence, and Civilization, available via this URL:

http://www.xenology.info/

Xenology is a fascinating read overall, though for our discussion I especially recommend Section 14.3 titled “Alien Consciousness and the Sentience Quotient”:

http://www.xenology.info/Xeno/14.3.htm

To quote from that section:

Science fiction writers are fond of pointing out that there may exist many different levels of awareness among the extraterrestrial races of our Galaxy. [1543] Perhaps the most classic example of this may be found in The Black Cloud, written by astronomer Fred Hoyle. [62] In the novel it is suggested that natural grades of consciousness may exist, and that it is virtually impossible for a being at one level to comprehend the mentality of another being at a higher level of consciousness.

In a similar vein, Carl Sagan draws a fanciful analogy from our relation to the insect world:

The manifestations of very advanced civilizations may not be in the least apparent to a society as backward as we, any more than an ant performing his anty labors by the side of a suburban swimming pool has a profound sense of the presence of a superior technical civilization all around him. [15]

Makes perfect sense that there should be a spread of intelligence and this is mirrored in thoughts of AI… we are surrounded by many, many examples of ANI that are better than us at performing myriad, yet narrow, tasks. ‘If’ we can crack the step to make/become an AGI then we may only have it for five minutes before…whoosh… off it goes, jumping to the next higher rung of the intelligence-ladder as it explores what it means to be an ever increasing ASI. Let’s hope the active discussions AI experts are calling for, and actively engaged in, help lay the foundations today for when that happens rather than leave it too late.

Tim Urban has a splendid treatise here https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html

And,

Robert Miles has made some good vids on AI safety on his YouTube channel, e.g. https://youtu.be/lqJUIqZNzP8 for anyone interested.

Could quintelligence use low-mass DARK MATTER as a “framework” for their quantum computers, because there is now UNIMPEACHABLE EVIDENCE that these particles DO EXIST!!! Google https://www.aftau.org/news-page-astronomy-astrophysics and click on the SECOND item on the list page. Then, go to the “Latest News” section and click on the FIRST item there, concerning the first direct detection of dark matter. WIMPS ARE DEAD!!! LONG LIVE AXIONS!!!