Last December I mentioned the ongoing work at the European Southern Observatory’s Very Large Telescope to modify an instrument called VISIR (VLT Imager and Spectrometer for the InfraRed). Breakthrough Initiatives, through its Breakthrough Watch program, is working with the ESO’s NEAR program (New Earths in the Alpha Cen Region) to improve the instrument’s contrast and sensitivity, with the goal of detecting a habitable zone planet at Alpha Centauri. Exciting stuff indeed, especially given the magnitude of the challenge.

After all, we are dealing with a tight binary, with the two stars closing to within 11 AU in their 79.9-year orbit about a common center (think of a K-class star at about Saturn’s distance). Because the orbit is eccentric, the stars are about 35 AU apart at their most distant. The latest figure I’ve seen for the distance between Centauri A/B and Proxima Centauri is about 13,000 AU.
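The following is a minimal Python sketch that checks these figures for consistency. It treats the rounded 11 AU and 35 AU values as exact periastron and apastron separations, which is an assumption. From those it recovers the semi-major axis and eccentricity. It then applies Kepler's third law in solar units (AU, years, solar masses) to estimate the combined mass of A and B. The function names are illustrative only.

```python
def orbit_from_extremes(r_peri_au: float, r_apo_au: float) -> tuple[float, float]:
    """Semi-major axis (AU) and eccentricity from the closest and farthest separations."""
    a = (r_peri_au + r_apo_au) / 2.0
    e = (r_apo_au - r_peri_au) / (r_apo_au + r_peri_au)
    return a, e


def total_mass_solar(a_au: float, period_yr: float) -> float:
    """Kepler's third law in solar units: M_A + M_B = a^3 / P^2."""
    return a_au**3 / period_yr**2


# Rounded figures from the text: 11 AU closest, 35 AU farthest, 79.9-year period.
a, e = orbit_from_extremes(11.0, 35.0)
m_total = total_mass_solar(a, 79.9)
print(f"a = {a:.1f} AU, e = {e:.2f}, M_A + M_B = {m_total:.2f} solar masses")
```

With these rounded inputs the sketch gives a ≈ 23 AU, e ≈ 0.52, and a combined mass near 1.9 solar masses. That is in the neighbourhood of the roughly two solar masses usually quoted for the pair. Because the mass scales as a³, small changes in the rounded separations move the estimate noticeably.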

In an ESO blog post that Centauri Dreams reader Harry Ray passed along, planet hunter Markus Kasper explained that the performance goal for the upgraded VISIR requires a contrast of one part in a million at separations of less than one arcsecond, something that has not yet been demonstrated in the thermal infrared. Alpha Centauri A/B will be a tough nut to crack. Kasper makes a familiar comparison: “This is like detecting a firefly sitting on a lighthouse lamp from a few hundred kilometres away.”
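This sketch shows why the upgrade targets sub-arcsecond separations and a million-to-one contrast together. It uses the small-angle rule that 1 AU seen from 1 parsec spans 1 arcsecond. It also converts a brightness ratio into a magnitude difference. The distance of about 4.37 light years (1.34 pc) and the 1 AU orbit are illustrative assumptions, not numbers from Kasper's post.

```python
import math

PC_PER_LY = 1.0 / 3.26156  # parsecs per light year


def angular_separation_arcsec(a_au: float, distance_pc: float) -> float:
    """Small-angle separation: 1 AU seen from 1 pc subtends 1 arcsecond."""
    return a_au / distance_pc


def contrast_to_delta_mag(contrast: float) -> float:
    """Planet/star brightness ratio expressed as a magnitude difference."""
    return -2.5 * math.log10(contrast)


d_pc = 4.37 * PC_PER_LY  # assumed distance to Alpha Centauri A/B
sep = angular_separation_arcsec(1.0, d_pc)  # assumed 1 AU orbit
dm = contrast_to_delta_mag(1e-6)  # "one part in a million"
print(f"1 AU at {d_pc:.2f} pc -> {sep:.2f} arcsec; contrast 1e-6 -> {dm:.1f} mag")
```

A planet 1 AU from either star would therefore sit about 0.75 arcsecond away. A contrast of 10⁻⁶ corresponds to 15 magnitudes.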

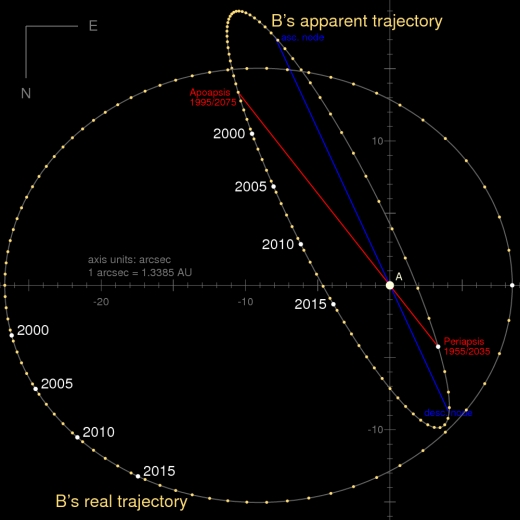

Image: Apparent and true orbits of Alpha Centauri. The A component is held stationary and the relative orbital motion of the B component is shown. The apparent orbit (thin ellipse) is the shape of the orbit as seen by an observer on Earth. The true orbit is the shape of the orbit viewed perpendicular to the plane of the orbital motion. According to the radial velocity vs. time curve [12], the radial separation of A and B along the line of sight reached a maximum in 2007, with B behind A. The orbit is divided here into 80 points; each step corresponds to a timestep of approx. 0.99888 years, or 364.84 days. Credit: Wikimedia Commons.
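The caption's numbers are consistent with the 79.9-year period given earlier. Here is a quick check. It assumes a Julian year of 365.25 days for the conversion, since the caption does not say which year length it uses.

```python
DAYS_PER_JULIAN_YEAR = 365.25  # assumed year length for the day conversion

steps = 80         # points along the plotted orbit
step_yr = 0.99888  # caption's timestep in years

print(f"{steps} steps x {step_yr} yr = {steps * step_yr:.2f} yr")
print(f"one step = {step_yr * DAYS_PER_JULIAN_YEAR:.2f} days")
```

The 80 steps span about 79.91 years, and one step is about 364.84 days.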

But VISIR may be up to the task. Installed in 2004 at Paranal, 2635 metres above sea level in Chile’s Atacama Desert, it has been used to probe dust clouds at infrared wavelengths to study the evolution of stars. In a scant 20 minutes, the instrument can compile as many images or spectra as a 3-4 meter telescope could obtain in an entire night of observations. VISIR has also been used to study Jupiter’s Great Red Spot, Neptune’s poles, and the supermassive black holes that can occur at the centers of galaxies.

Ramping up VISIR’s capabilities for Alpha Centauri is a multi-part effort, as Kasper describes:

Firstly, Adaptive Optics (AO) will be used to improve the point source sensitivity of VISIR. The AO will be implemented by ESO, building on the newly-available deformable secondary mirror at the VLT’s Unit Telescope 4 (UT4).

Secondly, a team led by the University of Liège (Belgium), Uppsala University (Sweden) and Caltech (USA) will develop a novel vortex coronagraph to provide a very high imaging contrast at small angular separations. This is necessary because even when we look at a star system in the mid-infrared, the star itself is still millions of times brighter than the planets we want to detect, so we need a dedicated technique to reduce the star’s light. A coronagraph can achieve this.

Finally, a module containing the wavefront sensor and a new internal chopping device for detector calibration will be built by our contractor Kampf Telescope Optics in Munich.

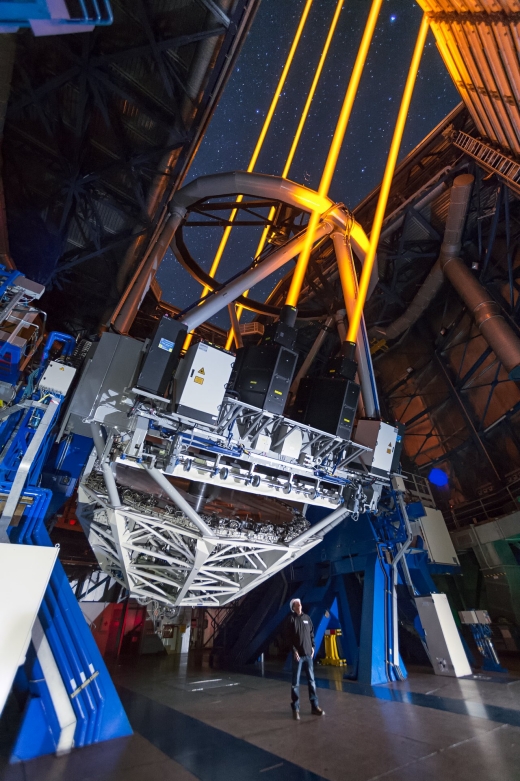

Image: Adaptive optics at work. Glistening against the backdrop of the night sky above ESO’s Paranal Observatory, four laser beams project out into the darkness from Unit Telescope 4 (UT4) of the VLT. Some 90 kilometres up in the atmosphere, the lasers excite atoms of sodium, creating artificial stars for the telescope’s adaptive optics systems. Credit: ESO/F. Kamphues.

The exciting prospect, says Kasper, is that a habitable zone planet could be detected in just 100 hours of observing time on the VLT. Doing so will first require moving VISIR from Unit Telescope 3 to Unit Telescope 4 at Paranal. Hardware testing in Europe continues, and the upgrade is expected to be implemented in VISIR by the end of 2018. The Alpha Centauri observing campaign is scheduled for mid-2019: a two-week run that will collect the needed 100 hours of observing time. We may not be far, then, from a planet detection at Centauri A/B.

Kasper points to Breakthrough Initiatives as key players in all this. Let me quote him:

…it is exciting to see how the Breakthrough Initiatives are managing to create momentum in the research field. By backing ideas and projects with a higher risk level than public funding agencies are ready to support, the Initiatives have motivated scientists to push the envelope and leap forward in their research.

Remember that Breakthrough Starshot, with its goal of designing and flying tiny beamed-sail probes to the Alpha Centauri system, not only achieved a great deal of media coverage but also caught the eye of a US congressman. NASA has begun to look at interstellar concepts again, a small but welcome effort long after the closing of the Breakthrough Propulsion Physics program. Breakthrough Listen is actively conducting SETI at Green Bank and at Parkes in Australia. Breakthrough Watch now looks for habitable zone planets within 16 light years of Earth.

Pushing projects with higher risk levels is something that a private initiative can achieve, with repercussions for the broader effort to characterize nearby planetary systems and some day reach them with a probe. We’ll soon have the tools in place to study planetary atmospheres around many of these stars, so the timing of the VISIR effort could not have been better.

I am sure I have read that the NSF, and particularly the NIH, are far too conservative in their funding, preferring projects that are sure to lead to results over more blue-sky projects.

In parallel, I think it is clear that SpaceX’s advances in reusability have outpaced the more conservative developments of state-funded rockets. ULA is playing a belated game of catch-up with its Vulcan program. NASA has remained steadfast in its adherence to old rocketry, with its canceled Ares I and V rockets and the SLS.

What I would hope is that technological success stories change the funding behavior at the NSF to reinvigorate high-risk projects that could offer breakthroughs if they succeed, an approach more akin to VC funding.

Just as the British biologist Dr. Rupert Sheldrake advocates that 5% of the UK’s public science funding be used to support experiments that are of interest to the general public (he has drawn up a tentative plan for how it could be done, with proper oversight), the National Science Foundation (NSF) could similarly devote a small percentage of its budget to higher-risk, higher-payoff research projects such as space-based, high-resolution astrometric and/or coronagraphic telescopes (as Michael C. Fidler described below), and:

Another possibly fruitful project of this type would use the Moon’s limb as a “mask,” to repeatedly and momentarily block the starlight to one or more telescopes in inclined Earth orbits (in order to occult the Alpha Centauri system with the Moon), enabling any exoplanets orbiting the “masked” stars to be seen briefly (the same way that Sirius B, the “Pup,” was accidentally discovered [the corner of a building served as the mask in that case]). Using the Moon as such a mask is not a new idea, but today’s improved computer image gathering and processing technology, along with high-resolution photogrammetric maps of the limb contours, would make use of the lunar limbs–and even individual mountain peaks and ridges around the limbs–more effective and predictable.

What advantages would there be in using the Moon as an occulting object over an artificial disk?

The Moon is free, and already in place. :-) (Similarly, it has also been suggested, going back to the 1960s [Arthur C. Clarke wrote about the proposals], that a lunar crater’s rim could form part of an ultraviolet or X-ray telescope’s optics, with the telescope proper being situated in the middle of the crater floor. By noting the exact instant when an invisible [at visible light wavelengths] object rose or set at the crater rim, its position, angular size, and extent could be determined with great precision.) Also, regarding using the Moon as an occultation mask:

Depending on the angles at which the moving Moon intercepts the stars of interest (telescopes in inclined and eccentric Earth orbits would enable more star choices–and more frequent lunar occultation opportunities–than Earth-based telescopes, particularly for stars well off the ecliptic), multiple, variable-length occultations of stars are possible during the same observing run, by utilizing the mountains rimming the lunar limbs. (In his 1976 book, “New Guide to the Moon,” Patrick Moore mentioned the action of this limb effect, when one star whose occultation he and a friend timed–with separate telescopes a few yards apart–faded out instead of instantly disappearing; the star turned out to be a close binary.) In addition:

High-resolution photogrammetric maps of the Moon’s limbs would also permit accurate measurements of exoplanet-star angular separations to be made, by utilizing lunar mountains of known widths, in concert with the known Earth-Moon distance (which varies, of course) and the known “aggregate occultation angular velocity.” The Moon’s librations, while they would complicate things, would also present different limb features of varying sizes, which could be utilized to gradually “filter” the occultation observations to finer resolutions (rather like using pieces of sandpaper–or sanding film–with increasingly-fine grit sizes).
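The timing method described above comes down to multiplying a measured delay by the Moon's apparent rate of motion against the stars. The sketch below is a hypothetical illustration, not an established reduction pipeline. It makes these assumptions:

- a geocentric observer;
- the Moon's mean sidereal motion, about 0.55 arcsecond per second;
- no limb diffraction, no finite stellar disc, and no motion of the observer.

A telescope in Earth orbit would see a different "aggregate occultation angular velocity," which would replace the default rate. The limb angle parameter stands in for the local slope of a mapped lunar mountain, which is why accurate limb profiles matter.

```python
import math

SIDEREAL_MONTH_DAYS = 27.321661
ARCSEC_PER_CIRCLE = 360 * 3600

# Mean motion of the Moon against the stars, in arcseconds per second, for a
# geocentric observer (ignores Earth's rotation and any satellite's own motion).
LUNAR_RATE_ARCSEC_S = ARCSEC_PER_CIRCLE / (SIDEREAL_MONTH_DAYS * 86400)


def separation_from_delay(delay_s: float, limb_angle_deg: float = 0.0,
                          rate: float = LUNAR_RATE_ARCSEC_S) -> float:
    """Star-companion separation projected on the local limb normal, in arcsec.

    limb_angle_deg is the angle between the Moon's motion and the limb normal
    at the point of contact; a sloping mountain flank changes it.
    """
    return rate * math.cos(math.radians(limb_angle_deg)) * delay_s


def delay_for_separation(sep_arcsec: float, limb_angle_deg: float = 0.0,
                         rate: float = LUNAR_RATE_ARCSEC_S) -> float:
    """Inverse: seconds between the star's and the companion's disappearance."""
    return sep_arcsec / (rate * math.cos(math.radians(limb_angle_deg)))


print(f"lunar rate ~ {LUNAR_RATE_ARCSEC_S:.3f} arcsec/s")
print(f"0.75 arcsec -> {delay_for_separation(0.75):.2f} s delay")
```

Take an illustrative separation of 0.75 arcsecond, with the limb normal aligned with the Moon's motion. The star and its companion would then disappear roughly 1.4 seconds apart.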

That picture of the telescope looked like it was altered in Photoshop. If so, I wish they would not do that, or would at least acknowledge it. It reminds me of illustrations showing satellites and space probes with flamboyant nebulae in the background. Space does not look that way to the unaided eye, nor does it need to in order to be interesting. CGI is taking away our imagination and our sense of proportion (I know, this complaint makes me sound like a curmudgeon).

No, I don’t think you’re being curmudgeonly to think and say that. The true, more “subtle” vistas that we actually see with the naked eye, and even through telescopes (and even in the old time exposures shot on film), have always impressed and even awed me more than the brilliantly-hued CCD pictures that we are presented with today, and:

Even the Pioneer 10 and 11 spin-scanned images of Jupiter and Saturn, and Mariner 10’s vidicon TV pictures of Mercury, are more appealing to me than the better, later views from subsequent probes (and likewise for the Viking views of Mars). I think this is because the modern astronomical and space probe pictures, in a way, show “too much,” and leave no space for the imagination; I like Chesley Bonestell’s and Helmut K. Wimmer’s astronomical artwork–which they both continued to create even after space probes had returned pictures of the worlds they portrayed–for the same reason. Also:

The spectacularly-colored astronomical and space probe pictures in recent and current books are, to my eyes, like what eating a sickeningly-sweet dessert is to my taste buds–a sensory overload, too much of a good thing. This also makes such pictures unremarkable, giving me a “If you’ve seen one, you’ve seen ’em all” feeling toward them. It isn’t just a matter of having enjoyed such books as a boy, either, because I have recently bought numerous older books that I’d never seen before, and those old pictures and paintings make those books convey a sense of wonder that few recent or current books–as informative as they are–convey. You and I are apparently not the only ones to have these reactions, because:

On at least two occasions in the 1990s, “Sky & Telescope” magazine ran articles containing pictures showing how various celestial bodies would *really* look to nearby human observers. One contained “from orbit” (or close flybys) and “from the surface” views of several planets and moons (they were carefully re-processed space probe pictures), and their colors were much more subtle than what we’ve been shown; for example, Mars is really a light reddish brown, and Io is a light yellow, not the garish orange we’ve been treated to since 1979. The other article showed how red dwarf stars (they’re really a dull orange to the human eye) and other classes of stars would really look to interstellar astronauts in their vicinity.

Space artist Don Davis devoted a number of pages to the true colors of the Sol system and beyond here:

http://www.donaldedavis.com/PARTS/colors.html

This one is all about Mars:

http://www.donaldedavis.com/MARSCOLOR.html

Thank you for posting those–the comparative planetary color bands, as well as the color-corrected pictures themselves, are “chromatically informative.” Interestingly, the Pioneer 10 and 11 spin-scanned IPP (Imaging Photo-Polarimeter) pictures of Jupiter and Saturn–especially the “medium-distance,” full-disc ones in the NASA Ames “Pioneer Odyssey” books (of which there are two or three editions)–have the same true, more subtle colors as the color-corrected CCD-taken pictures from later probes. Also:

When I worked at the (now-closed) Miami Space Transit Planetarium and volunteered at the rooftop Claire Weintraub Observatory, one night an avid local amateur astronomer–who often visited on nights when the observatory was open–pointed out an interesting finding. Photometric studies of the planets indicate that only the Earth has colors (saturated colors, I think he meant); the other planets (and moons and asteroids) have tints. (A local “lunar fan” [who even printed a detailed monthly Moon observation newsletter] took great exception to this, insisting that “The Moon has color!” :-) )

Not curmudgeonly, just sad that no photo can be trusted as an actual representation these days. The universe doesn’t need artificial aid to be spectacular.

Great find, Harry! And thanks to Yuri Milner for his vision and funding!

I did some work on this over the weekend. A TRUE Super-Earth could only be detected if it has a relatively high albedo. Assuming that is what is out there, I estimate the detection significance as follows: 4 sigma for 1.6 Re, 3 sigma for 1.5 Re, and only 2 sigma for 1.4 Re. Now for some bad news. The Exoplanet.eu website has taken Kepler-452b off its confirmed planets list. To find out why, click “Bibliography” and read the newest item at the top.

Breakthrough Watch is working on some interesting projects. Does anyone have more details and timelines for them?

Space-borne astrometric telescope:

Viewed with the naked eye, Alpha Centauri looks like a single star – the third brightest in the sky. But in 1689, a Jesuit priest called Jean Richaud noticed while observing a passing comet that it was in fact a binary star. If one or both of these stars hosted planets, the angular separation between the two stars (the size of the angle between the imaginary lines connecting each of them to an observer) would be periodically modulated by the planets’ gravitational pull. But to detect such a modulation – around 2 micro-arcseconds, or roughly half a billionth of a degree, for an Earth-like planet in the habitable zone – the best bet is a space-borne telescope.

In the next few years, Breakthrough Watch aims to launch a 30cm-diameter space telescope, equipped with an imaging camera, to accurately measure the angular separation between Alpha Centauri A and B with the requisite sensitivity to identify a potential Earth-mass planet in the habitable zone, as well as to measure its mass and orbit. An aperture mask, placed in front of the telescope, will spread the light of the two stars over many detector pixels, thus averaging out small detector imperfections and avoiding saturation.
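The 2 micro-arcsecond figure quoted above follows from the standard astrometric-signature relation. The star's reflex wobble, in arcseconds, is the planet-to-star mass ratio times the orbital radius in AU, divided by the distance in parsecs. The inputs below are illustrative assumptions, not Breakthrough Watch's design values: about 1.1 solar masses for Alpha Centauri A, a habitable-zone orbit near 1.2 AU, and a distance of 1.34 pc.

```python
M_EARTH_IN_SUN = 3.003e-6  # Earth mass in solar masses
ARCSEC_PER_DEG = 3600.0


def astrometric_signature_uas(m_planet_sun: float, m_star_sun: float,
                              a_au: float, distance_pc: float) -> float:
    """Semi-amplitude of the star's reflex wobble, in micro-arcseconds.

    alpha[arcsec] = (M_p / M_*) * a[AU] / d[pc], valid for M_p << M_*.
    """
    return (m_planet_sun / m_star_sun) * (a_au / distance_pc) * 1e6


# Illustrative inputs (assumptions): ~1.1 solar masses, ~1.2 AU orbit, ~1.34 pc.
alpha_uas = astrometric_signature_uas(M_EARTH_IN_SUN, 1.1, 1.2, 1.34)
two_uas_in_deg = 2e-6 / ARCSEC_PER_DEG
print(f"signature ~ {alpha_uas:.1f} micro-arcsec")
print(f"2 micro-arcsec = {two_uas_in_deg:.2e} degree")
```

The result is about 2.4 micro-arcseconds. Two micro-arcseconds works out to about 5.6 × 10⁻¹⁰ degree, or roughly half a billionth of a degree.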

Space-borne coronagraphic telescope:

Once the mass, orbit and thermal emissions of Proxima b and other potential planets in the Alpha Centauri system have been observed, the next task would be to determine whether they have atmospheres, and if so, what their constituents are.

Using the highly stable telescope design developed for the astrometric space telescope, Breakthrough Watch aims to launch a 30cm-diameter space-borne coronagraphic telescope to image such a planet using visible light. The mission would include on-board wavefront control and starlight suppression. It would be able to measure the planet’s size, reflectivity and surface temperature, and provide constraints on its atmospheric composition – a key indicator of the presence of organic life.

Ultimately, Breakthrough Watch also intends to turn its attention to the next closest sun-like stars, including Epsilon Eridani (10.5 light years away), 61 Cygni (11.4ly) and Tau Ceti (11.9ly). This would require developing a 50cm-diameter space coronagraph.

https://breakthroughinitiatives.org/instruments/4

Ashley Baldwin, could the development of the Breakthrough Watch space coronagraph, or any similar project, help reduce the cost of developing the WFIRST Coronagraph Instrument (CGI)?

WITH DOWNSIZING OF WFIRST, CAA ASKS IF CORONAGRAPH IS REALLY NEEDED.

“While agreeing on the scientific value of a coronagraph, both raised concerns about the additional cost and technical risk that will be incurred by adding one to this mission, especially since the technology is not mature.”

https://spacepolicyonline.com/news/with-downsizing-of-wfirst-caa-asks-if-coronagraph-is-really-needed/

Time to add VISIR to your list?

Not as VISIR, but as NEAR, the Alpha Centauri part of the new VISIR work.

NEAR: Low-mass Planets in α Cen with VISIR.

https://www.eso.org/sci/publications/messenger/archive/no.169-sep17/messenger-no169-16-20.pdf

Some very interesting developments in the NIAC 2018 Phase I and Phase II selections.

NIAC 2018 Phase I and Phase II Selections.

https://www.nasa.gov/directorates/spacetech/niac/2018_Phase_I_Phase_II

These two look very promising:

PROCSIMA: Diffractionless Beamed Propulsion for Breakthrough Interstellar Missions.

Chris Limbach

Texas A&M Engineering Experiment Station

We propose a new and innovative beamed propulsion architecture that enables an interstellar mission to Proxima Centauri with a 42-year cruise duration at 10% the speed of light. This architecture dramatically increases the distance over which the spacecraft is accelerated (compared with laser propulsion) while simultaneously reducing the beam size at the transmitter and probe from 10s of kilometers to less than 10 meters. These advantages translate into increased velocity change (delta-V) and payload mass compared with laser propulsion alone. While primarily geared toward interstellar missions, our propulsion architecture also enables rapid travel to destinations such as Oort cloud objects and the solar gravitational lens at 500 AU.

The key innovation of our propulsion concept is the application of a combined neutral particle beam and laser beam in such a way that neither spreads nor diffracts as the beam propagates. The elimination of both diffraction and thermal spreading is achieved by tailoring the mutual interaction of the laser and particle beams so that (1) refractive index variations produced by the particle beam generate a waveguide effect (thereby eliminating laser diffraction) and (2) the particle beam is trapped in regions of high electric field strength near the center of the laser beam. By exploiting these phenomena simultaneously, we can produce a combined beam that propagates with a constant spatial profile, also known as a soliton. We have thus named the proposed architecture PROCSIMA: Photon-paRticle Optically Coupled Soliton Interstellar Mission Accelerator. Compared with a diffracting laser beam, the PROCSIMA architecture increases the probe acceleration distance by a factor of ~10,000, enabling a payload capability of 1 kg for the 42-year mission to Proxima Centauri.

The PROCSIMA architecture leverages recent technological advancements in both high-energy laser systems and high-energy neutral particle beams. The former has been investigated extensively by Lubin in the context of conventional laser propulsion, and we assume a similar 50 GW high-energy laser capability for PROCSIMA. Neutral beam technology is also under development, primarily by the nuclear fusion community, for diagnostics and heating of magnetically confined fusion plasmas. By combining known physics with emerging laser and neutral beam technologies, the PROCSIMA architecture creates a breakthrough payload capability for relativistic interstellar missions.

https://www.nasa.gov/directorates/spacetech/niac/2018_Phase_I_Phase_II/PROCSIMA
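The abstract's headline numbers can be sanity-checked with a few lines of arithmetic. This sketch makes three assumptions:

- Proxima Centauri is 4.24 light years away (not stated in the abstract);
- the cruise time ignores the acceleration phase;
- every joule of the 50 GW beam ends up as probe kinetic energy.

No real beamed system comes close to the last assumption, so the printed beam time is a hard floor. It is not an estimate of PROCSIMA's performance.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def cruise_years(distance_ly: float, beta: float) -> float:
    """Coast time at constant speed beta*c (acceleration phase ignored)."""
    return distance_ly / beta


def kinetic_energy_j(mass_kg: float, beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) m c^2."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return (gamma - 1.0) * mass_kg * C**2


t_cruise = cruise_years(4.24, 0.10)  # assumed Proxima distance: 4.24 ly
ke = kinetic_energy_j(1.0, 0.10)     # 1 kg payload from the abstract
t_beam_s = ke / 50e9                 # 50 GW laser, 100% coupling (lower bound)
print(f"cruise ~ {t_cruise:.1f} yr, KE ~ {ke:.2e} J, >= {t_beam_s / 3600:.1f} h of beam")
```

At 0.1 c the cruise takes about 42.4 years, matching the quoted 42 years. A 1 kg payload needs about 4.5 × 10¹⁴ J. That is at least 2.5 hours of the full 50 GW beam, even with perfect coupling.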

Spacecraft Scale Magnetospheric Protection from Galactic Cosmic Radiation.

John Slough

MSNW, LLC

An optimal shielding configuration was realized during the Phase I study; it is referred to as a Magnetospheric Dipolar Torus (MDT). This configuration has the singular ability to deflect the vast majority of the GCR, including HZE ions. In addition, the MDT shields both the habitat and the magnets, eliminating the secondary particle irradiation hazard. That hazard can dominate over the primary GCR for the closed magnetic topologies that have been investigated in the past. MDT shielding also reduces structural, mass and power requirements. For Phase II, a low-cost method for testing shielding on Earth has been devised, using cosmic GeV muons as a surrogate for the GCR encountered in space.

During the Phase I study, MSNW developed a 3-D relativistic particle code to evaluate magnetic shielding of GCR. It evaluated a wide range of magnetic topologies and shielding approaches, from nested tori to large, plasma-based magnetospheric configurations. It was found that by far the best shielding performance was obtained with the MDT configuration. The plans for Phase II include an upgrade of the MSNW particle code to include material activation and a full range of GCR ions and energies. The improved particle code will be employed to characterize and optimize a subscale MDT for shielding GCR-generated muons arriving at the Earth’s surface. The subscale MDT will be designed, built, and then used in several shielding tests with the GCR-induced muons at various locations and elevations. The intent is to validate the MDT concept and bring it to TRL 4. A detailed design will be carried out for the next stage of development, employing High Temperature Superconducting Coils, along with plans for both structures and a space habitat. A substantial effort will be made to find critical NASA and commercial aerospace partners for future testing in Phase III to TRL 5.

https://www.nasa.gov/directorates/spacetech/niac/2018_Phase_I_Phase_II/Spacecraft_Scale_Magnetospheric_Protection_from_Galactic_Cosmic_Radiation
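Magnetic shielding of GCR is hard because of magnetic rigidity, which sets the radius of a charged particle's path in a given field. The sketch below is a textbook estimate, not MSNW's particle code. It assumes 1 GeV per nucleon as a representative GCR energy and approximates the nucleon mass by the proton mass.

```python
import math

PROTON_REST_GEV = 0.938272  # proton rest energy, GeV
C = 299_792_458.0           # speed of light, m/s


def rigidity_gv(kinetic_gev_per_nucleon: float, mass_number: int, charge: int) -> float:
    """Magnetic rigidity R = pc / (Ze) in gigavolts (nucleon mass ~ proton mass)."""
    t = kinetic_gev_per_nucleon * mass_number   # total kinetic energy, GeV
    mc2 = PROTON_REST_GEV * mass_number         # approximate rest energy, GeV
    pc = math.sqrt(t**2 + 2.0 * t * mc2)        # relativistic momentum times c, GeV
    return pc / charge


def gyroradius_m(rigidity_in_gv: float, b_tesla: float) -> float:
    """Radius of the circular path perpendicular to B: r = R / (c B)."""
    return rigidity_in_gv * 1e9 / (C * b_tesla)


for name, mass_number, charge in [("proton", 1, 1), ("iron (HZE)", 56, 26)]:
    r_gv = rigidity_gv(1.0, mass_number, charge)
    print(f"1 GeV/n {name}: R = {r_gv:.2f} GV, "
          f"gyroradius in 1 T = {gyroradius_m(r_gv, 1.0):.1f} m")
```

A 1 GeV proton in a 1-tesla field circles on a radius of about 5.7 m, and a 1 GeV/n iron nucleus on about 12 m. Deflecting them therefore requires strong fields extending over spacecraft-scale volumes.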

It’s OK to laud the private enterprises for their work.

Yet the successes we’ve seen have come from people who made their money in very unusual circumstances: the Internet boom.

So they were not schooled in traditional business, nor do they have interests similar to those of more traditional business leaders.

This has boosted interest in these areas right now, but it will not last forever.

Let’s say they are experiencing a “Skunk Works” period. Elon Musk got a lead in electric cars, but other corporations are catching up. SpaceX will mature and eventually become a slow-moving giant like Boeing.

The same is true for science institutions. I am sitting on something that I found during ‘blue-sky research’ that the private sector would never have funded. Now, under pressure from the private sector, the institutions are starting to behave the same way.

So the funding has run out, and we are still trying to go forward with absolutely no funds at all, not even proper wages for those of us involved. I ask for no pay, but I have taken a second job.

Now I find I do not have enough energy for the research. So please, do not applaud the private sector for everything it does. (No, I do not do research on space; my discovery is in GM food. I have a gene that gives strong disease resistance, and that’s why it’s controversial.)

Did you try non-traditional funding, like ICOs?

Your experience, Andrei (that human beings can run “hot” and “cold” on such large, long-term projects), also parallels a worry that I have, and it also–which could be a positive thing–goes against two widely-held popular notions:

Elon Musk is drawn to settling Mars because it excites him. (His “practical” reason for doing it–creating a second home for humanity–isn’t practical, at least not with current technology [increasingly he’s talking about lunar settlement now], and what if not enough people wanted to go to either place, so that a viable self-sustaining off-Earth society couldn’t develop?) But:

Will Musk’s enthusiasm for developing larger, cheaper, and increasingly-reusable launch vehicles (and spaceships)–the transportation infrastructure that such enterprises would require–continue if it becomes evident to him that true Martian and/or lunar colonies (as opposed to Antarctic base-like “encampments”) simply aren’t going to be possible in his lifetime, if ever? (Jeff Bezos of Blue Origin seems to have a longer view, talking about off-world colonists centuries from now looking back to our time as being when extraterrestrial settlement began. His company’s heraldic imagery [which prominently features two tortoises] and motto, “Gradatim Ferociter” [“Step by Step, Ferociously”], and its partnerships with other aerospace companies, also indicate his patient, steady progress toward that goal.) Also:

A common belief is that no interstellar probes will–or even should–be developed and launched until quite fast ones are feasible, because “Old, slow probes will be passed by new, faster ones.” This belief rests upon two inter-twined assumptions, which the history of the Space Age in general, and that of the Apollo program in particular, have shown to be untrue; the assumptions are that human progress and technological development are steady and continuous (whether linear or exponential), and:

If they were, then Apollo, Mariner, and Pioneer would have led to lunar bases, Mars expeditions, extensive robotic reconnaissance of the other planets of our Solar System, and numerous ultraplanetary probe missions by now. But social, political, international, and economic circumstances change. So do the gross interests of the scientific community as a whole; it too is subject to periodic “fashions,” and all of these factors affect the rate of technological development and the levels of funding of space projects. As well:

With this being the case, interstellar probes–even slower-than-preferred ones–should be built and launched whenever favorable confluences of scientific and societal interest, funding, and technological readiness make it possible, because such opportunities do not arise automatically or often (there probably won’t *be* new starprobes hot on the heels of old ones, simply because any starprobes, slow or fast, will be very expensive). A more probable chronological spacing of successive probes would be on the order of decades or centuries, unless their costs could be greatly reduced (“Sun-diver” solar sail starprobes might enable this). In addition:

Another widely-held notion is that “It wouldn’t be worthwhile to send slow interstellar probes because no one a century or more from now would be interested in data from them.” Really? We directly image Jovian exoplanets that are hundreds of light-years away, and have even measured their wind velocities via transits, and that is–by definition–*very* old data. Slow starprobes would tell us a lot while they’re en route to their target stars, and after arrival they would have far more detailed instrument and imager views than what we could ever perceive from the distant Earth. Another example that runs counter to this assumption involves Pioneer 10 & 11 and Voyager 1 & 2; no space scientists, to my knowledge, have ever suggested that they be turned off “because they’re too old and obsolete.” On the contrary, they were (Pioneer 10 & 11) and are (Voyager 1 & 2) treasured as reporting on conditions in remote regions of space, where no other on-site instruments are available (New Horizons will also be so valued, after its last encounter).

@Antonio: Yes, we are looking into getting funding from ‘unusual’ places.

The problem we face is that more research is still needed, for example on which organisms/species can receive the gene.

Only after that will investors be interested, and then we could sell the idea if we run out of funds again.

Another group will most likely find the gene in the future. We might publish what we have in the end, but then this will be delayed by several decades.

@J. Jason Wentworth: Yes, I gave this as one example, and I worry about many tendencies in the current world. There is no proactive effort on problems we know are coming. My little gene could have been one small contribution to feeding Earth’s larger future population. Other ideas and discoveries are needed to fully solve that problem.

The same goes for space research; it is also blue-sky research.

Yes, Elon seems to have come to his senses, and I applaud that; the fallout from the failure of his grand plan would have bound us even more to Earth.

Jeff Bezos always seems to have been more realistic about long-term ideas.

Eventually, I do think several problems could very well be solved through a more active space program, often as ‘spin-off’ ideas and engineering.

(If someone finds out how to make CHON food from comets or on Titan to feed astronauts, it could also be used on Earth.)

But there are illogical views, and politicization of everything from atomic propulsion, global warming, and nuclear power (which would solve global warming) to the idea that GM foods are in some odd way dangerous.

I often tell such people that genes are everywhere: do you often get attacked by the genes in dandruff or loose hairs?

All this stupidity makes me pessimistic about the outcome; I even fear a (partial) crash of our civilization.

I’m not the least bit pessimistic about the future. That has always seemed to be the majority persuasion, all my life and as long as records have been kept. Oddly, most people are–and have been–optimistic about their *own* lives, but pessimistic about the world, which is cognitively dissonant; if the world “goes to the dogs,” so will the optimists’ lives, unless they are very lucky, but:

That isn’t to say that I don’t see any problems; I do (several of them are astronomical/space-related), but they are within our capability to solve or prevent, and they even present positive opportunities (to “turn lemons into lemonade”), including as useful infrastructure/public works projects (your above-listed ideas also have such potential). They are as follows:

[1] Developing affordable and tasty “CHON-based” foods. Even in 1968, in his non-fiction book “The Promise of Space,” Arthur C. Clarke wrote–in Chapter 19, “The Lunar Colony”–that “Perhaps by the time (around the turn of the century?) we are planning extensive lunar colonization, the chemists may be able to synthesize any desired food from such basics as lime, phosphates, carbon dioxide, ammonia, water. In fact, this could be done now if expense was no object; it will *have* to be done *economically* [the “**” stand for italics], within the next few decades, to feed Earth’s exploding population.” (This dire prediction, which he repeated elsewhere in the book, fortunately never came to pass; more on such matters below.)

He also mentioned making foods from algae culture (so did Neil P. Ruzic in his 1970 book “Where the Winds Sleep: Man’s Future on the Moon, A Projected History,” see: http://www.amazon.com/Where-Winds-Sleep-Projected-History/dp/0385060645/ref=sr_1_1?s=books&ie=UTF8&qid=1522935766&sr=1-1&keywords=Where+the+Winds+Sleep+by+Neil+P.+Ruzic [and his 1965 book “The Case for Going to the Moon”–for economic, medical, scientific, and engineering reasons–see: http://www.amazon.com/Case-Going-Moon-Neil-Ruzic/dp/B0007DVXVU/ref=sr_1_fkmr0_1?s=books&ie=UTF8&qid=1522935891&sr=1-1-fkmr0&keywords=the+case+for+going+to+the+moon+by+neil+p.+rustic ] is also well worth reading!).

Reading Ruzic’s detailed “recipes” for manufactured algae-based meat, bread, and dairy products actually made me hungry. (He was a civil engineer and founded Industrial Research, Inc.; he also patented a very simple lunar cryostat that used the cryogenic cold of the lunar “night season,” as he predicted future lunarians would call the two weeks of darkness. Wernher von Braun was impressed with Ruzic, and wrote the Foreword to “Where the Winds Sleep.”)

I would happily eat CHON- and algae-based foods (goat ^milk^ would also be an excellent component of that “fare selection”–I use it on cereal, and I use goat milk soap for showering [goats were also favored in the space colony farming studies]) if they were affordable and tasty, for health reasons and because, although I’m not a vegetarian, I would rather avoid eating animals if comparable protein sources were available. Many other people would also do the same, and for the same reasons, if such culinary options were available. There’s a market there, on Earth and (later) in lunar and space colonies.

[2] For only about $5–$10 billion, we could “arc-discharge-proof” the United States’ electrical power distribution grids, which would prevent millions of deaths if another solar Carrington Event (the last one, in 1859, burned telegraph wires off poles, shocked telegraph operators, and made telegraph pylons throw off sparks) or a human-created EMP (Electro-Magnetic Pulse) nuclear attack, which Iran and North Korea are interested in, were to occur. While government funding is very seldom an investment in the financial sense, despite what politicians are wont to say (governments make *expenditures*, which only in rare situations generate financial returns), the money spent on the “Brobdingnagian lightning arrestor-like” equipment, and on the workers’ pay to install it, would be an investment in our lives’ safety, because it would prevent loss of life and the economic ruin that would accompany it (Quebec got a small “taste” of such a ‘baby’ Carrington Event in 1989).

[3] A low-probability but high-casualty event (and a high-damage one, with the accompanying financial cost, if it ever occurred here) would be the impact of a previously unknown long-period comet (we already know about many of the potentially hazardous short-period comets and asteroids, including their orbital elements). A long-period comet impact, while very unlikely, would give us no time to prepare for it. Therefore:

A previously-prepared “paper plan” (which could be quickly activated if needed) could simply consist of “earmarking” one or two on-alert nuclear warheads, which–if the need arose–would be offloaded from their ICBMs (or removed from their free-fall nuclear bomb cases, depending on their type and normal use), then mated with an existing communications satellite bus (one kept in storage) and launched on the next available rocket possessing sufficient Delta-V, in order to intercept the incoming comet as far away from the Earth as possible. Then:

A stand-off detonation (an enhanced X-ray warhead would be ideal for this) would impart enough thrust to the comet (by vaporizing a thin layer of its surface material on the side facing the blast) to ensure a clean miss, without fragmenting the comet nucleus. Before this “paper plan” was officially adopted, the selected satellite bus design could be test-flown with a dummy nuclear warhead attached, in order to validate the flight control, navigation, and warhead arming/fuzing/detonating hardware and software. Thereafter, the plan would be kept in reserve, in case it ever proved to be necessary. Two such satellite buses could be kept in “bonded storage” (‘salted away’ for possible future use, so to speak), to guard against a launch failure or a spacecraft malfunction before it could reach the comet.

[4] There is a volcano (whose name escapes me at the moment) in the Atlantic Ocean, in the neighborhood of the Azores, if memory serves. The section of the undersea ridge where it is located is very unstable; a huge “tongue” of rock about nine miles long and a mile thick contains many “pockets” filled with seawater, which–if the lava hits them–will explode into steam, causing this asteroid-size mass of rock to break free and plunge to the ocean bottom. This will generate a gigantic tsunami that will simply “erase” the eastern seaboard of North, Central, and South America, and:

This will, without doubt, occur sometime in the next 10,000 years or so; but exactly when, no one knows. It might happen tomorrow, or ten thousand years hence. But while we can’t prevent it, we could, if we wished, greatly ameliorate its effects simply by dropping many rocks off our shores to create a breakwater. This would also be a good and useful (including for tourism) public works project, which would create many jobs. But creating such a long and robust tri-continental breakwater would require dropping a *lot* of rocks into place offshore, so no one wants to spend money on something that might not come in handy for up to 10,000 years. However:

If the volcano causes the huge “tongue” of rock to plummet into the Atlantic next week, everyone (who survives) will be demanding to know why the politicians, who knew about the danger, did nothing to lessen it. (The human impatience-based problems regarding committing to building & launching slow interstellar probes are related to this: if the “payoff” is beyond one’s lifetime, most people are reluctant to devote time and money to it [the attitude that resulted in people committing to building multi-lifetime pyramid and cathedral projects is, unfortunately, quite rare today, although some people, myself included, are willing to “build probes that will inform generations yet to be even conceived”].)

I don’t worry about global warming because in my lifetime, numerous dire predictions of civilization-ending–and even world-ending–catastrophes of a similar nature just never happened. (These included the population explosion [and worldwide starvation resulting from it], nuclear war, pollution, killer bees, the end of oil [first in the 1970s, and again in the 1990s, then called peak oil], the ice age of the 1970s, and the ozone hole [before the ozone hole, the SST–supersonic transport–was supposed to destroy the ozone layer in the 1970s, even though military supersonic jets, and military as well as civilian ^subsonic^ jets that had been operating at the same altitudes for decades, didn’t harm it; the jarring sonic booms in daily public tests, and the cost overruns of the Boeing 2707, ultimately resulted in the SST’s cancellation]). Also:

A long-period comet impact, another Carrington Event, or the mid-Atlantic volcano’s tsunami would certainly result in many deaths and much suffering, but civilization–and the human race–wouldn’t be imperiled by such calamities, and we can do things to prevent or ameliorate them. (A nearby supernova explosion’s radiation would be “lights out” for us–and maybe for all Earth life, except possibly deep subsurface-dwelling microbes, and perhaps some insects–but there’s no point worrying about that because we have nowhere to escape to, and no way to get there [alive, anyway], even if we did know of a refuge.) But no stars “within range” show signs of going supernova, so it’s only a hypothetical possibility.

If we tend to the other problems (which we can, fortunately, do something about), curb our numbers (which is already beginning to happen), and educate and empower girls and women (which has a civilizing influence on societies, and results in families having fewer children, and at older ages, when the parents are wiser and better prepared, financially and emotionally, to look after them; this started in Brazil), we will have an even brighter and more prosperous, peaceful, and pleasant future, both on and off the Earth.

Seafloor spreading.

Earth’s Stable Temperature Past Suggests Other Planets Could Also Sustain Life.

“Seafloor weathering was more important for regulating temperature of the early Earth because there was less continental landmass at that time, the Earth’s interior was even hotter, and the seafloor crust was spreading faster, so that was providing more crust to be weathered.”

http://astrobiology.com/2018/04/earths-stable-temperature-past-suggests-other-planets-could-also-sustain-life.html

https://centauri-dreams.org/2018/03/27/trappist-1-an-abundance-of-water/#comment-170446

Constraining the climate and ocean pH of the early Earth with a geological carbon cycle model.

The early Earth’s environment is controversial. Climatic estimates range from hot to glacial, and inferred marine pH spans strongly alkaline to acidic. Better understanding of early climate and ocean chemistry would improve our knowledge of the origin of life and its coevolution with the environment. Here, we use a geological carbon cycle model with ocean chemistry to calculate self-consistent histories of climate and ocean pH. Our carbon cycle model includes an empirically justified temperature and pH dependence of seafloor weathering, allowing the relative importance of continental and seafloor weathering to be evaluated. We find that the Archean climate was likely temperate (0–50 °C) due to the combined negative feedbacks of continental and seafloor weathering. Ocean pH evolves monotonically from 6.6 (+0.6/−0.4, 2σ) at 4.0 Ga to 7.0 (+0.7/−0.5, 2σ) at the Archean–Proterozoic boundary, and to 7.9 (+0.1/−0.2, 2σ) at the Proterozoic–Phanerozoic boundary. This evolution is driven by the secular decline of pCO₂, which in turn is a consequence of increasing solar luminosity, but is moderated by carbonate alkalinity delivered from continental and seafloor weathering. Archean seafloor weathering may have been a comparable carbon sink to continental weathering, but is less dominant than previously assumed, and would not have induced global glaciation. We show how these conclusions are robust to a wide range of scenarios for continental growth, internal heat flow evolution and outgassing history, greenhouse gas abundances, and changes in the biotic enhancement of weathering.

http://www.pnas.org/content/pnas/early/2018/03/27/1721296115.full.pdf

New Study Findings: Dinosaur-killing Asteroid Also Triggered Massive Magma Releases Beneath the Ocean.

https://paleontologyworld.com/exploring-prehistoric-life-paleontologists-curiosities/new-study-findings-dinosaur-killing-asteroid

Anomalous K-Pg–aged seafloor attributed to impact-induced mid-ocean ridge magmatism.

“Eruptive phenomena at all scales, from hydrothermal geysers to flood basalts, can potentially be initiated or modulated by external mechanical perturbations. We present evidence for the triggering of magmatism on a global scale by the Chicxulub meteorite impact at the Cretaceous-Paleogene (K-Pg) boundary, recorded by transiently increased crustal production at mid-ocean ridges. Concentrated positive free-air gravity and coincident seafloor topographic anomalies, associated with seafloor created at fast-spreading rates, suggest volumes of excess magmatism in the range of ~10⁵ to 10⁶ km³. Widespread mobilization of existing mantle melt by post-impact seismic radiation can explain the volume and distribution of the anomalous crust. This massive but short-lived pulse of marine magmatism should be considered alongside the Chicxulub impact and Deccan Traps as a contributor to geochemical anomalies and environmental changes at K-Pg time.”

http://advances.sciencemag.org/content/4/2/eaao2994.full

The First Naked-Eye Superflare Detected from Proxima Centauri

Press Release – Source: astro-ph.EP

Posted April 9, 2018 9:30 PM

http://astrobiology.com/2018/04/the-first-naked-eye-superflare-detected-from-proxima-centauri.html

https://arxiv.org/abs/1804.02001