We are entering the greatest era of discovery in human history, an age of exploration that the thousands of Kepler planets, both confirmed and candidate, only hint at. Today Ashley Baldwin looks at what lies ahead, in the form of several space-based observatories, including designs that can find and image Earth-class worlds in the habitable zones of their stars. A consultant psychiatrist at the 5 Boroughs Partnership NHS Trust (Warrington, UK), Dr. Baldwin is likewise an amateur astronomer of the first rank whose insights are shared with and appreciated by the professionals designing and building such instruments. As we push into atmospheric analysis of planets in nearby interstellar space, we’ll use tools of exquisite precision shaped around the principles described here.

by Ashley Baldwin

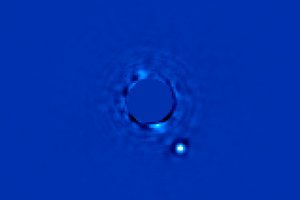

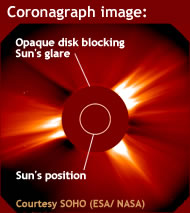

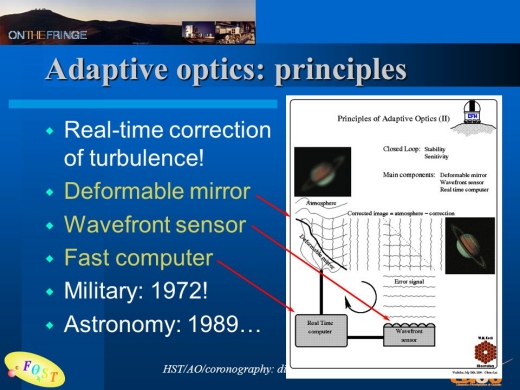

This review is going to look at the current state of play with respect to direct exoplanet imaging. To date this has only been done from ground-based telescopes, limited by atmospheric turbulence to wide-orbit, luminous young gas giants. However, the imaging technology that has been developed on the ground can be adapted and massively improved for space-based imaging. The technology to do this has matured immeasurably over even the last 2-3 years and we stand on the edge of the next step in exoplanet science, not least because of a disparate collection of “coronagraphs”. The original coronagraph was a simple physical block placed in the optical pathway of a telescope, designed by French astronomer Bernard Lyot to image the corona of the Sun; he lends his name to one type of coronagraph.

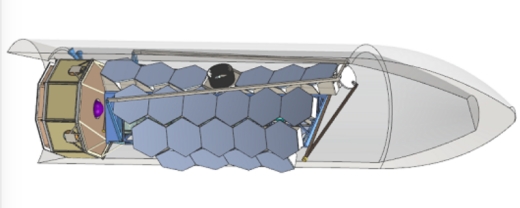

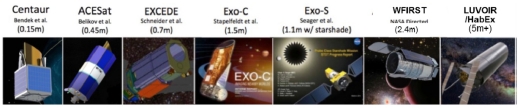

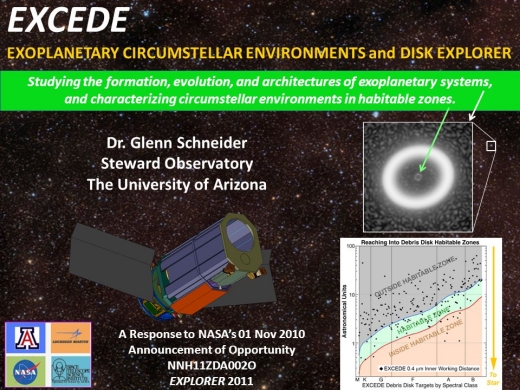

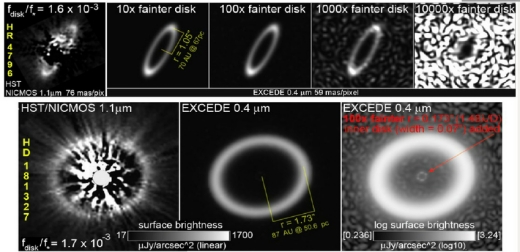

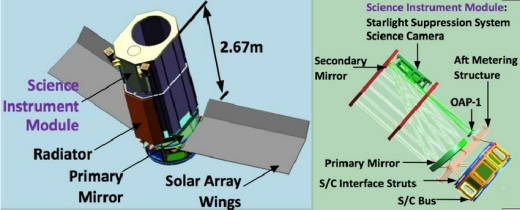

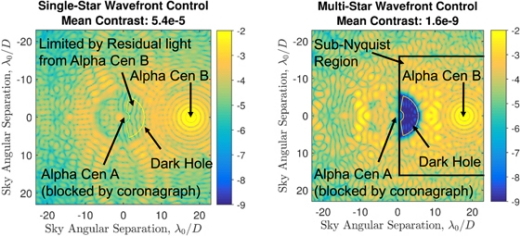

This is an instrument that, in combination with pioneering ground-based work on telescope “adaptive optics” systems and advanced infrared sensors in the late 1980s and early ’90s, has evolved over the last ten years or so into designs for space-based instruments, later generations of which are now driving telescopes like the 2.4m WFIRST, 0.7m EXCEDE and 4m HabEX. Different coronagraphs work in different ways, but the basic principle is the same. On-axis starlight is blocked out as much as possible, creating a “dark hole” in the telescope field of view where much dimmer off-axis exoplanets can then be imaged.

Detailed exoplanetary characterisation including formation and atmospheric characteristics is now within tantalising reach. Numerous flagship telescopes are at various stages of development awaiting only the eventual launch of the James Webb Space Telescope (JWST), and its cost overrun, before proceeding. Meantime I’ve taken the opportunity this provides to review where things are by looking at the science through the eyes of an elegant telescope concept called EXCEDE (Exoplanetary Circumstellar Environment & Disk Explorer), proposed for NASA’s Explorer program to observe circumstellar protoplanetary and debris discs and study planet formation around nearby stars of spectral classes M to B.

Image: French astronomer Bernard Lyot.

Although only a concept and not yet selected for development, I believe EXCEDE – or something like it – may yet fly in some iteration or other, bridging the gap between lab maturity and proof of concept in space and in so doing hastening the move to the bigger telescopes to come. Two of which, WFIRST (Wide Field Infrared Survey Telescope) and HabEX (Habitable Exoplanet Imaging Mission) also get coverage here.

Why was telescope segmented deployability so aggressively pursued for the JWST?

“Monolithic”, one-piece mirror telescopes are heavy and bulky – which gives them their convenient rigid stability, of course.

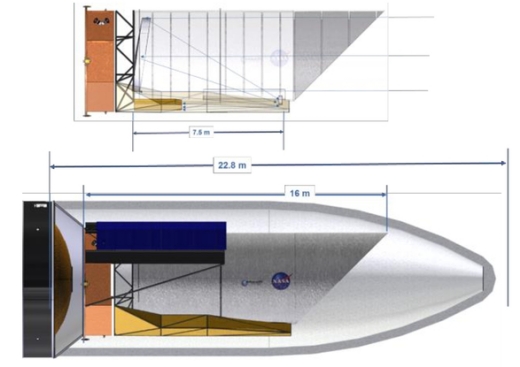

However, even a 4m monolithic mirror-based telescope would take up the full 8.4m fairing of the proposed SLS Block 1B and with a starshade added would only just fit in lengthways if it had a partially deployable “scarfed” baffle. The telescope would mass around 20 tonnes if built from conventional materials. If built with lightweight silicon carbide, however, already proven by the success of ESA’s 3.5m Herschel telescope, it would come in at about a quarter of this mass.

Big mirrors made out of much heavier glass ceramics like Zerodur have yet to be used in space beyond the 2.4m Hubble and would need construction of 4m-sized test “blanks” prior to incorporation in a space telescope. Bear in mind too that Herschel also had to carry four years’ worth of liquid coolant in addition to propellant. With minimal modification, a similarly proportioned telescope might fit within the fairing of a modified New Glenn launcher too, if NASA shakes off its reticence about using silicon carbide in space telescope construction. That is something that may yet be driven, like JWST before it, by launcher availability, given the uncertain future of the SLS and especially its later iterations.

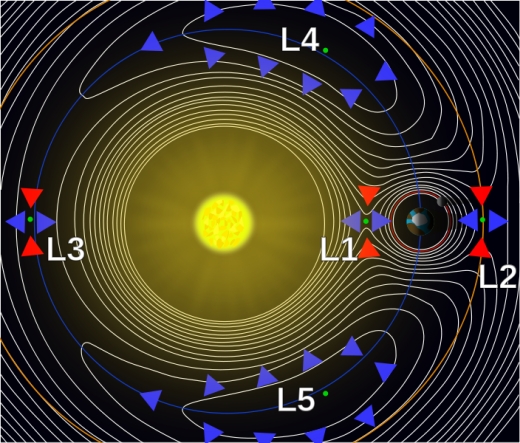

Meantime, at JWST conception there just wasn’t any suitable heavy lift/big fairing rocket available (nor indeed is there now!) to get a single 6.5m mirror telescope into space, especially not to the prime observation point at the Sun/Earth L2 Lagrange point some 900,000 miles away in deep space. And that was the aperture deemed necessary to be a worthy successor to Hubble.

An answer was found in a Keck-style segmented mirror which could be folded up for launch and then deployed after launch. Cosmic origami if you will (it may be urban myth but rumour has it origami experts were actually consulted).

The mistake was in thinking that transferring the well established principle of deployable space radio antennae to visible/IR telescopes would be (much) easier than it would turn out. The initially low cost “evolved”, but as it did so did the telescope and its descendants. From infrared cosmology telescope to “Hubble 2” and finally exoplanet characteriser as the new branch of astronomy arose in the late nineties.

A giant had been woken and filled with a terrible resolve.

The killer for JWST hasn’t been the optical telescope assembly itself, so much as folding up the huge attached sunshade for launch and then deploying it. That’s what went horribly wrong with “burst seams” in the latest round of tests and which continues to cause delays. Too many moving parts too – 168 if I recall. Moving parts and hard vacuums just don’t mix and the answer isn’t something as simple as lubricants, given conventional ones would evaporate in space, so that leaves powders, the limitations of which were seen with the failure of Kepler’s infamous reaction wheels. Cutting-edge a few years ago, these are now deemed obsolete for precision imaging telescopes, replaced instead by “microthrusters” – a technology that has matured quietly on the sidelines and will be employed on the upcoming ESA Euclid and then NASA’s HabEX.

From WFIRST to HabEX

The Wide Field IR Space Telescope, WFIRST, is monolithic more by circumstance than design, and sadly committed to using reaction wheels, albeit six instead of Kepler’s paltry four. I have written about this telescope before, but a lot of water, as they say, has flowed under the bridge since then. An ocean’s worth indeed, with wider implications, the link as ever being exoplanet science.

To this end, any overview of exoplanet imaging cannot be attempted without starting with JWST and its ongoing travails, before revisiting WFIRST and segueing into HabEX, then finally seeing how all this can be applied. I will do this by focusing on an older but still robust and rather more humble telescope concept, EXCEDE.

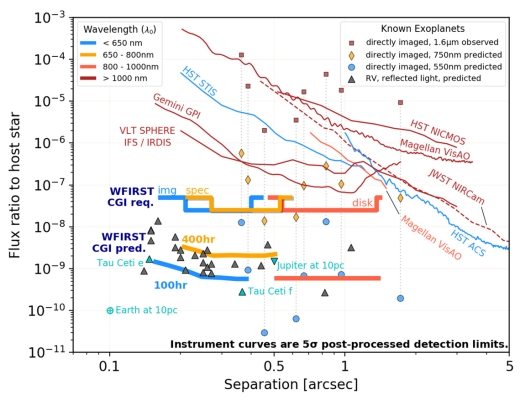

Reaction wheels – so long the staple of telescope pointing. But now passé, and why? Exoplanet imaging. The vibration the reaction wheels cause, though slight, can impact on imaging stability even at the larger 200mas inner working angle (IWA) of the WFIRST coronagraph, IWA being defined as the nearest to the star that maximum contrast can be maintained. In the case of the WFIRST coronagraph this is 6e10 contrast (which has already significantly exceeded its original design parameters).

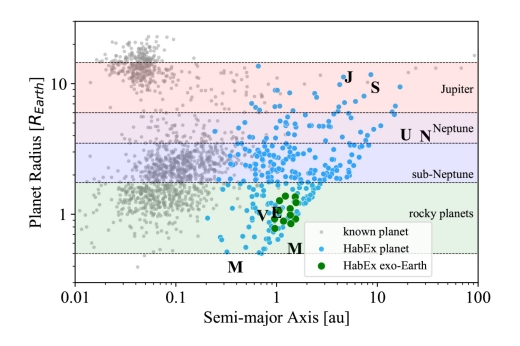

The angular separation of a planet from its star, or “elongation”, e, can be expressed as e = a/d, where a is the planetary semi-major axis expressed in Astronomical Units (AU), d is the distance of the star from Earth in parsecs (1 parsec = 3.26 light years) and e comes out in arcseconds. By way of illustration, the Earth as imaged from ten parsecs would thus appear to be 100mas from the Sun – but would require a minimum 3.1m aperture scope to capture enough light and provide enough angular resolution of its own. Angular resolution of a telescope is its ability to resolve two separate points and is expressed as the related λ / D, where λ is the observation wavelength and D is the aperture of the telescope, both in meters. So the shorter the wavelength and bigger the aperture, the greater the angular resolution.
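The two relations above are easy to check numerically. What follows is only a minimal sketch: the function names and the 0.5 micron observing wavelength are my own illustrative assumptions, not values taken from any mission document.

```python
import math

# Milliarcseconds in one radian (about 2.06e8).
MAS_PER_RADIAN = math.degrees(1.0) * 3600.0 * 1000.0


def elongation_mas(semi_major_axis_au: float, distance_pc: float) -> float:
    """Maximum star-planet separation e = a/d, in milliarcseconds.

    With a in AU and d in parsecs the ratio is in arcseconds (that is how
    the parsec is defined), so only a factor of 1000 is needed.
    """
    return semi_major_axis_au / distance_pc * 1000.0


def diffraction_limit_mas(wavelength_m: float, aperture_m: float) -> float:
    """Angular resolution lambda / D, converted from radians to mas."""
    return wavelength_m / aperture_m * MAS_PER_RADIAN


if __name__ == "__main__":
    # Earth-Sun analogue seen from 10 parsecs.
    print(f"elongation: {elongation_mas(1.0, 10.0):.1f} mas")
    # lambda / D at an assumed 0.5 micron for three apertures in this article.
    for aperture in (0.7, 2.4, 4.0):
        limit = diffraction_limit_mas(0.5e-6, aperture)
        print(f"D = {aperture} m: lambda/D = {limit:.1f} mas")
```

Run as a script, this prints the 100 mas elongation quoted above alongside λ / D for each aperture, which makes it plain why a 0.7m telescope cannot reach the separations that a 4m one can.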

A coronagraph in the optical pathway will impact on this resolution according to the related equation n λ / D, where n is a nominal factor set somewhere between 1 and 3 and dependent on coronagraph type, with a lower number giving a smaller inner working angle nearer to the resolution/diffraction limit of the parent telescope. In practice n=2 is the best currently theoretically possible for coronagraphs, with HabEX set at 2.4 λ / D. EXCEDE’s PIAA coronagraph rather optimistically aimed for 1 λ / D – currently unobtainable, though later VVC iterations or perhaps a revised PIAA might yet achieve this, and what better way to find out than via a small technological demonstrator mission?

This also shows that searching for exoplanets is best done at shorter visible wavelengths between 0.4 and 0.55 microns, with telescope aperture determining how far out from Earth planets can be searched for at different angular distances from their star. This in turn will govern the requirements determining mission design. So a habitable zone imager like HabEX, where n=2.4, can with its 4m aperture search the habitable zones of sun-like stars out to a distance of about 12 parsecs. Coronagraph contrast performance varies according to design and wavelength, so higher values of n, for instance, might still allow precision imaging further out from a star, perhaps looking for Jupiter/Neptune analogues or exo-Kuiper belts. Coronagraphs also have outer working angles, the maximum angular separation that can be viewed between a star and planet or planetary system (cf. starshades, whose outer working angle is limited only by the field of view of the host telescope and is thus large).
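To see how inner working angle translates into survey reach, here is a rough sketch. The helper names and the 0.5 micron wavelength are my assumptions, and the “reach” is purely geometric: the distance at which a planet at maximum elongation sits exactly on the inner working angle.

```python
import math

# Milliarcseconds in one radian.
MAS_PER_RADIAN = math.degrees(1.0) * 3600.0 * 1000.0


def inner_working_angle_mas(n: float, wavelength_m: float, aperture_m: float) -> float:
    """Coronagraph inner working angle n * lambda / D, in milliarcseconds."""
    return n * wavelength_m / aperture_m * MAS_PER_RADIAN


def max_search_distance_pc(orbit_au: float, iwa_mas: float) -> float:
    """Distance at which an orbit of radius orbit_au subtends exactly iwa_mas.

    Geometric upper bound only: it ignores orbital inclination, phase and
    how faint the planet is.
    """
    return orbit_au / (iwa_mas / 1000.0)


if __name__ == "__main__":
    # HabEX-like case: n = 2.4, 4 m aperture, assumed 0.5 micron wavelength.
    iwa = inner_working_angle_mas(2.4, 0.5e-6, 4.0)
    print(f"IWA = {iwa:.1f} mas")
    # Reach for a 1 AU orbit and for a wider, Jupiter-like 5.2 AU orbit.
    for orbit in (1.0, 5.2):
        reach = max_search_distance_pc(orbit, iwa)
        print(f"a = {orbit} AU: reach = {reach:.1f} pc")
```

The purely geometric reach for a 1 AU orbit comes out a little larger than the roughly 12 parsecs quoted above, which is unsurprising given that a real survey also has to contend with orbital inclination, phase and planet brightness.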

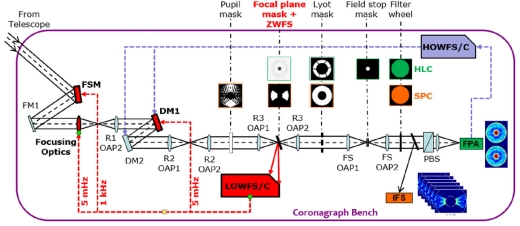

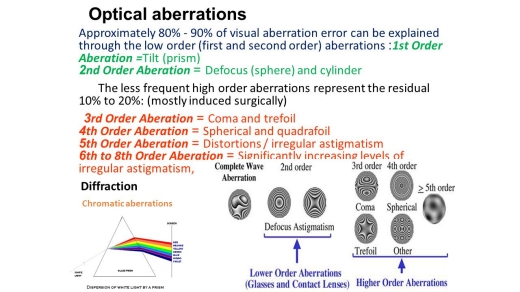

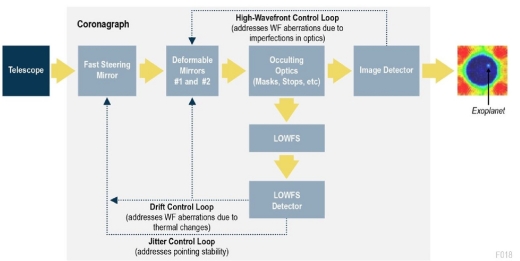

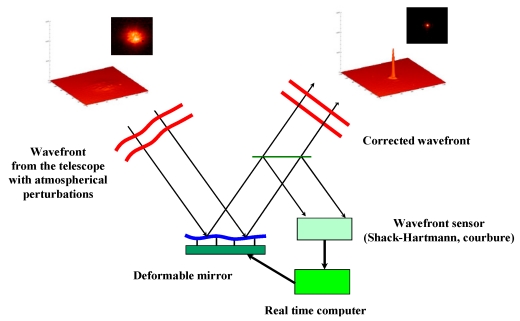

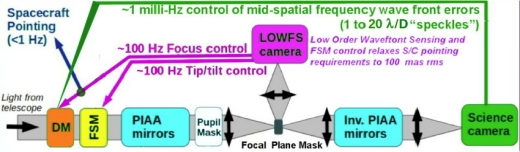

Any such telescope, be it WFIRST or HabEX, will for success require numerous imaging impediments, so-called “noise”, to be adequately mitigated. Noise comes from many sources: target star activity, stellar jitter, telescope pointing and drift, and optical aberrations. First come “low-order wavefront errors”, accounting for up to 90% of all telescope optical errors (ground and space) and including defocus, pointing errors like tip/tilt, and telescope drift occurring as a target is tracked, due for instance to variations in exposure to sunlight at different angles. Then come classical optical “higher order errors” such as astigmatism, coma, spherical aberration and trefoil, due to imperfections in telescope optics. Individually tiny but unavoidably cumulative.

It cannot be emphasised enough that for exoplanet imaging, especially of Earth-mass habitable zone planets, we are dealing with required precision levels down to hundredths of billionths of a meter. Picometers. Tiny fractions of even short optical wavelengths. Such wavefront errors are by far the biggest obstacle to be overcome in high-contrast imaging systems. The image above makes the whole process seem so simple, yet in practice this remains the biggest barrier to direct imaging from space and from the ground even more.

The central core of the adaptive optics (AO) system is a loop: a (varying) wavefront error is picked up by the sensor and fed to the onboard computer, which in turn drives the deformable mirror to make the correction (along with parallel correction of pointing tip/tilt errors by a delicate “fast steering mirror”). Until recently both the delay around that loop and the precision of the correction fell short of what was needed.

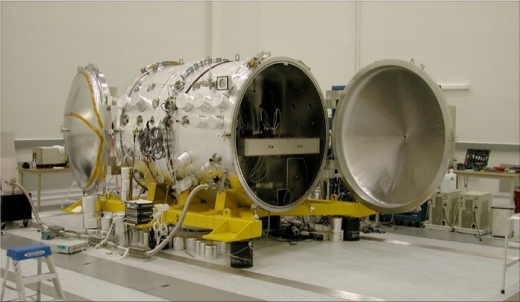

It has only been over the last few years that there have been essential breakthroughs that should finally allow elegant theory to become pragmatic practice. This has come through a combination of wavefront correction via improved deformable mirrors and wavefront sensors and their enabling computer processing speed, all working in tandem. This has led to the creation of so-called “extreme adaptive optics”, with the general rule that the shorter the observed wavelength, the greater the sensitivity, or “extremity”, of the required AO. It is an even larger impediment on the ground where the atmosphere adds an extra layer of difficulty. These combine to allow a telescope to find and image tiny, faint exoplanets, and more importantly still, to maintain that image for the tens or even hundreds of hours necessary to locate and characterise them. Essentially a space telescope’s adaptive optics.

A word here. Deformable mirrors, fast steering mirrors, wavefront sensors, fine guidance sensors & computers, coronagraphs, microthrusters, software algorithms. All of these, and more, add up to a telescope’s adaptive optics – originally developed and then evolved on the ground, this instrumentation is now being adapted in turn for use in space. It all shares the feature of modifying and correcting any errors in wavefront of light entering a telescope pupil prior to reaching its focal plane and sensors.

Without it imaging via big telescopes would be severely hampered and the incredible precision imaging described here would be totally impossible.

That said, the smaller the IWA the greater the sensitivity to noise and especially vibration and line of sight “tip/tilt” pointing errors, and the greater the need for the highest performance, so called “extreme adaptive optics”. HabEX has a tiny IWA of 65 mas for its coronagraph (to allow imaging of at least 57% of all sun-like star hab zones out as far as 12 parsecs) and operates at a raw contrast as low as 1e11 – one part in a hundred billion!

Truly awesome. To be able to image at that kind of level is incredible frankly when this was just theory less than a decade ago.

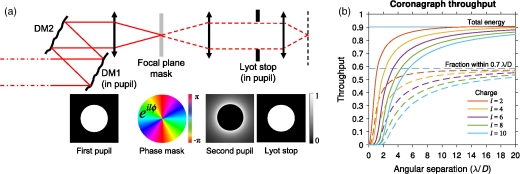

That’s where the revolutionary Vector Vortex “charge” coronagraph (VVC) now comes in – the “charge 6” version still offers a tiny IWA but is less sensitive to all forms of noise – and especially the low wavefront errors described above – than other ultra high performance coronagraphs, noise arising from small but cumulative errors in the telescope optics.

This played a major if not pivotal role in the VVC 6 selection for HabEX. The downside (compromise) is that only 20% of the light incident on the telescope pupil gets through to the focal point instruments. This is where the unobscured largish 4m aperture of HabEX helps, to say nothing of removing superfluous causes of diffraction and additional noise in the optical path.

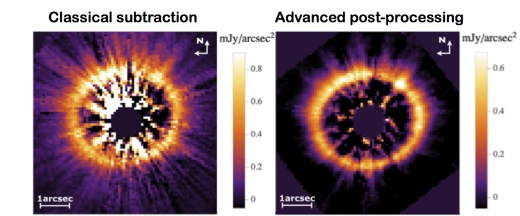

There are other VVC versions, the “charge 2” for instance (see illustration), that allows 70% throughput – but is so sensitive to noise as to be ineffectual at high contrast and low IWA. Always a trade off. That said, at the higher IWA (144mas) and lower contrast (1e8 raw) of a small imager telescope like the Small Explorer Programme concept EXCEDE, where throughput really matters, the charge 2 might work with suitable wavefront control. With a raw contrast (the contrast provided by the coronagraph alone) goal of < 1e8, "post-processing" would bring this down to the 1e9 required to meet the mission goals highlighted below. Post-processing involves increasing contrast post-imaging and includes a number of techniques with varying degrees of effectiveness that can increase contrast by up to an order of magnitude or more. For brevity I will mention only the main three here.

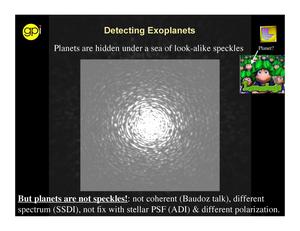

Angular differential imaging involves rotating the image (and telescope) through 360 degrees. Stray starlight, so called "speckles", are artefacts and move with the image.

A target planet does not, allowing the speckles to be removed, thus increasing the contrast. This is the second most effective type of post-processing. Speckles tend to be wavelength-specific, so looking at different wavelengths in the spectrum once again allows them to be removed with a planetary target persisting through various wavelengths. So-called spectroscopic differential imaging.
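As a rough illustration of angular differential imaging, here is a toy model of my own. It is noiseless and uses the simplest median-subtraction approach, reduced to a single ring of azimuth bins around the star; the function names and all the numbers are illustrative assumptions, not any mission’s pipeline. In the detector frame the speckles stay put while the sky, and the planet with it, rotates from frame to frame; which of the two is said to “move” just depends on the frame of reference.

```python
import random
import statistics


def simulate_ring(n_pix=36, n_frames=9, planet_bin=5, planet_flux=1.0, seed=1):
    """Build toy frames: fixed speckles plus a planet that shifts each frame."""
    rng = random.Random(seed)
    # Quasi-static speckles, 50 to 100 times brighter than the planet.
    speckles = [rng.uniform(50.0, 100.0) for _ in range(n_pix)]
    rotations = [4 * j for j in range(n_frames)]  # field rotation, in bins
    frames = []
    for rot in rotations:
        frame = list(speckles)
        frame[(planet_bin + rot) % n_pix] += planet_flux
        frames.append(frame)
    return frames, rotations


def adi_combine(frames, rotations):
    """Median-subtract the speckles, derotate each frame, then average."""
    n_pix = len(frames[0])
    # The planet visits each bin in at most one frame, so the per-bin
    # median across frames is a planet-free estimate of the speckles.
    speckle_model = [statistics.median(f[k] for f in frames) for k in range(n_pix)]
    combined = [0.0] * n_pix
    for frame, rot in zip(frames, rotations):
        for k in range(n_pix):
            residual = frame[k] - speckle_model[k]
            # Undo the field rotation so the planet lands in the same bin.
            combined[(k - rot) % n_pix] += residual / len(frames)
    return combined


if __name__ == "__main__":
    frames, rotations = simulate_ring()
    result = adi_combine(frames, rotations)
    brightest = result.index(max(result))
    print(f"brightest bin after ADI: {brightest}, flux {result[brightest]:.3f}")
```

In a raw frame the planet is lost among speckles tens of times brighter; after subtraction and derotation it is the only thing left. Real data adds photon noise and slowly evolving speckles, which is why the gain in practice is more like an order of magnitude than the perfect recovery seen here.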

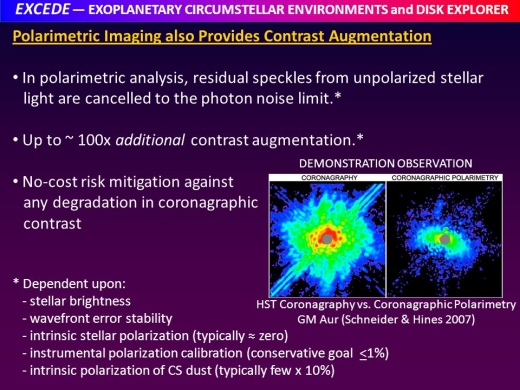

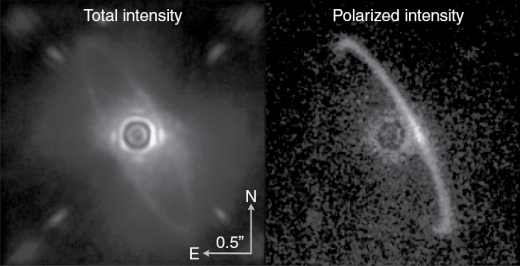

Finally, light reflected from a target tends to be polarised as opposed to starlight, and thus polarised sources can be picked out from background, unpolarised leaked starlight speckles with the use of an imaging polarimeter (see below).

Polarimetric differential imaging. Of the three, the last is generally the most potent and is specifically exploited by EXCEDE. Taken together these processes can improve contrast by at least an order of magnitude. Enter the concept conceived by the Steward Observatory at the University of Arizona. EXCEDE.

EXCEDE: The Exoplanetary Circumstellar Environment & Disk Explorer

Using a PIAA coronagraph with a best IWA of 144 mas (λ/D) and a raw contrast of 1e8, the EXCEDE (see illustration) proposal consisted of a three year mission that would involve:

1/ Exploring the amount of dust in habitable zones

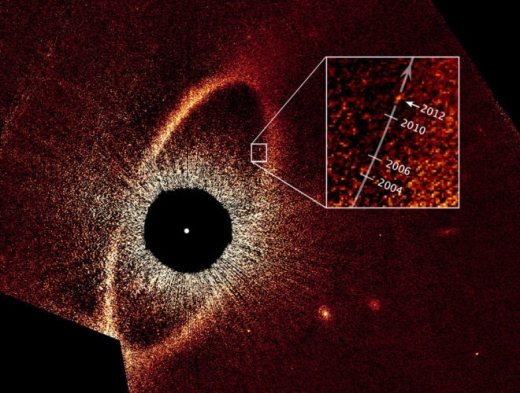

2/ Determining if said dust would interfere with future planet-finding missions – the amount of zodiacal dust in the Solar System is set at 1 “zodi”. Exozodiacal dust around other stars is expressed in multiples of this. Though a zodi of 1 appears atypically low, with most observed stellar systems having (far) higher values.

3/ Constraining the composition of material delivered to newly formed planets

4/ Investigating what fraction of stellar systems have large planets in wide orbits (Jupiter & Neptune analogues)

5/ Observing how protoplanetary disks make Solar System architectures and their relationship with protoplanets.

6/ Measuring the reflectivity of giant planets and constraining their compositions.

7/ Demonstrating advanced space coronagraphic imaging

A small and light telescope, requiring only a small and cheap launcher to get it to its efficient but economic observation point in a 2,000 km “sun synchronous” Low Earth Orbit. There the telescope would be in a near-polar orbit such that its position with respect to the Sun would remain the same at all points, allowing orientation of its solar panels and field of view to enable near continual viewing. It would view up to 350 circumstellar and protoplanetary disks and related giant planets, visualised out to a hundred parsecs in 230 star systems.
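For the curious, the tilt such an orbit needs can be estimated from the textbook first-order formula for how the Earth’s equatorial bulge (the J2 term) makes an orbital plane precess. The sketch below assumes a circular orbit and uses standard Earth constants; it is my own back-of-envelope check, not a figure from the EXCEDE proposal, and the function name is hypothetical.

```python
import math

MU_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3 / s^2
R_EARTH = 6378137.0         # Earth's equatorial radius, m
J2 = 1.08263e-3             # Earth's oblateness coefficient
YEAR_S = 365.2422 * 86400.0 # one tropical year, s


def sun_synchronous_inclination_deg(altitude_m: float) -> float:
    """Inclination at which a circular orbit's plane precesses once a year.

    First-order J2 approximation:
        dOmega/dt = -1.5 * n * J2 * (R / a)^2 * cos(i)
    set equal to +2*pi per year so the plane keeps pace with the Sun.
    """
    a = R_EARTH + altitude_m
    mean_motion = math.sqrt(MU_EARTH / a ** 3)  # rad / s
    required_rate = 2.0 * math.pi / YEAR_S      # rad / s, eastward
    cos_i = -required_rate / (1.5 * mean_motion * J2 * (R_EARTH / a) ** 2)
    if abs(cos_i) > 1.0:
        raise ValueError("no sun-synchronous orbit exists at this altitude")
    return math.degrees(math.acos(cos_i))


if __name__ == "__main__":
    for altitude_km in (800.0, 2000.0):
        inc = sun_synchronous_inclination_deg(altitude_km * 1000.0)
        print(f"{altitude_km:.0f} km: inclination = {inc:.1f} degrees")
```

The answer is always a little beyond 90 degrees, i.e. slightly retrograde, and the tilt away from truly polar grows with altitude.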

The giant planets would be “cool” Jupiters and Neptunes located within seven to ten parsecs and orbiting between 0.5-7 AU from their host stars – often in the stellar habitable zone.

No big bandwidths, the coronagraph will image at just two wavelengths, 0.4 and 0.8 microns. Short optical wavelength to maximise coronagraph IWA and utilise an economic CCD sensor. The giant planets will be imaged for the first time (with a contrast well beyond any theoretical maximum from even a high performance ELT) with additional information provided via follow up RV spectroscopy studies – or Gaia astrometry for subsequent concepts. Circumstellar disks have been imaged before by Hubble but its older coronagraphs don’t allow anything like the same detail and are orders of magnitude short of the necessary contrast and inner working angle to view into the habitable zones of stars.

High contrast imaging in visual light is thus necessary to clearly view close-in circumstellar and protoplanetary disks around young and nearby stars, looking for their reaction with protoplanets and especially for the signature of water and organic molecules.

Exozodiacal light arises from starlight reflection from the dust and asteroid/cometary rubble within a star system, material that along with the disks above plays a big role in the development of planetary systems. It also acts as an inhibitor of exoplanetary imaging by acting as a contaminating light source in the dark field created around a star by a coronagraph with the goal of isolating planet targets. This is especially true of warm dust close to a star, e.g. in its habitable zone, a specific target for EXCEDE, whose findings could supplement ground-based studies in mapping nearby systems for this.

The Spitzer and Herschel space telescopes (with ALMA on the ground) both imaged exozodiacal light/circumstellar disks, but at longer infrared wavelengths, so the material they saw was much cooler and consequently further from its parent star. More Kuiper belt than asteroid belt. Mapping the warmer, closer-in dust would make later habitable planet imaging surveys more efficient, as above a certain level of “zodis” imaging becomes more difficult (larger telescope apertures allow for more zodis), with a median value of 26 zodis for a HabEX 4m scope. It is yet another cause of background imaging noise, essentially the same phenomenon as the “zodiacal” light visible within the Solar System (see illustration).

EXCEDE payload:

- 0.7m unobscured off-axis lightweight telescope

- Fine steering mirror for precision pointing control

- Low order wavefront sensor for focus and tip/tilt control

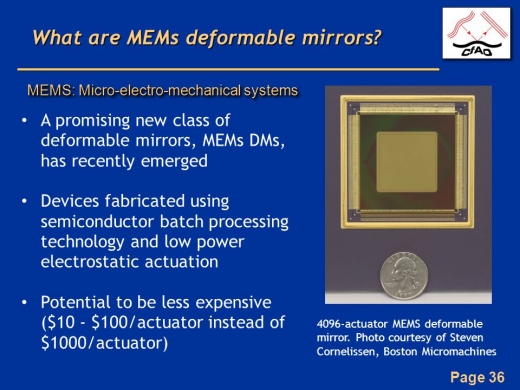

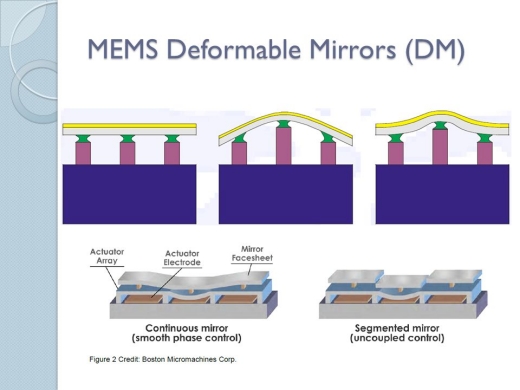

- MEMs deformable mirror for wavefront error control (see below)

- PIAA coronagraph

- Two band imaging polarimeter

EXCEDE as originally envisaged used a Phase Induced Amplitude Apodisation PIAA coronagraph (see illustration), which also has a high throughput ideal for a small 0.7m off-axis telescope.

It was proposed to have an IWA of 144 mas at 5 parsecs in order to image in or around habitable zones – though not any terrestrial planets. However, this type of coronagraph has optics that are very difficult to manufacture and technological maturity has come slowly despite its great early promise (see illustration). For the time being it has therefore been superseded by other less potent but more robust and testable coronagraphs, such as the Hybrid Lyot (see illustration for comparison) earmarked for WFIRST and, more recently, the related VVC with its greater performance and flexibility. Illustrations of these are available for those who are interested in their design and also as a comparison. Ultimately though, one way or the other, they block or “reject” the light of the central star and in doing so create a dark hole in the telescope field of view in which dim objects like exoplanets can be imaged as point sources, mapped and then analysed by spectrometry. These are exceedingly faint. The dimmest star visible to the naked eye has a magnitude of about 6 in good viewing conditions. A nearby exoplanet might have a magnitude of 25 or fainter. Bear in mind that each successive magnitude is about 2.5 times fainter than its predecessor. Dim!
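The magnitude arithmetic is worth spelling out, since the scale is logarithmic: five magnitudes correspond to exactly a factor of 100 in brightness. The short sketch below is illustrative only and the function names are my own. Note that it writes contrast as a small fraction (1e-10), whereas this article quotes the same quantity as 1e10.

```python
import math


def flux_ratio(delta_mag: float) -> float:
    """How many times fainter an object is for a given magnitude difference."""
    return 10.0 ** (0.4 * delta_mag)


def delta_mag_from_contrast(contrast: float) -> float:
    """Magnitude difference equivalent to a planet/star flux ratio."""
    return -2.5 * math.log10(contrast)


if __name__ == "__main__":
    print(f"one magnitude: factor of {flux_ratio(1.0):.3f}")
    # Naked-eye limit (magnitude 6) versus a magnitude 25 exoplanet.
    print(f"19 magnitudes: factor of {flux_ratio(25.0 - 6.0):.2e}")
    # A planet/star contrast of 1e-10 expressed in magnitudes.
    print(f"contrast 1e-10: {delta_mag_from_contrast(1e-10):.1f} magnitudes")
```

So a magnitude 25 planet is tens of millions of times fainter than the faintest naked-eye star, and a contrast of one part in ten billion puts a planet 25 magnitudes below its own host.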

Returning to the VVC, a variant of it could be easily substituted instead, without impacting excessively on what remains a robust design and practical yet relevant mission concept. Off-axis silicon carbide telescopes of the type proposed for EXCEDE are readily available. Light, strong, cheap and being unobscured, these offer the same imaging benefits as HabEX on a smaller scale. EXCEDE’s three year primary mission should locate hundreds of circumstellar/protoplanetary discs and numerous nearby cool gas giants along with multiple protoplanets – revealing their all important interaction with the disks. The goal is quite unlike ACEsat, a similar concept telescope, which I have described in detail before [see ACEsat: Alpha Centauri and Direct Imaging]. The latter prioritized finding planets around the two principal Alpha Centauri stars.

The EXCEDE scope was made to fit a NASA small Explorer programme $170 million budget, but could easily be scaled according to funding. Northrop Grumman manufactures them up to an aperture of 1.2m. The limited budget excludes the use of a full spectrograph, but instead the concept is designed to look at narrow visual spectrum bandwidths within the coronagraph’s etendue [a property of light in an optical system, which characterizes how “spread out” the light is in area and angle] that coincide with emission of elements and molecules from within the planetary or disk targets, water in particular. All this with a cost effective CCD-based sensor.

Starlight reflected from an exoplanet or circumstellar disk tends to be polarised, unlike direct starlight, and the use of a compact and cheap imaging polarimeter helps pick the targets out of the image formed at the pupil after the coronagraph has removed some but not all of the light of the central star. Some of the starlight “rejected” by the coronagraph is directed to a sensor that links to computers that calculate the various wavefront errors and other sources of noise before sending compensatory instructions to the optical pathway deformable mirrors and fast steering mirror to correct.

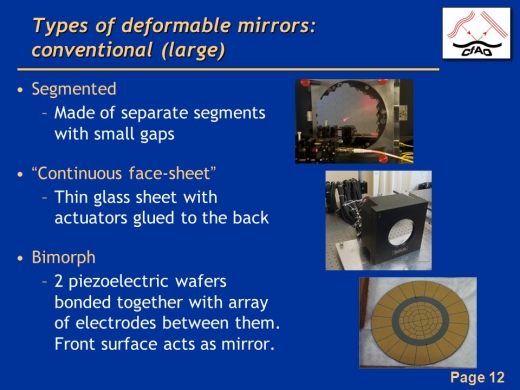

The all-important deformable mirrors are manipulated from beneath by multiple mobile actuators. Especially promising are the cheap but efficient new MEMs (micro-electro-mechanical systems) mirrors, with 2000 actuators per mirror for EXCEDE, climbing to 4096 or more for the more potent HabEX. These have yet to be used in space, however. WFIRST is committed to an older, less efficient and more expensive “piezoelectric” deformable mirror.

So this might be an ideal opportunity to show that MEMs work on a smaller, less risky scale with a big science return. MEMs may remain untested in space and especially the later more sensitive multi-actuator variety, but the more actuators, the better the wavefront control.

EXCEDE was originally conceived and unsuccessfully submitted in 2011. This was largely due to the immaturity of its coronagraph and related technology like MEMs at that time. The concept remains sound but the technology has now moved forward apace thanks to the incredible development work done by numerous US centres (NASA Ames, JPL, Princeton, Steward Mirror Lab and the Subaru telescope) on the Coronagraphic Instrument, CGI, for WFIRST. I am not aware of any current plans to resurrect the concept.

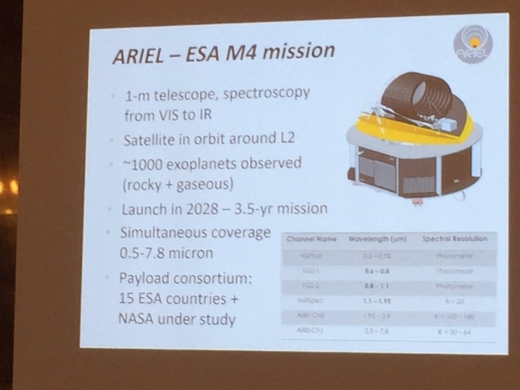

However the need remains stronger than ever and the time would seem to be more propitious. Exozodiacal light is a major impediment to exoplanet imaging so surveying systems that both WFIRST & HabEX will look at might save time and effort to say nothing of the crucial understanding of planetary formation that imaging of circumstellar disks around young stars will bring. Perhaps via a future NASA Explorer programme round or even via the European Space Agency’s recent “F class” $170 million programme call for submissions. Possibly in collaboration with NASA – whose “missions of opportunity” programme allows materiel up to a value of $55 million to supplement international partner schemes. The next F class gets a “free” ride, too, on the launcher that sends exoplanet telescopes PLATO or ARIEL to L2 in 2026 or 2028. Add in EXCEDE class direct imager and you get an L2 exoplanet observatory.

Mauna Kea in space if you will. By way of comparison, the overall light throughput of obscured WFIRST is just 2%!

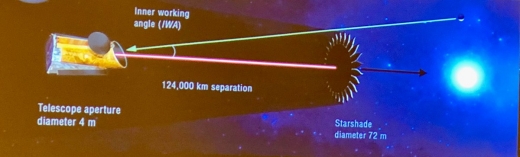

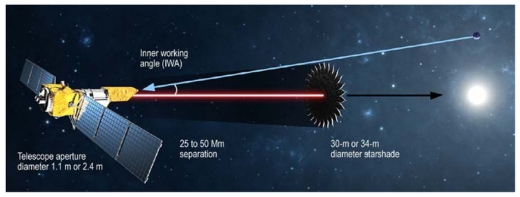

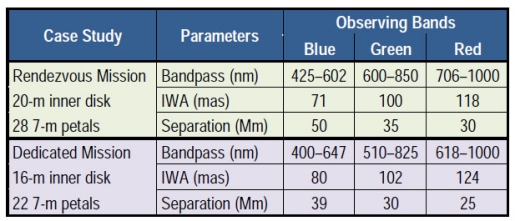

The 72m HabEX starshade has an IWA of 45 mas and a throughput of 100% (as does the smaller version proposed for WFIRST) and requires far less of the telescopic mitigation/adaptive optics needed for coronagraphs. This also makes it ideal for the prolonged observation periods required for spectroscopic analysis of prime exoplanetary targets, where every photon counts. Be it habitable zone planets with HabEX or a smaller-scale proof of concept for a starshade “rendezvous” mission with WFIRST.

By way of comparison, the proposed EXO-S Probe Class programme (circa $1 billion) included an option for a WFIRST/Starshade “rendezvous” mission, whereby a HabEX-like 30-34m self-propelled starshade joins WFIRST at the end of its five year primary mission to begin a very much deeper three year exoplanet survey. Though considerably smaller than the HabEX starshade, it offers the same benefits: high optical throughput (even more important on a non-bespoke, obscured and smaller 2.4m aperture), a small Inner Working Angle (much less than with the WFIRST coronagraph), significantly reduced star/planet contrast and, most important of all as we have already seen above, vastly reduced constraints on telescope stability and related wavefront control.

Bear in mind that WFIRST will still be using vibration-inducing reaction wheels for fine pointing. Operating at closer distances to the telescope than HabEX, the “slew” times between imaging would be significantly reduced too. This addition would increase the exoplanet return (both number and characterisation) many fold, even to the point of a small chance of imaging potentially habitable exoplanets. The more so if there have been the expected advances in performance of the software algorithms required to increase contrast post-processing (see above) and also to allow multi-star wavefront control that permits imaging of promising nearby binary systems (see below). Just a few tens of millions of dollars are required to make WFIRST “starshade” ready prior to launch and would keep this option open for the duration.

The obvious drawback with this approach is the long time required to manoeuvre into position from one target to the next, along with the precision “formation flying” required between telescope and starshade (stationed tens of thousands of kms apart according to observed wavelength). For HabEX, this has a 250 km error margin along the line of sight, but just 1m laterally and just one degree of starshade tilt.

So the observation strategy is done in stages. First the coronagraph searches for planets in each target star system over multiple visits, or “epochs”, spread over the orbital period of the putative exoplanet. This helps map out the orbit and increases the chances of discovery. The inclination of any exoplanetary system in relation to the solar system is unknown – unless it closely approaches 90 degrees (edge on) and displays exoplanetary transits. So unless the inclination is zero degrees (the system sits face on to the solar system and lies in the plane of the sky like a saucer seen face on), the apparent angular separation between an exoplanet and its parent star will also vary across the orbital period. This might include a period during which it lies interior to the IWA of the coronagraph – potentially giving rise to false negative results. Multiple observation visits help compensate for this.
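The effect of inclination on detectability can be sketched with a little geometry. The example below assumes a circular orbit, for which equal steps in orbital phase are equal steps in time, and asks what fraction of the orbit a planet spends hidden inside the inner working angle. The function names and the sample numbers are illustrative assumptions of mine.

```python
import math


def projected_separation_au(a_au: float, inclination_deg: float, phase_deg: float) -> float:
    """Sky-projected star-planet distance for a circular orbit.

    Phase is measured from the line of nodes; inclination 0 is face on
    and 90 is edge on.
    """
    i = math.radians(inclination_deg)
    theta = math.radians(phase_deg)
    return a_au * math.sqrt(math.cos(theta) ** 2 + (math.sin(theta) * math.cos(i)) ** 2)


def hidden_fraction(a_au: float, distance_pc: float, inclination_deg: float,
                    iwa_mas: float, samples: int = 3600) -> float:
    """Fraction of the orbital period spent inside the inner working angle."""
    hidden = 0
    for k in range(samples):
        phase = 360.0 * k / samples
        sep_au = projected_separation_au(a_au, inclination_deg, phase)
        sep_mas = sep_au / distance_pc * 1000.0  # AU / pc = arcsec
        if sep_mas < iwa_mas:
            hidden += 1
    return hidden / samples


if __name__ == "__main__":
    # A 1 AU orbit at 10 parsecs, seen through a 65 mas inner working angle.
    for inclination in (0.0, 30.0, 60.0, 90.0):
        frac = hidden_fraction(1.0, 10.0, inclination, 65.0)
        print(f"i = {inclination:4.0f} deg: hidden for {frac:.0%} of the orbit")
```

A face-on system never hides its planet, while in this example an edge-on one conceals it for not far short of half the orbit. A single visit could therefore easily miss a planet that is really there.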

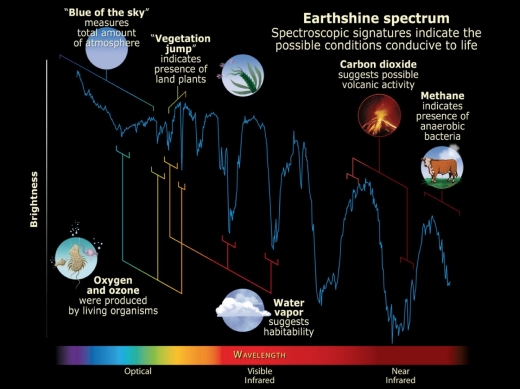

Once the exoplanet discovery and orbital characteristics are constrained, starshade-based observations follow up. With its far larger light throughput (near 100%) the extra light available allows detailed spectroscopy across a wide bandwidth and detailed characterisation of high priority targets. For HabEX, this will include up to 100 of the most promising habitability prospects and some representative other targets. Increasing or reducing the distance between the telescope and the starshade allows analysis across different wavelengths.

In essence “tuning in” the receiver, with smaller telescope/starshade separations for longer wavelengths. For HabEX, this extends from UV through to 1.8 microns in the NIR. The coronagraph can characterise too if required but is limited to multiple overlapping 20% bandwidths with much less resolution due to its heavily reduced light throughput.

Of note, presumed high priority targets like the Alpha Centauri, Eta Cassiopeiae and both 70 and 36 Ophiuchi systems are excluded. They are all relatively close binaries, and as both the coronagraph and especially the starshade have largish fields of view, the light from binary companions would contaminate the “dark hole” around the imaged star and mask any planet signal. (This is also an issue for background stars and galaxies, though these are much fainter and easier to counteract.) It is an unavoidable hazard of the “fast” F2 telescope employed, F number being the ratio of focal length to aperture. A “slower”, higher F number scope would have a much smaller field of view, but would need to be longer and consequently even more bulky and expensive. F2 is another compromise, in this case driven largely by fairing size.
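The link between F number, telescope length and field of view can be shown with a little arithmetic. This is a rough sketch for an idealised single-mirror telescope; the 100 mm detector width is a hypothetical value chosen purely for illustration, and real designs with secondary optics behave differently.

```python
import math

def focal_length_m(f_number, aperture_m):
    """Focal length from the definition F = focal length / aperture."""
    return f_number * aperture_m

def field_of_view_arcmin(f_number, aperture_m, detector_width_mm):
    """Full field of view across a detector of the given width."""
    focal_mm = focal_length_m(f_number, aperture_m) * 1000.0
    angle_rad = 2.0 * math.atan(detector_width_mm / (2.0 * focal_mm))
    return math.degrees(angle_rad) * 60.0

if __name__ == "__main__":
    APERTURE_M = 4.0     # a 4 m primary, as for HabEX
    DETECTOR_MM = 100.0  # hypothetical detector width
    for f_number in (2, 10):
        length = focal_length_m(f_number, APERTURE_M)
        fov = field_of_view_arcmin(f_number, APERTURE_M, DETECTOR_MM)
        print(f"F{f_number}: focal length {length:.0f} m, field of view {fov:.1f} arcmin")
```

With the same mirror and detector, going from F2 to F10 cuts the field of view by about a factor of five but stretches the focal length from 8 m to 40 m, which is the bulk and fairing problem described above.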

Are you beginning to see the logic behind JWST a bit better now? As we saw with ACEsat, NASA Ames are looking to perfect suitable software algorithms to work in conjunction with the telescope adaptive optics hardware (deformable mirrors and coronagraph) to compensate for this (contaminating starlight from the off-axis binary constituent).

This is only at an early stage of development in terms of contrast reduction, as can be seen in the diagram above, but it is proceeding fast, and as software it can be uploaded to any telescope mission at any time up to and beyond launch.

Watch that space.

So exoplanetary science finds itself at a crossroads. Its technology is now advancing rapidly but at a bad time for big space telescopes with the JWST languishing. I’m sure JWST will ultimately be a qualified success and its transit spectroscopy characterisation of planets like those around TRAPPIST-1 will open the way to habitable zone terrestrial planets and drive forward telescope concepts like HabEX. As will EXCEDE or something like it around the same time.

The JWST delay holds up its successors in both time and funding. But lessons have been learned, and are likely to be put to good use. This comes just at the time that exoplanet science is exploding thanks to Kepler, with TESS only just started, PLATO to come and then the bespoke ARIEL transit spectroscopy telescope to follow on. There are no huge leaps so much as incremental but accumulating gains, with ARIEL moving on from just counting exoplanets to provisional characterisation.

Then it is on to imaging via WFIRST before finally HabEX and characterisation proper. But that will be a decade or more away, and in the meantime expect to see smaller exploratory imaging concepts capitalising on falling technology and launch costs to help mature and refine the techniques required for HabEX. To say nothing of whetting the appetite and keeping exoplanets firmly where they belong.

But to finish on a word of perspective. Just twenty-five years or so ago, the first true exoplanet was discovered. Now not only do we have thousands, with ten times that to come, but the technology is coming to actually see and characterise them. Make no mistake, that is an incredible scientific achievement, as indeed are all the things described here. The amount of light available for all exoplanet research is utterly minuscule and the pace of progress to stretch its use so far is incredible. All so quick too. Not to resolve them, for sure (that would take hundreds of scopes operating in tandem over hundreds of km) but to see them and scrutinise their telltale light. Down to Earth-mass and below and, most crucially, in stellar habitable zones. Precision par excellence. Maybe even to find signs of life. Something philosophers have deliberated over for centuries and “imagined” at length can now be “imaged” at length.

At the forefront of astronomy, the public consciousness and in the eye of the beholder.

References

“A white paper in support of exoplanet science strategy”, Crill et al: JPL, March 2018

“Technology update”, Exoplanet Exploration Program, Exopag 18, Siegler & Crill, JPL/Caltech, July 2018

HabEX Interim report, Gaudi et al, August 2018

EXCEDE: Science, mission technology development overview, Schneider et al 2011

EXCEDE technology development I, Belikov et al, Proceedings of SPIE, 2012

EXCEDE technology development II, Belikov et al, Proceedings of SPIE, 2013

EXCEDE technology development III, Belikov et al, Proceedings of SPIE, 2014

“The exozodiacal dust problem for direct imaging of ExoEarths”, Roberge et al, Publications of the Astronomical Society of the Pacific, March 2012

“Numerical modelling of proposed WFIRST-AFTA coronagraphs and their predicted performances”, Krist et al, Journal of Astronomical Telescopes, Instruments & Systems, 2015

“Combining high-dispersion spectroscopy with high contrast imaging. Probing rocky planets around our nearest stellar neighbours”, Snellen et al, Astronomy & Astrophysics, 2015

EXO-S study, Final report, Seager et al, June 2015

ACESat: Alpha Centauri and direct imaging, Baldwin, Centauri Dreams, Dec 2015

Atmospheric evolution on inhabited and lifeless worlds, Catling and Kasting, Cambridge University Press, 2017

WFIRST CGI update, NASA ExoPag July 2018

“Two decades of exoplanetary science with adaptive optics”, Chauvin: Proceedings of SPIE, Aug 2018

“Low order wavefront sensing and control for WFIRST coronagraph”, Shi et al Proceedings of SPIE 2016

“Low order wavefront sensing and control..for direct imaging of exoplanets”, Guyon, 2014

Optic aberration: Wikipedia, 2018

Tilt (optics): Wikipedia, 2018

“The Vector Vortex Coronagraph”, Mawet et al, Proceedings of SPIE, 2010

“Phase-induced amplitude apodisation complex mask coronagraph tolerancing and analysis”, Knight et al, Conference paper: Advances in Optical and Mechanical Technologies for Telescopes and Instrumentation III, July 2018

“Review of high contrast imaging systems for current and future ground and space-based telescopes”, Ruane et al, Proceedings of SPIE 2018

“HabEX telescope WFE stability specification derived from starlight leakage”, Nemati, H Philip Stahl, Mark T Stahl et al, Proceedings of SPIE, 2018

Fast steering mirror: Wikipedia, 2018

Thank you, Ashley Baldwin, for such an in-depth report on such a complicated subject. I’m hoping that with the ongoing research in optics and quantum physics we will be seeing more breakthroughs that increase resolution, like the proposed combination of interferometry with quantum teleportation.

How to build a teleportation-assisted telescope.

https://www.technologyreview.com/s/612177/how-to-build-a-teleportation-assisted-telescope/

Quantum-Assisted Telescope Arrays.

Emil T. Khabiboulline, Johannes Borregaard, Kristiaan De Greve, Mikhail D. Lukin

(Submitted on 10 Sep 2018)

Quantum networks provide a platform for astronomical interferometers capable of imaging faint stellar objects. In a recent work [arXiv:1809.01659], we presented a protocol that circumvents transmission losses with efficient use of quantum resources and modest quantum memories. Here, we analyze a number of extensions to that scheme. We show that it can be operated as a truly broadband interferometer and generalized to multiple sites in the array. We also analyze how imaging based on the quantum Fourier transform provides improved signal-to-noise ratio compared to classical processing. Finally, we discuss physical realizations including photon detection-based quantum state transfer.

https://arxiv.org/pdf/1809.03396

I see ole burde already caught this one, but here are some links:

Many Tiny Satellites Can Create Image of a Giant Space Telescope.

https://www.nextbigfuture.com/2019/01/many-tiny-satellites-can-create-image-of-a-giant-space-telescope.html

The paper:

Superresolution far-field imaging by coded phase reflectors distributed only along the boundary of synthetic apertures.

https://www.osapublishing.org/optica/fulltext.cfm?uri=optica-5-12-1607

I can see how this is an improvement, but as with all sum aperture arrays, the loss of light and contrast for exoplanet studies would be a limiting factor. This may work well with the Quantum-Assisted Telescope Arrays, since they are based on similar concepts, but what would be ideal is some way to clone quantum information in photons over large timescales to build a truly quantum telescope.

Wow, what an awesome article Dr. Baldwin. Thanks sincerely for sharing your insights.

This is a treasure-trove of authoritative information on a subject I’m sure many, like me, will find hugely fascinating, and I’m sure I will come back to the article often for reference. My mouth is watering for the discoveries to come. But–and I apologise for the criticism–boy, could it ever have done with some editing to make it readable. Every few sentences I was drawn up short thinking I must have missed some words or something–it was that elliptical and hard to parse, at my normal reading speed. There were many sentence fragments that I just couldn’t make work grammatically; it’s not a question of formality/informality, which I couldn’t care less about, but a question of readability. I hope you might take this criticism constructively. Thanks again for sharing your knowledge here.

Does the starshade have to have the petaled edge as you have drawn or is that just a manifestation of the packaging for launch? I ask because the various large passive comm sats from decades ago (Echo, Pageos, PasComSat https://en.wikipedia.org/wiki/List_of_passive_satellites and https://commons.wikimedia.org/wiki/File:Echo_-_A_Passive_Communications_Satellite_-_GPN-2002-000122.jpg and https://www.youtube.com/watch?v=qeN0_7ZXUrY -at end) were fairly simple and (generally) successfully deployed, and it seems that technology could perform the starshade function when maneuvered into the correct location. These were all in the range of 30-40m dia. That knocks down one challenge fairly “easily” to allow focusing ($ and design/fab effort) on the telescope itself.

Next I wonder if that same inflatable technology could be used to form a reflector or lens by a combination of aluminized and transparent mylar. Later passive com sats were self rigidized by exposure to UV but achieving the purely smooth surface wouldn’t be reliably possible so you’d surely need error corrections as you describe.

The petaled shape is indeed necessary for optimal functioning: it creates a softer edge around the starshade so light is not diffracted around a “hard” edge.

Thanks for this very comprehensive article!

A piece of advice: if you write captions for your illustrations, readers will know what they are about, and when you say “see illustration” you could reference them by number. Ditto for “see above”, which would benefit from section titles and numbers.

Thanks.

This subject is technical and it’s been distilled down as much as possible. It has already undergone extensive editing. Basing it around specific missions was done to try to illustrate the theory in practice and to break up the detail into manageable chunks. Some of the technological illustrations demonstrate the sheer complexity that has finally been overcome to turn hope into fact. Nothing short of momentous. The devil is in the detail, but it is not necessary for us all to understand it above the basics.

EXCEDE is the most basic mission concept and its technical requirements the least stringent. Indeed, as you can see from the illustrations, Hubble, ALMA and other ground-based scopes have already imaged circumstellar disks. These are easier to resolve than even giant planets, being so much larger. They are vital, however, in determining how and when planets form and how they interact with the disk, which is pivotal in understanding the planetary architectures of stellar systems and where to look for planets. Or even whether there is too much dust, “exozodiacal” light, to see them directly. I was just reading an article published on “Centauri Dreams” in 2007 about the now cancelled coronagraph-based “Terrestrial Planet Finder”, TPF-C. The technology for that was essentially the same as described for WFIRST and HabEX, over a decade later. The big difference is that the technology has gone from theoretical/aspirational to reality in the intervening period, thanks to dedicated experts based at big ground-based scopes and their related mirror laboratories. But most especially thanks to the dedicated work arising from the coronagraphic imager being added to WFIRST on the back of the 2.4m NRO mirror donation. That was the deal clincher for me. Its original goal was to image planets down to a contrast reduction of 1 billion, “1e9”, in visible light. Enough to see gas giants at Jupiter-like distances. We are likely still a decade away from WFIRST launch and already that goal has been improved upon (as illustrated in the requisite graph) by a factor of five, which should already be enough to image nearby Super Earths (if they exist, as seems likely) on the edge of nearby stars’ habitable zones (Tau Ceti foremost). Add a Starshade and WFIRST’s potency increases many fold, making it a true exoplanetary Observatory. That’s possible now, today, unlike 2007 and TPF; it is not some stretch goal for five years’ time.
The technology for the enormously more capable HabEX is practically there too. It’s now just a case of will. And money. The new technology for HabEX (and WFIRST) has, unlike that of JWST, been matured PRIOR to development. Gone are the days of unexpected obstacles or hidden extras spiralling out of control. Whether WFIRST and HabEX happen is now just a question of the financial and political fallout the JWST overrun has on NASA’s space telescope programme longer term. HabEX, although badged as a planet hunter, has a versatile technological spec that effectively makes it a bigged-up Hubble 2. Like Hubble it is robust and fully serviceable.

There is currently a lot of noise about the return of manned space flight. Noise but no substance. Any goal of Mars or even a return to the Moon (why?) would require NASA’s budget to be increased well above its current lowly level of roughly 0.5% of the federal budget, which appears unlikely. The private sector are innovative but still rely on NASA’s funding so can’t go it alone (whatever Musk says). But manned spaceflight might yet prove a boon for the astrophysics community. The current technology is still orientated around low Earth orbit, whatever we hear about Mars or the Moon. If there is to be a stepping stone to Mars, a more practical solution might be servicing missions to the Sun/Earth Lagrange point so beloved of space observatories and just four times further into deep space than the Moon. There is no big gravity well to overcome, so it is perhaps a legitimate alternative to the ARM asteroid capture to test out SLS, Orion, BFR, “Starship” et al.

I wouldn’t call the development of SpaceX’s big rockets noise. Further, I suspect the best place to build exoplanet observatories might be the surface of the Moon, because of its stability, the resources to build large structures and to base human scientists and technicians there to maintain them, as well as the obvious clear sky.

Yes there is plenty of reason for cautious optimism.

In terms of radio telescopes a Moon base makes sense, especially on the far side, where Earth and its ambient radio emissions are eclipsed. But despite the best efforts of Musk, Bezos et al, manned space flight is still expensive and not without significant risk. Especially if you’re trying to build a base from scratch on the radiation-bathed, atmosphere-less (or practically atmosphere-less) surfaces of the Moon or Mars. Mass is a big issue and so is its transfer there. Especially for start-up. Lots of launches (even with BFR). Yes, there are in situ resources (water ice, CO2 etc) to tap into, but these would need to be mined first (in low g and no atmosphere, requiring a bulky pressure suit: that’s one scary building site). Where would the energy come from to do this too? A lot of energy. There are no small and transferable nuclear reactors remotely close to being usable (even if their use in space wasn’t banned by international treaty), so that leaves solar power. I’ve seen calculations that even a rudimentary base on Mars would require the equivalent of ten football pitches’ worth of high performance solar cells to meet its minimum survival needs, including in situ resource processing (to produce water and O2 from ice, and methane fuel from CO2 and ice). This is likely a conservative estimate. It also doesn’t allow for the sort of planet-blanketing dust storm that appears to have put paid to poor old Opportunity.

That said, the advent of economical (and large) space launchers should allow for the construction of giant modular space telescopes in the easily reachable and relatively safe environs of low Earth orbit (the precedent for this being the International Space Station) before then launching them to the Sun/Earth Lagrange 2 point. In situ servicing, lost since the Shuttle’s retirement, is likely to become an option again.

I’m not clear why the Moon’s mass has any advantage. For humans, the low g might not be that useful. A space station with a centrifuge to offer up to 1 g might be preferable. Zero g is probably much better for building large structures as the mass required is just needed to maintain shape, not to fight gravity. Finally, the Moon’s surface is very dusty, and electrostatically levitated dust could well contaminate instruments and lenses. This might prove a greater problem than the meteoric particles.

My preference would be for semi-autonomous and telechiric robotic moon “miners” and fabs to extract usable materials, perhaps fabricating feedstock for 3-D printing that can be launched to the desired orbit and converted into the components by more robots. This reduces costs and hazards. If crews need to be present nearby, then hab facilities can be constructed to accommodate them.

Dear Ashley, about the editing, which I requested in another comment: I didn’t mean anything should be cut, or that you had made anything needlessly overcomplicated. The article has all the information one would hope for, and I understand it requires a certain amount of compression. I think my comment wasn’t clear so I’ll just provide one example from the article, with a tedious amount of detail about how I read it, in the hopes of some constructiveness (then I’ll shut up). Consider this sentence:

“If NASA shakes off its reticence about using silicon carbide in space telescope construction – something that may yet be driven – like JWST before it – by launcher availability.”

When I read a sentence like that, I am drawn up short by the end of it, because it started with an if-clause, “If NASA shakes off its reticence…”, and so I expect a consequence of that conditional in the latter part of the sentence–for example something like “If NASA shakes off its reticence […] then we can expect [whatever] results.” But there is no “then” clause, and it’s not just a matter of a missing “then”, which is quite acceptable (e.g. “If you pay me I’ll work” instead of “If you pay me then I’ll work”), but rather an entire missing clause. And so I have to stop reading and go back and see whether I missed something in the sentence, which is a little complicated because of the proliferation of em-dashes. In this case, I reread the sentence twice and still can’t make it make sense, which entails my having to stop longer to figure out what you meant, and ultimately requires me to go back one previous sentence to figure out that this sentence is a sort of condition on the previous sentence. Because this is not the usual practice of dividing one’s writing into sentences each of which is logically complete, it cost me several mental seconds to go back and figure out while reading, and this draws me out of the article. Multiply this by as many sentences as exhibit this tendency to incompleteness in your article (which is a lot! I’d estimate between a quarter and a half of the sentences; the last full paragraph contains more fragments than it does actual sentences), and the net effect is that it was much more difficult to read than it needed to be. And for the level of expert knowledge exhibited, I found this difficulty in parsing to be an unusual reading experience–hence it felt like a comment was not unjustified.

For one other example: the very last sentence (fragment) is an instance where even if I go back slowly and reread, I can’t figure out your exact meaning.

I have been reading a bit lately about telescopes with holographic primary objectives and offer this as a possibly interesting future development. I’m not sure it’s entirely relevant to this particular discussion but it is exciting, to this layman anyway, and hopefully of interest to others. A pdf download on the subject is available at this link.

https://www.researchgate.net/publication/258548786_Astronomical_telescope_with_holographic_primary_objective

A tremendously informative article Dr. Baldwin. Thank you so much.

There is a lot to take in on this article. Thanks so much for providing this. It will take time, but I look forward to this as some of the holiday reading.

Thanks Gary.

The take-away message is that the technological principles to deliver a HabEX-style mission have been around for well over a decade. The technological readiness is only just now catching up.

That’s something I find a lot with articles published in this area by the astrophysics/aeronautics community. Aspirational is fine as far as it goes and wins the vital research grants necessary to progress, but it’s ultimately a good dose of the perspirational that delivers. It’s something for us lay people to be aware of when reading about scientific prospects in this area. That said, HabEX and WFIRST-S will represent truly awesome engineering achievements. Only twenty years ago there were practically no known exoplanets. Twenty years from now there will be tens of thousands, with thousands imaged/characterised in detail.

The dimmest star visible to the human eye under ideal dark sky conditions has a magnitude of about 6. By way of comparison, an Earth-sized planet in its stellar habitable zone has a magnitude of about 22!
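To put those two numbers in perspective, the magnitude scale is logarithmic: a difference of 5 magnitudes corresponds to a factor of exactly 100 in brightness (Pogson's relation). The short sketch below simply applies that relation to the figures quoted above; the function name is my own.

```python
def flux_ratio(bright_mag, faint_mag):
    """How many times brighter the first object is than the second.

    Uses Pogson's relation: 5 magnitudes correspond to a factor of 100.
    """
    return 10.0 ** (0.4 * (faint_mag - bright_mag))

if __name__ == "__main__":
    ratio = flux_ratio(6.0, 22.0)
    print(f"A magnitude 6 star is about {ratio:,.0f} times brighter than a magnitude 22 planet")
```

Taking the figures as given, the 16 magnitude gap works out to a factor of roughly 2.5 million between the faintest naked-eye star and the planet, before the glare of the planet's own star is even considered.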

That sounds amazing Ashley. It makes me much more optimistic. I look forward to the days of huge new databases of information about nearby exoplanets. I agree with you that many downplay the idea of finding bacteria-like life forms or even what we refer to as lower eukaryotes. These organisms will alter their environments and should be the first kinds of life detected by us. I don’t think we’re that far away from the first such discoveries!

As for manned exploration, I worry that with such a counter-productive political climate around the world right now that more years will slip by with few advances in low cost earth to orbit and beyond capability. This surely is the most crucial advance we must make to become a truly spacefaring species.

While jonW’s criticisms are valid if this was meant to be a term paper or such I think we should cut the guy some slack here. Most readers of this blog should be quite able to fill in the blanks enough to make sense of this quite well. I liked how Ashley explained technical terms and the math he included, which is something many writers omit.

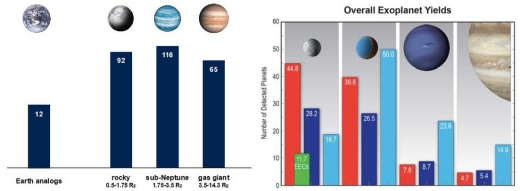

And hey, if there’s something we don’t get all we have to do is ask. For example, Dr. Baldwin, in the bargraph titled “Overall Exoplanet Yeilds” what do the different colors represent?

Learning about the issue caused by exozodiacal light was interesting. Is this going to be much of a problem with imaging old mature systems or just younger planets?

On the left are the figures as calculated by the HabEX team. Available as referenced in the HabEX interim report. A recommended read.

The figures on the right are guesstimates from other data. Dark blue is for “cold” planets (the least accurate and thus excluded on the left), red for “hot” and pale blue for “warm”. The magic number, for both graphs, is 12. That is for Hab zone “eta Earths”, shown in green on the right.

We hope.

Exozodiacal light is going to be a big issue when imaging all planets, and especially older and smaller ones. This is one of the major drivers for EXCEDE: discovering the exozodiacal light levels in nearby star systems ahead of missions like WFIRST and HabEX. Clumps of such dust are a major confounder for exoplanetary imaging as they can very easily mimic a planet in the field of view. The solar system seems to have a significantly below average amount of this dust (though not uniquely so) and its level is used to define relative units of measurement, where one “Zodi” represents a level of exozodiacal light equal to that of the solar system. Get up to ten Zodis and imaging becomes very difficult. Some younger systems have levels extending to hundreds of Zodis, though a recent extended survey by the Large Binocular Telescope suggests that the majority of systems have “manageable” levels of exozodiacal light.

I guess we won’t know for sure how problematic it is till WFIRST is operational. I have to say that the performance projections for WFIRST are very impressive and have already exceeded design goals. Unlike HabEX it is definitely going to happen. Getting a Starshade up to operate in tandem with it increases its potential enormously, offering a chance to explore for terrestrial planets out to about 25 light years. There are a lot of very promising star systems within that radius, especially if multi-star wavefront control software matures. This is essential for binary systems like Alpha Centauri, where light from the non-targeted star can contaminate the imaged star or produce field of view “speckles” that can mimic planets. This is more the case for starshades, with their much larger field of view, than for coronagraphs. Many promising systems are binaries: in addition to Alpha Centauri, systems such as 61 Cygni, 70 Ophiuchi and Eta Cassiopeiae. 36 Ophiuchi and 40 Eridani are trinaries.

What an interesting concept EXCEDE turns out to be! Thank you, Ashley, for this contribution to the community. A huge amount of work obviously went into it, and it cleared up a number of misconceptions for this reader.

I wouldn’t be at all surprised to see an EXCEDE-like mission happen. Especially given the huge steps forward in coronagraph maturity described here. It could suit a small to medium Explorer budget ($170-240 million) and offers a large science return (which carries 40% of the overall points in the scoring system such proposals have to undergo to be selected). The big obstacle is that to succeed with a small telescope the coronagraph would have to have a high throughput to compensate for the small mirror. Various designs (like EXCEDE’s PIAA and HabEX’s vector vortex) now have this ability, but at the cost of vulnerability to telescope “jitter”, especially at higher contrast reductions. But the contrast requirements are much less demanding for a circumstellar disk and young gas giant planet imager (1e6 versus 4e11 for a HabEX). The other issue is NASA’s strange suspicion of silicon carbide, the low mass and cheap telescope and mirror construction material that reduces costs significantly. This is flight proven, already enjoying great success in major missions like Herschel and Gaia. With Euclid to come.

There is a tendency to focus on Earth-sized planets in the habitable zone to determine the likelihood of finding an Earth analog, but I cannot stress enough how important a census of Neptunian and larger planets in the outer stellar system is to determining how frequent Earth analogs are.

We are formulating theories explaining the different types of stellar planetary systems, but these are working only with information from the inner systems and assume that the outer parts will be similar to our solar system. And we all know how well our assumption that the inner part of stellar systems would look like our own solar system went.

To develop robust theories of stellar system formation, we’ll need to have a statistical distribution of outer planets to correlate with our knowledge of inner planets. Only once we get the types and frequency of stellar systems will we be able to use it to predict the frequency of Earth analogs and see just how rare Earth is.

I think you are right.

The focus on habitable zone “eta Earths” is partially driven by it being a high NASA priority both in the last Decadal survey and likely in 2020 too. That’s when HabEX hopefully gets priority over the various other flagship space telescope concepts. To be fair, all of them have a life finder component attached, bar the X-ray cosmology telescope. Even WFIRST originally started off as a dark energy mission before evolving. But HabEX and WFIRST, especially with a Starshade, have a wide field of view covering most of the stellar systems under examination. The Starshade is likely to be limited by fuel to about 100 prime systems, but both telescopes will have additional coverage from their coronagraphs too. The all-round exoplanetary haul will be very representative.

WFIRST will also be conducting a microlensing survey that will identify up to three thousand additional exoplanets across the full range of orbital distances. With its Starshade it is one potent exoplanet Observatory in its own right. Gaia, should it run for near ten years, will identify and constrain a few thousand gas and ice giants as far out as 5 AU too, within 70 light years or so. That’s a big, diverse and hopefully representative sample.

Add a budget EXCEDE-like mission that maps out the disk architecture of young star systems and early planetary interactions with those disks, and we should have an infinitely better understanding of exoplanetary science proper. HabEX can then be a highly focused icing on the cake.

Here’s hoping, with all the budgetary issues falling out of JWST, that WFIRST can hang on to its exoplanet science hardware.

My model for indigenous ET life requires a solar system exactly like ours, with four outer gas giant planets, the largest of which must be the closest in, as Jupiter is to us. This model assumes that Jupiter is more of an asteroid deflector and protector of Earth than one that sends them towards us, so the largest planet has to be closest to Earth. The ET exoplanet also must have a Moon, to have a magnetic field and a fast rotation. It also has to be around a G class star for the right gravity and size of accretion disk to make the outer gas giants in roughly the same location as in our solar system, so I agree with the gas giant consensus for the outer planets. Even with these limitations, there still could be one thousand to ten thousand ET exoplanet systems in the galaxy. Assuming this is correct, we might find an ET inhabited planet faster if we look at only G class stars.

I predict we will only find spectroscopic bio-signature gases around an Earth twin with this model which is very similar to our solar system.

Douglas Adams:

Good quote, Alex. Where is it from?

Certainly most human tribes (and by tribes I do not just mean the kind most Westerners envision when they see this word, for they are tribes too, only bigger and with a few more shiny toys) think they are the “chosen” ones, that everything was made just for them. Everyone and everything else is meant to either serve them in one way or another, or to get out of the way (or else).

I wonder if this attitude is literally universal? Is it a survival tactic that becomes problematic once the tribe gets way bigger than its origins?

Some evidence to this effect:

https://en.wikipedia.org/wiki/The_Privileged_Planet

Wow, that’s a very specific list of habitability requirements Geoffrey, much narrower than even this rare earth proponent believes is necessary. F stars might make fine energy sources for life-bearing planets too, and with longer useful MS lifetimes to boot.

But if you’re correct and there were, say 4,000 near copies of our solar system in this galaxy of around, say 400 billion stars then we’d need to search 100 million systems per each true Earth analog find. But if life is spread by intentional seeding, or if the conditions needed for life aren’t nearly as narrow as you think, then life might be much more abundant than you suggest.

Oops, meant to write K instead of F in the above comment. (Many more Ks than G’s).

F6-K3 is potentially sun-like enough for me. Well over 3 billion years on the main sequence (a minimum agreed as necessary by both Stephen Dole & Maggie Turnbull) yet still luminous enough to have some or most of the “habitable zone” permanently outside of stellar “tidal locking” reach.

One of the things on which I most disagree with the “Rare Earth” fraternity is NOT their parochial view of intelligent life being truly uncommon (what they really want to say is “unique”) BUT the dismissive way in which they treat microbial or single-cell life. Simple prokaryotic life or whatever. The irony is that the “Rare Earthers” almost begrudgingly acknowledge that missions like HabEX, or even WFIRST-S, can and WILL discover evidence of such life at some point soon. Implying it is common, nay ubiquitous. In a huge universe, what would be the random chance of discovering some other independent source of life within a few tens of light years of Earth? Unless life was very common. Still only a sample size of two, but boy does that n=2 change the entire paradigm. There may be “bottlenecks” in the evolution of life, but by discovering ANY other measurable life source one of those must be instantly removed. Perhaps the most important of all. That then leaves evolution to multicellular life, evolution of intelligence, then evolution of civilisation and its endurance. But I suspect there is a domino effect that increases as each bottleneck is met. And overcome. The sand runs through the egg timer but erodes the neck wider as it does so.

So for me life is THE big issue. Discover that and the dominoes will fall.

Yes, proof of present (or past) existence of alien life of any degree of complexity is sufficient to qualify as the greatest scientific discovery, in my opinion. Although a fan of space exploration via crewed and robotic explorers, I would prefer to see a much greater fraction of the available resources devoted to big and bigger telescopes. The information to prove the existence of alien life and even alien civilizations flows by Earth every second of every day. We just need to figure out how to capture and interpret those photons.

Thank you for such a detailed and well-illustrated article bringing an exceedingly complex topic to within the grasp of technically literate but otherwise non-expert folks.

As Ashley Baldwin has pointed out in his introduction, we live in a time of confirmation that there are plenty of planets out there – somewhere. Kepler statistics seem to indicate as many planets as suns. Now that we know that, which of the nearby ones have merit?

With regard to efforts like Star Shot or exotic propulsion, I do have some sympathy, especially when it comes to demonstrating a fundamental new concept which could extend our range. But in terms of trades between sending a microchip to the Centauri System and getting a better exoplanet survey for “habitables” for the giga dollar or euro, I would prefer the scope. It would be a heck of a bust to send something to a star system where there actually was nothing there, to the neglect of another locale where, in the midst of the flight, we discovered that there was. And it looks like the space-based scopes are proving their worth.

With the trends in data so far, whatever the difficulties in interstellar propulsion concepts are now, I would think that the increasing discoveries would bring more pressure to bear to solve the transport problem. I just can’t imagine people a generation or two from now just accepting “positives” from all over this galactic sector and leaving it to footnotes in textbooks. Something will be done.

Judging again from trends, we can probably expect the unexpected. If planets are coming in greater variety than expected, we might expect evidence for life to be more exotic as well.

Though Ward and Brownlee with their book “Rare Earth” have cautioned us about the sterilizing effects of the galactic environment, especially near the core, I still would not give up all hope. If we were to put on their caps and examine the Earth’s overall geological history, would we not conclude much the same thing and argue insistently that we should not exist? We had bombardments. We had no free oxygen. The collision that formed the moon should have done everything in…Yet many organic building blocks seem to have been transported from space to the Earth anyway. Part of the mystery is where things go from there. With the solar system, odds are low we will find evidence of anything similar to the Pre-Cambrian or Ediacaran, but at least with nearby exoplanets we will have some laboratory sites where the odds are better.

Conjecture, of course. But the just-right terrestrial jackpot story on odds of a gazillion doesn’t sit well with me either. And I’d still like to go off-shore fishing with a dual sunset and a couple of extra moons or extra large night luminaries in the sky now and then.

Messrs Ward and Brownlee.

Any of their Ptolemaic work & highly selective circular conclusions should be tempered by the knowledge that they were originally close collaborators of Guillermo Gonzalez. Sometime peripatetic professor of astrophysics cum self-styled champion of Intelligent Design. In Chief. Indeed the original concept of a “Galactic Habitable Zone”, cleverly introduced under a scientific veneer, was conceived by Gonzalez. It was also originally published in conjunction with –

Messrs Ward and Brownlee

I agree that what some people want is intelligent life to be rare. That puts humans back at the center of the universe and, for the religious, sets humans as God’s unique creation. It also allows for humans’ eventual dominion over the universe. For others, I suspect it is about loneliness, not having other intelligent civilizations to communicate with.

My personal feelings are that life, of whatever stripe, is important. Just knowing whether life is exceedingly rare or ubiquitous is important in how we will develop our view of the universe. If life is ubiquitous, it would not just be a momentous discovery but would offer unlimited potential for discovery and research.

As for loneliness, it is perhaps hubris to think that we can communicate with very old, advanced civilizations. As we have discussed before, such civs may be totally incomprehensible to us.

If we do discover signs of life on exoplanets, I cannot but imagine that this will create an impetus to send probes to those worlds, as well as ramping up funding for remote observational techniques. This will have implications for other research, such as climatology and planetary modeling to understand the exoplanets harboring these life signs.

I am a person of faith but not very orthodox when extraterrestrial intelligence is considered. I have thought about the possibility of a planet around a far Sun with inhabitants at an early iron age civilization. Each morning they rise from sleep, share a communal morning meal, then go to their fields and forges and labor throughout the day. In the fading light of day they assemble in their villages and share in thanksgiving for the completion of the day’s labor. As they bow in prayer to their unseen creator, they pray to the same God that I do. “In my Father’s house are many mansions: if it were not so, I would have told you. I go to prepare a place for you.” It would be a puny god indeed, if his creation consisted of one intelligent bipedal race on a single planet in a dusty corner of the universe. Just my way of thinking about it, no proselytizing intent at all.

I’m also a “person of faith” Harold, but I’ll keep my comments limited to merely respectfully encouraging people to keep an open mind about the possibilities re life and how widespread it might be or not be.

First let me state that micro evolution is a well established, demonstrable fact of life. That’s why there is so much biological diversity here. Macro evolution is much less well established however, and abiogenesis has an even less solid foundation of proof. Is it sufficient to reason, ‘since life is here, it MUST have arisen here?’ Not if there are other possibilities in play.

Life as we know it works with molecular instruction codes (RNA, DNA) that tell the inner workings of the cell what to do, when to do it, including replication, aka reproduction. We can compare the instruction molecules inside the living cell to the programming that drives the actions of imaginary Von Neumann machines. In a sense, is that not what living organisms are?

How might our descendants one day terraform exoplanets? Could it not be done with the aid of suitably bio-engineered organisms? If this is something we might do in the future, is it impossible that it has already been done in the past, perhaps even here?

Many wonder when we might find evidence of ETI. Isn’t it just possible that we (and all the other lifeforms on Earth) are the descendants of bio-engineered life that was placed on this planet?

If that were the case then life itself would be an artifact of sorts.

Also, if life has been intentionally started here then there is no telling how widespread or rare it might be. The desire to search for it (and to expand it) might be part of our DNA program.

As Arthur C Clarke famously commented, “I think we will ultimately find that life is either universal or incredibly rare, nay unique. Both of which scare the hell out of me.”

Indeed. Either option opens up existential cans of worms.

If we see the emergence and dispersal of Terran Life from one planet to a multitude, then aeons hence it’ll be the Origin of countless intelligent species.

This always reminds me of the Pogo comic strip from 1959 where two of the characters are contemplating whether there are more “ad-vanced brains” in the Universe or if humans are the smartest ones around.

Their conclusion:

“Either way, it is a mighty soberin’ thought.”

Something that has not been mentioned in relation to bottlenecks: there were at least eight Homo species with over 1000 cubic centimeters cranial capacity. The argument as to what happened to the other seven is not important, but what would our world be like if some or all had survived and thrived to the present day? This is an example of how we perceive even ourselves when looking at what may exist; maybe whole groups of intelligent species live and work together side by side on other planets. Just think how different civilization might be with Neanderthals and Denisovans part of it…

https://www.washingtonpost.com/news/speaking-of-science/wp/2018/03/15/humans-bred-with-this-mysterious-species-more-than-once-new-study-shows/

And Hobbits!

Or Homo naledi for that matter, since their remains date to just 350-250 kya. Hard to imagine how our lineage has been so speciose.

Indeed. Intelligence is strongly related to brain mass and especially its ratio to body mass. In general there is a near-linear increase in brain size with body mass, with very few notable exceptions: humans and, to a lesser extent, some primate relatives like chimpanzees, along with dolphins and some birds. However, intelligence isn’t just about raw size or even brain/body ratio. It’s about which bit of the brain is large and the types of cell and synapse that make it up. In humans the frontal lobe accounts for 50% of total brain size and is made up of association neurones derived from olfactory cortex (yes, smell). These unique neurones, unlike those in other areas of the cerebral cortex, operate in a “random access” manner, which effectively makes each of the frontal lobe’s trillion synapses act as an individual processor. A trillion processors acting in parallel to create one monster individual processor. One that blows even the greatest supercomputers out of the water. The intellect is thus akin to a computer’s software rather than its hardware. The frontal lobe acts as the brain’s executive: planning, initiating and controlling (most of the time, though it can be overridden by primitive components in times of stress). Dolphins’ and birds’ larger brains are largely made up of visual cortex neurones, which operate in a “point on point” manner and, though conveying the fantastic visual acuity needed by such animals, don’t confer the “intelligence cum sentience” we recognise, though they are every bit as evolved as us. As are all animals alive today. Humans are not the pinnacle of some inevitable hierarchical evolutionary pyramid. That’s one of the great fallacies of evolutionary theory.

Chimpanzees have just enough frontal cortex to be sentient and even to understand individual words, though not full speech. The human brain isn’t fully developed until about 25 years old, and a chimpanzee’s brain is akin to that of a 2-3 year old human child.

This big (>1000cc volume, as stated above) brain is uniquely expensive, using up to 20% of the body’s glucose metabolism and 15% of its cardiac output. Which is incredible really and raises the question of how it evolved. Intelligence as we understand it now certainly confers evolutionary advantages that would drive natural selection, but it’s hard to see how it would initially have helped in the Pleistocene when our large-brained forebears first arose. Sabre tooth tigers and all. Maybe the ice ages drove our arboreal primate ancestors out of the forests as they receded. To survive in trees would need considerable dexterity and coordination that might require larger than average primate brains. These in turn could be switched to other key survival functions once on the ground. Bipedal stance would put your main senses at the top of a five to six foot tower, a big advantage in a savannah habitat, and would free up the forelimbs for manipulation of tools etc. Who knows, but whatever the answer is, intelligence evolved. After over 4 billion years of life on Earth though. An inevitable though delayed consequence? Or simply an accident of evolution? (The reason our frontal lobes consist of olfactory neurones, by the way, is that our distant mammalian ancestors were nocturnal out of necessity. Dinosaurs with great vision ruled the Earth in the day, so to survive mammals had to be small and innocuous and use smell as a primary sense. Sight isn’t much good in the dark! Once the asteroid hit, being small made survival easier in tough times, and vision improved as existence moved to daytime, but those clever olfactory neurones came in very handy for the primate group of mammals. How ironic is that? Mammals would never have made it if dinosaurs had continued unabated, but intelligence wouldn’t likely have evolved if they hadn’t existed. It’s also a reason why those avian dinosaurs that “survived” as birds have well developed sight. A reptilian feature.) No wonder those last few terms in the Drake equation are so nebulous and intelligence may represent a “bottleneck”. The fact that so many individual “homo” subspecies did arise, however, suggests that once that bottleneck is overcome intelligence rapidly expands. I suspect that the disappearance of all but Homo sapiens was driven by natural selection.

Brutal and random but a fact.