Robert Bradbury had interesting thoughts about how humans would one day travel to the stars, although whether we could at this point call them human remains a moot point. Bradbury, who died in 2011 at the age of 54, reacted at one point to an article I wrote about Ben Finney and Eric Jones’ book Interstellar Migration and the Human Experience (1985). In a comment to that post, the theorist on SETI and artificial intelligence wrote this:

Statements such as “Finney believes that the early pioneers … will have so thoroughly adapted to the space environment” make sense only once you realize that the only “thoroughly adapted” pioneers will be pure information (i.e. artificial intelligences or mind uploaded humans) because only they can have sufficient redundancy that they will be able to tolerate the hazards that space imposes and exist there with minimal costs in terms of matter and energy.

Note Bradbury’s reference to the hazards of space, and the reasonable supposition — or at least plausible inference — that biological humanity may choose artificial intelligence as the most sensible way to explore the galaxy. Other scenarios are possible, of course, including humans who differentiate according to their off-Earth environments, igniting new speciation that includes unique uses of AI. Is biological life necessarily a passing phase, giving way to machines?

I’ll be having more to say about Robert Bradbury’s contribution to interstellar studies and his work with SETI theorist Milan Ćirković in coming months, though for now I can send you to this elegant, perceptive eulogy by George Dvorsky. Right now I’m thinking about Bradbury because of Andreas Hein and Stephen Baxter, whose paper “Artificial Intelligence for Interstellar Travel” occupied us yesterday. The paper references Bradbury’s concept of the “Matrioshka Brain,” a large number of spacecraft orbiting a star producing the power for the embedded AI.

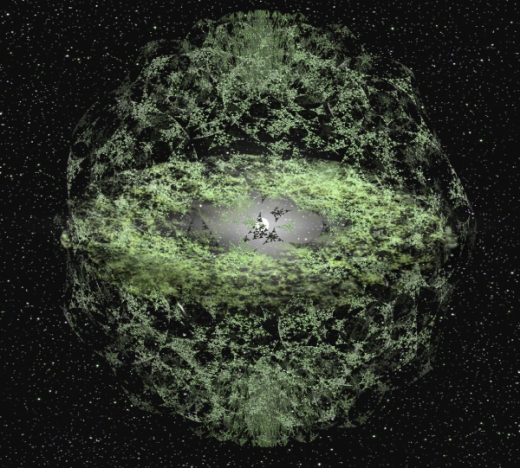

Image: Artist’s concept of a Matrioshka Brain. Credit: Steve Bowers / Orion’s Arm.

Essentially, a Matrioshka brain (think dolls within dolls like the eponymous Russian original) resembles a Dyson sphere on the surface, but now imagine multiple Dyson spheres, nested within each other, drawing off stellar energies and cycling levels of waste heat into computational activities. Such prodigious computing environments have found their way into science fiction — Charles Stross explores the possibilities, for example, in his Accelerando (2005).

A Matrioshka brain civilization might be one that’s on the move, too, given that Bradbury and Milan Ćirković have postulated migration beyond the galactic rim to obtain the optimum heat gradient for power production: interstellar temperatures decrease with increased distance from galactic center, and in the nested surfaces of a Matrioshka brain, the heat gradient is everything. All of which gives us food for thought as we consider the kind of objects that could flag an extraterrestrial civilization and enable its deep space probes.

But let’s return to the taxonomy that Hein and Baxter develop for AI and its uses in probes. The authors call extensions of the automated probes we are familiar with today ‘Explorer probes.’ Even at our current level of technological maturity, we can build probes that make key adjustments without intervention from Earth. Thus the occasional need to go into ‘safe’ mode due to onboard issues, or the ability of rovers on planetary surfaces to adjust to hazards. Even so, many onboard situations can only be resolved with time-consuming contact with Earth.

Obviously, our early probes to nearby stars will need a greater level of autonomy. From the paper:

On arrival at Alpha Centauri, coming in from out of the plane of a double-star system, a complex orbital insertion sequence would be needed, followed by the deployment of subprobes and a coordination of communication with Earth [12]. It can be anticipated that the target bodies will have been well characterised by remote inspection before the launch of the mission, and so objectives will be specific and detailed. Still, some local decision-making will be needed in terms of handling unanticipated conditions, equipment failures, and indeed in prioritising the requirements (such as communications) of a multiple-subprobe exploration.

Image: Co-author Andreas Hein, executive director of the Initiative for Interstellar Studies. Credit: i4IS.

Feature recognition through ‘deep learning’ and genetic algorithms are useful here, and we can envision advanced probes in the Explorer category that can use on-board manufacturing to replace components or create new mechanisms in response to mission demands. The Explorer probe arrives at the destination system and uses its pre-existing hardware and software, or modifications to both demanded by the encounter situation. As you can see, the Explorer category is defined by a specific mission and a highly-specified set of goals.

The ‘Philosopher’ probes we looked at yesterday go well beyond these parameters with the inclusion of highly advanced AI that can actually produce infrastructure that might be used in subsequent missions. Stephen Baxter’s story ‘Star Call’ involves a probe that is sent to Alpha Centauri with the capability of constructing a beamed power station from local resources.

The benefit is huge: Whereas the Sannah III probe (wonderfully named after a spaceship in a 1911 adventure novel by Friedrich Mader) must use an onboard fusion engine to decelerate upon arrival, all future missions can forgo this extra drain on their payload size and carry much larger cargoes. From now on incoming starships would decelerate along the beam. Getting that first mission there is the key to creating an infrastructure.

The Philosopher probe not only manufactures but designs what it needs, leading to a disturbing question: Can an artificial intelligence of sufficient power, capable of self-improvement, alter itself to the point of endangering either the mission or, in the long run, its own creators? The issue becomes more pointed when we take the Philosopher probe into the realm of self-replication. The complexity rises again:

…for any practically useful application, physical self-replicating machines would need to possess considerable computing power and highly sophisticated manufacturing capabilities, such as described in Freitas [52, 55, 54], involving a whole self-replication infrastructure. Hence, the remaining engineering challenges are still considerable. Possible solutions to some of the challenges may include partial self-replication, where complete self-replication is achieved gradually when the infrastructure is built up [117], the development of generic mining and manufacturing processes, applicable to replicating a wide range of components, and automation of individual steps in the replication process as well as supply chain coordination.

Image: Stephen Baxter, science fiction writer and co-author of “Artificial Intelligence for Interstellar Travel.” Credit: Stephen Baxter.

The range of mission scenarios is considerable. The Philosopher probe creates its exploration strategy upon arrival depending on what it has learned approaching the destination. Multiple sub-probes could be created from local resources, without the need for transporting them from Earth. In some scenarios, such a probe engineers a destination planet for human habitability.

But given the fast pace of AI growth on the home world, isn’t it possible that upgraded AI could be transmitted to the probe? From the paper:

This would be interesting, in case the evolution of AI in the solar system is advancing quickly and updating the on-board AI would lead to performance improvements. Updating on-board software on spacecraft is already a reality today [45, 111]. Going even a step further, one can imagine a traveling AI which is sent to the star system, makes its observations, interacts with the environment, and is then sent back to our solar system or even to another Philosopher probe in a different star system. An AI agent could thereby travel between stars at light speed and gradually adapt to the exploration of different exosolar environments.

We still have two other categories of probes to consider, the ‘Founder’ and the ‘Ambassador,’ both of which will occupy my next post. Here, too, we’ll encounter many tropes familiar to science fiction readers, ideas that resonate with those exploring the interplay between space technologies and the non-biological intelligences that may guide them to another star. It’s an interesting question whether artificial general intelligence — AGI — is demanded by the various categories. Is a human- or better level of artificial intelligence likely to emerge from all this?

The paper is Hein & Baxter, “Artificial Intelligence for Interstellar Travel,” submitted to JBIS (preprint).

Re: transmitting AI.

As long as we can overcome the bandwidth issues of interstellar communication, then this is exactly what I would envisage. If it ever proves possible to upload human minds, then this is also the best means of human minds to travel to the stars. Even with lots of short hops, a mind would sense no time and could awake on the other side of the galaxy in its new embodiment.

Light speed issues will still impact the cohesiveness of the networked AIs, and this may present a practical limit to the expansion of Earth’s sphere of influence to the stars. AIs may not care about Earth’s [i.e. human’s] centrality and keep expanding anyway until the galaxy is a machine civilization.

Matrioshka brains will probably evolve around Sol first which leads to the very satisfying end scenario in Accelerando. If the galaxy fills, then we get a scenario more like Benford’s “Galactic Center Saga” novels, although hopefully with a better life for the humans.

Today, we see AIs in the service of humanity. Historically, slaves were kept ignorant and ineffectual to forestall slave revolts. Yet we aim to make our AIs superintelligent, often rationalized to solve our most intractable or “wicked” problems. There is a rich SF literature about superintelligent computers, stories far more subtle than robot revolts, yet very few in the AI community seem interested in the issues, preferring to focus on the technology alone. We’ve seen the problems with this mindset in social media, so I would hope that we think more carefully about our AI creations before we go down a road from which we cannot stray. The hierarchical nature of human origins seems to drive us to create beings who can solve our problems. Whether God[s], monarchs, aliens, and now AGIs, we seem to follow the same pattern, and often with similar outcomes.

The most basal layer of programming may affect the decisions of the entire enterprise in ways so subtle as to be unrecognisable and yet the most resistant to modification or eradication.

Biological programming may have preceded neurological function, arising in molecular cell biology or even in biochemistry. Amongst competing prebiotic (macro)molecules those best adapted to accessing resources and elbowing out or consuming competitors, and replicating themselves might have survived and passed these traits on to their descendants as imperatives.

Increasingly complex molecular machinery had to conform to these imperatives for their systems to survive, grow and replicate. That’s how we are here. Hooray!

But that is not a Procrustean basis for AI or AGI. They may have some mundane task(s) as their basal layer of programming. For example, Matrioshka brains and such superintelligences may consider data gathering and transmission to be the Supreme Good if they are systemically descended from devices designed for that purpose. Survival, growth, replication and even data processing may be seen as ancillaries to that Supreme Good.

“Increasingly complex molecular machinery had to conform to these imperatives for their systems to survive, grow and replicate. That’s how we are here. Hooray!”

This brings up a question which has been on my mind for a considerable period of time. Namely, the replication of single-celled as well as multicellular organisms. It is not at all obvious WHY this replication is an outcome of Darwinian evolution. Now one might remark, well of course reproduction is an OBVIOUS prerequisite for continuation of life. But is it? One could postulate, for example, that an organism could have an internalized repair mechanism that would allow continued regeneration of all its functions. And that’s not theoretical, by the way: the Hydra (I think that is the organism) is just such an organism, which is virtually immortal in the sense that it can regenerate. So sexuality is not necessarily a given nor even a desirable trait, as it can introduce deleterious genetics into offspring.

I think you have this back to front. Darwinian selection acts on the individual, but the effect is on the distribution of genes in the population. Replication is necessary for this to work and is one of the 3 conditions needed for evolution to work. An organism that failed to replicate would eventually expire from some event, removing its genes from the biosphere.

Consider your example. There would be just one organism. No copies of its genes anywhere else. Each unique organism would have to be created de novo. There would be no evolution as there would be no replication of genes. This organism would have to be unicellular as replication of its genes for each cell in a complex animal would not exist. At some point, this organism would die due to some external event and therefore it, and its complement of genes, would disappear. Evolution requires replication so that mutations, horizontal gene transfer (in bacteria), and sex to mix genes provide the variation for selection to operate, to generate the “fitter” organisms which in turn can spread their genes more widely for further changes. IOW, replication is a required condition for Darwinian selection and evolution.

I understand what you’re saying, and I agree. However, this filter (by gene mixing) to provide “fitter” organisms does not always result in fitter organisms. As I stated before, a mutation that’s deleterious can propagate through a population and that’s not to the betterment of the species. My point in talking about the Hydra was that this creature breaks apart and its “progeny” if you will, has a uniform genetic composition and can establish (by its splitting apart) itself far and wide, thus perpetuating the species.

What I was talking about in a more theoretical manner was an organism or a collection of the same organism which has self repair as part and parcel of its nature. What do you think is the striving behind this antiaging research that is now being conducted full bore? It’s looking into, in some fashion or another repairing the entire organism and finding a way to rejuvenate “youthfulness” in said organism. Whether one thinks that is desirable or not, that is not a sexual reproductive strategy, but rather a maintenance strategy.

As further proof that Darwinian evolution does not always succeed, take a look at the dinosaurs: they were as varied and as prolific across the globe as any species could ever hope to be, with an enormous genetic variation. Wasn’t much good against that big asteroid, now was it? And yet smaller and less dominant organisms continued life.

So how do you get to this hydra organism which has a perfect replication of its DNA to self-repair? It would have to be made by a Creator.

The imperfect replication of DNA is the material of evolution to test out new genes, gene control, and gene combinations. Bacteria are doing this constantly. It is also the only way we know that allows functioning complexity to emerge without design.

“Daniel Martinez claimed in a 1998 article in Experimental Gerontology that Hydra are biologically immortal.[12] This publication has been widely cited as evidence that Hydra do not senesce (do not age), and that they are proof of the existence of non-senescing organisms generally. In 2010 Preston Estep published (also in Experimental Gerontology) a letter to the editor arguing that the Martinez data refute the hypothesis that Hydra do not senesce.[13]

The controversial unlimited life span of Hydra has attracted the attention of natural scientists for a long time. Research today appears to confirm Martinez’ study.[14] Hydra stem cells have a capacity for indefinite self-renewal. The transcription factor, “forkhead box O” (FoxO) has been identified as a critical driver of the continuous self-renewal of Hydra.[14] A drastically reduced population growth resulted from FoxO down-regulation, so research findings do contribute to both a confirmation and an understanding of Hydra immortality.[14]

While Hydra immortality is well-supported today, the implications for human aging are still controversial. There is much optimism;[14] however, it appears that researchers still have a long way to go before they are able to understand how the results of their work might apply to the reduction or elimination of human senescence.”

https://en.wikipedia.org/wiki/Hydra_(genus)

The above talks about the self-repair issue that you brought up; as regards having been made by the Creator, I agree with you. I do not disavow evolution, quite the contrary. You can have evolution advanced up to the point in which the Hydra is created and then a Hydra by asexual fission makes its progeny. My point was simply to show that an organism can exist without having to reshuffle genetic code. In fact, even in an asexual environment a reshuffling of the genetic code could occur due to outside influence, which is of a nonsexual nature, and produce advances in the species that way. I feel pretty certain that there are a lot of people, especially those who are wealthy, who would be more than happy to pay to have cellular regeneration and immortality, even if the price was astronomical. Then if they wanted to reproduce themselves, for whatever reason, they could engage in some form of cloning and get dozens of, say, Barack Obamas, or Bill Gates or what have you. I think they’d be vain enough to like to be surrounded by mirror images of themselves in 3-D.

This was your original statement:

I think what you may have meant is why do organisms need to live beyond their reproductive period. In the case of asexual organisms that fission or bud off juveniles, their reproductive period is long compared to other organisms of their size. I see no reason why such a strategy should not be a selective solution, especially if reproduction by other means is difficult, or offspring survival is very poor. It may be that maintaining a parent that can keep adding to the population may be a better strategy than relying on a successful cohort of offspring. Given what we know about DNA replication errors, as well as mitochondrial senescence, it seems to me that death is inevitable, even without external causes. But let’s assume that the parent never replaces its cells, but just maintains them. The budded offspring will have a mix of perfect genome copies, copies with neutral mutations, and copies with advantageous mutations. This will ensure Darwinian selection can grind away. The parent will survive for a long period, producing close copies, until it dies from environmental factors or predation. Because it produces close copies for a long time, and IF the survival of its offspring is poor, THEN the rate of change of the population’s genetics will be slow. I have to wonder if that may also suggest that its DNA replication mechanism is poor, introducing lots of errors. Seems unlikely, but I do think any long life is selected for due to poor survival of its motile polyps (i.e. like jellyfish).

“I see no reason why such a strategy should not be a selective solution, especially if reproduction by other means is difficult, or offspring survival is very poor. It may be that maintaining a parent that can keep adding to the population may be a better strategy than relying on a successful cohort of offspring. Given what we know about DNA replication errors, as well as mitochondrial senescence, it seems to me that death is inevitable, even without external causes.”

It’s getting to be my belief that we are talking past one another here. My original inquiry was simply to acknowledge the fact that gene mixing can have weaknesses as well as strengths that may or may not permit propagation of the species. In the case that I used, I meant to suggest that an organism might have better survivability IF it had a (theoretically) robust cellular repair mechanism, and that this might be superior for survival simply because it has a proven track record, in that its cellular structure is always unchanging. Something that doesn’t change isn’t necessarily weak in the struggle for survival; it may or may not be, but the proof of the pudding is in the eating: it has survived for eons. It is more than likely that there have been certain strains of bacteria, for example, that have been unchanged since the beginning of Earth.

No one is saying that such a multicellular or single-celled organism CANNOT change due to environmental circumstances, merely that it may have found life better for itself by not changing.

Addendum: in simplicity, my idea simply is that gene mixing and sex MIGHT NOT result in a BETTER organism. Retention of the original cellular design might as a whole benefit the species. That’s what I’m actually trying to say in case I wasn’t clear in my previous posts.

I think it best that you do some research into why sexual reproduction has evolved, despite its inefficiencies compared to the earlier asexual reproduction. This has concerned biologists for a long time. The one solution I am aware of is that it keeps the population ahead of parasites. There are some interesting papers on this subject.

“Charles Stross explores the possibilities, for example, in his Accelerando (1985).” Was actually published in 2005.

Full text of Accelerando novel is on the author’s blog here: –

https://www.antipope.org/charlie/blog-static/fiction/accelerando/accelerando.html

Right you are. Will fix this pronto. Thanks!

Hi Paul,

Interesting article. The mention of Bradbury brought this book to mind,

If the Sun Dies, by Oriana Fallaci

..she interviews Bradbury…illuminating.. You may have read it, if not, well worth the time..

https://www.amazon.com/If-sun-dies-Oriana-Fallaci/dp/B0007DTULA

Regards,

Mark

Thanks for the tip, Mark. Have not read it, but will do so.

I have that book. AFAICS, it is Ray Bradbury, not Robert Bradbury, that she mentions (at least that is the only reference in the index).

Hi Paul,

That is great, please let us know what you think, in particular of the interview chapter with Bradbury. I have yet to find a more concise and inspiring cri de coeur of why we need to go to space, to the ‘Undiscovered Country.’

Mark

Given the unavoidable long latency times for communication and the need for these probes/ships to be autonomous it seems almost inevitable that they (the AIs) will develop unforeseen habits/behaviors…IF they’re able to survive past the time span of the initial, intended mission.

I’m unclear if the above situation would make it desirable to build in some sort of fail safe (suicide imperative, restricted ‘lifespan’, etc) in order for us (or any other species) to avoid encountering rogue AIs at some future date. It strikes me that as the sophistication of the AI increases so does the desire to have a leash on it.

It is easy to tell if a software file has become corrupted: the operating system will check the file checksum when it is loaded from mass memory. There are ways to detect other kinds of errors, too, such as memory errors. There are even ways to detect processor errors, such as using triple-redundant computers and voting circuits. That’s how we can make sure computers are running the intended code.

But there is no way to tell for sure if a self modifying computer has modified its own code or its goals in an intended way. It’s too easy for us to accidentally program in some unintended behavior. That’s why self modifying computers can be dangerous, and the more power they control, the more dangerous they can be.
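The two integrity checks described in this comment can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`file_checksum`, `is_uncorrupted`, `majority_vote`) are hypothetical, SHA-256 stands in for whatever checksum a real flight computer would use, and the voter is done in software rather than in a hardware voting circuit.

```python
import hashlib
from collections import Counter


def file_checksum(data: bytes) -> str:
    """Return the SHA-256 digest of a software image as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_uncorrupted(data: bytes, expected_digest: str) -> bool:
    """Compare the image's digest with the digest recorded before launch."""
    return file_checksum(data) == expected_digest


def majority_vote(outputs):
    """Software stand-in for a triple-redundancy voting circuit.

    Takes the (hashable) results from three redundant computers and
    returns the value that at least two of them agree on.
    """
    if len(outputs) != 3:
        raise ValueError("expected exactly three redundant outputs")
    value, count = Counter(outputs).most_common(1)[0]
    if count < 2:
        raise RuntimeError("no two computers agree; result cannot be trusted")
    return value


if __name__ == "__main__":
    image = b"flight software v1"          # hypothetical software image
    reference = file_checksum(image)       # digest stored as the reference
    print(is_uncorrupted(image, reference))
    print(majority_vote([42, 42, 7]))      # one computer disagrees and is outvoted
```

Both checks compare what is running against a fixed reference: a stored digest in one case, the agreement of identical copies in the other. Neither can say whether code that has deliberately rewritten itself still reflects what its designers intended, which is the gap the comment points to.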

Given the facts that (1) any AI sent to another star system is going to be the strongest, most versatile AI we can build at the time of departure, and (2) there will be a long travel time before reaching the target, it is a fair question to wonder just what the shipbound AI will do with all its travel time and no particular mission-related tasks to take care of en route. Considering that AlphaZero taught itself chess in a few hours, well enough to go undefeated over a 100-game match against a previously world-beating chess engine, we can imagine that the AI that reaches the other star system will be a far stronger AI than the one that left ours.

Chess is a closed system of course, whereas interstellar exploration isn’t, but still: you can imagine instructing the AI to game out all the possibilities for its arrival in-system, at incredible depth, over its multiple-decade travel time.

The authors imagine the AI to be inactive during transit, due to power limitations. But that’s not a given.

To me the big issue is whether the AI is human-exclusive or human-inclusive, i.e. containing uploaded human[s] participating in the AI’s cognition and the mission generally. In the latter case, the need to establish a biome for physical humans has passed. I expect that to be the case, actually.

With decades or centuries of travel time to game out forthcoming scenarios, it is a possibility that an AGI sent on an interstellar journey may choose to augment its hardware after arrival at its destination, if it possessed the needed fabrication (or replication) capabilities. There is no telling where that might end: could it be the start of a post-biologic “civilization”?

Why assume it will be fully active during cruise, rather than mostly shut down to save energy with just housekeeping functions operating?

A good point, Alex, as we’ll see in tomorrow’s post.

One thing that always frustrated me about the BIS Daedalus star probe is that after the probe flew through the target star system it would be left to drift indefinitely. The planners gave no thought about what to do with the semi intelligent computer running the ship afterwards.

If we send out an AI which can replicate itself and modify its own programming, eventually we’re going to encounter it and have to deal with it for better or worse. That sounds fairly scary to me. Who knows what motivation a machine intelligence would come up with for itself and how that might affect other beings?

Given the vastness of space I understand the need for humans with short life spans to use AI to explore other star systems but I can’t really believe we would release a system with no oversight, a long lifespan (relatively), the ability to work with local resources to replicate itself, and possibly an eventual unknown set of motivations, into the galaxy.

Tipler’s model in Anthropic Cosmological Principle and previously in International Journal of Theoretical Physics.

However, we are so far away from uploading the brain... personally I think millions of years, if ever. Maybe something Borg-like. Maybe. A recent planarian study showed we really do not understand memory: one with its head chopped off kept some... where and how, who knows... we don’t even know what to upload.

A self-replicating probe (AI) is an unneeded feature that makes the whole project many orders of magnitude more expensive, harder to implement, and technologically more complicated; even worse, it is a dangerous feature for the probe’s creators.

Everything in the Universe should have a limited span of “life,” including an AI probe.