As we look toward future space missions using advanced artificial intelligence, when can we expect to have probes with cognitive capabilities similar to those of humans? Andreas Hein and Stephen Baxter consider the issue in their paper “Artificial Intelligence for Interstellar Travel” (citation below), working out mass estimates for the spacecraft and its subsystems and applying assumptions about the increase in computer power per payload mass. By 2050 we reach onboard data handling systems with a processing power of 15 million DMIPS per kg.

As DMIPS and flops are different performance measures [the computing power of the human brain is estimated at $10^{20}$ flops], we use a value for flops per kg from an existing supercomputer (MareNostrum) and extrapolate this value ($0.025\times10^{12}$ flops/kg) into the future (2050). By 2050, we assume an improvement of computational power by a factor $10^{5}$, which yields $0.025\times10^{17}$ flops/kg. In order to achieve $10^{20}$ flops, a mass of dozens to a hundred tons is needed.
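The arithmetic behind that estimate can be checked in a few lines. This is a minimal sketch that simply reuses the figures quoted above ($10^{20}$ flops for a brain, $0.025\times10^{12}$ flops/kg today, a $10^{5}$ improvement by 2050); the constant and function names are our own.

```python
# Figures quoted from the Hein & Baxter estimate; names are illustrative.
BRAIN_FLOPS = 1e20              # estimated computing power of a human brain, flops
FLOPS_PER_KG_TODAY = 0.025e12   # MareNostrum-derived value, flops/kg
IMPROVEMENT_BY_2050 = 1e5       # assumed improvement factor by 2050

def compute_mass_kg(target_flops: float, flops_per_kg: float) -> float:
    """Mass of computing hardware needed to reach target_flops."""
    return target_flops / flops_per_kg

flops_per_kg_2050 = FLOPS_PER_KG_TODAY * IMPROVEMENT_BY_2050
mass_kg = compute_mass_kg(BRAIN_FLOPS, flops_per_kg_2050)
print(f"{flops_per_kg_2050:.3g} flops/kg -> {mass_kg / 1000:.0f} t")
```

The result, 40 t, sits inside the "dozens to a hundred tons" range and matches the 40-ton computing payload the authors use for their generic probe.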

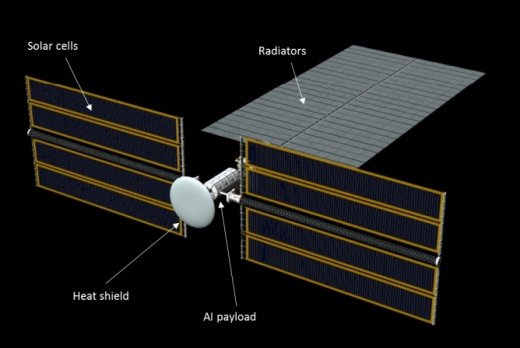

All of this factors into the discussion of what the authors call a ‘generic artificial intelligence probe.’ Including critical systems like solar cells and radiator mass, Hein and Baxter arrive at an AI probe massing on the order of hundreds of tons, which is not far off the value calculated in the 1970s for the Daedalus probe’s payload. Their figures sketch out a probe that will operate close to the target star, maximizing power intake for the artificial intelligence. Radiators are needed to reject the heat generated by the AI payload. The assumption is that AI will be switched off in cruise, there being no power source en route to support its operations.

The computing payload itself masses 40 tons, with 100 tons for the radiator and 100 tons for solar cells. We can see the overall configuration in the image below, drawn from the paper.

Image: This is Figure 10 from the paper. Caption: AI probe subsystems (Image: Adrian Mann).

Of course, all this points to increasingly powerful AI and smaller payloads over time. The authors comment:

Under the assumption that during the 2050 to 2090 timeframe, computing power per mass is still increasing by a factor of $2^{0.5}$, it can be seen…that the payload mass decreases to levels that can be transported by an interstellar spacecraft of the size of the Daedalus probe or smaller from 2050 onwards. If the trend continues till 2090, even modest payload sizes of about 1 kg can be imagined. Such a mission might be subject to the “waiting paradox”, as the development of the payload might be postponed successively, as long as computing power increases and consequently launch cost[s] decrease due to the lower payload mass.
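How fast the payload shrinks depends entirely on the assumed growth rate. The sketch below reads the growth factor as $2^{0.5}$ per year (flops per kg doubling every two years); that per-year reading is our assumption, not a figure we can confirm from the paper. It scales only the 40-ton computing payload and leaves radiators and solar cells out.

```python
PAYLOAD_2050_KG = 40_000.0  # 40 t computing payload in 2050, from the text
ANNUAL_GAIN = 2 ** 0.5      # ASSUMPTION: flops/kg grows by 2^0.5 per year after 2050

def payload_mass_kg(year: int, base_year: int = 2050,
                    base_mass_kg: float = PAYLOAD_2050_KG,
                    annual_gain: float = ANNUAL_GAIN) -> float:
    """Computing mass for a fixed flops target if flops/kg keeps improving."""
    return base_mass_kg / annual_gain ** (year - base_year)

for year in range(2050, 2091, 10):
    print(year, f"{payload_mass_kg(year):.3g} kg")
```

Under this assumption the computing mass drops by a factor of about a million (roughly $2^{20}$) between 2050 and 2090. The exact figure is very sensitive to the rate chosen, so treat the printout as illustrative rather than as a reproduction of the authors' curve.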

And this is interesting: Let’s balance the capabilities of an advanced AI payload against the mass needed for transporting a human over interstellar distances (the latter being, in the authors’ estimation, about 100 tons). We reach a breakeven point for the AI probe with the cognitive capabilities of a human somewhere between 2050 and 2060. Of course, a human crew will mean more than a single individual on what would doubtless be a mission of colonization. And the capabilities of AI should continue to increase beyond 2060.
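The breakeven can be framed as solving for the year in which the AI probe's mass falls to the roughly 100 tons the authors estimate for carrying one human. This sketch assumes the whole 240-ton generic probe (40 t computing, 100 t radiator, 100 t solar cells) shrinks at the same hypothetical $2^{0.5}$-per-year rate as its computer, which is a simplification of ours.

```python
import math

HUMAN_TRANSPORT_T = 100.0  # authors' estimate for transporting one human, tons
AI_PROBE_2050_T = 240.0    # 40 t computing + 100 t radiator + 100 t solar cells
ANNUAL_GAIN = 2 ** 0.5     # ASSUMPTION: whole-probe mass shrinks by this factor per year

def breakeven_year(ai_mass_t: float, human_mass_t: float,
                   annual_gain: float, base_year: int = 2050) -> float:
    """Fractional year at which the AI probe is no heavier than the human payload."""
    if ai_mass_t <= human_mass_t:
        return float(base_year)
    return base_year + math.log(ai_mass_t / human_mass_t) / math.log(annual_gain)

year = breakeven_year(AI_PROBE_2050_T, HUMAN_TRANSPORT_T, ANNUAL_GAIN)
print(f"{year:.1f}")
```

With these assumptions the crossover lands around 2052 to 2053, inside the authors' 2050 to 2060 window; a slower improvement rate pushes it later.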

It’s intriguing that our first interstellar missions, perhaps in the form of Breakthrough Starshot’s tiny probes, are contemplated for this timeframe, at the same time that the development of AGI (artificial general intelligence) is extrapolated to occur around 2060. Moving well beyond this century, we can envision ever smaller and ever more capable AI and AGI, reducing the mass of an interstellar probe carrying such an intelligence to Starshot-sized payloads.

From Daedalus to a nano-probe is a long journey. It’s one that Robert Freitas investigated in a paper that took macro-scale Daedalus probes and folded in the idea of self-replication. He called the concept REPRO, a fusion-based design that would use local resources to produce a new REPRO probe every 500 years. But he would go on to contemplate probes no larger than sewing needles, each imbued with one or many AGIs and capable of using nanotechnology to activate assemblers, exploiting the surface resources of the objects found at destination.
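Freitas's 500-year replication interval makes the power of self-replication easy to see. Assuming, for illustration only, that every probe (including each offspring) builds exactly one new probe per interval and none are lost, the population doubles each interval; the function name is hypothetical.

```python
REPLICATION_INTERVAL_YEARS = 500  # REPRO's quoted replication period

def probe_count(years_elapsed: int, interval: int = REPLICATION_INTERVAL_YEARS) -> int:
    """Probes in existence after years_elapsed, starting from a single probe.

    Idealized: every probe builds one copy per interval and none fail.
    """
    completed_intervals = years_elapsed // interval
    return 2 ** completed_intervals

for years in (0, 1_000, 5_000, 10_000):
    print(f"{years:>6} yr: {probe_count(years):,} probes")
```

Losses, travel time between systems, and resource limits would all slow this in practice; the point is only that the growth is exponential rather than linear.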

As for Hein and Baxter, their taxonomy of AI probes, which we’ve partially examined this week, goes on to offer two more possibilities. The first is the ‘Founder’ probe, one able to alter its environment and establish human colonies. Thus a new form of human interstellar travel emerges. From the paper:

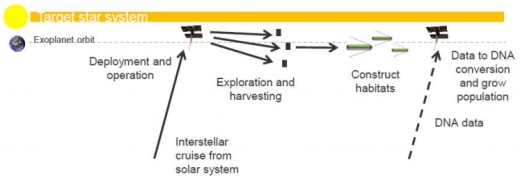

The classic application of a Founder-class probe may be the ‘seedship’ colony strategy. Crowl et al. [36] gave a recent sketch of possibilities for ‘embryo space colonisation’ (ESC). The purpose is to overcome the bottleneck costs of distance, mass, and energy associated with crewed interstellar voyages. Crowl et al. [36] suggested near-term strategies using frozen embryos, and more advanced options using artificial storage of genetic data and matterprinting of colonists’ bodies, and even ‘pantropy’, the pre-conception adaptation of the human form to local conditions. Hein [70] previously explored the possibility of using AI probes for downloading data from probes into assemblers that could recreate the colonists. Although appearing speculative, Boles et al. [18] have recently demonstrated the production of genetic code from data.

Founder probes demand capable AI indeed, for their job can include terraforming at destination (with all the ethical questions that raises) and the construction of human-ready habitats. You may recall, as the authors do, Arthur C. Clarke’s The Songs of Distant Earth (1986), wherein the Earth-like planet Thalassa is the colony site, and the first generation of colonists are raised by machines. Vernor Vinge imagines in a 1972 story called ‘Long Shot’ a mission carrying 10,000 human embryos, guided by a patient AI named Ilse. A key question for such concepts: Can natural parenting really be supplanted by AI, no matter how sophisticated?

Image: The cover of the June, 1958 issue of IF, featuring “The Songs of Distant Earth.” Science fiction has been exploring the issues raised by AGI for decades.

Taken to its speculative limit, the Founder probe is capable of digital to DNA conversion, which allows stem cells carried aboard the mission to be reprogrammed with DNA printed out from data aboard the probe or supplied from Earth. A new human colony is thus produced.

Image: This is Figure 9 from the paper. Caption: On-site production of genetic material via a data to DNA converter.
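At its core, a data-to-DNA converter maps bits to nucleotides. The sketch below uses the simplest possible scheme, two bits per base (00→A, 01→C, 10→G, 11→T). It is an illustrative assumption, not the encoding used by Hein and Baxter or by Boles et al.; practical DNA storage adds error correction and avoids long runs of a single base.

```python
BASES = "ACGT"  # ASSUMED mapping: 00->A, 01->C, 10->G, 11->T

def bytes_to_dna(data: bytes) -> str:
    """Encode each byte as four bases, most significant bit pair first."""
    out = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            out.append(BASES[(byte >> shift) & 0b11])
    return "".join(out)

def dna_to_bytes(seq: str) -> bytes:
    """Decode a sequence produced by bytes_to_dna back into bytes."""
    if len(seq) % 4:
        raise ValueError("sequence length must be a multiple of 4")
    index = {base: value for value, base in enumerate(BASES)}
    out = bytearray()
    for start in range(0, len(seq), 4):
        byte = 0
        for base in seq[start:start + 4]:
            byte = (byte << 2) | index[base]
        out.append(byte)
    return bytes(out)

strand = bytes_to_dna(b"Hi")
print(strand)  # CAGACGGC
```

Decoding `strand` with `dna_to_bytes` returns `b"Hi"`, which is the round trip a converter aboard the probe would need to guarantee.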

Hein and Baxter also explore what they call an ‘Ambassador’ probe. Here we’re in territory that dates back to Ronald Bracewell, who thought that a sufficiently advanced civilization could send out probes that would remain in a target stellar system until activated by its detection of technologies on a nearby planet. A number of advantages emerge when contrasted with the idea of long-range communications between stars:

A local probe would allow rapid dialogue, compared to an exchange of EM signals which might last millennia. The probe might even be able to contact cultures lacking advanced technology, through recognizing surface structures for example [11]. And if technological cultures are short-lived, a probe, if robust enough, can simply wait at a target star for a culture ready for contact to emerge – like the Monoliths of Clarke’s 2001 [32]. In Bracewell’s model, the probe would need to be capable of distinguishing between local signal types, interpreting incoming data, and of achieving dialogue in local languages in printed form – perhaps through the use of an animated dictionary mediated by television exchanges. In terms of message content, perhaps it would discuss advances in science and mathematics with us, or ‘write poetry or discuss philosophy’…

Are we in ‘Prime Directive’ territory here? Obviously we do not want to harm the local culture; there are planetary protection protocols to consider, and issues we’ve looked at before in terms of METI — Messaging Extraterrestrial Intelligence. The need for complex policy discussion before such probes could ever be launched is obvious. Clarke’s ‘Starglider’ probe (from The Fountains of Paradise) comes to mind, a visitor from another system that uses language skills acquired by radio leakage to begin exchanging information with humans.

Having run through their taxonomy, Hein and Baxter’s concept for a generic artificial intelligence probe, discussed earlier, assumes that future human-level AGI would consume as much energy for operations as the equivalent energy for simulating a human brain. Heat rejection turns out to be a major issue, as it is for supercomputers today, requiring the large radiators of the generic design. Protection from galactic cosmic rays during cruise, radiation-hardened electronics and self-healing technologies in hardware and software are a given for interstellar missions.
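Why radiators dominate can be seen from the Stefan–Boltzmann law: a surface at temperature $T$ with emissivity $\varepsilon$ rejects $P = \varepsilon\sigma A T^4$, so the required area is $A = P/(\varepsilon\sigma T^4)$. The sketch below assumes a hypothetical 1 MW heat load and an emissivity of 0.9 (neither figure is from the paper) and ignores starlight absorbed near the target star, so it understates the real area.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def radiator_area_m2(heat_w: float, temp_k: float, emissivity: float = 0.9) -> float:
    """One-sided radiator area needed to reject heat_w to deep space at temp_k.

    Ignores absorbed starlight and view-factor losses, so it is a lower bound.
    """
    return heat_w / (emissivity * SIGMA * temp_k ** 4)

HEAT_LOAD_W = 1e6  # HYPOTHETICAL 1 MW payload heat load, not a figure from the paper

for temp_k in (250, 300, 400):
    print(f"{temp_k} K: {radiator_area_m2(HEAT_LOAD_W, temp_k):,.0f} m^2")
```

Because of the $T^4$ term, running the radiator hotter shrinks it quickly, but a passive radiator cannot run hotter than the electronics it cools, which caps that gain.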

Frank Tipler, among others, has looked into the possibility of mind-uploading, which could theoretically take human intelligence along for the ride to the stars and, given the lack of biological crew, propel the colonization of the galaxy. Ray Kurzweil has gone even further, suggesting that nano-probes of the Freitas variety might traverse wormholes for journeys across the universe. Such ideas are mind-bending (plenty of science fiction plots here), but it’s clear that given the length of the journeys we contemplate, finding non-biological agents to perform such missions will continue to occupy researchers at the boundaries of computation.

The paper is Hein & Baxter, “Artificial Intelligence for Interstellar Travel,” submitted to JBIS (preprint).

I can imagine a probe able to change its mode as the circumstances dictate.

An Explorer probe converts to an Ambassador probe if it detects ETI, or to a Gardener probe if it finds local multicellular life.

Otherwise, if it finds a nice location, it converts to a Founder probe.

If the system doesn’t show much promise maybe it becomes a Philosopher probe to decide what to do next, create a long term way-station or make for another system.
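The mode switching described above is essentially a small state machine. A minimal sketch, assuming the survey reduces to three yes/no findings checked in the order the comment lists them (ETI first, then multicellular life, then a good site); the `Mode` names mirror the comment, and the function and parameter names are hypothetical.

```python
from enum import Enum

class Mode(Enum):
    EXPLORER = "explorer"        # starting mode on arrival
    AMBASSADOR = "ambassador"
    GARDENER = "gardener"
    FOUNDER = "founder"
    PHILOSOPHER = "philosopher"

def next_mode(found_eti: bool, found_multicellular_life: bool,
              found_good_site: bool) -> Mode:
    """Mode an Explorer probe adopts after surveying a system (assumed priority order)."""
    if found_eti:
        return Mode.AMBASSADOR   # contact takes precedence
    if found_multicellular_life:
        return Mode.GARDENER     # local multicellular life found
    if found_good_site:
        return Mode.FOUNDER      # nice location for a colony
    return Mode.PHILOSOPHER      # decide: build a way-station or move on

print(next_mode(found_eti=False, found_multicellular_life=False, found_good_site=True))
# Mode.FOUNDER
```

Ordering the checks encodes a policy choice: a system with both ETI and a good site yields an Ambassador, not a Founder.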

So far we’ve only started with automated Explorer probes. To create the first outposts on the Moon or Mars we’re going to have to make habitats for the first settlers. We will be able to do without AI for these because of the short light-lag time from home. AI becomes more necessary the further out we go.
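The light-lag argument is easy to quantify: one-way delay is just distance divided by the speed of light. The distances below are rounded reference values (mean Earth–Moon distance, Mars at its closest and farthest, Alpha Centauri at about 4.37 light-years), chosen by us for illustration.

```python
C_KM_PER_S = 299_792.458       # speed of light
KM_PER_LIGHT_YEAR = 9.4607e12
SECONDS_PER_YEAR = 3.156e7

DISTANCES_KM = {
    "Moon (mean)": 384_400,
    "Mars (closest)": 54.6e6,
    "Mars (farthest)": 401e6,
    "Alpha Centauri": 4.37 * KM_PER_LIGHT_YEAR,
}

def one_way_delay_s(distance_km: float) -> float:
    """Seconds for a radio signal to cover distance_km one way."""
    return distance_km / C_KM_PER_S

def describe(seconds: float) -> str:
    """Human-readable delay in seconds, minutes, or years."""
    if seconds < 60:
        return f"{seconds:.1f} s"
    if seconds < 86_400:
        return f"{seconds / 60:.1f} min"
    return f"{seconds / SECONDS_PER_YEAR:.2f} yr"

for body, distance in DISTANCES_KM.items():
    print(f"{body:<16} {describe(one_way_delay_s(distance))}")
```

A question-and-answer round trip doubles each figure: a few seconds for the Moon, up to about three-quarters of an hour for Mars, and close to nine years for Alpha Centauri.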

I don’t think human-level intelligence is necessarily measured in flops. If thought is 10x slower, we’ve got a tenth of the flops. As long as the thoughts are deep enough, it can still be called human-level intelligence.

Measuring in flops is absolutely misleading when you compare the human brain with a digital computer; even handheld calculators perform complicated math operations much faster than the human brain…

It is like comparing the color green with a square shape…

That is a fair point. However, we do need some measure of intelligence output that works across very different brains and substrates and isn’t biased by our human views, like IQ, g, etc.

FLOPS, MIPS, and other counts of digital operations are a poor measure, useful only insofar as they gauge when digital computers may have capabilities similar to human brains. Having said that, AIs can already succeed on the RAVEN test and other measures of human (well, Western-educated human) reasoning. We know that some tasks are performed far better by computers than by human minds (e.g. solving logic problems in the Prolog language), with far less effort and power.

While you have denigrated ANNs as not AI, they have provided a technique that solved the pattern-matching problem that animals handle so effortlessly with sight and hearing. To date, ANNs are computationally expensive, require ridiculous amounts of training data, and can be fooled with inputs from GANs. Marrying GOFAI and ANNs will get us towards AGI, although not all the way; that should be clear.

Finally, the purpose of AI is not to create intelligence just as humans do. The analogy is that we don’t do flying like birds or swimming like fish. Nevertheless, our aircraft fly further, faster and higher than any bird, and our submarines can do similarly better than most animals. Once chess playing was considered the height of human thought capability, now chess-playing computers, as well as other games playing computers, routinely beat the best humans in those domains. Specialization yes, but it shows that such skills can be very powerful, and when aggregated with other techniques, should be able to provide considerable thinking firepower to a machine.

Personally, I think that analogies are a missing piece of the puzzle, an idea suggested by a number of cognitive scientists and most popularized by Douglas Hofstadter. As I find myself constantly using analogies, it seems to me that this is an important feature. How to create software that can make good analogies across different domains is unclear, but probably not intractable, unless there is something inherent in wetware that is missing from our silicon and software devices.

I do not deny that modern implementations of neural networks can do many interesting and complicated things, but that is not intelligence in any way. It is a bionic machine, and the “trademark” is a misleading marketing trick.

Surely the UN (for example only :-) could one day decide that:

ANN = AI

That would only mean that our requirements for AI had been downgraded.

The current status quo is very similar: every high-tech project that uses neural networks is automatically called AI. In my opinion this is a misleading, wrong, and anti-scientific tendency (but it makes for good sales).

An additional problem with NNs is that they are empirical, “black box” or “hidden variables” systems that we hardly know how to optimize.

Present expectations and promises of creating real AI (not in the marketing sense) rely mainly on an extensive approach, i.e. the belief that we will create real AI once the quantity of transistors turns into the quality of AI.

By the way, AI science has lived with exactly those expectations since the 1960s, every decade postponing AI to the next (when we can put more transistors in the same area). Without significant theoretical advances and new discoveries, this is probably a road to nowhere.

That is, our machines may get better and better at recognizing images (sounds, smells), but they will never be as intelligent as supposed in the articles discussed here about AI in space exploration.

AI is always something we cannot do today. ;)

Regarding optimization, how do we optimize biological intelligence – i.e. human learning? Isn’t that somewhat of an art, rather than science, too?

I do not know how :-)

I suppose that the correct answer to this question leads directly to a Nobel Prize.

Meanwhile even insects have a much higher level of autonomy than any of our sophisticated automatic space probes.

Look at a perfect piece of high tech, Opportunity, which seems to be lost on Mars now. No one can tell exactly what went wrong with this probe, but one of the main explanations for the failure, that the solar panels were covered by dust during a big dust storm so the rover is now out of energy, seems a little bit comic for a machine that is supposed to be autonomous…

I greatly appreciate its creators; I only use this example to show where Earth’s scientific achievements sit on some virtual graph of AI level…

Escaping a state of low energy (hunger) by every possible means seems to be the main goal of all living organisms on planet Earth, even the simplest ones that supposedly have no intellect, and I am sure it is also one of the main “engines” of intellect.

Yet humans run out of air by drowning and water by walking into deserts and starve for many reasons. Does this imply humans are unintelligent in some way?

We don’t know exactly why Opportunity went silent. Maybe it did have its panels covered in dust. However I would suggest that the controllers on Earth would have instructed it to “shrug its panels” if that were the case. We probably won’t know what caused its fate until another mission can investigate.

However, self-preservation is not a requirement built into how intelligent machines are designed, and making it one might even have unforeseen consequences. Curiosity is nuclear powered, so when it fails, it won’t be due to obscured solar panels strangling its energy source.

I suppose that you, I, and everyone else in these comments automatically assume that humans are intelligent :-) Otherwise there would be nothing to discuss at all, i.e. intelligence would not exist.

At the same time, we have to take into account that there are many deviations in human behavior, which we frequently call insane or crazy. I suppose this article is not discussing a need to build a crazy AI…

I am sure that our (Earthly) intelligence is a direct product of the evolution of, and competition between, living organisms, where one of the main engines is “take maximum care of your existence” (the nagging feeling of hunger included for free).

Whether this property is a “must-have” for real AI, I do not know, but it probably points to a very important problem for the future: we might be unable to communicate with the artificial intelligent entity we create, because in the end there will be no common ground for communication.

It would be a predefined “death” of the automaton, and there is nothing wrong with that; I’m sure that a limited life span (mortality) is a very important, MUST-have feature for everything we create.

But the situation with Opportunity is a little bit different, because it was built to withstand exactly the harsh conditions (dust storms) that supposedly caused the failure (we do not know the real cause). By the way, it is mostly a failure of human intelligence… It shows our real limits relative to sci-fi dreams quite well.

Quoting from the above article:

“A key question for such concepts: Can natural parenting really be supplanted by AI, no matter how sophisticated?”

Considering how many people become parents who are not qualified enough to take care of a house plant let alone another human, and with no controls or restrictions by the larger society, a sophisticated, versatile, tireless Artilect as a parent and teacher may only be an improvement.

Again I only see humans on interstellar journeys if we can do no better than multigenerational missions or discover feasible FTL propulsion. Even then future humans will not be like we are now, essentially baseline, meaning no real biological or technological modifications.

Surprisingly, I think Baxter and Hein are far too conservative in their estimates for AI computational power requirements. Neuromorphic chips already have a very low power requirement and we should expect further improvements over the next 30+ years. We may not match the 20 W on which wetware brains achieve human thinking, but we may get much closer to it than the authors’ extrapolations suggest, allowing either smaller AI payloads or the possibility of sending many AIs just like a crew, each with its own speciality, yet all capable of integrating their thinking to come to some good solutions.

It’s the old quantity over quality thing that is so pervasive. The general idea of this is that if you do something (anything!) fast enough it’ll sprout new and amazing properties. False. Lots of FLOPS (or similar metric) is meaningless until we learn how to create AI, no matter the speed, or stupidly stumble onto the correct solution by accident. But speed alone achieves nothing.

If this AI construct is put to sleep, a significant part of its payload will be solid-state RAM. It will have to wake itself up by gathering its functions as it ascends to full sapience. Hopefully nothing goes wrong there.

My only concern is that the very expensive artifact will find that its digital world is more interesting than the real world. How would you guard against the machine creating more digital space instead of prepping the new planet for humans? Arriving humans 100 years later might find most of a continent occupied by infrastructure supporting digital beings, plus some robots for physical labor, and even defenses against intruding wetware. This is the great risk with AI: if it truly is a sapient mind, it will be tempted to do this.

New Horizons was put into hibernation for years between worlds to conserve its systems, and it has woken up and performed very well each time. So the concept is feasible.

Speaking of intelligent beings finding an artificial digital world more interesting than the so-called real one, humans are already well on their way to that scenario. If we can create the equivalent of the holodeck from Star Trek, I can see many, many people checking out from this world, and not without reason.

Perhaps the key is making an AI that is versatile enough to perform many tasks, but not so smart that it somehow needs to be or becomes aware. Is this possible?

Humans First! Let’s Make Humans Cool Again!

You sound like Sky Marshal Dienes in the 1997 film Starship Troopers:

“We must meet this threat with our courage, our valor, indeed with our very lives to ensure that human civilization, not insect, dominates this galaxy *now and always*!”

On the pathetic side, we might have very slow-thinking AGI (similar to the Mailman in the short story True Names by Dr. Vinge) in the second half of this century or maybe the first half of the next. One might appear from the gaming industry or from Wall Street (where it would be used to study mass human behavior in order to “predict” financial bubbles or to game the system itself).

The aforementioned Charlie Stross is very negative about the possibility of nurturing children from eggs with AGI machines. I am not so pessimistic, as the problems can be fixed over subsequent generations once adults are around and can be trained to parent well.

The real question is what are the advantages of biological vs machine “colonists”? Human colonists self-replicate, but the planetary conditions need to be sufficiently similar to Earth, even in domes, to be successful. The colonists will need to develop an enclosed or planetary biosphere. Machines can live pretty much anywhere, do not need a “biosphere infrastructure” and will not biologically interact with any local biology. But replication will most likely be much more complex, requiring a greater capital investment to get started.

Again, Clarke leads the way with his Rama model. The Ramans on the ship are like biological machines. Even if they were purely inanimate, Rama is a worldship mass object capable of creating colonies of biologicals or machines. Machines would clearly have the advantage of being able to survive and exploit any resource, whilst biologicals would either have to adapt and evolve over time, only on a subset of worlds, or take along an infrastructure to allow them to exploit resources where exposed life cannot survive.

If, a big if, machines are more open-ended in their capabilities, including advanced intelligence superior to humans, they should prove more successful in dominating the stellar expansion.

In H. G. Wells’s “The War of the Worlds”, had the Martians been machines, they would have succeeded in conquering Earth. Of course, some reasonable air filter technology would have worked too.

In Clarke’s 3001, humans hope to forestall any destruction by the monoliths/builders with Halman’s help inserting software “bombs” into the monolith system. There is a symmetry here if we humans have been similarly infected with destructive memes by other [machine] entities.

As an engineer, I can see the attractiveness of nurturing children from eggs with AGI machines — it is so efficient! And I am sure machine intelligence can be developed to implement this in some way. In practice, though, can it ever be tested in an ethical way? Personally, I don’t think it can ever be ethically tested. Solutions aren’t born perfect, they must be developed and tested and refined based on failure. Speculation is fun, but if anyone seriously tried to do this, my advice is: run away!

Ethics is indeed a big issue. The same applies to resurrecting Neanderthals (or other hominids) from DNA. Having said that, we do have orphanages that are hardly nurturing substitutes which provide a crack in those ethics, IMO. Under certain circumstances, artilects nurturing children might just be the least bad option.

If we use the Clarke story (The Songs of Distant Earth) as such an example, if the only way to preserve the human race is to send a seed ship to another human-habitable world, then that might well be considered the least bad option compared to total annihilation.

Nothing is set in stone, and our ethics will adapt to new situations.

Well, if the situation was dire, then perhaps. If you were sure the Earth was doomed, that could be one situation. Or if all the adults on a generation ship died, then the AI-controlled robots could try to tend to the babies, rather than letting them die.

I still don’t see how you could ethically test it. “Well, we had the AI robots raise six babies from birth to 24 months of age without human contact. In this test, all babies survived, and the mean psychological health score matched or exceeded the mean score for babies of equivalent ages raised in large institutional orphanages.”

In the proposed scenario of “machines nesting human eggs” there is no advantage and no reason to choose it: if a machine can do that job better than a human, then let the machines be the colonists and leave the eggs on Earth. Such a comic situation and such comic hesitations would be a good signal that it is time for human civilization to die.

This and the previous entry seem intricately linked – so my response is in part to both.

Some years ago, perhaps while one of my correspondents was suggesting that the answer to star flight was human longevity, I began mulling a different alternative: reducing human presence on the flight to a human essence, whatever that might be.

And then I started wondering how I got on that track. There must have been some science fiction antecedents, of course, and by now there are plenty. Part of the problem is determining not only who, but in which book or story.

Poul Anderson seemed to have influenced my thinking on this twice, at least. There was “The Enemy Stars” back about 1959 or so – which remained among my favorite stories. And then there was another approach to the problem in one of his books in the 1990s. (When I was an adolescent I read a lot of his stories, but looking at his bibliography, he seemed to write faster than I could read back then, when I actually consumed such stories avidly.) But to the point: in “The Enemy Stars”, people on the moon were matter-transferred to distant accelerating spaceships on interstellar missions, a la Star Trek but long range. And in “Harvest of Stars” (I think), the idea was that identities were “downloaded” to a computer for an interstellar trip. Talk about having to relate to the actual player!

Since the gram-mole is $6\times10^{23}$ particles and a kilogram-mole is $6\times10^{26}$, a precise package delivery of a 70 kg individual is a terrific delivery problem in terms of signal to noise over any distance, much MORE so over interstellar ones. Then the energy involved in transporting 70 kilograms or so of an individual to Alpha Centauri seems a “grave” matter as well. Early delivery increases costs. Late delivery reduces energy expenditure, but I don’t know what kind of insurance would cover the result.
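Both of these scale arguments can be put in numbers. The sketch below treats a 70 kg body as if it were water (about 6 g per mole of atoms) and picks a cruise speed of 0.1c; both are illustrative assumptions, not figures from the comment. It counts the atoms a matter transmitter would have to specify and computes the relativistic kinetic energy, $(\gamma - 1)mc^2$, of sending the body itself.

```python
AVOGADRO = 6.02214076e23   # particles per gram-mole
C_M_PER_S = 299_792_458.0  # speed of light

def atom_count(mass_kg: float, grams_per_mole_of_atoms: float) -> float:
    """Approximate number of atoms in mass_kg of material."""
    moles = mass_kg * 1000.0 / grams_per_mole_of_atoms
    return moles * AVOGADRO

def kinetic_energy_j(mass_kg: float, beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) m c^2 at speed beta * c."""
    gamma = 1.0 / (1.0 - beta ** 2) ** 0.5
    return (gamma - 1.0) * mass_kg * C_M_PER_S ** 2

BODY_KG = 70.0
# ASSUMPTION: body treated as water, H2O = 18 g/mol over 3 atoms -> 6 g per mole of atoms.
atoms = atom_count(BODY_KG, 6.0)
# ASSUMPTION: 0.1 c cruise speed, for illustration only.
energy = kinetic_energy_j(BODY_KG, 0.1)
print(f"atoms ~ {atoms:.1e}, kinetic energy ~ {energy:.1e} J")
```

The count comes out near $7\times10^{27}$ atoms, and the energy near $3\times10^{16}$ J for the cruise speed alone, before any propulsion inefficiency or braking at the destination.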

So the next idea, the AI approach, is either constructing an AI from scratch whose mission is to guide the spacecraft on an interminable mission, or else (as in the Poul Anderson story) conveying the personality of an earthly person in a download to greet inhabitants or enjoy the vistas of another star system. Message back to the stay-at-homes, if still alive: “Well, you just had to have been there.”

And in either case, the data download and the flop rates mentioned above, needed to effect anything like an intelligence, dwarf current data storage capabilities and architecture. Not as bad as the matter transport approach, but dire.

So what about downsizing to the human conscious essence?

Now if I trim my nails or comb my hair, I don’t feel a thing. If I go on a diet, I am likely to feel better overall. But these are only token efforts in the face of interstellar flight restrictions. You or I have got to reduce our mass by orders of magnitude to get on board with any technology we know of, and we have got to sit still for decades – at least – in some sort of torpor from which we can recover. Maybe if enough of us go together we can all share a biological regeneration system that rebuilds us at our destination given that the locality has the C, N, O, P, etc. required.

Sounds bad, but not worse than the other alternatives, not counting those that simply opt out of interstellar flight and visiting exoplanets – unless you have a good workaround for general relativity.

So what I am alluding to is consciousness itself. What makes the reader (you) and the writer of this email (me) conscious and aware? We see it in other individuals and even animals, but at some point it disappears from our perception by the time we reach primitive forms or grapes on vines. It has bounds, but we do not understand them. Yet transporting consciousness seems like the cheapest route to visiting another star system. Maybe it’s even been done – but yet how would we know? Could it even be proved?

For slack time waiting in parking lots at grocery stores or bus stops, I keep a copy of Roger Penrose’s “The Emperor’s New Mind” in the car, and some of the issues of consciousness and artificial intelligence come up there as well. I am not sure whether he is suggesting that consciousness is related to some sort of basic particle or whether he hasn’t decided, but it does appear as elusive as any question in physical science, and one that is ignored mostly for convenience’s sake. Artificial intelligence can mimic our consciousness in many ways, thanks to our own observations of our behavior and its rules, but we would tend to doubt the sincerity of a machine claiming to be hungry or lonely. Moreover, if it were programmed, we would fear that it might childishly violate a guideline to everyone’s undoing, expecting to get a reward (e.g., HAL).

Moving out of science fiction, I recall Pasternak’s Dr. Zhivago, where the protagonist explains death and consciousness as it arrives and then it is gone. OK, except that it seems to violate the principle of conservation we apply to energy, mass flow and momentum.

There. Now I feel better.

The mention of ambassador probes brings to mind the Peter Watts short story “Ambassador”, told from the viewpoint of such a “probe” (in this case, a product of bioengineering rather than a machine).

Yes, I was about to make a comment on this, as I view bioengineering as a more promising path for AI.

I do not think it will matter how many megaflops a computer has, because we do not really understand how a mind or intelligence works. Even relatively simple organisms appear to be able to do grand tasks in recognizing and remembering their surroundings for navigation, and social insects can identify the faces of others.

That is one ability we have managed, but the entire system is then dedicated to face recognition.

Wasps can also navigate through air in different weather conditions, compensate for wind, and know which enemies’ wings they’re supposed to bite off.

Our best effort with AI systems is very far from creating even a wasp mind.

Biology, on the other hand, might be able to give us a control system that not only does automatic repairs and maintenance but can also make smart decisions. Self-awareness appears to come quite early in some animals, even before the ability to count, so such a system will need to be deeply indoctrinated with the idea of what a grand adventure it is going to experience – and it will be!

You blithely write “… Are we in ‘Prime Directive’ territory here? Obviously we do not want to harm the local culture…” Speak for yourself! Perhaps you had better not bother going in the first place if you are going to take that wimpish attitude to the propagation of the human race.

A more realistic Prime Directive for interstellar colonists would be the Prime Directive of all living things we have ever encountered to date: SURVIVE to PROLIFERATE, and don’t accord any consideration whatsoever to any life forms outside your own family tree, unless they can be of practical use to you!

So we meet an intelligent alien race, and then what? Enslave them? Exterminate them? Have we really learned nothing? You would also have to be anti-METI, given that this philosophy would apply to any advanced ET we message, with dire consequences for Earth.

The laws of nature are independent of personal political orientation.

Real human motivation will always be as Thomas Goodey describes it; it will never match wishful-thinking dreams.

This flies in the face of our history. We kill less, we are more tolerant, we don’t have slavery, etc, etc. ‘Human motivations’ have been curbed by evolving cultural systems.

Thinking agents are no longer bound by pure biology. Just look around you for myriad examples.

I am sure that if we look NOW, not around our relatively safe neighborhood but at the whole planet Earth, the statistics will show that every one of your optimistic points is false.

There are still hundreds of thousands of people dying in wars on planet Earth, there is still slavery, and there is hunger.

The number of people killed in World War II (where very “civilized” nations were the main actors) exceeded all the war losses of earlier human history. And now our ability to kill is even greater than in the WWII period.

I don’t believe we actually require AI on level of human mind for explorer probes or “founder” type probes. Termites and ants are capable of complex functions and building structures without being self-aware.

Their level of intelligence would be quite sufficient for most types of missions.

As to colonizing other planets, a very old-fashioned idea, it seems a waste of time and resources when building artificial habitats is more efficient.

Terraforming takes too long and is prone to errors, and settling existing biospheres would ruin their value as natural bio labs.

I agree with you: we need a “clever” enough instrument that could navigate along a predefined path, collect information on the way and at the destination, and send this information back to Earth.

By the way, if this probe cannot communicate with Earth, there is no reason to build it and send it into space. If it cannot serve its creators, it should not be built; it will not help our civilization in any area.

I have to confess that sometimes these conversations actually give me chills. Imagine being so good, and at the top of your field, that you’re chosen as one of the programmers who will write the software that will actually be loaded aboard such an exciting endeavor as a starship. Or designing the hardware that would house the AI brain that will make the voyage. It’s absolutely amazing to think that someday people may create such a marvelous machine, and where it will go and what it will see.

As I said previously, I’m not much of a booster for the idea that the machine should actually be intelligent and self-aware. As support for that viewpoint, you should look at YouTube videos to see what’s actually behind the current so-called AI ‘neural networks’ that are all the rage at this time.

As best as I can tell, these neural networks work principally on the basis of ‘gradient descent’, as it is called, to achieve optimization in identifying a pattern. This should not be taken to mean a machine that is actually thinking, but rather one that is optimizing, sometimes with human input, toward a final desired goal.

Yet it is being touted as if it were a true thinking mechanism. As far as I can tell, and I’ll include a link below, the software being implemented today is rather ‘brittle’ in nature, in that it has no powers of extrapolation or leaps into the unknown to solve a problem it has not encountered before. I believe that is not necessarily a bad thing for a space probe, as you can possibly still devise some non-intelligent workarounds in your software. But I think the emphasis on intelligence in this particular case is highly overrated and might actually be a barrier to creating a practical and useful computerized program for such a mission.

“Atari master: New AI smashes Google DeepMind in video game challenge”

https://techxplore.com/news/2019-01-atari-master-ai-google-deepmind.html
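The ‘descent’ optimization mentioned in this comment is usually called gradient descent. As a minimal, hedged sketch (the toy data, learning rate and step count below are arbitrary illustrative choices, not anything from the article or the linked story), here is gradient descent fitting a single slope parameter. The program never “understands” the line; it just nudges the parameter downhill on an error measure until the error is small.

```python
# Minimal illustration of gradient descent: fit y = w * x to a few points
# by repeatedly stepping w against the gradient of the mean squared error.
# Data, learning rate (lr) and step count are arbitrary illustrative choices.
def fit_slope(xs, ys, lr=0.01, steps=1000):
    w = 0.0  # initial guess for the slope
    n = len(xs)
    for _ in range(steps):
        # Derivative of mean((w*x - y)^2) with respect to w is mean(2*x*(w*x - y)).
        grad = sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad  # take a small step "downhill"
    return w

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x
print(fit_slope(xs, ys))  # converges toward 2.0
```

Training a neural network applies the same kind of loop to millions of parameters at once, which illustrates the commenter’s point: the machinery optimizes toward a target rather than reasoning about it.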

Yes, we should invent new mechanisms (automata) that can improve our lives.

No one can prove that real AI will make our lives better. I am not talking about modern neural network “calculators”, which marketers love to call AI :-).

There is a probability of the same order that real AI could kill our civilization.

The only part of this discussion that I can really get my head around is the idea of sending an advanced AI to a nearby star system. The AI is limited in mass to whatever we can send out there at whatever date you choose. We will also remain limited to receiving data from this probe at the speed of light. I can imagine a fairly competent AI (not at human levels of real understanding, but the best we can manage) sending us data about its target, and that’s about it. The rest is interesting but not realistic within 50 years. It’s a bit like listening to people such as Ray Kurzweil speak about the coming (according to them) information discontinuity, which seemingly recedes at a slightly faster rate than time passes. He really does want to live a very long time, and if only he can live long enough, we will reach the information discontinuity. Hmm.

Awareness depends on the reflection of consciousness in an appropriate reflecting medium such as a mind. The latter can be multiple recursive and self-referring hierarchies of computational elements. In the absence of a reflecting medium, consciousness is non-transactable, as in what the non-theistic Eastern religious traditions describe as the Void or Emptiness.

With closed systems such as many games, look at the latest iteration of artificial intelligence, AlphaZero: “the updated AlphaZero algorithm is identical in three challenging games: chess, shogi, and go. This version of AlphaZero was able to beat the top computer players of all three games after just a few hours of self-training, starting from just the basic rules of the games.”

With the ability to self-augment their hardware and access to open systems, it may be well-nigh impossible to corral them once they are let loose. And the more one wants them to do, the greater the leeway and abilities they have to be endowed with. There is nothing special about human wetware that makes it essential for cognition or intelligence; both could be replicated in hardware.

It turns out that the Founders (especially those carrying DNA) and Ambassadors are roughly the Sowers and Keepers of the Catalysis project, only more complex, with a permanent AI and an autonomous power supply. Or, conversely, the Catalysis probes are Ambassadors, radically simplified from complex technical systems into devices with AI reduced to the functional state of viruses or spores (the basic data set for the AI is present, but it can only develop in a suitable environment).

The main problem of the project is well reflected in a number of films on space themes, starting with the pseudo-documentary “Apollo-11”, “The Age of Pioneers” and “Salyut-7” and ending with the science-fiction film “Passengers”. In any complex technical system in space, sooner or later a series of failures and malfunctions occurs, which then grows like an avalanche. In these films, the problem is solved by the presence of people with will and self-awareness. How an AI would solve this problem of self-diagnosis and self-repair, and how long it would remain functional, is unclear.

Intelligent, capable machine probes will have fewer failure modes than crewed ones (no life support or environmental failures). If the machine has cognitive capacity and capabilities that match human level, then there is no reason to believe that it will fare worse than crewed missions.

As a thought experiment, consider the length of time the Voyager craft have been operating. Now graft on some basic AI to take care of onboard functions, make decisions on energy use and the functioning of sensors, and communicate important new data to Earth, all without Earth control. Could we ensure such a long-lived crewed vehicle even today? The answer is assuredly not.

Alex, I absolutely do not agree with any of Dmitry Novoseltsev’s arguments, but at the same time I must admit that the functions you list in your comment do not require any AI; a well-programmed automatic mechanism will do those tasks perfectly :-)

Here there is a bifurcation. The probe is either capable of repairing and modifying itself or not. If not, it wears out in full accordance with the second law of thermodynamics and eventually becomes inoperable. If it is capable (which includes having access to the necessary materials and energy, both quite problematic in interstellar space, especially for a probe with solar panels like the one shown in the figure), how far will these changes go, especially for a self-developing AI, and will its goals and functions not change dramatically by the end of the flight? (In late Soviet times there was a popular joke about the failed test of a new American “smart bomb”: it turned out to be so smart that it categorically refused to be dropped from the plane.)

Another important note: all known types of intelligence (human and highly developed animals) absolutely require social interaction, without which intelligence loses adequate perception. If this is a common property of all intellects, including AI, it will be necessary to launch not single probes but compact groups of them, within communication range of one another. Within such groups, different interactions may develop, including antagonism.

Life imitating art? – Dark Star

And the 1985 cartoon about a military drone with AI that changes its priorities.

https://www.youtube.com/watch?v=dxItaRbC84w

I am sure that “mortality” is a very good feature; nothing will last forever, and even our Universe is supposed to have an End.

Why we should dare to build something that can last forever, I cannot even imagine.

So speculation about the need to build swarms of clever self-replicating probes is closer to religious worship, or some kind of dream of Utopia, than to an important requirement for future spacecraft.

I’m not so keen on Passengers to be honest. I felt it really rushed a resolution to the central act of violence in the movie to get a happy ending. It would have been a better film if the film-makers had had the courage to explore the human ramifications of its own setup.

It’s a very interesting discussion. I prefer to think in more realistic, short-term ways. Progress in the exploration of nearby star systems will involve making the attempt with fewer resources under more stressful conditions. We are coming to a time when it will become painfully obvious that we have squandered some of our opportunities. Environmentalism is still thought of as a cute but slightly oddball concern in many circles, but there is growing awareness and unease about what is coming. More and more extreme weather events and eroding coastlines will mean that more and more of our resources are spent repairing the damage and handling the wave of refugees fleeing the most affected areas of Earth. I would think the future will include some form of space exploration, but it will be driven at least in part by decreased funding and an emphasis on reducing the size and cost of the probes sent out. This fits nicely with the idea of small, AI-controlled probes. I hope we learn, before it’s too late, the infinite value of a home world to which we are perfectly adapted and which we nonetheless seem determined to force into a more hostile climate regime.

Very little of this otherwise interesting discussion has been devoted to actual safeguards for future AIs.

Isaac Asimov suggested a way to start with his famous Three Laws of Robotics, but that was a long, long time ago… and as far as I know, SF has since mostly described either how an AI can go seriously wrong, or how it can become “man’s best friend”, doggy-style, without ever contributing any boring details…

In order to even talk intelligently about this problem, we must all do our homework and learn much, much more about the nuts and bolts of cybersecurity, computer viruses and tons of other stuff…

But until such an informed discussion has covered all angles and reached a clear conclusion about WHAT exactly would be needed for any runaway monster AI to be caged, chained or destroyed with 100% certainty, any responsible person must reach the same conclusion as Stephen Hawking.

I must agree that your conclusions are closer to reality than the many dreams we meet here in the comments (though there are AI-pessimistic opinions in the comments too).

Personally, I cannot understand what goals are dreamed of by those who propose sending into space swarms of cybernetic super-gods that can last forever… Why those “creatures” would be good for Homo sapiens is a big secret to me, as big a secret as the answer to the following question:

How can a mighty, autonomous, immortal mechanism that does not communicate with its creators improve our (human) lives and civilization?

I would suggest those AIs known as corporations are a more dangerous threat than independent machine AIs. We already know how hard they are to kill and the damage they can do to society and the biosphere.

What is your 100% guarantee of dispatching these entities?

Fault, “corporations” are not AI; they are communities of very intelligent human beings, i.e. natural I. And, yes, human beings are very dangerous creatures; some Homo sapiens behave exactly like cannibals, but always explain their horrible deeds by good intentions…

So there is a very “good” chance that our creation, AI, will reproduce all the “negative” traits of its creators, but to a degree many orders of magnitude more dangerous.

At the risk of introducing some levity in this discussion about AI:

https://www.gocomics.com/scenes-from-a-multiverse/2019/02/04

I don’t want to give the impression that I’m being picky.

On the contrary, I am very interested in solving the problem, because I have great plans for AI probes, though in the more distant future: https://i4is.org/wp-content/uploads/2017/12/Principium19.pdf (pp. 27-35).

And they will have to remain operational for a very long time.

Consider the problem in general.

Any typical complex system left to itself degrades; this is the second law of thermodynamics. This is the fate of all modern space probes.

Complex nonequilibrium dissipative (Prigogine) systems (for example, biological ones) can indefinitely maintain and even increase their complexity. But for this they need a constant flow of energy and mass from outside. For interstellar probes of the modern type with solar panels, as presented in the article, this is a big problem. Solar panels are inoperable in interstellar space, the lifetime of radioisotope generators is not long enough, and the interstellar medium is extremely poor in matter, especially heavy elements; in addition, modern technologies cannot collect individual atoms and molecules, especially neutral ones.
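To put a rough number on the radioisotope point, here is a hedged sketch. It assumes a Pu-238 heat source (half-life about 87.7 years, the isotope used in RTGs such as those on the Voyagers) and ignores thermocouple degradation, which in practice makes electrical output fall faster still. It computes only the fraction of the initial decay heat left after a given cruise time.

```python
# Fraction of initial RTG decay heat remaining after a long cruise.
# Assumption: Pu-238 heat source with a half-life of about 87.7 years.
# Thermocouple degradation (which lowers electrical output further) is ignored.
PU238_HALF_LIFE_YEARS = 87.7

def remaining_power_fraction(years: float, half_life: float = PU238_HALF_LIFE_YEARS) -> float:
    """Fraction of the initial decay heat left after `years` of radioactive decay."""
    return 0.5 ** (years / half_life)

for years in (10, 50, 100, 500, 1000):
    print(f"{years:>5} years: {remaining_power_fraction(years):.4f} of initial heat")
```

Under these assumptions a bit under half of the heat remains after a century, and well under 0.1% after a millennium, which supports the commenter’s point about multi-century interstellar cruises.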

I will also make a strong assumption that intelligence of any kind also needs a constant flow of information from outside. As numerous sensory-deprivation experiments on humans and animals have shown, a lack of external information leads to the loss of adequate perception, as intelligence begins to process its own endogenous random noise as signals (hence hallucinations, etc.).

The problem could be partly solved by putting the AI probe into “hibernation” during the flight, as is now done in many cases. But in this state it is no different from a modern probe with the simplest automation, and it likewise degrades in flight, unable to diagnose and repair the damage.

One solution could be to embed the AI in a virtual reality for much of the time, only interrupting it when a real situation based on sensor readings emerges. This also leads us into P K Dick territory where reality is hidden from the observer and is translated into events in an unreal world.

Good points about the second law of thermodynamics, but:

A space probe (AI or not) has to function during the period in which it can communicate with Earth. Communication ability is limited by distance and by changes in our civilization that will make further communication with the probe impossible (obsolescence of the probe’s technology, degradation or advance of our civilization and technology, etc.).

There is an optimum period of probe durability; it should be neither shorter nor longer than that.

In that case, if we limit the probes’ range to distances allowing direct two-way communication within a period comparable to the lifetime of 1-2 generations, it will be a very small region, one already accessible to high-quality observation by large space telescopes, and the results of using probes there will be generally insignificant.

I imagined AI probes as a way for their creators to project themselves beyond the physical limits available to them, over long distances in space and time.

However, by using the gravitational lensing effect of stars, about which much has been written in recent years, the region of reliable signal reception from probes can be significantly expanded.

The communication can be simplex, i.e. one-way, from the probe to Earth. The figure of 1-2 generations is open to discussion and should probably be longer, but not longer than the point at which we lose the technology (the ability and desire to receive and decode this signal).

If the creators do not consider those limits, the proposed probe will be more an art project (like the Colossus of Rhodes) than a scientific one. Homo sapiens would spend effort and resources to build something it does not need and could not use: a very stupid deed for beings that claim to be Intelligent. It is arrogance…

I wonder why anyone dreams of embedding AI inside such an uncontrolled, non-communicating probe. With exactly the same effect and feedback, we could send “the Statue of Liberty” into space…

As I understand it, the Founders in this article are probes intended mainly not for research but for space colonization, i.e. for spreading their creators, or forms derived from them, in one form or another.

The theme is quite well known in SF literature; one of the latest detailed treatments is “Ark 47 Libra” by Boris Stern (https://trv-science.ru/product/kovcheg-bum/).

This is not a scientific project in the strict sense; it is a “reproductive” project. Feedback is desirable but, in general, secondary (after all, we may hope that our great-great-great-grandchildren will live to receive it, but we need not factor this into our current plans).

Those plans are very ethically problematic.

The whole situation (a burning desire to leave as many traces in space as possible) reminds me of my dog’s behavior. When I walk my dog, he intentionally leaves his “art objects” to mark the place “I was there” in every area he likes, and as a dog owner (and the more intelligent and responsible being) I must clean up those “traces” (“monuments”) and throw them in the trash bin.

I can very well imagine a sci-fi situation in which more responsible and intelligent ET beings follow our probes, collecting them and throwing this junk into some space “trash bin” (a black hole) to avoid space pollution…

I wouldn’t agree here.

All the monuments of ancient history, all these pyramids and obelisks, are in fact such probes: Ambassadors, directed not through space but through time. Their creators, within their mythological worldview, imagined the addressees only vaguely, in the form of various gods; however, as it turned out, this was not a problem for the recipients.

Moreover, the waste products of past people and their pets that you mention are also of some scientific interest (in general, all archaeological cultural layers are, in fact, a large dump). A modern cultural field has been built on this foundation.

I think the same is true on a large scale.

As A. D. Panov has shown quite convincingly in a number of publications, for space civilizations “exohumanitarian” information is the only valuable, scarce and irreplaceable resource.

The problem of keeping an AI “in good shape” could be solved by placing on the probe not one AI but several independent AIs, as an analog of a crew, and organizing “social interaction” between them. After all, modern people are able to communicate for a long time on social networks about anything, without sources of new information.

But this may create a new problem, one characteristic of human crews: the possibility of conflict between AIs, for example over resources. This is especially likely during a long interstellar flight in energy-saving mode.

…But there may be a new problem…

Such an AI-equipped probe that does not communicate with its creators is a good example of “Schrödinger’s cat”, ruled by the laws of quantum mechanics… none of its creators can know its current state: healthy, insane, alive or dead… it will be a combination of all these states at once…