Recently we’ve talked about ‘standard candles,’ objects in the sky whose total luminosity we know because of some innate characteristic. Consider the Type Ia supernova, produced in a binary star system as material from a red giant falls onto a white dwarf, pushing the smaller star to a critical mass limit at which it explodes. These explosions reach roughly the same peak brightness, so comparing the brightness we observe with that known peak lets astronomers calculate their distance.

Even better known are Cepheid variables, stars whose luminosity is tied to their pulsation period, so measuring the period tells us the true luminosity. Comparing the brightness we actually see against that true luminosity then lets us calculate the distance to the star.
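The arithmetic behind any standard candle is the distance modulus, m − M = 5 log10(d / 10 pc), where m is the apparent magnitude and M the absolute magnitude. Below is a minimal sketch that ignores dust extinction. The Cepheid period-luminosity coefficients in the example call are hypothetical placeholders (real calibrations depend on the photometric band), and the Type Ia peak of M ≈ −19.3 is an approximate textbook value.

```python
import math

def distance_parsecs(apparent_mag: float, absolute_mag: float) -> float:
    """Invert the distance modulus m - M = 5*log10(d / 10 pc); extinction is ignored."""
    return 10 ** ((apparent_mag - absolute_mag + 5) / 5)

def cepheid_absolute_mag(period_days: float, slope: float, zero_point: float) -> float:
    """Generic period-luminosity (Leavitt law) form: M = slope * log10(P) + zero_point.

    slope and zero_point are placeholders for a real calibration.
    """
    return slope * math.log10(period_days) + zero_point

# By definition, an object whose apparent and absolute magnitudes match lies at 10 pc.
print(distance_parsecs(5.0, 5.0))  # 10.0

# Hypothetical Cepheid: 10-day period, illustrative coefficients, observed at m = 20.
M = cepheid_absolute_mag(10.0, slope=-2.43, zero_point=-1.62)
print(f"Cepheid: M = {M:.2f}, d ≈ {distance_parsecs(20.0, M):,.0f} pc")

# Type Ia supernova peaking near M ≈ -19.3 (approximate), observed at m = 15.
print(f"SN Ia: d ≈ {distance_parsecs(15.0, -19.3) / 1e6:.0f} Mpc")
```

Each step of 5 magnitudes in apparent brightness corresponds to a factor of 10 in distance, which is the inverse-square law written in astronomers' logarithmic units.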

This is helpful stuff, and Edwin Hubble used Cepheids in making the calculations that helped him figure out the distance between the Milky Way and Andromeda, which in turn put us on the road to understanding that Andromeda was not a nebula but a galaxy. Hubble’s early distance estimates were low, but we now know that Andromeda is 2.5 million light years away.

I love distance comparisons and just ran across this one in Richard Gott’s The Cosmic Web: “If our galaxy were the size of a standard dinner plate (10 inches across), the Andromeda Galaxy (M31) would be another dinner plate 21 feet away.”
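Gott's scale model is easy to check. The sketch below assumes a Milky Way diameter of roughly 100,000 light-years (an assumption; the quote does not state which figure Gott used) together with the 2.5 million light-year distance given above.

```python
# Assumption (not from the quoted text): Milky Way diameter of about 100,000 light-years.
MILKY_WAY_DIAMETER_LY = 100_000
ANDROMEDA_DISTANCE_LY = 2.5e6   # distance quoted in the article
PLATE_DIAMETER_IN = 10          # Gott's "standard dinner plate"

# Scale factor: how many inches of model correspond to one light-year.
inches_per_ly = PLATE_DIAMETER_IN / MILKY_WAY_DIAMETER_LY
separation_in = ANDROMEDA_DISTANCE_LY * inches_per_ly

print(f"{separation_in:.0f} in = {separation_in / 12:.1f} ft")  # prints: 250 in = 20.8 ft
```

Andromeda sits about 25 galactic diameters away, which rounds to Gott's 21 feet.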

Galaxies of hundreds of billions of stars each, reduced to the size of dinner plates and a scant 21 feet apart… Hubble would go on to study galactic redshifts en route to determining that the universe is expanding. He would produce the Hubble Constant as a measure of the rate of expansion, and that controversial figure takes us into the realm of today’s article.

For we’re moving into the exciting world of gravitational wave astronomy, and a paper in Physical Review Letters now tells us that a new standard candle is emerging. Using it, we may be able to refine the value of the Hubble Constant (H0), the present rate of expansion of the cosmos. This would be helpful indeed because right now, the value of the constant is not absolutely pinned down. Hubble’s initial take was on the high side, and controversy has continued as different methodologies yield different values for H0.

Hiranya Peiris (University College London) is a co-author of the paper describing this work:

“The Hubble Constant is one of the most important numbers in cosmology because it is essential for estimating the curvature of space and the age of the universe, as well as exploring its fate.

“We can measure the Hubble Constant by using two methods – one observing Cepheid stars and supernovae in the local universe, and a second using measurements of cosmic background radiation from the early universe – but these methods don’t give the same values, which means our standard cosmological model might be flawed.”
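Why the age of the universe depends on H0: the inverse, 1/H0 (the "Hubble time"), sets the basic timescale of the expansion. The sketch below converts H0 from km/s/Mpc into a time. The two H0 values are approximate representative figures for the early-universe and local methods, used only for illustration, and 1/H0 is a rough age scale rather than the model-dependent age itself.

```python
# Unit conversions (standard values, rounded)
KM_PER_MPC = 3.0857e19        # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in one billion Julian years

def hubble_time_gyr(h0_km_s_per_mpc: float) -> float:
    """Return the Hubble time 1/H0 in Gyr for H0 given in km/s/Mpc."""
    h0_per_second = h0_km_s_per_mpc / KM_PER_MPC  # km/s/Mpc -> 1/s
    return 1.0 / h0_per_second / SECONDS_PER_GYR

# Approximate representative values for the two methods (illustrative only).
for label, h0 in (("early universe (CMB)", 67.4), ("local (Cepheids + supernovae)", 73.0)):
    print(f"{label}: H0 = {h0} km/s/Mpc -> 1/H0 ≈ {hubble_time_gyr(h0):.1f} Gyr")
```

A gap of under 10% in H0 becomes a gap of roughly a billion years in this timescale.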

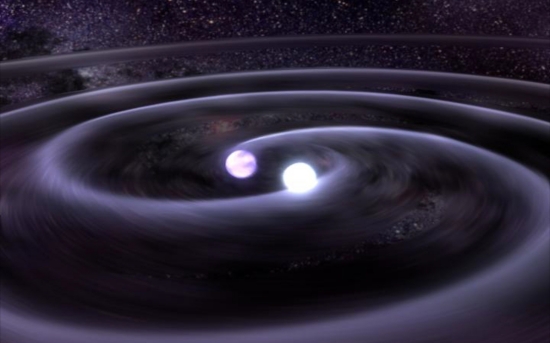

Binary neutron stars appear to offer a solution. As they spiral towards each other before colliding, they produce powerful gravitational waves, ripples in spacetime that can be detected by gravitational wave observatories like the Laser Interferometer Gravitational-Wave Observatory (LIGO) and Virgo. The gravitational wave signal gives the distance to the system, while the light detected from the merger gives its redshift, and hence the velocity at which it is receding. Put the two together and you have a measurement of H0.
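In its simplest form, a standard siren estimate is Hubble's law, H0 = v / d, with v from the light and d from the gravitational waves. A minimal sketch with first-order error propagation, assuming independent errors; the event numbers are hypothetical, and real analyses work with full probability distributions (including the distance's dependence on the binary's orbital inclination) rather than simple error bars.

```python
import math

def siren_h0(velocity_km_s: float, velocity_err: float,
             distance_mpc: float, distance_err: float) -> tuple[float, float]:
    """H0 = v / d in km/s/Mpc; fractional errors on v and d are added in quadrature."""
    h0 = velocity_km_s / distance_mpc
    frac_err = math.hypot(velocity_err / velocity_km_s, distance_err / distance_mpc)
    return h0, h0 * frac_err

# Hypothetical event: velocity from the host's redshift, distance from the waveform.
h0, err = siren_h0(3000.0, 150.0, 42.0, 4.0)
print(f"H0 ≈ {h0:.1f} ± {err:.1f} km/s/Mpc")
```

With a single event the distance error dominates, which is why many mergers are needed.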

Image: Artist’s vision of the death spiral of the remarkable J0806 system. Credit: NASA/Tod Strohmayer (GSFC)/Dana Berry (Chandra X-Ray Observatory).

We’re closing in on a refined Hubble Constant. Researchers on the case, led by Stephen Feeney (Center for Computational Astrophysics at the Flatiron Institute, NY), think that observations of no more than 50 binary neutron star mergers should produce sufficient data to pin down H0. We’ll then have an independent measurement of this critical figure. And, says Feeney, “[w]e should be able to detect enough mergers to answer this question within 5-10 years.”
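Why a few dozen events can be enough: if each merger gives an independent H0 estimate with the same fractional uncertainty, the statistical error of the combined result shrinks roughly as 1/√N. The 15% per-event figure below is a hypothetical assumption, not a number from Feeney et al., and the sketch ignores systematic errors entirely.

```python
import math

def combined_fractional_error(per_event_error: float, n_events: int) -> float:
    """Statistical error on H0 from N independent, equally precise events (no systematics)."""
    return per_event_error / math.sqrt(n_events)

PER_EVENT_ERROR = 0.15  # hypothetical 15% uncertainty on H0 from a single merger

for n in (1, 10, 50):
    pct = 100 * combined_fractional_error(PER_EVENT_ERROR, n)
    print(f"{n:>2} events -> ~{pct:.1f}% uncertainty on H0")
```

Under these assumptions, 50 events reach about 2%, small compared with the gap of several km/s/Mpc between the competing values.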

Nailing down the Hubble Constant will refine our estimates of the curvature of space and the age of the universe. Just a few years ago, who would have thought we’d be doing this by using neutron star interactions as what the researchers now delightfully call ‘standard sirens,’ allowing measurements that should resolve the H0 discrepancy once and for all?

A final impulse takes me back to Hubble himself. The man had a bit of the poet in him, as witness his view of our galaxy from the outside: “Our stellar system is a swarm of stars isolated in space. It drifts through the universe as a swarm of bees drifts through the summer air.” The line is from The Realm of the Nebulae (1936).

The paper is Feeney et al., “Prospects for Resolving the Hubble Constant Tension with Standard Sirens,” Physical Review Letters 122 (14 February 2019), 061105.

If the gravitational wave measurement confirms one of the two existing methods, it will raise the question of why the “incorrect” method gave the answer it did. That should be interesting in itself.

If the new method gives a different answer, then we are left with three conflicting methods to explain rather than two, and a lot of head scratching.

Could part of the problem be calling the Hubble “constant” a constant? When measuring the expansion rate over different distances, the values may differ simply because the rate itself is different. If the Hubble expansion rate has changed over cosmic history, then you will get different values depending on how deep you look.

One might expect that dark energy is increasing the expansion rate. If so, then approaches that measure using shorter distances and hence more recent epochs should have larger values than those that can measure over deeper time. However, such a simple explanation can hardly have been missed by the cosmologists, so the issue is clearly a lot deeper than that.

Hubble’s Law

I’m sure you’re right, Alex. I asked a rather dumb question to point out confusing wording. I’m frustrated by the continuing use of obsolete terminology in astronomy and now cosmology. We now know that the rate of universal expansion is increasing, so the Hubble factor H(t) is proven not to be a constant; it has varied over time. That’s what Dark Energy is all about. So why keep calling it a constant if it isn’t one???

History calls it a constant. It is only constant on a hypersurface of equal age (time since the Big Bang); that is, at any given cosmic time it has the same value everywhere. It of course decreases with time since the expansion is slowing. That is why distance (aka time) appears in the definition.
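How H varies with epoch can be made concrete in the standard flat ΛCDM model, where H(z) = H0 √(Ωm(1+z)³ + ΩΛ). The sketch below neglects radiation and uses illustrative round parameters (H0 = 70, Ωm = 0.3, ΩΛ = 0.7), which are assumptions rather than fitted values. Because higher redshift means an earlier epoch, H rising with z means H falls with time, even in a universe whose expansion is accelerating; with a pure cosmological constant, H levels off toward H0 √ΩΛ.

```python
import math

def hubble_parameter(z: float, h0: float = 70.0,
                     omega_m: float = 0.3, omega_lambda: float = 0.7) -> float:
    """H(z) in km/s/Mpc for a flat universe of matter plus a cosmological constant.

    Radiation is neglected; default parameters are illustrative round numbers.
    """
    return h0 * math.sqrt(omega_m * (1 + z) ** 3 + omega_lambda)

# Listed from early (high z) to late (z = 0): H falls as the universe ages.
for z in (2.0, 1.0, 0.5, 0.0):
    print(f"z = {z}: H ≈ {hubble_parameter(z):.1f} km/s/Mpc")
```

This is why "how deep you look" matters: each observation samples H at its own epoch, and a model like this one is needed to translate any of them back to the present-day value H0.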

Neutron star mergers work as standard candles because the mass range of neutron stars is very narrow (±10%). When the light of the merger can be directly detected, or the home galaxy can be identified, the redshift in combination with the luminosity of the gravitational wave event can tighten the error bars on the Hubble constant (or variable, if you prefer).

Much of the difference between methods is merely the large error bars and possible systematic errors in our understanding of the stellar processes and events being used as standard candles. Ultimately they’ll lead to a more precise determination of the constant. Along the way we may also gain a better understanding of these various processes and events.
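Whether two measurements genuinely disagree depends on their error bars. A common rough gauge is the difference divided by the combined uncertainty, assuming independent Gaussian errors. The central values below are hypothetical and are held fixed while the error bars shrink.

```python
import math

def tension_sigma(h_a: float, err_a: float, h_b: float, err_b: float) -> float:
    """Difference between two independent H0 values in units of their combined 1-sigma error."""
    return abs(h_a - h_b) / math.sqrt(err_a ** 2 + err_b ** 2)

# Hypothetical central values (km/s/Mpc), with shrinking error bars.
for err_a, err_b in ((3.0, 4.0), (1.0, 2.0), (0.5, 1.0)):
    sigma = tension_sigma(67.0, err_a, 73.0, err_b)
    print(f"errors ±{err_a} and ±{err_b}: {sigma:.1f} sigma apart")
```

The same 6 km/s/Mpc gap looks unremarkable with large error bars and becomes a serious discrepancy once they tighten, unless the central values converge as the precision improves.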

Precision is important since it’ll guide us to a better understanding of how the universe is evolving, and perhaps answer many outstanding questions.

>It is only constant on a hypersurface of equal age

That’s the theoretical prediction from GR and a Big Bang model. It needs experimental verification. Spatial inhomogeneity might be a slow gradient over large distances (as some inconclusive observations of the inhomogeneity of Planck’s constant indicate). Or it might depend on regional mass density (meaning a difference between intra-galactic and inter-galactic observations). Or it might be homogeneous. Or it might be something else.

I know, but it’s an excellent first order (and second order) approximation. A net gradient is still unconfirmed and that’s where the use of neutron star mergers can help to shed some light on the matter, by narrowing those error bars enough to help decide which candidate models are a better fit.

>It of course decreases with time since the expansion is slowing.

Er, don’t you mean increasing? The rate of expansion is increasing, thus the need for Dark Energy.

Expansion rate is what I meant to type.

If dark energy (cosmological constant) is non-zero it will gradually come to dominate the expansion rate and we get the so-called Big Rip. That is, the rate of the expansion rate (next higher derivative) is positive.

More info here:

https://en.wikipedia.org/wiki/RX_J0806.3%2B1527

Triff ..

One question that would interest me greatly is what effect new matter in the universe, with slower-running clocks, would have on neutron star mergers, and especially on the resulting gravitational waves. Since neutron stars form from larger, short-lived stars, if a galaxy cluster formed from newer matter in the universe, these mergers would also be happening there, and the effect of their slower-running clocks on redshifts, cosmological constants and gravity should be measurable.

What new matter?

From the cores of active galaxies, where it forms the quasars. You have to read his books.

These three, in this order, if you really want to understand:

Quasars, Redshifts and Controversies (1987)

Seeing Red: Redshifts, Cosmology and Academic Science (1998)

Catalogue of Discordant Redshift Associations (2003)

Arp? Falsified.

Big Bang falsified.

Recent Quasar Observations Support Lots of Mini-Bangs Instead of One Big Bang.

Chris Reeve writes:

Wired Magazine is reporting that astronomers have since 2014 witnessed up to 100 possible instances of quasars transforming into galaxies over very short timespans, but the article leaves no hint of the trouble this spells for the Big Bang cosmology. The article begins:

Stephanie Lamassa did a double take. She was staring at two images on her computer screen, both of the same object — except they looked nothing alike.

The first image, captured in 2000 with the Sloan Digital Sky Survey, resembled a classic quasar: an extremely bright and distant object powered by a ravenous supermassive black hole in the center of a galaxy. It was blue, with broad peaks of light. But the second image, measured in 2010, was one-tenth its former brightness and did not exhibit those same peaks.

The quasar seemed to have vanished, leaving just another galaxy.

That had to be impossible, she thought. Although quasars turn off, transitioning into mere galaxies, the process should take 10,000 years or more. This quasar appeared to have shut down in less than 10 years — a cosmic eyeblink.

What the Wired article fails to mention is that the short timespans vindicate the quasar ejection model proposed by Edwin Hubble’s assistant, Halton Arp, who insisted that these objects must be considerably closer than the extreme distances inferred by their redshifts:

The conclusion was very, very strong just from looking at this picture that these objects had been ejected from the central galaxy, and that they were initially at high redshift, and the redshift decayed as time went on. And therefore, we were looking at a physics that was operating in the universe in which matter was born with low mass and very high redshift, and it matured and evolved into our present form, that we were seeing the birth and evolution of galaxies in the universe.

Arp’s attempts to publish his quasar ejection model famously led to his removal from the world’s largest optical telescope at that time — the 200-inch Palomar. He decided to resign from his permanent position at the Carnegie Institution of Washington on the principle of “whether scientists could follow new lines of investigation, and follow up … on evidence which apparently contradicted the current theorems and the current paradigms.”

The fact that these quasar changes appear to occur over just months in some cases should raise questions about whether or not the objects are truly at the vast distances and scales implied by their redshift-inferred distances. However, the Wired Magazine article makes no mention at all of Arp, the implications for Big Bang’s redshift assumption, nor of the dire consequences for the expanding universe paradigm.


November 26th, 2018 7:04AM

https://m.slashdot.org/submission/8884908

Additional Arp vindications, to date

by Chris Reeve on Sunday November 25, 2018 @09:49PM

A list of vindications for Halton Arp:

1. Alignment of quasar minor axes [eso.org] (vindication of Arp ejection model)

“The first odd thing we noticed was that some of the quasars’ rotation axes were aligned with each other — despite the fact that these quasars are separated by billions of light-years”

2. Numerous apparent interactions of objects of wildly different redshifts (not possible with Big Bang, vindication of Arp)

For example, NGC 7603 [youtu.be], NGC 4319 [youtu.be] and NGC 3628 [youtu.be], just to name three. There are many, many more at this point.

3. Numerous instances where high-redshift quasars appear aligned with the axes of low-redshift “foreground” galaxies (statistics indicate this occurs far too often for a strict recession velocity interpretation of redshift)

Quasars, Redshifts and Controversies Halton Arp (1987)

“To summarize this initial chapter, I would emphasize that with the known densities with which quasars of different apparent brightness are distributed over the sky, one can compute what are the chances of finding by accident a quasar at a certain distance from a galaxy (see Appendix to this chapter). When this probability is low, finding a second or third quasar within this distance is the product of these two or three improbabilities, or very much lower. It is perhaps difficult to appreciate immediately just how unlikely it is to encounter quasars this close by chance, but when galaxies with two or three quasars as close as we have shown here are encountered one needs only a few cases to establish beyond doubt that the associations cannot be accidental.”

Quasars, Redshifts and Controversies Halton Arp (1987)

“Appendix to Chapter 1 — Probabilities of Associations

The basic quantity needed to compute the probability of a quasar falling within any given distance from a point on the sky is just the average density of that kind of quasar per unit area on the sky. For example, if a quasar of 20th apparent magnitude falls 60 arcsec away from a galaxy, we simply say that within this radius of a galaxy there is a circular area of 0.0009 square degrees. The average density of quasars from the brightest down to 20th apparent magnitude is about 6 to 10 per square degree. Therefore, the most generous probability, on average, for finding one of these quasars in our small circle is about 0.001 x 10 = 0.01, that is, a chance of about one in a hundred.

The crucial quantity is the observed average density. The comparison of what various observers have measured for this quantity is given in Arp 1983, page 504 (see following list of references). Overall, the various densities measured agree fairly well, certainly to within 140%. For the kinds of quasars considered in these first few chapters this gives probabilities that cannot be significantly questioned. Of course, on the cosmological assumption, quasars of various redshifts must project on the sky rather uniformly. Therefore adherents of this viewpoint cannot object to taking an average background density, as observed, to compute probabilities of chance occurrences …
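Arp's appendix arithmetic can be reproduced with a Poisson model of a uniform quasar background, which is the assumption he says adherents of the cosmological interpretation should accept. The sketch below uses his 60 arcsec radius and the upper density of 10 quasars per square degree; the probabilities apply to a single galaxy chosen in advance.

```python
import math

def circle_area_sq_deg(radius_arcsec: float) -> float:
    """Area of a small circle on the sky (small-angle approximation), in square degrees."""
    radius_deg = radius_arcsec / 3600.0
    return math.pi * radius_deg ** 2

def prob_at_least(k: int, density_per_sq_deg: float, area_sq_deg: float) -> float:
    """Poisson probability of finding k or more unrelated background objects in the area."""
    lam = density_per_sq_deg * area_sq_deg  # expected number of chance quasars
    return 1.0 - sum(math.exp(-lam) * lam ** i / math.factorial(i) for i in range(k))

area = circle_area_sq_deg(60.0)  # about 0.00087 square degrees, Arp's "0.0009"
for k in (1, 2, 3):
    print(f"P(>= {k} quasars within 60 arcsec) ≈ {prob_at_least(k, 10.0, area):.1e}")
```

For one quasar this gives slightly under one in a hundred, matching Arp's rounded figure. For two or three quasars the probability falls roughly as λ²/2 and λ³/6 (λ being the expected count), which is the "product of improbabilities" argument in the chapter summary.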

4. Intervening galaxies are 4 times more prevalent along lines of sight to GRBs than along lines of sight to quasars [archive.org] (shouldn’t happen if quasars are at extreme distances)

5. Quasars seemingly observed in front of foreground galaxies [ucsd.edu] (has led to mainstream invocation of transparent sightlines through galactic bulges)

6. A quasar that exhibits 10x superluminal motions at inferred distance [discordancy.report] (this is merely the worst case, but the most common examples of this are 2x superluminal; requires invocation of Relativity illusion)

7. A quasar group so large that it spans 5% of the known universe at inferred distance [sciencedaily.com] (not expected from Big Bang theory because it’s a violation of the Cosmological Principle that says that the universe is uniform)

8. No observation of time dilation in quasar variations [phys.org] (no explanation has been accepted, to my knowledge)

9. Quasars have been shown to exhibit proper motion [harvard.edu] (should not be possible at extreme inferred distances, and was once considered a rule for differentiating galactic from extragalactic objects)

10. Quasar clustering [thunderbolts.info] (not expected from Big Bang theory because it’s a violation of the Cosmological Principle that says that the universe is uniform)

11. The Burbidges, Karlsson, the Barnothys, Depaquit, Pecker and Vigier have all agreed with Halton Arp that there are preferred values for redshift, and numerous investigators have attempted to disprove this only to find the effect in their own datasets (Disproves the Big Bang’s recession velocity interpretation for redshift) [wordpress.com]

Spooky Alignment of Quasars Across Billions of Light-years.

VLT reveals alignments between supermassive black hole axes and large-scale structure.

https://www.eso.org/public/news/eso1438/

Time to get back on topic here, which in this case means the uses of gravitational wave astronomy in giving us another cosmic distance marker, etc.

Imagine my surprise that the linked article says nothing that your correspondent claims. No need to reply. I’ll leave you with your faith.

The velocity of light is affected by the medium through which it travels. Could it be that besides three spatial and one temporal dimension, there may be other dimensions intimately associated with spacetime but mostly outside our ken, yet with various effects on electromagnetic radiation and gravity?

If it’s outside our ken that means that there is no measurable effect. If there is an effect it is within our ken. It is therefore perhaps no surprise that string theories, with their many dimensions, have no experimental verification.

Also, velocity of light in a medium is a classical wave description of EM radiation. When we switch to quanta, a photon does not really experience any “medium”; there is an emission event and an absorption event that are connected by what we call a photon. Those interactions en masse sum to the refraction phenomenon. Sort of. QM is weird and I’m no expert.

mostly outside our ken…

How does this help? “Mostly” still signifies that there is something that is measured since there is “some” within our ken.

is the Hubble “constant” a constant?

No. It is a measure of the expansion rate of the universe. This rate is increasing. If it wasn’t, there would be no reason to suspect the existence of Dark Energy.

Likely an old idea, but if gravitational waves with a high frequency can be artificially generated without undue amounts of energy (ignore the hand wavium), then perhaps they can be collimated and used for interstellar communications. I don’t know if physics allows a collimated gravitational beam but, if so and if it can be done, it would seem to be a good means of communication, one that would keep lower-tech intelligences from crashing the galactic internet with unending cat videos, selfies and other signs of low intelligence (BTW, I like cat videos).

Who knows? Perhaps such a gravitational beam is aimed at our solar system, with the sender patiently waiting for us to develop to the point of receiving it and answering back, conceptually like Arthur C. Clarke’s monolith.

Indeed. As we’ve sometimes speculated here (and I think I first heard the idea through Greg Benford), it could be that a sufficiently advanced civilization wouldn’t even bother with cultures that couldn’t figure out how to use gravitational waves as a way to communicate! Electromagnetic stuff is so 20th Century…

Of course, it would be Greg Benford. Now that I think about it, he may have been an author of a science fiction book that described a device that generated and harnessed gravitational waves via miniature orbiting black holes. It was a good read, but a long time ago, and my memory may be off somewhat. I make a point of reading every one of his books.

Collimation (with hand waving, as you say) isn’t really the problem. The problem is detection because the interaction is so dreadfully weak. Gravitation is, after all, by many orders of magnitude the weakest of the fundamental forces. The luminosity of gravitational wave astrophysical events is extremely high. So we have these enormous and extraordinarily sensitive receivers that can’t hear someone (in effect) screaming into them.
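To see how weak an artificial source would be, one can apply the standard quadrupole estimate for a spinning rigid body, h0 = 4π² G I ε f² / (c⁴ r), where f is the gravitational wave frequency (twice the spin rate) and ε the body's ellipticity. The source in the sketch is hypothetical: a 1-tonne, 10 m rod spun once per second (so I = ML²/12 and ε ≈ 1), observed from one light-year, which is well outside its roughly 1.5 × 10⁸ m wavelength.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8            # speed of light, m/s
LIGHT_YEAR_M = 9.461e15

def rotor_strain(mass_kg: float, length_m: float, spin_hz: float, distance_m: float) -> float:
    """Order-of-magnitude GW strain from a thin rod spinning about its centre.

    Uses h0 = 4 pi^2 G I eps f_gw^2 / (c^4 r) with I = M L^2 / 12, eps ~ 1, f_gw = 2 * spin.
    """
    inertia = mass_kg * length_m ** 2 / 12.0
    f_gw = 2.0 * spin_hz
    ellipticity = 1.0
    return 4 * math.pi ** 2 * G * inertia * ellipticity * f_gw ** 2 / (C ** 4 * distance_m)

h = rotor_strain(1000.0, 10.0, 1.0, LIGHT_YEAR_M)
print(f"h ≈ {h:.1e}")
```

The result is more than thirty orders of magnitude below the strains of order 10⁻²¹ seen from merging black holes, which is the point being made: the c⁴ in the denominator means only astrophysically enormous masses moving at relativistic speeds produce detectable signals.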