I’m staying in Apollo mode this morning because after Friday’s piece about the Lunar Module Simulator, Al Jackson forwarded two further anecdotes about his work on it that mesh with the discussion. Al also reports that those interested in learning more about the LMS can go to the official Lunar Module familiarization manual, which is available here. I’ve also inserted some background on the LMS, with my comments in italics.

by Al Jackson

A couple of funny anecdotes about the Lunar Module Simulator.

It took some effort to get the LMS up and running … we could do a little simulation when it was first installed, but I had a very irregular schedule. I always worked LMS crew training from 8 am to noon, but for most of 1967, because the crew did not train after 5 pm, I came in many a night with the Singer engineers to test the LMS, sometimes 6 pm to midnight, sometimes midnight to 7 am, and yeah, I had to stick around for the 8 am to noon shift, and then go home and sleep.

There was a lot of weekend work too; it was 24-7-365 for about two years. (We did take Thanksgiving and Christmas off.)

The most impressive glitch I remember was after Apollo 9 flew. Apollo 9 was Earth orbit only. We immediately then started doing lunar operations, especially ascents (I don’t think we had all the software and visuals for descent yet). All this was mostly digital to analog conversion. The equations of motion and the out-the-window visuals were being driven by a large bank of digital computers, most programmed in FORTRAN.

The reset point was the LM on the Lunar surface. So the set-up was that you looked out the LM cockpit window at a lunar surface (we had a better scene later), and when you hit the button to start the engine, the LM window showed the lunar surface slowly fade away and all went gray. The printout said our altitude was negative. We were sinking into the Moon!

So the guys fixed a Boolean in the software to keep us on the surface. Ok, then when one launched, that is turned on the ascent engine, we had engine on for 460 seconds. Ascent looked good, but look out the window and slowly the lunar surface came up and hit you in the face! The LM was doing a ballistic arc!

It took a day to figure it out. The solution was easy – looking at the code we saw that someone had forgotten to replace the central mass in the equations of motion, EOM. We were using the Earth’s mass, not the Moon’s!
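To get a feel for the size of that error, here is a minimal sketch in Python. It is not the LMS code; the constant names and the `gravity_accel` helper are illustrative assumptions, and the values are the standard published gravitational parameters and mean lunar radius. It compares the gravitational acceleration at the lunar surface computed with the Moon's central mass against the same calculation with the Earth's mass left in by mistake.

```python
# Illustrative only: hypothetical names, not taken from the LMS software.
# Gravitational parameters GM in m^3/s^2, mean lunar radius in m.
GM_MOON = 4.9048695e12
GM_EARTH = 3.986004418e14
R_MOON = 1.7374e6


def gravity_accel(gm: float, radius: float) -> float:
    """Magnitude of point-mass gravitational acceleration, GM / r^2."""
    return gm / radius**2


# Correct central body versus the wrong one, both evaluated at the lunar surface.
g_correct = gravity_accel(GM_MOON, R_MOON)
g_wrong = gravity_accel(GM_EARTH, R_MOON)
ratio = g_wrong / g_correct

print(f"Moon's mass:  {g_correct:.2f} m/s^2")
print(f"Earth's mass: {g_wrong:.1f} m/s^2")
print(f"Ratio:        {ratio:.1f}")
```

With the Earth's mass in the equations of motion, the pull at the lunar surface comes out roughly 81 times too strong, which is consistent with an ascent that turns into a ballistic arc back to the surface.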

A second anecdote. They finally installed the high-fidelity Lunar Surface sim support: a plaster model of the landing site with a 6-degree-of-freedom TV camera rig. The camera, responding to the LMS EOM, fed video to the cockpit windows. So, of course, the technicians glued a plastic bug to that model surface. When the crew made a landing, if facing the right way, a Godzilla-sized bug appeared in the distance! The crews of course loved it, sim management not so much.

The Lunar Surface Sim

The Lunar Module Simulators in Houston and Cape Kennedy were produced by the Link Group of Singer General Precision Systems, under contract to Grumman Aircraft Engineering Corporation, with visual display units provided by Farrand Optical Company. From an article Al forwarded to me this morning titled “The Lunar Module Simulator,” by Malcolm Brown and John Waters, this description:

The Lunar Module Simulator was designed to train flight crews for the lunar landing mission. It is a complex consisting of an instructor-operator station, controlling computers, digital conversion electronics, external visual display equipment, and a fixed-base crew station. Actual flights are simulated through computer control of spacecraft systems and mission elements which are modeled by real-time digital programs. Dynamic out-the-window scenes are provided through an infinity-optics display system during the simulated flights.

A five-ton system of lenses, mirrors and mounts made up the visual display system, which was attached to the LMS crew station. The heart of the simulator was a crew station that resembled in all details the actual spacecraft. Here is a photo Al sent showing the Mission Effects Projector. Another subsystem of the LMS visual suite, it used a film projection device for the early descent phase until switching to an optical unit that moved over a model of the Moon’s surface:

Here’s the document’s description of this critical LMS component:

From approximately 8,000 feet almost to touchdown, the simulated views of the lunar surface are generated by the landing and ascent model, and are transmitted to the visual display system through high resolution television. The images are available to either forward window of the LMS crew station. The landing and ascent model, shown in Figure 18, consists of an optical probe located on a movable carriage and an overhead model of the lunar surface. This last is an accurate reproduction of one of the chosen lunar landing sites, constructed by the U.S. Army Topographical Command. Simulated sunlight on the lunar surface, which produces shadows used for visual evaluation of the landing site, comes from a special collimated light source.

Incidentally, in the incident with the bug that Al mentions above, this same document records that Armstrong, seeing what appeared to be a 200-foot tall horsefly in the distance after landing on the Moon, announced there would be no EVA, whereupon he was asked by the engineers simulating Mission Control why he would cancel, since large horseflies were common in Texas, where Armstrong lived. Neil’s response: He was not concerned as much about the 200-ft horsefly as he was about the 10,000 foot man who had placed it there.

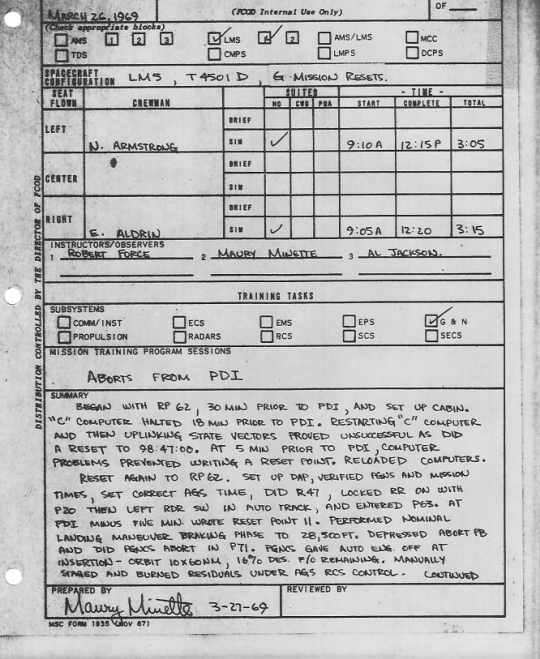

Finally, Al sends along several LMS training reports. Here’s one I found particularly interesting, for obvious reasons.

Have you seen the efforts to run the real LEM Apollo Guidance Computer software on real LEM hardware? One of my fave YouTubers is @CuriousMarc, who has been deeply involved, along with a young FPGA engineer from SpaceX and Marc’s usual cast of brilliant pals.

https://www.curiousmarc.com/computing/apollo-guidance-computer

Marc also has a bunch of other interesting projects that are worth a look.

Thanks for a fascinating blog, Paul.

Glad to have you here, Alan!

That is really impressive work. Woven memory cores! The LM computer simulator is fascinating. I cannot even imagine how the astronauts managed to enter the correct codes while under the stress of a landing.

Today, I imagine an autopilot could simply accept a selected landing target and land the ship itself, with the pilot only taking control if there was an emergency.

“I cannot even imagine how the astronauts managed to enter the correct codes while under the stress of a landing.”

Correct me if I’m wrong, but wasn’t the computer ideally supposed to take the spacecraft down all the way to the Moon, with the exception that the last few feet would have been under the control of the lunar module command pilot?

As far as I understand it, the only reason that Armstrong did what he did in the actual mission was that the pre-targeted area was so dense with boulders that he couldn’t actually allow the computer to put the craft down where they had wanted it to originally go. That’s why there was that tense situation I thought that caused him to spend longer than he originally wanted to bring it down.

There was no absolute auto-land capability of the LM; the crew always took manual control at the end.

If you want to read a technical description it is here:

https://www.hq.nasa.gov/alsj/nasa-tnd-6846pt.1.pdf

More notes, and there may be more.

I see I have used myself a lot in this story, but there were about a dozen Lunar Module instructors and that many more Command Module instructors… about a dozen NASA software engineers and maybe two dozen technicians (those guys with screwdrivers who were highly skilled). About 30 or so Link (soon Singer Link) engineers worked on the LM computer simulator software and hardware stuff.

Robert Force (primary GN&C), me (Abort Guidance System) and Maury Minette (environmental support system) were prime instructors. Two sets of the same, sort of 2nd and 3rd shift guys, so to speak. Other important peripheral people. The CMS actually had a few more people than the LMS.

“Chasing the Moon” starts on PBS tonight and I have no idea how much about the training simulators will be included.

That gives me a chance to shout out one of the most neglected groups in the whole Apollo program. You are going to see a bunch of Mission Operations people, guys in the mission control room. However, the group that impressed me the most in Apollo days was the Mission Planning and Analysis Division, MPAD. Kind of back-room guys, mostly off-to-the-side guys, who busted their butts to do all the engineering physics planning: ascent trajectories, navigation procedures, lunar abort planning, descent and launch from the lunar surface, flight planning, and planning of the worldwide tracking network… about a dozen other things. These were the number-crunching cowboys and cowgirls who did some very hard astrodynamics. MPAD never gets the respect it deserved.

I looked through what you said, Al Johnson, but the more I read the less I admit to understanding. And I’m an engineer!

You should actually write a book about what you were involved in and put it out there, but please, for the audience’s sake, please define your terms in great detail, or otherwise everyone will be like me and find themselves lost. Example: So the guys fixed a Boolean in the software … OK, I have heard about Boolean algebra, but what explicitly is a Boolean?

After the first successful landing on the Moon, was there an attempt to incorporate into the software the films that were taken in flight, both of the rendezvous (of Apollo 11) that had to be performed and of the actual landing, so as to give other LEM pilots actual scenes that could allow for greater fidelity in the subsequent actual landings?

Also, returning to the story about the LEM door not being locked behind them as Buzz Aldrin descended to the lunar surface, could you relate to us what would’ve happened if the unthinkable had occurred and the door had been ACCIDENTALLY locked?

Would that have actually been an irreversible mistake? I genuinely would like to know.

Finally, I looked at what the gentleman provided on the AGC computer, but to be honest, the videos he pointed to were out of my league (I’m not an electrical engineer/computer scientist type of guy). Is there anyone out there who can give a non-complicated idea of the general principles behind the AGC logic circuits and the operation of said computer?

> Charley July 8, 2019, 16:55
>
> I looked through what you said, Al Johnson, but the more I read the less I admit to understanding. And I’m an engineer!
>
> You should actually write a book about what you were involved in and put it out there, but please, for the audience’s sake, please define your terms in great detail, or otherwise everyone will be like me and find themselves lost.
>
> Example: “So the guys fixed a _Boolean_ in the software … ”
>
> …
>
> OK, I have heard about Boolean algebra, but what explicitly is a Boolean?

Charley, you may try this Wikipedia link:

https://en.wikipedia.org/wiki/Boolean_data_type

I believe it’s the most accepted meaning of “BOOLEAN” (with no qualifiers) inside the community of (today’s) engineers.

Greetings,

Zaphod Beeblebrox, (retired!) engineer.

> So the guys fixed a Boolean in the software …

> OK, I have heard about Boolean algebra, but what explicitly is a Boolean?

I guess what this means is that the CPU logic of the AGC was built almost exclusively from (3-input) NOR gates (about 5000 of them). This is possible because the NOR gate (like the NAND gate) happens to be universal, i.e. all possible Boolean functions can be realized as a feed-forward network of NOR gates (plus some constant inputs of 0s).

x NOR y = NOT (x OR y), truth table is

0 NOR 0 = 1

1 NOR 0 = 0 NOR 1 = 1 NOR 1 = 0

By standardizing on a single gate, some problems which appeared on older systems using 2 different types of gates could be avoided.

ignatius
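The universality claim in the comment above can be checked in a few lines. This is a minimal sketch in Python, not a model of the actual AGC circuitry; the function names `nor`, `not_`, `or_` and `and_` are illustrative assumptions. It builds NOT, OR and AND from nothing but NOR and verifies them against Python's own bitwise operators for every input combination.

```python
from itertools import product


def nor(*inputs: int) -> int:
    """NOR gate: output is 1 only when every input is 0."""
    return 0 if any(inputs) else 1


def not_(x: int) -> int:
    # Tying both inputs together turns NOR into an inverter.
    return nor(x, x)


def or_(x: int, y: int) -> int:
    # OR is just NOR followed by an inverter.
    return not_(nor(x, y))


def and_(x: int, y: int) -> int:
    # De Morgan: x AND y = NOT(NOT x OR NOT y) = NOR(NOT x, NOT y).
    return nor(not_(x), not_(y))


# Exhaustively compare against the built-in bitwise operators.
for x, y in product((0, 1), repeat=2):
    assert or_(x, y) == (x | y)
    assert and_(x, y) == (x & y)
    assert not_(x) == 1 - x

print("NOT, OR and AND all reproduced using NOR gates only")
```

Since NOT, OR and AND together are enough to express any Boolean function, reproducing them from NOR alone is what makes a single gate type sufficient.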

Al Jackson

NASA has intrigued me since I was a little boy, and I truly hope the missions go forward for every man and woman who wants to go to space and beyond. Keep these postings going, and I hope to see you again soon.

Extra credit quiz…what is the origin of portmanteau FORTRAN?

Formula Translation.

I actually learned a very formative version of FORTRAN as a freshman at university in 1959. Later I took a course in FORTRAN IV in 1963.

The only programming on the LMS that was not FORTRAN was for the Primary Guidance and Navigation Computer. It was a special setup by MIT in machine language for the two simulators. I am glad I never had to deal with it.

My subsystem, the Abort Guidance System, on the LMS, was an emulation in FORTRAN written by two Singer-Link engineers. (One of them a gorgeous lady!)

I am, to this day, a pretty good FORTRAN 77 programmer.

A dirty little secret around physics numerical people is that FORTRAN is still preferred for number crunching. There is a ton of elegant numerical FORTRAN legacy code that solves nonlinear differential equations with a speed like no other.

The reason for the modern reliance on Fortran has nothing to do with speed or functionality. It has zero advantage and in fact many comparative deficits.

Rather it has to do with predictability. Modern languages tend to change and improve with the weather. For most applications that’s desirable, but not for scientific rigor. There are versions of Fortran that are strenuously tested against large test suites to ensure that revisions don’t break anything, even so much as a different least significant digit in a double precision calculation.

I remember some years ago when I was doing a lot of DSP work using Python that the Linux build process for numpy downloaded a Fortran compiler for the included FFTPACK module. It was quite amusing.

@Ron S. , Al Jackson – yeah, I have a tremendous sneaking suspicion that a lot of these new programming languages such as Python, and Linux as well as many, many others have more to do with somebody wanting to “get their name out there” rather than have a software which is actually moving forward the discipline of computer science. They actually shouldn’t be doing that as it creates unnecessary layers of confusion rather than clarification and advancing functionality.

One thing that I do wonder about, if somebody could explain it to me, is the interconnection between the “rope core” memory and the use of IC chips at that time. Was the rope core strictly a memory set of hardware, or did it serve a computing function as well?

There were no IC memory chips at the time, though there were logic gates that could be wired into memory cells. But this was extremely expensive and costly in terms of size and power.

The predominant memory at the time was ferrite core formed from a matrix of tiny ferrite beads, each threaded by 3 wires. Perhaps that’s the meaning of “roped” memories.

Very effective for the time and was to be found on mini-computers and mainframes right through the 70s. Manufacturing was eventually outsourced to poorly paid workers in Mexico and elsewhere since it was very labor intensive.

There were a variety of other mass memory technologies at the time that came and went and are now largely forgotten. Bubble memory anyone?

A note, Charlie.

Linux, strictly speaking, is an operating system.

I learned a number of those, first for UNIVAC machines and then for VAX machines. I found UNIX pretty easy to use, tho with NASA we switched pretty quickly to Linux (lots of similarities to UNIX). Linux is a gem, except for the accursed VI editor! (Well, it was better than punching cards or the old line editors.) The last 12 years I worked, I did validation of simulation software for the Shuttle and the ISS. I didn’t have to know C++, thank god! I did write Fortran to validate the math models. One blessing of Linux is that one could invoke X Windows; it’s just like using Windows or suchlike on a Mac. Nice editors within X Windows. Funny, the software engineers I used to work with in the Engineering Lab would sometimes have four VI windows open on two side-by-side monitor screens, with an uncountable number of lines of C++ code displayed. JEDI-level coders just amaze me, but then they were all about 30 years younger than me! … Here are the JSC engineers I last worked with……..

https://www.facebook.com/groups/43573068438/

Ron:

http://moreisdifferent.com/2015/07/16/why-physicsts-still-use-fortran/

Sorry, Al, but there is a great deal of wrong in that article. Just because an article is on the internet doesn’t make it true. It would take a long reply and veer so far off topic Paul might have something to say about it. Not to mention that I’d rather not go there. It’s a rat hole of great depth.

The comments for that post are more interesting than the post. The “language wars” never end. Legacy code for specialized tasks is probably the best reason to use a language, especially if it can be interoperable with a more commonly used language. Using the “right tool for the job” is also very important. Again, interoperability allows using the best tool for each part of a program. Which languages can work that way may influence the languages used.

Vernor Vinge made an interesting observation in a novel that one does not want to rewrite code from older languages to new ones. This results in code forming layers, with newer languages calling older ones in a stack.

Speed is still an issue but we seem to have split into 2 paths. Some applications, like climate and weather forecasting are done on supercomputers. Meanwhile, huge machine learning tasks are run on server farms, often in “the cloud”.

Al, I worked for Link for many years BUT after Apollo… What year are you referring to the “lady programmer”? Maybe I knew her! Thanks for the history. rich

Wernher von Braun once came by the Lunar Module Simulator one morning, during a session. Someone from public affairs was showing him around, and he talked to the crew in the cockpit for about one minute. I got to shake his hand; he may have said something to us but I can’t remember it. After he left I remembered I had my copy of The Mars Project in my office. I could have had him autograph it! I was too gob-smacked to think of that at the time.

So it goes.

Regarding FORTRAN, which I learned and used in the late ’60s and later, I’ve always heard it stands for Formula Translator, and Wikipedia agrees; it was developed first by IBM. I learned it on a GE computer at a Naval facility; punch cards and all. I watched the first part of “Chasing the Moon”; thanks for mentioning that. It’s interesting (and frustrating) how much politics has affected the space program, but not surprising.

Wolfram wrote Mathematica in Fortran so it’s still hidden there.

Hi Greg,

Mathematica was written to be in a form similar to the way one writes math on a page. I don’t think they quite succeeded; still, I found Mathematica easy to learn and use. Wolfram includes a copy with a Raspberry Pi setup, which is nice because that software is quite expensive.

Funny how Mathcad and MATLAB eventually trumped Mathematica in sales… I have always wondered if GNU Octave really works as well.

A brief comparison between MATLAB and Octave can be found here: MATLAB Programming/Differences between Octave and MATLAB

I don’t have much experience of either, but you cannot beat the price of Octave. Then there is the Julia language that wants to compete in that space, offering better performance.

I am not particularly enthused by MATLAB but I can tell you that among professional engineers building the chips and firmware we all rely on you can often find an almost religious attachment to it. I suspect this is due to a combination of good marketing and excellent support that goes along with the high price. Craftsmen also become attached to their tools.

When I was choosing development software for a project some years ago I chose not to go with MATLAB even though many of the experienced hands we were looking to hire were wary of alternatives. The dilemma was solved when the project was cancelled.

Didn’t there used to be a dedicated chip design S/W package (the name escapes me) that was used (circa 1990s)? If so, did MATLAB replace it?

There is advice that the best tools are the ones that the users are familiar and comfortable with. Unless there are strong reasons to learn a new tool, or language, it pays to stick with what your team knows.

MATLAB wasn’t used to design the hardware as such. Nowadays many chips are full of software (firmware) and processors rather than relying solely on gate logic. This allows them to be made more sophisticated, reliable and even upgradable over the internet. Modern digital appliances have a surprising number of processors inside even if they’re almost entirely invisible to the user.

Regarding tools, true professionals don’t fall into ruts. They learn and adapt or become obsolete as fast as the technology they work with.

I was one of the Singer engineers that Al Jackson mentioned in his article about the LMS, and it brought back many memories. The LMS simulation was written in Honeywell DDP-224 machine language. “Programmers” had to convert our flow charts into code for us “Engineers.” We always worked in pairs. We started using Fortran when we converted the LMS to the CPES, a testbed Shuttle sim. A Boolean was a variable that was either true or false represented as 0 or 1. An example might be “On the lunar surface?” is either true or false. I remember the incident where that boolean did not get set and we sunk into the moon. But I do not remember the gorgeous lady working on LMS during Apollo. Maybe Al Jackson can remind me!
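The “On the lunar surface?” Boolean described above can be illustrated with a toy model. This is a hypothetical sketch in Python, not the LMS code (which, per the comment above, was DDP-224 machine language); the names `step` and `simulate`, the one-dimensional dynamics, the time step and the rounded value of lunar gravity are all assumptions made for illustration. It shows how a surface-contact flag that never gets set lets the integrated altitude go negative.

```python
MOON_G = 1.62  # approximate lunar surface gravity, m/s^2 (rounded)


def step(altitude, velocity, thrust_accel, on_surface, dt=0.1):
    """Advance a 1-D vertical model by one time step.

    Returns the new (altitude, velocity, on_surface) triple.
    """
    accel = thrust_accel - MOON_G
    if on_surface and accel <= 0.0:
        # The ground supports the vehicle until thrust exceeds gravity.
        return 0.0, 0.0, True
    velocity += accel * dt
    altitude += velocity * dt
    return altitude, velocity, False


def simulate(on_surface, thrust_accel=0.0, steps=50):
    """Run the model from rest at zero altitude and return the final altitude."""
    altitude, velocity = 0.0, 0.0
    for _ in range(steps):
        altitude, velocity, on_surface = step(
            altitude, velocity, thrust_accel, on_surface
        )
    return altitude


print("flag set, engine off:  ", simulate(on_surface=True))
print("flag unset, engine off:", simulate(on_surface=False))
print("flag set, engine on:   ", simulate(on_surface=True, thrust_accel=3.0))
```

With the flag set, the vehicle sits at zero altitude until thrust exceeds gravity. With the flag left unset, nothing holds the vehicle up and the altitude drifts negative, which is the “sinking into the Moon” behavior from the anecdote.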