Astronomy relies on so-called ‘standard candles’ to make crucial measurements of distance. Cepheid variables, perhaps the most famous stars in this category, were examined by Henrietta Swan Leavitt in 1908 as part of her study of variable stars in the Magellanic Clouds, which revealed the relationship between this type of star’s period and luminosity. Edwin Hubble would use distance calculations based on this relationship to estimate how far what was then called the ‘Andromeda Nebula’ lay from our galaxy, revealing the true nature of the ‘nebula.’

In recent times, astronomers have used type Ia supernovae in much the same way: comparing a source’s intrinsic brightness with how bright it appears in the sky yields its distance. The most commonly described type Ia model involves a binary system in which one of the stars is a white dwarf, and astronomers have assumed that this category of supernova reaches a consistent peak luminosity that can be used to measure interstellar and intergalactic distances.
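The comparison of intrinsic and apparent brightness is usually expressed through the distance modulus, m - M = 5 log10(d / 10 pc). Here is a minimal Python sketch of that inversion. The peak absolute magnitude of about -19.3 for a type Ia supernova is an assumed, commonly quoted round figure rather than a number from the paper, the apparent magnitude in the example is hypothetical, and the function ignores dust extinction and the corrections needed at high redshift.

```python
# Assumed peak absolute magnitude of a "standard" type Ia supernova.
# About -19.3 is a commonly quoted round figure; treat it as illustrative.
M_IA_PEAK = -19.3


def distance_parsecs(apparent_mag: float, absolute_mag: float) -> float:
    """Invert the distance modulus m - M = 5 * log10(d / 10 pc).

    Ignores dust extinction and the corrections needed at high redshift.
    """
    modulus = apparent_mag - absolute_mag
    return 10 ** (modulus / 5 + 1)


# A type Ia supernova that peaks at apparent magnitude 15.7 (hypothetical)
d = distance_parsecs(15.7, M_IA_PEAK)
print(f"{d / 1e6:.1f} Mpc")  # about 100 Mpc
```

Every 5 magnitudes of difference between apparent and absolute brightness corresponds to a factor of 10 in distance, which is why an error in the assumed intrinsic brightness feeds directly into the distance.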

It was through the study of type Ia supernovae that the idea of dark energy arose, to explain the apparent acceleration of the universe’s expansion. These supernovae are also central to our methods for measuring the Hubble constant, which gauges the current expansion rate of the cosmos.

Given the importance of standard candles to astronomy, we have to get them right. Now we have new work out of the Max Planck Institute for Astronomy (MPIA) in Heidelberg. A team led by Maria Bergemann calls our assumptions about these supernovae into question, and that could force a reassessment of the rate of cosmic expansion. At issue: are all type Ia supernovae the same?

Since 2005, Bergemann’s work on stellar atmospheres has focused on new models for examining the spectral lines observed there, the crucial measurements that yield a star’s temperature, surface pressure and chemical composition. Computer simulations of convection within a star, and of the interactions between its plasma and its radiation, have been producing and reinforcing so-called non-LTE models, which drop the assumption of local thermodynamic equilibrium (LTE). These models open new ways to derive chemical abundances, and they alter our previous findings for some elements.

The team at MPIA has zeroed in on the element manganese, using observational data in the near-ultraviolet and extending the analysis beyond single stars to the combined light of the many stars in a stellar cluster, which makes it possible to study other galaxies. It takes a supernova explosion to produce manganese, and different types of supernova produce iron and manganese in different ratios. Thus a massive star going supernova (a ‘core-collapse supernova’) produces manganese and iron in a different ratio than a type Ia supernova does.

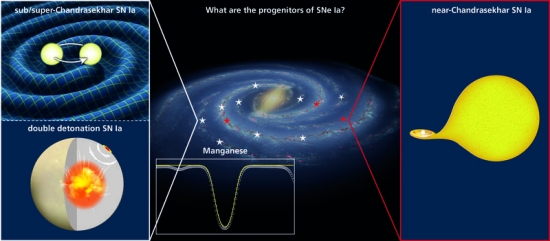

Image: By examining the abundance of the element manganese, a group of astronomers has revised our best estimates for the processes behind supernovae of type Ia. Credit: R. Hurt/Caltech-JPL, Composition: MPIA graphics department.

Working with a core of 42 stars within the Milky Way, the team has essentially been reconstructing the evolution of iron and manganese as produced through type Ia supernova explosions. The researchers used iron abundance as an indicator of each star’s age relative to the others; these findings allow them to track the history of manganese in the Milky Way. What they are uncovering is that the ratio of manganese to iron has been constant over the age of our galaxy. The same constant ratio between manganese and iron is found in other galaxies of the Local Group, emerging as what appears to be a universal chemical constant.

This result differs from earlier findings. Previous manganese measurements used the older LTE models, which assume that stars are perfect spheres, with pressure and gravitational force in equilibrium. Such work helped reinforce the idea that type Ia supernovae most often occur when a white dwarf draws material from a giant companion. The data in Bergemann’s work, analyzed with non-LTE (non-local thermodynamic equilibrium) models, are drawn from ESO’s Very Large Telescope and the Keck Observatory, and a different conclusion emerges about how type Ia supernovae occur.

The assumption has been that these supernovae happen when a white dwarf orbiting a giant star pulls hydrogen onto its own surface and becomes unstable on reaching the limiting mass discovered by Subrahmanyan Chandrasekhar in 1930 (the “Chandrasekhar limit”). Because of this limit, the exploding star has the same total mass from one type Ia supernova to the next, which sets the brightness of the supernova and produces our ‘standard candle.’ The 2011 Nobel Prize in Physics awarded to Saul Perlmutter, Brian Schmidt, and Adam Riess grew out of using type Ia supernovae as distance markers: their readings showed that the expansion of the universe is accelerating, and from that we get ‘dark energy.’
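The limiting mass itself follows from fundamental constants. The sketch below evaluates the textbook expression for an idealized, non-rotating star supported by fully relativistic degenerate electrons, M_Ch = (ω3 √(3π) / 2) (ħc / G)^(3/2) / (μe m_H)^2. The constants are approximate CODATA/IAU values, the proton mass stands in for the mass per nucleon, and real white dwarfs (rotation, composition, Coulomb corrections) differ somewhat from this ideal, so read the result as an estimate.

```python
import math

# Approximate physical constants in SI units
HBAR = 1.054571817e-34      # reduced Planck constant, J s
C = 2.99792458e8            # speed of light, m/s
G = 6.67430e-11             # gravitational constant, m^3 kg^-1 s^-2
M_NUCLEON = 1.67262192e-27  # proton mass, kg, used as the mass per nucleon
M_SUN = 1.98847e30          # solar mass, kg

# Dimensionless constant from the n = 3 Lane-Emden (polytrope) solution
OMEGA_3 = 2.018236


def chandrasekhar_mass(mu_e: float = 2.0) -> float:
    """Chandrasekhar limit in solar masses for an idealized white dwarf.

    mu_e is the mean molecular weight per electron: about 2 for
    carbon-oxygen matter (two nucleons per electron).
    """
    prefactor = OMEGA_3 * math.sqrt(3 * math.pi) / 2
    mass_kg = prefactor * (HBAR * C / G) ** 1.5 / (mu_e * M_NUCLEON) ** 2
    return mass_kg / M_SUN


print(f"{chandrasekhar_mass():.2f} solar masses")
```

The result lands near the familiar figure of roughly 1.4 solar masses. Because the limit depends only on constants and composition, every explosion triggered at that mass starts from essentially the same fuel, which is the physical basis for the standard candle assumption.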

But the work of Bergemann and team shows that other ways of producing a type Ia supernova may better fit the manganese/iron results. These mechanisms may look the same as the white dwarf/red giant scenario, but because they operate differently, their brightness varies. Two white dwarfs may orbit each other and merge, with a resulting explosion; or, as matter accretes onto a white dwarf, a detonation in the accreted layer can trigger a second explosion in the carbon-oxygen core (a ‘double detonation’). In both cases, we are dealing with a different scenario than the standard type Ia.

The problem: these alternative scenarios do not necessarily follow the standard candle model. Double detonation explosions do not require a star to reach the Chandrasekhar mass limit, and explosions below this limit will not be as bright as the standard type Ia, so their intrinsic brightness is not the well-defined quantity a distance measurement needs. Moreover, the constant ratio of manganese to iron that the researchers have found implies that non-standard type Ia supernovae are not the exception but the rule: as many as three out of four type Ia supernovae may be of this sort.
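To see why a fainter-than-assumed explosion matters, note that the inferred distance scales as 10^(Δm/5) with the magnitude error Δm. A minimal sketch, assuming a supernova whose true peak is Δm magnitudes fainter than the standard value but which is still treated as standard; the offsets below are hypothetical and do not come from the paper.

```python
def distance_overestimate(delta_mag: float) -> float:
    """Ratio of inferred to true distance when a supernova is really
    `delta_mag` magnitudes fainter than the assumed standard peak.

    Assuming the standard (brighter) peak makes the event look farther
    away than it is: d_inferred / d_true = 10 ** (delta_mag / 5).
    """
    return 10 ** (delta_mag / 5)


# Hypothetical magnitude offsets, for illustration only
for dm in (0.1, 0.3, 0.5):
    print(f"{dm:.1f} mag fainter -> distance overestimated by "
          f"{(distance_overestimate(dm) - 1) * 100:.0f}%")
```

Offsets of a few tenths of a magnitude already shift distances by roughly 5 to 26 percent, which is why a large population of sub-Chandrasekhar events would matter for distance work.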

This is the third paper in a series that is designed to provide observational constraints on the origin of elements and their evolution within the galaxy. The paper notes that the evolution of manganese relative to iron is “a powerful probe of the epoch when SNe Ia started contributing to the chemical enrichment and, therefore, of star formation of the galactic populations.” The models of non-local thermodynamic equilibrium produce a fundamentally different result from earlier modeling and raise questions about the reliability of at least some type Ia measurements to gauge distance.

Given existing discrepancies between the Hubble constant as measured by type Ia supernovae and other methods, Bergemann and team have nudged the cosmological consensus in a sensitive place, showing the need to re-examine our standard candles, not all of which may be standard. Upcoming observational data from the gravitational wave detector LISA (due for a launch in the 2030s) may offer a check on the prevalence of white dwarf binaries that could confirm or refute this work. Even sooner, we should have the next data release (DR3) of ESA’s Gaia mission as a valuable reference.

The paper is Eitner et al., “Observational constraints on the origin of the elements III. Evidence for the dominant role of sub-Chandrasekhar SN Ia in the chemical evolution of Mn and Fe in the Galaxy,” in press at Astronomy & Astrophysics (preprint).

Scrutiny of the ‘standard candle’ model for supernovae reminded me of another astronomy practice that I’ve wondered about: inferring distance from Hubble redshifts.

Is there an astronomer in our audience who can answer this for me?

What conditions would it take to compare parallax-determined distances to redshift-determined distances? I realize that parallax only works on stars that are very close, and those stars are not moving away as fast as more distant objects. So, two questions on how to devise a comparison: 1) How many years of parallax measurements would it take before the distance changes of the nearer stars (from expansion) could be detected? 2) How large a parallax observer baseline would it take to compare a parallax-determined distance to a redshift-determined distance?

Although not an astronomer I think I can answer your questions. Simply stated, all parallax measurements are of stars within our galaxy, nearby ones at that, and since they are all gravitationally bound to the galaxy they are not participants in cosmic expansion.

Astrometric measurements are in general useless for determining the Hubble constant (really a variable). Hence the need to use astrophysical processes such as Cepheids and supernovae, and deal with the significant error bars by improving our understanding of those processes.

This paper is demonstrating that the error bars for one of those standard candles may not be as good as previously believed.

I have a wildly off-topic follow-up question to Ron’s reply: I’ve heard statements like “... gravitationally bound and therefore not participants in cosmic expansion”, but I fail to envision a mechanism for that. Is there a reasonable book or article that discusses this at the layperson level?

cheers!

“Reasonable” depends a lot on the reader. If you want a kind of answer in a few sentences you can try the following:

https://en.wikipedia.org/wiki/Big_Bang#Structure_formation

A simple description goes as follows. Consider the universe to be a uniform density “dust” at the time of the Big Bang. The dynamics of the expansion are that adjacent dust “particles” have a relative velocity so that the distance between them increases.

Particles have mass and therefore gravitate. The gravitation between adjacent particles acts as a brake on the expansion so that the rate of expansion decreases over time.

However, the dust particles are not uniformly distributed. There are statistical fluctuations, or inhomogeneities. It is common for adjacent particles to be closer together than average. For sufficiently large fluctuations, the gravitation between particles is large enough for them to become gravitationally bound.

Particles bound this way still participate in the expansion, but they do it together. That is, particle groups that are not gravitationally bound see the distance between them grow per cosmic expansion.

Large particle groups form galaxies and other structures. Galaxies can be gravitationally bound to one another, as ours is to the LMC and SMC. The Andromeda galaxy, for another example, is approaching us, and our galaxies will eventually merge.

I hope this helps.

thanks Ron.

That certainly makes sense: it’s the ordinary notion of things getting farther apart from each other while also forming clumps.

I think I was confusing this “cosmic expansion” with the other notion out there of space itself expanding, like how something can recede at more than c because space itself is expanding. Or so I’m told.

cheers!

Gaia can measure parallaxes with 10% accuracy for stars of visual magnitude 15 or brighter out to the center of the Milky Way, or ~30,000 ly. Not sure what the new ELTs can do, but probably not much better. So no, cosmic expansion can’t be measured by parallax.
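The size of the angles involved shows why. A minimal sketch using p [arcsec] = 1 / d [pc] for the annual parallax seen across Earth's orbit; the two sample distances are the ~30,000 ly Gaia figure just quoted and the 23.5 million light-year distance to NGC 4258 that comes up in this thread.

```python
LY_PER_PARSEC = 3.26156  # light-years per parsec (approximate)


def annual_parallax_mas(distance_ly: float) -> float:
    """Annual parallax in milliarcseconds for an object at distance_ly.

    Uses p [arcsec] = 1 / d [parsec], i.e. a 1 au baseline.
    """
    distance_pc = distance_ly / LY_PER_PARSEC
    return 1000.0 / distance_pc


for label, d_ly in [("Galactic center", 30_000), ("NGC 4258", 23.5e6)]:
    p = annual_parallax_mas(d_ly)
    print(f"{label}: {p:.3g} mas ({p * 1000:.3g} microarcseconds)")
```

A galaxy at the distance of NGC 4258 would show an annual parallax of only about a tenth of a microarcsecond, nearly a thousand times smaller than a star at the Galactic center, and far below what optical astrometry can currently reach.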

The question for the Hubble constant is whether these new measurements could bring the two current methods of estimating the constant into agreement, presumably converging on the CMB model value.

It is encouraging that the standard candle approach could be the one with the erroneous assumptions, as it should be possible to determine how the candle measurement should change to allow conformance. Surely someone has already done that calculation and determined if a change will work or not.

Radio telescopes have been used to measure the distance to NGC 4258, with good (for Astronomy) accuracy:

https://www.nrao.edu/pr/2008/vlbiastrometry/

Here’s a more recent article: https://arxiv.org/abs/1908.05625

Geometric parallax will always be the foundation of any cosmic distance ladder.

Thank you FrankH for that link about radio telescopes being used for parallax measurements of things as far away as galaxies (23.5 million light-years, accurate to within 7 percent).

Parallax was not used in that measurement; it relied on the megamaser method.

They are doing parallax measurements (months apart in Earth’s orbit) of the masers using VLBI. There is a multitude of papers out there: search with “VLBI parallax”.

Here are three good articles that might give a little perspective on what is going on and why these results have such a high impact:

Cosmic conundrum: Just how fast is the universe expanding?

Astronomers have found two different values for the expansion rate of the universe. But they can’t both be right.

https://astronomy.com/magazine/news/2019/04/cosmic-conundrum-just-how-fast-is-the-universe-expanding

Debate over the universe’s expansion rate may unravel physics. Is it a crisis?

Scientists tackle disagreements about the Hubble constant.

https://www.sciencenews.org/article/debate-universe-expansion-rate-hubble-constant-physics-crisis

H0LiCOW! Cosmic Magnifying Glasses Yield Independent Measure of Universe’s Expansion That Adds to Troubling Discrepancy.

https://scitechdaily.com/h0licow-cosmic-magnifying-glasses-yield-independent-measure-of-universes-expansion-that-adds-to-troubling-discrepancy/

Image of red giant – white dwarf Type Ia supernova process.

https://i.stack.imgur.com/nF7ip.jpg

Image of red giant/white dwarf and white dwarf/white dwarf process.

http://hyperphysics.phy-astr.gsu.edu/hbase/Astro/astpic/supnov1a.jpg

This is where the discrepancy appears.

“Double detonation explosions do not require a star to reach the Chandrasekhar mass limit. Explosions below this limit will not be as bright as the standard type Ia scenario, meaning the well-defined intrinsic brightness we are looking for in these events is not a reliable measure.”

So.. (is this the implication?) the expansion of the universe is NOT accelerating? Please explain clearly.

The paper has nothing to say on that question.

Unless our understanding of the Universe is mostly wrong, the universe is expanding. The information in this article does not imply the universe is NOT expanding. This new information would affect how quickly the universe is expanding.

Type Ia supernovae allow astronomers to measure the distance to far-off galaxies. Everyone thought type Ia supernovae could only form one way, and that way would always produce explosions of identical peak brightness. Since we could assume their true brightness, we could use their apparent brightness from Earth to calculate how far away they are. Hubble showed the universe is expanding when he found that the farther away a galaxy is, the more redshifted its light. The more redshifted the light, the faster the source is receding.

Now we know that type Ia supernovae may not all have the same brightness. Our measuring device isn’t as accurate as we assumed, but it is accurate enough to still show that the universe is expanding. There are other ways to measure the rate of expansion (the Hubble constant). Analyzing the pattern of fluctuations in the Cosmic Microwave Background also delivers a value for the Hubble constant.

One of the biggest mysteries is that our two main ways of measuring the Hubble constant deliver different rates of expansion. That is weird. Inaccurate assumptions about our standard candle may explain that weirdness.

You asked about acceleration and I gave an answer for velocity. Let me try again. Fair warning: I am an amateur.

Our best evidence for acceleration is the redshift of our stellar standard candle. There are other sources of evidence. Structures created early in the universe would have grown in size as the universe aged, and comparing the past and present scale of these structures reveals acceleration. There are several such structures to use, each with its own strengths, weaknesses, and margins of error.

Losing type Ia supernovae as standard candles would affect the conclusion that cosmic expansion is accelerating, but I don’t think we will completely lose them. The risk posed to current models depends on how many type Ia supernovae are in our data set (the more the better) and on the degree their formation history can vary (the less the better). However, variation in formation history could also provide better resolution. That gain would depend on how evenly the different types are distributed (the more evenly the better) and on how reliably each type delivers a predictable luminosity.
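Hubble's velocity-distance relation, v = H0 d, and the low-redshift approximation z ≈ v/c can be sketched in a few lines. This is a minimal illustration assuming a round H0 of 70 km/s/Mpc (roughly the figure mentioned in this thread) and a hypothetical galaxy 100 Mpc away; the z ≈ v/c shortcut breaks down once v is a sizable fraction of c.

```python
C_KM_S = 299_792.458  # speed of light, km/s


def recession_velocity(distance_mpc: float, h0: float = 70.0) -> float:
    """Hubble's law v = H0 * d, in km/s (h0 in km/s per Mpc)."""
    return h0 * distance_mpc


def redshift_low_z(velocity_km_s: float) -> float:
    """Approximate redshift z = v / c, valid only when v is much less than c."""
    return velocity_km_s / C_KM_S


def distance_from_redshift(z: float, h0: float = 70.0) -> float:
    """Invert the low-redshift relation: d = c * z / H0, in Mpc."""
    return C_KM_S * z / h0


v = recession_velocity(100.0)  # hypothetical galaxy 100 Mpc away
z = redshift_low_z(v)
print(f"v = {v:.0f} km/s, z = {z:.4f}")
```

Read in reverse, a measured redshift gives a distance only once H0 is known, which is why the standard candles used to calibrate H0 matter so much.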

I can’t see calling this a crisis. The expansion values do vary significantly, but surely the methods vary in their accuracy. I know they all provide error bars, but do the error bars truly represent the amount of variation within each method? Two of the best methods seem to arrive at a number around 70 km/s per Mpc. This value is significantly higher than the result of a previous method, but none of these methods seem very precise to me, and more recent methods do seem to be narrowing in on a true if approximate value. Does this make sense to the astrophysicists among us?

I’m not an astrophysicist, but the astrophysicists are calling this a crisis. The error bars no longer overlap between the 2 main methods (standard candles and CMB) and cannot seem to be made to do so. Increasing measurement accuracy is simply separating the 2 methods to arrive at 2 different values for the Hubble constant. One of the 2 methods (or both) may be wrong, or possibly “new physics” may emerge.
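One common way to put a number on "the error bars no longer overlap" is to express the gap between two measurements in units of their combined uncertainty. A minimal Python sketch; the inputs are approximately the published SH0ES 2019 (distance ladder) and Planck 2018 (CMB) values, used here only for illustration, and the calculation assumes independent Gaussian errors.

```python
import math


def tension_sigma(value_a: float, err_a: float, value_b: float, err_b: float) -> float:
    """Gap between two independent measurements in units of their combined
    1-sigma uncertainty, assuming Gaussian, uncorrelated errors."""
    return abs(value_a - value_b) / math.hypot(err_a, err_b)


# Illustrative inputs (km/s/Mpc), close to widely quoted results:
# distance ladder (SH0ES, 2019): 74.03 +/- 1.42; CMB (Planck, 2018): 67.4 +/- 0.5
ladder, ladder_err = 74.03, 1.42
cmb, cmb_err = 67.4, 0.5
print(f"{tension_sigma(ladder, ladder_err, cmb, cmb_err):.1f} sigma")

# Inflating both uncertainties by a factor k divides the tension by k
for k in (1, 2, 3):
    t = tension_sigma(ladder, k * ladder_err, cmb, k * cmb_err)
    print(f"errors x{k}: {t:.1f} sigma")
```

With these inputs the gap is a bit over 4 sigma, so the disagreement only disappears if the quoted uncertainties are underestimated by a large factor.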

Is dark matter clumpy and non-randomly distributed enough to give different values for the expansion of the universe depending on approximately where you look? Or are these studies done over the entire universe, i.e., are the standard candles used scattered across the sky in all directions? Or possibly the methods aren’t as accurate as the investigators suggest. That would make the error bars bigger, which would make the two main methods overlap, wouldn’t it?

AFAIK, the standard candles are used across the viewing space, not in a narrow direction. But seriously, do you think obvious sources of error haven’t been looked at very hard to understand why there is this discrepancy? It isn’t as if there is an upcoming collective “Doh!” for a simple explanation that was missed. ;)

Dark matter follows the cosmic web.

Ok I give up Alex :). As a person untrained in astrophysics I’m just trying to understand what is going on. In other words I’m an interested layman. The term crisis still seems a stretch to me. We don’t understand dark matter very well at all among other things. This area is wide open to new research and new discoveries. A very exciting time to be an observer. Thanks for your input though.

Gary,

These two links might be of interest to help clarify the issue.

New Wrinkle Added to Cosmology’s Hubble Crisis

Ka Chun Yu – Re-measuring Hubble’s Constant (60 Minutes in Space, February 2018)

Ok thanks Alex. I’ve been through both of those now and it has helped me understand the basic concepts better. I’m surprised the CMB data can give an equally precise measurement for the expansion value if I understand what they are saying. I can believe they can measure variations in the CMB very precisely for one time point (now) but could those variations have been altered to varying degrees over time by dark matter? I guess I’ll just keep trying to find more information to understand it all better. Thanks again.

Is the evidence presented in this study and similar studies enough to seriously call into question the existence of accelerated expansion and dark energy? Please clarify…

No, the evidence is not enough, and the authors aren’t arguing that. What they’re saying is that we need to investigate further to see how reliable this particular kind of standard candle is. That’s a long way from calling accelerated expansion into question, but it might help us fine-tune studies into it going forward.

BTW, if you want to help make the standard candle methods more precise, there are several Zooniverse projects searching for new Cepheids and new supernovae.

I don’t think, based on general relativity and quantum physics, that the merger or collision of two white dwarf stars results in a star. My thought experiment produces images of both white dwarfs shattering under the colossal gravitational and kinetic energy of the collision. A white dwarf is not held together by gravity as strongly as a neutron star, and it is colliding neutron stars that can combine into a black hole.

Consequently, the two could not stay held together long enough to make a common type Ia supernova. I also don’t think they can make a new short-lived star, since fusing the heavier elements, as larger stars do, requires stable inner and outer plasma layers. I think the whole thing would result in a mess of carbon and oxygen clouds and fragments orbiting a central mass, and no supernova. Note: a type Ia supernova progenitor can’t rotate faster than about once per second, or centrifugal forces will tear it apart. Wouldn’t the combination be too unstable, with the center fusing and the outer parts flying apart? It seems to me we might get more of a short-lived supernova or flash nova. The standard type Ia supernova gets lit like a candle, so it has time to get going before the dwarf gets blown apart.

I like the idea of secondary collisions potentially making a supernova. But even if such white dwarf collisions produced supernovae one hundred percent of the time, they would occur far less often than the more common type Ia supernovae produced by a white dwarf accreting gas from a nearby star, which can still be used as standard candles.

Well, they do a great job of making it confusing, so don’t believe everything you read.

No Galaxy Will Ever Truly Disappear, Even In A Universe With Dark Energy.

https://www.forbes.com/sites/startswithabang/2020/03/04/no-galaxy-will-ever-truly-disappear-even-in-a-universe-with-dark-energy/

https://www.forbes.com/sites/startswithabang/2019/02/26/how-did-the-universe-expand-to-46-billion-light-years-in-just-13-8-billion-years/

https://medium.com/starts-with-a-bang/if-the-universe-is-13-8-billion-years-old-how-can-we-see-46-billion-light-years-away-db45212a1cd3

https://medium.com/starts-with-a-bang/how-is-the-universe-bigger-than-its-age-7a95cd59c605

Ethan Siegel has been telling us that the universe is 46 billion light years across since 2014. That means our local universe should be 23 billion years old, but NO, it’s only 13.8 billion years!!! So why does he keep repeating this every year around this time? Sounds like someone else who keeps repeating things till a lot of people believe him… hmm.

Now I don’t believe any of this, and instead prefer the little bang universe of Halton Arp, where quasars are ejected from the cores of galaxies. This concept negates the many supernatural concepts of the big bang theory, such as dark energy or dark matter. So there you have it: “The Universe is not only queerer than we imagine; it is queerer than we can imagine.”

“That means our local universe should be 23 billion years old”

Nope. Please educate yourself before accusing other people of being stupid or dogmatic.

https://en.wikipedia.org/wiki/Distance_measures_(cosmology)#Details

Sorry, but I have never accused anyone dealing with this subject of being stupid. I have made the point that any objection to the big bang theory is taken as an attack on basic science. The problem is that an alternative to the BB is available.

Thanks for the Arp mention. I enjoyed reading about him and Ambartsumian in an interview from ’75, and this especially: “[science classes] did not have the same intellectual adventure as the Humanities courses”.

https://www.aip.org/history-programs/niels-bohr-library/oral-histories/4490

In theory, you could use cosmic magnification as a distance indicator too (strictly a ‘standard ruler’ rather than a standard candle). The expansion of space causes really distant objects to subtend a larger visible angle than they should given their apparent distance. Instead, they subtend the angle set by how far away they were when the light we now see left them; the expansion since then lengthens the light’s journey but does not shrink that angle.

The problem is that using this requires identifying structures whose actual size can be accurately inferred at vast distances, and then accurately measuring their visible size, when objects that far away are usually quite dim and heavily redshifted.
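The effect described here, in which an object's angular size stops shrinking and grows again at high redshift, can be sketched numerically. This is a minimal Python example assuming a spatially flat universe with matter density Omega_m = 0.3 and H0 = 70 km/s/Mpc (illustrative parameters, not fitted values) and a hypothetical object 30 kpc across; it computes the angular diameter distance with Simpson's rule rather than any cosmology library.

```python
import math

C_KM_S = 299_792.458  # speed of light, km/s


def angular_diameter_distance_mpc(z, h0=70.0, omega_m=0.3, steps=1000):
    """Angular diameter distance D_A in Mpc for a flat matter + Lambda model.

    D_C = (c / H0) * integral from 0 to z of dz' / E(z'), with
    E(z) = sqrt(omega_m * (1 + z)**3 + (1 - omega_m)), and D_A = D_C / (1 + z).
    The integral is evaluated with Simpson's rule.
    """
    if steps % 2:  # Simpson's rule needs an even number of intervals
        steps += 1

    def inv_e(zp):
        return 1.0 / math.sqrt(omega_m * (1 + zp) ** 3 + (1 - omega_m))

    h = z / steps
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * inv_e(i * h)
    comoving_mpc = (C_KM_S / h0) * total * h / 3
    return comoving_mpc / (1 + z)


def angular_size_arcsec(size_kpc, z):
    """Apparent angular size of an object of fixed physical size at redshift z."""
    d_a_kpc = angular_diameter_distance_mpc(z) * 1000
    return math.degrees(size_kpc / d_a_kpc) * 3600


# A hypothetical 30 kpc "ruler" seen at increasing redshift
for z in (0.1, 0.5, 1.0, 1.6, 3.0, 6.0):
    print(f"z = {z}: {angular_size_arcsec(30.0, z):.2f} arcsec")
```

In this model the angular diameter distance peaks somewhere around z ≈ 1.6, so beyond that redshift the same object looks larger rather than smaller, which is the behavior a standard ruler method would have to exploit.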

Regarding comparing parallax distances to Hubble-redshift distances: Tracing FrankH’s leads and then contacting the cited astronomer, I got a qualified answer to my question. In short, parallax and redshift distances have been compared and corroborate. It turns out that the detection range mismatch between close-distance parallax, and far-distance Hubble redshifts, has an exception: megamasers. Even when galactically distant, megamasers allow for parallax measurements. Here is the article:

Pesce, D. W., Braatz, J. A., Reid, M. J., Riess, A. G., Scolnic, D., Condon, J. J., … & Lo, K. Y. (2020). The Megamaser Cosmology Project. XIII. Combined Hubble constant constraints. The Astrophysical Journal Letters, 891(1), L1.

When I first answered your question I completely forgot about what’s been done with VLBI. It’s good that FrankH corrected my omission. If you have an astrophysical process that is small enough in size, with the right spectrum and long enough in duration for VLBI measurements to be made months apart you can extend parallax to large distances. But not optically, at least not yet.

We Actually Live Inside a Huge Bubble in Space, Physicist Proposes!

“What if the Earth, the galaxy, and all the galaxies near us were enclosed in a weirdly empty bubble? This scenario could resolve some longstanding questions about the nature of the universe.”

https://www.vice.com/en_in/article/4agbjn/we-actually-live-inside-a-huge-bubble-in-space-physicist-proposes

Solved: The mystery of the expansion of the universe.

The Earth, solar system, the entire Milky Way and the few thousand galaxies closest to us move in a vast “bubble” that is 250 million light years in diameter, where the average density of matter is half as high as for the rest of the universe.

https://phys.org/news/2020-03-mystery-expansion-universe.html

Consistency of the local Hubble constant with the cosmic microwave background.

https://www.sciencedirect.com/science/article/pii/S0370269320301076?via%3Dihub#!

Ok, so the gravitational lensing in galaxy clusters may be caused by this. ..Hmm

Maybe we are in a different time zone, as Halton Arp and the discordant redshift calls for slower time on newly created matter being ejected from the cores of galaxies…

https://static1.squarespace.com/static/57e97e6ab8a79be1e7ae0ae6/t/59306715db29d64497b16d57/1496344351513/NGC4151MosaicFinal65%25.jpg

If being in a low-density bubble accounts for the divergence between the standard candle results and the CMB, then that should be testable. I imagine it is going to spark some lively commentary among experts.

If true, it would be a neat explanation.

“While special relativity prohibits objects from moving faster than light with respect to a local reference frame where spacetime can be treated as flat and unchanging, it does not apply to situations where spacetime curvature or evolution in time become important. These situations are described by general relativity, which allows the separation between two distant objects to increase faster than the speed of light, although the definition of ‘separation’ is different from that used in an inertial frame. This can be seen when observing distant galaxies more than the Hubble radius away from us (approximately 4.5 gigaparsecs or 14.7 billion light-years); these galaxies have a recession speed that is faster than the speed of light. Light that is emitted today from galaxies beyond the cosmological event horizon, about 5 gigaparsecs or 16 billion light-years, will never reach us, although we can still see the light that these galaxies emitted in the past. Because of the high rate of expansion, it is also possible for a distance between two objects to be greater than the value calculated by multiplying the speed of light by the age of the universe. These details are a frequent source of confusion among amateurs and even professional physicists. Due to the non-intuitive nature of the subject and what has been described by some as ‘careless’ choices of wording, certain descriptions of the metric expansion of space and the misconceptions to which such descriptions can lead are an ongoing subject of discussion within education and communication of scientific concepts.” (Cited from Wikipedia.)

I think this makes sense to me, although what causes this expansion is obviously still unclear. Is it an intrinsic property of the universe? Is it caused by vacuum energy? What value of vacuum energy would cause this expansion rate? I’m fairly certain we have no real answers, and we probably won’t arrive at the final answer with our current methods. Apparently estimates of vacuum energy have varied by at least 100 orders of magnitude!
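The Hubble radius in that quotation is simply c/H0, the distance at which Hubble's law v = H0 d gives a recession speed equal to c, so its exact value depends on the Hubble constant adopted, the very quantity under dispute in this thread. A minimal sketch; the three H0 values are illustrative inputs spanning the range discussed here.

```python
C_KM_S = 299_792.458     # speed of light, km/s
LY_PER_PARSEC = 3.26156  # light-years per parsec (approximate)


def hubble_radius(h0_km_s_mpc: float) -> tuple[float, float]:
    """Hubble radius c / H0, returned as (gigaparsecs, billions of light-years).

    At this distance, Hubble's law v = H0 * d gives a recession speed equal to c.
    """
    r_gpc = C_KM_S / h0_km_s_mpc / 1000
    return r_gpc, r_gpc * LY_PER_PARSEC


for h0 in (67.4, 70.0, 74.0):
    gpc, gly = hubble_radius(h0)
    print(f"H0 = {h0}: {gpc:.2f} Gpc, {gly:.1f} billion light-years")
```

A lower H0 gives a larger Hubble radius, so the "approximately 4.5 gigaparsecs" figure corresponds to an H0 in the high 60s in km/s/Mpc.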