In addition to being a rather well-known character on television, SPOCK also stands for something else, a software model its creators label Stability of Planetary Orbital Configurations Klassifier. SPOCK is handy computer code indeed, determining the long-term stability of planetary configurations at a pace some 100,000 times faster than any previous method. Thus machine learning continues to set a fast pace in assisting our research into exoplanets.

At the heart of the process is the need to figure out how planetary systems are organized. After the initial carnage of early impacts, migration and possible ejection from a stellar system, a planet generally settles into an orbital configuration that will keep it stable for billions of years. SPOCK is all about quickly screening out those configurations that might lead to collisions, which means working out the motions of multiple interacting planets over vast timeframes. To call this computationally demanding is to greatly understate the problem.

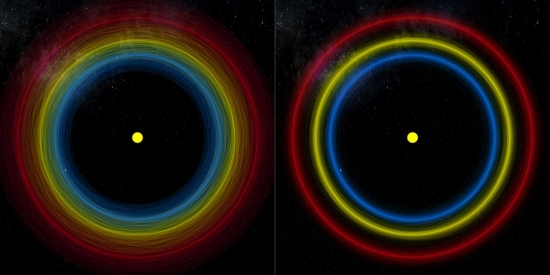

Image: While astronomers have confidently detected three planets in the Kepler-431 system, little is known about the shapes of the planetary orbits. The left-hand image shows many superimposed orbits for each planet (yellow, red and blue) consistent with observations. Using machine learning methods, researchers removed all unstable configurations that would have resulted in planetary collisions and would not be observable today, leaving only the stable orbits (right-hand image). Using previous methods, this process would have taken more than a year of computing time. The new method instead takes just 14 minutes. Credit: D. Tamayo et al./Proceedings of the National Academy of Sciences 2020.

Thus we rule out dynamically unstable possibilities in what is, compared to older methods, the blink of an eye. That helps us make sense of the planetary systems we can observe, and may give us information about the composition of some of these worlds. Bear in mind that of the more than 4,000 planets we’ve discovered around other stars, almost half occur in multi-planet systems, so understanding orbital architectures is vital.

The lead author on this work is Daniel Tamayo, a NASA Hubble Fellowship Program Sagan Fellow in astrophysics at Princeton. As you would expect, Tamayo and his co-authors relish the resonance of the acronym, as witness the note that closes their paper:

We make our ≈ 1.5-million CPU-hour training set publicly available (https://zenodo.org/record/3723292), and provide an open-source package, documentation and examples (https://github.com/dtamayo/spock) for efficient classification of planetary configurations that will live long and prosper.

A good choice, and an acronym with almost as much going for it as my absolute favorite of all time, TRAPPIST (Transiting Planets and Planetesimals Small Telescope), which contains within it not only a description of the twin telescopes but a nod to some of the world’s best beers (the robotic telescopes are operated from a control room in Liège, Belgium).

Thus we move from brute force calculations run on supercomputers to machine learning methods that quickly eliminate unstable orbital configurations, replacing tens of thousands of hours of computing time with the time it takes to quaff a Westmalle Tripel (well, maybe a Westmalle Dubbel). Configurations that would, over the course of a few million years, result in planetary collisions — the team calls these ‘fast instabilities’ — are identified.

The paper notes the relevance of the work to compact systems and the ongoing observations of TESS:

In the Kepler 431 system with three tightly packed, approximately Earth-sized planets, we constrained the free eccentricities of the inner and outer pair of planets to both be below 0.05 (84th percentile upper limits). Such limits are significantly stronger than can currently be achieved for small planets through either radial velocity or transit duration measurements, and within a factor of a few from transit timing variations (TTVs). Given that the TESS mission’s typical 30-day observing windows will provide few strong TTV constraints [Hadden et al., 2019], SPOCK computationally enables stability constrained characterization as a productive complementary method for extracting precise orbital parameters in compact multi-planet systems.
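To make "stability constrained characterization" a little more concrete, here is a minimal sketch of the general idea: draw many candidate configurations, discard the ones a stability test rejects, and read an 84th percentile upper limit off the survivors. Everything specific in it is an assumption made for illustration. The semi-major axes are hypothetical, the uniform eccentricity prior is arbitrary, and `placeholder_is_stable` is a crude orbit-crossing check standing in for a real classifier. It is not SPOCK and not the authors' method.

```python
import math
import random


def orbits_cross(a_inner, e_inner, a_outer, e_outer):
    """Return True if the inner orbit's apoapsis reaches the outer orbit's periapsis."""
    return a_inner * (1.0 + e_inner) >= a_outer * (1.0 - e_outer)


def placeholder_is_stable(semi_major_axes, eccentricities):
    """Crude stand-in for a stability classifier (NOT SPOCK).

    Rejects any configuration in which adjacent orbits could cross.
    """
    for i in range(len(semi_major_axes) - 1):
        if orbits_cross(semi_major_axes[i], eccentricities[i],
                        semi_major_axes[i + 1], eccentricities[i + 1]):
            return False
    return True


def nearest_rank_percentile(values, q):
    """Nearest-rank percentile of a non-empty list, q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100.0 * len(ordered)))
    return ordered[rank - 1]


def eccentricity_upper_limits(semi_major_axes, e_max, n_draws, q=84.0, seed=0):
    """Sample eccentricities uniformly in [0, e_max], keep draws that pass the
    placeholder stability cut, and return (per-planet q-th percentile limits,
    fraction of draws kept)."""
    rng = random.Random(seed)
    kept = []
    for _ in range(n_draws):
        ecc = [rng.uniform(0.0, e_max) for _ in semi_major_axes]
        if placeholder_is_stable(semi_major_axes, ecc):
            kept.append(ecc)
    if not kept:
        raise ValueError("no draws survived the stability cut")
    limits = [nearest_rank_percentile([ecc[i] for ecc in kept], q)
              for i in range(len(semi_major_axes))]
    return limits, len(kept) / n_draws


# Hypothetical, tightly packed three-planet system (arbitrary units).
HYPOTHETICAL_AXES = [1.0, 1.15, 1.3]
limits, kept_fraction = eccentricity_upper_limits(
    HYPOTHETICAL_AXES, e_max=0.2, n_draws=20000)
print("84th percentile eccentricity limits:", limits)
print("fraction of draws kept:", kept_fraction)
```

The point of the sketch is only the shape of the workflow: the tighter the packing, the more draws the stability cut removes, and the stronger the resulting limits. A real analysis would swap the placeholder for a trained classifier and sample from the observational posterior rather than a flat prior.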

SPOCK can’t provide a solution to all questions of long-term stability, as Tamayo acknowledges, but by identifying fast instabilities in compact systems, where the issues are the most obvious, it can sharply reduce the options and allow astronomers to focus on likely solutions. Freeing up a significant amount of computer time for other uses is a collateral benefit.

The paper is Tamayo et al., “Predicting the long-term stability of compact multi-planet systems,” Proceedings of the National Academy of Sciences July 13, 2020 (preprint).

I confess that I have to take issue with something of this nature. We have a tremendous number of unanswered questions concerning the stability of our own solar system, in particular the uncertainty about trans-Plutonian objects outside the orbits of the 9 known planets.

Those comets and asteroids that reside in the outer regions of the solar system may have a long-term dynamical impact on the very stability of our own system, and now we are expecting to answer questions about distant star systems?

First, why do you believe scientific enterprises are serial in nature? One project does not supplant the other.

Second, the two questions are related and therefore work on one informs the other.

I’m not at all certain what you are talking about; you were saying something to the effect that one thing is serial in nature? I was only saying that using gravitational models on a computer may not, at present, be a very accurate way to obtain information on the stability of a foreign solar system.

Considerable effort is going into refining the models of our own gravitational system (which are becoming extremely detailed) simply because of the tremendous amount of new data that has come in over the last 50 years or so. Such exquisitely fine data does not currently exist for foreign solar systems. That was what I was commenting on, nothing else.

Already much of the computing process in neural networks for artificial intelligence is not understood. And sometimes there are unfathomable results, as with AlphaGo versus the world champion: venturing into the realm where genius is mistaken for folly. While marvelling at the manifest capabilities of AI, the unnoticed subliminal but overwhelming subtleties may effect a takeover of human civilization. Perhaps a solution to Fermi’s paradox?

I’ve done work on complex decision-making systems. They are deterministic or, in some cases, stochastic. When we’ve been surprised by these systems, the sequential track of data and choices can be traced back. That can be difficult in a complex system but it is very doable, and is in fact necessary.

Even if a hypothesized “rogue” system were to become conscious and malevolent (which is quite a stretch!) it isn’t going to pick up a hammer and hit you with it unless you’ve given it that physical ability.

If you check the paper and / or the source code you’d see they’re using xgboost, which is absolutely not a neural network.

Thank you, and I’m not surprised. I hadn’t checked since that wasn’t applicable to the comment I made. However there are those who tend to equate “intelligent” systems with neural networks. In my limited and narrowly focused work in this field neural networks were not appropriate tools.

Very interesting. With these small, compact red dwarf systems, could we find something like planets switching orbits, as some of the small moons in and around Saturn’s rings do?

Here is some DYNAMITE for the Hidden Worlds!

Hidden Worlds: Dynamical Architecture Predictions of Undetected Planets in Multi-planet Systems and Applications to TESS Systems.

“We introduce the DYNAmical Multi-planet Injection TEster (DYNAMITE), a model to predict the presence of unseen planets in multi-planet systems via population statistics.”

“Multi-planet systems produce a wealth of information for exoplanet science, but our understanding of planetary architectures is incomplete. Probing these systems further will provide insight into orbital architectures and formation pathways. Here we present a model to predict previously undetected planets in these systems via population statistics. The model considers both transiting and nontransiting planets, and can test the addition of more than one planet. Our tests show the model’s orbital period predictions are robust to perturbations in system architectures on the order of a few percent, much larger than current uncertainties. Applying it to the multi-planet systems from TESS provides a prioritized list of targets, based on predicted transit depth and probability, for archival searches and for guiding ground-based follow-up observations hunting for hidden planets.”

https://arxiv.org/abs/2007.06745

The ≈ 1.5-million CPU-hour training set works out to about 171 CPU-years. Therefore the real cost is the upfront training cost amortized over each orbital calculation. I am not saying this invalidates the concept, but it does mean that to gain this benefit, there was a huge investment in training to get to the 14-minute result. One would need to use the ML system thousands of times to get a net benefit. If it turned out that the training was incorrect, then it would have to be redone.
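The arithmetic behind that figure, and the amortization argument, can be sketched in a few lines. The 1.5 million CPU-hours comes from the paper's closing note and the 14 minutes from the image caption. Treating the caption's "more than a year of computing time" as exactly one CPU-year per analysis is an assumption made here purely for illustration, and the function names are hypothetical.

```python
import math

HOURS_PER_YEAR = 24 * 365.25  # 8766 hours


def cpu_hours_to_cpu_years(cpu_hours):
    """Convert CPU-hours to CPU-years."""
    return cpu_hours / HOURS_PER_YEAR


def break_even_uses(training_hours, baseline_hours_per_use, fast_hours_per_use):
    """Number of analyses needed before the time saved per analysis
    repays the upfront training cost."""
    saving = baseline_hours_per_use - fast_hours_per_use
    if saving <= 0:
        raise ValueError("the fast method must be cheaper than the baseline")
    return math.ceil(training_hours / saving)


TRAINING_CPU_HOURS = 1.5e6          # from the paper's closing note (approximate)
BASELINE_HOURS = HOURS_PER_YEAR     # ASSUMPTION: "more than a year" taken as one CPU-year
FAST_HOURS = 14 / 60                # 14 minutes, from the image caption

print("training cost in CPU-years:",
      round(cpu_hours_to_cpu_years(TRAINING_CPU_HOURS)))
print("break-even analyses under these assumptions:",
      break_even_uses(TRAINING_CPU_HOURS, BASELINE_HOURS, FAST_HOURS))
```

The break-even count is very sensitive to the assumed baseline: the smaller the brute-force cost of a single analysis, the more times the trained model has to be used before the investment pays off.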

I believe we saw this approach in another orbit calculation, something to do with trajectories of spacecraft? Clearly, when you need a fast decision in a complex, compute-intensive problem space, this is a useful approach. No doubt the military is doing something like this for [unmanned?] fighter aircraft in dogfights.

http://www.cnrs.fr/en/could-mini-neptunes-be-irradiated-ocean-planets

Could mini-Neptunes be irradiated ocean planets?

July 20, 2020

Many exoplanets known today are ‘super-Earths’, with a radius 1.3 times that of Earth, and ‘mini-Neptunes’, with 2.4 Earth radii. Mini-Neptunes, which are less dense, were long thought to be gas planets, made up of hydrogen and helium.

Now, scientists at the Laboratoire d’Astrophysique de Marseille (CNRS/Aix-Marseille Université/Cnes) have examined a new possibility, namely that the low density of mini-Neptunes could be explained simply by the presence of a thick layer of water that experiences an intense greenhouse effect caused by the irradiation from their host star.

These findings, recently published in The Astrophysical Journal Letters, show that mini-Neptunes could be super-Earths with a rocky core surrounded by water in a supercritical state, suggesting that these two types of exoplanet may form in the same way.

Another paper recently published in Astronomy & Astrophysics, involving scientists in France mainly from the CNRS and the University of Bordeaux, focused on the effect of stellar irradiation on the radius of Earth-sized planets containing water. Their work shows that the size of the atmospheres of such planets increases considerably when subject to a strong greenhouse effect, in line with the study on mini-Neptunes.

Future observations should make it possible to test these novel hypotheses put forward by French scientists, who are making major contributions to our knowledge of exoplanets.

Stanley Kubrick–on Wikiquotes

“The most terrifying fact about the universe is not that it is hostile but that it is indifferent; but if we can come to terms with this indifference and accept the challenges of life within the boundaries of death — however mutable man may be able to make them — our existence as a species can have genuine meaning and fulfillment. However vast the darkness, we must supply our own light.”

In a different way, and in a different context, Edwin Hubble said pretty much the same thing…

“We are, by definition, at the very center of the observable region.”