If you were crafting a transmission to another civilization (and we recently discussed Alexander Zaitsev’s multiple messages of this kind), how would you put it together? I’m not speaking of what you might want to tell ETI about humanity, but rather how you could make the message decipherable. In the second of three essays on SETI subjects, Brian McConnell now looks at enclosing computer algorithms within the message, and the implications for comprehension. What kind of information could algorithms contain vs. static messages? Could a transmission contain programs sufficiently complex as to create a form of consciousness if activated by the receiver’s technologies? Brian is a communication systems engineer and expert in translation technology. His book The Alien Communication Handbook (Springer, 2021) is now available via Amazon, Springer and other booksellers.

by Brian S McConnell

In most depictions of SETI detection scenarios, the alien transmission is a static message, like the images on the Voyager Golden Record. But what if the message itself is composed of computer programs? What modes of communication might be possible? Why might an ETI prefer to include programs and how could they do so?

As we discussed in Communicating With Aliens: Observables Versus Qualia, an interstellar communication link is essentially an extreme version of a wireless network, one with the following characteristics:

- Extreme latency due to the speed of light (more than eight years for a round trip to the nearest star system), and in the case of an inscribed matter probe, there may be no way to contact the sender at all (infinite latency).

- Prolonged disruptions to line of sight communication (due to the source not always being in view of SETI facilities as the Earth rotates).

- Duty cycle mismatch (it is extremely unlikely that the recipient will detect the transmission at its start and read it entirely in one pass).

Because of these factors, communication will work much better if the transmission is segmented so that parcels received out of order can be reassembled by the receiver, and so that those segments are encoded to enable the recipient to detect and correct errors without having to contact the transmitter and wait years for a response. This is known as forward error correction and is used throughout computing (to catch and fix disc read errors) and communication (to correct corrupted data from a noisy circuit).
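To make the reassembly idea concrete, here is a minimal Python sketch. It assumes, purely for illustration, that each parcel carries a header with its sequence number and the total parcel count; the function names `segment` and `reassemble` are hypothetical, not part of any real protocol.

```python
import random

def segment(message: bytes, size: int):
    """Split a message into (sequence_number, total_parcels, payload) tuples."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]

def reassemble(parcels):
    """Rebuild the message from parcels received in any order.

    Returns None while any parcel is still missing (for example, one that
    will only arrive on a later repetition of the transmission).
    """
    if not parcels:
        return None
    total = parcels[0][1]
    received = {seq: payload for seq, _, payload in parcels}  # duplicates collapse
    if len(received) < total:
        return None
    return b"".join(received[seq] for seq in range(total))

MESSAGE = b"A looping transmission can be picked up at any point and reassembled."
parcels = segment(MESSAGE, 8)
random.Random(42).shuffle(parcels)       # simulate out-of-order reception
print(reassemble(parcels).decode())
print(reassemble(parcels[:-1]))          # one parcel still missing
```

The receiver never needs to ask the sender anything: it simply keeps listening until every sequence number has been seen at least once.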

While there are simple error correction methods, such as N-modular redundancy or a majority vote code, these are not very robust and dramatically reduce the link’s information carrying capacity. There exist much more robust error correction methods, such as the Reed-Solomon coding used for storage media and space communication. These methods can correct for prolonged errors and dropouts, and the amount of redundancy can be tuned to tolerate whatever fraction of data loss the sender anticipates, at the cost of extra overhead.
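The weakness of the simple approach is easy to demonstrate. This sketch implements triple modular redundancy with a majority vote (a toy of our own, not Reed-Solomon, which is considerably more involved): it spends two thirds of the channel on copies, survives an isolated bit flip, yet fails on a short burst.

```python
def encode_nmr(bits, n=3):
    """N-modular redundancy: transmit every bit n times (n should be odd)."""
    return [b for b in bits for _ in range(n)]

def decode_nmr(received, n=3):
    """Majority vote over each group of n copies."""
    return [1 if 2 * sum(received[i:i + n]) > n else 0
            for i in range(0, len(received), n)]

data = [1, 0, 1, 1, 0, 0, 1, 0]
sent = encode_nmr(data)              # 24 channel bits carry only 8 data bits

single_error = sent.copy()
single_error[4] ^= 1                 # one flipped copy is simply outvoted
print(decode_nmr(single_error) == data)

burst = sent.copy()
for i in range(3, 6):                # a 3-bit burst hits every copy of data[1]
    burst[i] ^= 1
print(decode_nmr(burst) == data)
```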

In addition to being unreliable, the communication link’s information carrying capacity will likely be limited compared to the amount of information the transmitter may wish to send. Because of this, it will be desirable to compress data, using lossless compression algorithms, and possibly lossy compression algorithms (similar to the way JPEG and MPEG encoders work). Astute readers will notice a built-in conflict here. Data that is compressed and encoded for error correction will look like a series of random numbers to the receiver. Without knowledge about how the encoding and compression algorithms work, something that would be nearly impossible to guess, the receiver will be unable to recover the original unencoded data.
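One way to see the conflict is to measure how evenly the byte values are spread before and after compression. This sketch uses Python's standard `zlib` module (DEFLATE, merely our stand-in for whatever the sender might use) on an illustrative, primer-like text of our own invention. The plain text uses only a handful of symbols, while the compressed bytes approach the 8 bits per byte of uniform noise.

```python
import math
import zlib
from collections import Counter

def bits_per_byte(data: bytes) -> float:
    """Empirical Shannon entropy of the byte distribution (8.0 looks like uniform noise)."""
    counts = Counter(data)
    n = len(data)
    return -sum(c / n * math.log2(c / n) for c in counts.values())

# A verbose, repetitive "arithmetic primer": lines like b"12 + 34 = 46. "
plain = b"".join(b"%d + %d = %d. " % (x, y, x + y) for x in range(60) for y in range(60))
packed = zlib.compress(plain, 9)

print(len(plain), len(packed))
print(round(bits_per_byte(plain), 2), round(bits_per_byte(packed), 2))
```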

The iconic Blue Marble photo taken by the Apollo 17 astronauts. Credit: NASA.

The value of image compression can be clearly shown by comparing the file size for this image in several different encodings. The source image is 3000×3002 pixels. The raw uncompressed image, with three color channels at 8 bits per channel per pixel, is 27 megabytes (216 megabits). If we apply a lossless compression algorithm, such as the PNG encoding, this is reduced to 12.9 megabytes (103 megabits), a 2.1:1 reduction. Applying a lossy compression algorithm, this is further reduced to 1.1 megabytes (8.8 megabits) for JPEG with quality set to 80, and 0.408 megabytes (3.3 megabits) for JPEG with quality set to 25, which results in a 66:1 reduction.
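The arithmetic behind these figures can be checked in a few lines. Only the raw size is computed; the PNG and JPEG sizes are simply the values quoted above.

```python
width, height, channels = 3000, 3002, 3
raw_mb = width * height * channels / 1e6      # 1 byte (8 bits) per channel per pixel

# Compressed sizes as quoted in the text, in megabytes.
sizes_mb = {"raw": raw_mb, "PNG": 12.9, "JPEG q=80": 1.1, "JPEG q=25": 0.408}

for name, mb in sizes_mb.items():
    print(f"{name:<10} {mb:7.3f} MB {mb * 8:7.1f} Mbit  ratio {raw_mb / mb:5.1f}:1")
```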

Lossy compression algorithms enable impressive reductions in the amount of information needed to reconstruct an image, audio signal, or motion picture sequence, at the cost of some loss of information. If the sender is willing to tolerate some loss of detail, lossy compression will enable them to pack well over an order of magnitude more content into the same data channel. This isn’t to say they will use the same compression algorithms we do, although the underlying principles may be similar. They can also interleave compressed images, which will look like random noise to a naive viewer, with occasional uncompressed images, which will stand out, as we showed in Communicating With Aliens: Observables Versus Qualia.

So why not send programs that implement error correction and decompression algorithms? How could the sender teach us to recognize an alien programming language to implement them?

A programming language requires a small set of math and logic symbols, and is essentially a special case of a mathematical language. Let’s look at what we would need to define an interpreted language, call it ET BASIC if you like. An interpreted language is abstract, and is not tied to a specific type of hardware. Many of the most popular languages in use today, such as Python, are interpreted languages.

We’ll need the following symbols:

- Delimiter symbols (something akin to open and close parentheses, to allow for the creation of nested or n-dimensional data structures)

- Basic math operations (addition, subtraction, multiplication, division, modulo/remainder)

- Comparison operations (is equal, is not equal, is greater than, is less than)

- Branching operations (if condition A is true, do this, otherwise do that)

- Read/write operations (to read or write data to/from virtual memory, aka variables, which can also be used to create input/output interfaces for the user to interact with)

- A mechanism to define reusable functions
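The list above is small enough that a toy interpreter fits on one screen. The sketch below is our own illustration of such an "ET BASIC", with a Lisp-like syntax chosen for brevity; the special forms `if`, `set`, `def` and `do` are hypothetical names, and integers stand in for truth values.

```python
import operator

OPS = {  # basic math and comparison symbols
    "+": operator.add, "-": operator.sub, "*": operator.mul,
    "/": operator.floordiv, "%": operator.mod,
    "=": lambda a, b: int(a == b), "!=": lambda a, b: int(a != b),
    ">": lambda a, b: int(a > b), "<": lambda a, b: int(a < b),
}

def parse(text):
    """Turn '(+ 1 (* 2 3))' into nested lists using the two delimiter symbols."""
    tokens = text.replace("(", " ( ").replace(")", " ) ").split()

    def read(pos):
        if tokens[pos] == "(":
            expr, pos = [], pos + 1
            while tokens[pos] != ")":
                item, pos = read(pos)
                expr.append(item)
            return expr, pos + 1
        tok = tokens[pos]
        return (int(tok) if tok.lstrip("-").isdigit() else tok), pos + 1

    return read(0)[0]

def evaluate(expr, env):
    if isinstance(expr, int):
        return expr
    if isinstance(expr, str):            # read a variable
        return env[expr]
    head, *args = expr
    if head == "if":                     # branching: (if cond then else)
        cond, then, other = args
        return evaluate(then if evaluate(cond, env) else other, env)
    if head == "set":                    # write a variable: (set name value)
        name, value = args
        env[name] = evaluate(value, env)
        return env[name]
    if head == "def":                    # reusable function: (def name (params) body)
        name, params, body = args
        env[name] = (params, body)
        return 0
    if head == "do":                     # run a sequence, keep the last value
        result = 0
        for arg in args:
            result = evaluate(arg, env)
        return result
    values = [evaluate(arg, env) for arg in args]
    if head in OPS:
        return OPS[head](*values)
    params, body = env[head]             # call a user-defined function
    return evaluate(body, {**env, **dict(zip(params, values))})

program = "(do (def fact (n) (if (< n 2) 1 (* n (fact (- n 1))))) (fact 10))"
print(evaluate(parse(program), {}))  # 3628800
```

Everything beyond the six building blocks, such as recursion here, emerges from combining them.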

Each of these symbols can be taught using a “solve for x” pattern within a plaintext primer that can be interleaved with other parts of the transmission. Let’s look at an example.

1 ? 1 = 2

1 ? 2 = 3

2 ? 1 = 3

2 ? 2 = 4

1 ? 3 = 4

3 ? 1 = 4

4 ? 0 = 4

0 ? 4 = 4

We can see right away that the unknown symbol refers to addition. Similar patterns can be used to define symbols for the rest of the basic operations needed to create an extensible language.
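A receiver (or a program helping it) could approach such a primer as hypothesis testing: propose candidate operations and keep only those that reproduce every example. The candidate list below is our own choice for illustration.

```python
import operator

# The primer from the text: (left operand, right operand, result) for the unknown "?".
EXAMPLES = [(1, 1, 2), (1, 2, 3), (2, 1, 3), (2, 2, 4),
            (1, 3, 4), (3, 1, 4), (4, 0, 4), (0, 4, 4)]

CANDIDATES = {
    "addition": operator.add,
    "subtraction": operator.sub,
    "multiplication": operator.mul,
    "maximum": max,
    "exponentiation": operator.pow,
}

def consistent_hypotheses(examples, candidates):
    """Keep every candidate operation that reproduces all of the examples."""
    return [name for name, op in candidates.items()
            if all(op(a, b) == c for a, b, c in examples)]

print(consistent_hypotheses(EXAMPLES, CANDIDATES))  # ['addition']
```

Examples such as `4 ? 0 = 4` and `0 ? 4 = 4` do real work here: they are what rule out look-alike hypotheses.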

The last of the building blocks, a mechanism to define reusable functions, is especially useful. The sine function, for example, is used in a wide variety of calculations, and can be approximated via basic math operations using the Taylor series shown below:

$$\sin x = \sum_{n=0}^{\infty} \frac{(-1)^n}{(2n+1)!}\, x^{2n+1}$$

And in expanded form as:

![]()

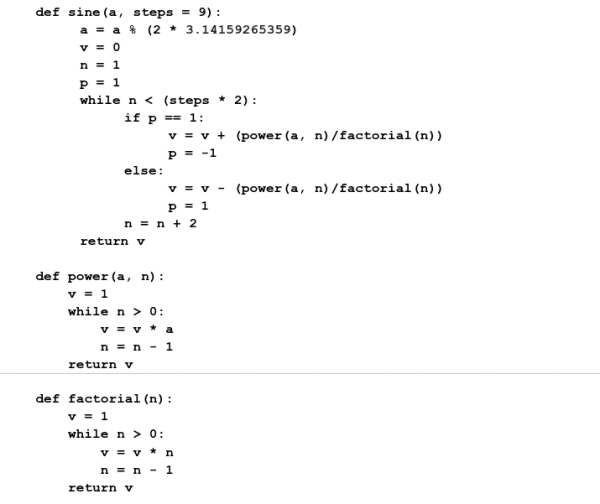

This can be written in Python as:
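A minimal sketch of such a function follows. The name `sine()` matches the text. The ten-term default and the trick of building each term from the previous one are our own assumptions, not necessarily the author's listing. Only multiplication, division, addition and a loop are needed.

```python
import math

def sine(x, terms=10):
    """Approximate sin(x) with the first `terms` terms of its Taylor series."""
    term = x            # n = 0 term: x^1 / 1!
    total = 0.0
    for n in range(terms):
        total += term
        # The next term equals the current one times -x^2 / ((2n + 2)(2n + 3)).
        term = term * -x * x / ((2 * n + 2) * (2 * n + 3))
    return total

print(sine(math.pi / 6))  # very close to 0.5
```

Because there is no range reduction, accuracy drops for large |x|. A fuller version would first reduce the angle modulo 2π.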

The sine() function we just defined can later be reused without repeating the lower-level instructions used to calculate the sine of an angle. Notice that the series of calculations reduces to basic math and branching operations. In fact, any program you use, whether it is a simple tic-tac-toe game or a complex simulation, reduces to a small lexicon of fundamental operations. This is one of the most useful aspects of computer programs. Once you know the basic operations, you can build an interpreter that can run arbitrarily complex programs, just as you can run a JPEG viewer without knowing a thing about how lossy image compression works.

In the same way, the transmitter could define an “unpack” function that accepts a block of encoded data from the transmission as input, and produces error corrected, decompressed data as output. This is similar to what low level functions do to read data off a storage device.
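As a rough sketch of the idea, here is a hypothetical `pack`/`unpack` pair built on Python's `zlib` module. The block layout (a CRC-32 checksum followed by DEFLATE-compressed data) is our own assumption. A CRC only *detects* damage. A real "unpack" would also *correct* errors, for example with Reed-Solomon codes, which this sketch leaves out.

```python
import zlib

def pack(data: bytes) -> bytes:
    """Hypothetical sender side: compress, then prepend a CRC-32 of the compressed bytes."""
    body = zlib.compress(data, 9)
    return zlib.crc32(body).to_bytes(4, "big") + body

def unpack(block: bytes):
    """Hypothetical receiver side: verify the checksum, then decompress.

    Returns None for a damaged block so it can be flagged and re-read
    on a later pass of the transmission.
    """
    checksum, body = int.from_bytes(block[:4], "big"), block[4:]
    if zlib.crc32(body) != checksum:
        return None
    return zlib.decompress(body)

block = pack(b"ET BASIC primer, chapter 1 " * 20)
print(unpack(block)[:27])

damaged = bytearray(block)
damaged[10] ^= 0xFF                  # corrupt one byte in transit
print(unpack(bytes(damaged)))        # None
```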

Lossless compression will significantly increase the information carrying capacity of the channel, and also lets the raw, unencoded data be very verbose and repetitive, since the compressor will squeeze most of that redundancy back out. Lossy compression algorithms can be applied to some media types to achieve order-of-magnitude improvements, with the caveat that some information is lost during encoding. Meanwhile, deinterleaving and forward error correction algorithms can ensure that most information is received intact, or at least that damaged segments can be detected and flagged. The technical and economic arguments for including programs in a transmission are so strong that it would be surprising if at least part of a transmission were not algorithmic in nature.
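Interleaving is what lets error correction cope with long dropouts. A simple block interleaver (one common scheme among several; the dimensions below are arbitrary) transmits codewords column by column, so a burst on the channel is spread thinly across many codewords after deinterleaving. Each codeword then only has to fix a single error.

```python
def interleave(symbols, depth):
    """Write `depth` codewords as rows, then transmit column by column.

    Assumes len(symbols) is a multiple of depth.
    """
    width = len(symbols) // depth
    rows = [symbols[r * width:(r + 1) * width] for r in range(depth)]
    return [rows[r][c] for c in range(width) for r in range(depth)]

def deinterleave(symbols, depth):
    """Invert interleave(): restore the original codeword order."""
    width = len(symbols) // depth
    return [symbols[c * depth + r] for r in range(depth) for c in range(width)]

depth, width = 4, 6
# Label each symbol "rc": codeword r, position c.
codewords = [f"{r}{c}" for r in range(depth) for c in range(width)]
sent = interleave(codewords, depth)

received = sent.copy()
for i in range(8, 12):               # a 4-symbol burst on the channel
    received[i] = "XX"

restored = deinterleave(received, depth)
errors_per_codeword = [restored[r * width:(r + 1) * width].count("XX") for r in range(depth)]
print(errors_per_codeword)  # [1, 1, 1, 1]
```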

There are many ways a programming language can be defined. I chose to use a Python-based example as it is easy for us to read. Perhaps the sender will be similarly inclined to define the language at a higher level like this, and will assume the receiver can work out how to implement each operation in their hardware. On the other hand, they might describe a computing system at a lower level, for example by defining operations in terms of logic gates, which would enable them to precisely define how basic operations will be carried out.
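To illustrate the lower-level route, this sketch defines everything from a single NAND gate and builds up to 8-bit addition with a ripple-carry adder. It shows one textbook construction; we make no claim about how a sender would actually do it. Python's shifts and masks are used only to pull individual bits in and out.

```python
def nand(a, b):
    return 1 - (a & b)

def not_(a):
    return nand(a, a)

def and_(a, b):
    return not_(nand(a, b))

def or_(a, b):
    return nand(not_(a), not_(b))

def xor(a, b):
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

def full_adder(a, b, carry):
    """Add three bits; return (sum bit, carry out)."""
    s = xor(xor(a, b), carry)
    carry_out = or_(and_(a, b), and_(carry, xor(a, b)))
    return s, carry_out

def add(x, y, bits=8):
    """Ripple-carry addition of two unsigned integers using only the gates above."""
    carry, result = 0, 0
    for i in range(bits):
        s, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= s << i
    return result  # wraps modulo 2**bits, like fixed-width hardware

print(add(100, 55))  # 155
```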

Besides their practical utility in building a reliable communication link, programs open up whole other realms of communication with the receiver. Most importantly, they can interact with the user in real-time, thereby mooting the issue of delays due to the speed of light. Even compact and relatively simple programs can explain a lot.

Let’s imagine that ET wants to describe the dynamics of their solar system. An easy way to do this is with a numerical simulation. This type of program simulates the gravitational interactions of N objects by summing the gravitational forces acting on each object, stepping forward a small increment of time to forecast where they will be, and then repeating this process ad infinitum. The program itself might only be a few kilobytes or tens of kilobytes in length since it just repeats a simple set of calculations many times. Additional information is required to initialize the simulation, probably on the order of 50 bytes (400 bits) per object, enough to encode position and velocity in three dimensions at 64-bit precision (six 64-bit values come to 48 bytes). Simulating the orbits of the 1,000 most significant objects in the solar system would require less than 100 kilobytes for the program and its starting conditions. Not bad.
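Here is a compact sketch of such a simulation in pure Python. It uses the velocity Verlet (leapfrog) integrator, a common choice but our own. Units are astronomical units, years and solar masses, and the two-body initial conditions are illustrative. It also packs one body's state into six 64-bit floats to confirm the roughly 50-byte figure.

```python
import math
import struct

G = 4 * math.pi ** 2   # AU^3 / (solar mass * year^2)

def accelerations(positions, masses):
    """Sum the gravitational pull of every other body on each body (O(N^2))."""
    acc = [[0.0, 0.0, 0.0] for _ in positions]
    for i, p_i in enumerate(positions):
        for j, p_j in enumerate(positions):
            if i == j:
                continue
            d = [p_j[k] - p_i[k] for k in range(3)]
            r = math.sqrt(sum(c * c for c in d))
            f = G * masses[j] / r ** 3
            for k in range(3):
                acc[i][k] += f * d[k]
    return acc

def simulate(positions, velocities, masses, dt, steps):
    """Advance the system with the velocity Verlet (leapfrog) integrator."""
    pos = [list(p) for p in positions]
    vel = [list(v) for v in velocities]
    acc = accelerations(pos, masses)
    for _ in range(steps):
        for i in range(len(pos)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * acc[i][k]   # half kick
                pos[i][k] += dt * vel[i][k]         # drift
        acc = accelerations(pos, masses)
        for i in range(len(pos)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * acc[i][k]   # second half kick
    return pos, vel

# Illustrative initial conditions: a Sun and an Earth-like planet on a circular 1 AU orbit.
masses = [1.0, 3.0e-6]
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
velocities = [(0.0, 0.0, 0.0), (0.0, 2 * math.pi, 0.0)]

state_bytes = struct.pack("<6d", *positions[1], *velocities[1])
print(len(state_bytes), "bytes of initial state per body")

pos, vel = simulate(positions, velocities, masses, dt=0.001, steps=1000)
print(pos[1])  # back near (1, 0, 0) after one simulated year
```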

This is just scratching the surface of what can be done with programs. Their degree of sophistication is really only limited by the creativity of the sender, who we can probably assume has a lot more experience with computing than we do. We are just now exploring new approaches to machine learning, and have already succeeded at creating narrow AIs that exceed human capabilities in specialized tasks. We don’t know yet if generally intelligent systems are possible to build, but an advanced civilization that has had eons to explore this might have hit on ways to build AIs that are better and more computationally efficient than our state of the art. If that’s the case, it’s possible the transmission itself may be a form of intelligence.

How would we go about parsing this type of information, and who would be involved? Unlike the signal detection effort, which is the province of a small number of astronomers and subject experts, the process of analyzing and comprehending the contents of the transmission will be open to anyone with an Internet connection and a hypothesis to test. One of the interesting things about programming languages is that many of the most popular languages were created by sole contributors, like Guido van Rossum, the creator of Python, or by small teams working within larger companies. The implication is that the most important contributions may come from people and small teams who are not involved in SETI at all.

For an example of a fully worked-out system, Paul Fitzpatrick, then with MIT CSAIL, created CosmicOS, which details the ideas explored in this article and more. With CosmicOS, he builds a Turing-complete programming language based on just four basic symbols: 0 and 1, plus the equivalent of open and close parentheses.
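To get a feel for how far four symbols go, this sketch parses a stream of `0`, `1`, `(` and `)` into nested lists, reading runs of digits as binary numbers. This is our own simplified convention, not CosmicOS's actual encoding. The point is only that nesting plus binary numbers already gives arbitrary data structures, including ones that can represent code.

```python
def parse_four_symbols(stream: str):
    """Parse a stream of 0, 1, ( and ) into nested lists of integers."""
    stack = [[]]
    number = ""
    for symbol in stream:
        if symbol in "01":
            number += symbol
            continue
        if number:                       # a run of digits just ended
            stack[-1].append(int(number, 2))
            number = ""
        if symbol == "(":
            stack.append([])
        elif symbol == ")":
            if len(stack) == 1:
                raise ValueError("unbalanced ')'")
            inner = stack.pop()
            stack[-1].append(inner)
        else:
            raise ValueError(f"unexpected symbol {symbol!r}")
    if number:
        stack[-1].append(int(number, 2))
    if len(stack) != 1:
        raise ValueError("unbalanced '('")
    return stack[0]

print(parse_four_symbols("(11(101)(1000))"))  # [[3, [5], [8]]]
```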

There are risks and ethical considerations to ponder as well. In terms of risk, we may be able to run programs but not understand their inner workings or purpose. Already this is a problem with narrow AIs we have built. They learn from sets of examples instead of scripted instructions. Because of this they behave like black boxes. This poses a problem because an outside observer has no way of predicting how the AI will respond to different scenarios (one reason I don’t trust the autopilot on my Tesla car). In the case of a generally intelligent AI of extraterrestrial provenance, it goes without saying that we should be cautious in where we allow it to run.

There are ethical considerations as well. Suppose the transmission includes generally intelligent programs? Should they be considered a form of artificial life or consciousness? How would we know for sure? Should terminating their operation be considered the equivalent of murder, or something else? This idea may seem far-fetched, but it is worthwhile to think about issues like this before a detection event.

https://en.wikipedia.org/wiki/A_for_Andromeda

If an ET signal would allow us to build a supercomputer and create Julie Christie, then I am all for it, and damn the risks! (Pity most of the episodes of the original BBC series of A for Andromeda are lost with only some images remaining with the script. Same with the lost episodes of the first Quatermass season.)

So… basically you’re saying that if we receive a message it could be an alien computer virus? Thanks for that. Now we’ll have activists sabotaging radio telescopes to “save humanity from the alien scourge”. But would they be wrong to think that?

It cannot be a functional virus because it does not use the op codes of our computers. It is just a possible Turing machine once we have worked out what it represents and then translated it to our computer languages.

Computer viruses are only a danger if they can infect other machines through some form of connectivity. Even if we used a black box ML to translate the signal into a program for one of our computers, it could be sandboxed in a computer to prevent escape.

So no danger as long as the work is contained, which would suggest that crowd sourcing and citizen science be restricted from this activity.

Suppose the signal contained the information to construct a real virus that was deadly to humans or all life on Earth. Again, we do not need to build that virus initially. If we do, then it will be done in the highest-level containment facilities we have to ensure it does not get out into the wild.

In both cases, Murphy’s law could operate and humans could deliberately evade the containment practices for various motivations. That is possibly the real danger, bad actors deliberately causing a problem by their actions. It would be the 1950s equivalent of the nuclear spies providing information to the Russians for what they saw as noble and rational reasons. More recently we saw the deliberate spread of anthrax in 2001 by a scientist at a US bioweapons facility for reasons unknown.

Of all the possible existential risks facing humans, an alien signal containing a means for our own destruction is very low on my probability list, especially as we have no idea whether there will ever be any signal, benign or otherwise.

If the signal sends both uncompressed and compressed content, we could always decide to just avoid the compressed data. If the data is only compressed it seems unlikely that we could decode it in such a way that it would be a danger to us. Most likely it would remain a mystery. We probably wouldn’t even get as far as the scientists in Lem’s “His Master’s Voice” who managed to extract 2 very different forms of content from a signal.

If you like the idea of messages that contain more than just raw data, it’s worth checking out Rian Hughes’ book “XX: A Novel, Graphic”. The messages contain the aliens themselves.

Thanks for the recommendation. It seems to be larger than a Neal Stephenson! I look forward to reading it.

For anyone who likes Anathem, there are some very slight similarities in the topics being tackled. Very slight!

It’s actually not as long to read as the page count suggests, because the book relies heavily on some creative typography.

It’s also a damn weird book, frankly. But I enjoyed it!

As you know, Pat, so did I! A favorite here, and as I recall, you’ve read it more than once.

I’ve actually lost count of how many times I’ve read it now. At least seven!

Still my favorite novel.

To clarify: I mean Anathem is my favorite novel, that I’ve read multiple times. “XX” I have not read multiple times. :-)

The details are lost in my memory but a Piers Anthony novel involved an alien message in which the true meaning was contained in the interference pattern between the incoming and reflected signal IIRC. Vague memories include information on how to decompose human bodies into liquid form to allow ultra-high acceleration with said liquid reconstructed back into human form via a weird fish creature swimming in the pool and morphing back into the original human with intact memories. Anthony had an unsurpassed imagination in the hard Sci-Fi realm.

The novel you’re thinking of is “Macroscope”, which is amazing. Piers Anthony also did some great short SF stories. I agree that his imagination was unsurpassed.

“Macrons” were a particle derived from the interaction of photons and gravitons (or something like that, I’m thinking of the definition actually given in the conversation between two of the characters).

The macroscope was built aboard a space station, employing a huge crystal to receive the macrons. It could deliver life-sized, high definition images of scenes from anywhere in the Galaxy, subject to some limitations.

But artificial signals could also be sent. Those signals (apparently using something like Venn diagrams) had multiple levels of interpretation, and therein lay the trap: a sufficiently intelligent person could actually parse messages that would destroy their mind, whereas a duller person would miss them. A sophisticated way to attack.

But another signal was the “Traveller”: it was this signal that could literally decompose a person into a liquid and reconstitute them at the other end of the trip. The stream-of-consciousness passage about being reconstituted via evolutionary stages, including the fish, is etched in my mind: “adrift on a continent of bone”, “how to think, without a brain”, etc.

This complex novel has a lot more in it: KII/III technology, love, escapades, politics, science, even a mystery a bit like “Zen and the Art of Motorcycle Maintenance”. But I will refrain from spoilers.

It’s not like it impressed me or anything :-).

Thanks! The novel was truly stunning in its sophistication and scope.

I dunno…

I worked as a professional scientific/engineering programmer for over 13 years, in a variety of computer languages, writing code to support astrometry, image processing, remote sensing, X-ray diffractometry, automated cartography, Geographic Information Systems and celestial navigation applications. I often had to struggle maintaining and debugging code I or my colleagues had written, sometimes only recently. I don’t think plunging into alien computer software written in unknown languages and developed on alien hardware is going to be possible. Even if they have deliberately designed it so we can decipher it.

What if they program in COBOL?

I think there are two very different mindsets when trying to understand programs.

There’s the normal one that you’ve described (and I expect all programmers have experienced!) where you’re looking at source code which you are supposed to be able to understand.

Then there’s the other mindset where you’re trying to work out what a program is doing from scratch, often without any source code at all. It may even have been deliberately designed to be difficult to understand, using techniques to frustrate reverse engineering tools. Malware does this a lot. And yet people do crack this stuff all the time. It’s a lot harder, and it takes a particular type of person (mostly exceptionally patient!), but it is commonly done.

If it’s possible for an alien program to run, then I’m sure eventually people will figure it out. I can’t imagine a reverse engineering challenge that would be more motivational. It’s like the ultimate Capture The Flag!

COBOL? I thought they were supposed to be an advanced intelligence! Actually, it may make sense as COBOL was supposed to be self-documenting.

My computer experience was limited to the 70’s and early 80’s so COBOL was part of my experience along with FORTRAN, a little APL and a fair amount of assembly language. Those were the days with punch cards, hand-wired computers and magnetic core memories.

I hear you. I have enough trouble fixing my own code after a few months. But every language I have used, including the assembly I dabbled in, is far more complex than it needs to be. All ET needs to do is define a minimal Turing machine and explain it. The simplest have fewer than 10 symbols, each doing something very simple. They are not fast, but as long as they can decompress a compressed bitstream into a bitmap, or even define an AI, by processing a signal stream, then I see no inherent reason why we could not build very simple hardware implementing the Turing machine to process the rest of the signal stream, including the software and content. Think of Lisp’s CAR and CDR, which can be combined with parentheses to form the very core of Lisp functionality.

If ET, for some strange reason, coded in COBOL (just using different symbolics), then there will be some very happy ol’ timers being paid top dollar to work on the signal. ;)

Or they could define everything from the ground up using a λ calculus, if they want to Curry favor with our computer scientists (no (pun (intended))).
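For the curious: in the λ calculus even numbers can be built from nothing but one-argument functions. This sketch shows Church numerals using Python lambdas. The helper `to_int` is our own, added only to display results with ordinary integers.

```python
# Church numerals: the number n means "apply f to x, n times".
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))
mul = lambda m: lambda n: lambda f: m(n(f))

def to_int(n):
    """Decode a Church numeral by counting how many times f gets applied."""
    return n(lambda k: k + 1)(0)

one = succ(zero)
two = succ(one)
three = add(one)(two)
print(to_int(mul(three)(two)))  # 6
```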

There are a number of ways one can define a programming language or substrate. As Pat points out, they could go with the minimum instruction set needed for a Turing complete system, or they could use a larger, more abstract instruction set and assume the receiver can work out how to build an interpreter for it. Either approach is valid.

The good news is that large numbers of people will be able to participate in the process. Some may be part of large research teams, while others may be individual contributors. As I noted in the article, many of the most popular languages in use today were the work of individuals or small teams. So I would not be surprised if the most important contributions come from people who are presently not involved in SETI at all.

what defines ETI? is it assumed that ET will use a programming construct… like we do? what if ET does not do programming? what if ET’s tech evolved in a manner exclusive of programming?

what if in ET’s history programming was done … but that was ancient history – ET no longer programs. It might be analogous to some technology that defined primitive man – such as making flint tools … but do we do that today?

here is a possibility – what if ET has detected “us”, studied our electro-magnetic leakage into space and using that “leakage”, resend to us a targeted message that is spliced and stitched together with words, images, and other stuff that we generated … this is a message that we could then readily understand..

and be totally frightened of

ET still has to use algorithms in some way, so there must be some substrate and methodology to do that. Despite the fantasy of the Mentats in Dune, biology cannot compare with the speed and flexibility of computers. No brain is going to be able to work out large computational problems. AFAICS, neural programming that is all the rage these days for ML can do only the most limited algorithmic computation, not even able to learn the rules to sum arbitrary numbers. Symbolic programming seems far more suited to many computational problems and tasks.

The resending of our transmissions in a way that we can readily understand implies that the transmitter is very close: perhaps a Bracewell probe or smart node nearby. A civilization 2000 ly away might be sending us texts in Latin or Chinese. Unless they are within 120 ly (a relative next-door neighbor), there are no EM transmissions from us for them to even receive, assuming they could be detected.

But maybe their best bet is to infiltrate human society, take positions of power, and use that to control us. That is the trope of innumerable sci-fi plots, and apparently the belief of many that we are ruled by “lizard people” (the scenario of the TV series “V”).

It would seem that a universal language or program would be based upon something we and they understand which at its base level would work under bilateral symmetry. Bilateral as in 0 and 1 – left eye right eye – particle antiparticle – wave/particle. Symmetry is universal and aliens would be symmetrical…

What if they have radial symmetry? Radial symmetry evolved first, and might have led to a species with the dominant intelligence.

Larry Niven postulated non-symmetrical intelligent species in his novel “The Mote in God’s Eye”. Biological symmetry is a dead giveaway for naturally evolved creatures, while the non-symmetrical creatures have specialized forms and were, IIRC, artificially created to handle the various functions of a highly advanced civilization.

Well apparently Greg Laughlin has sent “Hello World!” in Malbolge to Gliese 526, not sure what any aliens would make of that.

For the interested, more esoteric programming languages.

Esoteric programming language

Piet looks particularly interesting. ;)

Apparently they’ve received a reply. I wonder what it means?

++++[++++>—+.[–>+++-.—-.–[—>+—.[–>++++++++.++[->+++.+[——>+.–[—>+—.++.————.—[->+++-.++[—>++.>-[—>+-.[—->+++++-.——-.+++++++++++++.—.++++++++.+[—->++++.++[->+++.+++++++++.+++.[–>++++++++.—[->++++.————.—.–[—>+-.++++++[->+++.-[——>+-.+++++++++++.———-.+++++++++++++.—.—–.–.–[—>+-.+[—–>+.——–.[—>+—-..++[->+++++.++++++.–.[->+++-.++[—>++.–[->+++-.[->+++++++.++++++.-[—->++++.—[->++++.————.+.+++++.—.-[++>—+.—[->++++.————.——-.–[—>+-.[—->+—-.–[->+++++.+[—->++++.++[->+++.-[—>+–.——-..[—>+-.-[–>+++++.————.-[—>++–.[—>+—.+++[->+++.++[->++++.+[–>+.—[->+++.++[->+++++.+++++++..++[—–>++.————.[–>+++++++.———–.+++++++++++++.———-.——-.-[++>—–.+++.+[—->++++.–[->+++++.———-.++++++.–[—–>+++.++[->++++..[++>—–.++[->+++.+++.+++++.———-.-[—>+-.+++++[->+++.++++++.—.[–>++++++++.++[->+++.++++++.——-..[—>+—.[—->++++.[->++++.–[—>+—.—–.——–.-.-[—>+-.–[->++++-.+[->++++.+++++++++++.————.–[—>+–.[->++++.+++++++++++++.++++++.———–.++++.————.–[—>+-..+++[->+++.+++++++++++++.[–>++++++++.[->+++++.+++++++++++++.–.———–.[—>+—.+[—->++++.+[->+++++.++++++++++++.++++.[->+++++.+++++++++++..-[—>+.-[—->+++.[->++++.++++++++++++.++++++++.–.———-.+++++.——-.–[->+++-.++[—>++.>-[—>+–.[—–>+++..–[—>+-.–[->+++++.———-.++++++.-[—->++++.—[->++++-.—-..-.[—–>++++.++[—>++.++++++[->++.+++.—.

I know what this means, but only because Pat passed along the information. Let’s see if anyone can decode it, an interesting exercise!

Can you check the source code? 2 interpreters (online and Java) indicate that the source is not valid, possibly missing a “]”.

Alex, Pat just wrote to point out that my software mangled parts of the code, so it can’t be viewed properly. Sorry, Pat! I think he’s going to drop in later to give us the translation.

Yes, as Paul said, the angle brackets are interpreted as HTML formatting, so the code got mangled! I could use the & lt; and & gt; substitutions, but even easier is to replace left bracket with L and right bracket with R. So, here goes, and have fun!

++++[++++R—L]R+.[–R+++L]R-.—-.–[—R+L]R—.[–R+++++L]R+++.++[-R++L]R+.+[——R+L]R.–[—R+L]R—.++.————.—[-R+++L]R-.++[—R++L]R.R-[—R+L]R-.[—-R+++++L]R-.——-.+++++++++++++.—.++++++++.+[—-R+L]R+++.++[-R+++L]R.+++++++++.+++.[–R+++++L]R+++.—[-R++++L]R.————.—.–[—R+L]R-.++++++[-R++L]R+.-[——R+L]R-.+++++++++++.———-.+++++++++++++.—.—–.–.–[—R+L]R-.+[—–R+L]R.——–.[—R+L]R—-..++[-R+++L]R++.++++++.–.[-R+++L]R-.++[—R++L]R.–[-R+++L]R-.[-R+++++++L]R.++++++.-[—-R+L]R+++.—[-R++++L]R.————.+.+++++.—.-[++R—L]R+.—[-R++++L]R.————.——-.–[—R+L]R-.[—-R+L]R—-.–[-R+++++L]R.+[—-R+L]R+++.++[-R+++L]R.-[—R+L]R–.——-..[—R+L]R-.-[–R+++++L]R.————.-[—R++L]R–.[—R+L]R—.+++[-R+++L]R.++[-R+++L]R+.+[–R+L]R.—[-R+++L]R.++[-R++++L]R+.+++++++..++[—–R++L]R.————.[–R+++++++L]R.———–.+++++++++++++.———-.——-.-[++R—–L]R.+++.+[—-R+L]R+++.–[-R++++L]R+.———-.++++++.–[—–R+L]R++.++[-R++++L]R..[++R—L]R–.++[-R+++L]R.+++.+++++.———-.-[—R+L]R-.+++++[-R+++L]R.++++++.—.[–R+++++L]R+++.++[-R+++L]R.++++++.——-..[—R+L]R—.[—-R+L]R+++.[-R+++L]R+.–[—R+L]R—.—–.——–.-.-[—R+L]R-.–[-R++++L]R-.+[-R+++L]R+.+++++++++++.————.–[—R+L]R–.[-R+++L]R+.+++++++++++++.++++++.———–.++++.————.–[—R+L]R-..+++[-R+++L]R.+++++++++++++.[–R+++++L]R+++.[-R+++L]R++.+++++++++++++.–.———–.[—R+L]R—.+[—-R+L]R+++.+[-R+++L]R++.++++++++++++.++++.[-R+++L]R++.+++++++++++..-[—R+L]R.-[—-R+L]R++.[-R+++L]R+.++++++++++++.++++++++.–.———-.+++++.——-.–[-R+++L]R-.++[—R++L]R.R-[—R+L]R–.[—–R+++L]R..–[—R+L]R-.–[-R++++L]R+.———-.++++++.-[—-R+L]R+++.—[-R++++L]R-.—-..-.[—–R++L]R++.++[—R++L]R.++++++[-R++L]R.+++.—.

I have corrected the formatting and the angle brackets (even checked and substituted the html string), but I still get gibberish, and worse the code does not halt after all the outputs are completed. No text or 6×6 image makes any sense.

It looks like Brainfuck, but the output values I get range from -125 to 122, with 36 outputs. However I correct the negative values, they do not match anything usable in ASCII or Unicode, nor any useful colors.

Looking forward to seeing how this is interpreted and what the signal may be.

[For text output, this interpreter site works well:

https://www.tutorialspoint.com/execute_brainfk_online.php

]

It may be some unicode format issue. There should be an equal number of “[” and “]”, but there is only “[“.

Examining the string, this is the character breakdown:

len: 1228

| char | num_in_string |
|---|---|
| – | 46 |
| — | 147 |
| + | 588 |
| [ | 89 |
| – | 95 |
| > | 91 |
| . | 172 |

There are no exact matchups to repair the brackets, and my attempts to reformat the string fail to create a valid string to decode.

More seriously, this shows the problem of decoding an alien signal that must be correctly retrieved without any noise, and then the correct interpretation method used. The number of human esoteric computer languages is large, and growing, so there must be some clue as to how to decode the signal stream and then create an interpreter to run the code. “Hard” doesn’t even begin to indicate the difficulties. Brainfuck and other minimal-symbol languages that are Turing complete should potentially be the easiest to understand and build interpreters for, and yet we see how a simple mangling changes things, and this is before noise is added. A Brainfuck “Hello World” needs 106 symbols:

`++++++++[>++++[>++>+++>+++>+<<<<-]>+>+>->>+[<]<-]>>.>---.+++++++..+++.>>.<-.<.+++.------.--------.>>+.>++.`

The Brainfuck interpreter needs to operate on hardware that humans use, as it is not in any way “universal”.
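For anyone who wants to experiment, here is a minimal Brainfuck interpreter sketch in Python. Design choices such as a 30,000-cell tape, 8-bit wrapping cells and a step limit are common conventions that we assume here. It matches all brackets before running, so a mangled program like the one above is rejected with an error instead of silently misbehaving. Note that it ignores every non-command character, so typographic dashes simply vanish, which is exactly how corruption can slip through unnoticed.

```python
def run_brainfuck(code: str, input_bytes: bytes = b"", max_steps: int = 10_000_000) -> str:
    """Run a Brainfuck program and return its output as a string."""
    program = [c for c in code if c in "+-<>[].,"]   # everything else is ignored

    # Match brackets up front so a mangled program is rejected early.
    jump, stack = {}, []
    for i, c in enumerate(program):
        if c == "[":
            stack.append(i)
        elif c == "]":
            if not stack:
                raise SyntaxError(f"unmatched ']' at command {i}")
            j = stack.pop()
            jump[i], jump[j] = j, i
    if stack:
        raise SyntaxError(f"unmatched '[' at command {stack[-1]}")

    tape, ptr, pc, steps = [0] * 30000, 0, 0, 0
    out, inp = [], list(input_bytes)
    while pc < len(program):
        c = program[pc]
        if c == "+":
            tape[ptr] = (tape[ptr] + 1) % 256        # 8-bit cells wrap around
        elif c == "-":
            tape[ptr] = (tape[ptr] - 1) % 256
        elif c == ">":
            ptr += 1
            if ptr == len(tape):
                raise IndexError("moved past the end of the tape")
        elif c == "<":
            ptr -= 1
            if ptr < 0:
                raise IndexError("moved left of the first cell")
        elif c == ".":
            out.append(chr(tape[ptr]))
        elif c == ",":
            tape[ptr] = inp.pop(0) if inp else 0
        elif c == "[" and tape[ptr] == 0:
            pc = jump[pc]                            # skip past the matching ']'
        elif c == "]" and tape[ptr] != 0:
            pc = jump[pc]                            # loop back to the matching '['
        pc += 1
        steps += 1
        if steps > max_steps:
            raise RuntimeError("step limit reached; the program may not halt")
    return "".join(out)

HELLO = ("++++++++[>++++[>++>+++>+++>+<<<<-]>+>+>->>+[<]<-]"
         ">>.>---.+++++++..+++.>>.<-.<.+++.------.--------.>>+.>++.")
print(run_brainfuck(HELLO), end="")  # Hello World!
```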

Wolfram’s Rule 110 is Turing complete, although I have no idea how you build a tape with a particular sequence of bits using the rule to create an program. This paper Universality in Elementary Cellular Automata explains how it works, in principle. ;)
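The update rule itself is tiny, which is part of its appeal. This sketch shows only one step of Rule 110 (with fixed zero cells beyond both edges, our own boundary choice). It does not attempt the elaborate construction needed to make the automaton carry out a chosen computation.

```python
def rule110_step(cells):
    """One update of Rule 110: each new cell is bit (4*left + 2*centre + right) of 110."""
    padded = [0] + cells + [0]
    return [(110 >> (4 * padded[i - 1] + 2 * padded[i] + padded[i + 1])) & 1
            for i in range(1, len(padded) - 1)]

row = [0] * 31 + [1]
for _ in range(16):
    print("".join("#" if c else "." for c in row))
    row = rule110_step(row)
```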

As you’ve rightly said, while this is a bit of fun, there is a serious point here about corruption, and how symbols can be misinterpreted. Since posting the update, I’ve noticed another corruption which changes strings of hyphens into em-dashes and en-dashes! However, that corruption is recoverable, so it just adds a layer of complexity.

I’ll let people have a go at decoding this (slightly harder than intended!) challenge, and then I’ll post the translation.

For anyone who’s still playing, here’s the output of the message:

“Dear Earth. Thanks for the Malbolge message. You think that’s funny, huh? Well, perhaps you’ll find our fleet armed with antimatter bombs equally amusing. See you soon. LOL”

This has been a fascinating article and comment thread, and I’m very much looking forward to reading Brian’s book.

Interesting. Even using just the “Dear Earth” substring different Brainfuck encoders produced different encoded strings! How that can be so non-deterministic is just wild. I suspect that the posted encoded string was not only somewhat corrupted, but that it may even have needed a specific encoder to make it work.

The only consistency I could find in the posted string was one “.” output symbol per character, i.e. the number of “.” symbols equals the length of the output string (172).
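One reason different encoders disagree is that many different programs print the same text, and each encoder picks its own strategy. The hypothetical `naive_encode` below uses about the simplest one: a single cell stepped up or down to each character's code, then printed. With no loops, every “.” runs exactly once, so the count of “.” equals the output length; a “.” inside a loop can print many characters, so that equality does not hold for every program. The sketch assumes single-byte text.

```python
def naive_encode(text: str) -> str:
    """Loop-free, single-cell Brainfuck encoder (one hypothetical strategy).

    For each character, step the cell to the character's code by the shorter
    route ("+" upward or "-" downward, wrapping mod 256), then print it.
    """
    parts = []
    current = 0
    for ch in text:
        target = ord(ch)
        if target > 255:
            raise ValueError(f"{ch!r} is not a single-byte character")
        up = (target - current) % 256
        down = (current - target) % 256
        parts.append("+" * up if up <= down else "-" * down)
        parts.append(".")
        current = target
    return "".join(parts)


def run_straight_line(program: str) -> str:
    """Run a loop-free, single-cell program made only of +, - and ."""
    cell, out = 0, []
    for ch in program:
        if ch == "+":
            cell = (cell + 1) % 256
        elif ch == "-":
            cell = (cell - 1) % 256
        elif ch == ".":
            out.append(chr(cell))
    return "".join(out)


message = "Dear Earth."
code = naive_encode(message)
print(len(code), code.count("."), run_straight_line(code) == message)
```

Encoders that use loops to multiply values produce much shorter, and completely different, programs for the same text.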

The Java code to decode the string could not correctly interpret any of the encoded strings generated by the various online Brainfuck encoders either, which makes me think there is some inconsistency in how these strings are formatted for HTML output.

My lesson from this is that I probably won’t be trying any citizen science decoding alien signals unless they are really simple!

One interesting point about Brainfuck as an encoding is that it uses just 8 symbols, which works well as a 3-bit binary encoding. However, it would probably work better encoded like Morse code: long and short pulses, separated by gaps with no signal. For digital encoding, this could be represented in a number of ways, such as pulse lengths or numbers of pulses.
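The 3-bit mapping is easy to sketch. The order of commands in `COMMANDS` is an arbitrary assumption (any ordering works if both ends agree), and the last lines illustrate the “frame shift” fragility: dropping a single bit silently shifts every later 3-bit frame, because every 3-bit pattern is a valid command.

```python
COMMANDS = "+-<>[].,"  # assumed order; sender and receiver must agree on it
TO_BITS = {c: format(i, "03b") for i, c in enumerate(COMMANDS)}
FROM_BITS = {bits: c for c, bits in TO_BITS.items()}


def to_bitstream(program: str) -> str:
    """Encode each Brainfuck command as 3 bits; other characters are dropped."""
    return "".join(TO_BITS[c] for c in program if c in TO_BITS)


def from_bitstream(bits: str) -> str:
    """Decode consecutive 3-bit frames back into commands."""
    if len(bits) % 3:
        raise ValueError("bitstream length is not a multiple of 3")
    return "".join(FROM_BITS[bits[i:i + 3]] for i in range(0, len(bits), 3))


program = "++[>+<-]>."
bits = to_bitstream(program)
print(bits, from_bitstream(bits) == program)

# Lose one bit (and pad the end): every later frame is read out of step.
shifted = bits[:4] + bits[5:] + "0"
print(program, "->", from_bitstream(shifted))
```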

I personally would prefer a system more like DNA information storage. As a reasonable possible format, each nucleotide base would be represented by 2 bits, a word would be four 2-bit letters separated by spacers, and words would be separated by longer spacers, just as in Morse code. This prevents “frame shifts”, so that the code is read correctly and errors do not propagate. Unlike in biology, we do not want the message to change, as even small changes bring no advantage; there is no evolution to drive.
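A rough sketch of that framing idea, with every detail assumed for illustration: the base-to-bits mapping, one byte per four-base word, and the strings " " and " | " standing in for the short and long gaps of a real pulse train. Because each word is bounded by long spacers, a lost base damages only its own word instead of shifting everything after it.

```python
from typing import List, Optional

BASES = "ACGT"        # assumed mapping: A=00, C=01, G=10, T=11
VALID = set(BASES)
LETTER_GAP = " "      # short spacer between bases
WORD_GAP = " | "      # longer spacer between 4-base words


def encode(data: bytes) -> str:
    """Write each byte as a word of four 2-bit bases separated by spacers."""
    words = []
    for byte in data:
        letters = [BASES[(byte >> shift) & 0b11] for shift in (6, 4, 2, 0)]
        words.append(LETTER_GAP.join(letters))
    return WORD_GAP.join(words)


def decode(signal: str) -> List[Optional[int]]:
    """Decode each word on its own; a damaged word becomes None."""
    values: List[Optional[int]] = []
    for word in signal.split(WORD_GAP):
        letters = word.split(LETTER_GAP)
        if len(letters) != 4 or any(b not in VALID for b in letters):
            values.append(None)  # the error stays inside this word
            continue
        value = 0
        for b in letters:
            value = (value << 2) | BASES.index(b)
        values.append(value)
    return values


signal = encode(b"Hi")
print(signal)  # C A G A | C G G C
damaged = signal.replace("C A G A", "C G A", 1)  # one base lost in transit
print(decode(signal), decode(damaged))  # [72, 105] [None, 105]
```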

I gather the actual method of transmission by our deep space probes uses Manchester encoding to ensure the bits are correctly received. Error-correcting codes for the signal are used to ensure that the signal itself can be corrected should any noise cause a corrupted bit stream to be received.
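The two ideas in that paragraph do different jobs, which a small sketch can separate. Manchester coding guarantees a transition in the middle of every bit so the receiver can recover the clock; a half-bit pair with no transition reveals an error but cannot fix it. Correction comes from a separate code. The textbook Hamming(7,4) code below, which repairs any single flipped bit in a 7-bit block, is swapped in purely for illustration; real deep-space links use much stronger codes such as Reed-Solomon, convolutional, turbo or LDPC codes. The Manchester polarity assumes the IEEE 802.3 convention, and all function names are hypothetical.

```python
from typing import List


def manchester_encode(bits: List[int]) -> List[int]:
    """IEEE 802.3 polarity: 0 -> (high, low), 1 -> (low, high)."""
    return [half for b in bits for half in ((0, 1) if b else (1, 0))]


def manchester_decode(halves: List[int]) -> List[int]:
    """Recover bits; a pair without a mid-bit transition is flagged, not fixed."""
    bits = []
    for i in range(0, len(halves), 2):
        pair = tuple(halves[i:i + 2])
        if pair == (0, 1):
            bits.append(1)
        elif pair == (1, 0):
            bits.append(0)
        else:
            raise ValueError(f"no mid-bit transition in symbol {i // 2}")
    return bits


def hamming74_encode(d: List[int]) -> List[int]:
    """4 data bits -> 7-bit codeword laid out as p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = d
    return [d1 ^ d2 ^ d4, d1 ^ d3 ^ d4, d1, d2 ^ d3 ^ d4, d2, d3, d4]


def hamming74_decode(c: List[int]) -> List[int]:
    """Correct up to one flipped bit, then return the 4 data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # parity over positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # positions 4, 5, 6, 7
    syndrome = s1 + 2 * s2 + 4 * s3  # 1-based position of the bad bit, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
line = manchester_encode(hamming74_encode(data))  # what goes on the wire
received = manchester_decode(line)
received[5] ^= 1  # simulate one codeword bit corrupted by noise
print(hamming74_decode(received) == data)
```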

This is a problem for us if we receive an alien signal as we will not know what error correction encoding they would use. We might have to assume that their signal is as simple as possible and is going to be subject to noise that is not correctable by us.

All this is very similar to the problem with our electronic media. They will not survive a collapse of civilization, as the various storage encoding schemes will be unknown. All we will have are fragments of media holding bits, with no knowledge of how to translate them.

This suggests to me that a physical object or a locally operating alien probe is a far better bet for intentional information transfer. The probe can handle any internal encoding and decoding and make its output very easy for the receiver to understand, such as physical or projected images. (In Lem’s novel Fiasco, cartoons projected onto clouds are used to communicate with the Quintan population.) A dumb artifact might contain images embedded in some medium, reduced in size like our old microfiche and microfilm so that they can be viewed with technologically primitive magnification. The image sets might be as small as microdots, or even smaller and read with microscopes.

Do you ever read PoC||GTFO?

The 0x20 edition has an interesting article titled “Let’s Build a Geniza from the world’s Flash Memory!”, by “Manul Laphroaig”.

You may find it of interest.

https://www.alchemistowl.org/pocorgtfo/pocorgtfo20.pdf

(Some of it is NSFW.)

I well remember Lone Signal when they arrived on the scene in 2013. Big things were expected of them in the METI field. Alas, they never got the funding they wanted and soon disappeared without so much as a goodbye. Very disappointing.

Perhaps those few transmissions they made will be their lasting legacy.

https://en.wikipedia.org/wiki/Lone_Signal

Oh well, they certainly were not the only METI group to declare themselves, only to vanish in short order.

Symmetry is present in the EM radiation the message is sent on, and it is fundamental in physics. You are looking at an order that originated some 13.5 billion years ago: gamma rays to electron/positron pairs and back again. Why make it complicated? The very basis of nature would be the key.

In the news: I’m seeing a report of an ignominious end to the Bussard ramjet: https://phys.org/news/2021-12-science-fiction-revisited-ramjet-propulsion.html I take this as something of a relief – I was never really able to suspend my disbelief about a ship surrounded by giant magnetic fields. We should invent something else involving the laser manipulation of hydrogen atoms between a ship and a distant reflector.

First published in these pages, before its journal appearance:

Crafting the Bussard Ramjet

https://centauri-dreams.org/2021/02/19/crafting-the-bussard-ramjet/

Notes on the Magnetic Ramjet II

https://centauri-dreams.org/2021/07/09/notes-on-the-magnetic-ramjet-ii/

With continued thanks to Peter Schattschneider and Al Jackson.

My apologies – you’re right! The emphasis and conclusion of the recent article I saw were just *very* different. At first glance, prodded by the news report, I was thinking those figures contradicted your previous articles, but they are of course the same. Your table, however, encouraged us to look further down at more achievable notions – perhaps a thrust of only 1/1000 g, with a funnel 1000 times shorter and a 56 km radius. The key intuition-buster there was that the travel time is increased only by the square root of that, because under constant acceleration the distance covered grows with the square of the time. Reaching Proxima in a century would still be a planetary accomplishment, and that device is not so tremendously implausible as interstellar spaceships go. I like it better than launching a thousand nuclear weapons into space or building a massive array of deadly lasers, at least.
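The square-root scaling follows from constant-acceleration kinematics: starting from rest, d = a t²/2, so t = √(2d/a), and cutting a by a factor of 1000 stretches t by √1000, about 32. A rough check under loudly stated assumptions: Newtonian motion (peak speeds here stay below 0.1c, where relativistic corrections are well under 1%), constant thrust, no drag or mass change, and 4.24 light years to Proxima. On those assumptions a 1/1000 g flyby takes roughly 91 years, or about 128 years if the ship must also decelerate to a stop. The function names are hypothetical.

```python
import math

G = 9.80665                       # standard gravity, m/s^2
C = 299_792_458.0                 # speed of light, m/s
LIGHT_YEAR = 9.4607304725808e15   # metres
YEAR = 365.25 * 86_400            # Julian year, seconds


def flyby_time(distance: float, accel: float) -> float:
    """Newtonian time from rest at constant acceleration: d = a t^2 / 2."""
    return math.sqrt(2 * distance / accel)


def rendezvous_time(distance: float, accel: float) -> float:
    """Accelerate over the first half of the trip, decelerate over the second."""
    return 2 * math.sqrt(distance / accel)


d = 4.24 * LIGHT_YEAR             # assumed distance to Proxima Centauri
a = G / 1000                      # 1/1000 g, as in the comment
t_fly, t_stop = flyby_time(d, a), rendezvous_time(d, a)
print(f"flyby: {t_fly / YEAR:.0f} yr, peak {a * t_fly / C:.3f} c")
print(f"rendezvous: {t_stop / YEAR:.0f} yr, peak {a * t_stop / 2 / C:.3f} c")

# Time scales as 1/sqrt(a): 1000x smaller acceleration -> about 31.6x longer trip.
print(flyby_time(d, a) / flyby_time(d, G))
```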

No apologies needed! I just wanted you to see the articles, since the two authors have Centauri Dreams connections and there is good discussion in the comments there.

Beautiful article. Awe-inspiring. Nonetheless, I have a serious doubt: it is all about frequencies, radio or microwave. But we have not been able to pick up any signal, in at least 60 years of observation, from the “cloud people”, the guys who fly around at their free will, who certainly exist and have technology to sell and are in the same direction, the sky. Maybe no one up there or elsewhere uses frequencies to communicate any longer…