A recent paper in Acta Astronautica reminds me that the Mission Concept Report on the Interstellar Probe mission has been available on the team’s website since December. Titled Interstellar Probe: Humanity’s Journey to Interstellar Space, this is the result of lengthy research out of Johns Hopkins Applied Physics Laboratory under the aegis of Ralph McNutt, who has served as principal investigator. I bring the mission concept up now because the new paper draws directly on the report and is essentially an overview for the community of this team’s findings.

We’ve looked extensively at Interstellar Probe in these pages (see, for example, Interstellar Probe: Pushing Beyond Voyager and Assessing the Oberth Maneuver for Interstellar Probe, both from 2021). The work on this mission anticipates the Solar and Space Physics 2023-2032 Decadal Survey, and presents an analysis of what would be the first mission designed from the top down as an interstellar craft. In that sense, it could be seen as a successor to the Voyagers, but one expressly made to probe the local interstellar medium, rather than relying on instruments designed originally for planetary science.

The overview paper is McNutt et al., “Interstellar probe – Destination: Universe!,” a title that recalls (at least to me) A. E. van Vogt’s wonderful collection of short stories of the same name (1952), whose seminal story “Far Centaurus” so keenly captures the ‘wait’ dilemma; i.e., when do you launch when new technologies may pass the craft you’re sending now along the way? In the case of this mission, with a putative launch date at the end of the decade, the question forces us into a useful heuristic: Either we keep building and launching or we sink into stasis, which drives little technological innovation. But what is the pace of such progress?

I say build and fly if at all feasible. Whether this mission, whose charter is basically “[T]o travel as far and as fast as possible with available technology…” gets the green light will be determined by factors such as the response it generates within the heliophysics community, how it fares in the upcoming decadal report, and whether this four-year engineering and science trade study can be implemented in a tight time frame. All that goes to feasibility. It’s hard to argue against it in terms of heliophysics, for what better way to study the Sun than through its interactions with the interstellar medium? And going outside the heliosphere to do so makes it an interstellar mission as well, with all that implies for science return.

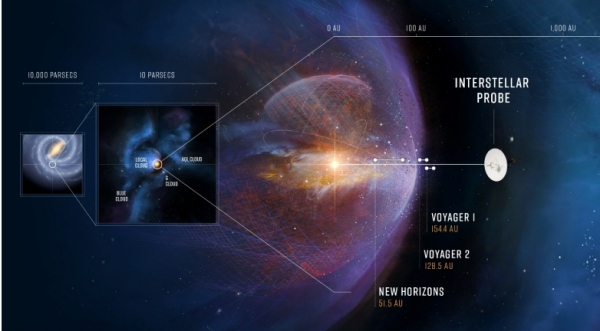

Image: This is Figure 2-1 from the Mission Concept Report. Caption: During the evolution of our solar system, its protective heliosphere has plowed through dramatically different interstellar environments that have shaped our home through incoming interstellar gas, dust, plasma, and galactic cosmic rays. Interstellar Probe on a fast trajectory to the very local interstellar medium (VLISM) would represent a snapshot to understand the current state of our habitable astrosphere in the VLISM, to ultimately be able to understand where our home came from and where it is going. Credit: Johns Hopkins Applied Physics Laboratory.

Crossing through the heliosphere to the “Very Local” interstellar medium (VLISM) is no easy goal, especially when the engineering requirements to meet the decadal survey specifications take us to a launch no later than January of 2030. Other basic requirements include the ability to take and return scientific data from 1000 AU (with all that implies about long-term function in instrumentation), with power levels no more than 600 W at the beginning of the mission and no more than half of that at its end, and a mission working lifetime of 50 years. Bear in mind that our Voyagers, after all these years, are currently at 155 and 129 AU respectively. A successor to Voyager will have to move much faster.
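As a rough check on why a Voyager successor will have to move much faster, the sketch below converts the 1000 AU / 50-year requirement into an average speed and compares it with Voyager 1. It assumes a constant cruise speed (no acceleration phase or gravity assists are modelled) and takes Voyager 1’s current speed as roughly 17 km/s; the function names are illustrative only.

```python
# Rough cruise-speed check for the 1000 AU / 50-year requirement.
# Assumptions: constant average speed (no acceleration phase or gravity
# assists modelled) and Voyager 1 cruising at roughly 17 km/s.

AU_KM = 1.495978707e8          # kilometres per astronomical unit
YEAR_S = 365.25 * 24 * 3600    # seconds per Julian year


def au_per_year_to_km_s(v_au_yr: float) -> float:
    """Convert a speed in AU/yr to km/s."""
    return v_au_yr * AU_KM / YEAR_S


def km_s_to_au_per_year(v_km_s: float) -> float:
    """Convert a speed in km/s to AU/yr."""
    return v_km_s * YEAR_S / AU_KM


def required_average_speed(distance_au: float, years: float) -> float:
    """Average speed in AU/yr needed to cover distance_au in `years`."""
    return distance_au / years


target = required_average_speed(1000, 50)
voyager1 = km_s_to_au_per_year(17.0)

print(f"Required: {target:.1f} AU/yr (~{au_per_year_to_km_s(target):.0f} km/s)")
print(f"Voyager 1 at ~17 km/s: {voyager1:.2f} AU/yr")
print(f"Years for Voyager 1 to cover 1000 AU: ~{1000 / voyager1:.0f}")
```

On these assumptions the requirement works out to about 20 AU/yr (roughly 95 km/s), more than five times Voyager 1’s pace of about 3.6 AU/yr.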

But have a look at the overview, which is available in full text. Dr. McNutt tells me that we can expect a companion paper from Pontus Brandt (likewise at APL) on the science aspects of the larger Mission Concept Report; it is also slated for publication in Acta Astronautica. According to McNutt, the APL contract from NASA’s Heliophysics Division completes on April 30 of this year, so the ball now lands in the court of the Solar and Space Physics Decadal Survey Team. And let me quote his email:

“Reality is never easy. I have to keep reminding people that the final push on a Solar Probe began with a conference in 1977, many studies at JPL through 2001, then studies at APL beginning in late 2001, the Decadal Survey of that era, etc. etc. with Parker Solar Probe launching in August 2018 and in the process now of revolutionizing our understanding of the Sun and its interaction with the interplanetary medium.”

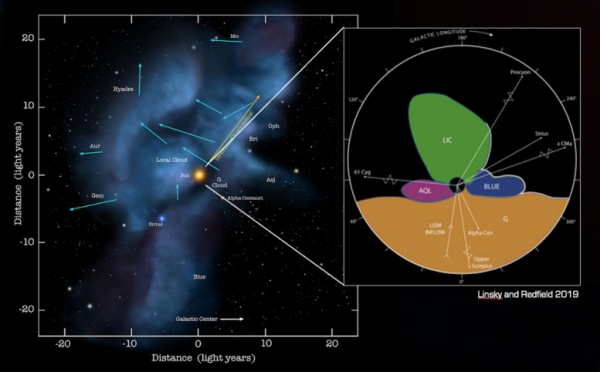

Image: This is Figure 2-8 from the Mission Concept Report. Caption: Recent studies suggest that the Sun is on the path to leave the LIC [Local Interstellar Cloud] and may be already in contact with four interstellar clouds with different properties (Linsky et al., 2019). (Left: Image credit to Adler Planetarium, Frisch, Redfield, Linsky.)

Our society has all too little patience with decades-long processes, much less multi-generational goals. But we do have to understand how long it takes missions to go through the entire sequence before launch. It should be obvious that a 2030 launch date sets up what the authors call a ‘technology horizon’ that forces realism with respect to the physics and material properties at play here. Note this, from the paper:

…the enforcement of the “technology horizon” had two effects: (1) limit thinking to what can “be done now with maybe some ‘minor’ extensions” and (2) rule out low-TRL [technology readiness level] “technologies” which (1) we have no real idea how to develop, e.g., “fusion propulsion” or “gas-core fission”, or which we think we know how to develop but have no means of securing the requisite funds, e.g., NEP [nuclear electric propulsion] (while some might argue with this assertion, the track record to date does not argue otherwise).

Thus the dilemma of interstellar studies. Opportunities to fund and fly missions are sparse, political support is always problematic, and deadlines shape what is possible. We have to be realistic about what we can do now, while also widening our thinking to include the kind of research that will one day pay off in long-term results. Developing and nourishing low-TRL concepts has to be a vital part of all this, which is why think tanks like NASA’s Innovative Advanced Concepts (NIAC) program are essential, and why innovative ideas emerging from the commercial sector must likewise be considered.

Both tracks are vital as we push beyond the Solar System. McNutt refers to a kind of ‘relay race’ that began with Pioneer 10 and has continued through Voyagers 1 and 2. A mission dedicated to flying beyond the heliopause picks up that baton with an infusion of new instrumentation and science results that take us “outward through the heliosphere, heliosheath, and near (but not too near) interstellar space over almost five solar cycles…” Studies like these assess the state of the art (over 100 mission approaches are quantified and evaluated), defining our limits as well as our ambitions.

The paper is McNutt et al., “Interstellar probe – Destination: Universe!” Acta Astronautica Vol. 196 (July 2022), 13-28 (full text).

“and (2) rule out low-TRL [technology readiness level] “technologies” which (1) we have no real idea how to develop, e.g., “fusion propulsion” or “gas-core fission”, or which we think we know how to develop but have no means of securing the requisite funds, e.g., NEP (while some might argue with this assertion, the track record to date does not argue otherwise).”

That is the basic dilemma: Actual interstellar missions require either the use of nuclear energy, or huge power beaming infrastructure with unavoidable strategic military implications. As a culture, we’ve become terminally stupid about all things nuclear, utterly incapable of thinking about it rationally. We can advance the theory, but we’re not permitted to advance the practice.

Until that somehow changes, we’re stuck with marginal non-nuclear technologies that imply absurdly long mission times to the stars.

Yes and no. There are clear issues with nuclear material getting loose in the biosphere. For nuclear power, utopian desires forget or brush away the dangers of malefactors and Murphy’s law. We’ve just seen Russian soldiers either deliberately try to wreck Ukraine’s largest nuclear power station, and either accidentally or deliberately disturb the Chernobyl site. The partial disassembly of Soviet/Russian nuclear warheads was also fraught with problems of theft, and nuclear proliferation.

For nuclear drives in space, the issue is that the fissile material must currently be launched from Earth. There will inevitably be accidents. The supporters claim that even with an accident, the material will not be released from its container. That may or may not be true, but malfeasance by the various actors may precipitate an accident with a release of fissile material. The consequences would be far greater than those feared by some over the Cassini probe’s Earth gravity-assist flyby, with its risk of the RTGs burning up and releasing their plutonium.

One hope is that the development of reliable heavy-lift launchers might build more safety redundancy into the containment systems, making the probability of failure generally acceptable. But who decides that? Our history indicates that we cannot plan for all eventualities, and we tend to be very late in changing rules. (It took nearly 20 years before above-ground and underwater nuclear bomb tests were banned by treaty in 1963, and not all nuclear nations have signed that treaty.)

If the fissile material could be mined and refined on the Moon or elsewhere, then the biosphere pollution issue would be largely moot, although not the security issues.

This leaves fusion as the desired power source. I think we can accept launch accidents with potential hydrogen isotope losses. Personally, in my ignorance, that would be an acceptable means of rocket propulsion, whether electric or not. But, as you say, at this time we cannot engineer any fusion devices to produce net power or to provide some sort of plasma exhaust by fusion. So this remains a future option – maybe.

I am less concerned with the use of phased laser arrays as military weapons. Ground-based arrays will need some way to direct the beams to distant targets on Earth or be restricted to space targets that are in a cone overhead. IMO, their size and fixed location make them easy targets: more paper tigers than real threats. Smaller, mobile, and space-based versions would be weapons but would have far less power than the multi-gigawatt power systems envisaged for the beamed sails of the Breakthrough Starshot proposal.

If the project were to be done now, I would still use chemical rockets. However, my preferred mission architecture would use lower-cost reusable launchers to throw the stages and payload into orbit and assemble them there. This hugely lowers the cost compared to using the [still untested] SLS and only adds the risk of failing to reliably dock the components in orbit. [IMO, this is not much of a risk.] The reduced costs make this a more viable project. The science design should ensure that some science can be done in-system, so as to usefully employ scientists and engineers during the journey of up to 50 years to 1000 AU.

As an enthusiast, however, I would like to see more funding for other propulsion technologies. I personally find it sickening that the US Congress will almost nonchalantly massively increase military spending on its “legions” while starving space science and technology of just a tiny fraction of that increased military spending. We are trapped in “Imperial overstretch” and the result will be the same for the USA as it was for the British Empire which rapidly imploded after WWII and which I think contributed to the concomitant decline of British science and technology.

“We’ve just seen Russian soldiers either deliberately try to wreck Ukraine’s largest nuclear power station”

Nobody saw that. What was seen was Ukrainian soldiers firing at a training and education building nearby, so that, if Russian guards used heavy artillery against the Ukrainian position, they could hit the plant by mistake. They instead used assault rifles, and the Ukrainian soldiers dispersed when reinforcements came.

“and either accidentally or deliberately disturb the Chernobyl site.”

Nobody saw that either. The same workers who were operating the plant when the Russian army arrived continued working there under Russian supervision. Some days later, Russian nuclear operators came to help. No Ukrainian operators from the Ukrainian nuclear agency wanted to replace them, so the Ukrainian team lived in the plant for 4-5 weeks until another Ukrainian replacement team was finally found. Currently there are no Russian soldiers in the plant, only ordinary (private) security guards.

Destroying power plants and energy supply lines is the US Army’s modus operandi, not the Russian Army’s. Unlike during US invasions, electricity, internet, cell phones, TV, … continue working in the Ukraine.

All of that said, let’s go no further into geopolitics and ongoing wars. Our topic remains interstellar, or at least the enabling technologies pointing the way there.

Thank you Paul. I have been around long enough to have good reason to doubt every proclamation/fact of a political nature and most especially as of late. Stick to the science.

It’s a trend I’ve noticed on other specialized sites I occasionally contribute to. Politically motivated trolls try to gain control of online communities of interest that have informed or otherwise influential posters, particularly those with an established reputation and following. The tactic is either to attack any deviation from the political orthodoxy the troll happens to subscribe to, or to establish their own cadre of fellow travelers who can then dominate the discussion and punish dissenters, drive them off, or cause them to self-censor.

The goal is to exploit the prestige or authority of these chat rooms so that lay individuals interested in the topic (say, astrobiology or interstellar communication) will be deluded into thinking the “experts” are in unanimity on whatever partisan issue is being pushed, thereby legitimizing it.

I suspect these trolls are not necessarily single actors with strong personal views, but organized groups systematically trying to promote a specific agenda.

I’m not speaking about any particular political or cultural agenda. I’m just talking about agendas in general. I despise propaganda, even when I happen to agree with it.

The only way to avoid this is for strong moderation to keep the discussion on topic.

“The goal is to exploit the prestige or authority of these chat rooms so that lay individuals interested in the topic (say, astrobiology or interstellar communication) will be deluded into thinking the “experts” are in unanimity on whatever partisan issue is being pushed, thereby legitimizing it

I suspect these trolls are not necessarily single actors with strong personal views, but organized groups systematically trying to promote a specific agenda.”

C’mon, let’s be real: people are raising questions because they question the validity of this or that idea…

Making unverifiable statements of any kind, including political ones are of no use on here. We (hopefully) are on here to talk and share information about space related issues. If politics is in some way involved it should be allowed but making unverifiable claims about events in the Ukraine are not relevant and could be part of a larger misinformation campaign. There are definitely individuals and groups of individuals doing this sort of thing online everywhere and on many, many platforms.

“If politics is in some way involved it should be allowed but making unverifiable claims about events in the Ukraine are not relevant and could be part of a larger misinformation campaign.”

This is what upsets me about this site (and other unrelated sites): the inability to look at the integrated picture. In reality, the competition for resources matters.

@Henry Cordova:

Who are you calling a troll? Maybe yourself? Both Alex Tolley and I are long-time posters here, having started commenting years ago.

“If politics is in some way involved it should be allowed but making unverifiable claims…could be part of a larger misinformation campaign. There are definitely individuals and groups of individuals doing this sort of thing online everywhere and on many, many platforms.”

Gary Wilson April 19, 2022, 17:04

I am in total agreement with Mr Wilson, and I have no quarrel with Mr Tolley. But sinister individuals and groups conducting misinformation campaigns are now, regrettably, a feature of online Terrestrial communications, for the reasons I allude to in my post.

I am not a troll, and I did not respond to your post specifically, although it is clear you were very sensitive to the point I had to make.

I think we’ve kicked this around enough. Let’s get back to the science.

Thanks for the reminder Paul to get back on topic, although I do think this thread served a useful purpose and gave insight about a wide range of viewpoints.

If you must use chemical rocketry, yes, the rocket should be assembled in orbit. It’s not even a close question.

Rockets launched from Earth must be capable of surviving multiple g’s of acceleration, and a great deal of vibration, while passing through a dense atmosphere at high speed. A rocket assembled in space can be built much lighter, with a considerably better mass ratio, and no effort at aerodynamics. This has serious implications for the attainable delta-V.
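The mass-ratio argument can be made concrete with the ideal Tsiolkovsky rocket equation, Δv = v_e ln(m_0/m_f). The sketch below uses an entirely hypothetical stage (100 t of propellant, 10 t of payload, a specific impulse of 450 s) and simply assumes that orbit assembly halves the structural mass from 12 t to 6 t; none of these figures come from the thread or the mission report.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2


def delta_v(isp_s: float, wet_mass: float, dry_mass: float) -> float:
    """Ideal Tsiolkovsky delta-V (m/s) for one stage: ve * ln(m0 / mf)."""
    ve = isp_s * G0  # effective exhaust velocity, m/s
    return ve * math.log(wet_mass / dry_mass)


# Hypothetical stage; the structural masses are assumptions for illustration.
PROPELLANT_T = 100.0
PAYLOAD_T = 10.0
ISP_S = 450.0

for label, structure_t in [("ground-launched structure", 12.0),
                           ("orbit-assembled structure", 6.0)]:
    dry = structure_t + PAYLOAD_T
    wet = dry + PROPELLANT_T
    print(f"{label}: mass ratio {wet / dry:.2f}, "
          f"delta-V {delta_v(ISP_S, wet, dry) / 1000:.2f} km/s")
```

In this made-up case, trimming 6 t of structure lifts the ideal delta-V from roughly 7.6 km/s to roughly 8.7 km/s, which is the kind of gain the comment has in mind.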

As far as the dangers of using nuclear go, the issue here isn’t so much that nuclear power has dangers as that we fail to properly contextualize those dangers and compare them on an even basis with the dangers of OTHER sources of energy.

Remember, no source of energy is risk free.

https://ourworldindata.org/safest-sources-of-energy

Keep in mind that the energy required for chemical rocketry is actually considerably higher than for nuclear rocketry, because of the simply enormous amount of fuel that has to be carried along and also accelerated.

Then, on a deaths-per-TWh basis, you’re faced with the chemical energy being 40-65 times more dangerous than the nuclear energy.

You’re going to kill an awful lot of people to reduce the chance of an unmeasurably small increase in background radiation.

Then there’s the fact that, being so compact, you can go totally overboard in terms of protective measures with nuclear, encapsulating the fuel in a manner to survive intact the worst possible accident.

As an aside, one does not usually calculate the radiation risk for non-nuclear power sources, which is highly distorting; Coal typically contains a significant amount of radioactive isotopes, and you’ll find if you analyze it that the nuclear energy content of coal is on a par with the chemical energy content. But nobody is taking coal wastes and entombing them in concrete for thousands of years… Your average coal plant violates the radiation emission limits placed on nuclear plants!

But this is not the comparison we need to make. We should be comparing the radioactive contamination per unit mass of the payload. A metric ton of U-235 is a lot more radioactive than a metric ton of coal or coal ash. The 1978 crash of the Soviet satellite Kosmos 954 in Canada illustrates the problem.

Heavy-lift launchers could have the capacity to ensure the containment of fissile material will survive the harshest accident, and if so, I concede this would be a good option. But as Heinlein observed, humans are greedy, and therein lies the problem, as this leads to cutting corners and playing the system to increase profits. The history of disasters is littered with human behavior as the cause. Two recent examples come to mind: the defective bolts in the rebuilt SF Bay Bridge, and Kobe Steel falsifying QA records on its steels. Inferior counterfeit components in the supply chain are a major issue today. Bad actors are a fact of life, and I am no longer surprised when a once blue-chip company proves it can no longer be trusted. [Boeing, I am looking at you.] So in theory, I would like to believe solid engineering with wide safety margins would be employed in launching fissile material to space, but my head says that there will be unexpected accidents followed by consequences for some unlucky population.

I note that in a previous post on NTRs, Greg[?] Benford insisted that the fissile material would be launched separately from the nuclear engine and installed in space. But if commenter Antonio’s view were adopted, the whole ship would be launched as a unit, with the likely result that containment would be pared to the minimum. While I don’t think space workers will be building ships in space docks, I would not like to think that spacecraft would be limited to single-launcher lift masses. I want to see spaceships the size of ocean-going ships to transport people and goods within our system and beyond.

The falsification of the advantages of “green power” has given Germany the highest electrical rates in the world despite the reintroduction of coal/peat-burning power plants to mitigate the green power disaster caused by unaffordable energy. How many people will suffer from a collapsing economy? There is little doubt that such a collapse leads to dramatically shortened lifespans through reduced health care and an increase in deaths of despair (suicide, drug and alcohol overdoses, etc.). The loss in human longevity dwarfs any plausible worst-case nuclear disaster (OK, not counting a nuclear war).

I live less than 2 miles away from a large nuclear power station and can see the cooling tower of another NPP across the lake. No fear but I do feel a slight itch from all those neutrinos (just kidding).

Firstly, there is no support for your claim that Germans cannot afford renewable energy. The problem for Germany right now is its dependence on Russian fossil fuels, notably natural gas. So the problem is supply, not cost.

Why are German electricity prices so high, albeit slightly lower than Denmark’s? The answer is not supply costs, but taxes:

Source: Household electricity prices worldwide in September 2021, by select country (in U.S. dollars per kilowatt-hour)

In California, my electricity taxes are negligible. Electricity prices vary widely across the USA: in California, it is about $0.22/kWh, while in Nebraska it is about $0.095/kWh. Hawaii, which imports much of its energy, has the highest rate at $0.37/kWh, about the same as Germany. Source: Table 5.6.A. Average Price of Electricity to Ultimate Customers by End-Use Sector

[Americans love to complain about gasoline prices, but in reality, gasoline taxes are very low compared to taxes across the EU.]

The affordability issue should be at least partly dependent on usage. Per capita, Germans use less electricity than Americans: about 7,000 kWh vs 13,000 kWh. Germany might now demand solar panels on roofs, but better home construction and less extreme weather may help offset the demand.
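The point that affordability depends on usage as well as unit price can be sketched as price multiplied by annual consumption. The figures are the rough ones quoted in this thread (about $0.37/kWh for Germany, $0.22/kWh for California, and per-capita use of about 7,000 vs 13,000 kWh). One assumption is baked in: per-capita consumption covers all sectors, not just households, so pairing it with household prices is only indicative.

```python
# Per-capita electricity spend = unit price x annual consumption.
# Rough figures from this thread; per-capita use spans all sectors,
# so combining it with household prices is an approximation.


def annual_cost(price_per_kwh: float, kwh_per_year: float) -> float:
    """Annual electricity spend in dollars."""
    return price_per_kwh * kwh_per_year


germany = annual_cost(0.37, 7000)
us_at_california_price = annual_cost(0.22, 13000)

print(f"Germany: ${germany:,.0f} per person per year")
print(f"US at California prices: ${us_at_california_price:,.0f} per person per year")
```

On these rough numbers the per-person figures come out similar (about $2,600 vs $2,900), so unit price alone does not settle the affordability question.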

Even if, for the sake of argument, it were shown conclusively that green energy was much more expensive than fossil fuel energy, there are the cost and health issues of a heating planet. German life expectancy is nearly 3 years longer than that of the USA, and life expectancy in the Russian petrostate is 6 years lower than in the US. So I think it is fairer to state that other factors are more important than electricity costs. What we do know is that global heating is going to make some parts of the world unlivable for humans. It already is during the summers in parts of the Middle East and India, as has been documented. Loss of freshwater and damaged economies are going to make the current migrations for asylum and economic improvement look like nothing compared with what is likely to come. The response to migration by some countries, particularly Anglo ones, is already very poor, and Britain is perhaps currently leading in its shameful response, overtaking Australia. The cost of global heating caused by short-sighted actions will dwarf that of converting to renewable energy. If Germany had made more effort to wean itself off Russian gas as the cheapest source of heating and industrial energy, it wouldn’t find itself pitting economic and moral imperatives against each other today.

“But this is not the comparison we need to make. We should be comparing the radioactive contamination per unit mass of the payload.”

No, this is absolutely the comparison we need to make. People dying obtaining the energy are just as dead as people dying of cancer from leaked radiation.

Insisting that only (potential) radiation deaths count is a Green debating tactic to uniquely disadvantage nuclear. Oil field workers’ lives count, too. It’s deaths from ALL sources we must compare.

Don’t give in to anti-nuclear hysteria, compare energy sources objectively.

I cannot be sure, but you seem to be comparing nuclear power with coal combustion supplying the same energy for baseload power generation on Earth. Yes, the sheer amount of coal burned releases radiation over time. Miners’ deaths, however, are a paid, voluntary risk, not an involuntary one.

But when we are talking about localized nuclear accidents, the issue is different. Clearly, someone exploding a bomb that spreads a tonne of coal ash in a city center is less of a problem than the same amount of fissile material, low grade or otherwise. I used the example of Kosmos 954 as a comparable situation. Had it landed in downtown Toronto, Montreal, or Vancouver, it might have made the city center too radioactive to live in safely for some time.

Similarly, after Chernobyl, the town and surrounding area remain off-limits to human habitation.

The other issue is pulse doses versus small doses over a long period. Exposure to all your lifetime x-rays or airline flights in a short period is far more problematic in causing cancer than the same doses spread out over a lifetime. Using the linear relationship of dose and cancer rates may not even be correct for small doses comparable to the natural background.

However, I accept that one’s tolerance for certain risks is not universal.

Launch the nuclear engine with the nozzle pointing up toward the escape tower, for recovery if needed, and assemble in space. NTRs work best with hydrogen, so I might build a one-off SLS with the LOX tank at the bottom and the nuclear stage at the top, terminating in a NERVA-type engine hooked to the hydrogen tank.

On orbit, the SLS engines and the lower LOX tank separate as a unit. All this could perhaps sit atop SuperHeavy in place of Starship, perhaps with only a single RS-68 instead of the usual four RS-25 SSMEs.

I agree that military spending needs to be cut. The F-35 program dwarfs the SLS budget, and SLS is the booster best suited for this interstellar precursor: 15 years to the heliopause.

” The partial disassembly of Soviet/Russian nuclear warheads was also fraught with problems of theft …”.

What theft can you point to? Your source?

Is this sufficient, or too circumstantial?

Loose Nukes

“The Apollo Affair was a 1965 incident in which a US company, Nuclear Materials and Equipment Corporation (NUMEC), in the Pittsburgh suburbs of Apollo and Parks Township, Pennsylvania was investigated for losing 200–600 pounds (91–272 kg) of highly enriched uranium, with suspicions that it had gone to Israel’s nuclear weapons program.”

https://en.wikipedia.org/wiki/The_Apollo_Affair?msclkid=5c56304bc12911eca054529ae92b4e0a

The US was worrying about locking the barn door after the horse had escaped (let loose on PURPOSE). So let’s not get on our high horse about accountability.

Totally agree. Fortunately, in the East they are not so nucleohysterical, although they don’t seem much interested in interstellar missions yet.

“although they don’t seem much interested in interstellar missions yet.”

Well, China is planning an interstellar probe, and it also has a dedicated exoplanet search programme.

https://en.wikipedia.org/wiki/Interstellar_Express

https://www.sciencetimes.com/articles/30782/20210421/chinas-new-space-telescope-300-times-greater-field-view-hubble.htm

“although they don’t seem much interested in interstellar missions yet.”

“Well China is planning an interstellar probe, …”

China’s motivation might be propaganda; not science.

The assumption of using the SLS Block 2 is dubious, for the same reason as their mention of the canceled Ares V. SLS is expected to cost over $1bn per launch, excluding all the extra upper boost stages, the probe itself, and 50 years of human support, which will end with a generation or two of new scientists and technicians at mission control.

With NASA capping Decadal mission costs at $3-5bn, even a single probe might be pushing the limits using SLS. I can understand McNutt pushing the homegrown MSFC ELV solution, but I really doubt that it will still be flying by the time this mission launches. RLVs with similar lift capabilities will be available at a fraction of the cost. I am not even sure why the mission has to be flown as a single stack. Why not launch separate components and mate them in orbit? We have automated docking vehicles today (even the Russian Progress cargo spacecraft do this with ancient technology). Back in the 1950s manned deep space missions envisaged the construction of spaceships in orbit. We have done this with every space station after Skylab. Why not with this interstellar mission? A rethink might be forced on this mission architecture.

I would hope that the probe can do lots of science well inside the solar system. I cannot imagine any scientist twiddling their thumbs and watching their career evaporate while waiting for experiments that will still be running after they retire. They will want to bring on board scientists and engineers yet unborn to complete the mission. But consider how much technology will have advanced by the time the probe has reached its final position 1000 AU out. [Montgomery Scott might be able to use an ancient Macintosh computer and software to demonstrate that transparent aluminum is possible, but this is fantasy.] How many computer jocks can program a 50-year-old mainframe computer from the 1970s? What about computers from the 1950s? Think of all those retired COBOL programmers rehired to fix the year 2000 software problem. [I have visions of the movie “Space Cowboys”, in which NASA has to turn to retired engineer Frank Corvin (Clint Eastwood) because the current engineers haven’t a clue how the old technology he built for Skylab works.]

While I concur with our host that we should build and fly what we can to prevent stasis, for those pursuing that path there is the real risk that their careers will be damaged by newer technologies that accomplish the mission much more quickly. A fleet of beamed micro-sail craft might acquire the same data from many more positions around the heliosphere decades before a single large probe reaches its goal. We cannot know this will be the case, but it is a risk. Les Johnson’s solar sail roadmap posits graphene sails that will reach velocities of 20+ AU/yr. We cannot build them now, but would they be able to take large payloads out to 1000 AU in a time frame similar to the proposed mission, but at a fraction of the cost?
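The risk described here is the ‘wait’ dilemma raised in the post: a later, faster craft can overtake an earlier one. A minimal sketch, assuming constant cruise speeds and ignoring acceleration time, computes the launch year at which a faster craft would only tie the early probe; any earlier launch gets there first. The early probe uses 20 AU/yr, the average implied by reaching 1000 AU in 50 years; the later craft’s speeds are hypothetical, not figures from Les Johnson’s roadmap.

```python
# "Wait calculation" sketch: when does a later, faster craft arrive first?
# Assumptions: constant cruise speeds, no acceleration phase; the later
# craft's speeds are hypothetical.


def arrival_year(launch_year: float, distance_au: float, speed_au_yr: float) -> float:
    """Arrival year for a craft cruising at constant speed."""
    return launch_year + distance_au / speed_au_yr


def latest_overtaking_launch(early_launch: float, early_speed: float,
                             late_speed: float, distance_au: float) -> float:
    """Launch year at which the faster craft ties; launching earlier wins."""
    early_arrival = arrival_year(early_launch, distance_au, early_speed)
    return early_arrival - distance_au / late_speed


DISTANCE_AU = 1000.0
EARLY_LAUNCH, EARLY_SPEED = 2030.0, 20.0   # 1000 AU in 50 years

print(f"Early probe arrives ~{arrival_year(EARLY_LAUNCH, DISTANCE_AU, EARLY_SPEED):.0f}")
for late_speed in (25.0, 40.0):            # hypothetical faster craft
    deadline = latest_overtaking_launch(EARLY_LAUNCH, EARLY_SPEED, late_speed, DISTANCE_AU)
    print(f"A {late_speed:.0f} AU/yr craft must launch before ~{deadline:.0f} to arrive first")
```

For a 2030 launch arriving around 2080, a hypothetical 40 AU/yr craft would have to leave before 2055 to get there first, while one at 25 AU/yr would have to leave before 2040.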

Which leads me to my last point. TRLs are a useful metric to evaluate technology risks. What they cannot do is provide estimates of how fast the TRL ladder can be climbed with appropriate funding. Solar sails were stalled at low TRLs for decades due to lack of funding, and it took the Planetary Society to kickstart that development with their Cosmos-1 sail, JAXA to successfully fly IKAROS, and now there are a number of sails being developed to mature the current state of the art. The future of solar sails depends to some extent on the development of sails with very low areal density that increase performance, with or without beamed power to boost their velocities. How fast that development occurs is dependent on funding, currently a fraction of what is spent on conventional rockets. In contrast, chemical rockets are at the mature end of their development, and therefore only by building bigger rockets with more stages can the final velocities be increased. Brute force over elegance.

When one puts together how distant the science payoff is, the sheer cost of the proposed SLS-based architecture, and the increasingly anti-science position of the public and, consequently, the US legislature, I see this proposal as little more than a long shot.

Use of the SLS has to be totally politically driven, and you’re right, it’s eating the proposed budget alive. Committing to using the SLS basically renders any proposed program unaffordable right at the start.

I have to agree with Brett. The extremely high launch cost of SLS (up to $2 billion per launch, according to the latest government audit) makes it untenable as a high-frequency workhorse for delivering large payloads to space. It will be phased out quietly and without fanfare in the near future. It cannot compete with Starship on launch cost per pound, and as soon as Starship has shown its reliability, the game is over for SLS. This is already known at NASA. The plan to go to the Moon no longer includes the Gateway system. Instead, NASA will initially use SLS to get astronauts into lunar orbit to rendezvous with Starship, which will then land them on the Moon. Once Starship is human-certified for the complete trip, however, SLS will be phased out. It may continue to be used for a couple of years to avoid obvious embarrassment, but the cost per launch is wildly exorbitant and completely unsustainable.

“Back in the 1950s manned deep space missions envisaged the construction of spaceships in orbit. We have done this with every space station after Skylab. Why not with this interstellar mission?”

For the same reason every non-space-station mission in history has used the single stack approach: you can test and fix almost everything you want on the ground before launch, but you can test and fix almost nothing in space unless you live there (and even then, options are very limited). So risks are too high.

Hi Paul

The recent work on thermophotovoltaics that pushes them to 40% efficiency does make me wonder whether RTGs can be pushed to the limit. Raising the efficiency to several times that of current thermoelectrics, and above even Stirling-cycle designs, brings them closer to usefully powering an electric engine for an LISM probe, as well as supplying comms and system power. Of course, the choice of propellant and electric propulsion system is another TRL issue for a 2030 probe, but if Starship launches a booster for interplanetary insertion and a Jupiter Oberth kick, the electric propulsion is best used as a topper for the system once it leaves Jupiter’s sphere of influence. Alternatively, it could be used for deceleration in a mission to intercept an interstellar object.
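To put rough numbers on that, here is a minimal Python sketch comparing the electrical output of one heat source at different conversion efficiencies. The 4,400 W thermal figure is an assumption (roughly the beginning-of-life heat output of a GPHS-RTG), and the thermoelectric and Stirling efficiencies are illustrative round values, not figures from the mission report; only the 40% comes from the comment.

```python
# Sketch: electrical output of one radioisotope heat source under different
# heat-to-electricity conversion efficiencies. All numbers are assumptions:
# ~4,400 W thermal is roughly a GPHS-RTG at beginning of life, and the
# thermoelectric/Stirling efficiencies are illustrative round values.

THERMAL_POWER_W = 4400.0  # assumed thermal output of the heat source, watts

# Hypothetical efficiency table; only the 40% TPV figure comes from the comment.
CONVERSION_EFFICIENCY = {
    "thermoelectric (~6.5%, assumed)": 0.065,
    "Stirling (~25%, assumed)": 0.25,
    "thermophotovoltaic (40%)": 0.40,
}


def electrical_power(thermal_w: float, efficiency: float) -> float:
    """Electrical power (W) delivered for a thermal input and conversion efficiency."""
    return thermal_w * efficiency


if __name__ == "__main__":
    for name, eta in CONVERSION_EFFICIENCY.items():
        print(f"{name:35s} {electrical_power(THERMAL_POWER_W, eta):6.0f} W")
```

Under these assumptions the 40% converter delivers roughly six times the electrical power of the thermoelectric case from the same plutonium inventory.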

Given extremely finite funding opportunities and an increasingly unstable geopolitical climate I would argue for sail propulsion technologies driven by a laser array that is scalable over time to achieve greater and greater distances. I don’t see any point in the near future where we will be launching and assembling nuclear powered drive systems in Earth orbit. It sounds great but isn’t likely at all given the technological and political issues involved. A laser array has some inherent risk if misused for military purposes but we have survived for more than 50 years with thousands of nuclear warheads pointed at each other. Surely one reasonable possibility is a payload of modest size being sent to 1000 AU with the capability of returning information (probably using the sail). The laser array is not being built in isolation. Any large power that could afford to build it would know that thousands of nuclear weapons are still pointed at them.

OT: New METI message designed by researchers at JPL, SETI Institute and other institutions (not sent yet):

https://arxiv.org/abs/2203.04288

At 7 au/year (per the document), how is it supposed to get to 1000 au within a 50-year lifespan? Unless they are aiming at a wormhole at 350 au (not mentioned), the probe will need 143 years to get there, and the remaining power would be much less than 300 W, too little to do anything. Is 1000 au just PR while the real target is 350 au? If so, then I call this misinformation.
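The arithmetic behind those numbers, as a minimal sketch: it assumes a constant 7 au/yr cruise from launch (ignoring the slower early phase, so real times would be somewhat longer) and uses the 87.7-year half-life of plutonium-238 for the decay of the heat source. The helper names are hypothetical.

```python
# Sketch of the travel-time arithmetic for a constant 7 au/yr cruise.
# Assumption: the probe moves at 7 au/yr from launch, ignoring the slower
# early phase, so real travel times would be somewhat longer.

SPEED_AU_PER_YR = 7.0       # peak exit speed from the MCR slide
PU238_HALF_LIFE_YR = 87.7   # half-life of plutonium-238, years


def years_to_reach(distance_au: float, speed_au_per_yr: float) -> float:
    """Years needed to cover distance_au at a constant speed."""
    return distance_au / speed_au_per_yr


def distance_after(years: float, speed_au_per_yr: float) -> float:
    """Distance (au) covered in `years` at a constant speed."""
    return years * speed_au_per_yr


def heat_fraction_remaining(years: float, half_life: float = PU238_HALF_LIFE_YR) -> float:
    """Fraction of the initial Pu-238 decay heat left after `years`.

    Electrical output falls faster than this, because the converters
    themselves also degrade; that effect is not modeled here.
    """
    return 0.5 ** (years / half_life)


if __name__ == "__main__":
    t = years_to_reach(1000.0, SPEED_AU_PER_YR)
    print(f"Time to 1000 au: {t:.0f} years")
    print(f"Distance after 50 years: {distance_after(50.0, SPEED_AU_PER_YR):.0f} au")
    print(f"Heat left at 50 years: {heat_fraction_remaining(50.0):.0%}")
    print(f"Heat left at 1000 au: {heat_fraction_remaining(t):.0%}")
```

On these assumptions, about a third of the original decay heat would remain by the time the probe reached 1000 au, before counting converter degradation.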

The document doesn’t specify a velocity, AFAICS, just options of energy vs payload using the various SLS block 1 configurations.

If 20 AU/yr ≈ 95 km/s, then the energy, taken here as $MV^2 = M \times 95^2$ (with $M$ the payload mass), must lie on one of the curves in Figure 5.

For $M = 0.05$ t and $V = 95$ km/s:

$MV^2 = 0.05 \times 95^2 \approx 450$.

If this is the correct interpretation, the claim is that they can throw a 50 kg payload out of the solar system at 95 km/s, or 20 AU/yr, using the SLS Block 1B, Centaur D, and STAR 48BV (whatever that is) configuration. All this could be done before the end of this decade.
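For checking the conversions, a small sketch using the IAU astronomical unit and a Julian year. `commenter_energy_metric` is a hypothetical name for the $MV^2$ product used above; it is not kinetic energy (that would be $\tfrac{1}{2}MV^2$), and whether it maps onto the curves in Figure 5 is the comment's interpretation, which this sketch does not check.

```python
# Unit conversions for the figures in the comment above.
AU_KM = 149_597_870.7             # IAU astronomical unit, km
JULIAN_YEAR_S = 365.25 * 86_400   # Julian year, seconds


def au_per_year_to_km_s(v_au_per_yr: float) -> float:
    """Convert a speed in AU/yr to km/s."""
    return v_au_per_yr * AU_KM / JULIAN_YEAR_S


def commenter_energy_metric(payload_t: float, v_km_s: float) -> float:
    """The M*V^2 product from the comment, in tonnes*(km/s)^2.

    Hypothetical helper: this is not kinetic energy (0.5*M*V^2) and is not
    checked against the report's Figure 5.
    """
    return payload_t * v_km_s ** 2


if __name__ == "__main__":
    for v in (7.0, 20.0):
        print(f"{v:4.1f} AU/yr = {au_per_year_to_km_s(v):.1f} km/s")
    print(f"M*V^2 = {commenter_energy_metric(0.05, au_per_year_to_km_s(20.0)):.0f}")
```

One AU/yr works out to about 4.74 km/s, so 20 AU/yr is about 94.8 km/s and the document's 7 au/yr is about 33 km/s.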

A proposal to further the technology required to produce a thermal solar rocket for near interstellar exploration. Another propulsion concept that has advantages over both beamed sail and nuclear propulsion.

https://www.nasa.gov/directorates/spacetech/niac/2022/Combined_Heat_Shield_and_Solar_Thermal_Propulsion_System/

You might be interested to read the March 3rd, 2022 CD post:

Engineering the Oberth Maneuver

Regarding starship propulsion:

Thunderstorms make antimatter

https://science.nasa.gov/science-news/science-at-nasa/2011/11jan_antimatter/

And there’s a place in Venezuela where a combination of geographic features makes lightning storms happen almost every day of the year.

https://en.wikipedia.org/wiki/Catatumbo_lightning

Maybe there could be antimatter manufacturing plants on Jupiter’s moons, especially Io.

Lots of natural processes generate anti-matter particles. It’s a matter of how much, what type and how they can be harvested. From everything I’ve seen, it is probably far more effective to manufacture anti-matter. That isn’t saying much since it will still be woefully inadequate.

My question is how long any generated antimatter lasts during a lightning storm. Doesn’t it just get mostly annihilated by other matter quite quickly? (Not all, as it can accumulate in the magnetosphere.)

It has been suggested that the best place to harvest antimatter is around Saturn.

Capturing production vs mining accumulated material is always a balance.

An analogy is using the biomass produced by sunlight. We could mow grass daily to use as an energy source, but historically it was far easier to cut trees for wood after decades of accumulation, and even better to mine the millions of years of accumulated biomass as coal (and later oil and gas) to power our industrial revolution. Harvesting crops every season is best for food, as we cannot eat coal (yet).

Anti-matter may be different as accessibility may be key. We have no mining craft available that can get to Saturn and return. But we might have such craft able to mine the Earth’s magnetosphere much sooner if anti-matter energy is a viable technology.

“Maybe there could be antimatter manufacturing plants on Jupiter’s moons, especially Io.”

http://csep10.phys.utk.edu/OJTA2dev/ojta/c1c/jupiter/magnetic/io_tl.html

“As Io moves around its orbit in the strong magnetic field of Jupiter and through the plasma torus, a huge electrical current is set up between Io and Jupiter in a cylinder of highly concentrated magnetic flux called the Io Flux Tube. The Flux Tube has a power output of about 2 trillion watts, comparable to the amount of all manmade power produced on Earth.”

Yes, it does. On the second slide under BASELINE MISSION CHARACTERISTICS is written:

Launch: 2036
Mass: 860 kg
Peak exit speed: 7.0 au/year

I am not seeing what you are seeing. Can you provide some more context?

URL of the document and the text to search on.

This is in the file “Interstellar Probe: Humanity’s Journey to Interstellar Space” linked at the beginning of your article.

https://interstellarprobe.jhuapl.edu/Interstellar-Probe-MCR.pdf

After the Contents (page vi) there are two graphic slides. Look at the top of the second slide. It is also possible that these slides are for an earlier version of the project. I don’t know.

You are right. Rereading the documents more carefully, I think there is a lot of obfuscation going on to imply that the goal is 1000AU in 50 years, but that is a “problematic” goal and that a 50-year mission only gets us partway there – enough to fulfill the science goals (heliosphere and ISM science).

It is a mission to justify the SLS, but it is very expensive and fails to meet the aspirational goal of 1000 AU within 50 years. I am now wondering whether the dismissal of solar sails using a solar Oberth maneuver (SOM) and of NEP is biased to make the SLS the preferred solution.

Beamed sails and ultralow areal density solar sails are possibly looking like better bets, even if the technology is at lower TRLs. For beamed sails, the cost is then transferred from a single mission and ELV to the multi-use phased array. Ultralow areal density solar sails with a SOM require materials development. In “Solar Sails” by Vulpetti, Johnson, and Matloff, Figure 17.10 shows that a solar sail with an areal density of 1.2 g/m^2 could reach 550 AU in 21.8 years, with a perihelion of 0.151 AU (doable), reaching a cruise velocity of 25.82 AU/yr, i.e. it could reach 1000 AU within 50 years. The questions are how long it would take to achieve this materials development for a practical sail, and how much it would cost to develop, build, and launch such a mission.
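The “within 50 years” conclusion follows from simple arithmetic, sketched below under the assumption that the sail is already coasting at its 25.82 AU/yr cruise speed when it passes 550 AU. The function name is hypothetical.

```python
def years_to_distance(target_au: float, checkpoint_au: float,
                      checkpoint_yr: float, cruise_au_per_yr: float) -> float:
    """Years to reach target_au, assuming the sail passes checkpoint_au at
    checkpoint_yr and then coasts at a constant cruise speed."""
    return checkpoint_yr + (target_au - checkpoint_au) / cruise_au_per_yr


if __name__ == "__main__":
    # Figures quoted from Figure 17.10 of "Solar Sails" in the comment above.
    t = years_to_distance(1000.0, 550.0, 21.8, 25.82)
    print(f"1000 AU reached after about {t:.1f} years")
```

That comes to roughly 39 years, leaving about a decade of margin inside a 50-year mission.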

Hi Alex

Launching from 0.005 AU that 0.0012 kg/m^2 sail would get to 142 AU/yr. Assuming the thermal shields can get one to the Photosphere of course, which is surprisingly a technological possibility. Thinner sails might allow higher speeds, but the real trick is slowing down to inspect interesting things at the other end.
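One way to arrive at the 142 AU/yr figure (an assumption about the reasoning; the comment doesn't say) is to scale the 25.82 AU/yr cruise speed by the square root of the perihelion ratio. Because solar gravity and radiation pressure both fall off as $1/r^2$, an idealized sail with the same lightness number on a geometrically similar trajectory has speeds that scale as $r_p^{-1/2}$; a real trajectory starting from Earth will not scale exactly this way.

```python
import math


def scale_cruise_speed(v_ref_au_yr: float, rp_ref_au: float, rp_new_au: float) -> float:
    """Rescale a reference cruise speed to a new perihelion distance.

    Assumes an idealized sail with the same lightness number on a
    geometrically similar trajectory, so speed scales as r_p ** -0.5.
    """
    return v_ref_au_yr * math.sqrt(rp_ref_au / rp_new_au)


if __name__ == "__main__":
    v = scale_cruise_speed(25.82, 0.151, 0.005)
    print(f"Estimated cruise speed from 0.005 AU: {v:.0f} AU/yr")
```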

I doubt that any material sail could skim the photosphere without becoming a puff of ionized gas. But given that possibility, let me address your point about “slowing down to inspect the target”.

At 142 AU/yr, it would take nearly 2000 years to reach Proxima, so that isn’t a target worth worrying about. Yes, it would whiz by any KBO, making any visual record rather difficult.
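The Proxima figure checks out with a quick calculation, assuming a distance of about 4.24 light-years and a constant 142 AU/yr coast with no acceleration or braking phases:

```python
AU_PER_LIGHT_YEAR = 63_241.077  # astronomical units in one light-year
PROXIMA_DISTANCE_LY = 4.24      # approximate distance to Proxima Centauri


def travel_years(distance_ly: float, speed_au_per_yr: float) -> float:
    """Coast time at constant speed, ignoring acceleration and deceleration."""
    return distance_ly * AU_PER_LIGHT_YEAR / speed_au_per_yr


if __name__ == "__main__":
    print(f"{travel_years(PROXIMA_DISTANCE_LY, 142.0):.0f} years to Proxima")
```

The result is a little under 1,900 years.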

But the mission to characterize the medium around the heliosphere, the heliosheath, and the interstellar medium does not require any slowing at all. Indeed, it would be a decent velocity for many of these sails to map out the details of these conditions with in situ data collection. It should also be fine for some telescope work along the SGL, especially if the telescope payload had a good light collection capability (wouldn’t large Fresnel lenses be good for this?). However, I suspect that beamed sails, whether using lasers or microwaves, will prove the better and more versatile propulsion method, allowing different velocities tailored to missions, as well as carefully aligned “string of pearls” sails for communication and serial flybys. The same technology would allow KBO observations as well as very fast ISM sampling and even interstellar flybys by simply altering the acceleration time or strength.

MAY 18, 2022

Engineers investigating NASA’s Voyager 1 telemetry data

by Jet Propulsion Laboratory

https://phys.org/news/2022-05-nasa-voyager-telemetry.html

Why technical manuals and preserving knowledge are so important, especially for missions that last decades and more as will happen when we really start going interstellar…

https://www.businessinsider.com/engineers-turn-to-voyager-decades-old-documents-fix-a-glitch-2022-7

To quote:

Unearthing old spacecraft documents

Voyager 1 was designed and built in the early 1970s, complicating efforts to troubleshoot the spacecraft’s problems.

Though current Voyager engineers have some documentation — or command media, the technical term for the paperwork containing details on the spacecraft’s design and procedures — from those early mission days, other important documents may have been lost or misplaced.

During the first 12 years of the Voyager mission, thousands of engineers worked on the project, according to Dodd. “As they retired in the ’70s and ’80s, there wasn’t a big push to have a project document library. People would take their boxes home to their garage,” Dodd added. In modern missions, NASA keeps more robust records of documentation.

There are some boxes with documents and schematic stored off-site from the Jet Propulsion Laboratory, and Dodd and the rest of Voyager’s handlers can request access to these records. Still, it can be a challenge. “Getting that information requires you to figure out who works in that area on the project,” Dodd said.

For Voyager 1’s latest glitch, mission engineers have had to specifically look for boxes under the names of engineers who helped design the attitude-control system. “It’s a time consuming process,” Dodd said.

NASA Engineers Have Figured Out Why Voyager 1 Was Sending Garbled Data

The space probe left Earth in 1977, and it’s still running—with some hiccups.

By Isaac Schultz

August 30, 2022 at 2:05 PM

Earlier this year, the Voyager 1 spacecraft—over 14 billion miles from Earth—started sending NASA some wacky data. Now, engineers with the space agency have identified and solved the issue, and no, it wasn’t aliens.

The strange data was coming from Voyager 1’s attitude articulation and control system, which is responsible for maintaining the spacecraft’s orientation as it hurtles through interstellar space at about 38,000 miles per hour.

The garbled telemetry data meant that Voyager 1 was communicating information about its location and orientation that didn’t match up with the possible true location and orientation of the spacecraft. Otherwise, the probe was behaving normally, as was its partner-in-crime, Voyager 2. Both spacecraft launched in the summer of 1977, and Voyager 1 is the farthest human-made object in the universe.

“The spacecraft are both almost 45 years old, which is far beyond what the mission planners anticipated. We’re also in interstellar space – a high-radiation environment that no spacecraft have flown in before,” said Suzanne Dodd, Voyager’s project manager, when the issue first emerged.

“A mystery like this is sort of par for the course at this stage of the Voyager mission,” Dodd added.

Full article here:

https://gizmodo.com/voyager-1-telemetry-problem-fixed-1849474513

To quote:

Now, NASA engineers have realized why the attitude articulation and control system was sending out gibberish data. The system began sending the telemetry through a faulty computer aboard Voyager 1, and the computer corrupted the information before it could be read out on Earth.

The Voyager 1 team simply had the spacecraft start sending data to the right computer, correcting the problem. They’re not sure why the system began sending the telemetry into the faulty computer to begin with.

“We’re happy to have the telemetry back,” Dodd said in a NASA JPL release. “We’ll do a full memory readout of the AACS and look at everything it’s been doing. That will help us try to diagnose the problem that caused the telemetry issue in the first place.”

The good news is that the faulty computer doesn’t seem to be going HAL 9000 on Voyager 1; the space probe is otherwise in good health. On September 5, the mission will celebrate its 45th year, a milestone achieved by Voyager 2 on August 20.

Since the telemetry issue was first made public, Voyager 1 has traveled another 100,000,000 miles. It’s a small, technical fix for humans, but one that ensures we can keep track of the intrepid space probe as it continues its extraordinary journey into deep space.
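As a rough sanity check on that figure, a sketch assuming the issue became public around May 18, 2022 (the date of the JPL item above) and a constant 38,000 mph until August 30:

```python
from datetime import date

SPEED_MPH = 38_000  # Voyager 1 speed quoted in the Gizmodo article


def miles_travelled(start: date, end: date, mph: float = SPEED_MPH) -> float:
    """Distance covered at a constant speed between two calendar dates."""
    hours = (end - start).days * 24
    return mph * hours


if __name__ == "__main__":
    # Assumption: the glitch became public around the May 18, 2022 JPL item.
    d = miles_travelled(date(2022, 5, 18), date(2022, 8, 30))
    print(f"About {d / 1e6:.0f} million miles")
```

That gives roughly 95 million miles over 104 days, in line with the rounded 100 million quoted.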

Other news items on this subject:

https://www.jpl.nasa.gov/news/engineers-solve-data-glitch-on-nasas-voyager-1

To quote:

The team has since located the source of the garbled information: The AACS had started sending the telemetry data through an onboard computer known to have stopped working years ago, and the computer corrupted the information.

Suzanne Dodd, Voyager’s project manager, said that when they suspected this was the issue, they opted to try a low-risk solution: commanding the AACS to resume sending the data to the right computer.

Engineers don’t yet know why the AACS started routing telemetry data to the incorrect computer, but it likely received a faulty command generated by another onboard computer. If that’s the case, it would indicate there is an issue somewhere else on the spacecraft. The team will continue to search for that underlying issue, but they don’t think it is a threat to the long-term health of Voyager 1.

“We’re happy to have the telemetry back,” said Dodd. “We’ll do a full memory readout of the AACS and look at everything it’s been doing. That will help us try to diagnose the problem that caused the telemetry issue in the first place. So we’re cautiously optimistic, but we still have more investigating to do.”

https://www.forbes.com/sites/nicholasreimann/2022/08/30/nasa-fixes-critical-system-issue-on-voyager-1-no-long-term-problems-expected/?sh=239f7a5e1bca