When something turns up in astronomical data that contradicts long-accepted theory, the way forward is to proceed with caution, keep taking data, and try to resolve the tension with older models. That would, of course, include considering the possibility of error somewhere in the observations. All that is obvious enough, but a new paper on JWST observations of high-redshift galaxies is striking in its implications. Researchers examining this primordial era have found six galaxies, seen as they were just 500 to 700 million years after the Big Bang, that give the appearance of being massive.

We’re looking at light that left these objects some 13.5 billion years ago, when they should have been anything but mature, if compact, galaxies. That’s a surprise, and it’s fascinating to see the scrutiny to which these findings have been exposed. The editors of Nature have helpfully made available a peer review file containing back-and-forth comments between the authors and reviewers that give a jeweler’s-eye look at how intricate high-redshift measurements can be. Reading this material offers an inside look at how the scientific community tests and refines its results en route to what may need to be a modification of previous models.

It’s the availability of that peer review file that, as much as the findings themselves, occasions this post, as it offers laymen like myself a chance to see the scientific publication process at work. That cannot be anything but salutary in an era when complicated ideas are routinely pared into often misleading news headlines.

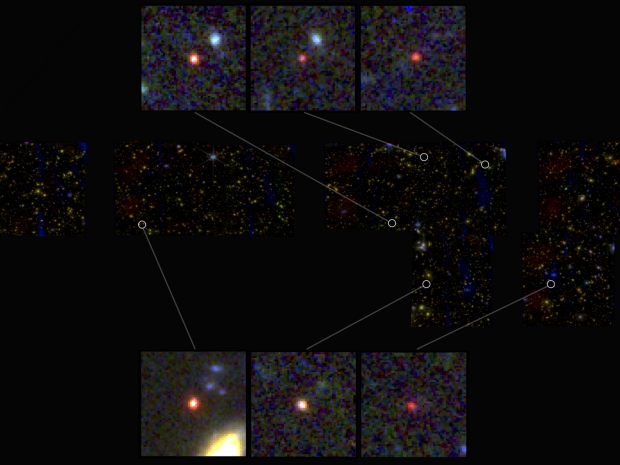

Image: Images of six candidate massive galaxies, seen 500-700 million years after the Big Bang. One of the sources (bottom left) could contain as many stars as our present-day Milky Way, according to researchers, but it is 30 times more compact. Credit: NASA, ESA, CSA, I. Labbe (Swinburne University of Technology). Image processing: G. Brammer (Niels Bohr Institute’s Cosmic Dawn Center at the University of Copenhagen). All Rights Reserved.

One note of caution emerges in the abstract to this work: “If verified with spectroscopy, the stellar mass density in massive galaxies would be much higher than anticipated from previous studies based on rest-frame ultraviolet-selected samples.”

That’s a pointer to what seems to be needed next. Penn State’s Joel Leja modeled the light from these objects, and I like the openness to alternative explanations that he injects here:

“This is our first glimpse back this far, so it’s important that we keep an open mind about what we are seeing. While the data indicates they are likely galaxies, I think there is a real possibility that a few of these objects turn out to be obscured supermassive black holes. Regardless, the amount of mass we discovered means that the known mass in stars at this period of our universe is up to 100 times greater than we had previously thought. Even if we cut the sample in half, this is still an astounding change.”

So the question of mass looms just as large as the formation process even if these do not turn out to be galaxies. No wonder Leja says the research team has been calling the six objects ‘universe breakers.’ On the one hand, the question of mass gets into fundamental issues of cosmology and the models that have long served astronomers. If galaxies actually form at this level at such an early time in the universe, then the mechanisms of galaxy formation demand renewed scrutiny. Leja is suggesting that a spectrum be produced for each of the new objects that can confirm the accuracy of our distance measurements, and also demonstrate what these ‘galaxies’ are made up of.

The paper on this remarkable finding itself continues to evolve. It’s Labbé et al., “A population of red candidate massive galaxies ~600 Myr after the Big Bang.” Nature 22 February 2023 (abstract). Note this editorial comment from the abstract page: “We are providing an unedited version of this manuscript to give early access to its findings. Before final publication, the manuscript will undergo further editing.” I’d like to read that final edit before commenting any further.

Cult of the CMB, or how the universe’s radiation turns into microwaves at the CMB. That is the H-bomb universe of the faulty mentality of the 1960s. Gravitational lensing on top of gravitational lensing, ad infinitum… too bad we cannot see through the microwave haze of ad infinitum… Or can we?

The author-referee discussion was very interesting even though I don’t pretend to understand the details.

This was very much in the spirit of Sagan’s “Extraordinary claims require extraordinary evidence,” as the claims threatened to upend models of the early universe. The discussion centered on the methodologies that produced the claimed result. While the results were softened (lower redshifts, and hence later cosmic times, and lower galaxy masses and densities), they appeared reasonably robust and satisfied the referees for publication in Nature, one of the two top general science journals.

Given the stakes, this may well prove an important paper, and follow-up studies will no doubt try to confirm or refute the claim. [I’m still waiting for a resolution of the discrepancy between Hubble constant values obtained by two different methods.]

Science working as it should.

I don’t have the skill to weigh in on this controversy, yet I will say I never liked that quote. To me, “extraordinary claims” sounds like an appeal to one’s prejudices… and where those are concerned, I posted a link a while back that had made me wonder if cosmological parameters might be found to vary from place to place ( https://centauri-dreams.org/2022/11/08/stapledons-hawk/#comments ).

I do wonder whether cherry-picking is a concern that calls for extraordinary evidence here. I didn’t notice any statistical discussion of multiple hypothesis testing in the preprint ( https://arxiv.org/abs/2207.12446 ). I know that in the past biologists, at least, have had a blind spot when picking interesting oddities out of large datasets…

I must say I don’t read “extraordinary claims” as an appeal to prejudices. Most published science is filling in details. Because of bad incentives, much of the medical drug-testing literature is unrepeatable, just noise resulting from data mining, p-hacking, abuse of statistics, and sometimes outright fraud. Psychology experiments are even worse. But lowering the signal-to-noise ratio in detail work isn’t particularly damaging; it may or may not be corrected. Reviewers don’t seem to do much more than correct spelling and make small corrections.

But “extraordinary claims,” where a paradigm can be upended, really do need a lot more evidence, as rock-solid as possible. Supporting data or experiments using more than one approach should be provided, with as little uncertainty as possible. Such claims will be tested by others, who will repeat the experiment and do more work to test the claim. Hence the “extraordinary evidence.”

Haldane was once asked whether anything would change his mind about evolution. He is supposed to have replied: a fossil rabbit in the Precambrian, or even the Cretaceous. Since then, other approaches have supported evolution and its timeline, making claims of a “young Earth” with no significant evolution ridiculous. Dawkins has stated that even if there were no fossils, evolution could be verified by the DNA sequences of existing species. Darwin (and Wallace) had compiled a lot of evidence supporting their claims of a deep-time evolutionary tree, with Darwin having evidence to support selection as the driving mechanism. One has to be willfully in denial of evolution these days, which restricts adherents of a “young Earth” to religious fundamentalists.

I’m not sure what you are referring to. Biology is phenomenological. There are no “Laws”, and math is almost useless above the cellular level. The only surviving theory from my schooling is the Theory of Evolution, which is still being tinkered with. Predicting the course of evolution into the future isn’t possible, as innumerable experiments have demonstrated. Therefore there will always be interesting cases of some phenomenon that can be added to the “stamp collection”.

Fundamental principles of biology will only be really tested if/when we find another abiogenesis in the cosmos. The N=1 problem will go away as we will have a different life to compare terrestrial life with. It will be an exciting time for biologists. Sadly, I won’t get to see it.

Sorry, I should have said more about the biological experiments I was thinking of. In the 1990s, it was very common for researchers to test whether the amount of RNA for a certain gene would increase in response to some condition, such as cell replication or exposure to a stimulus. A certain number of replicates (which might be mice or tissue culture wells) would be tested, statistics done, and if the p-value was 0.05 or less, the result would be written up as “significant”. That meant there was only a 1-in-20 chance of seeing a result that strong by chance alone if the gene were not really responding.

Well, then people started doing microarray experiments, looking at 10,000 or more different RNA transcripts at the same time, so you would expect about 500 genes to come up “significant” in each experiment no matter what. So the statistical rules for testing many hypotheses at once became stricter.
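The microarray arithmetic can be sketched as a small simulation. This is a minimal illustration, assuming all 10,000 tests are true nulls (so each p-value is uniform on [0, 1]); it counts how many pass a naive 0.05 cutoff and compares that with two standard multiple-testing corrections, Bonferroni and the Benjamini-Hochberg false discovery rate procedure. The function names are illustrative, not taken from any particular statistics package.

```python
import random

def benjamini_hochberg(p_values, q=0.05):
    """Return sorted indices of hypotheses rejected by the
    Benjamini-Hochberg procedure at false discovery rate q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k such that the k-th smallest p-value is <= (k / m) * q
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * q:
            cutoff = rank
    return sorted(order[:cutoff])

def simulate(n_tests=10_000, alpha=0.05, seed=1):
    """Count 'significant' results among pure-noise tests, three ways."""
    rng = random.Random(seed)
    # Under the null hypothesis, p-values are uniformly distributed
    p = [rng.random() for _ in range(n_tests)]
    naive = sum(pv < alpha for pv in p)
    bonferroni = sum(pv < alpha / n_tests for pv in p)
    bh = len(benjamini_hochberg(p, q=alpha))
    return naive, bonferroni, bh

naive, bonferroni, bh = simulate()
print(f"naive: {naive}, Bonferroni: {bonferroni}, Benjamini-Hochberg: {bh}")
```

With every null true, the naive count lands near 500 (5% of 10,000), while both corrected counts should almost always be zero or very close to it.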

This paper is looking at 13 or fewer objects from a catalog of 42,729, and as far as I know it generates a probability distribution based on a few broadband filters. Looking at any one of the galaxies, the observation of a massive galaxy at high redshift seems like the best interpretation, but I didn’t see that they simulated whether 3000 galaxies could connive together to lay a single misleading image at their feet.
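The catalog-size concern can be put into rough numbers. The sketch below assumes each of the 42,729 catalog objects has some small, independent chance of being misread as a massive high-redshift galaxy, then asks how many such interlopers to expect and how likely it is to see six or more by chance, using a Poisson approximation. The per-object rates are hypothetical placeholders, not values from the paper.

```python
import math

CATALOG_SIZE = 42_729  # catalog size cited in the discussion

def expected_interlopers(catalog_size, false_positive_rate):
    """Mean number of objects misclassified by chance."""
    return catalog_size * false_positive_rate

def prob_at_least(k, mean):
    """Poisson probability of k or more chance interlopers."""
    below = sum(math.exp(-mean) * mean**j / math.factorial(j) for j in range(k))
    return 1.0 - below

# Hypothetical per-object misclassification rates, for illustration only
for rate in (1e-5, 1e-4, 1e-3):
    mean = expected_interlopers(CATALOG_SIZE, rate)
    print(f"rate={rate:g}  expected={mean:.2f}  P(>=6)={prob_at_least(6, mean):.3g}")
```

The answer swings from negligible to near-certain depending on a rate that has to be estimated separately, which is why simulations of how often ordinary sources mimic the signal matter so much for claims drawn from large catalogs.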

Meanwhile, I don’t see why it is so shocking to “upend a paradigm” for just those few galaxies. Galaxies aren’t all the same, and I’d guess there must be a way to explain a few odd cases without throwing out the whole existing theory. But I also don’t see why it would be so shocking if the curvature of space, the Hubble constant, the time since the Big Bang, etc. turned out to be a little different when you test them in different places with a super-powerful space telescope looking 13 billion light years away. Overall, this paper seems like a pilot study that tags some galaxies for a definitive spectroscopic examination that will clear up what’s really happening. No matter what, it’s probably something interesting.

I spent more than 7 years of my life with a company that did these experiments, starting with cDNA arrays and ending with far superior RNA arrays. As you say, depending on what was being looked for, multiple testing corrections had to be made to keep the 5% p-value threshold from producing lots of false positives. We tended to focus less on individual genes and more on effects on the genes in a pathway, and we used statistics to extract that data. But signal noise was very problematic, especially for genes with very low expression levels, as was the quality of microarray processing.

Interestingly, while experiments on mice and rats worked well to detect changes in organs exposed to drugs and toxic chemicals, attempts to do the same with cell cultures such as yeast were less successful, because each culture could be readily identified by its pattern of gene expression changes. I think this was due to the cells adapting or even evolving in the bioreactor.

As a side note, the first cDNA microarray experiments by the inventors were published in Science. By the time we were doing what we thought was cutting-edge research, none of our experiments proved publication-worthy in any of the relevant top journals. These galaxy observations are like those early cDNA microarray experiments. Very soon, follow-up work confirming or falsifying these observations will be published only in the astronomical journals, as the excitement over new science shifts to other observations.

I don’t think or intuit that our mechanisms of galaxy formation need to be reconsidered or revised toward a shorter formation time, which is an ad hoc assumption or solution approaching absurdity. We should never force our observations to fit a timeline of how our universe formed that has never been proven, only assumed to be true.

Another possibility is, oh no, that our estimate of the age of our universe is completely wrong. If that were true, then larger telescopes and longer exposure times would reveal even more galaxies. I came up with this idea independently in 2016, along with several other predictions for different types of cosmological models, and I noticed that astrophysicist Dr. John Mather recently came up with the same idea in his prediction of what the JWST might see if stretched to its maximum capability before it took its first deep-space photos.

Isn’t the age of the universe calculated by extrapolating the Hubble constant (H0)? The 13.7-billion-year age is based on the Planck observations of H0 ≈ 67 km/s/Mpc. Using the higher value of ~73 from WMAP would suggest perhaps a 15+ billion-year age. Might that allow the time needed for galaxy formation seen in these observations?
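The link between H0 and the age of the universe can be checked with a short calculation. The simplest estimate is the Hubble time, 1/H0; for a flat universe containing only matter and a cosmological constant, the age is that Hubble time multiplied by a factor that depends on the matter density. The sketch below assumes a flat ΛCDM model, neglects radiation, and holds the matter density at 0.315 (a Planck-like value) for both H0 values, which is a simplification. Under those assumptions the age scales as 1/H0, so a higher H0 gives a younger universe rather than an older one: roughly 13.8 billion years for H0 = 67.4 and roughly 12.7 billion years for H0 = 73.

```python
import math

KM_PER_MPC = 3.085677581e19   # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in one billion Julian years

def hubble_time_gyr(h0):
    """Hubble time 1/H0 in Gyr, with H0 given in km/s/Mpc."""
    return KM_PER_MPC / h0 / SECONDS_PER_GYR

def flat_lcdm_age_gyr(h0, omega_m):
    """Age of a flat matter + cosmological-constant universe, radiation neglected."""
    omega_l = 1.0 - omega_m
    factor = 2.0 / (3.0 * math.sqrt(omega_l)) * math.asinh(math.sqrt(omega_l / omega_m))
    return factor * hubble_time_gyr(h0)

for h0 in (67.4, 73.0):
    print(f"H0={h0}: 1/H0 = {hubble_time_gyr(h0):.2f} Gyr, "
          f"age = {flat_lcdm_age_gyr(h0, 0.315):.2f} Gyr")
```

In practice a higher H0 would also shift the best-fit matter density, so these numbers only illustrate the direction and rough size of the effect.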

It all reminds me of the unknown nature of the genetic information that would support Darwin’s theory of evolution by natural selection. There were lots of guesses, including the “aperiodic crystal” in Schrödinger’s 1944 book “What Is Life?” Then Watson and Crick elucidated the structure of DNA and suggested it was the information storage mechanism. After that, we could map early genetic data to actual genes, and the “dark matter” of genetic and genomic information, along with the mechanisms at the core of biology, was solved, apart from the details.

It strikes me that the Hubble constant, the age of the universe, and star and galaxy formation in the early universe are still in the equivalent of the pre-DNA period of biology, when statistics on genetic loci and linkage, along with experiments, gave us clues, but no observations had yet revealed the underlying biology.

Because I am more interested in the question of life in the universe, I am looking forward to the experiments, which will no doubt be controversial in their interpretation and only slowly resolved. Like these cosmological observations, the detection of life will have to be via proxies until we can send probes to directly observe and experiment on extraterrestrial life.

Does your cosmology of life include the possibility of Panspermia as the genesis of life on earth with subsequent evolutionary dynamics set into motion naturalistically?

I am open to any theory that best explains the data, although as yet we have none. However, if it turns out that life is fundamentally similar across the galaxy, that will be less interesting than if life arose separately on each world. OTOH, each planet would then be like running the same evolution experiment, so the resulting complex lifeforms would be data indicating how contingent evolution is, and how often convergent evolution arrives at very similar forms and behaviors. Life wouldn’t be extremely exotic, but it would likely show which forms are common, which appear with low probability, and whether technological civilization can arise in species unrelated to humans.

The discussion between the referees and the authors was really interesting. It certainly highlights the challenges of being on the leading edge of science.

I think one factor that is underappreciated by many is the use of proxies in these studies: using what is observable (data from HST, JWST, etc.) to calculate what is being measured (galaxy age, size, composition, etc.) by means of a model. The model is necessary because at these high redshifts there is little possibility of observing those galaxy properties more directly.

Models can never be perfect, since they depend on an incomplete understanding of astrophysical processes, cosmology, and even the accuracy of our instruments. All of this is seen in the exchange. The models must be good, or the proxy is wrong and the calculated properties are wrong or too uncertain to be useful. There are competing models, and there is disagreement about which are reliable and in which domains they can be trusted.

The discussion about JWST instrument calibration was interesting. It is still ongoing, which isn’t too surprising for an instrument so unique and leading-edge in its capabilities. The calibration of instrument sensitivity and resolution affects both the methods and the conclusions. This will continue to evolve and cause old conclusions to be revisited in the future.

Another concern raised was sensitivity analysis. At the limits of the instrument sensitivity and resolution, small uncertainties lead to large deviations of the calculated properties. That is present in the models, and the deviations are different between models. That degree of sensitivity must be resolved with better data or better models that, hopefully, are not as sensitive to data uncertainties.
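The sensitivity point can be illustrated with a toy Monte Carlo. It is not the SED-fitting machinery used in the paper; it only propagates Gaussian noise through a single color, -2.5 log10 of a flux ratio, to show how the scatter in a derived quantity balloons as the signal approaches the noise level. The flux values and the noise level are hypothetical.

```python
import math
import random
import statistics

def color_scatter(flux_blue, flux_red, sigma, n=20_000, seed=0):
    """Standard deviation of the color -2.5*log10(f_blue/f_red) when both
    fluxes carry independent Gaussian noise of width sigma (same units)."""
    rng = random.Random(seed)
    colors = []
    for _ in range(n):
        fb = flux_blue + rng.gauss(0.0, sigma)
        fr = flux_red + rng.gauss(0.0, sigma)
        if fb > 0 and fr > 0:  # a magnitude is undefined for non-positive flux
            colors.append(-2.5 * math.log10(fb / fr))
    return statistics.stdev(colors)

bright = color_scatter(100.0, 100.0, 1.0)  # signal-to-noise of 100 in each band
faint = color_scatter(5.0, 5.0, 1.0)       # signal-to-noise of 5 in each band
print(f"bright: {bright:.3f} mag, faint: {faint:.3f} mag, ratio: {faint / bright:.1f}")
```

With these placeholder numbers the faint case scatters roughly twenty times more than the bright one, since the color uncertainty scales roughly as the inverse of the signal-to-noise ratio; any model fed that color inherits the spread.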

Once the uncertainties and error bars can be tamed, there is some interesting science here. Perhaps a useful comparison is the Hubble constant (or rather, variable). Various measurements and models give different values. For years the error bars were so large that they overlapped and caused no great concern. As the error bars shrank and no longer overlapped, it became clear that something was amiss with the models or our understanding of cosmology. That’s interesting, since it may lead to novel insights, but not while there is no good way to choose between them. The same may be true of this paper.
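The overlapping-error-bar argument can be made quantitative with a simple tension estimate: the difference between two independent measurements divided by their combined standard error. This assumes Gaussian, uncorrelated uncertainties, which is itself a simplification. The example uses the published Planck 2018 CMB value (67.4 ± 0.5 km/s/Mpc) and the SH0ES 2022 distance-ladder value (73.04 ± 1.04 km/s/Mpc), plus a hypothetical older pair with wide error bars for comparison.

```python
import math

def tension_sigma(a, sigma_a, b, sigma_b):
    """Difference between two independent Gaussian measurements,
    in units of their combined standard error."""
    return abs(a - b) / math.hypot(sigma_a, sigma_b)

# Planck 2018 (CMB) vs SH0ES 2022 (distance ladder), in km/s/Mpc
modern = tension_sigma(67.4, 0.5, 73.04, 1.04)
# Hypothetical older measurements with wide error bars, for illustration only
older = tension_sigma(68.0, 5.0, 72.0, 8.0)
print(f"modern tension: {modern:.1f} sigma, hypothetical older: {older:.1f} sigma")
```

The modern pair comes out near 5 sigma, while the hypothetical wide-error pair is well under 1 sigma: an offset of a few km/s/Mpc only becomes a problem once the error bars shrink.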

“So the question of mass looms just as large as the formation process even if these do not turn out to be galaxies. No wonder Leja says the research team has been calling the six objects ‘universe breakers.’ On the one hand, the question of mass gets into fundamental issues of cosmology and the models that have long served astronomers. ”

“So the question of mass looms just as large as the formation process …”

How does mass play into all this?

The new objects imply about 100 times more mass in stars in this early period than was previously thought, according to co-author Leja.

But strong gravity can produce a redshift. How do we know these aren’t neutron stars, quark stars, magnetars, etc. within our own galaxy? Instead of a galaxy of mass X 13.5 billion light years away, maybe it’s a galaxy of mass Y 5 billion light years away. Or an odd object of mass Z 1 million light years away. How much detail are we seeing in these images?
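One way to test the gravitational-redshift idea is to compute how much redshift a compact object’s gravity can actually produce. The sketch below uses the Schwarzschild formula for light leaving the surface of a static, non-rotating body, applied to a hypothetical but typical neutron star (1.4 solar masses, 12 km radius), together with the Buchdahl bound, under which no static fluid star can have a surface redshift above 2. The candidates’ redshifts, which correspond to 500 to 700 million years after the Big Bang, are roughly 7 to 10, well beyond that ceiling. This addresses only surface gravitational redshift, not every alternative raised in the comment.

```python
import math

G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8       # speed of light, m/s
M_SUN = 1.989e30  # solar mass, kg

def surface_redshift(mass_kg, radius_m):
    """Gravitational redshift z of light escaping the surface of a static,
    spherical, non-rotating body (Schwarzschild exterior solution)."""
    rs = 2.0 * G * mass_kg / C**2  # Schwarzschild radius
    if radius_m <= rs:
        raise ValueError("radius is inside the Schwarzschild radius")
    return 1.0 / math.sqrt(1.0 - rs / radius_m) - 1.0

# Hypothetical typical neutron star: 1.4 solar masses, 12 km radius
z_ns = surface_redshift(1.4 * M_SUN, 12e3)

# Buchdahl limit: a static fluid star needs R >= (9/8) * rs, which caps z at 2
z_buchdahl = 1.0 / math.sqrt(1.0 - 8.0 / 9.0) - 1.0

print(f"neutron star z = {z_ns:.3f}, Buchdahl maximum z = {z_buchdahl:.1f}")
```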

And obviously the math is beyond me, unfortunately. Sorry.

It was a refreshing read. What the authors don’t say (but maybe realize) is that nothing related to cosmology comes even close to being an actual science, that is, one that applies the scientific method so that its ideas can be considered theories.

Many years ago, one of the original researchers who discovered the accelerating expansion of the universe by observing supernovae came to our university to give a public lecture. His key argument was a 2D plot with some supernovae scattered in almost random fashion. At least, a random distribution of points is what I saw as an engaged grad student. However, he had superimposed a trend, probably quoting some linear regression parameters the way people who never studied statistics would, and claimed the trend clearly showed that the rate of expansion of the universe is increasing. Since he was considered famous, questions were only taken through a moderator…

I guess my point is that the subject of cosmology is, by its nature, almost not amenable to the scientific method. People with limited understanding of statistics and modeling perform wild numerical simulations which abuse by orders of magnitude a basic principle that you should not extrapolate your model much beyond the availability of data. Speaking of cosmological “theories” becomes an absurd beauty pageant.

You see, a whole discipline rests on seeing things in random data in ways that no physicist, engineer, or mathematician would. And there are far wilder abuses, again presented as theories. For example, inflation: a hypothesis that is simply untestable by experimentalists.

One of the great observational astronomers developed the idea that quasars were ejected from the cores of galaxies, with discordant redshifts explaining many galaxy groups that had large redshift variations. The cosmologists tried to bury his research to the extent that this concept is not even known today.

Remember what was considered untrue: tired light + steady state universe

I don’t think physical cosmology is beyond the scientific method, specifically our known principles of physics. So far no new physics has been proven; the evidence is only anecdotal. Also, what we are looking at is at the limits of our light cone, so at some point a larger telescope might be needed. The European Extremely Large Telescope will be completed in 2026. Yes, if the universe were a little older, it would give galaxies enough time to form according to the models.

It would not surprise me if the early stages of the Big Bang had huge amounts of matter that could have collapsed into very large black holes very early on, forming quasars.

https://upload.wikimedia.org/wikipedia/commons/thumb/d/d3/Phoenix_A_compared_to_Ton_618_and_the_Orbit_of_Neptune.jpg/600px-Phoenix_A_compared_to_Ton_618_and_the_Orbit_of_Neptune.jpg?20220617120033

Not sure how these early galaxies would have altered the cosmic background radiation, though; I would have thought it is something we should see around them.

The TV series NOVA episode “New Eye on the Universe” (S55E04) has various groups of astronomers talking excitedly about the early data. In the segments at ~30 min and ~50 min, they talk about these massive early galaxies and how they upset theories of early galaxy formation. Since it is for the general public, it is mostly talking heads and pictures, with none of the details of the interpretation.

There seems to be some suggestion that the detector elements were not fully calibrated and that calibration to adjust signals was done after examining the data. [I hope that is my misunderstanding of the short segment on the calibration of the detector elements.]

AFAICS, none of the scientists identified in the segments on early massive galaxies were authors of this paper.

I wonder how Halton Arp would have felt about these developments.

Come on. What about the Hubble Ultra Deep Field galaxies, which were so distant that they were blurry and fuzzy, yet JWST clearly imaged the same ones? If they look like galaxies, they are galaxies. Black holes by themselves would need some surrounding gas and dust, or tidal disruption events of stars, to become detectable; otherwise they are invisible and dimmer than galaxies. Galaxies with large black holes are still galaxies, and these black holes grow larger through collisions with other galaxies, etc., taking billions of years to form.

The JWST images of massive early galaxies certainly challenge the role of dark matter. Large galaxies are supposed to have formed from mergers of smaller dark-matter halos, which takes a long time, billions of years more than the ages of the large galaxies in the JWST images. It’s not just these six galaxies either; there are apparently many galaxies at high z that are just too big to have formed in the time represented by their redshift. MOND supporters are claiming this as a victory, because large galaxies form naturally at high z under MOND.

I don’t think we can have supermassive black holes first, without stars, which is a counterintuitive idea. They would not be very bright, but these objects are bright, so they must be galaxies. It’s the brightness.

Whenever a branch of investigation is limited to a single instrument of such cost that it has no peer, whether a particle accelerator or a space telescope, the fundamental requirement of the scientific process for peer review of its findings cannot be met. That doesn’t mean these extraordinary instruments shouldn’t be built. It’s just that we shouldn’t call such investigations science until independent peer review with independently calibrated instruments becomes available. It’s a bit like quantum uncertainty: the further back we look, the less certain we can be.

I really don’t understand the rationale for hypothesizing that star formation at the beginning of the universe should be slow.

After the Big Bang, the formation of supermassive stars that quickly turned into black holes should have been quite common. Since the lifetime of supermassive stars before they turn into black holes is only a few million years, finding super-dense galaxies 500 to 700 million years after the Big Bang is really not surprising, IMO.

Interesting! More on this in the post I’m about to publish.

Clumps of gas could have been so dense that they literally collapsed inward to form black holes without the need for stars to burn the gas.

This is believed to have occurred as galaxies were first coalescing, when they were little more than large H/He nebulae. The mass of a collapsing portion of the nebula has to be quite large to form an event horizon without triggering sufficient radiation pressure from fusion to counter the gravitational pressure. I don’t believe the cutoff mass is theoretically well understood, other than it would probably be well over 100 solar masses. These masses could be much higher in early galaxies.

Even if radiation pressure from fusion does reach equilibrium with the gravitational pressure, these massive stars don’t live long because the fusion rate is very high. When they go supernova, there would often be enough nearby H/He gas to trigger another gravitational collapse and another supernova. It would not take long to produce several generations of supermassive stars and central black holes in early galaxies, while also seeding the galaxy with metals. Of course, the devil is in the details, and those are only partially understood.

There was just so much matter around that direct collapse was possible.

https://universemagazine.com/en/how-magnetic-fields-helped-supermassive-black-holes-to-be-born/

“‘Before final publication, the manuscript will undergo further editing.’ I’d like to read that final edit before commenting any further.”

I would like to wait [not too long] for the edited version of the rebuttals, which seems to be the purpose of releasing it before further editing.

It appears the authors failed to account for the relative movement of the lensing galaxies and the resulting frequency shifts of the target galaxy observed frequency data.

Thank you for posting the peer review; it is insightful. The pattern of the authors trying to make adjustments to support their noteworthy conclusions, while the referees try to get them to be more conservative, suggests this is NOT a revolutionary breakthrough, but rather a mistake in either the data or its interpretation.

Another red flag: one of the referees asked the team to measure the masses of the target galaxies, and they got normal (unremarkable, not model-breaking) results.

Cool to consider, though; when you think your established models might be wrong then you revert to thinking outside the box a bit more (what if there was matter before the Big Bang that persisted through and after it?) and, this early sensational analysis will likely help us calibrate our models around the new, higher fidelity data we are getting from JWST. All part of forward progress!