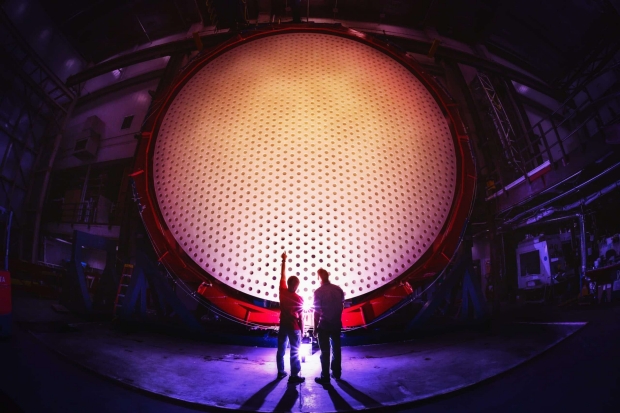

We’re building some remarkably large telescopes these days. Witness the Giant Magellan Telescope now under construction in Chile’s Atacama desert. It’s to be 200 times more powerful than any research telescope currently in use, with 368 square meters of light collection area. It incorporates seven enormous 8.5-meter mirrors. That makes exoplanet work from the Earth’s surface a viable proposition, but look at the size of the light bucket we need to make it work. Three mirrors like the one shown here are now in place, and the University of Arizona’s Mirror Lab is now building number 6.

Image: University of Arizona Richard F. Caris Mirror Lab staff members Damon Jackson (left) and Conrad Vogel (right) in the foreground looking up at the back of primary mirror segment five, April 2019. Credit: Damien Jemison; Giant Magellan Telescope – GMTO Corporation. CC BY-NC-ND 4.0.

Imaging an exoplanet from the Earth’s surface is complicated by the Rayleigh Limit, which governs the resolution of our optical systems and their ability to separate two point sources. Stephen Fleming showed the equation in his talk on super-resolution imaging at the Interstellar Research Group’s recent meeting in Montreal. I use few equations on this site but I’ll show this one because it’s straightforward and short:

θ = 1.22 * (λ / D)

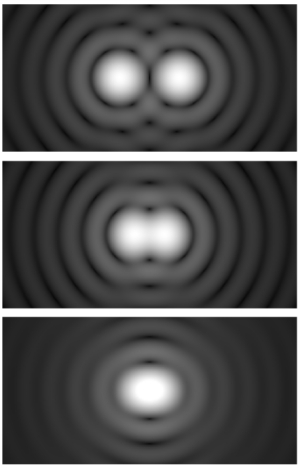

Here λ is the wavelength and D is the diameter of the mirror. What this says is that there is a minimum angular separation (θ) that allows two point sources to be clearly distinguishable, which in terms of astronomy means we can’t pull useful information out of the image when they are closer than this. I’ve pulled the image below out of Wikipedia (in the public domain, submitted by Spencer Bliven).

Image: Two Airy disks at various spacings: (top) twice the distance to the first minimum, (middle) exactly the distance to the first minimum (the Rayleigh criterion), and (bottom) half the distance. This image uses a nonlinear color scale (specifically, the fourth root) in order to better show the minima and maxima.
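To put numbers on the Rayleigh equation, here is a minimal Python sketch that evaluates θ = 1.22 λ/D for apertures mentioned in this post: a single 8.5 m Giant Magellan segment, plus the 3 m and 1.7 m mirrors discussed later. The 550 nm wavelength is an assumption, chosen as a representative visible-light value. With λ and D both in meters, θ comes out in radians.

```python
def rayleigh_limit_rad(wavelength_m: float, aperture_m: float) -> float:
    """Rayleigh criterion theta = 1.22 * lambda / D for a circular aperture.

    Both lengths are in meters, so the result is in radians.
    """
    if wavelength_m <= 0 or aperture_m <= 0:
        raise ValueError("wavelength and aperture must be positive")
    return 1.22 * wavelength_m / aperture_m


# Assumed observing wavelength: 550 nm (green, visible light).
WAVELENGTH_M = 550e-9

# Apertures mentioned in the post, in meters.
for aperture in (1.7, 3.0, 8.5):
    theta = rayleigh_limit_rad(WAVELENGTH_M, aperture)
    print(f"D = {aperture:4.1f} m -> theta = {theta:.3e} rad")
```

Because θ scales as 1/D, the 8.5 m segment resolves detail five times finer than a 1.7 m mirror (roughly 7.9e-8 rad versus 3.9e-7 rad at this wavelength).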

Here we have another useful term: An Airy disk is a diffraction pattern that is produced when light moves through the aperture of a telescope system. Light diffracts – it’s in the nature of the physics – and the Airy disk is the best focused spot of light that a perfect lens with a circular aperture can make. We’re looking at light interfering with itself, so in the image, we have a central bright spot with surrounding rings of light and dark. The diffraction pattern depends upon the wavelength being observed and the aperture itself. This diffraction can be described as a point spread function (PSF) for any optical system, and essentially governs how tightly that system can be focused.
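The factor 1.22 in the Rayleigh equation comes straight from the Airy pattern. For a perfect circular aperture the normalized intensity is (2 J1(x)/x)², with x = π D sin θ / λ and J1 the first-order Bessel function. The first dark ring sits at the first zero of J1, near x ≈ 3.832, so sin θ ≈ (3.832/π) λ/D ≈ 1.22 λ/D. The sketch below is a standard-library-only illustration: it evaluates J1 with Bessel's integral and Simpson's rule, then locates that zero by bisection. The step count and search bracket are arbitrary choices made for this example.

```python
import math


def bessel_j1(x: float, steps: int = 2000) -> float:
    """First-order Bessel function J1(x) via Bessel's integral.

    J1(x) = (1/pi) * integral_0^pi cos(t - x*sin(t)) dt, evaluated with
    composite Simpson's rule (steps must be even).
    """
    h = math.pi / steps
    total = math.cos(0.0) + math.cos(math.pi - x * math.sin(math.pi))
    for k in range(1, steps):
        t = k * h
        weight = 4 if k % 2 else 2
        total += weight * math.cos(t - x * math.sin(t))
    return total * h / (3 * math.pi)


def airy_intensity(x: float) -> float:
    """Normalized Airy-pattern intensity (2*J1(x)/x)^2; equals 1 at the center."""
    if x == 0:
        return 1.0
    return (2 * bessel_j1(x) / x) ** 2


def first_dark_ring(lo: float = 3.0, hi: float = 4.5, tol: float = 1e-10) -> float:
    """Bisect for the first zero of J1, which changes sign once on [3, 4.5]."""
    f_lo = bessel_j1(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = bessel_j1(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


x0 = first_dark_ring()
print(f"first dark ring at x = {x0:.4f}")
print(f"Rayleigh factor x0 / pi = {x0 / math.pi:.4f}")  # the 1.22 in theta = 1.22 * lambda / D
```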

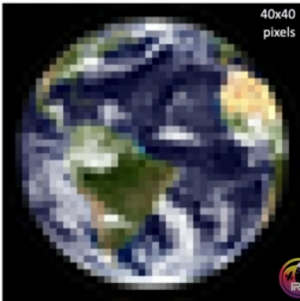

Bigger apertures matter as we try to deal with these limitations, and the Giant Magellan Telescope will doubtless make many discoveries, as will all of the coming generation of Extremely Large Telescopes. But when we want to see ever smaller objects at astronomical distances, we run into a practical problem. Nothing in the physics prevents us from building a ground-based telescope that could see an Earth-class planet at Alpha Centauri, but if we want details, Fleming notes, we would need a mirror 1.8 kilometers in diameter to retrieve a 40 X 40 pixel image.

The point of Fleming’s talk, however, was that we can use quantum technologies to push against the Rayleigh limit and extract information about amplitude and phase from the light we do collect. That, in turn, would allow us to distinguish between point sources that are closer together than the limit would imply. The operative term is super-resolution, a topic of growing importance in the optics literature, though so far less so in the astronomical community. This may be about to change.

Counter-intuitively (at least insofar as my own intuitions run), a multi-aperture telescope does a better job with this than a large single-aperture instrument. Instead of a 3-meter mirror, you use three 1.7-meter mirrors spaced out over, perhaps, an acre. This also bears on mirror economics, because the cost of these enormous mirrors rises steeply with size. The more you can break the monolithic mirror into an array of smaller mirrors, the more you can add to the data gain while also sharply reducing the expense.
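A quick check on the mirror trade described above, as a minimal sketch. It assumes unobstructed circular mirrors (real telescopes have central obstructions and gaps), and shows that three 1.7-meter mirrors collect nearly as much light as one 3-meter mirror.

```python
import math


def collecting_area_m2(diameter_m: float, count: int = 1) -> float:
    """Total area of `count` identical, unobstructed circular mirrors, in m^2."""
    return count * math.pi * (diameter_m / 2) ** 2


monolith = collecting_area_m2(3.0)
array = collecting_area_m2(1.7, count=3)

print(f"one 3 m mirror:      {monolith:.2f} m^2")
print(f"three 1.7 m mirrors: {array:.2f} m^2")
print(f"array / monolith:    {array / monolith:.1%}")
```

The ratio is simply 3 × 1.7² / 3² ≈ 0.96, so the array gives up very little light-gathering area; whatever advantage it brings presumably comes from how its light is spread out and processed rather than from collecting more photons.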

In terms of the science, Fleming noted that the point spread function spreads out when multiple smaller mirrors are used, and objects become detectable that would not be with a monolithic single mirror instrument. The technique in play is called Binary Spatial Mode Demultiplexing. Here the idea is to extract quantum modes of light in the imaging system and process them separately. The central mode – aligned with the point spread function of the central star – is the on-axis light. The off-axis photons, sorted into a separate detector, are from what surrounds the star.
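To give a feel for why sorting light by spatial mode helps, here is a toy model. It is not the Arizona group's simulation. A one-dimensional Gaussian stands in for the real point spread function (an assumption; the true PSF is an Airy pattern), and the sorter is assumed perfect, with no crosstalk. For a point source offset by X from the axis, the fraction of its photons landing in the on-axis (fundamental) mode is the squared overlap of the two shifted PSFs, which for a Gaussian works out to exp(-X²/4σ²), where σ is the width of the intensity profile. Everything else goes to the off-axis detector. The function names are hypothetical.

```python
import math


def gaussian_amplitude(x: float, sigma: float) -> float:
    """1-D Gaussian amplitude PSF, normalized so |psi|^2 integrates to 1 with std dev sigma."""
    return (2 * math.pi * sigma**2) ** -0.25 * math.exp(-(x**2) / (4 * sigma**2))


def on_axis_fraction(offset: float, sigma: float, n: int = 4001) -> float:
    """Fraction of a point source's light coupled into the fundamental (on-axis) mode.

    Computes |<psi(x) | psi(x - offset)>|^2 with the trapezoid rule.
    """
    half_width = abs(offset) + 12 * sigma  # tails beyond this are negligible
    dx = 2 * half_width / (n - 1)
    overlap = 0.0
    for i in range(n):
        x = -half_width + i * dx
        weight = 0.5 if i in (0, n - 1) else 1.0
        overlap += weight * gaussian_amplitude(x, sigma) * gaussian_amplitude(x - offset, sigma)
    return (overlap * dx) ** 2


def off_axis_fraction(offset: float, sigma: float) -> float:
    """Binary sorting: whatever is not in the fundamental mode goes to the second detector."""
    # Clamp tiny negative values caused by floating-point rounding at zero offset.
    return max(0.0, 1.0 - on_axis_fraction(offset, sigma))


SIGMA = 1.0  # PSF width; offsets below are in units of sigma
for offset in (0.0, 0.1, 0.5, 1.0):
    numeric = off_axis_fraction(offset, SIGMA)
    closed_form = 1.0 - math.exp(-(offset**2) / (4 * SIGMA**2))
    print(f"offset {offset:.1f} sigma: off-axis {numeric:.5f} (closed form {closed_form:.5f})")
```

In this idealized model a perfectly aligned star (offset 0) sends essentially nothing to the off-axis port, while a companion at a tenth of the PSF width still sends about 0.25% of its light there (roughly X²/4σ² for small offsets). In a conventional image, that companion's light would sit almost entirely on top of the star's PSF.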

So in a way we’re nudging inside the Rayleigh Limit by processing the light, nulling out or dimming the star’s light while intensifying the signal of anything surrounding the star. I’m reminded, of course, of all the work that has gone into coronagraphs and starshades in the attempt to darken the star while revealing the planets around it. In fact, some of the earliest research that convinced me to write my Centauri Dreams book was the work of Webster Cash out at the University of Colorado on starshades for this purpose, with the goal of seeing continents and oceans on an exoplanet. I later learned as well of Sara Seager’s immense contributions to the concept.

Thus far the simulations that have been run at the University of Arizona by Fleming’s colleagues have shown far higher detection rates for an exoplanet around a star using multi-aperture telescopes. In fact, there is a 100x increase in sensitivity for multi-aperture methods. This early work indicates it should be possible to identify the presence of an exoplanet in a given system with this ground-based detection method.

Can we go further? The prospect of direct imaging using off-axis photons is conceivable if futuristic. If we could create an image like this one, we would be able to study this hypothetical world over time, watching the change of seasons and mining data on the land masses and oceans as the world rotates. The possibility of doing this from Earth’s surface is startling. No wonder super-resolution is a growing field of study, and one now being addressed within the astronomical community as well as elsewhere.

IIRC, wasn’t there some clever offline light interferometry used for the visualization of the first black hole? We cannot do light interferometry for distant mirrors…yet, but I think I recall that the light was carefully recorded and then combined with a computer to get the desired resolution.

If so, might we not use this technique with telescopes on the other side of the Earth to create the needed resolving power?

Also, wasn’t there a talk by scientists on the SETI webinar about using multiple telescopes to improve the resolution? I cannot recall if they were optical or radio telescopes. I assume optical as this wouldn’t be new for the radio telescope world.

Wavelength is important with interferometry. Would it be possible to do interferometry with distant telescopes in the far infrared rather than optical light, or is the increased wavelength still not long enough?

Long-distance interferometry, known as very long baseline interferometry, only works with longer wavelengths such as the radio waves collected by radio telescopes. Before starshades and coronagraphs, there was the idea of blocking the star’s light by combining the light of several telescopes, that is, interferometry in space: expensive, but still a valid way to image exoplanets.

These new very large land-based telescopes will have the problem of the Rayleigh limit. Maybe only an extremely large space telescope will work, since there is no atmosphere and no Rayleigh limit, so it will be able to see actual images of exoplanets if the mirror is large enough. If there is a way to overcome the Rayleigh limit with computers, that would be great. We can still use very large ground-based telescopes, as they are completed, for transmission spectroscopy and light polarization techniques, so we can still discover biosignatures of life and corroborate anything found by the JWST.

The Rayleigh limit is a wave phenomenon and limits observations in space as well as on Earth. On Earth, of course, there are numerous other factors induced by the atmosphere. But the Rayleigh Limit still applies in space.

Thank you. I stand corrected. I was not familiar with the idea that the Rayleigh criterion, diffraction and refraction apply to the entire electromagnetic spectrum, and therefore depend on the wavelength and the size of the mirror or light-gathering power. A larger telescope mirror, or the sum of many mirrors, gives better resolution. Wouldn’t more mirror length be needed to gather more photons? There is always artificial intelligence. One is looking at an extremely small and faint point of light, which is how an exoplanet looks from a great distance. Hopefully we will be able to see something without having to build a huge telescope at a huge cost.

Actually, there is a technique on the verge of making optical interferometry on a planet-wide scale possible, built on quantum memory, quantum error-correction codes and the STIRAP technique.

“In the ‘encoder’ stage, the signal is captured into the quantum memories via the STIRAP technique, which allows the incoming light to be coherently coupled into a non-radiative state of an atom. The ability to capture light from astronomical sources that account for quantum states (and eliminates quantum noise and information loss) would be a game-changer for interferometry. Moreover, these improvements would have significant implications for other fields of astronomy that are also being revolutionized today.”

A new quantum technique could enable telescopes the size of planet Earth.

https://phys.org/news/2022-05-quantum-technique-enable-telescopes-size.html

Now, if we could merge the quantum data from telescopes across the solar system, the resolution would be higher than the Sun’s gravity lens!

If that technique can be successfully applied to VLBI at optical wavelengths, that would be awesome. I would like to see some evidence that it works for actual telescopes. If each telescope needs the same entangled particles, that might be a bit problematic, but we seem to be making headway in keeping quantum states stable in quantum computers.

The article cited is already over a year old. Has any progress been made since then?

What are the units of theta, lambda and D?

I presume they are arcseconds, microns and meters, respectively.

from Wikipedia…

theta is in radians

lambda and D are in the same units

the units cancel out and radians are dimensionless.

From Wikipedia (Optical Resolution)

θ = 1.22λ/D

θ is the angular resolution in radians,

λ is the wavelength of light in meters,

and D is the diameter of the lens aperture in meters.

I suppose you would have to convert radians to the preferred units.

1 radian = 206265 arc seconds
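Putting these answers together: if you want to plug in the units guessed at earlier (wavelength in microns, aperture in meters, answer in arcseconds), the conversions fold into a single constant. A minimal sketch; the 0.55 µm and 8.5 m values are only illustrations.

```python
import math

# Exact radians-to-arcseconds factor: 180 * 3600 / pi, about 206265.
ARCSEC_PER_RADIAN = 180 * 3600 / math.pi


def rayleigh_arcsec(wavelength_um: float, aperture_m: float) -> float:
    """Rayleigh limit in arcseconds, with wavelength in microns and aperture in meters."""
    wavelength_m = wavelength_um * 1e-6  # microns -> meters, so lambda/D is dimensionless
    theta_rad = 1.22 * wavelength_m / aperture_m
    return theta_rad * ARCSEC_PER_RADIAN


# Folding all the conversions together gives theta[arcsec] ~ 0.2516 * lambda[um] / D[m].
print(f"constant: {rayleigh_arcsec(1.0, 1.0):.4f}")
print(f"0.55 um on an 8.5 m mirror: {rayleigh_arcsec(0.55, 8.5):.4f} arcsec")
```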

Pretty sure they would be radians, meters, and meters respectively. Stay safe.

Every photon has a history, even if one postulates the single-photon universe. A telescope is a device to deduce some part of the history of a batch of related photons. The bigger, more numerous and more widely separated the batches, provided they can still be herded into comprehensible patterns, the more of their history they will reveal to us.

A picture of a black hole was taken with multiple widely separated but interconnected telescopes. A couple of them on the moon might be helpful.

Indeed. The Event Horizon Telescope was in effect an Earth-sized aperture using these methods. Remarkable results!
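For an interferometer, the rough resolution scale is λ/B, where B is the longest baseline between telescopes (the 1.22 factor belongs to a filled circular aperture, so it is dropped here). This small sketch compares an Earth-sized baseline with the Earth-Moon distance suggested above. The 1.3 mm wavelength is an assumption, close to the Event Horizon Telescope's observing band, and the distances are round values.

```python
import math

ARCSEC_PER_RADIAN = 180 * 3600 / math.pi


def baseline_resolution_uas(wavelength_m: float, baseline_m: float) -> float:
    """Approximate interferometer resolution lambda / B, in microarcseconds."""
    return wavelength_m / baseline_m * ARCSEC_PER_RADIAN * 1e6


WAVELENGTH_M = 1.3e-3  # assumed: ~1.3 mm, roughly the EHT band
BASELINES_M = {
    "Earth diameter": 1.2742e7,  # ~12,742 km
    "Earth-Moon distance": 3.844e8,  # ~384,400 km, mean
}

for name, baseline in BASELINES_M.items():
    print(f"{name}: ~{baseline_resolution_uas(WAVELENGTH_M, baseline):.2f} microarcseconds")
```

An Earth-sized baseline lands around 20 microarcseconds, roughly the scale the EHT worked at. A station on the Moon would in principle sharpen that by about a factor of 30, although a sparse array of a few stations samples the image far less completely than a filled mirror would.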

To make high-resolution radio images these days, they use telescopes at various points on the Earth, record and time-stamp the incoming photons, then combine them to match their phases.

Long-wave radio is fairly easy to phase-match with our current technology, but our ability to measure time is getting down to the attosecond level, so I wonder if one day we can time-stamp light photons from telescopes all over Earth to phase-match them and construct an image.

Time stamping isn’t the problem. There is no way to record the photons that preserves the complex (phase, amplitude) components. The frequency is far, far beyond our technology. Therefore you have to directly combine the actual photons. That’s exceedingly difficult for telescopes that are far apart.
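Some rough numbers behind this exchange: combining signals coherently means knowing each wave's phase to a small fraction of one oscillation. The sketch below compares the oscillation period of ~1.3 mm radio waves (assumed, the band used for the black hole image) with 550 nm visible light, and the timing precision needed to hold phase to a tenth of a cycle (an arbitrary illustrative threshold).

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def period_s(wavelength_m: float) -> float:
    """Time for one full oscillation of an electromagnetic wave of this wavelength."""
    return wavelength_m / C


PHASE_FRACTION = 0.1  # assumed tolerance: a tenth of a cycle

for label, wavelength in (("radio, 1.3 mm", 1.3e-3), ("visible, 550 nm", 550e-9)):
    period = period_s(wavelength)
    print(
        f"{label}: frequency {1 / period:.2e} Hz, period {period:.2e} s, "
        f"timing needed ~{PHASE_FRACTION * period:.1e} s"
    )

print(f"visible light oscillates ~{1.3e-3 / 550e-9:.0f} times faster")
```

At visible wavelengths that tolerance is on the order of 180 attoseconds, which is why attosecond timing comes up. Timing alone does not settle the objection above, though: the field still has to be captured with its phase intact.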

Computer interferometry might work since images can be combined and sent over fiber optics.

https://en.wikipedia.org/wiki/Spektr-R

“The main scientific goal of the mission was the study of astronomical objects with an angular resolution up to a few millionths of an arcsecond. This was accomplished by using the satellite in conjunction with ground-based observatories and interferometry techniques.[3] Another purpose of the project was to develop an understanding of fundamental issues of astrophysics and cosmology. This included star formations, the structure of galaxies, interstellar space, black holes and dark matter.”