NIAC’s award of a Phase I grant to study a ‘swarm’ mission to Proxima Centauri naturally ties to Breakthrough Starshot, which continues its interstellar labors, though largely out of the public eye. The award adds a further research channel for Breakthrough’s ideas, and a helpful one at that, for the NASA Innovative Advanced Concepts program supports early stage technologies through three levels of funding, so there is a path for taking these swarm ideas further. An initial paper on swarm strategies was indeed funded by Breakthrough and developed through Space Initiatives and the UK-based Initiative for Interstellar Studies.

Centauri Dreams readers are by now familiar with my enthusiasm for swarm concepts, and not just for interstellar purposes. Indeed, as we develop the technologies to send tiny spacecraft in their thousands to remote targets, we’ll be testing the idea first through computer simulation and then through missions within our own Solar System. Marshall Eubanks, the chief scientist for Space Initiatives, a Florida-based startup focused on 50-gram femtosatellites and their uses near Earth, talks about swarm spacecraft covering cislunar space or analyzing a planetary magnetosphere. Eubanks is lead author of the aforementioned paper.

But the go-for-broke target is another star, and that star is naturally Proxima Centauri, given Breakthrough’s clear interest in the habitable zone planet orbiting there. The NIAC announcement sums up the effort, but I turn to the paper for its discussion of communications with such swarm spacecraft. As Starshot has continued to analyze missions at this scale, it has explored probes with launch masses on the order of grams and onboard power restricted to milliwatts. The communications challenge is daunting indeed given the distances and power available.

If we want to reach a nearby star in this century, so the thinking goes, we should build the kind of powerful laser beamer (on the order of 100 GW) that can push our lightsails and their tiny payloads to speeds that are an appreciable fraction of the speed of light. Moving at 20 percent of c, we reach Proxima space in roughly 20 years, to begin the long process of returning data acquired from the flybys of our probes. Eubanks and colleagues estimate we’ll need thousands of these, because we need to create an optical signal strong enough to reach Earth, one coordinated through a network that is functionally autonomous. We’re way too far from home to control it from Earth.
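As a back-of-envelope check on that timeline, here is a minimal sketch. It assumes a constant cruise speed of 0.2c from launch (the minutes-long laser boost is ignored), a Proxima distance of 4.24 light years, and Earth-frame time throughout; the function names are illustrative, not taken from the paper.

```python
# Mission timeline in the Earth frame. Assumptions: constant cruise speed,
# boost phase ignored, Proxima Centauri at 4.24 light years.
PROXIMA_DISTANCE_LY = 4.24


def cruise_years(distance_ly: float, beta: float) -> float:
    """Earth-frame years to cover distance_ly at a speed of beta * c."""
    return distance_ly / beta


def first_data_years(distance_ly: float, beta: float) -> float:
    """Cruise time plus the light-travel time of the signal back to Earth."""
    return cruise_years(distance_ly, beta) + distance_ly


if __name__ == "__main__":
    t_cruise = cruise_years(PROXIMA_DISTANCE_LY, 0.2)
    t_data = first_data_years(PROXIMA_DISTANCE_LY, 0.2)
    print(f"cruise: {t_cruise:.1f} yr, first data at Earth: {t_data:.1f} yr")
```

At 0.2c the cruise alone takes a little over 21 years, and the first bits then need another 4.24 years to reach us, so data from a launch in year zero would begin arriving roughly a quarter century later.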

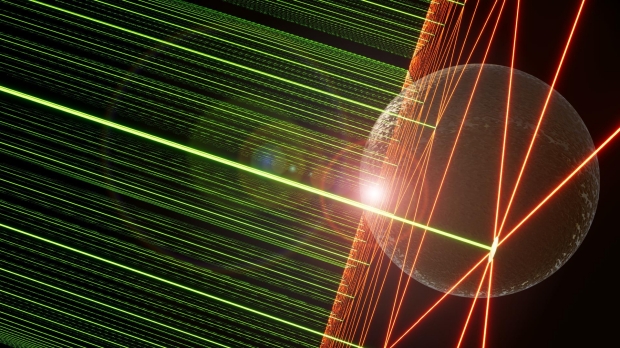

Image: Artist’s impression of swarm passing by Proxima Centauri and Proxima b. The swarm’s extent is ∼10 times larger than the planet’s, yet the ∼5000-km spacing is such that one or more probes will come close to or even impact the planet (flare on limb). It should be possible to do transmission spectroscopy with such swarms. Green 432/539-nm beams are comms to Earth; red 12,000-nm laser beacons are for intra-swarm probe-to-probe comms. Conceptual artwork courtesy of Michel Lamontagne.

The engineering study that has grown out of this vision describes the spacecraft as being ‘operationally coherent,’ meaning they will be synchronized in ways that allow data return. The techniques here are fascinating. Adjusting the initial velocity of each probe (this would be done through the launch laser itself) allows the string of probes to cohere. The laser also allows clock synchronization, so that what began as a string of probes ends up traveling together through the twenty-year journey. In effect, the tail of the string catches up with the head. What emerges is a network.
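The catch-up idea reduces to simple kinematics. The sketch below is a minimal illustration, not the paper’s method: it assumes constant cruise speeds and straight paths, ignores the boost phase and ISM drag, and asks what speed each later probe needs so that every probe reaches Proxima’s distance at the same Earth-frame moment. `tot_speeds` is a hypothetical helper.

```python
# Time-on-target (ToT) sketch: probes launched later get a slightly higher
# cruise speed so that all of them reach the target distance at the same
# Earth-frame time. Assumptions: constant speeds, straight-line paths,
# boost phase and ISM drag ignored.


def tot_speeds(distance_ly: float, first_beta: float,
               launch_delays_yr: list[float]) -> list[float]:
    """Speed (as a fraction of c) each probe needs for a common arrival.

    distance_ly      -- target distance in light years
    first_beta       -- cruise speed of the reference probe (delay 0)
    launch_delays_yr -- launch time of each probe after the reference, in years
    """
    arrival_yr = distance_ly / first_beta  # shared arrival time
    speeds = []
    for delay in launch_delays_yr:
        remaining = arrival_yr - delay
        if remaining <= 0:
            raise ValueError("probe launched too late to catch up")
        speeds.append(distance_ly / remaining)
    return speeds


if __name__ == "__main__":
    # Hypothetical campaign: one launch per day for a week.
    delays = [day / 365.25 for day in range(7)]
    for day, beta in enumerate(tot_speeds(4.24, 0.2, delays)):
        print(f"launch day {day}: {beta:.6f} c")
```

Under these assumptions each extra day of launch delay raises the required speed by roughly a part in ten thousand, which is the kind of fine per-probe tuning the launch laser would have to deliver.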

As the NIAC announcement puts it:

Exploiting drag imparted by the interstellar medium (“velocity on target”) over the 20-year cruise keeps the group together once assembled. An initial string 100s to 1000s of AU long dynamically coalesces itself over time into a lens-shaped mesh network 100,000 km across, sufficient to account for ephemeris errors at Proxima, ensuring at least some probes pass close to the target.

The ingenuity of the communications method emerges from the capability of tiny spacecraft to travel with their clocks in synchrony, along with the ability to map the spatial positions of each member of the swarm. This is ‘operational coherence,’ which means that while each probe returns the same data, each probe’s transmission time is set by its position within the swarm. The result: the data pulses arrive at the same time on Earth, so that while the signal from any one probe would be undetectable, the combined laser pulse from all of them can become bright enough to detect over 4.2 light years.
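The timing rule behind operational coherence can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the paper’s algorithm: each probe’s line-of-sight distance to Earth is taken as known exactly from the swarm’s position map, all probes share one synchronized clock, and motion of the probes and of Earth during light travel is ignored. The helper names are hypothetical.

```python
# Operational-coherence timing sketch: the farthest probe fires first and
# every nearer probe waits out the difference in light-travel time, so all
# pulses reach Earth together. Assumptions: exact line-of-sight distances,
# one shared swarm clock, no probe or Earth motion during light travel.
C_M_PER_S = 299_792_458.0


def transmit_offsets(distances_m: list[float]) -> list[float]:
    """Seconds each probe waits after the farthest probe fires."""
    farthest = max(distances_m)
    return [(farthest - d) / C_M_PER_S for d in distances_m]


def arrival_spread(distances_m: list[float], offsets_s: list[float]) -> float:
    """Spread (max minus min) of Earth arrival times; ~0 when aligned."""
    arrivals = [t + d / C_M_PER_S for d, t in zip(distances_m, offsets_s)]
    return max(arrivals) - min(arrivals)


if __name__ == "__main__":
    LY_M = 9.4607e15
    # Hypothetical probes spaced 5,000 km apart along the line of sight.
    distances = [4.24 * LY_M + i * 5.0e6 for i in range(4)]
    offsets = transmit_offsets(distances)
    print([f"{t * 1e3:.1f} ms" for t in offsets])
    print(f"arrival spread: {arrival_spread(distances, offsets):.1e} s")
```

A probe 5,000 km farther from Earth fires about 17 ms earlier than its neighbor. Because every pulse then lands in the same detection window, the received photon count grows roughly in proportion to the number of probes.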

The paper cites a ‘time-on-target’ technique to allow the formation of effective swarm topologies, while a finer-grained ‘velocity-on-target’ method is what copes with the drag imparted by the interstellar medium. This one stopped me short, but digging into it I learned that the authors talk about adjusting the attitude of individual probes as needed to keep the swarm in coherent formation. The question of spacecraft attitude also applies to the radiation and erosion concerns of traveling at these speeds, and I think I’m right in remembering that Breakthrough Starshot has always contemplated the individual probes traveling edge-on during cruise with no roll axis rotation.
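Why attitude can serve as a velocity trim is easy to see with a crude drag model. This sketch uses illustrative assumptions, not the paper’s model: every swept-up hydrogen atom gives up all its momentum, momentum is treated non-relativistically, the ISM density is a round 0.1 atoms per cm³, and the probe is a 4 m, 3.6 g disc with a hypothetical 1 micron thickness modelled as a thin cylinder.

```python
import math

# Velocity-on-target (VoT) sketch: ISM drag depends on the area a probe
# presents to the oncoming gas, so changing attitude changes deceleration.
# Illustrative assumptions, not the paper's model: every swept-up hydrogen
# atom gives up all its momentum, non-relativistic momentum, density of
# 0.1 atoms per cm^3, a 4 m disc of 3.6 g and a hypothetical 1 micron
# thickness modelled as a thin cylinder.
M_PROTON_KG = 1.6726e-27
C_M_PER_S = 299_792_458.0


def projected_area_m2(diameter_m: float, thickness_m: float, tilt_rad: float) -> float:
    """Cross-section seen along the velocity vector.

    tilt_rad is the angle between the disc plane and the velocity:
    0 is edge-on, pi/2 is face-on.
    """
    face = math.pi * (diameter_m / 2) ** 2 * abs(math.sin(tilt_rad))
    edge = diameter_m * thickness_m * abs(math.cos(tilt_rad))
    return face + edge


def drag_deceleration(n_per_m3: float, beta: float, area_m2: float, mass_kg: float) -> float:
    """Deceleration (m/s^2) from sweeping up ISM hydrogen: rho * v^2 * A / M."""
    v = beta * C_M_PER_S
    return n_per_m3 * M_PROTON_KG * v ** 2 * area_m2 / mass_kg


if __name__ == "__main__":
    n_ism = 0.1 * 1e6  # 0.1 per cm^3 expressed per m^3
    for deg in (0, 1, 10, 90):
        area = projected_area_m2(4.0, 1e-6, math.radians(deg))
        a = drag_deceleration(n_ism, 0.2, area, 3.6e-3)
        print(f"tilt {deg:2d} deg: area {area:.2e} m^2, decel {a:.2e} m/s^2")
```

Face-on, the deceleration comes out around 2×10⁻³ m/s², enough to shed on the order of a couple of percent of the cruise speed over 20 years (a rough figure, since the speed itself changes), while edge-on it is smaller by the area ratio of about three million. Even a one-degree tilt raises the exposed area by orders of magnitude over edge-on, so small attitude changes give a wide range of drag.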

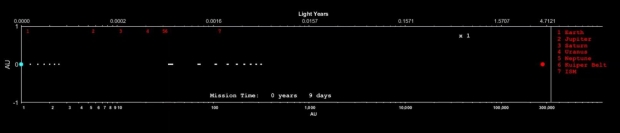

Image: This is Figure 2a from the paper. Caption: A flotilla (sub-fleet) of probes (far left), individually fired at the maximum tempo of once per 9 minutes, departs Earth (blue) daily. The planets pass in rapid succession. Launched with the primary ToT technique, the individual probes draw closer to one another inside the flotilla, while the flotilla itself catches up with previously launched flotillas exiting the outer Solar System (middle) at ∼100 AU. For the animation go to https://www.youtube.com/watch?v=jMgfVMNxNQs (Hibberd 2022).
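The caption’s launch tempo implies some simple arithmetic about flotilla size. The caption does not say how long each daily firing window lasts, so this sketch treats it as a parameter: a full 24-hour window gives an upper bound with no downtime, and the 4-hour window is purely hypothetical. The helper names are illustrative.

```python
import math

# Launch-cadence arithmetic from the Figure 2a caption: at most one probe
# every 9 minutes, with one flotilla departing per day. The caption does not
# state how long the daily firing window is, so it is a parameter here; a
# full 24-hour window gives an upper bound with no downtime.


def probes_per_flotilla(window_minutes: float, minutes_between_shots: float = 9.0) -> int:
    """Number of launch slots that fit in one daily window."""
    return int(window_minutes // minutes_between_shots)


def days_for_fleet(total_probes: int, window_minutes: float,
                   minutes_between_shots: float = 9.0) -> int:
    """Days of daily flotillas needed to launch the whole fleet."""
    per_day = probes_per_flotilla(window_minutes, minutes_between_shots)
    return math.ceil(total_probes / per_day)


if __name__ == "__main__":
    for hours in (24, 4):  # 4 h is a hypothetical nightly window
        w = hours * 60
        print(f"{hours:2d} h window: {probes_per_flotilla(w)} probes/day, "
              f"{days_for_fleet(1000, w)} days for 1,000 probes")
```

Round the clock, a flotilla tops out at 160 probes and a 1,000-probe fleet leaves in a week; with a four-hour window the flotilla shrinks to 26 probes and the same fleet takes 39 days, which stretches the string the ToT technique has to close up.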

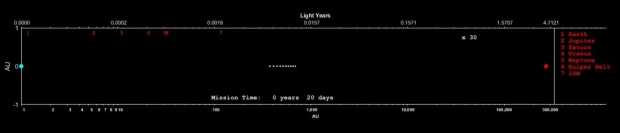

Figure 2b takes the probe ensemble into the Oort Cloud.

Image: Figure 2b caption: Time sped up by a scale factor of 30. The last flotilla launched draws closer to the earlier flotillas; the full fleet begins to coalesce (middle), now under both the primary ToT and secondary VoT techniques, beyond the Kuiper-Edgeworth Belt and on entry into the Oort Cloud at ∼1000–10,000 AU.

When we talk about using collisions with the interstellar medium to create velocities transverse to the direction of travel, we’re describing a method that again demands autonomy, or what the paper describes as a ‘hive mind,’ a familiar science fiction trope. The hive mind will be busy indeed, for its operations must include not just cruise control over the swarm’s shape but interactions during the data return phase. From the paper:

With virtually no mass allowance for shielding, attitude adjustment is the only practical means to minimize the extreme radiation damage induced by traveling through the ISM at 0.2c. Moreover, lacking the mass budget for mechanical gimbals or other means to point instruments, then controlling attitude and rate changes of the entire craft in pitch, yaw, roll, is the only practical way [to] aim onboard sensors for intra-swarm communications, interstellar comms with Earth and imagery acquisition / distributed processing at encounter.
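To see why attitude ends up doing the job of shielding, this sketch counts the interstellar hydrogen atoms a probe would sweep up during cruise, face-on versus edge-on, and the kinetic energy each carries in the probe’s frame. Assumptions are illustrative: 0.1 atoms per cm³, 20 years at 0.2c in the Earth frame, and a 4 m disc with a hypothetical 1 micron thickness. The helper names are hypothetical.

```python
import math

# Radiation sketch: how many interstellar hydrogen atoms strike a probe over
# the cruise, face-on versus edge-on, and the kinetic energy each one carries
# in the probe's frame. Illustrative assumptions: 0.1 atoms per cm^3, 20 years
# at 0.2c (Earth frame), a 4 m disc with a hypothetical 1 micron thickness.
C_M_PER_S = 299_792_458.0
PROTON_REST_MEV = 938.272
SECONDS_PER_YEAR = 365.25 * 86_400


def lorentz_gamma(beta: float) -> float:
    return 1.0 / math.sqrt(1.0 - beta ** 2)


def proton_kinetic_mev(beta: float) -> float:
    """Kinetic energy of an ISM proton as seen from a probe moving at beta."""
    return (lorentz_gamma(beta) - 1.0) * PROTON_REST_MEV


def atoms_swept(n_per_m3: float, beta: float, area_m2: float, years: float) -> float:
    """Number of atoms in the volume swept out by the probe's cross-section."""
    return n_per_m3 * beta * C_M_PER_S * area_m2 * years * SECONDS_PER_YEAR


if __name__ == "__main__":
    face_on = atoms_swept(1e5, 0.2, math.pi * 2.0 ** 2, 20)
    edge_on = atoms_swept(1e5, 0.2, 4.0 * 1e-6, 20)
    print(f"each proton: {proton_kinetic_mev(0.2):.1f} MeV")
    print(f"face-on hits: {face_on:.1e}, edge-on hits: {edge_on:.1e}")
```

Each proton arrives with roughly 19 MeV, and face-on the count runs to order 10²² over the cruise; edge-on it falls by the area ratio, about three million under these assumptions.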

I gather that other techniques for interacting with the interstellar medium will come into play in the NIAC work, for the paper speaks of using onboard ‘magnetorquers,’ an attitude adjustment mechanism currently in use in low-mass CubeSats in low Earth orbit. It’s an awkward coinage, but a magnetorquer is a magnetic torquer, or torque rod, developed for attitude control in a given inertial frame. The method works through the interaction between a magnetic field generated onboard and the ambient magnetic field (in current cases, that of the Earth). Are magnetic fields in the interstellar medium strong enough to support this method? The paper notes that this needs to be assessed.
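The scale of that question shows up in the basic torque law, τ = m × B. This sketch assumes order-of-magnitude field strengths (about 3×10⁻⁵ T in low Earth orbit and about 3×10⁻¹⁰ T, a few microgauss, in the local interstellar medium) and a hypothetical 10⁻³ A·m² dipole; none of these values come from the paper.

```python
# Magnetorquer sketch: the torque on a current loop is tau = m x B, the cross
# product of its magnetic dipole moment m (A*m^2) and the ambient field B (T).
# Illustrative assumptions: about 3e-5 T in low Earth orbit, about 3e-10 T
# (a few microgauss) in the local interstellar medium, and a hypothetical
# 1e-3 A*m^2 dipole.
Vector = tuple[float, float, float]


def cross(a: Vector, b: Vector) -> Vector:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def norm(v: Vector) -> float:
    return sum(x * x for x in v) ** 0.5


def torque(dipole_am2: Vector, field_t: Vector) -> Vector:
    """Torque in N*m on a dipole in a uniform field."""
    return cross(dipole_am2, field_t)


if __name__ == "__main__":
    m = (1e-3, 0.0, 0.0)
    for label, b in (("LEO", (0.0, 3e-5, 0.0)), ("ISM", (0.0, 3e-10, 0.0))):
        print(f"{label}: |tau| = {norm(torque(m, b)):.1e} N*m")
```

With these numbers the same dipole produces five orders of magnitude less torque between the stars than in low Earth orbit, which is why the strength of the interstellar field matters so much here.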

A solid-state probe has no moving parts, but it’s also clear that further simulations will explore the use of what the paper calls MEMS (micro-electromechanical systems) trim tabs that could be spaced symmetrically to provide dynamic control by producing an asymmetric torque. This sounds like a kludge, though one that needs exploring given the complexities of adjusting attitudes throughout a swarm. We’ll see where the idea goes as it matures in the NIAC phase. All this will be critical if we are to link the probes within the swarm into the signaling array that will bring the Proxima data home.

Interestingly, the kind of probes the paper describes may vary in some features:

We note for the record that although all probes are assumed to be identical, implicitly in the community and explicitly in the baseline study, there is in fact no necessity for them to be “cookie cutter” copies, since the launch laser must be exquisitely tunable in the first place, capable of providing a boost tailored to every individual probe. At minimum, probes can be configured and assigned for different operations while remaining dynamically identical, or they can be made truly heterogeneous wherein each probe could be rather different in form and function, if not overall mass and size.

There is so much going on in this paper, particularly the issue of the orbital position of Proxima b, which you would think would be known well enough by now (but guess again). The question of carrying enough stored energy for the two-decade mission is a telling one. But the overwhelming need is to get information back to Earth. How data would be received from these distances has always bedeviled the Starshot idea, and having followed the conversation on this for some time now, I find the methods proposed here seriously intriguing. We’ll dig into these issues in the next post.

The paper is Eubanks et al., “Swarming Proxima Centauri: Optical Communication Over Interstellar Distances,” submitted to the Breakthrough Starshot Challenge Communications Group Final Report and available online.

Reminds me of Kennedy’s “we do these things” words

To Larry: Thanks, from another someone named Kennedy, co-author with my colleague Marshall.

To Alex: 1 gram is the allowed payload; the total launch mass is 3.6 g, per the baseline study by Parkin in 2016. The flat diameter is 4 m, hence the circular area is about 13 m^2. A hyperboloid of revolution shows promise of being stable during illumination by the launch laser, aka the “photon engine”. Yes, the idea is that the ones launched later are boosted faster in order to catch up with the slower ones launched first; the military calls this “time on target”. To which we add another degree of control, varying attitude with respect to the ISM to provide differential deceleration, or “velocity on target”. Individual probes do generate a coherent signal across their full face (4 m); a thousand of them synchronize these blinks (hence the role of good clocks, in the swarm and on Earth) into one very short but relatively bright blink that overcomes the background noise from Proxima. The huge launch laser gets used long after launch, as a sort of metronome (the master clock signal from Earth to keep everyone synched) and as a piece of scientific apparatus utilized during flyby, which we dubbed the “interstellar flashlight”. There are other innovations, too, like simple solid-state betavoltaic batteries fueled with a cheap radioisotope to provide decades of power at tens of milliwatts per probe.
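The numbers in that reply (4 m diameter, 3.6 g, a 100 GW beam) lead directly to the sail’s areal density and a rough boost profile. This sketch makes optimistic assumptions that are not from the thread: the sail intercepts the whole beam, nothing is transmitted (so force is (1 + R)P/c), and relativistic Doppler losses and the growing beam spot are ignored. The helper names are illustrative.

```python
import math

# Sail numbers from the comment above: 4 m diameter, 3.6 g launch mass,
# 100 GW beam. Optimistic assumptions: the sail intercepts the whole beam,
# nothing is transmitted (force = (1 + R) * P / c), and relativistic Doppler
# losses and the growing beam spot are ignored.
C_M_PER_S = 299_792_458.0
G0 = 9.80665  # standard gravity, m/s^2


def disc_area_m2(diameter_m: float) -> float:
    return math.pi * (diameter_m / 2) ** 2


def areal_density_g_per_m2(mass_g: float, diameter_m: float) -> float:
    return mass_g / disc_area_m2(diameter_m)


def photon_acceleration(power_w: float, mass_kg: float, reflectivity: float = 1.0) -> float:
    """Radiation-pressure acceleration in m/s^2."""
    return (1.0 + reflectivity) * power_w / C_M_PER_S / mass_kg


def boost_seconds(target_beta: float, accel: float) -> float:
    """Time to reach target_beta at constant, non-relativistic acceleration."""
    return target_beta * C_M_PER_S / accel


if __name__ == "__main__":
    a = photon_acceleration(100e9, 3.6e-3)
    print(f"area {disc_area_m2(4.0):.1f} m^2, "
          f"{areal_density_g_per_m2(3.6, 4.0):.2f} g/m^2")
    print(f"acceleration ~{a / G0:,.0f} g, ~{boost_seconds(0.2, a):.0f} s to 0.2c")
```

Even under these generous assumptions the areal density is under 0.3 g/m², and the probe would pull close to 19,000 g for a little over five minutes, which frames the structural questions the thread goes on to raise.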

@Robert Kennedy.

Thank you for the clarification. However, I would point out that a 1-gram probe/spacecraft/payload is used throughout the paper. Whether this refers to other studies is unclear. Table 2 seems to suggest a total mass budget of 1 gram, whilst Table 3 increases this to 3.6 grams with the aerographene addition. The suggestion that the spacecraft is more like a red corpuscle is a stretch. It seems more like a 4-meter diameter planar sail with 2 cm thick edges. The paper mentions both aerographene and aerographite, but states:

Yet Figure 3(d) clearly shows the use of aerographene foam. Is this synonymous with aerographite?

But these relative nitpicks aside, you haven’t attempted to answer the questions I raised.

1. What is the evidence that the design is structurally stable in the face of the 100GW laser array accelerating the sailcraft to 0.2c? Lubin demanded highly reflective sails to prevent heating. Aerographene/aerographite in contrast are almost the opposite. Why would they not vaporize under the beam intensity, much as Lubin’s original idea was to use such lasers to vaporize asteroids for planetary defense?

2. The design assumes that the ISM is homogeneous enough to allow edge-on cruise, despite erosion, while maintaining the close proximity of the probes. But more problematic is the interplanetary medium at Proxima, where the probes must closely coordinate their swarm configuration in the face of stellar electromagnetic and particle radiation that can propel sails (especially these extremely lightweight sails) and may not be uniform at all across the swarm.

Is there going to be any attempt at modeling possible perturbations on the performance of the sails, or is this to be reserved for a later project if the basic ideas can be verified?

3. If sails fall behind, or get out of synchronization range, are these deemed failures, or does the swarm try to reduce its velocity to bring them closer together again? What are the likely swarm decisions if certain percentages of sails fall behind due to increased drag?
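Alex’s first question turns on how much of the beam the sail absorbs. The sketch below does not answer it; it only shows how strongly the equilibrium temperature depends on absorptance. Assumptions are illustrative: the full 100 GW falls uniformly on the 4 m disc, the sail radiates from both faces with an emissivity of 0.5, there is no conduction, and steady state is reached. The absorptance values are hypothetical, not measured properties of aerographene or aerographite.

```python
import math

# Thermal sketch: a 100 GW beam on a 4 m disc, and the radiative-equilibrium
# temperature for a given absorptance. Illustrative assumptions: uniform
# illumination of the full disc, emission from both faces with one
# emissivity, no conduction, steady state.
SIGMA_SB = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def beam_intensity_w_m2(power_w: float, diameter_m: float) -> float:
    return power_w / (math.pi * (diameter_m / 2) ** 2)


def equilibrium_temp_k(intensity_w_m2: float, absorptance: float, emissivity: float) -> float:
    """Solve absorptance * I = 2 * emissivity * sigma * T^4 for T."""
    return (absorptance * intensity_w_m2 / (2.0 * emissivity * SIGMA_SB)) ** 0.25


if __name__ == "__main__":
    intensity = beam_intensity_w_m2(100e9, 4.0)
    print(f"intensity: {intensity:.2e} W/m^2")
    for alpha in (1e-6, 1e-5, 1e-4):  # hypothetical absorptances
        t = equilibrium_temp_k(intensity, alpha, emissivity=0.5)
        print(f"absorptance {alpha:.0e}: ~{t:,.0f} K")
```

The beam delivers roughly 8 GW/m². Because temperature goes as the fourth root of absorbed power, each tenfold increase in absorptance raises the temperature by a factor of about 1.8, so the answer hinges on how small the real absorptance can be made.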

Please note that I am not criticizing the idea of this paper. It is worth doing to explore possible sail technology and swarm possibilities. However, it remains one of the most ambitious technology papers I have read due to the assumption of future technology development and the unknowns still to be determined.

Alex, BTS was divided into three large groups: #1 had the “photon engine” (the launch laser), #2 had the spacecraft per se (the thin disc), and #3 had the communications problem. We were in #3, along with 14 other teams. BTS leadership expressed the opinion that “comms were the longest pole in a tent of long poles”. I laughed when I heard that; figures our crew would pick the tough one. In the NIAC proposal that came after, we simply stated “we presume that a 100-GW beamer and a suitably robust lasersail material will exist by mid-century.” Evidently the evaluators had no problem with that assumption. They had a good reason: reviewers said that even if a trip to Proxima Centauri eventually did not work out, making the concept of “operational coherence” work was worth it all by itself. But to answer one of your questions, work by the big group #2 (I don’t know how many teams they had) showed that one stable shape was a downward-pointing paraboloid, what used to be called an “ogee” in model rocketry.

Robert, the design could also be used as we head toward the solar gravitational lens focal line. If we had several of these spacecraft spaced out along the gravity lens manifold, we would gain the resolution advantage of an aperture the diameter of the Sun. Using the master laser as the sync clock, the data from all the individual probes could be collected to form a very nice image we could work with.

The reference sailships are still the flat 1 g, 1 m^2 design. Do we even know if this configuration will work? (I have seen papers claiming a flat sail will be stable, but I have yet to see actual hardware proof.)

Then we have the issue concerning random perturbations in sail velocity due to the likely heterogeneity of the ISM, slowing some sails more than others. Does this require all the sails to slow down to match the slowest member, or are the slower sails just written off as failures? TBD. If the ISM is heterogeneous, just imagine what the interplanetary medium is going to be like, from dust particles to spatial variations in the stellar wind from Proxima, all potentially breaking up the swarm’s ability to configure itself accurately.

The hardware development is a TBD. The magnetorquers for CubeSats are acknowledged to be orders of magnitude too massive. For the sails to align themselves correctly at Proxima, they must rotate so that the lasers can locate other sails to achieve coherence. That in itself might be a problem. Can such sails even generate a coherent signal beam given their separation? These are just a few of the likely difficulties – hardware development, software development, and the vicissitudes of the medium the sails must navigate.

All in all, an extremely ambitious project. But even if this proves too difficult, it should provide a lot of knowledge about what may be possible.

If this project gets to some hardware development stage, I would like to see such a swarm used in the solar system. The sail velocity could be quite modest and still reach even the outer planets quite quickly, requiring a far smaller laser, although I suspect that the exquisitely controlled beam will be somewhat disrupted by even subtle changes in the atmosphere for a ground-based phased laser array in Chile. However, the possibilities of such an approach could be impressive. [While the photon capture would be low, the swarm could make a huge telescope for imaging.]

I haven’t had a chance to read the paper, but in addition to the complexities you mention, did the authors raise the potential additional challenge of time dilation and length contraction due to relativistic speeds? I know 0.2 c isn’t *that* relativistic in the grand scheme, but it may still be highly relevant if the goal is to have an entire flotilla/swarm arrive at the same time and work coherently together.

Larry, I note this from the paper:

“‘Time on target’ (ToT) means that either velocities, or paths, or both, are adjusted to bring multiple projectiles on a target at the same time. For relativistic spacecraft the paths are largely fixed, so ToT relies on variation of velocity; in the remainder of this section velocities are assumed to be scalar quantities.”

And I don’t believe, as you note, that 0.2 c poses a huge issue in terms of time dilation. The authors do consider the problem of dust impacts and erosion at relativistic speeds, of course. I don’t know if the NIAC project will get into this or not.

Instead of sending heavy radioactive batteries, why not use the dust impacts to generate power?

Why not make them in space and generate a parabolic curve on the disc via:

“A private Ax-1 astronaut will test making a liquid telescope mirror in space”

https://www.space.com/membrane-mirrors-big-space-telescopes

One thing we will have is a very long time to send data back after the flyby. Atomic-scale storage may make it possible to hold the large volumes of data produced by high-resolution mirrors and interferometer-scale imaging.

Maybe use the giant flares of Proxima to slow the swarm.

It would be better to have a curved surface to deflect oncoming hydrogen and helium atoms toward a point where they could be used to heat it or to generate power.

For a speed of 20% of c, the Lorentz factor, gamma, is about 1.02, a roughly 2% effect. Not negligible, but not determinative either. As for time distortion, the head and tail of the swarm are moving at similar speeds to each other, so the differential from one probe to the next, due to time dilation, is infinitesimal. Also, the fleet has two decades to form up. To Alex – any stragglers are on their own.
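To put numbers on the relativity discussion, here is a minimal special-relativity sketch. It assumes constant speed for an Earth-frame cruise of 21.2 years (4.24 light years at 0.2c), ignores boost and drag, and uses a hypothetical speed difference of 10⁻⁴ c between two probes; how large the real spread would be depends on the launch campaign. The helper names are illustrative.

```python
import math

# Special-relativity sketch. Assumptions: constant speed over the whole
# cruise (boost and drag ignored), an Earth-frame cruise of 21.2 years,
# and a hypothetical speed difference between two probes.


def lorentz_gamma(beta: float) -> float:
    return 1.0 / math.sqrt(1.0 - beta * beta)


def proper_time(coord_time: float, beta: float) -> float:
    """Time elapsed on a probe clock while coord_time passes on Earth."""
    return coord_time / lorentz_gamma(beta)


def clock_offset_between(coord_time: float, beta_a: float, beta_b: float) -> float:
    """How far two probe clocks drift apart over the same coordinate time."""
    return abs(proper_time(coord_time, beta_a) - proper_time(coord_time, beta_b))


if __name__ == "__main__":
    years = 21.2
    seconds = years * 365.25 * 86_400
    print(f"gamma(0.2) = {lorentz_gamma(0.2):.4f}")
    print(f"probe clock lags Earth by {years - proper_time(years, 0.2):.2f} yr")
    drift = clock_offset_between(seconds, 0.2, 0.2001)
    print(f"drift for a 1e-4 c speed difference: {drift / 3600:.1f} h")
```

Over the cruise the probe clocks fall behind Earth’s by roughly five months, and under these assumptions two probes differing by 10⁻⁴ c drift apart by a few hours, small next to the mission but large next to the pulse timing, unless something such as the laser “metronome” described earlier in the thread keeps resynchronizing them.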

It seems to me that the coalescing of the flotilla in Fig. 2b is an illusion of the log scale. On a linear scale it would appear to be diverging. If so, it should have been plotted in a zoomed-in window.

Our orbital dynamicist (perhaps astrogator is the better term), the remarkable Adam Hibberd, and I made an artistic decision, since making an animation without logarithmic compression in both distance and elapsed time would be like making a video of drying paint or growing grass.

This further develops interstellar swarm technology which could be applied to interstellar colonization as described in the Centauri Dreams article Engineered Exogenesis.

First space junk, then pushing interstellar probes…

https://futurism.com/the-byte/ground-laser-shoot-down-space-junk

So many probes sent to such a distance reminds me of a book I had heard of but never been able to find:

The Anarchist’s guide to herding cats

Could this be what you are looking for?

http://www.roguecom.com/rogueradio/herdingcats.html