The challenges involved in sending gram-class probes to Proxima Centauri could not be more stark. They’re implicit in Kevin Parkin’s analysis of the Breakthrough Starshot system model, which ran in Acta Astronautica in 2018 (citation below). The project settled on twenty percent of the speed of light as a goal, one that would reach Proxima Centauri b well within the lifetime of researchers working on the project. The probe mass is 3.6 grams, with a 200 nanometer-thick sail some 4.1 meters in diameter.
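To put that starkness in numbers, here is a back-of-envelope sketch. It assumes a distance of 4.25 light-years, treats the 3.6 grams as the whole moving mass, and ignores the short acceleration phase. It is an illustration only, not Parkin's system model.

```python
import math

C = 2.99792458e8          # speed of light, m/s
DISTANCE_LY = 4.25        # assumed Earth-Proxima distance, light-years
BETA = 0.20               # cruise speed as a fraction of c (from the post)
MASS_KG = 3.6e-3          # probe mass, 3.6 g (from the post)

def cruise_years(distance_ly, beta):
    """Coast time in Earth years, ignoring the brief acceleration phase."""
    return distance_ly / beta

def kinetic_energy_j(mass_kg, beta):
    """Relativistic kinetic energy, (gamma - 1) m c^2."""
    gamma = 1.0 / math.sqrt(1.0 - beta ** 2)
    return (gamma - 1.0) * mass_kg * C ** 2

t_cruise = cruise_years(DISTANCE_LY, BETA)
t_news = t_cruise + DISTANCE_LY   # add the light time for data to come home
ke = kinetic_energy_j(MASS_KG, BETA)
print(f"cruise ~{t_cruise:.1f} yr, first data ~{t_news:.1f} yr after launch")
print(f"kinetic energy per probe ~{ke / 1e12:.1f} TJ")
```

On these assumptions each gram-class probe carries several terajoules of kinetic energy, and the first data arrive roughly a quarter century after launch.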

The paper we’ve been looking at from Marshall Eubanks (along with a number of familiar names from the Initiative for Interstellar Studies including Andreas Hein, his colleague Adam Hibberd, and Robert Kennedy) accepts the notion that these probes should be sent in great numbers, and not only to exploit the benefits of redundancy to manage losses along the way. A “swarm” approach in this case means a string of probes launched one after the other, using the proposed laser array in the Atacama Desert. The exciting concept here is that these probes can re-form themselves from a string into a flat, lens-shaped mesh network some 100,000 kilometers across.

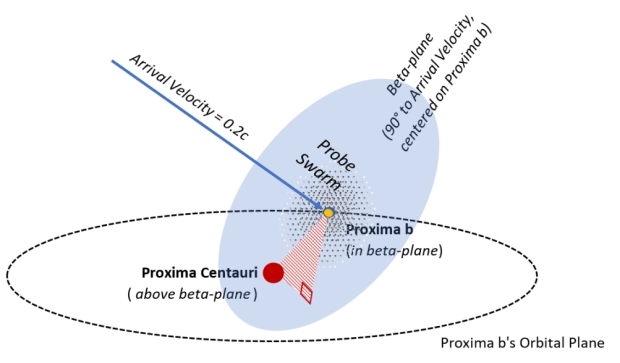

Image: Figure 16 from the paper. Caption: Geometry of swarm’s encounter with Proxima b. The Beta-plane is the plane orthogonal to the velocity vector of the probe “at infinity” as it approaches the planet; in this example the star is above (before) the Beta-plane. To ensure that the elements of the swarm pass near the target, the probe-swarm is a disk oriented perpendicular to the velocity vector and extended enough to cover the expected transverse uncertainty in the probe-Proxima b ephemeris. Credit: Eubanks et al.

The Proxima swarm presents one challenge I hadn’t thought of. We have to be able to predict the position of Proxima b to within 10,000 kilometers at least 8.6 years before flyby, the time for a complete information cycle from Earth to Proxima and back to Earth. Effectively, we need to determine the planet’s velocity to within 1 meter per second, with a correspondingly tight angular position (0.1 microradians).

Although we already have Proxima b’s period (11.68 days), we need to determine its line of nodes, eccentricity, inclination and epoch, and also its perturbations by the other planets in the system. At the time of flyby, the most recent Earth update will be at least 8.5 years old. The Proxima b orbit state will need to be propagated over at least that interval to predict its position, and that prediction needs to be accurate to the order of the swarm diameter.
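A rough sketch of why that propagation is demanding. It assumes a circular orbit, a distance of 4.25 light-years and a semi-major axis of 0.0485 AU for Proxima b (neither figure comes from the post), and it looks only at the along-track drift caused by an error in the orbital period.

```python
import math

AU_M = 1.495978707e11       # metres per astronomical unit
DAY_S = 86_400.0
YEAR_D = 365.25

DISTANCE_LY = 4.25          # assumed distance to Proxima Centauri
PERIOD_D = 11.68            # Proxima b orbital period (from the post)
A_AU = 0.0485               # assumed semi-major axis of Proxima b
TOLERANCE_KM = 10_000       # required position accuracy (from the post)

def round_trip_years(distance_ly):
    """Light time Earth -> Proxima -> Earth, in years."""
    return 2.0 * distance_ly

def orbits_elapsed(years, period_d):
    """Number of orbits the planet completes over the propagation span."""
    return years * YEAR_D / period_d

def max_period_error_s(years, period_d, a_au, tolerance_km):
    """Largest period error whose along-track drift stays within tolerance,
    for a circular orbit, ignoring every other error source."""
    v_orb = 2.0 * math.pi * a_au * AU_M / (period_d * DAY_S)   # m/s
    n = orbits_elapsed(years, period_d)
    # After n orbits a period error dP displaces the planet by v_orb * n * dP.
    return tolerance_km * 1e3 / (v_orb * n)

years = round_trip_years(DISTANCE_LY)
n_orbits = orbits_elapsed(years, PERIOD_D)
dp = max_period_error_s(years, PERIOD_D, A_AU, TOLERANCE_KM)
print(f"propagation span ~{years:.1f} yr, about {n_orbits:.0f} orbits")
print(f"period must be known to ~{dp:.2f} s")
```

Under these assumptions the period alone has to be pinned down to better than about a second, before eccentricity, inclination and planetary perturbations are even considered.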

The authors suggest that a small spacecraft in Earth orbit can refine Proxima b’s position and the star’s ephemeris, but note that a later paper will dig into this further.

In the previous post I looked at the “Time on Target” and “Velocity on Target” techniques that would make swarm coherence possible. Variations in acceleration and velocity allow later-launched probes to reach higher speeds, and higher drag then slows them to match the speed of the craft sent before them as they catch up. From the paper again:

A string of probes relying on the ToT technique only could indeed form a swarm coincident with the Proxima Centauri system, or any other arbitrary point, albeit briefly. But then absent any other forces it would quickly disperse afterwards. Post-encounter dispersion of the swarm is highly undesirable, but can be eliminated with the VoT technique by changing the attitude of the spacecraft such that the leading edge points at an angle to the flight direction, increasing the drag induced by the ISM, and slowing the faster swarm members as they approach the slower ones. Furthermore, this approach does not require substantial additional changes to the baseline BTS [Breakthrough Starshot] architecture.

In other words, probes launched at different times with a difference in velocity target a point on their trajectory where the swarm can cohere, as the paper puts it. The resulting formation is then retained for the rest of the mission. The plan is to adjust the attitude of the probes continually as they move through the interstellar medium, which varies their aspect ratio and sectional density. A probe can move edge-on, for instance, or fully face-on, with variations in between. The goal is that the probes launched later in the process catch up with, but do not move past, the early probes.
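A toy model of the two ideas, under heavy simplifying assumptions: probes coast at constant speed, and switching from edge-on to face-on simply adds a constant deceleration (real ISM drag depends on density, speed and attitude). The function names and all numbers are hypothetical and not taken from the paper.

```python
C = 2.99792458e8  # speed of light, m/s

def catch_up_time(v_lead, v_trail, launch_gap_s):
    """ToT picture: seconds after the lead probe's launch at which a faster,
    later-launched probe draws level, both coasting at constant speed."""
    if v_trail <= v_lead:
        raise ValueError("trailing probe must be faster to catch up")
    return v_trail * launch_gap_s / (v_trail - v_lead)

def vot_close(gap_m, closing_mps, brake_mps2, dt=10.0):
    """VoT picture in the lead probe's frame. The trailing probe coasts
    edge-on, then turns face-on (modeled as a constant extra deceleration)
    once the remaining gap equals its braking distance u**2 / (2a).
    Returns the final gap (m) and the elapsed time (s)."""
    t = 0.0
    braking = False
    while closing_mps > 0.0:
        if not braking and gap_m <= closing_mps ** 2 / (2.0 * brake_mps2):
            braking = True  # switch to the high-drag attitude
        if braking:
            u_next = max(closing_mps - brake_mps2 * dt, 0.0)
        else:
            u_next = closing_mps
        gap_m -= 0.5 * (closing_mps + u_next) * dt  # trapezoidal step
        closing_mps = u_next
        t += dt
    return gap_m, t

# Hypothetical: 0.200 c and 0.201 c probes launched one day apart.
t_meet = catch_up_time(0.200 * C, 0.201 * C, 86_400.0)
print(f"ToT only: level after {t_meet / 86_400:.0f} days, then the faster probe drifts ahead")

# Hypothetical: 2,000 km gap, 10 m/s closing speed, 1e-4 m/s^2 extra drag.
gap, t_close = vot_close(2.0e6, 10.0, 1.0e-4)
print(f"VoT: stops {gap:.0f} m from the lead probe after {t_close / 86_400:.1f} days")
```

Without the braking step the faster probe would simply sail past, which is the post-encounter dispersion the quoted passage warns about.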

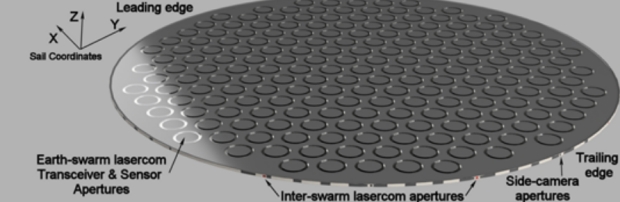

All this is going to take a lot of ‘smarts’ on the part of the individual probes, meaning we have to have ways for them to communicate not just with Earth but with each other. The structure of the probes discussed here is an innovation. The authors propose that key components like laser communications and computation be concentrated at the edge: whereas the central disk is flat, the ‘heart of the device,’ as they put it, sits in a 2-cm thickened rim around the outside of the sail disk.

The center of the disk is optical, or as the paper puts it, ‘a thin but large-aperture phase-coherent meta-material disk of flat optics similar to a fresnel lens…’ which will be used for imaging as well as communications. Have a look at the concept:

Image: This is Figure 3a from the paper. Caption: Oblique view of the top/forward of a probe (side facing away from the launch laser) depicting array of phase-coherent apertures for sending data back to Earth, and optical transceivers in the rim for communication with each other. Credit: Eubanks et al.

So we have a sail moving at twenty percent of lightspeed through an incoming hydrogen flux, an interesting challenge for materials science. The authors consider both aerographene and aerographite. I had assumed these were the same material, but digging into the matter reveals that aerographene consists of a three-dimensional network of graphene sheets mixed with porous aerogel, while aerographite is a sponge-like formation of interconnected carbon nanotubes. Both offer extremely low density, so much so that the paper notes the performance of aerographene for deceleration is 10⁴ times better than that of conventional Mylar. Usefully, both of these materials have been synthesized in the laboratory, and mass production seems feasible.
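As a rough consistency check on the paper's deceleration figure, consider two sails of equal area and thickness that feel the same drag force. Deceleration then scales inversely with bulk density. The densities below are commonly quoted values assumed here, not taken from the paper, and the paper's own figure of merit may be defined differently.

```python
MYLAR_KG_M3 = 1390.0        # assumed bulk density of Mylar (BoPET), ~1.39 g/cm^3
AEROGRAPHENE_KG_M3 = 0.16   # assumed density of aerographene, ~0.16 mg/cm^3

def deceleration_ratio(rho_new, rho_ref):
    """How much faster a sail of density rho_new decelerates than one of
    density rho_ref, for equal area, equal thickness and equal drag force.
    a = F / m with m = rho * thickness * area, so a scales as 1 / rho."""
    return rho_ref / rho_new

ratio = deceleration_ratio(AEROGRAPHENE_KG_M3, MYLAR_KG_M3)
print(f"aerographene decelerates ~{ratio:,.0f} times faster than Mylar")
```

The simple density ratio comes out near 10⁴, consistent in order of magnitude with the paper's claim.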

Back to the probe’s shape, which is dictated by the needs not only of acceleration but also of survival of its electronics; remember that these craft must endure a laser launch that will involve at least 10,000 g’s. The raised-rim layout reminds the authors of a red corpuscle rather than the simple flat disk envisioned up to now. The four-meter central disk contains 247 25-cm structures arranged, as the illustration shows, like a honeycomb. We’ll use this optical array both for imaging Proxima b and for returning data to Earth, and the many elements offer redundancy, given that impacts with interstellar hydrogen will inevitably damage some of them.

Remember that the plan is to build an intelligent swarm, which demands laser links between the probes themselves. Making sure each probe is aware of its neighbors is crucial here, and for that purpose each probe will use the optical transceivers around its rim. The paper calculates that this would make each probe detectable by its closest neighbor out to something close to 6,000 kilometers. The probes transmit a pulsed beacon as they scan for neighboring probes, and align to create the needed mesh network. The alignment phase is under study and will presumably factor into the NIAC work.
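A minimal sketch of what a 6,000-kilometer detection range implies for the mesh. The layout is hypothetical: probes sit on a regular triangular lattice filling a 100,000-kilometer disk, which is not how the paper distributes them. A link exists whenever two probes are within range, and a breadth-first search checks whether every probe can reach every other.

```python
import math
from collections import deque

LINK_RANGE_KM = 6_000      # neighbor detection range quoted in the post
SWARM_RADIUS_KM = 50_000   # half of the ~100,000 km disk

def lattice_positions(spacing_km, radius_km):
    """Hypothetical layout: probes on a triangular lattice clipped to a disk."""
    pts = []
    n = int(radius_km // spacing_km) + 1
    row_h = spacing_km * math.sqrt(3) / 2
    for j in range(-2 * n, 2 * n + 1):
        y = j * row_h
        x_off = (j % 2) * spacing_km / 2      # alternate rows are offset
        for i in range(-n - 1, n + 2):
            x = i * spacing_km + x_off
            if math.hypot(x, y) <= radius_km:
                pts.append((x, y))
    return pts

def build_links(pts, link_range_km):
    """Adjacency lists: probes a and b are linked if within range."""
    links = {k: [] for k in range(len(pts))}
    for a in range(len(pts)):
        for b in range(a + 1, len(pts)):
            if math.dist(pts[a], pts[b]) <= link_range_km:
                links[a].append(b)
                links[b].append(a)
    return links

def is_connected(links):
    """Breadth-first search from probe 0; True if every probe is reached."""
    if not links:
        return True
    seen = {0}
    queue = deque([0])
    while queue:
        node = queue.popleft()
        for nb in links[node]:
            if nb not in seen:
                seen.add(nb)
                queue.append(nb)
    return len(seen) == len(links)

for spacing in (5_000, 6_500):
    pts = lattice_positions(spacing, SWARM_RADIUS_KM)
    links = build_links(pts, LINK_RANGE_KM)
    print(f"spacing {spacing} km: {len(pts)} probes, connected={is_connected(links)}")
```

In this picture the swarm holds together as one network only while neighbors stay inside the detection range; spread them slightly beyond it and every link vanishes at once.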

The paper backs out to explain the overall strategy:

…our innovation is to use advances in optical clocks, mode-locked optical lasers, and network protocols to enable a swarm of widely separated small spacecraft or small flotillas of such to behave as a single distributed entity. Optical frequency and reliable picosecond timing, synchronized between Earth and Proxima b, is what underpins the capability for useful data return despite the seemingly low source power, very large space loss and low signal-to-noise ratio.

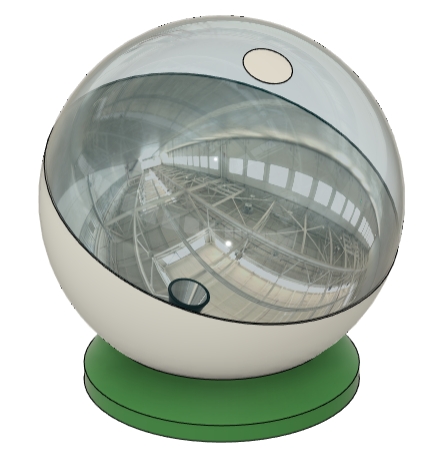

What is going to happen is that the probes’ optical pulses will be synchronized, so that despite the sharp constraints on available energy, the same signal photons are ‘squeezed’ into a smaller transmission slot, which increases the brightness of the signal. This brightening yields data rates that could not otherwise be achieved, and we also get data from various angles and distances. On Earth, a square-kilometer array of 796 ‘light buckets’ can receive the pulses.
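A minimal sketch of the timing argument, assuming a steady background flux at the receiver and perfectly synchronized pulses that all land in one receive slot. The energies, powers, wavelength and slot widths are hypothetical illustrations, not the paper's link budget.

```python
H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s

def photons(energy_j, wavelength_m):
    """Number of photons carrying energy_j at the given wavelength."""
    return energy_j * wavelength_m / (H * C)

def slot_contrast(n_probes, pulse_energy_j, background_w, slot_s, wavelength_m):
    """Signal photons over background photons inside one receive slot.
    pulse_energy_j is the energy each probe's pulse delivers to the receiver;
    background_w is the steady background power reaching the same receiver."""
    signal = n_probes * photons(pulse_energy_j, wavelength_m)
    background = photons(background_w * slot_s, wavelength_m)
    return signal / background

# Hypothetical received values at a 1-micron wavelength, 1-ns reference slot.
base = slot_contrast(1, 1e-18, 1e-15, 1e-9, 1e-6)
for n, slot in ((1, 1e-12), (100, 1e-12)):
    gain = slot_contrast(n, 1e-18, 1e-15, slot, 1e-6) / base
    print(f"{n} probe(s), {slot:.0e} s slot: {gain:.0f}x the 1-probe, 1-ns contrast")
```

In this simple model the contrast grows in proportion to the number of synchronized probes and inversely with the slot width, which is why picosecond timing matters as much as raw power.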

Image: This is Figure 13 from the paper. Caption: A conceptual receiver implemented as a large inflatable sphere, similar to widely used inflatable antenna domes; the upper half is transparent, the lower half is silvered to form a half-sphere mirror. At the top is a secondary mirror which sends the light down into a cone-shaped accumulator which gathers it into the receiver in the base. The optical signals would be received and converted to electrical signals – most probably with APDs [avalanche photodiodes] at each station and combined electrically at a central processing facility. Each bucket has a 10-nm wide band-pass filter, centered on the Doppler-shifted received laser frequency. This could be made narrower, but since the probes will be maneuvering and slowing in order to meet up and form the swarm, and there will be some deceleration on the whole swarm due to drag induced by the ISM, there will be some uncertainty in the exact wavelength of the received signal. Credit: Eubanks et al.
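To see why the filter must be centered on a Doppler-shifted line, here is a sketch using the relativistic Doppler formula for a source receding at 0.2 c. The emitted wavelength of 1,000 nm is a hypothetical placeholder, not the paper's laser wavelength.

```python
import math

C_KMS = 2.99792458e5   # speed of light, km/s

def received_wavelength_nm(emitted_nm, beta):
    """Relativistic Doppler shift for a source receding at beta * c."""
    return emitted_nm * math.sqrt((1.0 + beta) / (1.0 - beta))

def speed_change_for_shift_kms(emitted_nm, beta, shift_nm):
    """Change in recession speed (km/s) that moves the received line by
    shift_nm, using a central finite difference for d(lambda)/d(beta)."""
    h = 1e-6
    slope = (received_wavelength_nm(emitted_nm, beta + h)
             - received_wavelength_nm(emitted_nm, beta - h)) / (2.0 * h)
    return shift_nm / slope * C_KMS

EMITTED_NM = 1000.0   # hypothetical laser wavelength
BETA = 0.2
lam = received_wavelength_nm(EMITTED_NM, BETA)
dv = speed_change_for_shift_kms(EMITTED_NM, BETA, 5.0)  # half of a 10-nm passband
print(f"received at {lam:.1f} nm; a {dv:.0f} km/s change shifts it by 5 nm")
```

On these assumptions the line lands more than 200 nm redward of the emitted wavelength, and the 10-nm passband tolerates a speed change of order a thousand km/s, so narrowing it would trade margin for lower background.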

If we can achieve a swarm whose members stay in communication, using micro-miniaturized clocks to keep operations synchronous, we can use all of the probes to build up a single detectable laser pulse bright enough to overcome the background light of Proxima Centauri and reach the array on Earth. The concept is ingenious, and the paper is so rich in analysis and conjecture that I keep going back to it, but I don’t have time today to do more than cover these highlights. The analysis of en route and approach science goals and methods alone would make for another article. But it’s probably best that I simply send you to the paper itself, one which anyone interested in interstellar mission design should download and study.

The paper is Eubanks et al., “Swarming Proxima Centauri: Optical Communication Over Interstellar Distances,” submitted to the Breakthrough Starshot Challenge Communications Group Final Report and available online. Kevin Parkin’s invaluable analysis of Starshot is Parkin, K.L.G., “The Breakthrough Starshot system model,” Acta Astronautica 152 (2018), 370–384.

The metalens meets the stars.

Large, all-glass metalens images sun, moon and nebulae.

https://seas.harvard.edu/news/2024/01/metalens-meets-stars

Re: swarm targeted direction accuracy.

1. Could the swarm detect the position and velocity of Proxima b accurately enough, and early enough, to manage some “cross range” maneuvering for closer data acquisition? Or is the 0.2 c velocity so great that any trajectory change would be marginal, given the time and distance at which the swarm could calculate the planet’s position and the course correction it could achieve?
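A back-of-envelope sketch of the timescales behind this question. It assumes detection at a hypothetical 1 AU from the planet and a constant sideways acceleration, and it ignores relativistic corrections for the small transverse motion.

```python
C = 2.99792458e8        # speed of light, m/s
AU = 1.495978707e11     # astronomical unit, m

def warning_time_s(distance_m, beta=0.2):
    """Seconds between detecting the planet at distance_m and the flyby."""
    return distance_m / (beta * C)

def accel_for_shift(shift_m, t_s):
    """Constant transverse acceleration needed to move shift_m in t_s,
    from shift = a * t**2 / 2."""
    return 2.0 * shift_m / t_s ** 2

t = warning_time_s(AU)                 # hypothetical 1 AU detection range
a = accel_for_shift(1.0e7, t)          # 10,000 km, the post's accuracy figure
print(f"{t / 60:.0f} minutes of warning; {a:.1f} m/s^2 sideways to shift 10,000 km")
```

On these assumptions that is about a third of Earth's surface gravity, sustained for the whole approach.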

2. Given the difficulties and mission time, I wonder if this approach might initially be better used with our Sun’s SGL to image Proxima b. The image resolution would be much poorer and there would be no other local Proxima data to capture, but the swarm could travel much more slowly and return results in a very short time, as well as provide needed testing in very deep space for capturing data from a range of targets including, perhaps, interstellar objects entering or leaving our system. Characterizing Proxima b before setting out for terra incognita might be parsimonious. If Proxima b proved disappointing as a habitable or inhabited world, it would save a quarter of a century, with the resources of such swarm probes redirected to more accessible targets.

I’m actually not sure how much of an advantage you really get in doing a flyby like this versus using the SGL to image Proxima. You can relax the requirements for velocity greatly while still gaining all the really important information we want to know about Proxima b.

If you relax it down to 2% of the speed of light, you can start entering the SGL in 158 days. Down to 0.2%, you reach it in 4.3 years. You could increase the mass as well. The probes won’t need to be as insanely engineered, and you have more chances to throw out another batch if something goes wrong.
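A quick check of these figures, using the standard expression for the closest focal distance of the Sun's gravitational lens, d = R²c²/(4GM), for rays grazing the solar limb. The solar constants are nominal values assumed here (they are not in the comment), and the acceleration phase is ignored.

```python
C = 2.99792458e8            # speed of light, m/s
GM_SUN = 1.32712440018e20   # solar gravitational parameter, m^3 s^-2
R_SUN = 6.957e8             # nominal solar radius, m
AU = 1.495978707e11         # astronomical unit, m
DAY_S = 86_400.0

def sgl_min_focal_au():
    """Closest focal distance of the Sun's gravitational lens, for rays
    grazing the solar limb: d = R^2 c^2 / (4 G M)."""
    return R_SUN ** 2 * C ** 2 / (4.0 * GM_SUN) / AU

def days_to_reach(distance_au, beta):
    """Coast time at a constant beta * c, ignoring the acceleration phase."""
    return distance_au * AU / (beta * C) / DAY_S

d = sgl_min_focal_au()
print(f"SGL focal line begins near {d:.0f} AU")
for beta in (0.2, 0.02, 0.002):
    days = days_to_reach(d, beta)
    print(f"{beta:.1%} of c: {days:.0f} days ({days / 365.25:.2f} years)")
```

The result, roughly 548 AU, matches the usual 550 AU figure, and the 2% and 0.2% cases reproduce the 158 days and 4.3 years quoted above.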

If the SGL can give us a 1000×1000 pixel image of Proxima b, then from that we will know a lot about its characteristics and habitability. Getting an even higher-resolution image by getting closer has diminishing returns in terms of characterizing its habitability. A 1000×1000 pixel image would already reveal whether its atmosphere was hazy, whether there were oceans, whether it was tidally locked or had atmospherically induced rotation, what its geological history was, etc.

When we can do a flyby of Proxima it will surely be after we’ve characterized it in great detail using the SGL. We’ll know tons about it already.

Even with an SGL mission, it’s best to build up slowly by showing you can send these probes to Jupiter quickly with fewer lasers. Build up the desert array bit by bit to take on new missions as people come up with them.

Agree entirely. Sending a probe to Proxima b now is like sending a flyby probe to Mars (like Mariner 4) in the 19th century, before even reasonably good telescopic images were available. It would be a shame to send a swarm probe there and find, after 25 years, that it is a Venus or desert-planet analog, when this data could perhaps have been acquired much more quickly.

If we have images of Proxima b, then the type of science to be done can be more defined, for example, doing spectral analysis of the forests (if they are present), looking for signs of artificial origin, etc.

With “small” shifts in orientation or using a non-linear orbital trajectory, the swarm at the SGL might be able to image a number of exoplanets around other stars, increasing the science value of such a mission. Serendipity might even discover a better target that is reachable by a swarm. [If rogue planets are as common as believed, there may be such planets much closer than Proxima and therefore more interesting targets, both from a science POV and in terms of mission time for a swarm technology.]

Even if we can’t lock onto an exoplanet with an SGL mission, we would see to the very edge of the universe and everything in between. And if a swarm is used, the detail scales with the diameter of the observing lens. There is also the possibility, if thousands of these probes are used, of forming an image before the SGL focal line is reached, by spacing them around the manifold of gravitationally bent light paths until they eventually position themselves at the focal line.

While the proposal here is very interesting as a potential technology for studying exoplanets, it’s not an image we need of Proxima.

Images could be interpreted in many ways in any case. There are numerous examples of quite high-resolution images taken at Mars that have been interpreted in wildly different ways, such as the flows on crater slopes, possible fossilised bacterial microfilms, etc.

What’s needed is a spectrum and a few other measurements of this planet, such as in the infrared, which can be obtained by other means; that will tell volumes.

And if, in the extremely unlikely case, it turns out that Proxima happens to be slightly better off than the radiation-soaked, atmosphereless, one-sided hell the facts tell us it should be, I’d be the first to support further studies to find out where science has failed to predict how such an unprecedented world could exist.

There are a number of quite promising planets farther away. If we feel we have to look at a relatively nearby world, I would suggest Tau Ceti e and f, both of which show a lot more promise for being potentially habitable.

I’m reading the article now. The concept is brilliant; it reminds me of bees that orient themselves according to the direction of the sun’s rays. Ultimately, the real challenge of this mission is precision, which must be formidable, not only between modules but also with Earth. So, two questions: what synchronizes all the atomic clocks? And aren’t the swarm’s signals at risk of being disrupted by the interstellar environment? I’m thinking of electromagnetic interference (EMI), which could introduce very slight disturbances in signal transmission and cause, for example, the swarm’s positioning to fail.

Sorry for these questions, but the subject is complex for a modest amateur astronomer; I’m studying the article… ;)

Fred, the master laser can be used as the clock.

It appears that in matters of space exploration we are often confronted with choices between particular focus and broad survey. To illustrate, advocates of what would become the Hubble Space Telescope included President Reagan’s science adviser George Keyworth, who anticipated it being more cost-effective than launching individual interplanetary space probes. If I recall correctly, he took his case to an American Astronautical Society conference as a guest speaker in the early 1980s. One could imagine such an advocate shutting the door on the two Voyager flights launched a decade before.

Fortunately, to some degree we obtained and benefitted from both: space telescopes with multiple capabilities and spacecraft which targeted individual planets, including their satellite systems.

But nonetheless, clearly the resource allocation problem has not disappeared.

Perhaps we need something like the Drake Equation for evaluating the cost-benefit problem. When such an analysis is applied to something like building a bridge or a ferry to ford a river, we have some economic notion of the traffic and enterprise that could result, though there is still some uncertainty involved. If the choice were a bridge or a ferry to a one-square-mile island, though, less speculation would be involved than in forecasting, say, the consequences of the 19th-century Eads Bridge over the Mississippi from Illinois to Missouri.

Currently, our dilemma might be described as choosing between survey observatories and directed space probes to a destination such as Proxima Centauri. With perhaps a few decades of development and a few decades of transit, a Proxima flyby can be achieved.

Alternatives:

1. Continuing a program of launching, or constructing on Earth (or the Moon?), larger observatories with increasing survey capabilities and a lower threshold for detecting planetary characteristics.

2. Hybrid approaches, such as using the Sun as a gravitational lens, with a telescope placed about 550 AU from the Sun. In other words, a high-resolution telescope or telescopes dedicated to a single stellar system.

Without a developed analytical tool, my intuition is that space-based observatories and a number of yet-to-be-“consecrated” ground-based observatories could still provide near-term information about Proxima and its planets, the sort of information on which decisions could be made for Starshot or similar follow-on efforts. The worst case would be Starshot launching before we receive some really bad news from such alternative methods.

As the Wikipedia summary of Proxima Centauri b’s characteristics and discovery shows, our knowledge is based primarily on the radial velocity of the primary, which is to say Doppler shifts of its spectral lines. This information does not determine the inclination of the planet’s orbit with respect to the line of sight. So, unless there is a secondary source for measuring b’s properties, the quoted mass is either a minimum mass or an averaged value (e.g., one associated with a 45-degree inclination, or 1.414 times the minimum).
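For a sense of how far the true mass could exceed the radial-velocity minimum, here is a sketch assuming randomly (isotropically) oriented orbits, so that cos i is uniform between 0 and 1. Under that assumption the median factor is about 1.15 and the mean is π/2, about 1.57, while the 45-degree case mentioned above, a factor of 1.414 or more, has roughly a 29% chance.

```python
import math
import random

def true_mass_factor(inclination_rad):
    """M_true / M_min: radial velocity measures only M sin(i)."""
    return 1.0 / math.sin(inclination_rad)

# Isotropic orientations: cos(i) is uniform on [0, 1].
MEDIAN_FACTOR = true_mass_factor(math.acos(0.5))    # median i = 60 degrees
MEAN_FACTOR = math.pi / 2                           # integral of dc / sqrt(1 - c^2) on [0, 1]
P_ABOVE_SQRT2 = 1.0 - math.cos(math.radians(45))    # chance that i < 45 degrees

def mc_fraction_above(threshold, samples=100_000, seed=1):
    """Monte Carlo estimate of P(M_true / M_min > threshold)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        cos_i = rng.random()
        if true_mass_factor(math.acos(cos_i)) > threshold:
            hits += 1
    return hits / samples

print(f"median factor {MEDIAN_FACTOR:.3f}, mean factor {MEAN_FACTOR:.3f}")
print(f"P(factor > 1.414): analytic {P_ABOVE_SQRT2:.3f}, "
      f"simulated {mc_fraction_above(math.sqrt(2)):.3f}")
```

The isotropic assumption is itself a prior; any independent inclination constraint would replace it.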

Whichever of the above the mass assumption is, the surface radius is similarly forecast from a terrestrial analog around such a primary, and then the surface and atmosphere chemistry… More predictably, the planet should be in phase-locked rotation so close to Proxima, unless its orbit actually has a significant eccentricity. Even an eccentricity at the limit of detectability could be substantial enough to affect climate, perhaps as much as the Moon influences weather and climate on Earth, say, if an atmosphere does remain. But all this might be moot. After all, TRAPPIST-1 and Proxima as primaries both exhibit EM storms out of proportion to what Sol delivers to its corresponding habitable zone. Maybe no significant atmosphere remains at all.

In other words, there is a lot of conjecture about Proxima b’s characteristics founded on radial velocity measurements. Now this could be interpreted as a rationale for Starshot, true. But it is quite possible that many of these fundamentals can be assessed with a next generation of space- or ground-based telescopes. If not all, maybe some.

If not with systems already operating, then with systems already in the pipeline.

Perhaps with a larger space telescope launched on a Starship booster and armed with a stellar coronagraph. Perhaps a less expensive extension of JWST so launched might be sufficiently equipped to make the difference. Starship, with a larger payload capacity and a wider diameter than Ariane 5, could allow a simpler construction, a wider aperture, or both.

But since Proxima represents one of the lower thresholds for habitable-zone (HZ) investigations based on coronagraphs and spectral analysis, it is hard to say offhand how many other targets can be assessed in the same way. It could be a dozen or it could be hundreds. And for Proxima, the information could likely be obtained sooner than Starshot would deliver it, even if Starshot successfully reaches its target.

Of course, we are all interested in accessing the stars in some fashion. And Starshot is an evolution of a concept with antecedents in vehicles such as Daedalus. By that measure, going from planning a nuclear spaceship as big as an iceberg to units as big as jar caps capable of formation flying on the same mission is a tremendous increase in technical skill.

Correspondingly, we did mention the alternative of a telescope stationed at the focus of the Sun’s gravitational lens, 550 AU from the Sun. It’s another form of intensive study with a significant implementation delay, but the sinker there is that it is not significantly maneuverable. Slewing might be possible around a stellar target, but not across the celestial sphere, unless you develop a fleet of these too.

So, to wrap up, I hope that we can come up with our own analog to the Drake equation for scoring plans to survey the sky or to concentrate on particular target stars. That might require some systems analysis based on various measures or parameters. This discipline has had both significant successes and failures, depending quite often on how objectives or “benefit” are defined or calculated. But one hopes that it can tell us things that we hadn’t noticed or quantified thus far.

Coincidentally, just found this.

https://arxiv.org/pdf/2401.09589.pdf

It’s an examination of the ground-based Extremely Large Telescope (ELT) in northern Chile taking a shot at Proxima b. The ELT is still under construction, but its instrument complement includes HARMONI, the High Angular Resolution Monolithic Optical and Near-infrared Integral field spectrograph. Getting a spectrograph into space is one thing, but high resolution is better addressed on the ground because of the instrument’s dimensions and resulting mass.

The assessment of the article, if I understand correctly, is that the ELT as configured is not ready to take on Proxima b, but with some modification it can address some objectives: not a search for biomarkers, but detecting and identifying some of Proxima b’s atmospheric components through spectral analysis. Whether this is an advance over the presumed capabilities of the space-based Nancy Grace Roman Space Telescope, set for deployment in a few years, is possibly another trade matter.

“The team proposes making detailed simulations to optimize a design for Proxima Centauri B.”

Perhaps a foolish question, but what happens to the swarm after it has fulfilled its mission?

Is it intended to perform some kind of slingshot and return back to Earth? Does it just continue on a path until a gravitational body captures it (which seems like the galactic equivalent of littering and could potentially disrupt life on another world)? Or some other option?

I suppose it could still transmit info by using the SGL of Proxima, but I think the alignment would become a problem over time. Also, as it travels farther from Earth, the swarm would still be able to do science, such as seeing more transiting planets thanks to the changing viewing angle, and even act as a huge lens on the way to Proxima.

@Rob

No slingshot back to Earth is possible at such velocities. It is conceivable that the swarm could travel to another star, but any gravitational perturbation would tend to scatter the swarm making this extremely difficult.

It is conceivable that by continuing face-on into the ISM, they could slow down and even disintegrate, but my guess is you get a lot of “littering” whatever you do.

On the bright side, a piece of the swarm spreading out might eventually enter a solar system with an advanced ETI. They might detect the sail and determine it is an artifact and therefore proof of the existence of another intelligent species. Whether they could determine our location is another matter.