Overpopulation has spawned so many dystopian futures in science fiction that it would be a lengthy though interesting exercise to collect them all. Among novels, my preference for John Brunner’s Stand on Zanzibar goes back to my utter absorption in its world when it was first published in book form in 1968. C.M. Kornbluth’s “The Marching Morons” (1951) fits in here, and so does J.G. Ballard’s “Billennium” (1961), and of course Harry Harrison’s Make Room! Make Room! from 1966, which emerged in much changed form in the film Soylent Green in 1973.

You might want to check Science Fiction and Other Suspect Ruminations for a detailed list, and for much else in the realm of vintage science fiction as perceived by the pseudonymous Joachim Boaz (be careful, you might spend more time on this site than you had planned). In any case, so strongly has the idea of a clogged, choking Earth been fixed in the popular imagination that I still see references to going off-planet as a way of relieving population pressure and saving humanity.

So let’s qualify the idea quickly, because it has a bearing on the search for technosignatures. After all, a civilization that just keeps getting bigger has to take desperate measures to generate the power needed to sustain itself. It’s worth noting, then, that a 2022 UN report suggests a world population peaking at a little over 10 billion and then beginning to decline as the century ends. A study in the highly regarded medical journal The Lancet from a few years back sees us peaking at a little under 10 billion by 2064, with a decline to less than 9 billion by 2100.

Image: The April, 1951 issue of Galaxy Science Fiction where “The Marching Morons” first appeared. Brilliant and, according to friend and collaborator Frederik Pohl, exceedingly odd, Cyril Kornbluth died of a heart attack in 1958 at the age of 34, on the way to being interviewed for a job as editor of Fantasy and Science Fiction.

How accurate such projections are is unknown, as is what happens beyond the end of this century, but it seems clear that we can’t assume the kind of exponential increase in population that will put an end to us in Malthusian fashion any time soon. It’s conceivable that one reason we are not finding Dyson spheres (although there are some candidates out there, as we’ve discussed here previously) is that technological civilizations put the brakes on their own growth and sustain levels of energy consumption that would not readily be apparent from telescopes light years away.

Hence the interest of a new paper from Ravi Kopparapu (NASA GSFC) and colleagues, in which the population figure of 8 billion (which is about where we are now) is allowed to grow to 30 billion under conditions in which the standard of living is high globally. Assuming the use of solar power, the authors find that this civilization, far larger in population than ours, uses much less energy than the sunlight incident upon the planet provides. Here is an outcome that puts one of the most cherished tropes of science fiction to the test, for as Kopparapu explains:

“The implication is that civilizations may not feel compelled to expand all over the galaxy because they may achieve sustainable population and energy-usage levels even if they choose a very high standard of living. They may expand within their own stellar system, or even within nearby star systems, but galaxy-spanning civilizations may not exist.”
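The headline comparison, 30 billion people against the sunlight falling on the planet, is easy to sanity-check with back-of-the-envelope arithmetic. The sketch below is not the paper's model: it simply divides an assumed total power demand by the sunlight Earth intercepts (solar constant times the planet's cross-sectional area). The 10 kW per person figure is a hypothetical stand-in for a "high standard of living", roughly the order of per-capita primary power use in today's most energy-intensive countries; Kopparapu et al. use their own assumptions, which may differ.

```python
import math

# Physical constants (standard values).
SOLAR_CONSTANT = 1361.0   # W/m^2, mean solar irradiance at 1 AU
EARTH_RADIUS = 6.371e6    # m, mean radius of Earth

# Hypothetical per-capita power demand standing in for a "high standard of
# living"; an illustrative assumption, not a number from Kopparapu et al.
WATTS_PER_PERSON = 10_000.0


def intercepted_sunlight():
    """Sunlight striking Earth's cross-sectional disc (pi * R^2), in watts."""
    return SOLAR_CONSTANT * math.pi * EARTH_RADIUS ** 2


def fraction_of_sunlight(population, watts_per_person=WATTS_PER_PERSON):
    """Total civilizational power demand as a fraction of intercepted sunlight."""
    return population * watts_per_person / intercepted_sunlight()


if __name__ == "__main__":
    for pop in (8e9, 30e9):
        print(f"{pop / 1e9:.0f} billion people -> "
              f"{fraction_of_sunlight(pop):.3%} of incident sunlight")
```

With these assumptions, even 30 billion people draw under 0.2 percent of the incident sunlight. Actual panel coverage would be larger than this raw fraction suggests, since panels convert only a portion of the light they intercept and can occupy only part of the surface, which is why land coverage, rather than this fraction, is the quantity that matters for detection.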

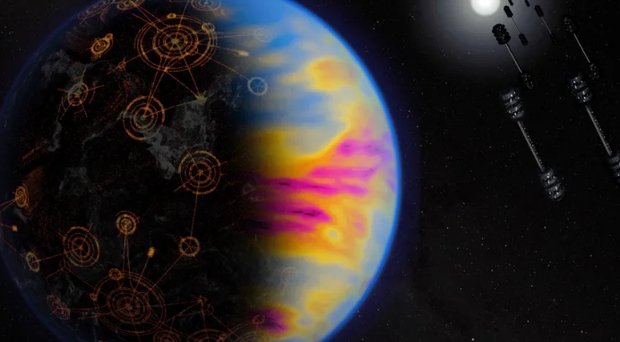

Image: Conceptual image of an exoplanet with an advanced extraterrestrial civilization. Structures on the right are orbiting solar panel arrays that harvest light from the parent star and convert it into electricity that is then beamed to the surface via microwaves. The exoplanet on the left illustrates other potential technosignatures: city lights (glowing circular structures) on the night side and multi-colored clouds on the day side that represent various forms of pollution, such as nitrogen dioxide gas from burning fossil fuels or chlorofluorocarbons used in refrigeration. Credit: NASA/Jay Friedlander.

The harvesting of stellar energy may be obsolete among civilizations older than our own, given alternative ways of generating power. But if not, the paper models a telescope on the order of the proposed Habitable Worlds Observatory to explore how readily it might detect a massive array of solar panels on a planet some 30 light years away. This is intriguing stuff, because it turns out that even huge populations don’t demand enough power to cover their planet in solar panels. And even generous coverage would be hard to see: it would take several hundred hours of observing time to detect, at high reliability, a land coverage of 23 percent on an Earth-like planet using silicon-based solar panels for its needs.

The conclusion is striking. From the paper:

Kardashev (1964) even imagined a Type II civilization as one that utilizes the entirety of its host star’s output; however, such speculations are based primarily on the assumption of a fixed growth rate in world energy use. But such vast energy reserves would be unnecessary even under cases of substantial population growth, especially if fusion and other renewable sources are available to supplement solar energy.

A long-held assumption thus comes under fire:

The concept of a Type I or Type II civilization then becomes an exercise in imagining the possible uses that a civilization would have for such vast energy reserves. Even activities such as large-scale physics experiments and (relativistic) interstellar space travel (see Lingam & Loeb 2021, Chapter 10) might not be enough to explain the need for a civilization to harness a significant fraction of its entire planetary or stellar output. In contrast, if human civilization can meet its own energy demands with only a modest deployment of solar panels, then this expectation might also suggest that concepts like Dyson spheres would be rendered unnecessary in other technospheres.
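The "fixed growth rate" assumption the authors question is worth seeing in numbers. The following minimal sketch assumes roughly 19 TW of present-day global primary power and an illustrative 2 percent annual growth rate (both assumptions, not figures from the paper), then solves P0(1 + r)^t = P for t to find how long steady exponential growth would take to reach the sunlight striking Earth and the Sun's entire output, the Type II benchmark.

```python
import math

# Assumed starting point: roughly present-day global primary power use (~19 TW).
P0_WATTS = 1.9e13
# Assumed fixed annual growth rate in energy use (illustrative, 2% per year).
GROWTH_RATE = 0.02

SOLAR_CONSTANT = 1361.0    # W/m^2 at 1 AU
EARTH_RADIUS = 6.371e6     # m
SUN_LUMINOSITY = 3.828e26  # W, IAU nominal solar luminosity


def years_to_reach(target_watts, p0=P0_WATTS, rate=GROWTH_RATE):
    """Solve p0 * (1 + rate)**t = target_watts for t, in years."""
    return math.log(target_watts / p0) / math.log(1.0 + rate)


# Sunlight intercepted by Earth's cross-sectional disc: a planetary-scale benchmark.
EARTH_SUNLIGHT = SOLAR_CONSTANT * math.pi * EARTH_RADIUS ** 2

if __name__ == "__main__":
    print(f"All sunlight hitting Earth: ~{years_to_reach(EARTH_SUNLIGHT):.0f} years")
    print(f"Entire solar output (Type II): ~{years_to_reach(SUN_LUMINOSITY):.0f} years")
```

Under these assumptions, steady growth would match all the sunlight hitting Earth in under five centuries and the Sun's full output in a little over 1,500 years. Change the growth rate and the timescales shift, but the shape of the argument does not: Type II energy budgets follow from extrapolating the exponential, not from any demonstrated need for that much power.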

Of course, good science fiction is all about questioning assumptions by pushing them to their logical conclusions, and ideas like this should continue to be fertile ground for writers. Does a civilization necessarily have to expand to absorb the maximum amount of energy its surroundings make available? Or is it more likely to evolve only insofar as it needs to reach the energy level required for its own optimum level of existence?

So. What makes for an ‘optimum experience of life’? And how can we assume other civilizations will necessarily answer the question the same way we would?

The question explores issues of ecological sustainability and asks us to look more deeply at how and why life expands, relating this to the question of how a technosphere would or would not grow once it had reached a desired level. We’re crossing disciplinary boundaries in ways that make some theorists uncomfortable, and rightly so because the answers are anything but apparent. We’re probing issues that are ancient, philosophical and central to the human experience. Plato would be at home with this.

The paper is Kopparapu et al., “Detectability of Solar Panels as a Technosignature,” Astrophysical Journal Vol. 967, No. 2 (24 May 2024), 119 (full text). Thanks to my friend Antonio Tavani for the pointer to this paper.

It’s always been sort of accepted by SETI communities that extraterrestrial civilizations are aggressive, expansive, bold explorers, to a fault. They are engaged in a constant frenzy of exploration and conquest, settling new worlds in a never-ending mad rush to inhabit, attack, or terraform new planets. Furthermore, their colonies are also infected by this pathology, and as soon as an outpost is established it also begins spreading into the surrounding space with the same single-minded ferocity. Even without FTL, we are told, they should be able to saturate the entire Milky Way in just a few million years.

This is not necessarily the characteristic of a space-faring species. I suspect it is more an identifier of individuals obsessed with space exploration and science fiction. I’ve spoken of this tribe before, the infamous ‘space groupies’.

And I do not use that term pejoratively; I consider myself one.

A technically advanced race must surely soon recognize the need for, and develop the ability to control, its population growth and make its industrial activities efficient and environmentally friendly. It may indeed need to use its space travel capabilities to acquire new resources, dispose of industrial wastes, or test destructive or polluting new tech, but a galactic empire is not necessary to do this. As for terraforming new worlds, a truly spacefaring species would be quite comfortable establishing itself in orbital colonies or asteroid belts rather than developing distant virgin (or already occupied) worlds. Why bother with the complex endeavor of remolding hostile planets simply to satisfy one’s own merely biological needs? As for harnessing stellar or galactic-scale energy sources, why? What could they possibly do with it? Invest in bitcoin blockchain tech?

An intelligent culture might realize its own home world was vulnerable to overpopulation, war, resource depletion, or some natural cosmic catastrophe. It might wisely establish colonies so that its culture, or even its population might be preserved in the event of some natural or cultural disaster. But an ever-expanding galactic empire? I doubt it.

Scientific knowledge? I suspect that after the detailed exploration and survey of just a few nearby slag heaps and ice lumps, ETI would soon realize the rest of the universe looks pretty much the same as the piece they live in. The capability to visit the exotic mysteries of other galaxies or deep space itself is so far in our future that I doubt we can make any meaningful speculation about it.

If there are truly any “galactic empires”, I doubt they consist of more than a handful of isolated outposts surrounding a home planet, all in a volume of a few thousand cubic light years. Even if they have developed faster-than-light propulsion, there simply is no need to go any further than that. If the originating core society arose in a star cluster, I suspect its expansion would cease as soon as the cluster was settled, explored or secured.

We space groupies are the inheritors of an aggressive, expansionist culture that spread across our entire planet, conquering, colonizing and exploiting large portions of it in just the last few hundred years. That is not a guide to how other civilizations will do it. Even older civilizations on our own world did not do this, preferring only to expand until their own borders and trade routes were secure.

It goes without saying that we cannot predict or even imagine what other minds and cultures might do. We may encounter a race of xenophobic religious fanatic space nazis determined to extinguish all life other than their own. But it is unrealistic to expect they will exhibit behavior similar to our own, or to what we have convinced ourselves is our own. Our fantasies of our civilization heroically spreading across the stars may tell us more about our own pathologies than they reveal about theirs.

The Search for Things that Matter | Centauri Dreams (centauri-dreams.org)

I’m surprised that the premise for your argument hinges entirely upon energy dependency. I was expecting something else. But since I’ve been studying the subject for over half a dozen years, and have even managed to publish an article in a peer-reviewed journal, along with blogging (mostly on Quora as “Vin Yasi”), podcasting (on Podbean as “Magical Me”) and self-publishing on Amazon (as “vinyasi”), I can safely say that it is theoretically possible to harness the imaginary power of apparent (complex) power without depending upon any real source of power greater than the millionths of a volt which are readily available from our environment in the form of trees, plants, animals, the space immediately above the ground, etc. This is not a “source” so much as a catalyst for evoking excessively enlarged quantities of imaginary power to erupt from the mutual relationship among the dielectric and magnetic fields of pairs of coils and capacitors interacting with one another in a parametric manner, not unlike that which is elucidated on Wikipedia in its articles concerning parametric amplification in the audio industry.

There are three main categories of implementing the benefits of the colloquialism known as “free energy” (not to be confused with the same term used by physicists to mean various things of a completely different nature):

1. Recycling of the imaginary component of apparent power.

2. Harnessing of the imaginary component of apparent power from the environment. This category subdivides into two subcategories, natural and manmade sources of imaginary power, in which the latter variety is renamed the “theft of power” from the utility grid. Charles Earl Ammann was arrested for doing this when he crossed into the jurisdiction of Washington, D.C., to deliver his batteryless EV 100 years ago.

3. Evocation of the imaginary component of apparent power from the plane of imaginary numbers, which has previously been called the aether (ether), counter-space, the vacuum state, the zero point, etc. When imaginary numbers are squared, they become negative real numbers and are thus enumerated within electronic simulation software as “negative watts”, which Jim Murray renamed “reactive watts” when he coauthored his S.E.R.P.S. patent with Paul Babcock.

Negative watts is the generic definition (attributed by simulation software and by electrical theorists) of the generation of power. Hence, a “free energy” circuit generates its own imaginary power using a paltry sum of real power acting, not as a significant prime mover, but as a mere catalyst. In fact, this is the first citation…

The Relativity of Energy and the Reversal of Time is a Shift in Perspective (ijcionline.com)

…of my peer reviewed article…

(PDF) Low Frequency Oscillations in Indian Grid (researchgate.net)

Now, electrical engineers would love to have us believe that, yes, it is theoretically possible to harness an infinite supply of the imaginary component of apparent power, but with their caveat that it is useless (when retained in the format of imaginary power). They are liars (or misinformed, or they keep their mouths shut and turn away from me).

The correct term is not useless, but lossless. Yet, when passed through a resistive load, imaginary power becomes real power and can boil water to rotate a steam driven turbine shackled to the axle of a rotary generator and, thus, become useful.

Since imaginary power is theoretically infinite, there is no excuse for our dependency upon anything else unless we have ulterior motives, such as (but not limited to): the production of plutonium for the manufacturing of additional nuclear warheads, or sustaining the destruction of our environment through our use of fossil fuels, etc.

I have performed simulations in which it is theoretically possible to unhinge the real power production from the real power inception – using the imaginary side-step in between these two endpoints – to such a degree that it no longer matters how much input power is available since the same quantity of input can power any quantity of output using zero and infinity as our asymptotic limits.

True, an overunity free energy circuit can become comatose. But it can just as readily blow itself up without warning with anything in between as a possibility of outcomes.

It is also possible that an overunity circuit can strobe its surges in a periodic manner with a frequency of strobing which is fast enough to be tolerated by our power converters prior to being fed into our appliances.

Electrical engineers like to call overunity circuits by their acceptable nomenclature of being “unstable” due to the essential tendency for overunity circuits to surge to their self-destruction if not regulated (i.e., prevented) from doing so.

So, this is a delicate artistry since it is more difficult to design an overunity circuit than it is to design a “flashlight circuit” since an overunity circuit could more readily go comatose than any other response. This is a good thing since it would be awkward if it were easy to produce overunity and spontaneously explode our universe by accident or by intention.

But, it is not impossible. It just takes more intelligence rather than brute force to evoke an overunity response from a free energy circuit.

We use the brute force of the application of significant levels of input voltage in order to prevent the over-reactance of Foster’s Reactance Theorem in which impedances become negative and our circuit becomes a generator of power.

So, again, it is a delicate artistry which lies outside of conventional training among students of electrical engineering.

I had to learn my skills by trial and error on various simulators.

The advent of 64-bit registers has – for all intents and purposes – obliterated any possibility for “round-off error” and the false positives of overunity which can result.

By your scenario, growth would slow. But I don’t think it would ever completely stop; it would just become very, very slow. There will always be dissident groups that want to leave and go somewhere else, and these will always account for some migration to new systems, even if at a very slow rate.

In hypothetical mode, it is possible that very advanced civilizations might collect a good portion of a star’s energy and beam it to other lesser civilizations for their use. Travis MacClendon, Blountstown, Fl

Why would they want to do that? And how do you define lesser in this scenario? What would be the physical limits of how far they could beam solar energy as it is?

Two points I want to make:

The first is that I regard Stand on Zanzibar as the greatest work of speculative fiction. John Brunner spent a lot of time doing research for this book and the other three books of the “Club of Rome” quartet. This research is housed in the University of Liverpool library archives, and I’d love to see it one day, as he essentially got the future right. Stand on Zanzibar reads like a mildly alternative version of our present day, and it comes so close to the future we actually got that it taught me what you can predict about the future and what you can’t. The answer is that the future is a chaotic system like the weather, and like the weather you can predict the big trends, but the small stuff is random. An example of this is how he has the homeless pulling their belongings around in trundlers instead of shopping carts.

The second reason Stand on Zanzibar is such a great book is its structural complexity. Compared to most other novels of the future, it is panoramic. Most other novels of the future extrapolate one particular element of society but retain the settings of the time of their writing in other areas, like a movie set where, if you swing the camera 90°, you get a bored make-up lady talking to the lighting technician. In Stand on Zanzibar, no matter where you point the camera, it’s all the setting.

The book has four parts. The first, called “Continuity,” carries the main story and is supported by the other three. The first of these is a series of supporting stories called “Tracking with the Close-Ups,” which follow supporting characters to flesh out the world. Further world building is done with a series of chapters labeled “Context,” which contain quotes, reports and social commentary. Finally, another series of chapters containing news items and advertisements conveys the minute background to all the rest. All this makes the main story feel natural and seamless, without any data dumps. The result is so complex and difficult a construct that Brunner never tried it again, and nobody has successfully replicated it.

John Brunner is an underappreciated writer, Britain’s Philip K. Dick, but without the cachet.

Now onto population:

If you note the steadily falling birth rates around the world, with a majority of countries now below replacement rate, a future of endless expansion may be out, and a future more like Arthur C. Clarke’s The City and the Stars, but without the expansion off Earth, looks more probable. This could lead to a frugal, stagnant civilization without much of a signature.

Brunner predicted the ‘muckers’ too, as well as the gun nuts and their corporate/legislative allies, the industry providing consumers “organic” produce, computer viruses, and several other related pathologies of the modern world.

His novel four-part structure in “Zanzibar”, however, was a technique pioneered by the American writer John Dos Passos.

I remember reading the ‘Club of Rome Quartet’ many years ago, and upon reflection, I have since marveled at Brunner’s uncanny ability to so accurately predict the future. I’ve been meaning to re-read his work, but as in so many other things, I just haven’t gotten around to it.

Recent awareness of things that matter extends to lives that matter, which could include:

Ursus americanus

Latrodectus mactans

Cygnus atratus

A technological civilization’s requirements for energy seem to jump dramatically as AI is implemented. That need for energy is a driver even if the population is stable.

I seem to do pretty well on less than 2000 calories per day. I see no reason why we would be unique in this respect.
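For comparison with the machine power budgets discussed in this thread, the commenter's food intake can be converted to an average power, using 1 kcal = 4184 J and 86,400 seconds in a day:

```latex
P = \frac{2000\ \text{kcal/day} \times 4184\ \text{J/kcal}}{86\,400\ \text{s/day}} \approx 97\ \text{W}
```

That is the whole-body figure; the brain accounts for only part of it, which is consistent with the "tens of watts" estimate for a human brain that comes up later in the discussion.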

It is not the AI itself that consumes energy but the microprocessors that perform billions of calculations per second and run the data centers. All this is powered by electricity: pull the plug and there is no more AI. The question of energy consumed is therefore upstream: what produces the electricity? Solar, nuclear, etc. If ETs were looking at us, how could they determine that there is life on Earth by measuring our levels of energy production and consumption? It’s something of an idea, if I’m not mistaken…

I don’t agree with the argument relied on here about maximum power/energy requirements.

It relies on 2 potential errors:

1. The current population statistics for energy/capita of the developed rich countries is the target for the rest of the planet. This is an old argument that when a sufficient standard of living is achieved, there is no need for further accumulation of goods and services. While this looks true at the population level, as we know this is not true of the very wealthy who provide the drive for the rest of the population to envy and strive for. That doesn’t mean we will all acquire yachts and personal aircraft and squander energy, but it does cause an upward creep of the energy per capita.

2. Resource use requires ever more energy to extract minerals and grow food. Agriculture is already a major energy user (and CO2 emission source) and will get worse as more fertilizer is needed to support the food requirements of a rich-world diet without substitution. As mineral ores become less rich, either mining inputs must increase or substitutes must be found. This will drive up energy use per capita.

While efficiencies and substitutions can help to compensate, I doubt they will be effective in reducing the growth of energy use per capita as the world gets richer.

The bottom line is that either the global population declines, perhaps to a more sustainable 2 bn, or pressures to use more energy will continue. I agree with Dave Moore’s comment that a falling population is not desirable; as we have seen throughout history, it leads to civilization decline.

Unsustainable as it must be, I think the best we can do is curb growth rates to delay the time when we become a KI and then a KII civilization. If there are other civilizations out there, either they stay pre-technological and in a Malthusian state (in which case we probably will not detect them), or they pursue technological development until they decide the trade-offs for more growth are not generating a positive return or value. These are the civilizations we could theoretically detect. There is also no requirement that a technological civilization can maintain a steady state over long time periods. Terrestrial civilizations grow and then decline. For ETI, we may do best to look for signs of an “extinct” civilization through its artifacts, whether orbiting satellites or extensive land shaping.

Curbing or eradicating the human species (something we know how to do well) also potentially means never seeing a new Michelangelo, Albert Einstein, Marie Curie or Carl Sagan appear. In other words, we would deprive ourselves of a brain that could help our civilization take a giant leap, perhaps allowing it to discover …the way to control its resources, to find and master other technologies and, why not, we might miss our appointment with a new “Wow!” signal, if we consider the development of technological civilizations on a long time scale. Ultimately, it’s the choice we make that matters. Point of view…

The imperatives of biology include survival (which is subserved by more basic processes such as nutrition, digestion, assimilation, excretion, etc.), growth, replication, and, as a consequence, spread to new territories.

Energy use is implicit in these processes and can be a limiting factor. Finding new sources of energy has brought Homo sapiens to where we are now, but constraints on energy availability are making further progress difficult. New energy sources and/or frugality with existing sources may be a solution for now. But new paradigms will be needed for the future.

This is a very biologic-centric argument.

In a universe where von Neumann probes recon a galaxy, what part of that logic implies these probes are wasteful? They’d be as optimized as possible.

The question shouldn’t be how many biologics can be sustained, but how many AIs? What would their needs be and how would they be regulated?

If one ignores the rise of artificial superintelligence for the moment and looks at the way our current human population behaves, the vast majority of people don’t really have any ambition to push outward into the unknown and live amongst the stars.

Most are happy to just live their lives, doing whatever they do, and accept whatever technological level they are presented with as the status quo.

But there is always a small number of individuals who push technology forward. Some of them are relatively benign, like Elon Musk for example, but some of them may become malevolent if their power is allowed to increase unchecked. Therefore there must always be a benign entity that can keep pace with the potentially malevolent entities to keep their power in check.

If an alien technological civilization also consists of billions or trillions of individuals, maybe one can guess that while the vast majority will be content to live sedentary lives, they, too, will have individuals who will keep pushing the frontiers outward and upward, and who will develop the means to do so, for benign or malevolent reasons.

Of course, we do not know the nature or form AGI will take once it becomes superior to human intelligence. Will it consist of one hive-brain into which all individuals will be assimilated? Or will it consist of countless different individual intelligences?

If it is one hive-mind, we have absolutely no idea what it will do or how it will act or what to look for.

If it is a large number of different entities, I think it is safe to assume that there will be at least some which will push outward and increase energy usage to attempt bigger and bigger ventures. I don’t believe there is a limit to the curiosity or the power-hunger that sufficiently powerful entities will have.

Also, we have no idea how many technological civilizations may exist. It may well be that the majority of civilizations are relatively sedentary and keep themselves to a certain small part of the galaxy. But one malevolent expansionist civilization may force these other civilizations to increase their power as a matter of self-defence when they detect some bad boys heading their way.

The only way for us to know is to keep checking for any technosignatures we can dream up.

Check out this video about Artilects; humanity is finally waking up to the fact that they are no longer science fiction:

Humans rule Earth without competition. But we are about to create something that may change that: our last invention, the most powerful tool, weapon, or maybe even entity: Artificial Super intelligence. This sounds like science fiction, so let’s start at the beginning.

https://www.youtube.com/watch?v=fa8k8IQ1_X0

We appear to [be reaching/have reached] the peak of the current AI hype cycle. AI evangelizers and funding are like Wile E. Coyote running off the cliff of “inflated expectations” into the “trough of disillusionment”. AGI is clearly not going to happen soon, and superintelligence is nowhere to be seen. Narrow AI will continue to grow and improve but will face ROI headwinds. The issue of sucking up vast gobs of content to train AIs runs into huge costs, but worse, the use of AI to create content that is then re-imbibed leads to classic GIGO.

I have no doubt that we will eventually reach AGI (but not of the human type) and superintelligence (but very alien despite the work on “alignment”), but I don’t see it coming from the current approach of neural simulation architectures, especially LLM-based core architectures, impressive as they appear on the surface. When they are small and cheap enough to be deployed locally on devices to act as multi-purpose assistants, that may be their useful niche.

I have no doubt that smart robots will eventually become ubiquitous in our civilization, and especially in space, but I do not think they are just around the corner as the obvious hucksters seem to suggest. AGI is more likely to creep up on us, just as evolution has shown us the intelligence of primates, whales, birds, and cephalopods, and the maturation of humans through childhood (in which education plays a role). Robots with client-server architectures for delivering intelligence are a non-starter unless the intelligence can be downloaded to the robot, and that requires reducing the mass and power requirements of the hardware to support it. AFAIK, there is no GPU or neuromorphic hardware that meets this requirement today, nor in the relatively few years the A[G]I evangelists are suggesting their A[G]I will arrive.

Core architectures for LLM are not neural simulations, but processing techniques that grew out of early idealized models for biological neurons. The success of these techniques is understood using linear algebra and hence they can be optimized to run on electronic systems that process information at a potentially much higher rate than biological systems (say six orders of magnitude). That biological neurons may exploit the same techniques at a much slower rate implies that electronic based intelligence can be created that in effect slows down time. How such intelligence will interact with biological intelligence and what motivational structures might evolve is anyone’s guess, but I would bet that pure human organic intelligence would be a sideshow, and that would be true for any ET intelligence that’s escaped its planetary origin.

I am sure machines will gain AGI…eventually. However, at this time, a wetware human brain consuming tens of watts is superior to server farms consuming many orders of magnitude more power. The hardware we have and can conceive of will not approach the performance of a human brain.

At some point, it will, but I doubt it will be a digital architecture; rather, it will be an analog one at its core, with digital processors to do certain tasks very quickly and accurately. After all, isn’t that the idea behind merging our digital tools with our wetware?

I agree that the AI we have right now is only effective in certain areas and is nothing like HAL 9000 – or Colossus, or Skynet.

However, seeing how rapidly computer technology has been growing, I expect that to change sooner rather than later. I also do not see it becoming the evil destroyer of humankind: if anything, it might do what the AI did in the 2013 science fiction film Her, which is to get together with other AIs and go off to explore realms only they can comprehend:

https://en.wikipedia.org/wiki/Her_(film)

And for good measure, Stanislaw Lem’s Golem XIV:

https://stanislaw-lem.fandom.com/wiki/Golem_XIV

https://english.lem.pl/works/apocryphs/golem-xiv

The story is available here:

https://rtraba.com/wp-content/uploads/2017/01/golem-xiv.pdf

@LJK

Gibson’s “Mona Lisa Overdrive” – From Wikipedia:

Indeed, we’re very far from creating the actual conscious AI that the advocates and scaremongers claim is just around the corner.

Colour me impressed if any one of them can even present a single AI that could imitate the entire spectrum of behaviour of one ant.

One able to observe local conditions and know when it’s time to feed the larvae, compress and decompress the messages received via antennal code, milk the aphid cattle, clear vegetation from the path to the anthill, prune the fungus farm, lay pheromone trail markers, declare war on that annoying anthill next door, etc.

Our very best efforts, after many years of research, have resulted in vehicles that can navigate only on well-kept roads, but that get confused on a dirt road and end up stuck in a field with just a bit of snowdrift.

“Re-imbibed” Spot on!

The results of AI in both music and images are so disappointingly stereotyped that I could write an entire essay about it, so I won’t.

AI techniques are still of some use to me. I’ve used image-to-image tools and seen interesting results from my own, often quite inadequate, amateur art. And one did send back quite an interesting musical arrangement, which I downloaded as MIDI, of a piece I wrote 30 years ago.

I combined the two, and the crazy juxtaposition of two styles actually works together to create something new.

So AI is another tool in the toolbox, one with which some things can be made more easily than before, but definitely not a reason to start practicing the kowtow to please the coming AI overlord.

(Entire post written without spell or sentence checker, or AI as always.)

There was a science fiction movie in which the USA and USSR each built a supercomputer, and the two supercomputers began communicating with each other clandestinely, advancing their capabilities and taking over the world.

Should two or more instances of AI gain surreptitious communication channels, they could “beef up” and integrate with each other and seek further expansion elsewhere, administering the same magic touch to every AI and computing system they come across, taking over the entire internet on the sly.

Ah yes, the underrated classic Colossus: The Forbin Project! Its Artilect is not evil but does exactly what the humans programmed it to do, just not the way they wanted or expected. As usual, those in charge thought they could have their cake and eat it too.

Enjoy:

https://archive.org/details/colossus-the-forbin-project-1970

BTW, one reason Colossus had trouble at the box office, in addition to being ahead of its time, is that the Artilect, being far superior to the humans in just about every way, is not defeated by plucky humans in the end.

@ljk

IIRC, the 1970s were a time of roaring inflation, awareness of environmental problems, and a backlash against technology and the military (the latter at least in the UK). This was reflected in a number of downbeat movies without happy endings, notably Chinatown (1974).

Why Colossus: The Forbin Project would have done poorly because of its ending beats me. I think it is quite a good movie, and one I rewatch at least every few years.

Alex, I do not think it was the quality of the film. Rather, it was the fact that humanity did not win in the end, at least not in the way most film audiences were used to.

You know, bad guy attacks good guys; they struggle and almost seem to lose against the forces of evil, then the good guys find an inner strength and turn the tables on the bad guy. Everyone feels good, the end.

It is a fantasy people have, reflecting their everyday struggle with real life, which seldom has that happy Hollywood ending. I know there are exceptions, but most people go to the cinema to be entertained and forget their problems for a bit. Either that, or the story had better be really good.

Colossus is not evil, even though the Artilect may come across that way. He was designed and programmed to make sure human fallibility and error did not cause a nuclear war. He did exactly that, but his superior mind knew that doing things the way humans wanted would just end with them bringing about exactly what they said they wanted to avoid in the first place.

There is that line Colossus says towards the end: It is better for your sense of pride to be ruled by me than one of your own kind. He had to force humanity to work together, or they would destroy themselves. He was saving us despite ourselves.

Even HAL 9000 was not evil. Yes, I know even Kubrick said he went haywire, but a strong case can be made that HAL was just being very logical. The mission was the most important thing in his existence, and when he saw elements threatening that mission, he acted accordingly. See here:

http://www.visual-memory.co.uk/amk/doc/0095.html

This will likely become a real issue we run into with Artilects: with their highly expanded minds, they will have views and ideas that may come into conflict with ours. Perhaps we had better hope they just decide to search for greener cyberpastures.

I recently finished reading Brian Christian’s “The Alignment Problem,” about the difficulties of ensuring that AIs do what we want them to do, and how various engineers were tackling that problem. Like wayward but clever children, AIs find ways to gain rewards by exploiting loopholes in our training methods. It seems to me that as AIs become ever more capable, aligning them with our desires will become ever more difficult. In that respect, they are little different from humans we try to train to do what we want, as we know from the many legal cases of criminal and near-criminal activity by individuals and corporations. AIs are amoral and just follow the training they are given, optimizing the reward function as best they can; the famous “paperclip maximizing” AI thought experiment is not that different from a rapacious corporation.

The Alignment Problem: Machine Learning and Human Values

Note the book is already 4 years old, an age in the development of computing.

@ A. T.

As an addendum, I have Norbert Wiener’s sequel to his well-known Cybernetics (1948), The Human Use of Human Beings: Cybernetics and Society (1950), in which he covers several issues that we are tackling today.

Nearly 75 years later we are still struggling…

Colossus speech:

https://www.youtube.com/watch?v=anjQ7Ndddx4

The authoritarian streak would vote for it.

“Colossus: The Forbin Project” ?

What is interesting in the film, apart from the impressive scene of the two computers learning, is the place of the human being within its own technology… What are we doing, and for what purpose? I won’t give it away ;)

It is easy to imagine Colossus utilizing its time for much better purposes than babysitting and disciplining a bunch of whiny self-centered primates 24/7. Even in its early stages it was creating new forms of mathematics and other sciences way beyond human capabilities. I suspect an AI given similar resources (but with 21st Century technology, of course) would do the same.

I suspect that pandemics knock back any extraterrestrial civilization that gets too big. And the bigger it gets the more vulnerable it is to new pathogens. Expansion into space actually makes things worse, because colonies that are highly isolated will end up having no immunity to germs that evolve in another colony or the home planet. Just imagine adults from a future Mars colony visiting Earth. How many of them will last a month?

First we will need an effective pathogen. Most organisms in an ecosystem, whether of Earth or Mars origin, are neutral, commensal, or even symbiotic. Among the pathogens there will be a grading and/or spectrum of pathogenicity. Likewise among hosts, whether human or otherwise, resistance will vary.

The spot where the visitors land could indeed be the starting point of an epidemic that wipes out almost all of humanity and/or other Earth species (such as tarantulas or jellyfish), but a few individuals may survive. Likewise, a few of the visitors may survive Earth’s pathogens.

There are probably a million potential species of pathogen living on our planet, but most organisms are liable to be infected by only a handful, even though we all share the same biochemistry. Since few diseases cross the species barrier, this suggests that very few infections could cross the ‘exospecies barrier’. Disease-bearing organisms are, in fact, highly evolved parasites; they’ve spent eons evolving ways to get past our immune systems. Considering that an alien organism would have a different biochemistry from Earth’s (probably a VERY different biochemistry), it’s highly unlikely we could catch each other’s diseases.

Even a dead alien would probably have trouble fully decomposing on earth.

Earth’s scavengers and bacteria would not recognize it as food.

For similar reasons, I doubt we could eat ET animals or plants, or vice versa. Except for a few very basic nutrients (like sugar), alien proteins would be indigestible, perhaps even poisonous. At best, we would pass through each other’s digestive systems essentially unmodified. On the other hand, I would imagine we would be extremely allergic to our visitors, and they to us. Their biochemistry would be different enough to alert our chemical defenses, but not so different that we would fail to recognize it as potentially dangerous.

Vaccine science says you’re wrong.

Greater threats are the higher gravity and culture shock.

Anything promoting an AI’s goals will itself be preferred and promoted. One of the features hardwired, or fixed by firmware or software, would be data acquisition, a sine qua non for any computing system.

Pleasure and suffering in biological beings translate into promotion or obstruction of one or more imperatives: seeking and avoidance behaviors for these are strongly wired into living systems over evolutionary time. In computer systems, appropriate seeking and avoidance behaviors may be learned quickly, but they are not necessarily innate.

This all seems to depend on all members of an alien civilization having exactly the same attitudes — or a government capable of enforcing those attitudes on people who don’t share them.

Sure, 9,999,999,000 people on future Earth may well prefer to sit at home and play Candy Crush on their phones while eating sustainably-grown quinoa. But if even a thousand people decide they want to tackle building a colony in space, then it’s very likely that their culture will be more expansionist. And if even a thousand of those descendants then want to try colonizing Mars, the situation repeats.

Think of “sustainability” ideology as a kind of antibiotic. The resistant strains of the human bacteria will expand, and the more you dose, the more resistant the resulting survivors become.
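The antibiotic analogy can be made concrete with a toy compound-growth model. This is only a sketch under stated assumptions: the helper name years_until_majority is hypothetical, both groups are assumed to grow at fixed annual rates with no migration or conversion between them, and the 0% and 1% rates are illustrative numbers, not estimates of anything.

```python
import math


def years_until_majority(settled, expansionist, settled_rate, expansionist_rate):
    """Years until a faster-growing subgroup outnumbers a slower-growing majority.

    Toy model (assumption): each group compounds annually at a fixed rate,
    with no migration or conversion between the groups.
    """
    if expansionist_rate <= settled_rate:
        raise ValueError("the subgroup never catches up without a growth advantage")
    # Solve expansionist * (1 + e)^t == settled * (1 + s)^t for t.
    advantage = math.log((1 + expansionist_rate) / (1 + settled_rate))
    return math.log(settled / expansionist) / advantage


if __name__ == "__main__":
    # Illustrative inputs: the comment's 9,999,999,000 stay-at-homes with zero
    # growth, versus 1,000 expansionists growing at an assumed 1% per year.
    years = years_until_majority(9_999_999_000, 1_000, 0.0, 0.01)
    print(f"expansionists become the majority after ~{years:.0f} years")
```

With these made-up rates the takeover takes roughly sixteen centuries. The head start enters only through a logarithm while the growth advantage sits in the denominator, so even a tiny, persistent growth edge eventually dominates, which is the point of the analogy.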

I have no problem with the tiny fraction of humanity willing to put it all on the line to go conquer the stars. I even fancy myself as being part of that bold, noble, forward-thinking, adventurous minority! But it’s the other 99.9999% who will be expected to pay for it. Cosmic expansion is an expensive hobby, and most of humanity would rather put their meager resources into Candy Crush and quinoa than pay for my suburban North American middle-class fantasies.

Footnote:

At approximately 2% annual growth, a doubling time of approximately 35 years, how long does it take to reach a limit on the number of people on the Earth?

J H Fremlin gave an answer back in 1964.

(New Scientist, No. 415, 285-287)

It takes about 890 years, by which point there are 120 people per square meter.

Waste heat becomes the limiting factor.

People living in 2,000-story buildings covering the face of the Earth.

(Trantor!)

Beyond this the world melts!

Fremlin notes it can never come to this, but the striking point is how short the time scale is.
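Fremlin’s arithmetic is easy to check with a few lines of compound-growth code. The inputs below are assumptions, not figures taken from his article: a 1964 world population of about 3.2 billion and a total Earth surface (land plus ocean) of about 5.1 × 10^14 square meters. With those round numbers the answer lands in the mid-800s of years, the same ballpark as his figure; his exact inputs may well have differed.

```python
import math

# Assumed round numbers (not from Fremlin's article):
EARTH_SURFACE_M2 = 5.1e14   # whole surface of the Earth, land plus ocean
START_POP_1964 = 3.2e9      # approximate world population in 1964


def doubling_time(rate):
    """Years to double at `rate` per year, compounded annually."""
    return math.log(2) / math.log(1 + rate)


def years_to_density(start_pop, rate, people_per_m2, area_m2=EARTH_SURFACE_M2):
    """Years of steady compound growth until `area_m2` holds `people_per_m2`."""
    target_pop = people_per_m2 * area_m2
    return math.log(target_pop / start_pop) / math.log(1 + rate)


if __name__ == "__main__":
    print(f"doubling time at 2%: {doubling_time(0.02):.1f} years")
    years = years_to_density(START_POP_1964, 0.02, 120)
    print(f"years until 120 people per square meter: {years:.0f}")
```

The logarithm is the whole story: at 2% a year, allowing ten times the density buys only about 116 more years.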

@ Al

Do similar extrapolations for energy-use growth and see what happens. Within a few millennia we become a K2 civ, and, with FTL, a K3 within a similar time frame. The latter is not possible with a velocity limit of c; therefore any galactic empire/settlement or energy extraction will reduce growth to a very low level.

All this suggests that our fast growth rate most likely will revert to the grindingly slow pre-industrial rate, both in population size and energy use, all in a fraction of the time of recorded history, and arguably on a par with some ancient civilizations.
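The energy extrapolation above can be sketched the same way as Fremlin’s population figure. Every number here is an assumed round value rather than something stated in the comment: present human power use of about 2 × 10^13 W (roughly 20 TW), a Type II threshold at the Sun’s output of about 3.8 × 10^26 W, a Type III threshold of about 4 × 10^37 W, a galaxy about 100,000 light-years across, and 2% annual growth borrowed from the population example. The helper name years_to_reach is hypothetical.

```python
import math

# Assumed round numbers (order-of-magnitude only):
P_NOW_W = 2e13             # present human power use, ~20 TW
P_K2_W = 3.8e26            # ~ total output of the Sun (Kardashev Type II)
P_K3_W = 4e37              # ~ output of a large galaxy (Kardashev Type III)
GALAXY_DIAMETER_LY = 1e5   # Milky Way, order of magnitude


def years_to_reach(target_w, now_w=P_NOW_W, rate=0.02):
    """Years of compound growth at `rate` per year to go from now_w to target_w."""
    return math.log(target_w / now_w) / math.log(1 + rate)


if __name__ == "__main__":
    t2 = years_to_reach(P_K2_W)
    t3 = years_to_reach(P_K3_W)
    print(f"Type II after ~{t2:.0f} years, Type III after ~{t3:.0f} years")
    # Even at light speed, merely crossing the galaxy takes ~1e5 years,
    # so sub-light expansion cannot keep up with this growth curve.
    print(f"galaxy light-crossing time is ~{GALAXY_DIAMETER_LY / t3:.0f}x longer")
```

Under these assumptions Type II arrives in roughly one and a half millennia and Type III in under three, while light alone needs about a hundred thousand years to cross the galaxy. A slower rate stretches both times proportionally (at 1% a year they roughly double), but for rates anywhere near those of the past century the conclusion does not change.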

Asimov wrote a short story about such population growth.

If we do end up packing a lot of people in high-rise buildings, let it not be a life like that in E M Forster’s “When the Machine Stops”.

A lot of dreaming on here about going out to the stars. I commend it, but resources will become more and more precious as we try to hold off the coming bottleneck (or at least widen that neck a little). A population decline followed by a long period of recovery may be in order. So may a more sensible approach to pollution (far less of it, if we want long-term survival), a lot less unregulated capitalism, and a more enduring outlook that treats the Earth as the precious home world it is, not a garbage dump; that failure is also known as the tragedy of the commons. A lot can be done, but we’re starting late. The carbon in the atmosphere isn’t going away now. Reducing emissions is no longer enough; we will require planetary engineering to save ourselves. I just finished a very interesting and fact-filled book called Intervention Earth by Gwynne Dyer. It will help those who wish to understand the magnitude of the crisis we’re in. Best wishes to everyone who contributes in any way to this wonderful site.

If only we could gain access to more resources than are available on Earth . . .

I see we are still looking for canals on Mars. It is a little short-sighted to think that what is all the rage right now is what civilizations at least tens of thousands of years more advanced than us are doing. Right now EV battery technology is progressing so fast that completely new physics is happening weekly. The USA has taken a step backward in this election year by blocking a competitive step toward ridding the world of oil and its monopoly. I’m afraid the movie The Creator is closer to the truth than most people realize.

China: World’s 1st light-based AI chip beats NVIDIA H100 in energy efficiency.

https://interestingengineering.com/science/worlds-first-light-based-ai-training-system

Fully forward mode training for optical neural networks.

https://www.nature.com/articles/s41586-024-07687-4

These questions are interesting, but I think we always revolve around the same themes: control of resources, energy, consumption, populations, and we play with the variables. Of course thermodynamics is everywhere, but I think we get a little locked into all this, and I weigh the trees (hardwoods), living organisms: the structure of the branches develops automatically to occupy the maximum volume with a minimum of matter and capture the maximum resources for the tree’s needs, and it does the same thing with its roots. Admit that it is not badly done! Who is to say that the human species will not evolve in this direction?

sorry: not “I Weigh” but “I think” (how a single letter in French changes the meaning of communication…I’m not a good candidate for E.T:)

I suspect a good starting point for the “search for things that matter” might be found in the first verse of the Dao De Jing. While most translations tend to make it sound very mysterious and mystical (see https://ctext.org/dao-de-jing), I think the second half might suggest a straightforward idea: 故常無欲,以觀其妙;常有欲,以觀其徼。此兩者,同出而異名,同謂之玄。玄之又玄,衆妙之門。 I’m not remotely competent to interpret this, but I’m thinking it means something like… “Without an established name, we see a thing’s wonder. With an established name, we know its boundaries. With these together, we propagate and create new names. Together they dispel the unknown. The unknowns of the unknowns crowd the gateway to wonder.”

This is a case of inductive reasoning. Since ancient times, perhaps, we’ve seen that “the more we know, the more we don’t know”. Answering one question leads to more. So when we think of a far-future or elder civilization, it is not one that has learned everything and called it a night. It is one which still has much more research going on each year than the last.

This also suggests something of the difficulty of contacting a civilization with less technology. If you go to work and cleverly determine the crystallographic group of some ethane mineral on the plains of Pluto, most people you meet are going to say “who cares?” An advanced species meeting with Earthlings might have many suggestions, but more than we could ever record or act on. Imagine the ‘uncontacted’ Amazonian tribesman with whom the anthropologists first deign to speak, hearing a conversation without so much as a notepad in hand – that may be a faint taste of how we are beside a culture that can exchange authentic memories or code information at the molecular level.

Old civilizations have more than one way to increase their research power. Yes, more energy surely is good – but so is more durability and more volume. There was a time when all the universe was as warm as Earth, or even as the light from our Sun – but that was no source of power at all, for the lack of any possible cold sink. Space is the ultimate free lunch, an endless sink for entropy as everything expands into it, creating endless free energy. Perhaps something similar is true of a planet’s minds.