At the recent Starship Congress in Dallas, writer, librarian and futurist Heath Rezabek discussed the Vessel Archives proposal (a strategy for sustaining and conveying Earth’s cultural and biological heritage), which was directly inspired by Gregory Benford’s idea of a Library of Life. Working with author Nick Nielsen, Rezabek is concerned with existential risk (Xrisk) and the need to ensure the survival of our species and its creations in the event of catastrophe. Rezabek and Nielsen’s presentation was the runner-up for the Alpha Centauri Prize awarded at the Congress, and it was so compelling that I asked the two authors to offer a version of it on Centauri Dreams. Heath’s work follows below, and we’ll look at Nick’s in tomorrow’s post. Both writers will be returning regularly with updates and further thoughts on their work.

by Heath Rezabek

Some challenges are too daunting to approach alone.

Existential risk is certainly one; bringing a comprehensive strategy to a room full of seasoned interstellar advocates is another. Collaboration can sometimes be a greater challenge than solo work, but it often yields results far greater than the sum of its parts. I met Nick Nielsen when he asked me a question from the audience after my first presentation of the Vessel proposal at the 100 Year Starship Symposium in September 2012. My work with Nick has been a continuing process of encountering unexpected ideas in unexpected combinations, and this collaboration led us to propose and present a combined session on our work since 2012, ‘Xrisk 101: Existential Risk for Interstellar Advocates’, at the first Starship Congress, organized by Icarus Interstellar, in August 2013.

I have felt the importance of answering the challenge of existential risk (put simply, risks to our existence) since the moment my own sense of this subject achieved critical mass and began drawing all other related ideas into its orbit, a moment I can, amazingly, pin to my reading of a key article in io9 on June 18, 2012. That reading began a process of nearly frenzied integration and streamlining, which culminated in a draft proposal for very long-term archives and habitats as a means of mitigating long-term risk, presented at 100YSS 2012. My preprint of that first, sprawling, 52-page paper emerged from a month of intense creation. It is still available on figshare.

I call these proposed installations Vessels. While this is a discussion for later, I deliberately invoke that term as the best and most descriptive one available, for something which includes all senses of the word that I can find: not only the sense of a craft, but of a receptacle, a conduit, a medium. If an eventual interstellar vessel does not contain a Vessel, then it may be incomplete.

I had no idea, going into 100YSS 2012, whether this proposal had any merit or use whatsoever. As a librarian by career and calling, I certainly felt it was right, the first and last and best thing I could do. Yet meeting Nick Nielsen was pivotal: his patient unpacking of what mitigating Xrisk implies for the future of civilization has been crucial to my own optimism about humanity’s prospects, should we fulfill our full potential.

Offered here is a post which casts our Starship Congress 2013 session into blog format. The content can be found in much the same form in our video and slides, but I still encourage you to view them both if you connect with any of these ideas whatsoever. For links to video and slides, go to http://labs.vessel.cc/.

Though the concept stands for risks to our very existence, existential risk or Xrisk is far from intractable or imponderable. Because of the subtypes described in our session below (Permanent Stagnation and Flawed Realization), we can do much to improve the prospects for Earth-originating intelligent life tomorrow by working to improve its prospects today.

This begins with directly mitigating the extinction risks that we can, and with safeguarding, to the best of our abilities, our scientific, cultural, and biological record so that future recoveries are possible if needed. The Vessel proposal attempts a unified approach to this work.

“Build as if your ancestors crossed over your bridges.”

— Proverbial

Xrisk 101: Existential Risk for Interstellar Advocates (Part 1)

Xrisk 101 is divided into two parts. In the first, I will cover the fundamentals of Xrisk and give an update on the Vessel project, a framework for preserving the cultural, scientific, and biological record. In the second, Nick Nielsen will explore the longer-term implications of overcoming Xrisk for the future of civilization.

Though discussed in other terms, Xrisk was a key concern and priority for the DARPA 2011 starship workshop. In its January 2011 report, that workshop prioritized “creating a legacy for the human species, backing up the Earth’s biosphere, and enabling long-term survival in the face of catastrophic disasters on Earth.” [1]

At the 100YSS 2012 Symposium, I presented a synthesis of strategies to address all three of these goals at once, called Vessel. Before updating on the Vessel project, I want to talk first about Existential Risk, what it includes, and why we should prioritize finding ways to meet its challenge.

Existential Risk denotes, simply enough, risks to our existence. Existential Risk encompasses both Extinction Risk and Global Catastrophic Risk.

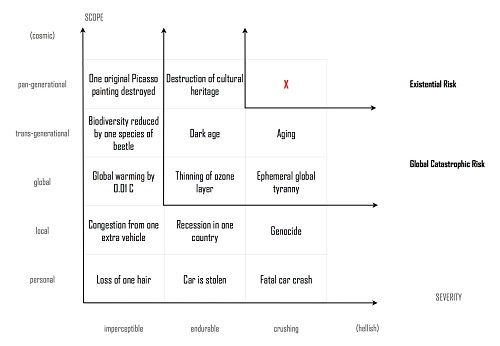

Nick Bostrom, Director of the Future of Humanity Institute, defines Existential Risk this way in a key paper we’ll draw on throughout, Existential Risk Prevention as Global Priority: “An existential risk is one that threatens the premature extinction of Earth-originating intelligent life, or the permanent and drastic destruction of its potential for desirable future development.” [2] In the paper’s grid of possible risks, small personal risks sit in the lower left, while situations of widespread suffering such as global tyranny sit in the middle as Global Catastrophic Risks. Finally, the destruction of life’s long-term potential defines Existential Risk, in the upper right.

Xrisk has become a popular shorthand for this whole spectrum of risks. We can see signs of it emerging as a priority for various space-related efforts. One of the most popular images of Xrisk today is that of a sterilizing asteroid strike. And asteroids play a big role in some of the most visible efforts in space industry today, such as the ARKYD telescope or NASA’s asteroid initiative. Specialists sometimes see unpredicted cultural or technological Xrisks as even more urgent.

Starship Congress had its eye on some pretty long-term goals, and Earth provides our only space and time to work towards them. On that basis alone, the challenge of Xrisk must be answered.

But setting aside our own goals, what are the stakes? How many lives have there been, or could yet be if extinction is avoided? Nick Bostrom has run some interesting numbers.

“To calculate the loss associated with an existential catastrophe, we must consider how much value would come to exist in its absence. It turns out that the ultimate potential for Earth-originating intelligent life is literally astronomical.” [2]

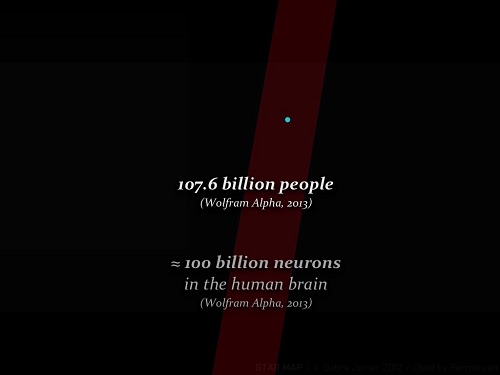

How so? First we need a standard of measurement. Let’s start with the total number of humans ever to have lived on Earth. Wolfram Alpha puts the total number of people who have ever lived at 107.6 billion. The current global population is 7.13 billion. If we leave out the current population, we get roughly 100 billion, about the number of neurons in a single human brain.
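As a quick check of the subtraction above, using the figures as quoted and rounding the result to the nearest order of magnitude:

```latex
\[
107.6 \times 10^{9} \;-\; 7.13 \times 10^{9} \;=\; 100.47 \times 10^{9} \;\approx\; 10^{11}
\]
```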

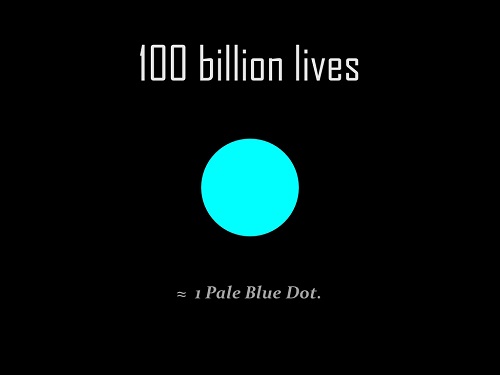

100 billion lives.

One Pale Blue Dot.

Here’s an excerpt from Carl Sagan’s reflections on that famous image of Earth from afar [3]:

Consider again that dot.

That’s here. That’s home. That’s us. On it everyone you love, everyone you know, everyone you ever heard of, every human being who ever was, lived out their lives. The aggregate of our joy and suffering, thousands of confident religions, ideologies, and economic doctrines, every hunter and forager, every hero and coward, every creator and destroyer of civilization, every king and peasant, every young couple in love, every mother and father, hopeful child, inventor and explorer, every teacher of morals, every corrupt politician, every “superstar,” every “supreme leader,” every saint and sinner in the history of our species lived there – on a mote of dust suspended in a sunbeam.

In … all this vastness … there is no hint that help will come from elsewhere to save us from ourselves. The Earth is the only world known, so far, to harbor life. There is nowhere else, at least in the near future, to which our species could migrate. Visit, yes. Settle, not yet. Like it or not, for the moment, the Earth is where we make our stand.

100 billion lives is our basic unit of measure.

Now: how much value would come to exist if our future potential is never cut short?

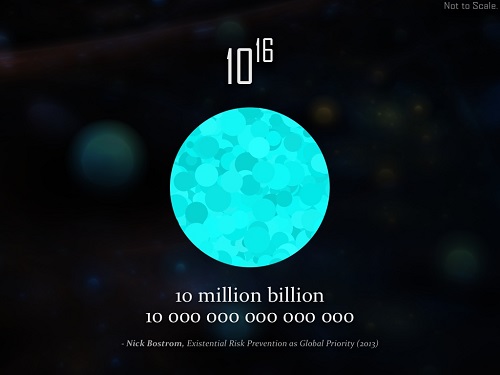

10¹⁶ (10 million billion) is one estimate of the potential number of future lives on Earth alone, if only 1 billion people lived on it sustainably for the 1 billion years it’s projected to remain habitable.
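The 10¹⁶ figure can be recovered by treating the two quoted numbers as person-years and dividing by a lifespan. The lifespan of about a century used below is an assumption for this worked example, consistent with counting “lives of normal duration”:

```latex
\[
\underbrace{10^{9}}_{\text{people}} \times \underbrace{10^{9}}_{\text{years}}
= 10^{18}\ \text{person-years},
\qquad
\frac{10^{18}\ \text{person-years}}{10^{2}\ \text{years per life}} = 10^{16}\ \text{lives}
\]
```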

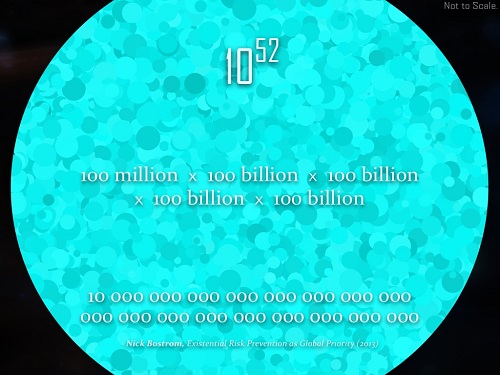

But if we consider the possibility of the spread of life beyond Earth, or synthetic minds and lives yet to come, Bostrom’s estimate [2] grows vast:

10⁵² potential lives to come: 100 million × 100 billion × 100 billion × 100 billion × 100 billion.
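Written out in powers of ten, the product above confirms the exponent:

```latex
\[
\underbrace{10^{8}}_{\text{100 million}} \times \left(\underbrace{10^{11}}_{\text{100 billion}}\right)^{4}
= 10^{8+44} = 10^{52}
\]
```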

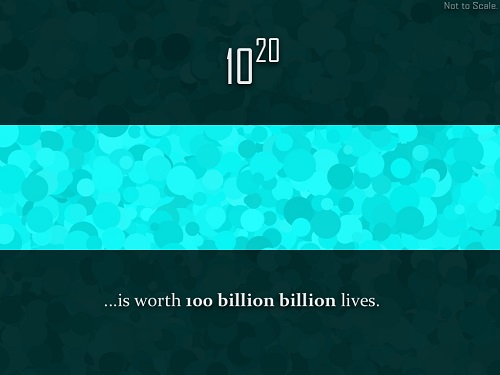

This means that reducing the chances of Xrisk by a mere 1 billionth of 1 billionth of 1 percent…

is worth 100 billion billion lives.
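A minimal sketch of the expected-value arithmetic behind this claim, assuming the simplest possible model (expected lives preserved = potential future lives × reduction in the probability of catastrophe) and full credence in the 10⁵² figure. The constant and function names are hypothetical, chosen only for illustration; exact fractions avoid floating-point rounding at these magnitudes.

```python
from fractions import Fraction

# Upper-end estimate of potential future lives quoted above (10^52).
POTENTIAL_LIVES = 10**52

# "1 billionth of 1 billionth of 1 percent" as an exact fraction: 10^-9 * 10^-9 * 10^-2.
RISK_REDUCTION = Fraction(1, 10**9) * Fraction(1, 10**9) * Fraction(1, 100)

# "100 billion billion" lives, i.e. 10^20, as quoted in the text.
QUOTED_FIGURE = 100 * 10**9 * 10**9


def expected_lives_saved(potential_lives: int, risk_reduction: Fraction) -> Fraction:
    """Naive expected value: lives at stake times the drop in catastrophe probability."""
    return potential_lives * risk_reduction


saved = expected_lives_saved(POTENTIAL_LIVES, RISK_REDUCTION)

print(saved == 10**32)          # the face-value product is 10^32
print(saved >= QUOTED_FIGURE)   # and it comfortably exceeds the quoted 10^20
```

Taken at face value the product is 10³², so the quoted 100 billion billion reads as a conservative floor, presumably because the larger estimate is not held with full credence.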

With just a slight shift in priorities, we can hugely boost the chances of life achieving its full future potential by working to enhance its prospects today.

Let’s look at Bostrom’s definition again: “An existential risk is one that threatens the premature extinction of Earth-originating intelligent life, or the permanent and drastic destruction of its potential for desirable future development.”

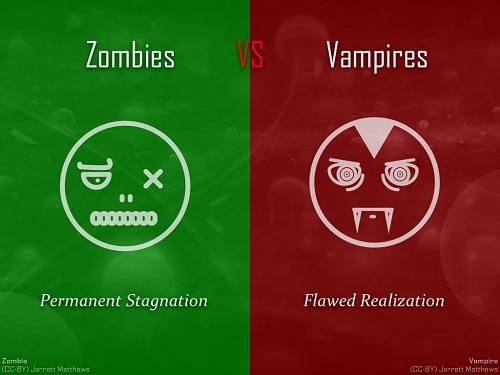

Notice that fragment – “… destruction of its potential for desirable future development.” Survival alone is not enough. In some cases, a surviving society may be brutalized, stagnant, or diminished irreparably, unable to aspire or to build itself anew. This brings us to two subtypes of Xrisk as crucial as extinction itself, and both fall into the realm of Global Catastrophic Risks.

- Permanent Stagnation – Humanity survives but never reaches technological maturity or interstellar civilization.

- Flawed Realization – Humanity reaches technological maturity but in a way that is irredeemably flawed.

Pop culture has a working knowledge of them both, in different terms. Nick and I joke that it’s a bit like: Zombies vs Vampires.

Permanent Stagnation and Flawed Realization. Losing our capability as a civilization, or enduring only in a deeply flawed form. These two risks fill our dystopian movies. But because popular culture understands them, we can learn valuable lessons about our messaging and priorities by understanding them too.

These two types of Xrisk cut to the heart of what it means to achieve our full potential. There is a vast opportunity between these risks, because of the many advances needed to achieve an interstellar future – and because of the benefits such advances could have for life on Earth — in areas such as habitat design, energy infrastructure, biotechnology, as well as advanced computing, networking, and archival.

If we work to prototype here and now, solving real-world problems along the way, all will benefit. If we make advances open and adaptable to humanity’s best minds, we will gain allies in our effort to uplift Earth and thrive beyond it. Perhaps advanced, resilient technologies could carry a seal standing for the dual design goals of uplifting life on Earth while advancing our reach towards the stars. Like LEED certification for an infinite future. What would such projects be like?

Last year, I proposed the Vessel project as a means to safeguard cultural potential on Earth and beyond. I’ll close with a brief update on this approach to advanced computing, compact habitat design, and long-term archival. With deep appreciation to Paul, I’ll continue in future guest entries by updating on the progress of the Vessel project, as I’ve continued to connect with interested specialists in several areas crucial to its implementation as a concrete strategy for Xrisk mitigation.

Image: Vessel Installation Symbol.

Vessel, as a design solution, begins with a simple premise: capability lost before advanced goals are reached will be very difficult to recover without a means of setting a baseline for civilization’s capabilities.

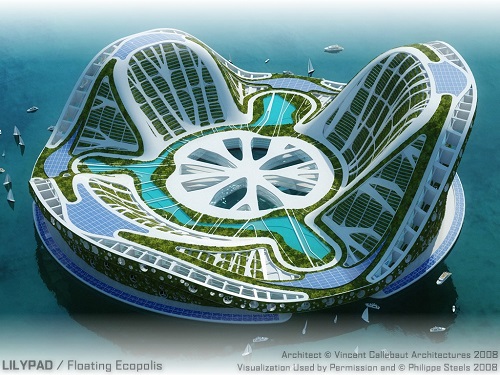

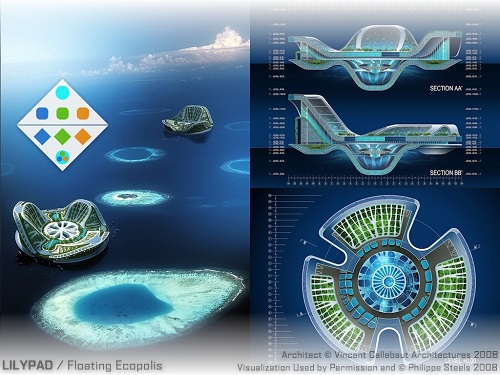

A Vessel is an installation, facility, or habitat that serves as a reservoir for Earth’s biological, scientific, and cultural record. Into a Vessel is poured what must be remembered for humanity’s potential to be maintained. On Earth or beyond, a Vessel habitat is designed to carry forth the sum of all we’ve been. In 2012, Vessel was pictured as the Lilypad seasteading habitat.

But different Vessels would have different designs based on their needs and settings. These traits remain key in each case:

At a Vessel’s core would lie biological archives, meant to preserve key traces of Earth’s biodiversity. Here the primary model is Gregory Benford’s groundbreaking 1992 Library of Life proposal. Benford details a program for freezing and preserving endangered biomass for possible future recovery. [4]

Also crucial would be core archives for cultural and scientific knowledge, both physical and digital. I’m working with Icarus Interstellar to make sure the Vessel framework is compatible with Icarus projects. Several examples exist of information storage technologies engineered to endure the passage of time, such as the digital DNA encoding strategies of George Church’s team as well as Ewan Birney and Nick Goldman’s approach, the fused quartz technologies of Hitachi or Jingyu Zhang, and the Rosetta Disk project of the Long Now Foundation, which is the first deliverable for their Library of the Long Now. As yet I have not seen it proposed that such initiatives could or should be brought together in the service of a unified goal or project. Throughout 2014, I will be surveying these proposed strategies, as well as interviewing (when possible) their inventors and project leads on potential implementations.

Surrounding these archives would be Research Labs, where specialists could collaborate on advanced technologies, seeking critical paths which avoid and mitigate Xrisk. Or, in a time of recovery, sealed labs could be the birthplace of new beginnings. Research Labs would open inwards to draw upon the Core Cache. Experts in their relevant fields could be both stewards and users of the core archives.

But in the near term, through an outer ring of Learning Labs, Vessel facilities could welcome the curious and give visitors an inspiring glimpse of advanced studies. Immersive labs could be catalysts for change, helping people understand the arc of history in nature, culture and science, the common risks ahead, and the limitless possibilities if Earth achieves its full potential. This function, familiar in one form to anyone who has visited a nature & science museum and seen paleontologists at work, hints at a pathway towards actual present-day implementations of the Vessel project as popular, well-attended, comprehensive exhibitions for a public trying to make sense of the patterns of our present day.

Built around these three roles (Learning, Research, and Archival), the Vessel framework is designed to adapt to any setting or situation. What all Vessels would have in common is a dedication to preserving cultural capability, and a layered, approachable presence adapted to each setting. Many should be built, using many approaches. Some could be public, while mission-critical Vessels may be as remote as the Svalbard Seed Vault, or even secret.

Some may be massive as habitats, with others more like sculptures, compact and dense as a room. At the recent Starship Century conference in May, Freeman Dyson envisioned terrarium-like habitats which could seed the vast reaches of space with life. This egg-like approach is hugely inspiring to ponder from the perspective of the Vessel project. Whether urban or remote, extreme habitats or modules on a starship, Vessel is offered as a flexible framework for the long term survival of life’s capabilities.

The Vessel project has several routes forward. Plans for 2014 include the previously mentioned global survey of existing long-term archival projects, an open design document to help others adapt and evolve the Vessel framework (on which I am already working with a small team of interested artists and specialists), and a Kickstarter for a Vessel-related art project. And, at the invitation of Paul Gilster, we can add to these plans our regular updates on the Vessel project’s progress to the readers of Centauri Dreams. While I explore the nuts and bolts of Vessel’s critical path to an implementation, Nick will help deepen our grasp of the long-term potential for a civilization that has chosen to mitigate Xrisk.

Right before Starship Congress, I began an internship with the Long Now Foundation, working on a project called the Manual for Civilization. As the first core collection for their planned Library of the Long Now, a 10,000-year archive, this work will overlap deeply with the Vessel project. So, my own timeline for Vessel is in flux. But if you’d like to collaborate, discuss ways of applying Xrisk mitigation to your own work, or help accelerate these efforts, please get in touch. You can register for updates on the Vessel project at vessel.cc.launchrock.com.

And you can ask questions in the comments; Nick and I will both do our best to answer.

Our discussion of Xrisk continues tomorrow with Nick Nielsen.

——-

[1] 100 Year Starship. 2011. “The 100-Year Starship Study: Strategy Planning Workshop Synthesis & Discussions” (http://100yearstarshipstudy.com/100YSS_January_Synopsis.pdf). Accessed August 2012.

[2] Bostrom, N. “Existential Risk Prevention as Global Priority” (http://www.existential-risk.org/concept.pdf). Accessed August 2013.

[3] Sagan, C. Pale Blue Dot: A Vision of the Human Future in Space (Random House, 1997).

[4] Benford, G. “Saving the library of life,” Proceedings of the National Academy of Sciences 89, 11098-11101 (1992).

1. If we use the “Cambrian Explosion” as the starting point for advanced multicellular life on Earth, it hasn’t even been going for 1 bn years. Projecting forwards a billion years is a very long view.

2. Arks/Vessels to reseed life and civilization is a wonderfully romantic idea. But consider: should the existential risk be the total sterilization of Earth, how long would it take to recreate a viable biosphere? Would the Vessel even have all the necessary life forms required, e.g. all the necessary bacterial life? How would you add multicellular life to a planet that you had “cooked” with bacteria for, say, 100k years? You cannot easily reboot higher animals, as they need their own continuity of parentage to survive.

3. When expert systems were all the rage, it was found that many aspects of doing a task couldn’t be captured. Recipes will give us a clue, but how would you add the knowledge to be able to bootstrap directly? Civilization today has so many interlocking parts. Is it possible to reboot to where we are today?

4. What if the dinosaurs had had a Vessel program? Would that have resulted in a better future outcome, or facilitated the zombie or vampire risk?

What about just the Roman Empire doing the same?

Rather than these dormant, static arks, I think our best bet is to start vibrant new extraterrestrial civilizations that will be quite able to continue if our main terrestrial egg basket breaks. As for keeping extraterrestrial ecosystems viable, I liked Kim Stanley Robinson’s ideas in “2312”, if that is possible to do.

The Vessel idea seems to have many parallels to the “Concent” described in Neal Stephenson’s book Anathem. These parallels are in both execution (housing libraries and researchers) and rationale (mitigating existential, or at least global risks). Another parallel is that Mr. Stephenson was inspired by The Long Now projects.

The plausible risks are obvious: space rocks, and self-inflicted harm to the biosphere resulting in societal collapse or even extinction.

This view of future risk does not take into account the great potential of advanced machines and automation to do damage. I am not talking about some damn Terminator. I am referring to cultural damage.

Automation can bring (and is bringing) a world where material goods are getting easier to manufacture. Once you have a post-scarcity world due to automation, unless you have a ruthless eugenics pogrom, most people will have nothing to do. In a world where only 5% do meaningful work, what will become of those not so gifted? You could encourage these people to live their lives in immersive entertainment media, which would lead them away from real interaction with others; over time the size of the population would shrink due to low birth rates.

In the near term you would have a very intelligent substratum of human beings, and in theory they could create vast machine AIs. But there would not be a balance. Since the struggle for existence is gone, and the theories and scientific insight of man are bested by machines, the actual cultural progress of man would slow to a halt. I would not consider a population of several thousand super-geniuses, kidded along about how necessary they were by a bunch of hyper-intelligent machine minds, a cultural pinnacle. I would consider such a result an extinction, and avoiding such a fate much more difficult than stopping a space rock from hitting us, and about as difficult as stopping self-inflicted damage to Earth’s biosphere.

Alex, thanks – Have a moment now and more this eve; appreciate the thoughts.

1 – Agreed. This is a framework meant for near term effort, with potentially long term implications by virtue of potential (unforeseeable) future advancements. Any bridge to the far future passes through the ‘today’ and ‘tomorrow’.

2 – The intent is far less romantic than may seem at a glance, though to me it is at least inspiring. The hope behind developing an open framework is that myriad approaches can be attempted, and that some of those might endure. We can’t know what will confer a selective advantage in an unforeseen crisis, but if many variant attempts are made, something might.

Regarding your particular thoughts, the Dyson speech mentioned above, as well as the original Library of Life proposal, both may be of interest. Benford’s approach originally specified the large-scale in-situ sampling and freezing of complex life, with all relationships and coarser / complex structures intact. Though the charisma of megafauna makes this a hard sell, I wonder today about the prospects for smaller-scale core sampling of remaining coral reefs. I’m searching for signs of such efforts atm.

So, in other words, we don’t yet know the answers to these questions, and until genuine efforts are undertaken to the best of our abilities, we won’t.

3 – This is a great question. Assuming the ability of a discoverer to decode connected ideas the way we do, techniques such as the Long Now’s Rosetta Disk point to a broad design strategy. http://rosettaproject.org/ This strategy is echoed in the basic Vessel progression of outer to inner to innermost facilities. One makes one’s way to the goods as one gains the tools and knowledge needed to grapple with the next discovery. To me, Lucy West’s ‘Origins’ image (first in article above) feels like a kind of template for long-spanning murals which map a progression from one challenge to the next. In this regard, see also the last page of Goldman and Birney’s approach to DNA data storage as cited above: http://www.bbc.com/future/story/20130724-saving-civilisation-in-one-room/4

This idea of progressive discovery layers is a deep interface design idea, and to me distinguishes their work from Church et al.’s similar approach. As I work on a survey of existing approaches, I hope to interview them on this idea for a future installment here.

4 – All true; still I come back to this. By definition, we are the stewards (witting or unwitting, able or bumbling) of the sample-of-one of life that we’re aware of. Once one realizes that, it either has an impact or doesn’t. If it does, then the next step is to start thinking seriously about how to live up to that role to the best of our ability. If it doesn’t, one can always move on to more pressing personal matters. We can’t know whether our actions will have positive or negative impacts, and we have all seen enough time-travel sci-fi to fret a bit on that. But we can control our intentions to a degree. At the very, very least, whether or not there are any more tigers in the galaxy, there are almost certainly no more Bengal tigers, because there won’t be another identical Bengal. (In this galaxy, mind you. :) ) So, we do our best with what we’ve got the moment we realize we’ve got it. My approach, anyhow.

I don’t see it as having to be an either-or proposition. Even a wildly different future civilization would probably do well to have something(s) like archives, galleries, museums, libraries, collections, as well as research labs and public exhibits introducing its children to wonder and awe well before introducing them to a concept like Xrisk. On that basis alone, I’m relieved to be focused on one subset of a far larger effort. It also turns out that this particular function gives us an interesting reason to look at the design of focused, localized facilities (even if in many versions) during a time when the cloud reigns supreme. And that’s a bit fun to boot.

Thanks for giving this such thought,

– Heath

The Fermi paradox, at least in its usual formulation, proposes some disturbing possibilities for explaining the Great Silence. One of these possibilities is that there is something really scary out there that makes intelligent civilizations become quiet, or that those that aren’t quiet eventually disappear.

Frankly, I don’t take this conclusion too seriously; there is still a very good chance that either 1) our observations do not have enough sensitivity and/or are looking at the wrong places or patterns, or 2) ETI in the Milky Way communicate mostly by channels we don’t observe. But let’s play devil’s advocate for a moment, allow ourselves to be a bit dramatic, and see what the consequences are of the idea that there is something so dangerous that ETI do not want to communicate, or go to great lengths to hide their existence.

One of the main motivations to spread into space is that, by spreading ourselves, we are also spreading our existential risk across several baskets. But bear with me for one disturbing (albeit far-out) possibility. Let’s imagine the worst-case scenario for a ‘scary’ alien. I think this scenario is an alien (either synthetic or biological) that, upon detecting another space-faring species, proceeds to capture them, reverse-engineer their technology, and find out whether they communicate with other colonies. If they do, it proceeds to ‘hijack’ the victim’s identity in the interstellar network and, possibly, access all kinds of sensitive information (star systems, technology, resources, the colonies’ precise locations, etc.) that could endanger the whole galaxy-wide civilisation.

What I think is interesting (and disturbing) about this possibility is that, even if it seems far-fetched and out of an SF novel, it counters the notion that we are automatically safe by spreading through the galaxy. In this case, if we keep bidirectional communications with all colonies, and sensitive information is shared openly, this might increase existential risk.

A few things to think about.

Also worth making clear that this is a modular approach, and not limited to space-based instances. Coherent facilities on Earth would serve to prototype as well as mitigate against shorter-term risks of Permanent Stagnation and Flawed Realization. Wasn’t sure how clear this was in my reply.

This sort of thing got going with Marshall T. Savage in 1994…whom everyone loves to hate….Nevertheless it is done with such fabulous effort and genius it will eventually attract many well-connected political heads….I’m bookmarking this one, and the next….JDS

Dean Kavalkovich writes:

Consider this a strong endorsement and recommendation for Anathem, one of the most supple and ingenious depictions of a human future that I’ve ever encountered. Marvelous book.

@Dean – Indeed; Stephenson is a tremendous creator, and he’s thought of an admirable swath of this stuff before. At Starship Congress, I found myself recommending both Snow Crash and The Diamond Age to describe an approach to placing AI at the interface between seeker and information (the Librarian, and the Primer). I’m over on Project Hieroglyph, and suppose I really should update the forums there about this column. http://hieroglyph.asu.edu/ So there’s another parallel. I tend to take synchronicity as a good sign, that scattered sources are circling the same problem space, reducing the blind spots.

I’d actually love to interview Stephenson on the recurring theme of information interface and archival in his work; perhaps if the chance arises in a future column.

RE: CharlesJQuarra

This is an interesting scenario, and the problem of the Fermi Paradox and the Great Filter came up several times at the Icarus Interstellar Starship Congress, though no one provided your particular scenario of increased existential risk due to increased presence and therefore increased visibility in the galaxy.

Such a “scary” alien as you describe would itself be vulnerable to the same existential risk, and if it were so far in advance of us as to no longer constitute a peer civilization then we would be utterly at the alien’s mercy with or without the ruse you describe.

Given the shared existential risk of peer civilizations, and all other things being equal (which is what makes for peers), the survival of earth-originating life still seems to me far more likely in the event of galactic dispersal as opposed to “hiding out” on our homeworld. The more we disperse, the more likely one or more centers of human civilization would be overlooked by a “scary” alien.

@Rob – The train of thought you’re pursuing will actually be touched upon tomorrow by Nick Nielsen in his installment, and has to do with the fact that one person’s transhuman utopia is another’s Flawed Realization. This is a difficult question, and Nielsen (in my experience) is very good at refusing to lose sight of it as he explores other angles, which I think you’ll find in future installments.

It is possible, though not given, that if post-scarcity ever becomes a reality, new strata of risk and reward will be uncovered, and that in any case this outcome trumps base extinction simply because the universe gets the chance to continue perceiving itself in that case. Nick actually ends up circling this possibility by the end of tomorrow’s piece.

As a side note and segue, there is one novel answer to the Fermi Paradox that I know of which has to do with this outcome, filled with its risks and opportunities. This comes from a fellow who is clearly an unabashed transhumanist, but whose sense of optimism I confess to find engaging, even if I’m not as confident as he is on the details.

That fellow is John M. Smart, and the Fermi-answer in question is what he calls the Transcension Hypothesis. His paper on the topic suggests the possibility that tool-making and language-wielding civilizations reach a stage where they begin to miniaturize, and to miniaturize some more, and thus become invisible to others. He answers my obvious question (“But aren’t all your eggs still in one basket?”) by pointing out that microbial life seems able to survive all but the most sterilizing of catastrophes; and so, why not nanomicrobial life? It’s certainly worth a (long) skim. http://accelerating.org/articles/transcensionhypothesis.html

@Charles – It’s great to see you here. I have your card and was meaning to get in touch to continue our conversations from SC2013. And here we are!

The Fermi Paradox probably needs its own post, and it strikes me that it’d be great to do a global survey of answers to Fermi just as I’m planning a global survey of very long term archival methodologies. The only book I know of that attempts to catalog answers to Fermi exhaustively is ‘Where Is Everybody?’ by Stephen Webb (2002). I’d love to hear of others. http://www.amazon.com/Universe-Teeming-Aliens-WHERE-EVERYBODY/dp/0387955011

I haven’t looked closely, but the answer you suggest may well not be in there, and would fall into Bostrom’s category of ‘Subsequent Ruination’, not covered in my intro but touched upon by Nick Nielsen tomorrow. Bostrom defines it thus: “Humanity reaches technological maturity with a ‘good’ (in the sense of being not dismally and irremediably flawed) initial setup, yet subsequent developments nonetheless lead to the permanent ruination of our prospects.” (http://www.existential-risk.org/concept.pdf)

Your answer bears similarity to one of the few other examples of Subsequent Ruination I am aware of, and that is the plotline of the game Mass Effect, in which “a hugely advanced and ancient race of artificially intelligent machines ‘harvests’ all sentient, space-faring life in the Milky Way every 50,000 years. These machines otherwise lie dormant out in the depths of intergalactic space. They have constructed and positioned an ingenious web of technological devices (including the Mass Effect relays, providing rapid interstellar travel) and habitats within the Galaxy that effectively sieve through the rising civilizations, helping the successful flourish and multiply, ripening them up for eventual culling. The reason for this? Well, the plot is complex and somewhat ambiguous, but one thing that these machines do is use the genetic slurry of millions, billions of individuals from a species to create new versions of themselves.” http://blogs.scientificamerican.com/life-unbounded/2012/03/15/mass-effect-solves-the-fermi-paradox/

One nice thing about having terms such as Flawed Realization or Subsequent Ruination (even if cumbersome) is that they give us handholds and filters with which to see clearly and classify possibilities we’d previously lumped together.

Throughout the Congress, several other novel answers to Fermi arose. Another in the Flawed Realization bucket was Ken Roy’s (Day 3) nearly offhand thought that if gamma ray bursts are frequent enough, then galaxies may be self-sterilizing (!). Another came up on Day 4, during Jim Benford’s METI session, when I suggested in Q&A that a species wishing to hide itself from the galaxy might deliberately mask or hide any regular METI signals by using a precisely calibrated counter-signal. Perhaps to hide from the kind of civilization you describe, Charles! Sonny White, Pat Galea, Nick Nielsen and I are still batting this around; it too would make for an interesting future post.

But it did suggest a tantalizing thought, which was that the Great Filter might involve some sort of “ethical leap”. If silence is the right answer, for some reason, then silence could be enforced through technological ability to mask or drown out any METI attempts in the vicinity.

http://www.youtube.com/watch?list=UUjJdNqYgYW7WL5U7oEtJRZA&v=8Ye1CI5wWhc&feature=player_detailpage#t=11059

Interesting pop cultural references. IMO, any human civilization, to a first approximation, consists of a massive herd of brainwashed zombies being ruled and preyed upon by a small cabal of cunning and merciless vampires. That is not only a possible future (e.g. H. G. Wells, The Time Machine), but our past and present as well. How much of this is worth preserving for the ages is a matter of opinion. Does the NSA get to install backdoors in the Vessels?

I find much in Heinlein to agree with, particularly the concept that human freedom and the fulfillment of human potential are only possible on a frontier where the caste systems and control mechanisms are not well established yet. Oh well, I am so happy that Kate and William had a baby boy George to reign over billions of other people circa 2100. Cheers to GCHQ!

Heath, if you wish to develop Fermi’s question into a paradox, then you must have some definite evidence concerning when life first appeared in the universe. Paul Davies emphasises in his recent “The Eerie Silence” that we have no idea when, where or how this event happened. Until we have more solid information, there is no paradox, merely speculation.

Stephen

Oxford, UK

Regarding the main topic of this thread: I find it curious that people have so much to say about existential risk when in fact human civilisation is more secure than it has been for a long time. The world is better connected, we have greater understanding of our situation and more powerful technologies than ever before. We have survived the Cold War with no nuclear holocaust, and no accidental release of a deadly virus. We have positive information that Earth has been pleasantly habitable for several hundred million years, and even the current interglacial seems to have thousands of years yet to run. We have the imminent prospect of spreading around the Solar System, breaking our reliance on a single biosphere. Peak fossil fuel and disastrous climate change have been put on indefinite hold. The asteroid impact threat is well understood and our abilities to avert it improving all the time. The world continues to become a more peaceful and prosperous place.

Perhaps it is a symptom of the fact that people have so much choice and so much free time that they now worry about existential risks, which would have been a more appropriate concern for early medieval Europe. So we manufacture science fiction risks, such as the fear of “hyper-intelligent machine minds” referred to above (nobody has ever built an intelligent machine, nor do they have a clear idea of how to do so), or the fear that Earth could be destroyed by a rogue neutron star (subject of a recent “documentary” which has been posted on YouTube).

Fear, however, is not a good basis for action.

Stephen

Some of the themes touched upon remind me of elements of “A Canticle for Leibowitz.” SPOILERS ahead; yes, some have not read it.

Even if you preserved the knowledge, many might be hostile to the possession of such knowledge, and might persecute those who are literate and seek to destroy such knowledge wherever it exists. Worst of all, the vices and frailties of those who rule do not preclude a recurring cycle of ruin, long rediscovery, and technological civilization.

And I think one of the SF authors has stated that we have used up (or will have used up) the most easily obtainable energy and material resources, and that if there is a fall, a second civilization arising afterward will be much more constrained and slower to develop.

@Joy – Sounds like there’s one last novel premise to end all novel premises there (Vampire Master Zombie Overlords). In all seriousness, I actually did remove a slide from the session; the subject came up in the Q&A when Charles Quarra above asked for clarification on Flawed Realization. Permanent Stagnation, having been clear and present in nearly every post-apocalyptic work of fiction since the dawn of dirt, was easy enough to understand. But Flawed Realization can lie in a blind spot, for reasons that could make a good future post. The missing slide was a visualization of the broad-brush tropes in Elysium, which had just hit the screen. I’d seen it, and removed the slide because the comparison seemed overwrought and the movie (imo) shed little light and too much heat on a very difficult subject. Yet it remains the first case I know of where Permanent Stagnation and Flawed Realization are right there, well visualized, I do grant, in a single work of pop culture. http://www.slideshare.net/heathrezabek/xrisk-101-the-missing-slide-elysium

I do think Flawed Realization of this sort is an avoidable pitfall. It’s humbling to realize that if you look at the stock of world folktales, myths, and legends, or at premodern tales, Flawed Realization really was the risk to beat. King Midas, Gilgamesh, The Sorcerer’s Apprentice… The Picture of Dorian Gray… That we have to work hard to see it these days is worth noting.

As for what’s worth keeping, I’d say to beware of devaluing life unnecessarily; whatever sentient life may be, at the least it’s not ubiquitous, and it seems capable of self-analysis and self-preservation, so why not preserve it? We have no idea how rare it is. A related caveat is that there’s a core contradiction in musing that humanity may not be worth saving while at the same time continuing to value one’s parents, friends, siblings, mentors, heroes… Each one is an individual among those 10^52 potential past and future lives. Nihilism is too easy a dodge, imo.

Frontier freedom: This is a really good point, and I think this lens for viewing a critical path between Permanent Stagnation and Flawed Realization is a strong one. I’d be interested to know your thoughts on a couple of pieces by Robin Hanson, the economist who articulated the concept of the Great Filter, which center on scenarios of far-future frontier patterns for expansive life:

The Rapacious Hardscrapple Frontier — http://hanson.gmu.edu/hardscra.pdf

Burning the Cosmic Commons — http://hanson.gmu.edu/filluniv.pdf

@Astronist / Stephen – Re: Fermi — You’re right, of course, and this question has a long tail. I will say that one of the most intriguing stabs at this issue I’ve encountered recently is the thought-experiment of mapping genomic complexity to the curve of Moore’s Law, with startling results. http://phys.org/news/2013-04-law-life-began-earth.html // http://arxiv.org/abs/1304.3381
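To make the idea concrete, here is a minimal Python sketch of that kind of back-extrapolation: fit a straight line to log2(genomic complexity) over time and run it backward to a complexity of one unit. The function name, the data points, and the 100-million-year doubling time are hypothetical illustrations, not figures from the linked paper. The key assumption, a single constant doubling rate across all of evolutionary history, is exactly the point critics of the approach dispute.

```python
import math
from typing import Sequence, Tuple


def extrapolate_origin(points: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Fit log2(complexity) against time with least squares, then extrapolate
    back to the time when complexity would have been 1 unit.

    points: (years_ago, complexity) pairs; complexity is in arbitrary,
    hypothetical units and must be positive.
    Returns (estimated_origin_years_ago, doubling_time_years).
    """
    if len(points) < 2:
        raise ValueError("need at least two data points")
    # Time axis: the present is 0 and the past is negative.
    xs = [-years_ago for years_ago, _ in points]
    # Exponential growth becomes a straight line on a log2 scale.
    ys = [math.log2(complexity) for _, complexity in points]
    n = len(points)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("data points must span more than one time")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                     # doublings per year
    if slope <= 0:
        raise ValueError("complexity must grow over time to extrapolate an origin")
    intercept = mean_y - slope * mean_x   # fitted log2(complexity) today
    origin_time = -intercept / slope      # time at which fitted log2(complexity) == 0
    return -origin_time, 1.0 / slope


# Hypothetical points generated from a 100-million-year doubling time.
origin, doubling = extrapolate_origin([(0.0, 1024.0), (2e8, 256.0), (5e8, 32.0)])
print(f"origin ~{origin:.3g} years ago, doubling time ~{doubling:.3g} years")
```

A longer fitted doubling time or a larger present-day complexity pushes the extrapolated origin further back; the result only says something about the origin of life if the single-exponential assumption holds.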

Re: The resilience and current capability of humanity — Maybe it’s because we finally can envision avoiding eventual oblivion that we’re reaching for that ring. I think of it as akin to the person who doesn’t write their will until they have a child. Gaining an order of magnitude has a way of hinting at potential orders of magnitude to come, for the patient and steadfast.

I’d also say that there’s a big difference between oblivion and setback, and that once Earth-originating life is truly and soundly spread to, say, the asteroid belt, and is thoroughly beyond the risk of oblivion, it’ll be a lot easier for some to calm their urgency for a while. Unless (as Nielsen suggests today) new vistas of challenge and opportunity have emerged, as we hope they will.

@Astronist

Did I miss the memo?

@Heath Rezabek Re: “Researchers use Moore’s Law to calculate that life began before Earth existed”

I recall seeing someone else bring this up on one of the panel discussions. This is a very controversial claim, e.g. see this paper by Sharov with the reviewers’ comments:

http://www.biologydirect.com/content/1/1/17

In essence, there is some argument about cherry picking data and also whether a single model applies across evolutionary time.

Intriguingly, Benner has just suggested that Mars may have been the origin of Earth life. http://www.bbc.co.uk/news/science-environment-23872765

@Alex – Definitely controversial; I just find it interesting. Whatever the reality, I personally don’t find the fact that we can’t pinpoint the origin of life a compelling reason to disregard Fermi’s question (as Astronist seemed to be suggesting).

Heath, I am not suggesting you ignore Fermi’s question. Merely pointing out that a recent origin of life (in space, not on Earth) is perfectly plausible, and that until we have some convincing evidence that life is ancient (greater than say 5 bn years old), the most parsimonious explanation of why we don’t see any alien civilisations within the range detectable by present-day instruments and search strategies is that there aren’t any.

Stephen

To continue my previous comment: why do I insist on the scientific procedure of adopting the most parsimonious explanation that fits the currently known facts? Because people seem to have an urge to believe in aliens, and given half a chance will fantasise endlessly about them. Take a look at the video on this page:

http://www.discovery-enterprise.com/2013/09/aliens-definitive-guide.html

Although there are interviews with big-name scientists like Michio Kaku, Charles Cockell, Chris McKay, Paul Davies and others, they do not prevent, but rather even encourage, the presenter spinning the wildest speculations into confident assertions of established fact, just so long as they match the standard Hollywood “war of the worlds” paradigm of “Independence Day”, “V”, and so on. This, remember, at a time when nobody has positively identified as much as a single extraterrestrial microbe!

Stephen

Alex, Heath: Calling this piece of work “controversial” is exceedingly generous and way off the mark, IMHO. I suggest instead: “Not even wrong”.

Astronist:

That would be, like, 50 years survived out of a billion yet to come. This particular risk, IMHO, has lost none of its teeth.

Maybe the end of any civilization is the moment it invents the pocket-sized, easy-to-assemble hydrogen bomb. How is that for a Fermi solution?

Eniac,

Do remember that a pocket sized, easy to assemble hydrogen bomb would allow for the easy manufacture of Orion starships. You wouldn’t even need high tech to colonise the solar system if you had such weaponry in the hands of the common person. Granted, several dozen major cities might be flattened, but that just serves as an incentive to get off the homeworld…

Inverse Kzinti lesson?

Terraformer: The pocket sized, easy to assemble hydrogen bomb would solve just one of many hard problems in building a starship. It is quite possible that every city on Earth will be flattened before that happens, at which point it would probably be too late….

I’m holding out for the serendipitous invention of multiple, parallel, interdependent technologies during the same timeframe. A number of them I see as possibly needed for a Vessel habitat; what also remains are the methods of getting them established off-Earth.

For me, the first thing that comes to mind is that the Vessels themselves may become tempting targets for antitechnology groups or even cultures. As the products of largely Western, scientific, post-Enlightenment worldviews, they could serve as highly visible symbols that attract fanatics of many stripes. One does not have to look very far to see the signs of Permanent Stagnation around us even today. There are large segments of the human population that see the ideal human society as a 7th-century Caliphate. (Ironically, this antitechnological worldview sees no disconnect between living by ancient sacred texts and widespread use of cellphones.) And lest I seem to be singling out particular ideologies, I bring to your attention the factions in this country that ferociously, and in some cases successfully, attempt to block the teaching of evolution and forestall any efforts to manage climate change. Depressing as it may seem, these discordant views of the future may represent the forces that not only make Permanent Stagnation more imminent, but are also precisely the memes that prevent Vessels from being built.

http://arxiv.org/abs/1310.2961

Towards Gigayear Storage Using a Silicon-Nitride/Tungsten Based Medium

Jeroen de Vries, Dimitri Schellenberg, Leon Abelmann, Andreas Manz, Miko Elwenspoek

(Submitted on 9 Oct 2013)

Current digital data storage systems are able to store huge amounts of data. Even though the data density of digital information storage has increased tremendously over the last few decades, the data longevity is limited to only a few decades.

If we want to preserve anything about the human race which can outlast the human race itself, we require a data storage medium designed to last for 1 million to 1 billion years.

In this paper a medium is investigated consisting of tungsten encapsulated by silicon nitride which, according to elevated temperature tests, will last for well over the suggested time.

Comments: 19 pages, 11 figures

Subjects: Emerging Technologies (cs.ET); Popular Physics (physics.pop-ph); Physics and Society (physics.soc-ph)

Cite as: arXiv:1310.2961 [cs.ET]

(or arXiv:1310.2961v1 [cs.ET] for this version)

Submission history

From: Jeroen de Vries

[v1] Wed, 9 Oct 2013 09:23:19 GMT (5920 kb)

http://arxiv.org/pdf/1310.2961v1.pdf
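Elevated-temperature tests like the ones the abstract mentions are commonly read through Arrhenius extrapolation: if degradation is a thermally activated process with a single activation energy, a lifetime observed at a high temperature can be scaled to a lifetime at room temperature. The Python sketch below assumes exactly that; the activation energy, temperatures and test duration are hypothetical, and it is not claimed to be the model used in the de Vries et al. paper.

```python
import math

# Boltzmann constant in electronvolts per kelvin.
BOLTZMANN_EV_PER_K = 8.617333262e-5


def acceleration_factor(activation_energy_ev: float, t_use_k: float, t_test_k: float) -> float:
    """Arrhenius acceleration factor: how much faster a thermally activated
    degradation process runs at t_test_k than at t_use_k (temperatures in kelvin)."""
    exponent = (activation_energy_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_use_k - 1.0 / t_test_k)
    return math.exp(exponent)


def projected_lifetime(test_lifetime: float, activation_energy_ev: float,
                       t_use_k: float, t_test_k: float) -> float:
    """Scale a lifetime observed (or bounded) at an elevated test temperature
    to the expected lifetime at the use temperature, in the same time units."""
    return test_lifetime * acceleration_factor(activation_energy_ev, t_use_k, t_test_k)


# Hypothetical numbers: data survives 1 hour at 473 K (200 C); assumed
# activation energy of 1.5 eV; storage at 298 K (25 C).
hours_at_room_temp = projected_lifetime(1.0, 1.5, 298.0, 473.0)
print(f"~{hours_at_room_temp / (24 * 365.25):.3g} years at room temperature")
```

Because the activation energy sits in an exponent, the projection is very sensitive to it, which is one way to read the point that a low energy barrier (as in the magnetic grains of ordinary hard disks) means a short retention time.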

ljk, thanks for that excellent resource. I’ve added it to the survey of technologies and approaches I’m undertaking. Appreciated, -h

Researchers Develop Million-Year Data Storage Disk

October 23, 2013 by Staff

Using a wafer consisting of tungsten encapsulated by silicon nitride, scientists have developed a disk that can store data for a million years or more.

Mankind has been storing information for thousands of years. From carvings on marble to today’s magnetic data storage. Although the amount of data that can be stored has increased immensely during the past few decades, it is still difficult to actually store data for a long period. The key to successful information storage is to ensure that the information does not get lost.

If we want to store information that will exist longer than mankind itself, then different requirements apply than those for a medium for daily information storage. Researcher Jeroen de Vries from the University of Twente MESA+ Institute for Nanotechnology demonstrates that it is possible to store data for extremely long periods. He will be awarded his doctorate on 17 October.

Current hard disk drives have the ability to store vast amounts of data but last roughly ten years at room temperature, because their magnetic energy barrier is low so that the information is lost after a period of time. CDs, DVDs, paper, tape, clay and tablets and stone also have a limited life. Alternatives will have to be sought if information is to be retained longer.

Archival storage for up to one billion years

It is possible to conceive of a number of scenarios why we wish to store information for a long time. “One scenario is that a disaster has devastated the earth and society must rebuild the world. Another scenario could be that we create a kind of legacy for future intelligent life that evolves on Earth or comes from other worlds. You must then think about archival storage of between one million and one billion years,” according to researcher De Vries.

Full article here:

http://scitechdaily.com/researchers-develop-million-year-data-storage-disk/

The totality of these discussions appears to point to humanity being the pre-eminent focus in the vast universe we inhabit. The energies that focus on how to preserve life’s legacies may be better applied to solving our societal dysfunctions. The theological and political egocentricities that currently thrive on Earth make this ideal rather far-reaching. To have our biological heritage and scientific breakthroughs housed in a repository of any design is wonderful talk, but how useful is it in the scheme of a universe where we exist as a mere speck? Ideas and deep thought are useful as we project our existence forward and develop sound philosophies and technologies to provide open-ended development on our planet that enhances life’s diversity. Maybe, when humanity makes these strides, we may develop sound technological advancement to exist in environments outside of this blue dot.

I’m re-reading old comments in preparation for new work on the fiction arc, and just noted this last one from February.

I can only say I’m somewhat sympathetic to this view, and continue to develop the Open Vessel Framework in the hope that such facilities could be of use to present-day society in its struggle to overcome shortsightedness and endure hardships (self-created or otherwise) through time.

Yes; we have to earn it. Part of earning it, maybe, is trying.