I first ran across David Messerschmitt’s work in his paper “Interstellar Communication: The Case for Spread Spectrum,” and was delighted to meet him in person at Starship Congress in Dallas last summer. Dr. Messerschmitt has been working on communications methods designed for interstellar distances for some time now, with results that are changing the paradigm for how such signals would be transmitted, and hence what SETI scientists should be looking for. At the SETI Institute he is proposing the expansion of the types of signals being searched for in the new Allen Telescope Array. His rich discussion on these matters follows.

By way of background, Messerschmitt is the Roger A. Strauch Professor Emeritus of Electrical Engineering and Computer Sciences at the University of California at Berkeley. For the past five years he has collaborated with the SETI Institute and other SETI researchers in the study of the new domain of “broadband SETI”, hoping to influence the direction of SETI observation programs as well as future METI transmission efforts. He is the co-author of Software Ecosystem: Understanding an Indispensable Technology and Industry (MIT Press, 2003), author of Understanding Networked Applications (Morgan-Kaufmann, 1999), and co-author of a widely used textbook Digital Communications (Kluwer, 1993). Prior to 1977 he was with AT&T Bell Laboratories as a researcher in digital communications. He is a Fellow of the IEEE, a Member of the National Academy of Engineering, and a recipient of the IEEE Alexander Graham Bell Medal recognizing “exceptional contributions to the advancement of communication sciences and engineering.”

by David G. Messerschmitt

We all know that generating sufficient energy is a key to interstellar travel. Could energy also be a key to successful interstellar communication?

One manifestation of the Fermi paradox is our lack of success in detecting artificial signals originating outside our solar system, despite five decades of SETI observations at radio wavelengths. This could be because our search is incomplete, or because such signals do not exist, or because we haven’t looked for the right kind of signal. Here we explore the third possibility.

A small (but enthusiastic and growing) cadre of researchers is proposing that energy may be the key to unlocking new signal structures more appropriate for interstellar communication, yet not visible to current and past searches. Terrestrial communication may be a poor example for interstellar communication, because it emphasizes minimization of bandwidth at the expense of greater radiated energy. This prioritization is due to an artificial scarcity of spectrum created by regulatory authorities, who divide the spectrum among various uses. If interstellar communication were to reverse these priorities, then the resulting signals would be very different from the familiar signals we have been searching for.

Starships vs. civilizations

There are two distinct applications of interstellar communication: communication with starships and communication with extraterrestrial civilizations. These two applications invoke very different requirements, and thus should be addressed independently.

Starship communication. Starship communication will be two-way, and the two ends can be designed as a unit. We will communicate control information to a starship, and return performance parameters and scientific data. Effectiveness in the control function is enhanced if the round-trip delay is minimized. The only parameter of this round-trip delay over which we have influence is the time it takes to transmit and receive each message, and our only handle to reduce this is a higher information rate. High information rates also allow more scientific information to be collected and returned to Earth. The accuracy of control and the integrity of scientific data demand reliability, or a low error rate.

Communication with a civilization. In our preliminary phase where we are not even sure other civilizations exist, communication with a civilization (or they with us) will be one way, and the transmitter and receiver must be designed independently. This lack of coordination in design is a difficult challenge. It also implies that discovery of the signal by a receiver, absent any prior information about its structure, is a critical issue.

We (or they) are likely to carefully compose a message revealing something about our (or their) culture and state of knowledge. Composition of such a message should be a careful deliberative process, and changes to that message will probably occur infrequently, on timeframes of years or decades. Because we (or they) don’t know when and where such a message will be received, we (or they) are forced to transmit the message repeatedly. In this case, reliable reception (low error rate) for each instance of the message need not be a requirement because the receiving civilization can monitor multiple repetitions and stitch them together over time to recover a reliable rendition. In one-way communication, there is no possibility of eliminating errors entirely, but very low rates of error can be achieved. For example, if an average of one out of a thousand bits is in error for a single reception, then after observing and combining five replicas of a message only about one bit in 100 megabits will still be in error; with seven replicas, only about one bit in 28 gigabits.
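The replica figures above can be checked with a short sketch. It assumes that the receiver combines an odd number of replicas by a bitwise majority vote and that bit errors are independent from one reception to the next; the article does not say which combining rule is meant, so both are assumptions, and the function name is purely illustrative.

```python
from math import comb

def majority_error_rate(p: float, replicas: int) -> float:
    """Probability that a bitwise majority vote over `replicas` copies is wrong.

    Assumes independent bit errors with probability p per copy and an odd
    number of copies (assumptions of this sketch, not taken from the article).
    """
    needed = replicas // 2 + 1  # wrong copies needed to outvote the correct ones
    return sum(
        comb(replicas, k) * p**k * (1 - p) ** (replicas - k)
        for k in range(needed, replicas + 1)
    )

for n in (5, 7):
    rate = majority_error_rate(1e-3, n)
    print(f"{n} replicas: about one error per {1 / rate:.3g} bits")
```

Under these assumptions the residual error rate comes out near one bit in 10^8 for five replicas and near one bit in 2.9 × 10^10 for seven, in line with the figures quoted in the text.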

Message transmission time is also not critical. Even after two-way communication is established, transmission time won’t be a big component of the round-trip delay in comparison to large one-way propagation delays. For example, at a rate of one bit per second, we can transmit 40 megabytes of message data per decade, and a decade is not particularly significant in the context of a delay of centuries or millennia required for speed-of-light propagation alone.
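The per-decade figure is simple arithmetic, sketched here for convenience. The sketch assumes a Julian year of 365.25 days and a megabyte of 10^6 bytes; neither convention is stated in the article.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year (assumed convention)

def megabytes_sent(bits_per_second: float, years: float) -> float:
    """Message volume in megabytes (10**6 bytes) sent at a constant bit rate."""
    return bits_per_second * years * SECONDS_PER_YEAR / 8 / 1e6

print(f"{megabytes_sent(1.0, 10):.1f} MB per decade at 1 bit/s")
```

The result is a little under 40 megabytes, matching the rounded value in the text.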

At interstellar distances of hundreds or thousands of light years, there are additional impairments to overcome at radio wavelengths, in the form of interstellar dispersion and scattering due to clouds of partially ionized gases. Fortunately these impairments have been discovered and “reverse engineered” by pulsar astronomers and astrophysicists, so that we can design our signals taking these impairments into account, even though there is no possibility of experimentation.

Propagation losses are proportional to distance-squared, so large antennas and/or large radiated energies are necessary to deliver sufficient signal flux at the receiver. This places energy as a considerable economic factor, manifested either in the cost of massive antennas or in energy utility costs.

The remainder of this article addresses communication with civilizations rather than starships.

Compatibility without coordination

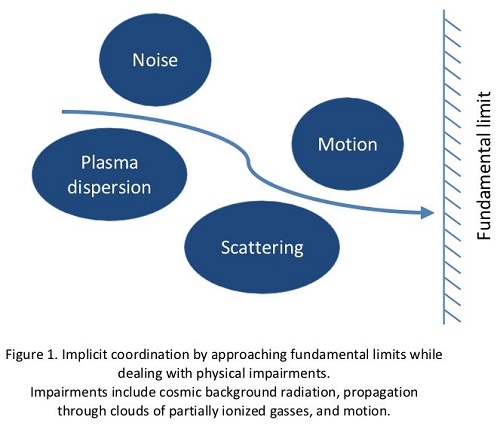

Even though one civilization is designing a transmitter and the other a receiver, the only hope of compatibility is for each to design an end-to-end system. That way, each fully contemplates and accounts for the challenges of the other. Even then there remains a lot of design freedom and a world (and maybe a galaxy) full of clever ideas, with many possibilities. I believe there is no hope of finding common ground unless a) we (and they) keep things very simple, b) we (and they) fall back on fundamental principles, and c) we (and they) base the design on physical characteristics of the medium observable by both of us. This “implicit coordination” strategy is illustrated in Fig. 1. Let’s briefly review all three elements of this three-pronged strategy.

The simplicity argument is perhaps the most interesting. It postulates that complexity is an obstacle to finding common ground in the absence of coordination. Similar to Occam’s razor in philosophy, it can be stated as “the simplest design that meets the needs and requirements of interstellar communication is the best design”. Stated in a negative way, as designers we should avoid any gratuitous requirements that increase the complexity of the solution and fail to produce substantive advantage.

Regarding fundamental principles, thanks to some amazing theorems due to Claude Shannon in 1948, communications is blessed with mathematically provable fundamental limits on our ability to communicate. Those limits, as well as ways of approaching them, depend on the nature of impairments introduced in the physical environment. Since 1948, communications has been dominated by an unceasing effort to approach those fundamental limits, and with good success based on advancing technology and conceptual advances. If both the transmitter and receiver designers seek to approach fundamental limits, they will arrive at similar design principles even as they glean the performance advantages that result.

We also have to presume that other civilizations have observed the interstellar medium, and arrived at similar models of impairments to radio propagation originating there. As we will see, both the energy requirements and interstellar impairments are helpful, because they drastically narrow the characteristics of signals that make sense.

Prioritizing energy simplifies the design

Ordinarily it is notoriously difficult and complex to approach the Shannon limit, and that complexity would be the enemy of uncoordinated design. However, if we ask “limit with respect to what?”, there are two resources that govern the information rate that can be achieved and the reliability with which that information can be extracted from the signal. These are the bandwidth which is occupied by the signal and the “size” of the signal, usually quantified by its energy. Most complexity arises from forcing a limit on bandwidth. If any constraint on bandwidth is avoided, the solution becomes much simpler.

Harry Jones of NASA observed in a paper published in 1995 that there is a large window of microwave frequencies over which the interstellar medium and atmosphere are relatively transparent. Why not, Jones asked, make use of this wide bandwidth, assuming there are other benefits to be gained? In other words, we can argue that any bandwidth constraint is a gratuitous requirement in the context of interstellar communication. Removing that constraint does simplify the design. But another important benefit emphasized by Jones is reducing the signal energy that must be delivered to the receiver. At the altar of Occam’s razor, constraining bandwidth to be narrow causes harm (an increase in required signal energy) with no identifiable advantage. Peter Fridman of the Netherlands Institute for Radio Astronomy recently published a paper following up with a specific end-to-end characterization of the energy requirements using techniques similar to Jones’s proposal.

I would add to Jones’s argument that the information rates are likely to be low, which implies a small bandwidth to start with. For example, starting at one bit per second, the minimum bandwidth is about one Hz. A million-fold increase in bandwidth is still only a megahertz, which is tiny when compared to the available microwave window. Even a billion-fold increase should be quite feasible with our technology.

Why, you may be asking, does increasing bandwidth allow the delivered energy to be smaller? After all, a wide bandwidth allows more total noise into the receiver. The reason has to do with the geometry of higher dimensional Euclidean spaces: permitting more bandwidth allows more degrees of freedom in the signal, and a higher dimensional space has a greater volume in which to position signals farther apart, making them less likely to be confused by noise. I suggest you use this example to motivate your kids to pay better attention in geometry class.

Another requirement that we have argued is gratuitous is high reliability in the extraction of information from the signal. Very low bit error rates can be achieved by error-control coding schemes, but these add considerable complexity and are unnecessary when the receiver has multiple replicas of a message to work with. Moreover, allowing higher error rates further reduces the energy requirement.

The minimum delivered energy

For a message, the absolute minimum energy that must be delivered to the receiver baseband processing while still recovering information from that signal can be inferred from the Shannon limit. The cosmic background noise is the ultimate limiting factor, after all other impairments are eliminated by technological means. In particular the minimum energy must be larger than the product of three factors: (1) the power spectral density of the cosmic background radiation, (2) the number of bits in the message, and (3) the natural logarithm of two.

Even at this lower limit, the energy requirements are substantial. For example, at a carrier frequency of 5 GHz at least eight photons must arrive at the receiver baseband processing for each bit of information. Between two Arecibo antennas with 100% efficiency at 1000 light years, this corresponds to a radiated energy of 0.4 watt-hours for each bit in our message, or 3.7 megawatt-hours per megabyte. To Earthlings today, this would create a utility bill of roughly $400 per megabyte. (This energy and cost scale quadratically with distance.) This doesn’t take into account various non-idealities (like antenna inefficiency, noise in the receiver, etc.) or any gap to the fundamental limit due to using practical modulation techniques. You can increase the energy by an order of magnitude or two for these effects. This energy and cost per message is multiplied by repeated transmission of the message in multiple directions simultaneously (perhaps thousands!), allowing that the transmitter may not know in advance where the message will be monitored. Pretty soon there will be real money involved, at least at our Earthly energy prices.
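The numbers in this example can be roughly reproduced from the three-factor bound. The sketch below is a plausibility check under stated assumptions, not the author's own calculation: it takes the noise power spectral density to be Boltzmann's constant times a 2.725 K background temperature (the Rayleigh-Jeans approximation), models each Arecibo dish as a 305 m circular aperture, uses the Friis free-space formula for the path loss, counts a megabyte as 2^20 bytes, and picks a hypothetical electricity price of $0.11 per kilowatt-hour.

```python
from math import ceil, log, pi

K_BOLTZMANN = 1.380649e-23   # J/K
H_PLANCK = 6.62607015e-34    # J*s
C_LIGHT = 299_792_458.0      # m/s
LIGHT_YEAR = 9.4607e15       # m

# Assumptions of this sketch (not stated in the article):
T_NOISE = 2.725              # K, cosmic background temperature
DISH_DIAMETER = 305.0        # m, Arecibo reflector modeled as a full aperture
PRICE_PER_KWH = 0.11         # USD, hypothetical electricity price
BITS_PER_MEGABYTE = 8 * 2**20

def min_received_energy_per_bit(t_noise: float = T_NOISE) -> float:
    """Noise power spectral density (k*T) times ln 2, in joules per bit."""
    return K_BOLTZMANN * t_noise * log(2)

def path_gain(freq_hz: float, distance_m: float, diameter_m: float) -> float:
    """Friis free-space gain between two identical, 100%-efficient dishes."""
    area = pi * (diameter_m / 2) ** 2
    wavelength = C_LIGHT / freq_hz
    return area * area / (wavelength * distance_m) ** 2

freq = 5e9  # Hz
e_rx = min_received_energy_per_bit()
photons_per_bit = e_rx / (H_PLANCK * freq)
e_tx_joules = e_rx / path_gain(freq, 1000 * LIGHT_YEAR, DISH_DIAMETER)
wh_per_bit = e_tx_joules / 3600
mwh_per_megabyte = wh_per_bit * BITS_PER_MEGABYTE / 1e6
cost_per_megabyte = mwh_per_megabyte * 1000 * PRICE_PER_KWH

print(f"photons per bit: {photons_per_bit:.2f} (at least {ceil(photons_per_bit)})")
print(f"radiated energy: {wh_per_bit:.2f} Wh per bit")
print(f"per megabyte:    {mwh_per_megabyte:.2f} MWh, about ${cost_per_megabyte:.0f}")
```

With these inputs the sketch lands close to the quoted eight photons, 0.4 watt-hours per bit, and 3.7 megawatt-hours per megabyte. Because the path gain falls as the inverse square of distance, doubling the distance quadruples the radiated energy.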

Two aspects of the fundamental limit are worth noting. First, we didn’t mention bandwidth. In fact, the stated fundamental limit assumes that bandwidth is unconstrained. If we do constrain bandwidth and start to reduce it, then the requirement on delivered energy increases, and rapidly at that. Thus both simplicity and minimizing energy consumption or reducing antenna area at the transmitter are aligned with using a large bandwidth in relation to the information rate. Second, this minimum energy per message does not depend on the rate at which the message is transmitted and received. Reducing the transmission time for the message (by increasing the information rate) does not affect the total energy, but does increase the average power correspondingly. Thus there is an economic incentive to slow down the information rate and increase the message transmission time, which should be quite okay.
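How quickly the energy requirement grows once bandwidth is constrained can be illustrated with the textbook Shannon capacity of an additive white Gaussian noise channel. This is a simplified model chosen for the sketch (it ignores scintillation and the other interstellar impairments), and the function name is illustrative. Writing the spectral efficiency as the information rate divided by the bandwidth, the capacity formula implies that the energy per bit, in units of the noise power spectral density, must be at least (2^η − 1)/η.

```python
from math import expm1, log

def min_ebn0(spectral_efficiency: float) -> float:
    """Smallest energy per bit over noise density allowed by Shannon capacity.

    spectral_efficiency is the information rate divided by the bandwidth,
    in bits per second per hertz, and must be positive.
    """
    # expm1(x * ln 2) equals 2**x - 1 but stays accurate for very small x
    return expm1(spectral_efficiency * log(2)) / spectral_efficiency

for eta in (1e-6, 0.1, 1.0, 4.0, 10.0):
    penalty = min_ebn0(eta) / log(2)
    print(f"{eta:g} bit/s/Hz: {penalty:.3g} x the unconstrained minimum")
```

As the spectral efficiency tends to zero (bandwidth large relative to the information rate), the bound tends to the natural logarithm of two, which is the unconstrained limit used above. At 10 bits per second per hertz the same bound is roughly 150 times larger.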

What do energy-limited signals actually look like?

A question of considerable importance is the degree to which we can or cannot infer enough characteristics of a signal to significantly constrain the design space. Combined with Occam’s razor and jointly observable physical effects, the structure of an energy-limited transmitted signal is narrowed considerably.

Based on models of the interstellar medium developed in pulsar astronomy, I have shown that there is an “interstellar coherence hole” consisting of an upper bound on the time duration and bandwidth of a waveform such that the waveform is for all practical purposes unaffected by these impairments. Further, I have shown that structuring a signal around simple on-off patterns of energy, where each “bundle” of energy is based on a waveform that falls within the interstellar coherence hole, does not compromise our ability to approach the fundamental limit. In this fashion, the transmit signal can be designed to completely circumvent impairments, without a compromise in energy. (This is the reason that the fundamental limit stated above is determined by noise, and noise alone.) Both the transmitter and receiver can observe the impairments and thereby arrive at similar estimates of the coherence hole parameters.

The interstellar medium and motion are not completely removed from the picture by this simple trick, because they still announce their presence through scintillation, which is a fluctuation of arriving signal flux similar to the twinkling of the stars (radio engineers call this same phenomenon “fading”). Fortunately we know of ways to counter scintillation without affecting the energy requirement, because it does not affect the average signal flux. The minimum energy required for reliable communication in the presence of noise and fading was established by Robert Kennedy of MIT (a professor sharing a name with a famous politician) in 1964. My recent contribution has been to extend his models and results to the interstellar case.

Signals designed to minimize delivered energy based on these energy bundles have a very different character from what we are accustomed to in terrestrial radio communication. This is an advantage in itself, because another big challenge I haven’t yet mentioned is confusion with artificial signals of terrestrial or near-space origin. This is less of a problem if the signals (local and interstellar) are quite distinctive.

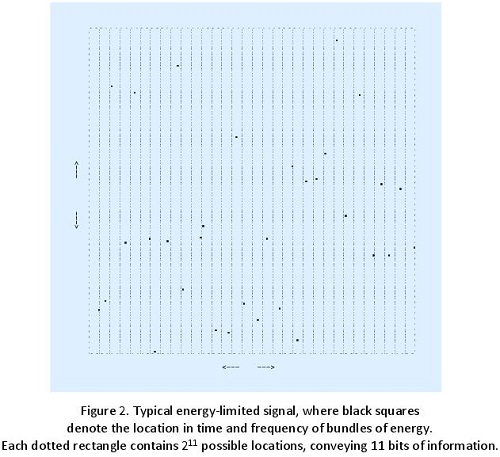

A typical example of an energy-limited signal is illustrated in Fig. 2. The idea behind energy-limited communication is to embed information in the locations of energy bundles, rather than other (energy-wasting but bandwidth-conserving) parameters like magnitude or phase. In the example of Fig. 2, each rectangle includes 2048 locations where an energy bundle might occur (256 frequencies and 8 time locations), but an actual energy bundle arrives in only one of these locations. When the receiver observes this unique location, eleven bits of information have been conveyed from transmitter to receiver (because 2^11 = 2048). This location-based scheme is energy-efficient because a single energy bundle conveys eleven bits.
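The mapping between eleven bits and one location can be sketched as follows. The particular assignment used here (the top three bits select the time slot, the bottom eight select the frequency) is a hypothetical choice for illustration; the article does not specify how bits are assigned to locations.

```python
N_FREQUENCIES = 256
N_TIME_SLOTS = 8
BITS_PER_BUNDLE = 11  # 2**11 == 256 * 8 == 2048 locations

def bits_to_location(bits: str) -> tuple[int, int]:
    """Map an 11-character bit string to (time_slot, frequency) indices."""
    if len(bits) != BITS_PER_BUNDLE or set(bits) - {"0", "1"}:
        raise ValueError("expected exactly 11 binary digits")
    index = int(bits, 2)
    # Hypothetical assignment: high 3 bits -> time slot, low 8 bits -> frequency
    return divmod(index, N_FREQUENCIES)

def location_to_bits(time_slot: int, frequency: int) -> str:
    """Inverse mapping: recover the 11 bits from an observed bundle location."""
    index = time_slot * N_FREQUENCIES + frequency
    return format(index, f"0{BITS_PER_BUNDLE}b")

slot, freq = bits_to_location("10101100111")
print(slot, freq, location_to_bits(slot, freq))
```

The transmitter radiates a single bundle at the chosen location and leaves the other 2047 empty; the receiver only has to decide where the energy arrived.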

The singular characteristic of Fig. 2 is energy located in discrete but sparse locations in time and frequency. Each bundle has to be sufficiently energetic to overwhelm the noise at the receiver, so that its location can be detected reliably. This is pretty much how a lighthouse works: Discrete flashes of light are each energetic enough to overcome loss and noise, but they are sparse in time (in any one direction) to conserve energy. This is also how optical SETI is usually conceived, because optical designers usually don’t concern themselves with bandwidth either. Energy-limited radio communication thus resembles optical, except that the individual “pulses” of energy must be consciously chosen to avoid dispersive impairments at radio wavelengths.
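A toy receiver makes the lighthouse picture concrete. The sketch below assumes a deliberately simplified model: every location carries complex Gaussian noise with unit average energy, exactly one location also carries a bundle, there is no scintillation, and the receiver simply picks the most energetic location. The bundle energy of 60 noise units and all names are arbitrary choices for illustration, not values from the article.

```python
import random

N_FREQUENCIES, N_TIME_SLOTS = 256, 8

def receive_codeword(true_slot, true_freq, bundle_energy, rng):
    """Return a time-frequency grid of measured energies for one codeword.

    Noise in each cell is complex Gaussian with unit average energy; the
    single bundle adds amplitude sqrt(bundle_energy) to its own cell.
    """
    sigma = 0.5 ** 0.5  # per-component standard deviation for unit noise energy
    grid = []
    for slot in range(N_TIME_SLOTS):
        row = []
        for freq in range(N_FREQUENCIES):
            is_bundle = (slot, freq) == (true_slot, true_freq)
            amplitude = bundle_energy ** 0.5 if is_bundle else 0.0
            re = amplitude + rng.gauss(0.0, sigma)
            im = rng.gauss(0.0, sigma)
            row.append(re * re + im * im)
        grid.append(row)
    return grid

def detect_location(grid):
    """Pick the (slot, frequency) cell with the largest measured energy."""
    return max(
        ((slot, freq) for slot in range(len(grid)) for freq in range(len(grid[0]))),
        key=lambda cell: grid[cell[0]][cell[1]],
    )

rng = random.Random(2013)
grid = receive_codeword(true_slot=5, true_freq=123, bundle_energy=60.0, rng=rng)
print("detected location:", detect_location(grid))
```

When the bundle energy is well above the noise level, the largest noise-only cell among the 2047 empty locations is very unlikely to exceed it, which is why a sparse but energetic signal can be located reliably.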

This scheme (which is called frequency-shift keying combined with pulse-position modulation) is extremely simple compared to the complicated bandwidth-limited designs we typically see terrestrially and in near space, and yet (as long as we don’t attempt to violate the minimum energy requirement) it can achieve an error probability approaching zero as the number of locations grows. (Some additional measures are needed to account for scintillation, although I won’t discuss this further.) We can’t do better than this in terms of the delivered energy, and neither can another civilization, no matter how advanced their technology. This scheme does consume voluminous bandwidth, especially as we attempt to approach the fundamental limit, and Ian S. Morrison of the Australian Centre for Astrobiology is actively looking for simple approaches to achieve similar ends with less bandwidth.
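The claim that the error probability shrinks as the number of locations grows can be illustrated with a standard union bound for orthogonal signaling. The sketch assumes coherent detection in additive white Gaussian noise with no scintillation, which is simpler than the interstellar case discussed here, and the energy per bit of four times the noise density is an arbitrary illustrative value.

```python
from math import erfc, sqrt

def q_function(x: float) -> float:
    """Tail probability of a standard Gaussian random variable."""
    return 0.5 * erfc(x / sqrt(2.0))

def union_bound_error(bits_per_bundle: int, ebn0: float) -> float:
    """Union bound on the probability of picking the wrong location.

    Assumes 2**bits_per_bundle orthogonal locations, coherent detection in
    additive white Gaussian noise, and no scintillation.
    """
    n_locations = 2**bits_per_bundle
    esn0 = bits_per_bundle * ebn0  # a bundle carries the energy of all its bits
    return min(1.0, (n_locations - 1) * q_function(sqrt(esn0)))

for k in (4, 8, 11, 16, 20):
    print(f"{k} bits per bundle: error bound {union_bound_error(k, ebn0=4.0):.2e}")
```

With the energy per bit held fixed, the bound falls steadily as more bits are packed into each bundle. The union bound is loose: it only tends to zero when the energy per bit exceeds twice the natural logarithm of two times the noise density, whereas a tighter analysis shows that exceeding the fundamental limit itself is enough.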

What do “they” know about energy-limited communication?

Our own psyche is blinded by bandwidth-limited communication based on our experience with terrestrial wireless. Some might reasonably argue that “they” must surely suffer the same myopic view and gravitate toward bandwidth conservation. I disagree, for several reasons.

Because energy-limited communication is simpler than bandwidth-limited communication, the basic design methodology was well understood much earlier, back in the 1950’s and 1960’s. It was the 1990’s before bandwidth-limited communication was equally well understood.

Have you ever wondered why the modulation techniques used in optical communications are usually so distinct from radio? One of the main differences is this bandwidth- vs energy-limited issue. Bandwidth has never been considered a limiting resource at the shorter optical wavelengths, and thus minimizing energy rather than bandwidth has been emphasized. We have considerable practical experience with energy-limited communication, albeit mostly at optical wavelengths.

If another civilization has more plentiful and cheaper energy sources or a bigger budget than us, there are plenty of beneficial ways to consume more energy other than being deliberately inefficient. They could increase message length, or reduce the message transmission time, or transmit in more directions simultaneously, or transmit a signal that can be received at greater distances.

Based on our Earthly experience, it is reasonable to expect that both a transmitting and receiving civilization would be acutely aware of energy-limited communication and I expect that they would choose to exploit it for interstellar communication.

Discovery

Communication isn’t possible until the receiver discovers the signal in the first place. Discovery of an energy-limited signal as illustrated in Fig. 2 is easy in one respect, since the signal is sparse in both time and frequency (making it relatively easy to distinguish from natural phenomena as well as artificial signals of terrestrial origin) and individual energy bundles are energetic (making them easier to detect reliably). Discovery is hard in another respect, since due to that same sparsity we must be patient and conduct multiple observations in any particular range of frequencies to confidently rule out the presence of a signal with this character.

Criticisms of this approach

What are some possible shortcomings or criticisms of this approach? None of us have yet studied possible issues in design of a high-power radio transmitter generating a signal of this type. Some say that bandwidth does need to be conserved, for some reason such as interference with terrestrial services. Others say that we should expect a “beacon”, which is a signal designed to attract attention, but simplified because it carries no information. Others say that an extraterrestrial signal might be deliberately disguised to look identical to typical terrestrial signals (and hence emphasize narrow bandwidth rather than low energy) so that it might be discovered accidentally.

What do you think? In your comments to this post, the Centauri Dreams community can be helpful in critiquing and second guessing my assumptions and conclusions. If you want to delve further into this, I have posted a report at http://arxiv.org/abs/1305.4684 that includes references to the foundational work.

Definitely an interesting read. The minimum energy signal might be similar to a radio transient, and there are research programs out there which are only now starting to look for these things. Mostly concentrated at lower frequencies. It would be useful to investigate how much of the bandwidth for such a signal needs to be monitored for a particular bitrate in order for it to be recognized as a transmission, and not a natural transient.

I have not finished reading it yet, but I wanted to thank Dr. Messerschmitt for posting this. I tried reading his more technical paper on the same subject and found it (through my own fault) over my head. This is much more approachable for me.

I can only offhand think of three ways to communicate Star to Star.

1) Using the Sun to form a focal point at the Star system and high energy/high frequency lasers for the transmission and optical receivers (many to form a wide base).

2) A large disc near the Sun which acts like a signal or Aldis lamp (free energy) to transmit.

3) A series (in a line) of slow moving laser focusing/emission relays (two or more a set distance apart for improved resolution) along the path between the stars.

The energy to power such equipment would have to come from long lived nuclear power sources due to the distances involved.

I am not very knowledgeable in this field, but, for whatever it may be worth, I do not see any flaws in your line of reasoning. In fact I strongly applaud this concept as ‘out of the box’ thinking. I have long felt that the current SETI approaches depend much too much on assuming that ET thinks the same as us. An approach like this, that makes as few assumptions as possible about what an ET may think, and rather is based as much as possible directly on the limits placed on us by nature, seems far superior to me.

Just my 2 cents.

“Some say that bandwidth does need to be conserved, for some reason such as interference with terrestrial services.”

This statement makes me curious if a requirement of what you describe is for the receiver to be in earth orbit to avoid interference with terrestrial signals. If true you might want our transmitter also to be in orbit.

I’m unsure from your description how much interference energy limited signals might suffer as a particular time/frequency combination could be drowned out by terrestrial sources.

This sounds like a description of spread spectrum communications but instead with various frequencies representing a 1 or 0 at a particular time T. You would reconstruct bytes by monitoring the various frequencies you saw signals in over long periods (and thus narrow down at what frequencies to expect signals).

My question is: Wouldn’t such communication have been picked up via SETI monitoring? I would think a bit at a certain frequency toggling would stand out in a SETI analysis.

Thanks for all the comments so far! Keep ’em coming.

To Alexandar, yes the search for radio transients has some similarity. However, transients are usually considered to be short-duration pulses that have a high bandwidth, and thus are severely affected by interstellar dispersion. For natural signals like pulsars, this is unavoidable. For artificial signals, I would assume that the “pulse” bandwidth is restricted to that which isn’t affected by dispersion, which implies a longer time duration. Such a “pulse”, which I call an “energy bundle”, looks like a segment of a sinusoid or random noise with a typical duration of 0.1 to 1 second. Such a “bundle” is far easier to detect than a wideband transient, with a lot less processing and searching. The characteristic of an artificial signal with this character is repeated instances of such “transients” within a given frequency range.

To Michael, I agree that the use of optical wavelengths is a very interesting direction, and harnessing a star’s power is also interesting. Advocates of optical usually argue against radio on the basis of the greater dispersion at longer wavelengths. While that dispersion is real, a point of my article is that this is not an issue for radio, for either discovery or communication, because the transmit signal can easily be designed to circumvent this dispersion with no energy penalty. An interesting property to note for my example signal is that a signal like this may have a very wide bandwidth, and yet is not affected by dispersion because each energy bundle has a narrow enough bandwidth by design. Removing dispersion as a consideration, the comparison of radio and optical remains quite interesting, and I am currently exploring this. My current feeling is that it is mostly a technology/engineering issue rather than a fundamental difference. We would expect an optical communications signal to have a similar character to what I have described, if it is also designed according to energy conserving principles.

Claudio Maccone’s studies on solar gravity lensing astronomy (already discussed here in the past), show conclusively that interstellar information exchanges can happen at very low energy regimes (mere watts) and very high bitrates/low error rates, if we consider gravity lensing emitters/receivers are placed at both ends of the communication channel (that is, 2 stellar gravity lensing telescopes at the mutual focal points of two stars).

It is possible then, that very active communications are happening right now, between many points in the galaxy, and we are simply unable to detect them, due to the very low energy of such communication beams and the requirement of being placed at the stellar focal points in order to detect them.

That adds some speculative way for finding out ETI relatively near home: just look for their Sun gravity lens emitters/receivers at the solar focal points for any star of interest.

To Markham, RFI is an interesting issue that I did not address in my article. Let me say first of all that immunity to RFI can be created by making each energy bundle as long a duration and with the maximum bandwidth permitted by the dispersive and motion effects, and then making the energy bundle waveform look noise-like. That argument is covered in my article “Interstellar Communication: The Case for Spread Spectrum” in Acta Astronautica in Dec. 2012, also available at http://arxiv.org/abs/1111.0547. The addition of FSK+PPM as described here is an additional wrinkle that will further increase immunity to RFI, similar to “frequency-hopping spread spectrum”.

In the current style of SETI, which is searching for continuous narrowband signals, a prime way to rule out false alarms is to look for persistence of the signal, and persistence at the same exact frequency. Thus, the FSK+PPM signal of the type I have described would be deemed in such searches to be a false alarm, and would thus not be detected. To discover such a signal, a very different back-end pattern recognition approach is required, one which looks at a band of frequencies as a unit (rather than a single frequency) and does not expect short-term signal persistence. (This is discussed further in my online report).

Interference created on the transmit side by a high powered transmitter is an interesting issue, and one that has not been fully explored. It helps that the antenna will be highly directive, but the power appearing in small side lobes might be sufficient to cause a problem. Moving the transmitter to space (in our case the backside of the moon) would certainly help. Making the energy bundles spread spectrum helps to reduce the interference impact, as does the frequency hopping nature.

To tchernick, let me agree but modify your statement just a bit. Using stars as a gravitational lens does not reduce the *receive* power requirement, but such ultra high gain antennas reduce the *transmit* power requirement. This is a very exotic and expensive approach, and certainly one worth exploring. Correct me if I am wrong, but I think this approach would allow very low energy communication with a single star, but would be vastly more expensive for a transmitter that wanted to target multiple stars, and also for a receiver that wanted to search for a signal from multiple stars. If so, I think it makes a lot more sense as a way for two civilizations to continue a dialog after they have established mutual contact and in addition have decided to coordinate their efforts. Even then I would question whether the cost of this space-based infrastructure would be justified by the energy cost savings, but that is a fairly straightforward calculation for the two civilizations based on their current state of technology.

If as you say many such point-to-point communications are occurring right now, I would question whether we could detect any of them, except in the unlikely event that we happen to fall directly in the line of sight between these two stars. In any case, you raise an interesting idea.

After reading this interesting article, I thought you might all be interested in a paper, available at http://arxiv.org/abs/1311.4608, titled “Jumping the energetics queue: Modulation of pulsar signals by extraterrestrial civilizations”.

Greetings to you all, from Antonio Tavani

Nice article, Antonio. That is an exciting piece of material. The only problem we would face, it seems, would be actually reaching a pulsar to modulate, since their model requires engineering in the vicinity of the star system. Any of our current spacecraft would take millennia to reach even the closest known neutron star. In addition, the seemingly gigantic amount of energy that faster-than-light travel would take under our current understanding (e.g. infinite, unless the manipulation of space-time is involved) would make the use of these interstellar structures impractical, no? I mean, if at that point we have tapped into an infinite energy source, then the Kardashev model doesn’t seem applicable, or am I wrong?

Also, what would be the implications for the emissions if a group of two or more pulsars (or other related objects like black holes and white dwarfs) merged or collided within the pulsar system? What would that do to the signals?

—

On a separate note @Dr. Messerschmitt,

“Because we (or they) don’t know when and where such a message will be received, we (or they) are forced to transmit the message repeatedly. In this case, reliable reception (low error rate) for each instance of the message need not be a requirement because the receiving civilization can monitor multiple repetitions and stitch them together over time to recover a reliable rendition.”

To me it seems like it should be the other way around… It would make more sense to develop a more accurate, reliable transmission and reception before flooding a group of ETI with sporadic messages, leaving them with a possibly multi-generational patch-job, where meaning could flee in any which direction.

The “beacon” signal seems like a reasonable approach, especially since anyone who is bound to pick it up wouldn’t understand any information we had anyway, and if they tried, the risk of misinterpretation is too high to ignore.

Paul, kudos for a wonderful summary of a rather technical subject. Kudos also to Dr. Messerschmitt for doing all the heavy lifting. It all hangs together and I thus find myself in favour of SETI repurposing its detection algorithms to take account of this new approach. May it bear fruit.

As an interested non-scientist, I read through this piece with growing enthusiasm as Dr. M developed his creative ideas. As design and design process are my fields, I appreciate the rare ability to tease a wholly new and indeed elegant standard from the same data that has flummoxed earlier researchers. It’s fascinating both for the result and the process.

These are exciting times to be alive.

Very interesting article. Prof. Messerschmitt, I don’t have Maccone’s book at hand, but I believe that even though a gravitational focal receiver would have an extremely narrow angular field of view, it would have a large depth of field; by how much I’m not sure. Such transceivers would have to be kept at negligible orbital momentum, otherwise they won’t be of use for long.

Kuiper objects are usually identified by their orbital speeds. Now, an interstellar gravitational focal transceiver won’t be orbiting and hence it won’t be detected by the usual algorithm of comparing images taken at different times to find moving objects. We’ll have to think of something else. If they are being held stable against gravitational pull by coherent radiation from the inner solar system, we’ll have a better chance of finding those sources.

As I once said on this topic, the problem of information channel discovery is difficult, almost certainly extremely difficult. Except in the domain of positive-SNR signals, the statistical methods used to uncover such a signal, even one with a short cycle time, face many technical challenges.

For example, while those graphically-shown “bits” look ever so enticingly detectable the reality of filtering+DSP places some fundamental constraints on the relationship between bit modulation and reception, and recovery via statistical methods to discover periodic information content. Then there is still the problem of the vast search space.

David, have you considered, or have you already proposed a specific search algorithm of the vast body of data that has already been collected to test your ideas? Rather than deal with a hypothetical it is always better to take action. Even the non-discovery of such a signal would at least tell us something about the types of signals that are not there, and would test the feasibility of performing a comprehensive search for this type of communication.

As Mundus says (and I strongly agree) the search focus should be on the beacon, not the information channel. There at least the probability of discovery is much improved.

I apologize if I’m a little bit vague here, but I do recall a somewhat recent paper by David, a very long one, that included a section on low-power beacons. I spent a few hours reading it but since that was all the time I could manage there are still aspects of it I question or don’t yet understand.

David, do you expect a transmitter/receiver to be small enough to allow its use in an interstellar probe (power requirements and antenna size)?

I also wonder if your technique could be modified to use vortex radio waves to increase the information density without losing the immunity to RFI and increasing dispersion?

reference:

Experimental demonstration of free-space information transfer using phase modulated orbital angular momentum radio: http://arxiv.org/abs/1302.2990

(popular description: http://www.extremetech.com/extreme/120803-vortex-radio-waves-could-boost-wireless-capacity-infinitely)

I do not know enough about orbital angular momentum’s interference characteristics when considering interstellar signals. Evidently much of the research on interstellar signal characteristics came from pulsar astronomy, which probably does not consider angular momentum.

I suspect that energy is the currency of exchange among more advanced civilizations. Humankind indirectly derives value from effort, which is a measure of energy, by quantizing it in monetary currency, which is both a medium of exchange and storage of value. But alien civilizations may deal in energy directly, without a medium. As such, it doesn’t surprise me that energy may be the key to detecting SETI.

Very telling ideas in this piece, indeed.

I’d benefit from it more if Dave could work out some representative problems. Maybe he could take the cases we analyzed in our Astrobiology papers and show the consequences of his ideas for them. I’d like to see some detection-optimized signals worked out that resemble the short pulses we’ve already seen, such as the WOW signal, or the cost-optimized cases Jim and Dominic and I presented in our papers.

David A Czuba: I suspect the opposite. More advanced civilizations will deal primarily in information, not energy. Future generations will view energy as we do food: Something that is needed and important, for sure, but near the bottom of the totem pole of significant economic factors.

These signals, if they reached us, would be classified as false alarms by SETI? ISTR multiple reports of false alarms; seems to me it would be worthwhile re-examining that data.

A good read D M. If your approach is correct then it’s amazing to think that many lost bits of many failed alien signals would be passing through here at any given moment from origins who-knows-where. The ones lost in the noise become the noise. And maybe some signals aren’t lost at all. I wonder how long it would take to recognize one, even if we are looking at the right place.

Question: can someone calculate the energy output of the light our civilization emits at night?

I just wish you and others would stop saying things that make it sound like SETI is some sort of organized science with a 50 year legacy. I had dinner with Frank Drake at the 2002 Bioastronomy Conference on Hamilton Island. I asked him then what he thought of those generalizations. I won’t speak for him but we did agree that less talk and more action is needed. Let’s give SETI a chance, good old regular narrow band carrier wave searches a chance, before we get so wound up in the technical minutiae that we remain impotent. Stop assuming organizations with names like Allen Array, SETI League, SETI Institute, SETI R US do SETI… They do a lot of talking and precious little SETI. Perhaps SETI at home is the one exception. And they report millions of hits. Have a bake sale and send the money to support the SKA. ~Operator…would you help me make this call?~

Regarding my previous comment, I believe that all the radio transients observed so far show signs of dispersion, so are of natural origin. However, as these searches expand, there is an ever increasing chance of an artificial signal being discovered. In this sense, the transient radio surveys are already doing SETI for these kinds of signals, which is an added value to their original science case. Those programs might consider increasing the bandwidth they search, and I believe that will happen as time goes by.

@DavidMesserschmitt

The multiple star contact issue may be solved by moving the transmitters/receivers about the Sun while still using the Sun’s mass to focus the transmitted and received signals. If a spacefaring civilisation has started, it will most likely be confined to neighbouring stars.

Most of this work is concerned with the design and development of the lower level layers of an interstellar communications system.

Many of you will agree that the message we send is just as important as the primary device and medium we’re going to use, obviously not the concern of this specific work.

However I think that in this primary stage of development the message we intend to send is inseparable from the device/medium.

In every form of communication you have different stages of communication, which comprise a protocol. “I don’t know why you say goodbye, I say hello” to borrow lyrics from some universally known musicians.

Every communication must be initiated with a hello, after which you can expect a handshake.

We don’t know what the handshake will look like or where it will come from, but there is no point in sending a message without those two basic things: 1) Saying hello, here I am, this is me, pleased to meet you. 2) Listening to their greetings.

Because they can’t see our faces and our open hand (and those things probably won’t make any sense to our new interstellar friends anyway) the most basic message we need to send is our location.

“Hello, we are from Earth!”: indicating where Earth is, and where our solar system is, in a concise, simple language/protocol that can be deciphered on the other end can be a difficult task.

I think that message, even if seemingly trivial, is not trivial at all.

Think of all the possible ways our message can arrive distorted and garbled after traveling parsecs and parsecs past cosmic obstacles. Think of the ways they might find the signal a few times but be unable to figure out where it came from, or how to stay on channel.

“Look! Here is Earth calling!” seems to me is an inseparable part of a project like this.

We could advertise ourselves better by building large orbiting world stations close to our star; they would be made very reflective to cope with the light from being close in, and would reflect that light strongly. ET could potentially see this anomaly, especially if from multiple sources, and for free as well.

I’m glad to see that David Messerschmitt’s arguments lead in the same direction that we Benfords have been arguing for some years now: Broader bandwidth pulsed sources, leading to the beacon concepts we have described in our papers. I have two suggestions on how to take this work further:

1) I agree completely that an end-to-end design should be undertaken, considering both the transmitter and receiver and taking into account David’s energy argument. I think we could assemble a team to do that in some detail. This may better inform SETI searchers as well as people contemplating building their own beacons for messaging purposes (METI).

2) I also think that Dave could use his approach to characterize the detectability of past beacon concepts in some detail. In particular, there are a number of partially specified beacon concepts in the SETI 2020 volume and of course in the Benford papers. And there are other papers in the literature which have partial specifications of beacon concepts, all the way from continuous omnidirectional (and terribly expensive) concepts to short pulse broadband radiators.

If Dave and others such as myself will work all along these lines, we may be able to get a better idea of what SETI is looking for and what METI might do in the future.

Thanks to all for your comments!

To Mundus Gubernavi: Your plea for an end to end design of a reliable communication protocol is a great long-term (centuries or millennia) goal. However, we can do that only in cooperation with those with whom we wish to correspond, which requires that we contact and communicate with them first. For now I am afraid we are stuck with one-way communication, either them to us or us to them. I certainly agree that such an approach is limiting, but just knowing that there are indeed other civilizations out there is a huge step.

To Ron S and coacervate: The issue of jumping to experimentation vs working to understand the problem conceptually and theoretically is an intriguing one. Both approaches have merit, depending on circumstance. A good example is communications itself. Radio communication was developed in the years before WWII by experimentalists who didn’t bother to understand fully what they were doing, but just tried various things. Post-WWII a totally different approach emerged in which mathematics, theory, modeling, simulation took over. Progress was dramatically faster and further as a result. On the other hand, fiber optics emerged as a technology in the 1970’s largely driven by physics, experimentation, fabrication, and also made tremendous progress. What is different? My view of SETI is that it is like designing new jetliners. System experimentation (e.g. trying out a technique in a search comprehensive enough to conclude something significant) is a decades-long and expensive experiment. Thus, just as we can’t afford to crash jetliners during their design, we can’t afford to try out all the good ideas for SETI in a realistic experiment and thus need to rely more on concept and simulation. I believe that we will make much faster progress and enhance our chances of success if we step back first to thoroughly understand the problem before we experiment, and rely more on “controlled experiments” consisting of simulation to aid that understanding. I agree that there hasn’t been as much actual SETI searching as we would like, but that is largely driven by the high costs and long timeframes involved.

To Ron S: Yes, in the report I referenced there is a description of the search technique for energy-limited signals. I haven’t worked it out in detail, but I have plans to implement a simulation of such a search to learn more about it and force myself to work out the statistics.

To Markham: As I describe in the first section of my article, I think two-way communication with a starship has some very different requirements from one-way communication between stars. A lot of the difference is in the need for high reliability. But one point of commonality is the energy requirement on the spacecraft, which may be in short supply. Certainly the signal design techniques I have described would be applicable to reducing those energy requirements, or reducing the antenna gain, or both. In terms of the transmit electronics I would not be worried at all about the signal processing requirements (very simple) but I do think higher peak power to average power ratios would be an issue to address in the design of RF electronics. Your question on vortex waves: I don’t know, but that would be interesting to research.

To Aleksandar: Although I don’t think radio transient searches as currently constituted are going to discover the type of signals I propose, I do believe they could be modified to take that type of signal into account. That would be a great thing to do. Good idea.

To Michael: Agree that multiple stars could be targeted with a gravitational lens search, but only one star at a time. There is a correspondingly greater probability of success if we can target multiple stars concurrently, but there is a cost of doing that for either terrestrial or space based observations. It is probably a much lower cost for terrestrial based observations.

To Horatio Trobinson: Certainly the construction of a message that would be understandable is a very big part of the problem and very interesting. That is outside the scope of my work. Fortunately on the receiving/listening side we don’t have to address that problem, at least until we have discovered an artificial signal. At that time we are on the analysis rather than synthesis side, which is a very different challenge again. As to what might constitute our own transmitted message, there are a lot of different ideas and opinions on that, including some threads here on Centauri Dreams.

To Michael: Yes, there are some interesting ideas about using reflected sunlight for optical communication. That would be a nice finessing of the energy cost issue and we ought to explore it.

To Steve Muise: What I advocate in my report is that all detection events, whether they are classified as false alarms or not, be recorded in a database, making them available for further study and analysis by future generations of researchers. Ideally this would be a single well-designed database made available to all. Then various pattern recognition algorithms could go through the data looking for representative patterns of beacons or information-bearing signals.

To all who mentioned beacons: In my report that I referenced I have a whole chapter on beacons. Of course we don’t know whether other civilizations might choose a beacon or an information-bearing signal, so I would advocate looking for both. But it is interesting (and perhaps telling) that our own discussions of transmit strategy seem to focus only on information, with nobody that I know of advocating transmission of a beacon. (Let me know if I am wrong.)

The question I addressed in the report was how much energy/power would be needed to transmit a beacon, and how that energy/power could be reduced. The conclusion is that the “discoverability” of a beacon is not adversely affected if the beacon power is substantially reduced by transmitting bundles of energy with a low duty factor, similar to how a lighthouse works. It is also interesting (and perhaps telling) that when we design our own beacons (e.g. to attract the attention of ships) we use exactly this technique consistently: transmit high energy “pulses” to overcome loss and noise, and make them periodic with a low duty factor to conserve energy. (Many other engineered systems, like pile drivers and camera flash units and laser fusion reactors, use this approach as well.) A search for a beacon of this type could easily be included in a search for information-bearing signals, at essentially no incremental cost. So I would advocate searching for both at the same time. Our current searches are not likely to discover such low-power beacons for the same reason — their transient (non-persistent) character — and instead classify them as false alarms.
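As a rough illustration of the duty-factor argument above, the sketch below computes the average power of a periodic pulsed beacon. The function name and the numbers (a 1 MW peak, 0.1 s pulses, one pulse every 100 s) are hypothetical values chosen for illustration, not figures from the report.

```python
def average_power(peak_power_w: float, pulse_s: float, period_s: float) -> float:
    """Average power of a periodic pulse train (all inputs are illustrative)."""
    if not 0 < pulse_s <= period_s:
        raise ValueError("pulse duration must be positive and no longer than the period")
    duty_factor = pulse_s / period_s      # fraction of time the transmitter is on
    return peak_power_w * duty_factor     # energy per pulse is unchanged


# Hypothetical beacon: 1 MW peak, 0.1 s pulse, repeated every 100 s.
continuous = average_power(1e6, 100.0, 100.0)   # always on
pulsed = average_power(1e6, 0.1, 100.0)         # duty factor 0.001
print(f"continuous: {continuous:.0f} W, pulsed: {pulsed:.0f} W")
```

Each pulse still carries the full peak power, which is what the receiver needs to overcome loss and noise; only the time-averaged power bill drops, in proportion to the duty factor.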

To Greg and James Benford: Completely agree with you both. There needs to be a better melding of the ideas you have presented in your papers with mine. Also, I think there needs to be a design exercise for a high power transmitter that targets multiple stars concurrently with either a beacon or an information-bearing signal. There are all kinds of interesting possibilities to use parallelism in such a transmitter, and of course there is the issue of high-power RF electronics. I personally don’t have the expertise to address the electronics part myself. But I agree that a great deal would be learned in the course of such an exercise.

Anyone receiving a transmission from us will have to contend with the radio emissions of the sun.

How do these emissions compare with the power levels we can create?

My understanding is that the Sun is a very bright object in the microwave sky compared to microwaves coming from interstellar objects.

Would it not be better to transmit narrow band signals within the absorption lines of the sun?

RonSmith said on November 27, 2013 at 15:04:

“I have long felt that the current SETI approaches depend much too much on assuming that ET thinks the same as us. An approach like this, that makes as few assumptions as possible about what an ET may think, and rather is based as much as possible directly on the limits placed on us by nature, seems far superior to me.”

You are quite correct, sir, in that most of current SETI strongly depends on alien recipients and communicators being very much like us. See my book review on this very topic and its relevant links:

https://centauri-dreams.org/?p=27889

Of course it is not entirely SETI’s fault. Between the limited budgets and our even more limited knowledge, we mainly have to go with what we can work with and hope that any ETI interested in cosmic communications will make it easy on us and every other society just starting out on this endeavor.

Michael said on December 1, 2013 at 6:02:

“We could advertise ourselves better by building large orbiting world stations close to our star; they would be made very reflective to cope with the light from being close in, and would reflect that light strongly. ET could potentially see this anomaly, especially if from multiple sources, and for free as well.”

Ignoring for the moment the fact that our space program doesn’t even have concrete plans for a manned Mars mission let alone something of the scale you are describing, not to mention a very limited budget, how would we make something reflective enough to outshine Sol and be seen at interstellar distances?

Wouldn’t powerful laser systems make more sense if you are going to have us build giant space structures anyway? And what do you mean by their seeing this for free? We can barely fund our SETI programs based on Earth; what would justify constructing huge objects in space just to do METI, which already has its detractors over simple radio signals?

This is a great paper and I like the FSK protocol, repetition, slow data rates etc. I’m not clear from reading this how long each energy bundle dwells on a frequency and how much time shift is required to identify a pulse position.

Joe Taylor (Nobel laureate for the discovery of the first binary pulsar) invented a weak-signal communication protocol for amateur radio that uses some similar FSK ideas. It is very popular for bouncing signals off the moon.

http://physics.princeton.edu/pulsar/K1JT/

http://physics.princeton.edu/pulsar/K1JT/JT65.pdf

The JT65 protocol transmits on 65 separate frequencies to encode short strings of text. The protocol also includes averaging of repeated messages for improved decoding. JT65 has to operate under bandwidth constraints for terrestrial comms, and keeps the signals within about 2 kHz.

To debate a few points, however: error correction coding (such as Reed-Solomon) can perform much better than the repeat-and-integrate coding schemes proposed here. JT65 uses RS coding as the primary means of improving message reliability. All these coding schemes implement parity on the surface of multidimensional information spaces, which brings all the complexity that we want to avoid. With a better understanding of coding, a theoretically common and easy-to-decode protocol may exist.

Another point of debate is that narrow bandwidth does not fundamentally increase energy requirements, _if_ the receiver bandwidth is reduced to match the transmitted bandwidth. The Shannon limit is silent on bandwidth because it does not matter, not because it’s assumed infinite. The ongoing SETI research looks for signals in a small fraction of 1 Hz bandwidth assuming a narrow band continuous emission.

For future ideas, one could imagine hierarchical coding schemes where the receiving civilization puts in place 30 dB more gain towards the transmitting civilization and could begin to see small modulations on the energy packets in frequency, phase, pulse position. These would not take more transmit energy nor reduce the detectability of the original signal.

To Michael Simmons: The original Cyclops Report of 1971 estimates the radio emissions from a star in the line of sight, and concludes that they are fairly insignificant. I would have to defer to astronomers on this one, but I doubt that our sun is highly atypical in its radio emissions. Rather, my guess is that you are referring to radio emissions from the sun as observed from our earth, which are relatively large only because of proximity. Can anybody more knowledgeable than me confirm this?

To jeff miller: You make several points so let me respond to them individually. You give me a good opportunity to explain some other concepts from my report.

The time duration of an energy bundle has to be long enough to keep the bandwidth small enough to avoid dispersive effects, and short enough to avoid time variation due to motion and scintillation. The former is called the “coherence bandwidth” and the latter is called the “coherence time”. The coherence bandwidth is typically in the 100’s of kHz, and the coherence time is typically in the 100’s of msec, but of course this depends somewhat on the line of sight and carrier frequency.
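To make the two constraints concrete, here is a minimal sketch that turns a coherence bandwidth and a coherence time into a usable range of bundle durations. It assumes the bundle's bandwidth is roughly the reciprocal of its duration (true of a plain unmodulated pulse); that simplification and the function name are illustrative assumptions, and the inputs are only the order-of-magnitude figures quoted above.

```python
def bundle_duration_bounds(coherence_bandwidth_hz: float, coherence_time_s: float):
    """Return (shortest, longest) usable duration for one energy bundle.

    Assumes the bundle bandwidth is roughly 1 / duration, which holds for a
    plain unmodulated pulse; this is an illustrative simplification.
    """
    shortest = 1.0 / coherence_bandwidth_hz   # shorter pulses exceed the coherence bandwidth
    longest = coherence_time_s                # longer pulses outlast the coherence time
    if shortest > longest:
        raise ValueError("no duration satisfies both constraints")
    return shortest, longest


# Order-of-magnitude values quoted in the text: 100 kHz and 100 ms.
shortest, longest = bundle_duration_bounds(100e3, 0.1)
print(f"bundle duration between {shortest * 1e6:.0f} us and {longest * 1e3:.0f} ms")
```

With these figures the window spans about four orders of magnitude, so the two constraints leave a good deal of design freedom for a typical line of sight.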

I disagree with you on Reed Solomon. The Shannon limit on rate (the so-called “channel capacity”) is monotonically increasing with bandwidth. The coding technique I describe combined with time diversity (which I didn’t talk about) can approach the Shannon limit for infinite bandwidth as the signal bandwidth approaches infinity. (The bandwidth approaching infinity is achieved by increasing the number of frequencies without bound.). This is a higher rate than Reed Solomon or any other code can achieve at any finite bandwidth. *However*, the asymptotic improvement gets slower as the bandwidth gets larger, and of course at some point the white noise model falls apart, so there are practical limits to this result. In practice it may make sense to stop increasing the FSK bandwidth and switch to a layered binary coding (like Reed Solomon). I would argue this is entirely appropriate for communication with starships, but not with civilizations. The reason for the latter is (a) robustness to a higher error rate and (b) questions about whether a receiving civilization could ever reverse engineer such a code. But as you say, it would be interesting nevertheless to look into alternative coding schemes to find something simple and reverse engineering-able, as Ian Morrison is doing in Australia.

I also disagree with your statement that narrow bandwidth does not increase energy requirements. The standard Shannon limit does depend on bandwidth (capacity equals BW times log (1+SNR), where SNR also depends on BW). As I mention above, the Shannon limit for white noise has the character that a higher average power is required to achieve the same information rate whenever the bandwidth is decreased. Achieving Shannon limit of course assumes a receiver that is optimal in every way, including bandlimiting to the signal bandwidth.
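Written out for the white-noise channel, the dependence on bandwidth looks like this. The symbols $P$ (average received signal power), $N_0$ (noise power spectral density), $B$ (bandwidth), and $R$ (information rate) are notation introduced here for illustration; the base-2 logarithm gives capacity in bits per second.

```latex
C = B \log_2\!\left(1 + \mathrm{SNR}\right), \qquad \mathrm{SNR} = \frac{P}{N_0 B}
\quad\Longrightarrow\quad
\lim_{B \to \infty} C = \frac{P}{N_0 \ln 2}.

\text{Solving } C = R \text{ for the power needed at a fixed rate:}\quad
P(B) = N_0 B \left(2^{R/B} - 1\right) \;\ge\; N_0 R \ln 2 .
```

Since $P(B)$ decreases monotonically toward $N_0 R \ln 2$ as $B$ grows, shrinking the bandwidth at a fixed rate always raises the required average power.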

The current SETI observations generally look for beacons (zero information rate) rather than information-bearing signals. Beacons can have a narrow bandwidth essentially without penalty, but only because they do not embed information and thus are not governed by the Shannon limit.

Regarding your last point, adding 30 dB more gain to the receiver would not reduce the requirement on transmit power, because that gain would raise both the noise and the signal energy equally. Both the Shannon limit and practical receivers are sensitive to the signal-to-noise ratio, rather than the signal or noise power individually.

Jeff: You make some interesting points. It’s correct that more sophisticated coding methods can perform better than a repeat-code, and I agree it is worthwhile making the effort to identify alternative codes that perform better but are still simple enough for the one-way interstellar communications scenario where the receiver needs to guess or deduce the code used.

I must comment on one of the remarks you made; that bandwidth does not matter to the Shannon Limit. In the case of an information-bearing signal, the “bandwidth expansion factor” (BEF) is very important in determining the achievable efficiency in terms of energy per information bit communicated. The BEF can be defined as the amount of bandwidth that is occupied by the signal divided by the information rate of the signal. The only way to approach the very best energy efficiency (known as the “Ultimate Shannon Limit”) is for the BEF to be considerably greater than one, i.e. for the signal to occupy a significantly higher bandwidth than its information rate. The only time a narrowband signal is energy efficient for communications is when its information rate is much lower than its bandwidth. For example, in the case of a 1 Hz narrowband signal, it is only possible to achieve maximum energy efficiency when communicating at rates that are a small fraction of 1 bit-per-second.
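A small numerical sketch may help here. Assuming the white-noise Shannon capacity and signaling right at capacity, the minimum energy per bit relative to the noise density works out to BEF × (2^(1/BEF) − 1). The function name below is hypothetical, and the BEF values are arbitrary sample points.

```python
import math


def min_eb_n0_db(bef: float) -> float:
    """Minimum energy per bit over noise density (in dB) at a given BEF.

    Uses the white-noise Shannon capacity with BEF = bandwidth / rate:
    Eb/N0 >= BEF * (2**(1/BEF) - 1).
    """
    if bef <= 0:
        raise ValueError("BEF must be positive")
    ratio = bef * math.expm1(math.log(2.0) / bef)   # expm1 keeps precision at large BEF
    return 10.0 * math.log10(ratio)


ultimate_limit_db = 10.0 * math.log10(math.log(2.0))   # about -1.59 dB
for bef in (0.5, 1.0, 10.0, 1000.0):
    penalty = min_eb_n0_db(bef) - ultimate_limit_db
    print(f"BEF {bef:>6}: {penalty:.2f} dB above the ultimate limit")
```

In the 1 Hz example, 1 bit per second corresponds to a BEF of 1 and 0.1 bit per second to a BEF of 10; the gap to the ultimate limit shrinks as the rate falls further below the bandwidth.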

I should have been more clear. Once a search receiver finds a signal, the receiving civilization will have an incentive to add a lot more receiving antenna and dwell time in the direction of the emissions. I meant to say 30 dB more _antenna_ gain, which will enable seeing deeper into the signal structure of the transmission. So the transmitting civilization can include faster modulation that a more focused receiver can decode.

Jeff: I think you’re right; once a signal is discovered, we can assume additional antenna gain and other resources (such as processing power) will quickly become available. A hierarchical signal structure is one way to take advantage of this, but it’s not the only way. Even with a conventionally modulated signal that is modulated to full depth, it is generally possible to perform cumulative detection over many modulated symbols. This can make it possible to discover the presence of the signal at an antenna gain that is well below what’s needed to extract the data content. Having said that, I think the hierarchical approach deserves more study to assess whether there might be approaches that provide a good balance between discoverability and communications efficiency.

Ian: “Even with a conventionally modulated signal that is modulated to full depth, it is generally possible to perform cumulative detection over many modulated symbols.”

The signal would have to be periodic and also have a short period or you’re left guessing. This is why I focused on the search algorithm in my earlier comment. In general, as the SNR decreases (especially when small or negative) the more you must already know about the signal to effect a successful detection.

Jeff’s strategy makes more sense to me. Sagan also took this approach in his novel “Contact”.

To Jeff and Ian: The issue of making a signal discoverable at an SNR lower than necessary to recover information from the signal is a very interesting one. We don’t do that now, but it is certainly possible. If such a signal were discovered, we would be able (as you correctly say, Jeff) to build a bigger antenna to increase the SNR to the point that information could be recovered.

I disagree that this would likely be used to recover phase or amplitude information. For a transmitter concerned about energy consumption or its own antenna gain (presumably because it wanted to transmit toward more targets and increase its chances of being received) it would never make sense to use amplitude or phase modulation. The scheme I have described is much more energy efficient when phase and amplitude modulation are not included.

The scheme I have described can be fairly easily modified to make the signal more discoverable, as I discuss and analyze in Chapter 6 of my report on arXiv. For example, every Nth FSK symbol can be given a higher energy. This does not interfere with information recovery, but those higher energy pulses with 1/Nth duty factor can be detected at lower SNR. The tradeoff is that as N is increased, the energy penalty goes to zero but the observation time required of the receiver increases. Now I am contradicting myself, since this is a form of amplitude modulation! But you can see the energy inefficiency that creeps in through doing this in the interest of discoverability.
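
The tradeoff in the comment above can be sketched with simple arithmetic. This is an illustration only, not the analysis from the report: it assumes every Nth symbol carries `boost` times the energy of an ordinary symbol, and that the receiver needs to see some fixed number of boosted pulses before it is convinced. Both `boost` and `pulses_needed` are hypothetical parameters introduced here.

```python
def energy_penalty(n: int, boost: float) -> float:
    """Average energy per symbol, relative to a signal with no boosted symbols.

    Assumption: one symbol in every n has `boost` times the normal energy,
    and the other n - 1 symbols are unchanged.
    """
    if n < 1 or boost < 1.0:
        raise ValueError("need n >= 1 and boost >= 1")
    return 1.0 + (boost - 1.0) / n


def symbols_to_observe(n: int, pulses_needed: int) -> int:
    """Number of symbol intervals that must elapse to see `pulses_needed` boosted pulses."""
    if n < 1 or pulses_needed < 1:
        raise ValueError("need n >= 1 and pulses_needed >= 1")
    return n * pulses_needed


if __name__ == "__main__":
    for n in (2, 10, 100, 1000):
        print(n, energy_penalty(n, boost=4.0), symbols_to_observe(n, pulses_needed=5))
```

As N increases, the penalty factor tends toward 1 (no extra energy) while the observation time grows in proportion to N, matching the tradeoff described in the text.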

Also, inherent to my discovery approach is a pattern recognition algorithm running on the back end. We would never declare “signal” when just a single pulse was detected, but rather we would camp on those frequencies looking for more. Pattern recognition is inherently an accumulation or averaging process. Recognizing that multiple pulses must be detected in the pattern recognition, the false alarm probability on individual pulses can be increased, resulting in greater receiver sensitivity. This basic approach can be moved in the direction of optimality using Bayesian detection approaches. I plan to do some modeling of this and develop the idea quantitatively.
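
One simple way to see why requiring multiple pulses lets the per-pulse false alarm probability be relaxed is a "k of n" rule. This is a stand-in chosen for illustration, not the Bayesian approach mentioned above. It assumes noise-only threshold crossings are independent across observation slots with the same probability `p_pulse`, and that a signal is declared only if at least `k_required` of `n_slots` slots cross the threshold. All names are hypothetical.

```python
from math import comb


def pattern_false_alarm(n_slots: int, k_required: int, p_pulse: float) -> float:
    """Probability that noise alone yields at least k_required detections in n_slots.

    Assumption: each slot independently crosses the threshold with
    probability p_pulse when no signal is present (binomial model).
    """
    if not 0.0 <= p_pulse <= 1.0:
        raise ValueError("p_pulse must be a probability")
    if not 0 <= k_required <= n_slots:
        raise ValueError("need 0 <= k_required <= n_slots")
    return sum(
        comb(n_slots, j) * p_pulse**j * (1.0 - p_pulse) ** (n_slots - j)
        for j in range(k_required, n_slots + 1)
    )


if __name__ == "__main__":
    # A loose per-pulse threshold still gives a small overall false alarm rate
    # once several coincident detections are required.
    for k in (1, 2, 3, 4):
        print(k, pattern_false_alarm(n_slots=10, k_required=k, p_pulse=1e-3))
```

Under these assumptions, each additional required pulse cuts the overall false alarm probability by orders of magnitude, which is the slack that allows a lower (more sensitive) threshold on individual pulses.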

Ian’s approach of “accumulation” or arithmetic averaging is another possibility. A challenge with this that I see is that it inevitably introduces another dimension of the receiver’s search — the frequency samples included in averages — doesn’t it? Another dimension of search increases processing requirements and perhaps more importantly increases false alarm probability. Do you have any way to mitigate these disadvantages?

David, have you considered solitons serving the role of short signal pulses in the proposed scheme?

The condition for generating solitons in an optical fiber is to inject a laser pulse of a certain energy and use the properties of the fiber medium (nonlinear and confined). I don’t know if this is possible for the interstellar medium, though. Probably not in a real vacuum, but if one considers the ISM as a nonlinear medium (plasma) it might be doable… It would be interesting to know. If so, then the pulses will recover from the dispersion, since the soliton regenerates itself as it propagates. I guess this is equivalent to shaping the pulses to counter dispersion as you suggest, but the solitons can travel much farther.

To Aleksandar: All ideas should be pursued! However, in this case I would be skeptical about using the interstellar medium in this way, since the power levels would have to be very high indeed to work into the nonlinear regime. In any case this is an optical communication approach, whereas my work thus far has focused on radio. So no, I have not considered this.

To everybody: I have now posted a paper on this topic that you might find useful. I actually used the Centauri Dreams post as a starting point, and your comments were very helpful. This has been submitted to JBIS for publication.

Design for minimum energy in starship and interstellar communication

David G. Messerschmitt

http://arxiv.org/abs/1402.1215

Abstract

The design of an interstellar digital communication system at radio wavelengths and interstellar distances is considered, with relevance to starships and extraterrestrial civilizations (SETI and METI). The distances involved are large, resulting in a need for large transmitted power and/or large antennas. In light of a fundamental tradeoff between wider signal bandwidth and lower signal energy per bit delivered to the receiver, the design uses unconstrained bandwidth and thus minimizes delivered energy. One major challenge for communication with civilizations is the initially uncoordinated design and, if the distances are greater, new impairments introduced by the interstellar medium. Unconstrained bandwidth results in a simpler design, helping overcome the absence of coordination. An implicit coordination strategy is proposed based on approaching the fundamental limit on energy delivered to the receiver in the face of jointly observable impairments due to the interstellar medium (dispersion, scattering, and scintillation) and motion. It is shown that the cosmic background radiation is the only fundamental limitation on delivered energy per bit, as the remaining impairments can be circumvented by appropriate signal design and technology. A simple design that represents information by the sparse location of bundles of energy in time and frequency can approach that fundamental energy limit as signal bandwidth grows. Individual energy bundles should fall in an interstellar coherence hole, which is a time-duration and bandwidth limitation on waveforms rendering them impervious to medium and motion impairments.