We seem to be entering ‘new eras’ faster than I can track. Certainly the gravitational wave event GW170817 demonstrates how exciting the prospects for this new kind of astronomy are, with its discovery of a neutron star merger producing a heavy-metal seeding ‘kilonova.’ But remember, too, how early we are in the exoplanet hunt. The first exoplanets ever detected were found as recently as 1992 around the pulsar PSR B1257+12. Discoveries mushroom. We’ve gone from a few odd planets around a single pulsar to thousands of exoplanets in a mere 25 years.

We’re seeing changes that propel discovery at an extraordinarily fast pace. Look throughout the spectrum of ideas and you can also see that we’re applying artificial intelligence to huge datasets, mining not only recent but decades-old information for new insights. Today’s problem isn’t so much data acquisition as it is data storage, retrieval and analysis. For the data are there in vast numbers, soon to be augmented by huge new telescopes and space missions that will take our analysis of planetary atmospheres down from gas giants to Earth-size worlds.

Consider what a team of European astronomers has come up with by way of gravitational lensing. Working at universities in Groningen, Naples and Bonn, the scientists have deployed artificial intelligence algorithms similar to those that have been used in the marketplace by the likes of Google, Facebook and Tesla. Feeding a neural network with astronomical data, the team was able to spot 56 new gravitational lens candidates. All of this in a field of study that normally demands sorting thousands of images and putting human volunteers to work.

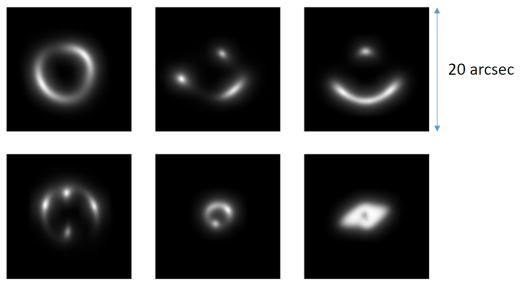

Image: With the help of artificial intelligence, astronomers discovered 56 new gravitational lens candidates. This picture shows a sample of the handmade photos of gravitational lenses that the astronomers used to train their neural network. Credit and copyright: Enrico Petrillo (Rijksuniversiteit Groningen).

The team’s astronomical data were drawn from the optical Kilo Degree Survey (KiDS). Working the data is a ‘convolutional neural network’ of the kind Google’s AlphaGo used to beat Lee Sedol, one of the world’s strongest Go players. Carlo Enrico Petrillo (University of Groningen, The Netherlands) and team first fed the network millions of training images of gravitational lenses, images that displayed the lensing properties the researchers wanted to identify.
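To make the idea concrete, here is a toy, pure-Python sketch of the operations inside a single convolutional layer (sliding a small filter over an image, rectifying, pooling) followed by a logistic score. The filter weights, the bias and the 5×5 test images are hypothetical and hand-set for illustration; the network in Petrillo et al. is far deeper and learns its filters from the training images, so this is not their architecture.

```python
import math

def conv2d(image, kernel):
    """Valid-mode 2D cross-correlation, which is what CNN 'convolution' layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Rectified linear unit: keep positive responses, zero the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def global_max_pool(feature_map):
    """Strongest response anywhere in the image."""
    return max(max(row) for row in feature_map)

def lens_score(image, kernel, bias):
    """Toy one-filter 'network': convolve, rectify, pool, squash to a 0-1 score."""
    feature = global_max_pool(relu(conv2d(image, kernel)))
    return 1.0 / (1.0 + math.exp(-(feature + bias)))

# Hypothetical hand-set filter: bright surroundings, dark centre (a crude ring detector).
# A real CNN learns many such filters from its training images instead.
RING_KERNEL = [[1, 1, 1],
               [1, -8, 1],
               [1, 1, 1]]

RING = [[0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0],
        [0, 1, 0, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0]]

POINT = [[0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0],
         [0, 0, 1, 0, 0],
         [0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0]]

print(lens_score(RING, RING_KERNEL, bias=-4.0))
print(lens_score(POINT, RING_KERNEL, bias=-4.0))
```

With this hand-picked filter a ring-like pattern scores close to 1 and a point source close to 0. Training replaces the hand-picking: the network adjusts thousands of such weights until its scores match the labels on the training images.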

The images from the Kilo Degree Survey cover about half of one percent of the sky. Matching the new imagery with its training images, the neural network found 761 lens candidates, which subsequent analysis by the astronomers whittled down to 56. These are all considered lensing candidates in need of confirmation by telescopes. The network rediscovered two known lenses but missed a third known lens considered too small for its training sample.
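The numbers in this paragraph can be turned into a few quick ratios. The full-sky scaling at the end is a naive illustration only, assuming candidates are spread uniformly across the sky and the same pipeline is applied everywhere; it is not a prediction from the paper.

```python
# Figures quoted in the paragraph above.
SKY_FRACTION = 0.005        # KiDS images: "about half of one percent of the sky"
NETWORK_CANDIDATES = 761    # objects flagged by the neural network
SURVIVING_CANDIDATES = 56   # kept after inspection by the astronomers
KNOWN_LENSES_FOUND = 2      # known lenses the network rediscovered
KNOWN_LENSES_TOTAL = 3      # known lenses in the field

survival_rate = SURVIVING_CANDIDATES / NETWORK_CANDIDATES
recall_on_known = KNOWN_LENSES_FOUND / KNOWN_LENSES_TOTAL

# Naive, hypothetical full-sky scaling (uniform sky, same pipeline everywhere).
full_sky_to_inspect = NETWORK_CANDIDATES / SKY_FRACTION
full_sky_candidates = SURVIVING_CANDIDATES / SKY_FRACTION

print(f"candidates surviving human inspection: {survival_rate:.1%}")
print(f"known lenses recovered: {recall_on_known:.1%}")
print(f"naive full-sky images to inspect: {full_sky_to_inspect:,.0f}")
print(f"naive full-sky candidates: {full_sky_candidates:,.0f}")
```

Even this crude scaling suggests why human inspection alone does not keep up with survey-sized datasets.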

For Carlo Enrico Petrillo, the implications are clear:

“This is the first time a convolutional neural network has been used to find peculiar objects in an astronomical survey. I think it will become the norm since future astronomical surveys will produce an enormous quantity of data which will be necessary to inspect. We don’t have enough astronomers to cope with this.”

Exactly so. We’re accumulating data faster than we can analyze it, which is why advances in artificial intelligence are so telling. Training the network to recognize smaller lenses and to reject false ones is next on the team’s agenda. In the context of improving AI, bear in mind the recent major upgrade of Google’s AlphaGo to AlphaGo Zero, which defeated the version of AlphaGo that beat Lee Sedol 100 games to 0. The new version trained itself from scratch in three days.

Image: With the help of artificial intelligence, astronomers discovered 56 new gravitational lens candidates. In this picture are three of those candidates. Credit and Copyright: Carlo Enrico Petrillo (University of Groningen).

The paper is Petrillo et al., “Finding strong gravitational lenses in the Kilo Degree Survey with Convolutional Neural Networks,” Monthly Notices of the Royal Astronomical Society Vol. 472, Issue 1 (21 November 2017), pp. 1129-1150 (abstract). On AlphaGo Zero, see Silver et al., “Mastering the game of Go without human knowledge,” Nature 550 (19 October 2017), pp. 354-359 (abstract).

Machine learning used to predict earthquakes in a lab setting.

“The machine learning algorithm was able to identify a particular pattern in the sound, previously thought to be nothing more than noise, which occurs long before an earthquake. The characteristics of this sound pattern can be used to give a precise estimate (within a few percent) of the stress on the fault (that is, how much force is it under) and to estimate the time remaining before failure, which gets more and more precise as failure approaches.”

http://www.cam.ac.uk/research/news/machine-learning-used-to-predict-earthquakes-in-a-lab-setting
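A minimal sketch of the general idea: summarize the acoustic signal in windows (here only the variance, an assumed feature) and regress the time remaining before failure on that summary. The synthetic signal, window width and the use of ordinary least squares are all illustrative assumptions; the lab study used a more capable machine-learning model on real measurements.

```python
import random

def window_variance(signal, width):
    """Variance of each non-overlapping window of `width` samples."""
    features = []
    for start in range(0, len(signal) - width + 1, width):
        window = signal[start:start + width]
        mean = sum(window) / width
        features.append(sum((x - mean) ** 2 for x in window) / width)
    return features

def fit_line(xs, ys):
    """Ordinary least squares fit y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

# Synthetic "acoustic" record (hypothetical): noise whose amplitude grows as the
# fault approaches failure at sample N.
random.seed(0)
N, WIDTH = 10_000, 500
signal = [(1 + 4 * t / N) * random.gauss(0.0, 1.0) for t in range(N)]

variances = window_variance(signal, WIDTH)
time_left = [N - (k + 1) * WIDTH for k in range(len(variances))]  # samples until failure
a, b = fit_line(variances, time_left)

def predict_time_left(variance):
    """Estimated samples remaining before failure for a window with this variance."""
    return a + b * variance

print(predict_time_left(variances[0]), predict_time_left(variances[-1]))
```

A straight line is a poor fit here because variance grows quadratically with amplitude, which is one reason studies like this turn to more flexible models.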

I can think of many other large-scale disasters that machine learning could help with. This is where organizations involved in astronomy and space exploration could use these tools for the benefit of mankind, and in turn receive more support from governments and people around the world. Just think of the backing that projects like SETI, Starshot or exoplanet surveys would get if they used AI both to help find what they are looking for and to predict earthquakes and typhoons or find ways to reduce pollution, to name just a few.

Very good article by The Planetary Society on SETI, its history and what is in store in the near future.

Is there anybody out there?

http://www.planetary.org/blogs/jason-davis/2017/20171025-seti-anybody-out-there.html

Especially this section:

“Big data

In an era where the term “big data” dominates the technology landscape, many SETI scientists are eyeing improvements to the way telescope signals are stored and processed.

At Parkes, Breakthrough stores up to 100 times more data than a typical telescope user—down to individual voltage levels bouncing off the dish itself. The team currently stores a petabyte, or 1,000 terabytes, of those data. “But they quickly get through that,” said Jimi Green, the Parkes scientist.

New algorithms and machine learning could help rule out spurious signals while finding hidden ones scientists don’t know to look for. Pete Worden hopes to bring in help, perhaps on a volunteer basis, from unique places like the intelligence community.

“We’d really kind of like to get somebody that may work in the daytime for a three-letter agency, who goes home at night, downloads the data says ‘Hey, I found something interesting,'” Worden said.

About a year ago, the SETI Institute teamed up with IBM to start using machine learning to sift through the 54 terabytes of data per day the Allen Array captures. Bill Diamond said IBM was interested in placing the data in “a gymnasium for software” to test new computing algorithms. In return, the SETI Institute gets access to cloud computing resources and tools.

“It’s almost like building a new instrument, or building a new telescope,” Diamond said.”
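For a sense of scale, the quoted figures convert to sustained data rates as below. The decimal units (1 TB = 10^12 bytes, matching the "1,000 terabytes" per petabyte above) are an assumption, and the last quantity mixes two instruments purely hypothetically: it asks how long a stream at the Allen Array's quoted rate would take to fill a petabyte store like Breakthrough's at Parkes.

```python
TB = 1e12                 # bytes, decimal terabyte (assumed)
PB = 1000 * TB            # "a petabyte, or 1,000 terabytes"
SECONDS_PER_DAY = 86_400

ata_bytes_per_day = 54 * TB                      # Allen Array figure quoted above
bytes_per_second = ata_bytes_per_day / SECONDS_PER_DAY
gigabits_per_second = bytes_per_second * 8 / 1e9

# Hypothetical: days for a stream at the ATA rate to fill a 1 PB store.
days_to_fill_petabyte = PB / ata_bytes_per_day

print(f"{bytes_per_second / 1e6:.0f} MB/s, {gigabits_per_second:.1f} Gbit/s")
print(f"1 PB fills in about {days_to_fill_petabyte:.1f} days at that rate")
```

At roughly 5 Gbit/s around the clock, storage and retrieval rather than acquisition become the bottleneck, which is the point the excerpt makes.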

All-sky continuum surveys, such as those within the SKA’s capability, will yield transformational scientific discoveries. But the sheer volume of the data means we need to plan information architectures on an unprecedented scale, using infrastructure strategies suited to this novel scenario. Concepts that are already obsolescent, like ‘data warehouse’ and ‘cloud computing’, simply won’t do. This is an exciting time to be engaged in data analytics.

Amazing to think how many things are out there, waiting to be seen, that escape notice simply because there aren’t enough eyes on them. The potential for large-scale automated occultation studies, for instance, is immense.

Rather off-topic, but again there are such fascinating astronomical topics out there:

– The Dehydration of Water Worlds via Atmospheric Losses: water worlds around M-dwarfs may not stay wet very long;

http://iopscience.iop.org/article/10.3847/2041-8213/aa8a60/meta;jsessionid=3BA510E32A0B775DC6B708C2F23F437B.ip-10-40-2-120

Also: https://www.universetoday.com/137602/water-worlds-dont-stay-wet-very-long/

For exoplanets around M-type stars, the escape rates could be a thousand times greater or more than for planets around G-type stars. This means that even a water world orbiting a red dwarf could lose its atmosphere after about a gigayear.

– The Nature of the Giant Exomoon Candidate Kepler-1625 b-i: a Neptune-sized exomoon candidate orbiting a Jupiter-sized planet

https://arxiv.org/pdf/1710.06209.pdf

Is this a binary planet? Or a moon-planet combination? Or a Neptune-like gas planet orbiting a small brown dwarf?

Looking For Signs of Extraterrestrial Life Just Got a Whole Lot Easier.

These assumptions allowed the team to clearly see how changing the orbital distance and type of stellar radiation affected the amount of water vapour in the stratosphere. As Fujii explained in a NASA press release:

“Using a model that more realistically simulates atmospheric conditions, we discovered a new process that controls the habitability of exoplanets and will guide us in identifying candidates for further study… We found an important role for the type of radiation a star emits and the effect it has on the atmospheric circulation of an exoplanet in making the moist greenhouse state.”

Beyond offering astronomers a more comprehensive method for determining exoplanet habitability, this study is also good news for exoplanet-hunters hoping to find habitable planets around M-type stars.

Low-mass, ultra-cool M-type stars are the most common type of star in the Universe, accounting for roughly 75 percent of all stars in the Milky Way. Knowing that they could support habitable exoplanets greatly increases the odds of finding one.

In addition, this study is VERY good news given the recent spate of research that has cast serious doubt on the ability of M-type stars to host habitable planets.

https://www.sciencealert.com/signs-of-life-easier-model-water-exoplanets-3d-habitable-2017?perpetual=yes&limitstart=1

My idea of what could be happening on these planets, and why they should be a focus of missions such as TESS:

How many comets would enter these systems and collide with their planets? Take TRAPPIST-1 as an example: a miniature solar system with planets extremely close to their M dwarf star. If the comet orbits there are anything like those in our solar system, collisions would be much more likely, because the planets lie near the comets’ perihelia. The other factor is that all these planets revolve around their star at very high speeds, which would heighten the chance of collisions and also shorten the comets’ orbital periods, in turn increasing the chances of collisions with the other planets in the system. In conclusion, the chances for rehydration would be very high, and the impacts would also deliver more of the elements that are the building blocks of life. The resulting amino-acid-rich oceans would give advanced lifeforms a much better chance to develop.
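The "very high speed" point can be checked with the circular-orbit speed v = sqrt(GM/a) and Kepler's third law. The stellar mass (about 0.08 solar masses) and the innermost planet's orbital distance (about 0.011 AU) are approximate, assumed inputs rather than figures from this thread, and the sketch says nothing about comet impact rates themselves.

```python
import math

GM_SUN = 1.32712440018e20   # m^3 s^-2, solar gravitational parameter
AU = 1.495978707e11         # m
SECONDS_PER_DAY = 86_400.0

def circular_speed_km_s(star_mass_solar, a_au):
    """Speed of a circular orbit, v = sqrt(G M / a), in km/s."""
    return math.sqrt(GM_SUN * star_mass_solar / (a_au * AU)) / 1000.0

def period_days(star_mass_solar, a_au):
    """Kepler's third law, P = 2*pi*sqrt(a^3 / (G M)), in days."""
    a_m = a_au * AU
    return 2 * math.pi * math.sqrt(a_m ** 3 / (GM_SUN * star_mass_solar)) / SECONDS_PER_DAY

# Approximate, assumed values; check the current literature before relying on them.
TRAPPIST1_MASS = 0.08    # solar masses
TRAPPIST1B_A = 0.011     # AU, innermost planet

v_earth = circular_speed_km_s(1.0, 1.0)
v_b = circular_speed_km_s(TRAPPIST1_MASS, TRAPPIST1B_A)
p_b = period_days(TRAPPIST1_MASS, TRAPPIST1B_A)
print(f"Earth: {v_earth:.1f} km/s; TRAPPIST-1b: {v_b:.1f} km/s, period {p_b:.2f} days")
```

Under these assumptions the innermost planet moves at roughly 80 km/s, between two and three times Earth's orbital speed, on an orbit lasting about a day and a half.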

October 26, 2017 @ 05:38 AM

Is E.T. Artificially Encoding Stellar Pulsars?

Bruce Dorminey

Often dubbed the galaxy’s cosmic clocks, millisecond pulsars — the by-product of the fastest-rotating neutron stars — are well known for their seemingly ‘natural’ electromagnetic beams. But what if some of these beams at least aren’t wholly natural?

That’s one of the questions posed in a new paper appearing in the International Journal of Astrobiology which posits that perhaps at least some pulsars have been manipulated by highly-advanced extraterrestrial civilizations. The idea is that such E.T.s would either use them as navigation beacons to direct their own spacecraft, or for galactic communication, or both.

Full article here:

https://www.forbes.com/sites/brucedorminey/2017/10/26/is-e-t-artificially-encoding-stellar-pulsars/#51919c060ee3

The paper here:

https://arxiv.org/abs/1704.03316

https://arxiv.org/ftp/arxiv/papers/1704/1704.03316.pdf

To quote:

The authors of a 2014 paper that appeared in the journal New Astronomy even speculated that an advanced civilization might use a satellite in polar orbit around the neutron star to encode information onto the pulsar’s natural beam.

The idea is that they would simply intercept (and thereby encode) the beam in a non-random manner so that it would become an information-rich communications beacon. The authors further speculate that such a civilization might also choose to construct a scaffold around the rotating star and use physical structures placed at points where the pulsar emission intersects the scaffold in order to modulate (or again encode) the beam.

Vidal admits that it all sounds a bit far-fetched and relies on technology that is light years ahead of our own. But he notes that it would still be worth the effort to test his hypothesis that at least some pulsars might be examples of galactic engineering.

How best to differentiate between a pulsar that was wholly natural and one that had been manipulated?

By looking for anomalies that would deviate from purely astrophysical explanations of these objects, says Vidal. That would include anomalies in their population; galactic distribution; and evolution. Vidal also hopes that if aliens are using pulsars for communication or even advanced navigation, they would include identifiable space-time stamps in their pulses, which Vidal and colleagues would one day hope to decode.
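Intercepting the beam "in a non-random manner" amounts, in its simplest form, to on-off keying of a periodic carrier. A toy sketch under loudly hypothetical assumptions: every parameter (samples per rotation, pulse width, noise level) is invented, blocking is total, and the receiver already knows the spin period and pulse phase. It illustrates the encoding idea only and says nothing about whether any real pulsar is modulated.

```python
import random

# Hypothetical parameters for a toy pulse train (not real pulsar values).
SAMPLES_PER_ROTATION = 100   # time samples per spin period
PULSE_SAMPLES = 5            # samples while the beam sweeps past the observer

def modulate(bits):
    """On-off keying: keep the pulse for a 1 bit, block it entirely for a 0 bit."""
    series = []
    for bit in bits:
        for s in range(SAMPLES_PER_ROTATION):
            series.append(1.0 if (bit and s < PULSE_SAMPLES) else 0.0)
    return series

def demodulate(series, threshold=0.5):
    """Fold on the known period (pulse assumed at the start of each rotation)
    and compare the mean on-pulse flux with a threshold."""
    bits = []
    for start in range(0, len(series), SAMPLES_PER_ROTATION):
        on_pulse = series[start:start + PULSE_SAMPLES]
        bits.append(1 if sum(on_pulse) / len(on_pulse) > threshold else 0)
    return bits

random.seed(1)
MESSAGE = [1, 0, 1, 1, 0, 0, 1, 0]
received = [x + random.gauss(0.0, 0.1) for x in modulate(MESSAGE)]  # add receiver noise
print(demodulate(received))
```

In this picture, an "anomaly" would be a pattern of missing pulses that is statistically non-random; the hard part in practice is that pulsars are already known to skip pulses naturally.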