Ideas on interstellar propulsion are legion, from fusion drives to antimatter engines, beamed lightsails and deep space ramjets, not to mention Orion-class fusion-bomb devices. We’re starting to experiment with sails, though beaming energy to a space sail is still an unrealized, though near-term, project. But given the sheer range of concepts out there and the fact that almost all are at the earliest stages of research, how do we prioritize our work so as to move toward a true interstellar capability? Marc Millis, former head of NASA’s Breakthrough Propulsion Physics project and founder of the Tau Zero Foundation, has been delving into the question in new work for NASA. In the essay below, Marc describes a developing methodology for making decisions and allocating resources wisely.

by Marc G Millis

In February 2017, NASA awarded a grant to the Tau Zero Foundation to compare propulsion options for interstellar flight. To be clear, this is not about picking a mission and its technology – a common misconception – but rather about identifying which research paths might have the most leverage for increasing NASA’s ability to travel farther, faster, and with more capability.

The first report was completed in June 2018 and is now available on the NASA Technical Report Server, entitled “Breakthrough Propulsion Study: Assessing Interstellar Flight Challenges and Prospects.” (4MB file at: http://hdl.handle.net/2060/20180006480).

This report is about how to compare the diverse propulsion options in an equitable, revealing manner. Future plans include creating a database of the key aspects and issues of those options. Thereafter comparisons can be run to determine which of their research paths might be the most impactive and under what circumstances.

This study does not address technologies that are on the verge of fruition, like those being considered for a probe to reach 1000 AU with a 50 year flight time. Instead, this study is about the advancements needed to reach exoplanets, where the nearest is 270 times farther (Proxima Centauri b). These more ambitious concepts span different operating principles and levels of technological maturity, and their original mission assumptions are so different that equitable comparisons have been impossible.

Furthermore, all of these concepts require significant additional research before their performance predictions are ready for traditional trade studies. Right now their values are more akin to goals than specifications.

To make fair comparisons that are consistent with the varied and provisional information, the following tactics are used: (1) all propulsion concepts will be compared to the same mission profiles in addition to their original mission context; (2) the performance of the disparate propulsion methods will be quantified using common, fundamental measures; (3) the analysis methods will be consistent with the fidelity of the data; and (4) the figures of merit by which concepts will be judged will finally be explicit.

Regarding the figures of merit – this was one of the least specified details of prior interstellar studies. It is easy to understand why there are so many differing opinions about which concept is “best” when there are no common criteria with which to measure goodness. The criteria now include quantifiable factors spanning: (1) the value of the mission, (2) the time to complete the mission, and (3) the cost of the mission.

The value of a mission includes subjective criteria and objective values. The intent is to allow the subjective factors to be variables so that the user can see how their interests affect which technologies appear more valuable. One of those subjective judgments is the importance of the destination. For example, some might think that Proxima Centauri b is less interesting than the ‘Oumuamua object. Another subjective factor is motive. The prior dominant – and often implicit – figure of merit was “who can get there first.” While that has merit, it can only happen once. The full suite of motives continue beyond that first event, including gathering science about the destinations, accelerating technological progress, and ultimately, ensuring the survival of humanity.

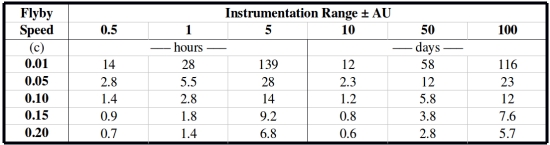

Examples of the objective factors include: (1) time within range of target; (2) closeness to target (better data fidelity); and (3) the amount of data acquired. A mission that gets closer to the destination, stays there longer, and sends back more data is more valuable. Virtually all mission concepts have been limited to fly-bys. Table 1 shows how long a probe would be within different ranges for different fly-by speeds. To shift attention toward improving capabilities, the added value (and difficulty) of slowing at the destination – and even entering orbit – will now be part of the comparisons.

Table 1: Time on target for different fly by speeds and instrumentation ranges
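Time-on-target values of this kind follow from simple geometry. As a minimal sketch, assuming a constant-speed, straight-line fly-by that passes directly through the target (so the path inside an instrument range R is a chord of length 2R), the dwell time can be estimated as below. The function name and the sample speeds and ranges are illustrative choices, not values taken from the report's table.

```python
AU_M = 1.495978707e11   # metres per astronomical unit
C_M_S = 299_792_458.0   # speed of light in m/s


def time_within_range_s(range_au: float, speed_fraction_c: float) -> float:
    """Seconds spent within `range_au` of the target.

    Assumes a constant-speed, straight-line pass through the target itself,
    so the distance covered inside the range is 2 * range.
    """
    chord_m = 2.0 * range_au * AU_M
    return chord_m / (speed_fraction_c * C_M_S)


if __name__ == "__main__":
    # Illustrative speeds (fraction of c) and instrument ranges (AU).
    for speed in (0.01, 0.1, 0.2):
        for range_au in (0.1, 1.0, 10.0):
            hours = time_within_range_s(range_au, speed) / 3600.0
            print(f"{speed:>5.2f} c, {range_au:>5.1f} AU: {hours:8.2f} hours")
```

Under these assumptions, a probe at 10% lightspeed spends under three hours within 1 AU of its target, which is why slowing down at the destination adds so much value.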

Quantifying the time to complete a mission involves more than just travel time. Now, instead of the completion point being when the probe arrives, it is defined as when its data arrive back at Earth. This shift is because the time needed to send the data back has a greater impact than often realized. For example, even though Breakthrough StarShot aims to get there the quickest, in just 22 years, that comes at the expense of making the spacecraft so small that it takes an additional 20 years to finish transmitting the data. Hence, the time from launch to data return is about a half century, comparable to other concepts (46 yrs = 22 trip + 4 signal + 20 to transmit data). The tradeoffs of using a larger payload with a faster data rate, but longer transit time, will be considered.
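The launch-to-data-return bookkeeping can be sketched in a few lines. The StarShot figures are the ones quoted above; the "larger probe" is a purely hypothetical comparison case with made-up numbers, included only to show how a slower trip with a faster downlink could come out about even.

```python
def years_to_data_return(trip_years: float,
                         signal_years: float,
                         transmit_years: float) -> float:
    """Total years from launch until the last of the data reaches Earth."""
    return trip_years + signal_years + transmit_years


# Figures quoted in the text for Breakthrough StarShot.
starshot = years_to_data_return(trip_years=22, signal_years=4, transmit_years=20)

# HYPOTHETICAL larger probe: numbers invented for illustration only.
larger_probe = years_to_data_return(trip_years=40, signal_years=4, transmit_years=1)

print(f"StarShot: {starshot} years from launch to data return")
print(f"Hypothetical larger probe: {larger_probe} years")
```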

Regarding the total time to complete the mission, the beginning point is now. The analysis includes considerations for the remaining research and the subsequent work to design and build the mission hardware. Further, the mission hardware, now by definition, includes its infrastructure. While the 1000 AU precursor missions do not need new infrastructure, most everything beyond that will.

Recall that the laser lightsail concepts of Robert Forward required a 26 TW laser, firing through a 1,000 km diameter Fresnel lens placed beyond Saturn (around 10 AU), aimed at a 1,000 km diameter sail with a mass of 800 tonnes. Project Daedalus envisioned needing 50,000 tonnes of helium-3 mined from the atmospheres of the gas giant planets. This not only requires the infrastructure for mining those propellants, but also processing and transporting that propellant to the assembly area of the spacecraft. Even the more modest Earth-based infrastructure of StarShot is beyond precedent. StarShot will require one million synchronized 100 kW lasers spread over an area of 1 km² to get it up to the required 100 GW.

While predicting these durations in the absolute sense is dubious (predicting what year concept A might be ready), it is easier to make relative predictions (if concept A will be ready before B) by applying the same predictive models to all concepts. For example, the infrastructure rates are considered proportional to the mass and energy required for the mission – where a smaller and less energetic probe is assumed to be ready sooner than a larger, energy-intensive probe.

The most difficult duration to estimate, even when relaxed to relative instead of absolute comparisons, is the pace of research. Provisional comparative methods have been outlined, but this is an area needing further attention. The reason that this must be included – even if difficult – is because the timescales for interstellar flight are comparable to breakthrough advancements.

The fastest mission concepts (from launch to data return) are 5 decades, even for StarShot (not including research and infrastructure). Compare this to the 7 decades it took to advance from the rocket equation to having astronauts on the moon (1903-1969), or the 6 decades to go from the discovery of radioactivity to having a nuclear power plant tied to the grid (1890-1950).

So, do you pursue a lesser technology that can be ready sooner, a revolutionary technology that will take longer, or both? For example, what if technology A is estimated to need just 10 more years of research, but 25 years to build its infrastructure, while option B is estimated to take 25 more years of research, but will require no infrastructure? In that case, if all other factors are equal, option B is quicker (25 years versus 35).
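The A-versus-B comparison can be written down directly. This is a minimal sketch that assumes research and infrastructure happen one after the other with no overlap, which is a simplification of mine rather than something the study specifies; the class and field names are likewise illustrative.

```python
from dataclasses import dataclass


@dataclass
class Concept:
    name: str
    research_years: float
    infrastructure_years: float

    def years_until_ready(self) -> float:
        # Assumption: infrastructure work starts only after research ends.
        return self.research_years + self.infrastructure_years


option_a = Concept("A", research_years=10, infrastructure_years=25)
option_b = Concept("B", research_years=25, infrastructure_years=0)

soonest = min((option_a, option_b), key=Concept.years_until_ready)
for concept in (option_a, option_b):
    print(f"Option {concept.name}: ready in {concept.years_until_ready()} years")
print(f"Ready first: option {soonest.name}")
```

Applying the same model to every concept is what makes the relative ranking meaningful even when the absolute dates are not.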

To measure the cost of missions, a more fundamental currency than dollars is used – energy. Energy is the most fundamental commodity of all physical transactions, and one whose values are not affected by debatable economic models. Again, this is anchoring the comparisons in relative, rather than the more difficult, absolute terms. The energy cost includes the aforementioned infrastructure creation plus the energy required for propulsion.

Comparing the divergent propulsion methods requires converting their method-specific measures to common factors. Laser-sail performance is typically stated in terms of beam power, beam divergence, etc. Rocket performance in terms of thrust, specific impulse, etc. And warp drives in terms of stress-energy-tensors, bubble thickness, etc. All these type-specific terms can be converted to the more fundamental and common measures of energy, mass, and time.

To make these conversions, the propulsion options are divided into 4 analysis groups, where the distinction is whether power is received from an external source or internally, and whether their reaction mass is onboard or external. Further, as a measure of propulsion efficiency (or in NASA parlance, “bang for buck”) the ratio of the kinetic energy imparted to the payload, to the total energy consumed by the propulsion method, can be compared.

The other reason that energy is used as the anchoring measure is that it is a dominant factor with interstellar flight. Naively, the greatest challenge is thought to be speed. The gap between the achieved speeds of chemical rockets and the target goal of 10% lightspeed is a factor of 400. But, increasing speed by a factor of 400 requires a minimum of 160,000 times more energy. That minimum only covers the kinetic energy of the payload, not the added energy for propulsion and inefficiencies. Hence, energy is a bigger deal than speed.
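The factor of 160,000 is just the square of 400, because kinetic energy scales with the square of speed. The sketch below checks that and, as an extra step not in the text, compares it with the relativistic kinetic energy at 10% lightspeed; the function names are illustrative.

```python
import math


def classical_energy_factor(speed_factor: float) -> float:
    """Kinetic energy grows as v**2, so the factor is speed_factor squared."""
    return speed_factor ** 2


def relativistic_energy_factor(beta_final: float, speed_factor: float) -> float:
    """Ratio of relativistic kinetic energies, (gamma - 1) * m * c**2,
    at beta_final versus beta_final / speed_factor (same mass)."""
    def gamma_minus_one(beta: float) -> float:
        return 1.0 / math.sqrt(1.0 - beta ** 2) - 1.0

    return gamma_minus_one(beta_final) / gamma_minus_one(beta_final / speed_factor)


print(classical_energy_factor(400))                 # 160000
print(round(relativistic_energy_factor(0.1, 400)))  # slightly above 160,000
```

At 10% lightspeed the relativistic correction is under one percent, so the classical minimum quoted above is a fair approximation.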

For an example, consider the 1-gram StarShot spacecraft traveling at 20% lightspeed. Just its kinetic energy is approximately 2 TJ. When calculating the propulsive energy in terms of the laser power and beam duration (100 GW for minutes), the required energy spans 18 to 66 TJ, for just a 1-gram probe. For comparison, the energy for a suite of 1,000 probes is roughly the same as 1-4 years of the total energy consumption of New York City (NYC @ 500 MW).
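These figures can be reproduced with a short calculation. The beam durations of 3 and 11 minutes are my inference from the quoted 18 and 66 TJ at 100 GW, since the text only says "minutes"; the function names are illustrative.

```python
import math

C_M_S = 299_792_458.0                 # speed of light in m/s
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def relativistic_kinetic_energy_j(mass_kg: float, beta: float) -> float:
    """Kinetic energy (gamma - 1) * m * c**2 at speed beta * c."""
    gamma = 1.0 / math.sqrt(1.0 - beta ** 2)
    return (gamma - 1.0) * mass_kg * C_M_S ** 2


def beam_energy_j(power_w: float, minutes: float) -> float:
    """Energy delivered by a constant-power beam over the given duration."""
    return power_w * minutes * 60.0


probe_ke = relativistic_kinetic_energy_j(mass_kg=0.001, beta=0.2)
low = beam_energy_j(100e9, minutes=3)    # assumed 3-minute beam
high = beam_energy_j(100e9, minutes=11)  # assumed 11-minute beam
nyc_year = 500e6 * SECONDS_PER_YEAR      # NYC at 500 MW, as quoted

print(f"Probe kinetic energy: {probe_ke / 1e12:.2f} TJ")
print(f"Beam energy per probe: {low / 1e12:.0f} to {high / 1e12:.0f} TJ")
print(f"Payload energy fraction: {probe_ke / high:.1%} to {probe_ke / low:.1%}")
print(f"1,000 probes: {1000 * low / nyc_year:.1f} to "
      f"{1000 * high / nyc_year:.1f} NYC-years")
```

The payload energy fraction printed here is the "bang for buck" ratio described earlier: kinetic energy imparted to the payload divided by the total energy consumed.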

Delivering more energy faster requires more power. By launching only 1 gram at a time, StarShot keeps the power requirement at 100 GW. If they launched the full suite of 1000 grams at once, that would require 1000 times more power (100 TW). Power is relevant to another under-addressed issue – the challenge of getting rid of excess heat. Hypothetically, if that 100 GW system has a 50% efficiency, that leaves 50 GW of heat to radiate. On Earth, with atmosphere and convection, that’s relatively easy. If it were a space-based laser, however, that gets far more dicey. To run fair comparisons, it is desired that each concept uses the same performance assumptions for their radiators.
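A rough sketch of the power and heat arithmetic follows. The power scaling and the 50% efficiency case come from the text. The radiator estimate is my own illustration: it assumes an ideal radiator in deep space obeying the Stefan-Boltzmann law, with a hypothetical emissivity of 0.9 and a hypothetical radiator temperature of 600 K, and it ignores absorbed sunlight. None of those radiator values come from the study.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def required_power_w(base_power_w: float, base_mass_g: float, mass_g: float) -> float:
    """Scale beam power linearly with launched mass (same acceleration)."""
    return base_power_w * mass_g / base_mass_g


def waste_heat_w(input_power_w: float, efficiency: float) -> float:
    """Power that ends up as heat rather than in the beam."""
    return input_power_w * (1.0 - efficiency)


def radiator_area_m2(heat_w: float, temperature_k: float,
                     emissivity: float = 0.9) -> float:
    """Radiating area needed to reject heat_w (hypothetical ideal radiator)."""
    return heat_w / (emissivity * SIGMA * temperature_k ** 4)


full_suite = required_power_w(100e9, base_mass_g=1, mass_g=1000)
heat = waste_heat_w(100e9, efficiency=0.5)
area = radiator_area_m2(heat, temperature_k=600.0)  # assumed 600 K radiator

print(f"Power for 1000 g at once: {full_suite / 1e12:.0f} TW")
print(f"Waste heat at 50% efficiency: {heat / 1e9:.0f} GW")
print(f"Radiating area under the stated assumptions: {area / 1e6:.1f} km^2")
```

Because the area depends on the fourth power of temperature, using one shared set of radiator assumptions across concepts matters a great deal for fair comparison.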

Knowing how to compare the options is one thing. The other need is knowing which problems to solve. In the general sense, the entire span of interstellar challenges has been distilled into this “top 10” list. It is too soon to rank these until after running some test cases:

- Communication – Reasonable data rates with minimum power and mass.

- Navigation – Aiming well from the start and acquiring the target upon arrival, with minimum power and mass. (The ratio of the distance traversed to a ½ AU closest approach is about a million).

- Maneuvering upon reaching the destination (at least attitude control to aim the science instruments, if not the added benefit of braking).

- Instrumentation – Measure what cannot be determined by astronomy, with minimum power and mass.

- High density and long-term energy storage for powering the probe after decades in flight, with minimum mass.

- Long duration and fully autonomous spacecraft operations (includes surviving the environment).

- Propulsion that can achieve 400 times the speed of chemical rockets.

- Energy production at least 160,000 times chemical rockets and the power capacity to enable that high-speed propulsion.

- Highly efficient energy conversion to minimize waste heat from that much power.

- Infrastructure creation in affordable, durable increments.

While those are the general challenges common to all interstellar missions, each propulsion option will have its own make-break issues and associated research goals. At this stage, none of the ideas are ready for mission trade studies. All require further research, but which of those research paths might be the most impactive, and under what circumstances? It is important to repeat that this study is not about picking “one solution” for a mission. Instead, it is a process for continually making the most impactive advances that will not only enable that first mission, but the continually improving missions after that.

Ad astra incrementis.

I am very happy to see communications in the criteria. Consider that a massive spacecraft with a large onboard power source is taking many months to return science data from as close as the Kuiper Belt. With a spacecraft weight measured in grams it will be a Herculean effort to send just one bit of information (“Hi, I’m here!”) back to us.

Communications and the related challenges of data acquisition in the extremely short fly-by durations deserve close consideration. Magnetic sails may prove incapable of significant spacecraft deceleration, but they could be a potent lightweight power source for the spacecraft to address these challenges. Large foil optics would also help.

“To be clear, this is not about picking a mission and its technology – a common misconception – but rather about identifying which research paths might have the most leverage for increasing NASA’s ability to travel farther, faster, and with more capability.”

In my opinion this all depends on and boils down to whether or not one wants to deal with near-term technology and obtaining results, damn the political correctness in the process. Seemingly, if you’re talking about getting to a velocity of 0.1 c, and you wish to REALLY achieve this result, then I guess your best bet would be to go with an Orion-class fusion-bomb starship.

You get simplicity, established technology, an energy-dense fuel, an exposed combustion chamber (extremely important) that would reduce the need for extensive radiators and cooling systems, fuel that is relatively less expensive than most alternatives that are further off in the future, and fuel that does not require any special storage equipment or auxiliary systems to maintain it in an appropriate physical state.

I cannot see any reason, if one is serious about actually achieving a tenth of the speed of light, that this would not be preferable to anything that is likely to come about, I would even say, in the next 50 years.

What is not a research problem, of course, is the question of how people will feign outrage that there would be thousands of nuclear bombs being sequestered on some massive starship and how this could be turned into some type of weapon of mass destruction, and how we would pollute space and so on and so on and so on. The usual suspects, as it were. I personally find the arguments quite tiresome and again, it depends on whether or not you have a level of trust that some nation-states are not going to do in somebody else. But the world being the way it seemingly is, there would be excessive handwringing in my opinion, and that alone would probably be enough to bring it to a swift close.

But I’m arguing from the standpoint of what I believe is actually and realistically attainable within a few decades: something that could propel a probe of significant mass and sophistication and bring us within, say, 60 years of travel time to some appropriate star system. It has the added advantage that the fuel, being as dense as it is, can probably permit reasonable deceleration (perhaps with gravity assist) to actually brake into the star system and provide what I would think would be decades of observational scientific data. Right off the top of my head, such a probe would provide an enormous baseline to perform astrometric distance determinations to other more distant stars and galaxies.

“how people will feign outrage”

Because if people disagree with me, they must be pretending.

I think there are some serious issues to deal with when “there would be thousands of nuclear bombs being sequestered on some massive starship” Charlie. It’s not just an engineering problem to solve. I assume you are talking about launching it from the surface of the Earth? And what happens if the ship fails during launch and crashes? How much radioactive material is scattered over what area? Or if the ship achieves orbit how much radioactive material is left in the atmosphere? So possibly the ship should be built in orbit and how much would that cost? There are a huge number of issues to deal with here.

Sorry that should be Charley. My spelling was always atrocious.

@Gary Wilson,

thank you for the respectful inquiry on my behalf. In truth, I had not thought about the details concerning the assembly of many fissionable nuclear bombs.

There are many, many ways to approach this problem and I’m not sure most of the audience here is aware of the fact that there were a few nuclear tests in the 1950s, which were in the Pacific and were devoted to producing relatively ‘clean nuclear explosives’.

Now these clean bombs, if you will, were not totally free of radioactivity, and the goal of nuclear weapons development was to obtain the most explosive power for the least weight and size. That’s not the problem here.

Personally, if I had my druthers I would use as clean a nuclear device as possible. My understanding is that these rely upon mildly radioactive to nonradioactive fuels, namely lithium and variants of hydrogen. I could imagine that such materials could be sent into space in relative safety and, even in the event of an accident, would probably not pose much of a radiological hazard.

The triggers for these devices would be uranium and/or plutonium, and I assume that here only the most stringent methods would be used to loft these items into space in dribs and drabs, so that the consequences of any accident would be minimal. The actual assembly of the final devices would possibly be achieved in orbit about some other celestial body, and I was recently thinking that if you were really worried about radioactivity you might actually begin acceleration of the ship with these devices outside the orbit of Pluto, for example.

And then you could begin the deceleration maybe one half to a quarter of a light year away from your destination star system (i.e. you would come in slow), perhaps topping it off with a gravity assist at the destination star. It should be apparent that any number of combinations and possibilities could be used to achieve this.

If one is actually SUPER WORRIED over the matter of fissile nuclear materials, then perhaps setting up uranium centrifuges on other worlds, where local uranium deposits could be mined, would provide the fissile bomb cores for your nuclear pulse devices.

Obviously cost comes into this, and if I had to make an outlandish guess based upon what I said in the above paragraphs I would say anywhere in the neighborhood of 500 billion to perhaps 2 trillion dollars would probably not be too far out of line. These are just total guesses, of course, and it all depends upon inflation and an endless number of other factors for which somebody might be able to give some baseline numbers. But everybody already understands that it’s purely guesswork.

I just want to say that the question of cost is, of course, an obvious one, with regards to all the caveats that you stated above. It’s just that the question asked (by Marc G Millis) at the very beginning was what appears to be a reasonable approach and thread that can actually be achieved without stretching the technology into a realm we don’t have. And don’t forget also that all these other technologies that are being looked at also have questions that revolve around safety and totally uncharacterized costs. How much would a trillion-watt laser cost? Antimatter, same question. And you haven’t even begun to factor in the research costs that would be sunk into programs in which there would be an untold number of unknowns at this point.

In short, the question is what is achievable and would probably permit a ship to be sent on its way within the next 50 years, versus, say, a century from now after all the research, decision-making, government sign-on, negotiating, and all the other endless details that would need to be hashed out.

Thanks for that clarification Charley. I guess the next main step would be to get commitment by various nations to spending 500 billion to 2 trillion dollars on this project. That may be a great challenge indeed given the other problems/commitments that most nations already have.

@Paul451

Exactly !!

Likely to happen once we have viable space colonies with some degree of practical independence. Given that they’re going to already be living in an environment where radiation protection is unavoidable, and be forced by life on the edge to be more practical, they’re not going to have that paranoia about all things nuclear. The technical merits will be everything.

I think we need to move forward with the good because who knows if we can get to the perfect. If StarShot gets through the feasibility stage it seems it will be the way to go. The technology boom of 1870 to 1970 was unique. The only other near-term option is Project Orion (the nukes, not the current manned program). Though they will have to build a nuclear plant to fuel those lasers.

Isn’t that 160,000 rather than 1600?

Yes, and although several readers noticed it (thanks, RonS!), I couldn’t get it changed before the email version went out with the wrong figure. Sorry, but it’s now fixed on the site.

A wonderful effort to systematically analyze and compare potential paths forward Dr. Millis. Well done!

While data return is the essential feature of exploration, such exploration may be an aspect of a larger endeavour, an obvious consideration being subsequent human travel.

Progressively extending exploration may increase the separation in space and time between the leading probes and the leading humans, to the point where it may be best to resort to von Neumann probes.

A very interesting report! Nevertheless, I want to suggest an improvement. In the energy/cost estimation, I think it’s a good idea to use energy as the ‘currency’ to estimate cost. But I think there is no need to divide propulsion methods in four categories and have different figures of merit for each of them instead of simply estimating the energy consumption of each of them.

Also, care must be taken to not include the wrong energy in the computation.

For example, in a space-based laser using solar energy, the actual energy produced by the laser must not be accounted for: it comes from the Sun and it’s free. What must be calculated is the energy needed to build, launch and maintain the laser (and the ship).

In the same vein, in a D-D fusion rocket, the actual energy produced by the deuterium must not be accounted for, only the energy needed for obtaining and processing the deuterium, launching it to LEO, launching the reactor, etc. This is what costs us money, not the energy actually produced by the fusion during the travel.

Using that energy calculation, cost estimation can be unified, I think.

Clarification: the four categories refer to the four different computational methods to convert unique performance terms into the common measures of energy, time, and mass.

Ah, OK. Then I didn’t understand it correctly.

We could greatly reduce the capital and equipment by getting the laser to multi-bounce off the sail. We could potentially achieve this by having a toroidal balloon with a highly reflective mirror and stable platform floating high in the atmosphere, or even perhaps a very high tower. The laser array then shines on the sail and is reflected back and forth between the sail and mirror. Each bounce reduces the costs of the whole system; if it bounces 20 times that’s about a 20-fold reduction in scale, which is huge.

You will get absolute divergence of the beam. Irreducible, un-refocusable divergence. That will result in the beam widening with every reflection, regardless of the shape of the mirrors. That’s why Forward’s design has the beam-to-lens distance of 10AU for a 1000km lens, anything less would spread out too much by the time it reached the ship.

And in the case of that beam, once the ship is at 0.01 lightyear, the return beam will have spread out 60 times the original beam-width. The energy per 1000km reflector reduced to about 1/4000th of the original energy. That’s one bounce. You lose the same amount again by the time it returns to the ship for the second push. By the fourth push, you’ve reduced the original 26TW beam to just 1 watt.

Even if you could get the beam so tightly focused that it merely doubled in size on each bounce, you only return 6% to the ship for the second push. A third of 1% on the next push. And so on. His original 26TW beam is reduced to a watt by the time it returns to the ship 10 times.
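The "doubling on each bounce" arithmetic can be sketched as a simple geometric decay. The model below is an illustration under assumptions of my own: the mirror and the sail are the same size, both reflect perfectly, the beam is uniform, and its width doubles on every leg, so each leg captures one quarter of the power and each round trip one sixteenth.

```python
def power_at_push(initial_power_w: float,
                  width_growth_per_leg: float,
                  push_number: int) -> float:
    """Beam power reaching the ship on the given push (1 = first push).

    Each return to the ship takes two legs (ship to mirror, mirror to ship).
    On every leg the beam width grows by `width_growth_per_leg`, so the
    captured fraction per leg is 1 / width_growth_per_leg**2.
    """
    legs = 2 * (push_number - 1)
    capture_per_leg = 1.0 / width_growth_per_leg ** 2
    return initial_power_w * capture_per_leg ** legs


initial = 26e12  # Forward's 26 TW beam, as quoted above
for push in (1, 2, 3, 11, 12):
    watts = power_at_push(initial, width_growth_per_leg=2.0, push_number=push)
    print(f"push {push:>2}: {watts:.3g} W ({watts / initial:.2e} of original)")
```

In this model the second push receives 6.25% of the original power and the third about 0.4%, matching the figures above. After ten returns roughly 24 W remain and after eleven about 1.5 W, the same order of magnitude as the one watt quoted.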

If your proposal worked, it means we could extract essentially unlimited propulsive energy from any arbitrary laser source. Laser too weak, no problem, just bounce it more. We’d be using it for _everything_.

The acceleration phase is only around 2.5 million km at 60,000 g. Focusing is less of a problem in the beginning, so the number of bounces can be very high, and energy loss will be small with a very good mirror (0.9999 reflectivity). I also believe we can accelerate it at higher g forces, in the hundreds of thousands.

To complicate matters the laser frequency would drop with every bounce off the spacecraft as photon energy is converted to spacecraft kinetic energy. This idea may be better for slowing down an incoming spacecraft, but that is a problem for a much later era.

Due to the conversion efficiency of photon to kinetic energy the wavelength changes only by a small amount. I think the ratio is around 5000 to 1 depending on the velocity of craft.

As for using the laser to slow down objects we could use it on the moon greatly reducing energy losses of spacecraft moving between the earth and moon and landing. A spacecraft coming from the earth or from the moon has a lot of energy with it.

The concept of multiple bounces works over short distances such as a few km or less. It’s been experimentally proven by Y.K. Bae.

https://en.m.wikipedia.org/wiki/Photonic_laser_thruster

With a laser 1 km is as good as 10000 km. Imagine a balloon with the mirror system and the lasers on the ground. Recycling of photons shows great promise and the nice thing about the balloon system is sails can be launched from earth directly.

Forgot attachment.

https://pdfs.semanticscholar.org/719f/ff451031a935d3c914182608f8f284ff492a.pdf

Provided that some sort of “new physics” breakthrough doesn’t occur (quantized inertia drives for example), the most feasible solution seems to be the combination of beamed energy propulsion for accelerating and (anti-matter catalyzed) fusion drives for slowing down. It is very sad but unless some shocking new developments happen, the practical applications of these technologies are 100+ years in the future. If we’re lucky we get to witness working fusion reactors and humans landing on Mars, but interstellar probes are a nut for our grandchildren to crack.

Which doesn’t mean we shouldn’t actively already be working on solving these problems now.

I’m not sure I understand what the major competitor(s) to the Breakthrough Starshot system are for attempting to send a probe to nearby stars in relatively short voyage times. The key features are extremely lightweight payloads and the ability to deliver very large amounts of energy to the sail to achieve a speed equivalent to a significant fraction of the speed of light. All other systems will take hundreds or thousands of years to allow much larger probes to travel the same distance. Can someone explain what I’m missing? For example, fusion engines and other types of onboard propulsion systems will be large of necessity, surely?

Also surely you aren’t suggesting we will ever build 1 million synchronized 100 kW lasers to drive StarShot? I assume you are just laying out the scale of the project. Would we not have to build 100 lasers of 1 GW each, or something of that magnitude? And I can’t see that realistically happening unless the cost of power to drive the lasers is greatly reduced (surely we will never have the energy of 1-4 years of New York available for this one project). I assume if we somehow stabilize our climate and reduce the population load on the Earth’s ecosystems we will have some money and resources to spend on these types of projects, but it will have to become vastly less expensive in terms of energy to even think we are going to actually bring them to fruition. People thought we were going to send humans to Mars within a couple of decades of the lunar landings (as far as I understand it, von Braun built Saturn V to actually go to Mars). Similarly the StarShot project may never happen or may happen many scores of years in the future, but it is amazing to read about the technical and engineering problems that need to be overcome.

Yes, the energy cost for any interstellar probe or journey is so high that it alone makes the cost very high. The engineering, and the teams who would follow, monitor, and later receive the results, are a margin note by comparison.

I find it a bit sad that negative mass gets mentioned; if that were possible we could also do time travel. And as far as my logic tells me, that would make it impossible as soon as the attempt is made.

Ergo: No negative mass is possible at all, as long as we’re in this universe.

I fully agree that we must face the environmental crisis first, else we never will have the means to do any of these wonderful projects.

We who are in biology research predict a mass extinction; please take this threat seriously.

We are already in a mass extinction period for most species. The question is whether this will extend to humans, or can we avert it, albeit with mass starvation in some parts of the globe accompanied by violence on a huge scale.

Sadly, our civilization is not taking it seriously enough. We are probably seeing on a global level the same sort of behaviors that saw off more isolated civilizations in the past.

And I just saw this BBC Future article:

Are we on the road to civilization collapse?

It’s good to see some other people taking the present climate crisis seriously. I’m not surprised to find it on here. The word needs to spread rapidly now from science based thinkers to the rest of the population including the media, many of whom are still treating the subject as if it were some vast joke or hoax. Every day demonstrates it is no hoax. Extreme weather events are now occurring frequently all around the globe. They will continue to increase in severity and frequency and erode our ability to do other things as we fight to repair and replace the damage. I still have hope large scale action will be taken by governments but time is running out.

I agree with Gary. I would love to have fusion and antimatter, but a small-sized beam for a flyby is about all I see, and about all I think we could get funded by NASA, ESA and Japan. The only other option is Project Orion, and it is a little slower.

If we look at work by Dr Jordin Kare and we approach an acceleration limit of the sail material (not its static limit, which is much, much higher) of 33 000 000 g, the acceleration distance is around 6000 km. This 6000 km acceleration distance is ‘fairly’ well within a 10 metre reflector’s diffraction limit. Increasing the g exposure and using the multi-bounce principle reduces the amount of energy required but does increase the required laser power and complexity; it may be a good trade-off. Another advantage of using the mirror high up is lower atmospheric losses, which reduces the cost of the whole system a fair amount.
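A rough check of those numbers can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the target speed of 0.2c and the 1 micron laser wavelength are not given in the comment, the kinematics are non-relativistic (a few percent off at 0.2c), and the spot size uses the standard Airy-disk estimate.

```python
G0 = 9.80665        # standard gravity, m/s^2
C = 299_792_458.0   # speed of light, m/s

def acceleration_distance(v_final, accel):
    """Distance needed to reach v_final from rest at constant acceleration
    (non-relativistic approximation)."""
    return v_final ** 2 / (2.0 * accel)

def airy_spot_diameter(wavelength, distance, aperture):
    """Diameter of the central Airy disk (to the first dark ring) at the
    given distance from a circular aperture."""
    return 2.44 * wavelength * distance / aperture

accel = 33_000_000 * G0                        # acceleration limit quoted above
d = acceleration_distance(0.2 * C, accel)      # ASSUMED target speed: 0.2c
spot = airy_spot_diameter(1.0e-6, d, 10.0)     # ASSUMED wavelength: 1 micron; 10 m reflector

print(f"acceleration distance: {d / 1000:.0f} km")
print(f"diffraction-limited spot diameter: {spot:.2f} m")
```

Under these assumptions the distance comes out in the mid-5000 km range, in line with the "around 6000 km" figure, and the beam spot at that range is on the order of a metre.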

http://www.niac.usra.edu/files/studies/final_report/597Kare.pdf

Thank you for the reference Michael. The Kare paper is a lot to get through for a biologist :). I’m not sure I understand what the acceleration forces are on the payload? Total acceleration time is 8.5 years from an initial relative velocity of zero to a final velocity of 0.1c. What range of accelerations does that produce and wouldn’t most of the acceleration be produced early in the acceleration phase when the distance for the microsails to travel is small and the frequency of sail strikes per unit time is at its highest? Is this correct?

In Dr Kare’s design he was going to use sails as kinetic energy objects to move a more massive spacecraft. The acceleration of the payload is quite small; it’s the projectiles that are accelerating at high rates, and there are a lot of them. His work also points out that the materials can handle very high accelerations, which we can use for spacecraft with nanotechnology architecture. If we look at the scaling factors, accelerating a sail at very high values can help the design significantly. For instance, if I double the acceleration I halve the distance and double my power requirements, but because I have halved my diffraction-limited spot size I only need a quarter of the mass of sail to intercept the laser light. So in real terms my power requirements are halved. Now if I add multi-bounce, the benefits become much larger than with none at all; in fact the whole system reduces substantially. A great aid of the balloon multi-bounce design is solar gravolens missions at, say, 300 km/s, as they become very attractive and we can send tens of thousands out for the cost of one StarShot.
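The scaling argument can be written out as a small sketch. The assumptions here are mine, for illustration only: fixed final speed, fixed laser aperture and wavelength, a sail sized to the diffraction spot at the end of the acceleration run, sail mass proportional to sail area, and photon thrust proportional to beam power. Multi-bounce gains are not modelled.

```python
def scale_with_acceleration(k):
    """Relative change in key quantities when sail acceleration is multiplied by k.

    All values are ratios to the baseline design (1.0 means unchanged).
    """
    distance = 1.0 / k              # d = v^2 / (2a) at fixed final speed
    spot_diameter = distance        # diffraction spot grows linearly with range
    sail_mass = spot_diameter ** 2  # sail area, and so mass, goes as diameter squared
    force = sail_mass * k           # F = m * a
    power = force                   # beam power is proportional to photon thrust
    return {
        "distance": distance,
        "spot_diameter": spot_diameter,
        "sail_mass": sail_mass,
        "power": power,
    }

print(scale_with_acceleration(2.0))
```

With `k = 2` the sketch returns half the distance, a quarter of the sail mass and half the power, which is the "double, then quarter, so halved overall" chain described above.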

Thanks again Michael. I did understand that the microsails are under enormous acceleration to provide the impact momentum. I just wondered how fast the acceleration of the payload occurred in the early stages. From what I read there is concern that these microsails might not retain their shape during their travel time to impact. One of many things to test. Overall it seems like a very promising concept. Somehow I don’t think we’ll ever see large starships driven by nuclear explosions ala Project Orion. That was a sixties approach akin to inserting thumb tacks into a cork board with a jackhammer. The new ideas of light sails driven in various ways should clearly win out in the next 50-100 years.

There is the micro fission craft where a small hollow sphere of say plutonium is collapsed under a powerful laser to undergo partial or completed fission.

Perhaps I should have emphasized what kind of work will ultimately be ranked for selection. Several comments appear to think that the debate is still about which propulsion idea is best. Instead, the selection will be on the next research steps.

To advance, all concepts will need a work plan and that plan has to have a next step. This grant is to help NASA pick which of those next-steps to fund. I’m guessing that, similar to NIAC, NASA will seek a suite of short-term tasks to further explore more than one possibility.

Thus, I want to shift the discussions beyond “which idea is best?” to “what work has to commence, next, to make progress on each of those ideas?”

So, if you had half a $mil or so for a couple of years, what critical make-break research would you propose? What actual problem-solving research is needed next on each idea?

@Marc Millis,

at the risk of sounding braggy, I knew all the time what you were asking for. The first step has to be what ideas should be scoped out and researched as purely ideas that could be pragmatically implemented.

At the risk of sounding like I’m speaking for everybody else, I think the reason why propulsion was being singled out was simply due to the fact that if you can’t go to where you want to go, however you want to go about achieving that, then everything else becomes superfluous. So I think that’s why people were emphasizing propulsion.

When you say as to “what work has to commence, next, to make progress on each of those ideas?”; Then my personal temptation is to say to you, well, if this is actually a serious attempt to BEGIN some program which will actually be implemented into reality and into actual hardware, then, if you are going to approach NASA with these ideas, then I would say the only realistic “work” that needs to be accomplished falls into two (and only) categories:

1.) Politics (in brief): what will be your (your here meaning your people, organization, advocacy group, etc.) approach to the nation’s government and perhaps outside governments, the UN, individual agencies, private and public, philanthropists, and the like? Because unless you establish some type of political machinery to back this and have advocacy on your behalf, you are as good as dead.

2.) The other part is to have, in my opinion, competent research scientists who will be willing to part with some of what they’re working on and willing to align themselves with your goals to begin putting out a broad research proposal on their particular vision behind interstellar travel.

These just seem reasonable to me because without the politics (i.e. the money) and the goals spelled out you’ll get nowhere.

Charley,

The challenges you bring up will come into play -after- one of the propulsion concepts has proven itself ready. Right now all need more substantiation via experimental research. Once a concept is indeed ready for implementation, then those other activities that you cite can commence. By then, that data on costs and benefits will drive those discussions and decisions.

And… Remember that this is not just a 1-mission ambition. It is entirely reasonable to expect better ideas to emerge and mature, suitable for subsequent missions. To keep the pipe line of advancements flowing, it is prudent to dabble in more than one idea at a time.

The good news is that some research can commence now. Many of those ideas pertain to nearer-term, easier applications for which there is already funding.

For the vastly more difficult interstellar flight, I’ll make an analogy to the era of sailing ships (thank you Paul Gilster for the analogy): We are at the stage of planting the trees from which the wood will be harvested in the future to build sailing ships. We’re asking now what wood to plant and where, to see which wood turns out easy to grow and good enough to use. Then, once we’ve done a bit of test harvests, we can grow the right wood and design the ships we need. And in that process we will find other cool things to do with that new wood. This isn’t just about getting there. This is about making the future more capable, better.

I see where you’re coming from…

I suppose that if you are talking about half a million dollars to cast about and attempt to winnow down a winning strategy to get you to some star system, based on the criteria that you seem to want to restrain yourself to, then I would have to say that an approach which maximizes information return while attempting to reduce all other quantities (i.e. heat rejection, navigation, mass, etc.) will probably have to be predicated on the idea that most closely follows a vehicle with large computational systems aboard, sensor systems, and correctable navigation systems.

I cannot see how a small gram-sized payload can yield any advantages that a larger ship can’t overcome. Less massive ships that have no capability of doing anything but a flyby seem to nullify any time-saving you may have by rapid transit. There seems to be an assumption that your system will be as perfectly intact when it arrives as it was when it departed. That, I find, is a dangerous assumption. Vast numbers of the sprites do not, in my opinion, permit any more effective data collection than larger vessels. Guidance control is effectively lost once the laser beam cuts out. I don’t see any advantage to laser-driven beam sails; you exchange time for less mass, with no real navigational and guidance capabilities, a lack of redundancy, and robustness of a questionable nature given the interstellar medium. Finally, there is the real question: is the information per unit mass really worth the energy to be expended? I would say no, and it seems more of a parlor trick to pursue this than to pursue more conventional craft. I would say that, for these reasons and others which I undoubtedly have not thought of, these would not be fruitful avenues to investigate.

With regard to potential future developments that rely upon completely theoretical investigations, I would say pursue those, but at a much deferred cost compared to planting your seeds, as you have alluded to, in wood types that have shown themselves to be dependable. I hope that contrasts well with your list that is shown below:

Communication – Reasonable data rates with minimum power and mass.

Guidance and Navigation – Aiming well from the start and acquiring the target upon arrival, with minimum power and mass. (The ratio of the distance traversed to a ½ AU closest approach is about a million).

Maneuvering upon reaching the destination (at least attitude control to aim the science instruments, if not the added benefit of braking).

Instrumentation – Measure what cannot be determined by astronomy, with minimum power and mass.

High density and long-term energy storage for powering the probe after decades in flight, with minimum mass.

Long duration and fully autonomous spacecraft operations (includes surviving the environment).

Propulsion that can achieve 400 times the speed of chemical rockets.

Energy production at least 160,000 times chemical rockets and the power capacity to enable that high-speed propulsion.

Highly efficient energy conversion to minimize waste heat from that much power.

Infrastructure creation in affordable, durable increments
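The 400 and 160,000 figures in the list are tied together by kinetic energy growing with the square of speed. A minimal sketch, assuming the non-relativistic formula and equal vehicle mass in both cases (the function name is just for illustration):

```python
def kinetic_energy_ratio(speed_ratio):
    """Ratio of kinetic energies for the same mass at two speeds.

    Uses the non-relativistic KE = 1/2 * m * v^2, so the mass and the
    factor of 1/2 cancel and only the speed ratio squared remains.
    """
    return speed_ratio ** 2

print(kinetic_energy_ratio(400))  # 400 times the speed needs 160000 times the energy
```

This is why the energy item on the list is so much steeper than the speed item: every further doubling of speed quadruples the energy that must be produced, converted and shed as waste heat.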

We are dreamers after all and this site allows us to realise those dreams.

Thanks Paul

Why thank you, Michael. Much appreciated indeed!

My half a million dollars would be spent on how hard can we hit a thin membrane with a suitable laser. My thoughts are we can hit it very hard and accelerate it very fast. By increasing the acceleration of the membrane we reduce the whole system costs significantly.

That clarifies it a great deal Dr. Millis. I think a few of us have been trying to guess/predict which propulsion scheme will “win out”. It will be very interesting to see what kinds of proposals the groups who represent different propulsion methods put out. Have you a clear idea of when the next report will come out from the Tau Zero Foundation to summarize the next steps various groups propose?

Gary,

Thank you for asking. Your question about the grant’s next steps is difficult to answer at the moment. First, it took until October 2018 for the 2nd year funds to become available. Thereafter I ran into ‘administrative differences’ that led me to resign from Tau Zero in November. NASA and I are discussing options for how to resume the work (then interrupted by the gov’t shutdown). It’s a work in progress. Meanwhile, the next workshop about concepts is in November in Wichita. I’m involved with that too.

I hope things go well with whatever new arrangements you make Dr. Millis. We need your expertise to continue to be applied along with the many other experts involved.

I still like my idea to use the magnetic reconnection in the Earth’s magnetotail to accelerate an electron-beamed sail. Solar Max is in 2024, and this could be done quickly (just a CubeSat) and for a very cheap price. Has something like this even been considered?

Waiting For The Next Sunspot Cycle: 2019-2030.

https://www.huffingtonpost.com/dr-sten-odenwald/waiting-for-the-next-suns_b_11812282.html

As for the flight energy, perhaps angling the sail in the shape of a taco, edge-on, will cause interstellar ions to bounce via low-angle reflection into a point of concentration for heating, or into an energy converter, perhaps an edge-on coil. This could be tested in a low-cost lab.

My two cents.

It’s clear to me that the only technology that’s actually feasible for interstellar travel is Woodward’s Mach Effect Thruster, if it works, since all other breakthrough ideas are unworkable by known technology yet the Mach Effect is being developed as we speak.

Everything else requires way too much energy, hardware or time.

1. “if it works”

2. “is being developed”

Both statements rest uneasily in the same sentence.

I was just being conservative. It does seem to work but it might turn out not to be scalable to the necessary practical levels.

That’s why I devoted 15 years to it. Not everything works out.

Are you saying you’re done with it? Are you pessimistic of the current work of Woodward, Fearn and associates?

I (and others) are highly pessimistic since all results of which we are aware have been either null (and generally unpublished) or have used suspect experimental protocols. This includes, in hindsight, my own published work.

Furthermore, the mathematics of the theory is flawed and points to a perpetual motion machine of the first kind, which violates either energy conservation or special relativity.

Thanks for sharing your perspective. I’m deeply disappointed. That was my primary hope for practical interstellar travel, but I do have another.

It’s great that you are doing this study, and keep up the good work. There are, however, two misconceptions about StarShot, and I would like to avoid these getting spread widely:

“By launching only 1 gm at a time, StarShot keeps the power requirement at 100 GW. If they launched the full suite of 1000 grams at once, that would require 1000 times more power (100 TW).”

In fact, it is perfectly reasonable to keep the launch power constant and increase the probe mass. The consequence is that the probe speed will decrease as the inverse fourth root of the mass, as Philip Lubin showed. This point is relevant to my second comment:

“For example, even though Breakthrough StarShot aims to get there the quickest, in just 22 years, that comes at the expense of making the spacecraft so small that it takes an additional 20 years to finish transmitting the data. ”

This is in fact NOT the StarShot concept. Please see Figure 2 of our paper on StarShot communications at arxiv.org/abs/1801.07778. We show there the data latency vs data volume for a multiplicity of probe masses, with an optimum tradeoff indicated by the dashed line. Note that at the optimum points, the transmission time is always relatively small (much much less than the 20 years you cite), and most of the data latency is due to the probe transit time. If you find yourself transmitting for longer than a few years, then the plot shows that you would be better off increasing the probe mass (the figure assumes that the data rate increases as mass squared), thereby increasing transit time due to slower speed (see above), and transmitting data for a shorter period.

In the end this is consistent with your basic point, which is that it may be better to transit more slowly in order to transmit the data back more quickly. Both transit time and data transmission time have to be taken into account to find the best mission scenario. All this can be accomplished within the StarShot system concept while keeping launch power constant.

David,

Thank you for commenting and providing a link to more info. This is certainly a complex topic where it is hard to cite an illustrative value when so many trades and options remain open.

First, to make sure I’m using representative numbers, what is a reasonable estimate for the time to transmit the data (and what amount of data) for something like a StarShot scenario (similar mass, power, aperture, etc.)? I pulled the 20 year value from your 2017 TVIW presentation. Did I hear or interpret that incorrectly? Also, in my hasty look at figure 2 of the cited paper, it appears that the lowest value of data latency is 25 years. Am I interpreting that chart correctly?

Regardless of the actual values, it became obvious that the transmission time is not a trivial matter. To bring the communication challenges fully into the big picture (and trades), it seemed prudent to define the completion of the mission in terms of getting all the data back, rather than the probe’s arrival point.

And lastly, on the interplay of energy and power: I find it difficult to convey to general audiences the nuances of the challenge. Power can indeed be reduced if you can afford more time to deliver the required energy or by changing other factors. Some important points that I wanted to convey include: the spacecraft’s speed is not the only measure of goodness; the speed challenge includes an enormous energy challenge; and the energy challenge includes a power and waste heat challenge.

You probably did interpret my talk at TVIW correctly (!), but we have since developed a more nuanced understanding. At that time I don’t believe we had taken into account the implications of changing the probe mass. The arXiv paper (which will soon be replaced with a newer version) contains our latest understanding.

I fully agree that transmission time should be included. In the figure the data latency (vertical axis) is defined as the time from launch to the reception of the data volume in its entirety, so it includes (a) transit time to the star and start of transmission and (b) the transmission time until the given data volume (horizontal axis) is conveyed.

So the minimum data latency on the figure is 21.2 years, which is the transit time to Proxima Centauri at a speed of 0.2c and zero data volume. Then the latency increases from that minimum as data volume increases. But beyond a few years transmission time, you can always get lower data latency (as defined above) by increasing the mass of the probe. This is because the higher data rate more than compensates for the greater transit time.

This assumes particular laws for the scaling of probe speed and data rate with probe mass, but the calculation can always be repeated for any alternative scaling laws.

I am putting together a talk for my local astronomy club on the challenges of communication with interstellar probes, so I appreciate your issues of communicating these rather complex topics to a general audience. I will post that talk (as narrated slides) on my homepage in a week or so.

In re-reading my last post I noticed one statement that can be interpreted incorrectly:

“the data latency (vertical axis) is defined as the time from launch to the reception of the data volume in its entirety, so it includes (a) transit time to the star and start of transmission and (b) the transmission time until the given data volume (horizontal axis) is conveyed”

I should make it clear that the latency is the time from launch until the last bit in the data volume is received back on earth. So this includes the propagation time from the probe to earth (from its last position when it stops transmission). (Note that the probe is further from earth than the star for this end of transmission.)

So I also misinterpreted my own statement (!) when I wrote:

“the minimum data latency on the figure is 21.2 years”

It is actually 21.2 years transit time PLUS 4.24 years for the signal to propagate back to earth. Sorry, I was a little careless.
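To make the latency definition concrete, here is a toy model in Python. It is only a sketch, not the calculation in the arXiv paper: it takes the data rate to grow as mass squared (as stated for the figure), assumes speed falls as the fourth root of mass, starts transmission on arrival, and holds the data rate constant during transmission. The baseline rate `base_rate` is a hypothetical parameter in arbitrary units.

```python
D_LY = 4.24   # distance to Proxima Centauri in light-years
V0 = 0.2      # baseline probe speed as a fraction of c

def data_latency_years(mass_ratio, volume, base_rate):
    """Years from launch until the last bit of `volume` reaches Earth.

    mass_ratio: probe mass relative to the baseline probe
    volume:     data volume (arbitrary units)
    base_rate:  HYPOTHETICAL baseline data rate (volume units per year)
    """
    speed = V0 * mass_ratio ** -0.25       # ASSUMED scaling: v ~ m^(-1/4)
    rate = base_rate * mass_ratio ** 2     # data rate ~ m^2
    transit = D_LY / speed                 # years to reach the star
    transmit = volume / rate               # years spent transmitting
    # The probe keeps flying while it transmits, so the last bit leaves
    # from beyond the star; light takes one year per light-year.
    final_distance = D_LY + speed * transmit
    return transit + transmit + final_distance

print(data_latency_years(1.0, 0.0, 1.0))   # minimum latency, zero data volume
print(data_latency_years(1.0, 20.0, 1.0))  # baseline probe, 20 years of transmission
print(data_latency_years(2.0, 20.0, 1.0))  # probe twice as massive, same data volume
```

With zero data volume the model reproduces 21.2 years of transit plus 4.24 years of signal travel. For a large data volume, the heavier probe arrives later but finishes sooner overall, which is the tradeoff described above.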

David,

It’s oddly reassuring to learn that I’m not the only one having challenges interpreting everyone’s technical papers. These are difficult subjects and all of our works are works in progress. With such cross-checking we’ll make more progress overall.

We are forgetting something important here, in that data will be flowing all the time. As the sail slices through space it could rotate and observe planet transits that can’t be seen from earth due to the change in observation angle.

Paul Gilster: The most RADICAL relativistic propulsion drive idea EVER. Please watch the new “Cool Worlds” video: The Halo Drive ASAP! My take on this: Breakthrough Initiatives should IMMEDIATELY start to look for technosignatures around black hole binaries!

It’s already in the pipeline, Harry, with the first of several articles planned for tomorrow.

The biggest objection to any star mission is that you can always build a bigger telescope. If you considered a 1,000 meter mirror space telescope using the suggested standards how would it compare to other suggested missions?