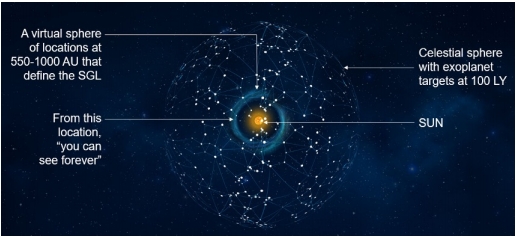

Seeing oceans, continents and seasonal changes on an exoplanet pushes conventional optical instruments well beyond their limits, which is why NASA is exploring the Sun’s gravitational lens as a mission target in what is now the third phase of a study at NIAC (NASA Innovative Advanced Concepts). All of this builds upon the impressive achievements of Claudio Maccone that we’ve recently discussed. Led by Slava Turyshev, the NIAC effort takes advantage of light amplification of 10¹¹ and angular resolutions that dwarf what the largest instruments in our catalog can deliver, showing what the right kind of space mission can do.
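As a back-of-envelope check on the 10¹¹ figure: the on-axis amplification of the solar gravitational lens is commonly approximated as μ ≈ 4π²r_g/λ, where r_g = 2GM☉/c² ≈ 2.95 km is the Sun's Schwarzschild radius. The sketch below assumes that approximation (not the study's full wave-optical treatment); at a wavelength of 1 μm it gives roughly 1.2×10¹¹, with the gain scaling as 1/λ.

```python
import math

# Physical constants (SI units)
GM_SUN = 1.32712440018e20  # m^3 s^-2, heliocentric gravitational constant
C = 299_792_458.0          # m/s, speed of light


def schwarzschild_radius(gm: float) -> float:
    """Return r_g = 2GM/c^2 in meters."""
    return 2.0 * gm / C**2


def sgl_gain(wavelength_m: float, gm: float = GM_SUN) -> float:
    """Approximate on-axis SGL amplification, mu ~ 4*pi^2*r_g/lambda.

    Uses the large-gain limit: the exact expression divides by
    1 - exp(-4*pi^2*r_g/lambda), which equals 1 to machine precision
    at optical wavelengths.
    """
    return 4.0 * math.pi**2 * schwarzschild_radius(gm) / wavelength_m


if __name__ == "__main__":
    print(f"r_g = {schwarzschild_radius(GM_SUN):.0f} m")
    for lam_um in (0.5, 1.0, 2.0):
        print(f"lambda = {lam_um} um -> gain ~ {sgl_gain(lam_um * 1e-6):.2e}")
```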

We’re going to track the Phase III work with great interest, but let’s look back at what the earlier studies have accomplished along the way. Specifically, I’m interested in mission architectures, even as the NASA effort at the Jet Propulsion Laboratory continues to consider the issues surrounding untangling an optical image from the Einstein ring around the Sun. Turyshev and team’s work thus far argues for the feasibility of such imaging and, as Phase III begins, treats an exoplanet image with 25-kilometer surface resolution as a workable prospect.

But how to deliver a meter-class telescope to a staggeringly distant 550 AU? Consider that Voyager 1, launched in 1977, is now 152 AU out, with Voyager 2 at 126 AU. New Horizons is coming up on 50 AU from the Earth. We have to do better, and one way is to re-imagine how such a mission would be achieved through advances in key technologies and procedures.

Here we turn to mission enablers like solar sails, artificial intelligence and nano-satellites. We can even bring formation flying into a multi-spacecraft mix. A technology demonstration mission drawing on the NIAC work could fly within four years if we decide to fund it, pointing to a full-scale mission to the gravitational focus launched a decade later. Travel time is estimated at 20 years.

These are impressive numbers indeed, and I want to look at how Turyshev and team achieve them, but bear in mind that in parsing the Phase II report, we’re not studying a fixed mission proposal. This is a highly detailed research report that tackles every aspect of a gravitational lens mission, with multiple solutions examined from a variety of perspectives. One thing it emphatically brings home is how much research is needed in areas like sail materials and instrumentation for untangling lensed images. Directions for such research are sharply defined by the analysis, which will materially aid our progress moving into the Phase III effort.

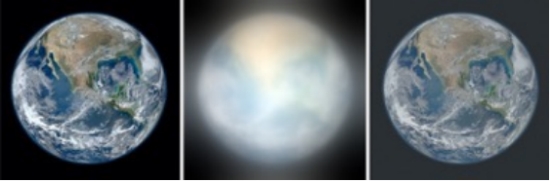

Image: A meter-class telescope with a coronagraph to block solar light, placed in the strong interference region of the solar gravitational lens (SGL), is capable of imaging an exoplanet at a distance of up to 30 parsecs with a surface resolution of a few tens of kilometers. The picture shows results of a simulation of the effects of the SGL on an Earth-like exoplanet image. Left: original RGB color image (1024×1024 pixels); center: image blurred by the SGL, sampled at an SNR of ~10³ per color channel, or an overall SNR of 3×10³; right: the result of image deconvolution. Credit: Turyshev et al.
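The blur-then-deconvolve experiment in the caption can be imitated in miniature. The sketch below is a toy of our own, not the Turyshev team's pipeline: it assumes a point-spread function falling off as 1/ρ (a rough stand-in for the SGL's azimuthally averaged PSF), a hypothetical 64×64 "planet", light Gaussian noise, and a plain Wiener filter swapped in for whatever regularized inversion the study actually uses.

```python
import numpy as np

N = 64  # toy image size in pixels


def make_planet() -> np.ndarray:
    """Hypothetical toy 'exoplanet': a dim disk (ocean) with two bright patches (land)."""
    y, x = np.mgrid[:N, :N]
    c = N / 2
    img = np.zeros((N, N))
    img[(x - c) ** 2 + (y - c) ** 2 < (0.35 * N) ** 2] = 0.3
    img[20:30, 22:34] = 0.8   # "continent" 1
    img[36:44, 30:42] = 0.8   # "continent" 2
    return img


def sgl_like_psf(n: int = N) -> np.ndarray:
    """PSF falling off as 1/rho, centred on pixel (0, 0) with wrap-around."""
    idx = np.arange(n)
    d = np.minimum(idx, n - idx)                 # periodic distance to pixel 0
    rho = np.hypot(d[:, None], d[None, :])
    psf = 1.0 / np.maximum(rho, 0.5)             # clamp the singularity at rho = 0
    return psf / psf.sum()                       # normalize to unit total flux


def blur(img: np.ndarray, psf: np.ndarray) -> np.ndarray:
    """Circular convolution via FFT."""
    return np.real(np.fft.ifft2(np.fft.fft2(img) * np.fft.fft2(psf)))


def wiener_deconvolve(observed: np.ndarray, psf: np.ndarray, k: float) -> np.ndarray:
    """Wiener filter G = H* / (|H|^2 + k); k plays the role of a noise-to-signal ratio."""
    h = np.fft.fft2(psf)
    g = np.conj(h) / (np.abs(h) ** 2 + k)
    return np.real(np.fft.ifft2(np.fft.fft2(observed) * g))


def rms(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.sqrt(np.mean((a - b) ** 2)))


def run_demo(noise_sigma: float = 1e-4, k: float = 1e-5, seed: int = 0) -> tuple[float, float]:
    """Return (RMS error of the blurred, noisy image; RMS error after deconvolution)."""
    rng = np.random.default_rng(seed)
    truth = make_planet()
    psf = sgl_like_psf()
    observed = blur(truth, psf) + rng.normal(0.0, noise_sigma, truth.shape)
    restored = wiener_deconvolve(observed, psf, k)
    return rms(observed, truth), rms(restored, truth)


if __name__ == "__main__":
    err_blur, err_restored = run_demo()
    print(f"RMS error, blurred : {err_blur:.4f}")
    print(f"RMS error, restored: {err_restored:.4f}")
```

Raising `noise_sigma` or shrinking `k` should show why high SNR matters: the inverse filter amplifies noise at high spatial frequencies, exactly where the 1/ρ PSF has suppressed the signal most.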

Modes of Propulsion

A mission to the Sun’s gravity lens need not be conceived as a single spacecraft. Turyshev relies on spacecraft of less than 100 kg (smallsats, in the report’s terminology) using solar sails, working together and produced in numbers that will enable the study of multiple targets.

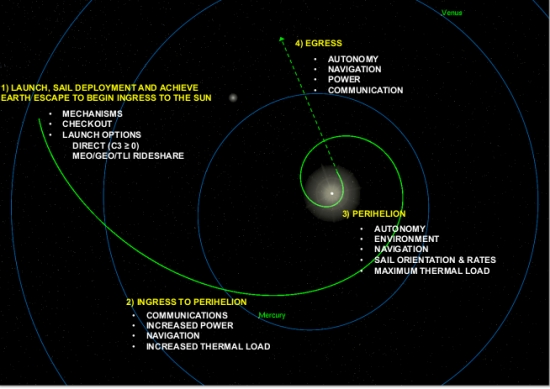

The propulsive technique is a ‘Sundiver’ maneuver in which each smallsat spirals in toward a perihelion in the range of 0.1 to 0.25 AU, achieving an exit velocity of 15-25 AU per year, which gets us to the gravity lensing region in less than 25 years. The sails are eventually jettisoned to reduce weight, and onboard propulsion (the study favors solar thermal) is available at cruise. The craft would enter the interstellar medium in 7 years as compared to Voyager’s 40, and the journey to the lens would take about 2.5 times as long as New Horizons took to reach Pluto.

Image: Sailcraft example trajectory toward the Solar Gravity Lens. Credit: Turyshev et al.
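These timeline figures follow from simple arithmetic: an inner-system phase (the report's example allows two years) plus coasting at a constant exit speed. The sketch below treats the heliopause as ~120 AU and New Horizons' trip to Pluto as ~9.5 years; both are our rounded inputs. Under these assumptions, 25 AU/yr gives about 7 years to the heliopause and 24 years to 550 AU, roughly 2.5 times the New Horizons trip, while the low end of 15 AU/yr would need nearly 39 years to reach 550 AU, so the "less than 25 years" figure applies to the top of the quoted range.

```python
INNER_PHASE_YR = 2.0            # inner-system approach time (report's example)
NEW_HORIZONS_TO_PLUTO_YR = 9.5  # launch Jan 2006 to flyby Jul 2015, rounded
HELIOPAUSE_AU = 120.0           # rough heliopause distance (assumption)


def years_to_reach(distance_au: float, exit_speed_au_yr: float,
                   inner_phase_yr: float = INNER_PHASE_YR) -> float:
    """Inner-system phase plus coasting at a constant exit speed."""
    return inner_phase_yr + distance_au / exit_speed_au_yr


if __name__ == "__main__":
    for v in (15.0, 20.0, 25.0):
        t_hp = years_to_reach(HELIOPAUSE_AU, v)
        t_550 = years_to_reach(550.0, v)
        ratio = t_550 / NEW_HORIZONS_TO_PLUTO_YR
        print(f"{v:4.0f} AU/yr: heliopause {t_hp:4.1f} yr, "
              f"550 AU {t_550:4.1f} yr ({ratio:.1f}x New Horizons)")
```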

The hybrid propulsion concept is necessary, and not just during cruise, because once in the focal lensing region, the spacecraft will need either chemical or electrical propulsion for navigation corrections and for operations and maintenance. Let’s pause on that point for a moment — Alex Tolley and I have been discussing this, and it shows up in the comments to the previous post. What Alex is interested in is whether there is in fact a ‘sweet spot’ where the problem of interference from the solar corona is maximally reduced compared to the loss of signal strength with distance. If there is, how do we maximize our stay in it?

Recall that while the focal line goes to infinity, the signal gain for FOCAL is proportional to the distance. A closer position gives you stronger signal intensity. Our craft will not only need to make course corrections as needed to keep on line with the target star, but may slow using onboard propulsion to remain in this maximally effective area longer. I ran this past Claudio Maccone, who responded that simulations on these matters are needed and will doubtless be part of the Phase II analysis. He has tackled the problem in some detail already:

“For instance: we do NOT have any reliable mathematical model of the Solar Corona, since the Corona keeps changing in an unpredictable way all the time.

“In my 2009 book I devoted the whole Chapters 8 and 9 to use THREE different Coronal Models just to find HOW MUCH the TRUE FOCUS is PUSHED beyond 550 AU because of the DIVERGENT LENS EFFECT created by the electrons in the lowest level of the Corona. For instance, if the frequency of the electromagnetic waves is the Peak Frequency of the Planckian CMB, then I found that the TRUE FOCUS is 763 AU Away from the Sun, rather than just 550 AU.

“My bottom-line suggestion is to let FOCAL observe HIGH Frequencies, like 160 GHz, that are NOT pushing the true focus too much beyond 550 AU.”

Where we make our best observations and how we keep our spacecraft in position are questions that highlight the need for the onboard propulsion assumed by the Phase II study.

Image: Our stellar neighborhood with notional targets. Credit: Turyshev et al.

For maximum velocity in the maneuver at the Sun, the perihelion must be as close as possible, which calls for a sailcraft design that can withstand high levels of heat and radiation. That in turn points to the laboratory and flight testing of sail materials proposed for further study in the NIAC work. Let me quote from the report on this:

Interplanetary smallsats are still to be developed – the recent success of MarCO brings them perhaps to TRL 7. Solar sails have now flown – IKAROS and LightSail-2 already mentioned, and NASA is preparing to fly NEA-Scout. Scaling sails to be thinner and using materials to withstand higher temperatures near the Sun remains to be done. As mentioned above, we propose to do this in a technology test flight to the aforementioned 0.3 AU with an exit velocity ~6 AU/year. This would still be the fastest spacecraft ever flown.

The report goes on to analyze a technology demonstration mission that could be done within a few years at a cost of less than $40 million, using a ‘rideshare’ launch to approximately GEO.

String of Pearls

The mission concept calls for an array of optical telescopes to be launched to the gravity lensing region. I’ll adopt the Turyshev acronym of SGL for this — Solar Gravity Lens. The thinking is that multiple small satellites can be launched in a ‘string of pearls’ architecture, where each ‘pearl’ is an ensemble of smallsats, with multiple such ensembles periodically launched. A series of these pearls, multiple smallsats operating interdependently using AI technologies, provides communications relays, observational redundancy and data management for the mission. From the report:

By launching these pearls on an approximately annual basis, we create the “string”, with pearls spaced along the string some 20-25 AU apart throughout the timeline of the mission, so that later pearls have the opportunity to incorporate the latest advancements in technology for improved capability, reliability, and/or reductions in size/weight/power, which could translate to further cost savings.

In other words, rather than being a one-off mission in which a single spacecraft studies a single target, the SGL study conceives of a flexible investigation of multiple exoplanetary systems, with ‘strings of pearls’ launched toward a variety of areas within the focus within which exoplanet targets can be observed. Whereas the Phase I NIAC study analyzed instrument and mission requirements and demonstrated the feasibility of imaging, the Phase II study refines the mission architecture and makes the case that a gravity lens mission, while challenging, is possible with technologies that are already available or have reached a high degree of maturity.
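If every pearl leaves the Sun with the same exit speed, the spacing along the string is simply exit speed × launch interval: annual launches at 20-25 AU/yr give the 20-25 AU spacing in the report. The toy model below is our own simplification (identical pearls, no braking in the focal region, and the two-year inner-system phase treated as zero distance), meant only to make that relationship explicit.

```python
def pearl_positions(t_yr: float, n_pearls: int, exit_speed_au_yr: float = 25.0,
                    launch_interval_yr: float = 1.0,
                    inner_phase_yr: float = 2.0) -> list[float]:
    """Heliocentric distance (AU) of each pearl at mission time t_yr.

    Hypothetical model: pearl k launches at k * launch_interval_yr, spends
    inner_phase_yr near the Sun (treated as distance 0), then coasts at a
    constant exit speed. Pearls not yet cruising are reported at 0 AU.
    """
    positions = []
    for k in range(n_pearls):
        cruise_time = t_yr - k * launch_interval_yr - inner_phase_yr
        positions.append(max(cruise_time, 0.0) * exit_speed_au_yr)
    return positions


if __name__ == "__main__":
    pos = pearl_positions(t_yr=25.0, n_pearls=5)
    gaps = [a - b for a, b in zip(pos, pos[1:])]
    print("positions (AU):", pos)
    print("spacing (AU):  ", gaps)
```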

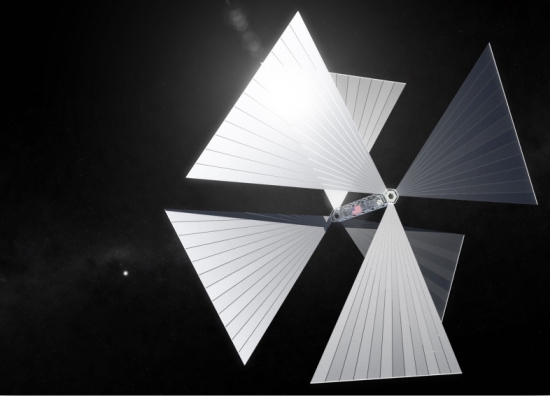

Notice the unusual solar sail design — called SunVane — that was originally developed at the space technology company L’Garde. Here we’re looking at a sail design based on square panels aligned along a truss to provide the needed sail area. In the Phase II study, the craft would achieve 25 AU/year, reaching 600 AU in ~26 years (allowing two years for inner system approach to the Sun). [Note: I’ve replaced the earlier SunVane image with this latest concept, as passed along by Xplore’s Darren Garber. Xplore contributed the design for the demonstration mission’s solar sail].

Image: The SunVane concept. Credit: Darren D. Garber (Xplore, Inc).

The report examines a sail area of 45,000 m², equivalent to a square sail ~212 m on a side, with spacecraft components to be configured along the truss. Deployment issues are minimal with the SunVane design. The vanes are kept aligned edge-on to the Sun as the craft approaches perihelion, then directed face-on to promote maximum acceleration.

We have to learn how to adjust the sail’s parameters to allow the highest possible velocity, with the area-to-mass ratio A/m being critical: A is the sail area in square meters and m is the total mass of the sailcraft in kilograms (spacecraft plus sail), so A/m is the inverse of the sailcraft’s areal density. Sail materials and their temperature properties will be crucial in determining the perihelion distance that can be achieved. This calls for laboratory and flight testing of sail material as part of the continuing research moving into the Phase III study and beyond. Sail size is a key issue:

The challenge for design of a solar sail is managing its size – large dimensions lead to unstable dynamics and difficult deployment. In this study we have consider[ed] a range of smallsat masses (<100 kg) and some of the tradeoffs of sail materials (defining perihelion distance) and sail area (defining the A/m and hence the exit velocity…). As an example, for the SGLF mission, consider perihelion distance of 0.1 AU (20 R☉) and A/m = 900 m²/kg; the exit velocity would be 25 AU/year, reaching 600 AU in ~26 years (allowing 2 years for inner solar system approach to the Sun). The resulting sail area is 45,000 m², equivalent to a ~212×212 m² sail.

The size of that number prompts the decision to explore the SunVane concept, which distributes sail area in a way that allows spacecraft components to be placed along the truss instead of being confined to the sail’s center of gravity, and which has the added benefit of high maneuverability. A low-cost near-term test flight is proposed to test sail material and control, diving to a perihelion of about 0.3 AU and leaving the Solar System at an exit velocity of 6-7 AU per year. Several such spacecraft would enable a test of swarm architectures.
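The report's example can be unpacked with a little arithmetic: A/m = 900 m²/kg and 45,000 m² imply a total sailcraft mass of about 50 kg, and a square sail ~212 m on a side. The sketch goes one step further with an idealized exit-speed estimate that is our own simplification, not the report's trajectory model: a perfectly reflecting flat sail (an `efficiency` factor, our addition, lets you derate it), an edge-on fall from a 1 AU aphelion (ignoring how the craft sheds Earth's orbital speed to get there), and an instantaneous turn face-on at perihelion. It gives roughly 32 AU/yr, higher than the report's 25 AU/yr, which is unsurprising for so optimistic a model; its value is in showing how A/m (through the lightness number β) and perihelion distance trade against each other.

```python
import math

GM_SUN = 1.32712440018e20            # m^3 s^-2
AU = 1.495978707e11                  # m
C = 299_792_458.0                    # m/s
SOLAR_FLUX_1AU = 1361.0              # W/m^2, approximate solar constant
AU_PER_YR = AU / (365.25 * 86400.0)  # 1 AU/yr in m/s (~4.74 km/s)


def lightness_number(a_over_m: float, efficiency: float = 1.0) -> float:
    """beta = sail radiation acceleration / solar gravity.

    Both scale as 1/r^2, so beta is distance-independent. efficiency = 1
    means a perfectly reflecting flat sail facing the Sun.
    """
    pressure_1au = 2.0 * efficiency * SOLAR_FLUX_1AU / C   # N/m^2
    return pressure_1au * a_over_m / (GM_SUN / AU**2)


def exit_speed_au_yr(a_over_m: float, r_peri_au: float,
                     r_start_au: float = 1.0, efficiency: float = 1.0) -> float:
    """Idealized Sundiver exit speed (hyperbolic excess speed, AU/yr).

    The sail falls edge-on (no thrust) from aphelion r_start_au to
    perihelion r_peri_au on a Kepler ellipse, then turns face-on. A purely
    radial 1/r^2 sail force turns the potential into -(1 - beta) GM / r,
    so energy conservation gives v_inf^2 = v_p^2 - 2 (1 - beta) GM / r_p.
    """
    rp, ra = r_peri_au * AU, r_start_au * AU
    v_p2 = GM_SUN * (2.0 / rp - 2.0 / (rp + ra))   # vis-viva at perihelion
    beta = lightness_number(a_over_m, efficiency)
    v_inf2 = v_p2 - 2.0 * (1.0 - beta) * GM_SUN / rp
    return math.sqrt(v_inf2) / AU_PER_YR if v_inf2 > 0 else 0.0  # 0 if still bound


if __name__ == "__main__":
    a_over_m, area = 900.0, 45_000.0   # figures from the report's example
    print(f"implied sailcraft mass: {area / a_over_m:.0f} kg")
    print(f"square-sail side: {math.sqrt(area):.0f} m")
    print(f"lightness number beta: {lightness_number(a_over_m):.2f}")
    print(f"ideal exit speed from 0.1 AU: {exit_speed_au_yr(a_over_m, 0.1):.1f} AU/yr")
```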

Thus the concept: Multiple spacecraft would be launched together as an ensemble — the ‘pearl’ — using solar sails deployed on each and navigating through the Deep Space Network, with the spacecraft maintaining a separation on the order of 15,000 km as they pass through perihelion. Such ensembles are periodically launched, acting interdependently in ways that would maximize flexibility while reducing risk from a single catastrophic failure and lowering mission cost. We wind up with a system that would enable investigations of multiple extrasolar systems.

I haven’t had time to get into such issues as communications and power for the individual smallsats, or data processing and AI, all matters that are covered in the report, nor have I looked in as much detail as I would have liked at the sail arrays, envisioned through SunVane as on the order of 16 vanes of 10³ m², allowing the area necessary in a configuration the report considers realistic. This is a lengthy, rich document, and I commend it to those wanting to dig further into all these matters.

The report is Turyshev et al., “Direct Multipixel Imaging and Spectroscopy of an Exoplanet with a Solar Gravity Lens Mission,” Final Report NASA Innovative Advanced Concepts Phase II (full text).

It sounds like the sunjammer design requires very large areas of thin-film ceramics covered with nanostructures. There’d be a lot of good crossover working with the Breakthrough Starshot program, since they are thinking along the same lines.

If the integration time of the imaging is 10-20 years (optimistic), wouldn’t the image of the planet be “smeared out” over that time span, due both to its intrinsic rotation and displacement and to the spacecraft’s? That’s not to mention the big problem of how to reassemble an image out of the blended light of the entire planet surface from the Einstein ring.

One way to decrease the integration time further, by decreasing shot noise a *ton*, would be to use a superconducting kinetic inductance detector array, which works well at tens of GHz. But then you need to keep it superconducting for the entire trip out there.

I look forward to all these problems getting engineered out by JPL and friends.

Interestingly, the imaging relies on the long time to image (years), because that handles clouds (removes them) and changes in the diurnal orientation of the planet. They also track the planet’s orbit. I am surprised the deconvolution works so well given all the changes in the planet’s size, orientation, phase of rotation, and surface features, especially cloudscapes, but that is what the authors claim. It does make me wonder about the images presented, as the clouds remain and just a single face of the planet is shown. This suggests they are using a static image, adding noise and recovering the image. It may prove much harder to deconvolve the Einstein ring in reality with all these underlying changes.

An amazing set of concepts. We would gain so much information from such a mission. I haven’t read the Turyshev report yet but is there a cost estimate? Would it be in the billions of dollars?

Yes, this is a billion-dollar project, as complex as the Webb telescope, and the probes have to last for decades. The multi-probe approach solves the most important problem: that a single probe would have to know beforehand that a target planet is worth observing. But the main drawback remains: in most cases one probe will only observe one system. The text limits this to 30 parsecs, but we could still get a first view of a planet 10 times farther out if they choose to divert the probe while it’s flying at high speed. The decision to fund a mission will always come down to how much information it can gather. Neither a similar cluster of micro probes synchronized to act as a giant imaging interferometer nor a Fast Fourier Transform telescope in space would give this amazing resolution, and the SGL mission would provide answers to many important questions such as the presence of oceans, volcanoes and signs of continental drift. But the main question, whether there’s a planet with the right temperature, presence of water, and possibly oxygen, can be addressed with other instruments that do not have to fly so far, and that can be aimed at a long list of targets during their mission.

The solar flyby and sails are a nice idea, but by the time such a mission is considered, the Direct Fusion Drive might also be an option. https://arxiv.org/abs/2002.12686v1

I think this would have to fall into the category of a Flagship mission, which means cost in the range of $2-3 billion.

Has an exoplanet been detected in the radio realm?

https://phys.org/news/2020-12-astronomers-radio-emission-exoplanet.html

Interesting research and concept and I applaud those undertaking it, but I do wonder if there are better ways to achieve the same goal. Mylar has a reflectivity of 97% in the visual range and higher for IR, if it was gold coated then the reflectivity increases to over 99%.

I think we need to investigate how to engineer a space-based mirror made of a Mylar sheet with a diameter of 25 km. In space there would be none of the pesky Earth-bound issues to deal with. If such a structure were placed in a solar orbit halfway between Earth and Mars but about 15 degrees above the plane of the solar system, the results would be staggering.

At present we do not have the technology for the SGL concept, and whilst I encourage investigation and research, it should not be at the expense of other solutions that can be done now, with imagination.

Why 25 km? Is this some practical limit or constraint? The NIAC report indicates that an Earth telescope would need to be 90 km in diameter to have the resolution of the telescope at the SGF.
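For scale, the Rayleigh criterion θ ≈ 1.22λ/D shows what a conventional diffraction-limited telescope can do. The sketch below assumes λ = 1 μm and targets at 10-30 pc (our choices, echoing the 30-parsec figure in the post); it does not try to reproduce the report's 90 km figure, which rests on its own assumptions. Under these inputs, resolving 25 km features at 30 pc would take an aperture of order 45,000 km, and a 25 km mirror at that distance would resolve features of that same order, several times Earth's diameter.

```python
PARSEC_M = 3.0856775814913673e16  # meters per parsec


def aperture_for_feature(feature_m: float, distance_pc: float,
                         wavelength_m: float = 1e-6) -> float:
    """Aperture (m) whose Rayleigh limit 1.22*lambda/D resolves feature_m at distance_pc."""
    return 1.22 * wavelength_m * distance_pc * PARSEC_M / feature_m


def feature_for_aperture(aperture_m: float, distance_pc: float,
                         wavelength_m: float = 1e-6) -> float:
    """Smallest feature (m) resolvable by a diffraction-limited aperture at distance_pc."""
    return 1.22 * wavelength_m * distance_pc * PARSEC_M / aperture_m


if __name__ == "__main__":
    print(f"25 km features at 30 pc need D ~ {aperture_for_feature(25e3, 30) / 1e3:,.0f} km")
    print(f"a 25 km mirror at 30 pc resolves ~ {feature_for_aperture(25e3, 30) / 1e3:,.0f} km")
    print(f"a 25 km mirror at 10 pc resolves ~ {feature_for_aperture(25e3, 10) / 1e3:,.0f} km")
```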

Rather than a reflector, would a flat Fresnel lens be more achievable? It would stay flat by rotation and avoid the likely problems of constructing a mylar reflector, whether stiffened on a form or inflated.

Telescopes kilometers across in space would seem achievable for an interplanetary spacefaring civilization, but the reality is that, at least for the next few decades, humanity seems unlikely to reach this status. It would make for an interesting feasibility/cost comparison study: would a larger telescope closer to Earth be as effective as, or more effective than, sending a smaller telescope to the SGF? Dr. Robert Zubrin mentioned the advantages of placing a large telescope on the Moon. At what point would one option be more economical than the other? What about a best-of-both-worlds scenario in which we send a 25 km or larger telescope to the SGF? It is fun to speculate what a mega-telescope could do for astronomy if placed ANYWHERE in the solar system, let alone at the SGF!

There is a whole range of technological capabilities, I am guessing, that would be opened up if we could access the solar system facilely. We often think about the technology required to access the solar system facilely, but what about the technological capacities that we could reach by virtue of being able to access the solar system facilely? Take energy for example in the form of solar power and let’s set our sights short of a Dyson-sphere for now. Imagine what could be achieved energetically if we placed massive, highly efficient solar arrays in an orbit closer to the Sun than the Earth is to the Sun?! A massive space based solar array located millions of km from the Earth could, in theory, churn out antimatter by the ton per year, according to Charles Pellegrino (author of “The Killing Star”). Or, what about huge particle accelerators in space? Could there be a technological positive feedback loop associated with being able to access the solar system as a spacefaring civilization?

The topic of using the SGF is fascinating. It makes me wonder about the SGF of other star systems. How might our SGF compare in terms of imaging capabilities to a larger or smaller star’s GF? Or, how might our GF compare to a black hole or neutron star’s GF?

Using the Sun’s deep gravity well as both a slingshot and a lens is a fascinating concept, but filtering out the glare from the solar corona, as others have mentioned, might be problematic. Happily, though, our solar system has another light bender slowly circling about 5.2 AU out from Sol that lacks a pesky corona. Would Jupiter’s focal line start at too great a distance to be useful as a backup gravitational focuser?

Jupiter’s focal line starts at 6100 AU. Maccone has considered using planets for FOCAL purposes, pointing out that an outbound probe to a star will pass through the focal zone of our system’s planets:

Gravitational Lensing with Planets

https://centauri-dreams.org/2016/04/26/gravitational-lensing-with-planets/
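Earlier comments asked how other bodies' focal distances compare. The closest focus follows from the weak-field deflection of a ray grazing the limb, giving z ≈ R²c²/(4GM); it scales as R²/M, so compact, massive bodies focus closer. The sketch uses rounded textbook values, approximate ones for Proxima Centauri (about 0.12 solar masses and 0.15 solar radii, our inputs), and ignores plasma and atmospheric refraction. It gives roughly 550 AU for the Sun and about 6,000 AU for Jupiter, consistent with the figures in the post and the reply above.

```python
C = 299_792_458.0          # m/s
AU = 1.495978707e11        # m
GM_SUN = 1.32712440018e20  # m^3 s^-2
R_SUN = 6.957e8            # m, nominal solar radius

# (GM in m^3 s^-2, radius in m); rounded values, Proxima's are approximate
BODIES = {
    "Sun": (GM_SUN, R_SUN),
    "Jupiter": (1.26686534e17, 7.1492e7),
    "Earth": (3.986004418e14, 6.371e6),
    "Proxima Centauri": (0.122 * GM_SUN, 0.154 * R_SUN),
}


def min_focal_distance_au(gm: float, radius_m: float) -> float:
    """Closest focus, for rays grazing the limb (impact parameter b = R).

    The weak-field deflection is 4GM/(c^2 b), so rays cross the axis at
    z = b / angle = b^2 c^2 / (4 GM). Plasma refraction (the coronal effect
    Maccone describes) and atmospheres are ignored.
    """
    return radius_m**2 * C**2 / (4.0 * gm) / AU


if __name__ == "__main__":
    for name, (gm, r) in BODIES.items():
        print(f"{name:17s} {min_focal_distance_au(gm, r):10,.0f} AU")
```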

Something that has not been discussed is the number of stars within 30 parsecs (around 100 light-years) of the Sun. The best figure is around 14,000 stars, 83 percent of them M dwarfs, and Gaia EDR3, released on 3 December 2020, will most likely increase that number.

The other point is that the spacecraft or mirrors will be located in the opposite hemisphere of the sky from the target stellar system. Proxima Centauri’s antipoint will be in the eastern part of the constellation Cassiopeia, at about 2h30m RA (Right Ascension) and +62.5° Dec (Declination), if my figures are correct.
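The antipoint is simply the opposite direction on the sky: add 12 hours of right ascension and flip the sign of the declination. Using Proxima Centauri's approximate J2000 coordinates (RA 14h 29m 43s, Dec −62° 41′; our inputs, with proper motion ignored), the sketch lands at about RA 2h 30m, Dec +62.7°, close to the figures above.

```python
def hms_to_hours(h: float, m: float, s: float) -> float:
    """Convert hours/minutes/seconds of right ascension to decimal hours."""
    return h + m / 60.0 + s / 3600.0


def dms_to_degrees(d: float, m: float, s: float, negative: bool = False) -> float:
    """Convert degrees/arcminutes/arcseconds to signed decimal degrees."""
    value = abs(d) + m / 60.0 + s / 3600.0
    return -value if negative else value


def antipode(ra_hours: float, dec_deg: float) -> tuple[float, float]:
    """Point diametrically opposite (RA, Dec) on the celestial sphere."""
    return (ra_hours + 12.0) % 24.0, -dec_deg


if __name__ == "__main__":
    # Proxima Centauri, approximate J2000 position (proper motion ignored)
    ra = hms_to_hours(14, 29, 42.9)
    dec = dms_to_degrees(62, 40, 46, negative=True)
    ra_a, dec_a = antipode(ra, dec)
    h = int(ra_a)
    m = (ra_a - h) * 60.0
    print(f"antipode: RA {h}h {m:.1f}m, Dec {dec_a:+.2f} deg")
```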

As I have mentioned before, it would be best to send lightweight, slightly parabolic mirrors to these locations: a light carbon foam backing structure with a gold surface that can reflect the light from the SGL back to a one-meter telescope in an Earth L2 halo orbit. By the time this is feasible, in 10 to 15 years, the L2 orbit should be accessible to manned or robotic repair spacecraft. The gold mirror can use the same technique as the ‘Sundiver’ maneuver to be sent out to the SGL focal line, and the telescopes in the L2 halo orbit can be used over and over again. This should keep costs down and the risk factor much lower. One detail that needs work is whether the mirrors should carry ion drives or solar wind systems to stabilize and position them once at the SGL.

Once a working system is shown to be viable for observing and extracting data and details on these distant worlds, many mirrors could be sent out to the distant SGL positions for them. Possibly as many as 7,000 habitable-zone exoplanets may be observable, and mass-producing 7,000 one-meter telescopes for L2 orbits, much as SpaceX does with its Starlink satellites, could keep costs down.

This would give us the ability to explore these planets without having to send long-duration probes to them. As the techniques are further developed and larger mirrors and other technical improvements arrive, the visible details on these planets will improve drastically. The search radius around the Sun will also increase, and so will the chance that we find technosignatures from the past or present. Hopefully this could all be done at a reasonable cost and without the complications of making direct contact with other highly advanced civilizations. Better to learn from them at a great distance than to have the intense shock of Aliens breathing down our necks!!!

Scientists looking for aliens investigate radio beam ‘from nearby star’.

Fri 18 Dec 2020 06.00 GMT

Tantalising ‘signal’ appears to have come from Proxima Centauri, the closest star to the sun.

“Astronomers behind the most extensive search yet for alien life are investigating an intriguing radio wave emission that appears to have come from the direction of Proxima Centauri, the nearest star to the sun.”

“The narrow beam of radio waves was picked up during 30 hours of observations by the Parkes telescope in Australia in April and May last year, the Guardian understands. Analysis of the beam has been under way for some time and scientists have yet to identify a terrestrial culprit such as ground-based equipment or a passing satellite.

It is usual for astronomers on the $100m (£70m) Breakthrough Listen project to spot strange blasts of radio waves with the Parkes telescope or the Green Bank Observatory in West Virginia, but all so far have been attributed to human-made interference or natural sources.

The latest “signal” is likely to have a mundane explanation too, but the direction of the narrow beam, around 980MHz, and an apparent shift in its frequency said to be consistent with the movement of a planet have added to the tantalising nature of the finding. Scientists are now preparing a paper on the beam, named BLC1, for Breakthrough Listen, the project to search for evidence of life in space, the Guardian understands.”

https://www.theguardian.com/science/2020/dec/18/scientists-looking-for-aliens-investigate-radio-beam-from-nearby-star

Surprise, they are right next door!!!

Scientific American!

Alien Hunters Discover Mysterious Signal from Proxima Centauri.

Strange radio transmissions appear to be coming from our nearest star system; now scientists are trying to work out what is sending them.

By Jonathan O’Callaghan, Lee Billings on December 18, 2020.

“Smith began sifting through the data in June of this year, but it was not until late October that he stumbled upon the curious narrowband emission, needle-sharp at 982.002 megahertz, hidden in plain view in the Proxima Centauri observations.”

“BLC1 itself, while seeming to come from Proxima Centauri, does not quite fit expectations for a technosignature from that system. First, the signal bears no trace of modulation—tweaks to its properties that can be used to convey information. “BLC1 is, for all intents and purposes, just a tone, just one note,” Siemion says. “It has absolutely no additional features that we can discern at this point.” And second, the signal “drifts,” meaning that it appears to be changing very slightly in frequency—an effect that could be due to the motion of our planet, or of a moving extraterrestrial source such as a transmitter on the surface of one of Proxima Centauri’s worlds. But the drift is the reverse of what one would naively expect for a signal originating from a world twirling around our sun’s nearest neighboring star. “We would expect the signal to be going down in frequency like a trombone,” Sheikh says. “What we see instead is like a slide whistle—the frequency goes up.”

https://www.scientificamerican.com/article/alien-hunters-discover-mysterious-signal-from-proxima-centauri/

Very Interesting to say the least!
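For a sense of scale on the drift Siemion and Sheikh describe, this sketch bounds the frequency drift that Earth's own motion imposes on a fixed-frequency transmitter. It assumes Parkes sits at about 33° S, takes Proxima's declination as about −62.7°, and keeps only peak magnitudes; the sign and exact value depend on hour angle and time of year, which a real analysis has to include.

```python
import math

C = 299_792_458.0            # m/s
OMEGA_EARTH = 7.2921159e-5   # rad/s, Earth's sidereal rotation rate
R_EARTH = 6.371e6            # m, mean radius
GM_SUN = 1.32712440018e20    # m^3 s^-2
AU = 1.495978707e11          # m


def max_rotation_drift(freq_hz: float, lat_deg: float, dec_deg: float) -> float:
    """Peak |df/dt| (Hz/s) from the observatory's centripetal acceleration.

    The acceleration omega^2 R cos(lat) lies in Earth's equatorial plane, so
    its line-of-sight component is at most that times cos(dec); the actual
    value varies with hour angle.
    """
    accel = OMEGA_EARTH**2 * R_EARTH * math.cos(math.radians(lat_deg))
    return freq_hz * accel * abs(math.cos(math.radians(dec_deg))) / C


def max_orbital_drift(freq_hz: float) -> float:
    """Upper bound on |df/dt| (Hz/s) from Earth's orbital acceleration GM/AU^2."""
    return freq_hz * (GM_SUN / AU**2) / C


if __name__ == "__main__":
    f = 982.002e6          # BLC1 frequency quoted in the article
    parkes_lat = -33.0     # approximate latitude of Parkes (assumption)
    proxima_dec = -62.68   # approximate declination of Proxima Centauri
    print(f"rotation: up to {max_rotation_drift(f, parkes_lat, proxima_dec):.3f} Hz/s")
    print(f"orbit:    up to {max_orbital_drift(f):.3f} Hz/s")
```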

This apparent development sounds rather like Starglider, the huge (500 kilometers [310.7 miles] wide) “flying spin-rigidized dish antenna” stellar system fly-through type of Bracewell interstellar messenger probe in Arthur C. Clarke’s 1978/1979 novel (see: https://tinyurl.com/yatvbt55) “The Fountains of Paradise.” (This tale is about a project to build the first space elevator, in the 22nd Century, which contains ‘flashbacks’ to the reign of an ancient, parricide king of the elevator’s proposed equatorial Earth terminus region; in his time, he too tried to create his own link with Heaven, in order to become a god-king.) Also:

Starglider was a slow (having taken two thousand years to reach our Solar System–its twelfth destination–after flying through the Alpha Centauri system, having been launched sixty thousand years before), long-lived stellar system fly-through probe. After launch, it relied solely on stellar and (gas giant and ice giant) planetary gravity assists for propulsion. Given sufficient electronics lifetimes (or the ability to produce new parts onboard), and long enough individual biological lifetimes (or societal stability, and patience), humanity could build and launch such immortal interstellar probes (although much faster [relativistic], natural forces-propelled interstellar probes also appear feasible, see: “Propulsion of Spacecrafts to Relativistic Speeds Using Natural Astrophysical Sources”: https://arxiv.org/pdf/2002.03247.pdf ), and:

After the end of the fictional story, Clarke included the equally informative *and* entertaining (because it is filled with multiple coincidences, if they were just coincidences) real-world history of the space elevator concept, including references to many engineering papers on the space elevator and related concepts, including a cable-suspended low-altitude geosynchronous satellite, and even a surface-anchored ring around the Earth at geosynchronous altitude.

Charts of radio frequency band allocations for the USA and Australia, showing the 980 MHz allocations. These sources should be pretty easy to eliminate unless military systems or satellites are using them, but that should not be happening.

https://upload.wikimedia.org/wikipedia/commons/thumb/c/c7/United_States_Frequency_Allocations_Chart_2016_-_The_Radio_Spectrum.pdf/page1-6300px-United_States_Frequency_Allocations_Chart_2016_-_The_Radio_Spectrum.pdf.jpg

https://www.acma.gov.au/sites/default/files/2019-10/Australian%20radiofrequency%20spectrum%20allocations%20chart.pdf

The 960 MHz to 1164 MHz frequencies are used in the US for Aeronautical Radio Navigation and in Australia for Aeronautical Mobile and Aeronautical Radio Navigation. The frequencies from 928 MHz up to 960 MHz are used for GSM cell towers and phones, which could be a source.

These should all be well-known sources of 980 MHz interference, so maybe it is real, especially if the “apparent shift in its frequency said to be consistent with the movement of a planet” is true.

DME (Distance Measuring Equipment): I used to issue NOTAMs for outages when I was an ATC. Its UHF frequency range is 960 to 1215 megahertz (MHz), and it was collocated with VORTACs and ILS navigation and landing systems. It could give a false reading resembling a planet moving in orbit, from the timing of the propagation delay of radio signals via a pulse pair. This would have obvious characteristics that should be immediately identifiable…

https://en.wikipedia.org/wiki/Distance_measuring_equipment

One problem is that any satellite transmitting on these frequencies may interfere with aircraft navigation and, more importantly, with ILS (Instrument Landing System) approaches. This could result in aircraft crashing, so it is a frequency band protected from interference.

I found that in the USA, 982 MHz is the ground reply frequency for TACAN/DME. It appears on the page below, under the VHF/UHF Plan for Aeronautical Radionavigation, as the TACAN Channel 21X Reply listing. https://wiki.radioreference.com/index.php/Instrument_Landing_System_(ILS)_Frequencies

This should be the same in Australia, since it is a military system.

960-1164 MHz 1. Band Introduction.

https://www.ntia.doc.gov/files/ntia/publications/compendium/0960.00-1164.00_01DEC15.pdf

Parkes Airport, 15 miles south of the Parkes Radio Telescope, has a VOR/DME on the IFR sectional charts, listed as DME channel 057X with a frequency of 1018 MHz. This would be the closest NAVAID, but it is not on the right frequency!
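The channel designations in these comments map to frequencies through a fixed pairing rule. The sketch below encodes the DME channel plan as we understand it from ICAO Annex 10 (worth checking against the standard before relying on it): interrogation at 1024 + n MHz, with the ground reply 63 MHz below or above depending on the channel half and X/Y mode. Under that rule it reproduces the 982 MHz reply for channel 21X and the 1018 MHz reply for channel 057X mentioned above, and finds 21X to be the only channel replying on 982 MHz.

```python
def dme_frequencies_mhz(channel: int, mode: str = "X") -> tuple[int, int]:
    """Return (interrogation, reply) frequencies in MHz for a DME/TACAN channel.

    Assumed channel plan for channels 1-126 in X and Y modes:
    interrogation = 1024 + channel; the ground reply is offset by 63 MHz,
    below for 1X-63X and 64Y-126Y, above for 64X-126X and 1Y-63Y.
    """
    if not 1 <= channel <= 126 or mode not in ("X", "Y"):
        raise ValueError("channel must be 1-126 and mode 'X' or 'Y'")
    interrogation = 1024 + channel
    low_half = channel <= 63
    reply_below = low_half if mode == "X" else not low_half
    reply = interrogation - 63 if reply_below else interrogation + 63
    return interrogation, reply


def channels_replying_on(freq_mhz: int) -> list[tuple[int, str]]:
    """All (channel, mode) pairs whose ground reply falls on freq_mhz."""
    return [(ch, mode) for ch in range(1, 127) for mode in ("X", "Y")
            if dme_frequencies_mhz(ch, mode)[1] == freq_mhz]


if __name__ == "__main__":
    print("21X:", dme_frequencies_mhz(21, "X"))
    print("57X:", dme_frequencies_mhz(57, "X"))
    print("replies on 982 MHz:", channels_replying_on(982))
```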

There is one other possible source that could be operating illegally: drones. The Australian regulations below show the 900-915 MHz band used for drones, but anyone transmitting above 960 MHz would clearly be in the NAVAID frequencies and would face very stiff penalties.

https://www.rcgroups.com/forums/showthread.php?2717721-Legal-frequencies-for-RC-FPV-and-Telemetry-in-Australia-(And-max-output-limits)

Now some interesting history in the development of RADAR, Distance Measuring Equipment (DME) and the 210 ft radio telescope at Parkes, New South Wales, Australia by a Welsh physicist, Edward George Bowen;

Tizard Mission

Bowen went to the United States with the Tizard Mission in 1940 and helped to initiate tremendous advances in microwave radar as a weapon. Bowen visited US laboratories and told them about airborne radar and arranged demonstrations. He was able to take an early example of the cavity magnetron. With remarkable speed the US military set up a special laboratory, the MIT Radiation Laboratory for the development of centimetre-wave radar, and Bowen collaborated closely with them on their programme, writing the first draft specification for their first system. The first American experimental airborne 10 cm radar was tested, with Bowen on board, in March 1941, only seven months after the Tizard Mission had arrived.

“The Tizard Mission was highly successful almost entirely because of the information provided by Bowen. It helped to establish the alliance between the United States and Britain over a year before the Americans entered the war. The success of collaboration in radar helped to set up channels of communication that would help in other transfers of technology to the United States such as jet engines and nuclear physics.

Australia

In the closing months of 1943, Bowen seemed to be at “loose ends” because his work in the US was virtually finished and the invasion of Europe by the Allies was imminent. Bowen was invited to come to Australia to join the CSIRO Radiophysics Laboratory, and in May 1946, he was appointed Chief of the Division of Radiophysics. Bowen addressed many audiences on the development of radar, its military uses and its potential peacetime applications to civil aviation, marine navigation and surveying.

In addition to developments in radar, Bowen also undertook two other research activities: the pulse method of acceleration of elementary particles; and air navigation resulted in the Distance Measuring Equipment (DME) that was ultimately adopted by many civil aircraft.

He also encouraged the new science of radioastronomy and brought about the construction of the 210 ft radio telescope at Parkes, New South Wales. During visits to the US, he met two of his influential contacts during the war, Dr. Vannevar Bush who had become the President of the Carnegie Corporation and Dr. Alfred Loomis who was also a Trustee of the Carnegie Corporation and of the Rockefeller Foundation. He persuaded them in 1954 to fund a large radio telescope in Australia with a grant of $250,000. Bowen in return helped to establish American radio astronomy by seconding Australians to the California Institute of Technology.

Bowen played a key role in the design of the radio telescope at Parkes. At its inauguration in October 1961, he remarked, “…the search for truth is one of the noblest aims of mankind and there is nothing which adds to the glory of the human race or lends it such dignity as the urge to bring the vast complexity of the Universe within the range of human understanding.”

The Parkes Telescope proved timely for the US space program and tracked many space probes, including the Apollo missions.”

https://en.wikipedia.org/wiki/Edward_George_Bowen

The authors of the NIAC report indicate that there are about 15,600 FGK stars within 30 pc.

Rather than mirrors, wouldn’t a Fresnel lens be easier to make? Spinning it would keep it flat, so it could be much larger. It would also be inherently flexible, so building it on Earth and then deploying it might be easier than attempting to build a huge mirror in space, even from prebuilt segments that fit together.

Adding the M dwarfs would bring the total to close to 100,000 stars within 30 pc, which could mean over 250,000 planets in the habitable zone (assuming 3 per red dwarf in the HZ).
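The "over 250,000" figure follows from simple arithmetic on the numbers quoted above. This sketch assumes, as the comment implicitly does, that every star within 30 pc that is not FGK is an M dwarf (ignoring A stars, white dwarfs and so on), and that the 3-planets-per-M-dwarf rate is the commenter's assumption rather than a measured occurrence rate.

```python
# Inputs as quoted in the thread. HZ_PLANETS_PER_M_DWARF is the commenter's
# assumption, not a measured occurrence rate.
FGK_STARS_WITHIN_30PC = 15_600
ALL_STARS_WITHIN_30PC = 100_000  # "close to 100,000" once M dwarfs are added
HZ_PLANETS_PER_M_DWARF = 3


def hz_planet_estimate(all_stars: int, fgk_stars: int, per_m_dwarf: int) -> tuple[int, int]:
    """Return (number of M dwarfs, habitable-zone planets around them).

    Treats every non-FGK star as an M dwarf, which is a simplification.
    """
    m_dwarfs = all_stars - fgk_stars
    return m_dwarfs, m_dwarfs * per_m_dwarf


m_dwarfs, hz_planets = hz_planet_estimate(
    ALL_STARS_WITHIN_30PC, FGK_STARS_WITHIN_30PC, HZ_PLANETS_PER_M_DWARF
)
print(f"{m_dwarfs:,} M dwarfs -> {hz_planets:,} HZ planets")
# 84,400 M dwarfs -> 253,200 HZ planets
```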

Fresnel lenses have one big problem: “A Fresnel imager with a sheet of a given size has vision just as sharp as a traditional telescope with a mirror of the same size, though it collects about 10% of the light.”

https://en.wikipedia.org/wiki/Fresnel_Imager

You want as many photons as possible, which is why a gold coating is attractive: it is about 99% reflective in the infrared (though its reflectivity falls off sharply in the blue and UV). The carbon foam could be compacted if it can return to its original shape with no deterioration of the mirror’s 1/30-wave optical surface. This would be one solid mirror, compressed on Earth and launched into orbit, though there may be many other ways to do it. Maybe inflate it?
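The throughput difference between the two approaches translates directly into observing time. A minimal sketch, assuming equal aperture area, the same detector, and photon (shot) noise only, so that SNR = sqrt(N) with N proportional to throughput times integration time; the 10% figure is from the Fresnel Imager quote above and the 99% figure is the gold-in-the-IR number, and the function name is hypothetical.

```python
def relative_integration_time(throughput_a: float, throughput_b: float) -> float:
    """How many times longer system A must integrate than system B to collect
    the same number of photons, and so reach the same photon-limited SNR.

    Assumes equal aperture area and shot noise only (no background, read noise).
    """
    if throughput_a <= 0 or throughput_b <= 0:
        raise ValueError("throughputs must be positive")
    return throughput_b / throughput_a


FRESNEL_THROUGHPUT = 0.10      # "about 10% of the light" (Fresnel Imager)
GOLD_MIRROR_THROUGHPUT = 0.99  # gold coating, infrared

ratio = relative_integration_time(FRESNEL_THROUGHPUT, GOLD_MIRROR_THROUGHPUT)
print(f"Fresnel imager needs ~{ratio:.1f}x the integration time")
```

In this idealized case the Fresnel imager needs roughly ten times longer to reach the same SNR, which matters when integration times at the focal line already run to months or years.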

I don’t see the advantage of reflecting the photons across 550+ au of space to a 1 meter telescope at Earth’s L2. This would make the vehicle control and pointing requirements a nightmare. It would also introduce a new source of image smear.

It may not be as much of a nightmare as sending a robotic spacecraft to fix telescopes more than 3 light days from Earth. Lasers, AI and other technology could keep it under control, and a large mirror would send many more photons to the telescope in a halo orbit around L2 near Earth. As with Hubble, you would be better off having the complicated scopes nearby, where they can be fixed and improved over time. More photons is the main advantage.
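The "over 3 light days" figure checks out. A minimal sketch using the IAU astronomical unit and the defined speed of light; the function name is hypothetical.

```python
AU_M = 149_597_870_700      # astronomical unit, m (IAU 2012 definition)
C_M_PER_S = 299_792_458     # speed of light, m/s (exact by definition)
SECONDS_PER_DAY = 86_400


def light_time_days(distance_au: float) -> float:
    """One-way light travel time, in days, over distance_au."""
    return distance_au * AU_M / C_M_PER_S / SECONDS_PER_DAY


one_way = light_time_days(550)
print(f"one-way {one_way:.2f} d, round trip {2 * one_way:.2f} d")
```

At 550 AU the one-way delay is about 3.18 days, so any command-and-response loop with a repair craft takes about 6.35 days, which is why such a craft would have to act largely on its own.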

A mirror that delivers a focal length of 550 AU would have to be massive, and it would be by far the larger and more complex project. The premise of using a mirror is sound, but to be practical the focal length has to be shorter. These mission vehicles won’t be repaired.
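The diffraction limit shows why the relay mirror would have to be so large. A minimal sketch, assuming a diffraction-limited circular relay aperture, an example wavelength of 1 µm, and the 550 AU distance and 1 m receiving telescope discussed above. The Airy spot diameter at distance L from an aperture D is about 2.44λL/D; the function name is hypothetical.

```python
AU_M = 149_597_870_700.0  # astronomical unit, m


def airy_spot_diameter_m(wavelength_m: float, distance_m: float, aperture_m: float) -> float:
    """Diameter of the first dark ring of the Airy pattern (small-angle approx.)."""
    return 2.44 * wavelength_m * distance_m / aperture_m


WAVELENGTH_M = 1e-6        # assumed example wavelength
DISTANCE_M = 550 * AU_M    # relay mirror at the focal region

spot = airy_spot_diameter_m(WAVELENGTH_M, DISTANCE_M, 1.0)
print(f"spot from a 1 m relay mirror at 550 AU: {spot / 1e3:,.0f} km")
```

A 1 m relay mirror would spread its light over a spot roughly 200,000 km across by the time it reached L2. Because the relation is symmetric in aperture and spot size, squeezing the spot down to 1 m would need a relay aperture of about the same 200,000 km.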

Correct me if I am wrong, but didn’t Carl Sagan mention in one of his books using the solar gravitational lensing point for either SETI or for imaging?

That previous post including its comments points out several limitations. In particular:

1) Jupiter, being much less massive, produces a much smaller light amplification, leading to much longer integration times.

2) Jupiter is moving relative to the spacecraft’s own orbit/departure trajectory from the Sun, so target tracking will be much more complex and require much more significant lateral movement. Perhaps not a deal-breaker, but definitely more than the modest amount needed when using the Sun as a lens.

3) If I did my math right, Jupiter would often be separated by less than 50 milliarcseconds from the Sun when observing at 6100 AU. Perhaps can be managed with an internal coronagraph, but not trivial. I think the HST and JWST do not reach this level with their coronagraphs… more like 0.5 to 1 arcseconds.
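Point 1, and the 6100 AU focal distance used in point 3, can be made quantitative with a point-mass lens sketch. It assumes the textbook results that a ray grazing a body of radius R is deflected by 4GM/(c²R), so the focal line begins at R²c²/(4GM), and that the peak on-axis gain is about 4π²r_g/λ with r_g = 2GM/c². It ignores oblateness, atmospheres and the solar corona; the GM and radius values are standard published figures, λ = 1 µm is an assumed example wavelength, and the function names are hypothetical.

```python
import math

C = 299_792_458.0           # speed of light, m/s
AU_M = 149_597_870_700.0    # astronomical unit, m

# name: (GM in m^3/s^2, equatorial radius in m)
BODIES = {
    "Sun": (1.32712440018e20, 6.957e8),
    "Jupiter": (1.26686534e17, 7.1492e7),
}


def min_focal_distance_au(gm: float, radius_m: float) -> float:
    """Distance at which rays grazing the limb cross the optical axis."""
    alpha = 4.0 * gm / (C**2 * radius_m)  # deflection at impact parameter b = R
    return radius_m / alpha / AU_M


def peak_gain(gm: float, wavelength_m: float) -> float:
    """Approximate on-axis gain 4*pi^2*r_g/lambda of a point-mass lens."""
    r_g = 2.0 * gm / C**2  # Schwarzschild radius
    return 4.0 * math.pi**2 * r_g / wavelength_m


WAVELENGTH_M = 1e-6  # assumed example wavelength (1 micron)

for name, (gm, radius) in BODIES.items():
    print(f"{name}: focal line from ~{min_focal_distance_au(gm, radius):,.0f} AU, "
          f"peak gain ~{peak_gain(gm, WAVELENGTH_M):.2e}")
```

With these inputs the Sun’s focal line begins near 548 AU and Jupiter’s near 6,060 AU. Since the peak gain scales directly with mass, Jupiter’s is roughly 1,000 times smaller than the Sun’s ~10^11, which is where the much longer integration times come from.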

A gravitational lens mission is a wonderful precursor to interstellar flight: it has a unique science payoff and is ideal for stretching our propulsion and communication technologies to the next level.

These kinds of stepping stones are absolutely needed to build up to interstellar missions.

The sweet spot for gain and the years needed to collect photons may make sending one vehicle to multiple targets less practical. The vehicle would be moving beyond the sweet spots and need delta V to move closer to the Sun.

Breakthrough Starshot’s dependence on a solar focal vehicle creates an interesting dilemma. A telescope at the focal line would set a threshold on the usable photon cost for Breakthrough Starshot interstellar vehicles, and that threshold could end up being too high.

I think our first mission using this technique should target a globular cluster, since there are potentially millions of stars in a very small space, or maybe even star-forming regions.

I’m wondering about sending a two-part mirror system out there, with the central mirror slowed down relative to an outer reflective ring. We would get a high-magnification image from the gravitational lens (on the star-facing side) and a high-resolution, lower-magnification image from the outer ring, which reflects its images back to the other side of the slowed central mirror. As the craft continues its journey, the resolution increases as the magnification drops.

With this type of machine, even if we miss the planet, we will see farther, and in detail, a vast expanse with enormous amounts of information.

Jupiter was discussed as a gravitational lens above, but could an outer planet be used as another sort of lens? I’m looking at https://solarsystem.nasa.gov/resources/17494/a-dark-bend/ which shows the F ring of Saturn appearing bent outward from the planet by about its own width. Don’t trust my astronomy, but if I guess 280 km for the width and use 140,000 km for the distance of the ring, I think that means (not counting geometrical issues) its image has been bent by about 1/500 radian, several arc minutes rather than the fraction of an arc second for the solar lens. With Saturn’s radius just 60,000 km, I’m thinking a probe at a distance of 30 million km (0.2 AU) should receive light bent from a source at infinity all the way around the planet. There would be FOCAL-like issues with the focal line (in this photo, for example, we see all parts of the F ring refracted), plus others such as atmospheric turbulence and the less spherical shape of the planet. But if the light from a well-defined curve around the planet could be separated from the planet’s own light (a non-ringed outer planet seems more sensible, but even for Saturn you can at least observe from the night side!), I think you should have many more photons to work with than if you were looking at only a single image. Do you think that makes any sense?
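The Saturn estimate above can be reproduced in a few lines. It uses only the guesses in the comment (a 280 km ring width displaced at 140,000 km from the planet, and 60,000 km for Saturn’s radius) with the same small-angle, no-geometry simplification, so treat it as an order-of-magnitude check rather than a ray trace. The function name is hypothetical.

```python
import math

AU_KM = 149_597_870.7  # astronomical unit, km


def refraction_focus(ring_width_km: float, ring_distance_km: float,
                     planet_radius_km: float) -> tuple[float, float]:
    """Return (deflection angle in radians, focal distance in km).

    The deflection is estimated as the ring's apparent displacement divided by
    its distance (small-angle); the focus is where limb rays cross the axis.
    """
    alpha_rad = ring_width_km / ring_distance_km
    focal_km = planet_radius_km / alpha_rad
    return alpha_rad, focal_km


alpha, focal_km = refraction_focus(280.0, 140_000.0, 60_000.0)
print(f"deflection ~{math.degrees(alpha) * 60:.1f} arcmin, "
      f"focus ~{focal_km:.2e} km = {focal_km / AU_KM:.2f} AU")
```

This gives a deflection of about 6.9 arcminutes and a focus near 3 × 10^7 km, or about 0.2 AU, matching the figures in the comment.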

Wouldn’t Cassini have detected a distortion in the light as it orbited Saturn?

Cassini took that photo and provided the broader view at https://solarsystem.nasa.gov/resources/17394/bent-rings/ (From the second image I think some of the light is bent even further than the diameter of the F ring, though it blends into the colors of the atmosphere) As for FOCAL, a distant star would appear only at one particular radius from the planet at any given part of the horizon, but I don’t see why the star’s image wouldn’t completely circle the upper atmosphere of a planet when viewed from sufficiently far away.

My apologies, I misread what your comment was about. As Michael Fidler comments below, this is basically what Kipping has suggested using Earth’s atmosphere. Kipping’s approach is obviously a lot easier and cheaper to test. The question is whether the heterogeneity of the atmosphere and turbulence make deconvolving the image very problematic. For example, different wind speeds and pressure gradients will distort the light ray paths (the effect Schlieren imaging uses to visualize them). Maybe using lasers to locally adjust the light in some way akin to adaptive optics might help here?

Prof. David Kipping’s Terrascope sounds very similar to what you are talking about. I’ve wondered if the gas giants might be a better place to use their thick hydrogen outer atmospheres as a telescope, especially Neptune, since it is much dimmer and there is less interference from the sunlit side.

Planetary Lensing: Enter the ‘Terrascope’

https://centauri-dreams.org/2019/08/12/planetary-lensing-enter-the-terrascope/

The Terrascope: Challenges Going Forward.

https://centauri-dreams.org/2019/08/13/the-terrascope-challenges-going-forward/

The “Terrascope”: On the Possibility of Using the Earth as an Atmospheric Lens.

David Kipping

https://arxiv.org/abs/1908.00490

Broadcast 3487 Dr. David Kipping.

https://thespaceshow.com/show/03-apr-2020/broadcast-3487-dr.-david-kipping

Prof. Kipping needs support to develop the Terrascope and so far has had very little. The research indicates it is a very practical idea with cost well below what would be needed for SGL FOCAL. This is something that could be done with a ride share CubeSat demo sent to the correct position from earth to see if there are any problems involved. Maybe if the Proxima Centauri signal pans out there may be plenty of money to see what is going on there…

Thanks for the update!! I forgot the plan was to use the Earth – I don’t have an image of refracted light in mind the way I do for Saturn. A bit small, but you can’t beat the location. It would indeed be interesting to see how this works, and *within* our lifetimes … Covid permitting.

Maybe this has been covered elsewhere, but wouldn’t a technological civilization in a sufficiently-widely-separated multiple star system have one (or even more than one) free gravitational lens for their easy use? They couldn’t point it but it would move with the star over time.

Some lucky astronomers over there…..

I don’t think this would work. The telescope has to remain very closely aligned with the star and the target over a period of time to collect the photons. Our sun’s focal point at 550+ AU is in interstellar space. To allow this, the 2 stars must be separated by at least 2x the minimal focal distance. But the inhabited planet must be well within one star’s HZ which means that the telescope would still be just as far out of reach as Earth is to the sun’s focal line. While the focal line from the second star to the inhabited planet can be accessed, the planet’s orbit will mean that any target will be aligned for a very brief moment, rather like an occultation making any imaging next to impossible with any equipment we have, as well as the targets being purely serendipitous. An orbiting telescope might work better, but it will need a relative velocity that keeps it stationary between the 2 stars and will therefore fall towards the inhabited planet’s star. Is this more convenient than a distant location?

A nice YouTube video of the technique.

https://m.youtube.com/watch?v=NQFqDKRAROI