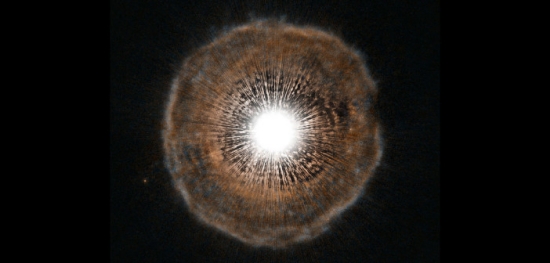

Interesting things happen to stars after they’ve left the main sequence. So-called Asymptotic Giant Branch (AGB) stars are those less than nine times the mass of the Sun that have already moved through their red giant phase. They’re burning an inner layer of helium and an outer layer of hydrogen, multiple zones surrounding an inert carbon-oxygen core. Some of these stars, cooling and expanding, begin to condense dust in their outer envelopes and to pulsate, producing a ‘wind’ off the surface of the star that effectively brings an end to hydrogen burning.

Image: Hubble image of the asymptotic giant branch star U Camelopardalis. This star, nearing the end of its life, is losing mass as it coughs out shells of gas. Credit: ESA/Hubble, NASA and H. Olofsson (Onsala Space Observatory).

We’re on the way to a planetary nebula centered on a white dwarf now, but along the way, in this short pre-planetary nebula phase, we have the potential for interesting things to happen. It’s a potential that depends upon the development of intelligence and technologies that can exploit the situation, just the sort of scenario that would attract Greg Laughlin (Yale University) and Fred Adams (University of Michigan). Working with Darryl Seligman and Khaya Klanot (Yale), the authors of the brilliant The Five Ages of the Universe (Free Press, 2000) are used to thinking long-term, and here they ponder technologies far beyond our own.

For a sufficiently advanced civilization might want to put that dusty wind from a late Asymptotic Giant Branch star to work on what the authors call “a dynamically evolving wind-like structure that carries out computation.” It’s a fascinating idea because it causes us to reflect on what might motivate such an action, and as we learn from the paper, the energetics of computation must inevitably become a problem for any technological civilization facing rapid growth. A civilization going the AGB route would also produce an observable signature, a kind of megastructure that is to my knowledge considered here for the first time.

Laughlin refers to the document that grows out of these calculations (link below) as a ‘working paper,’ a place for throwing off ideas and, I assume, a work in progress as those ideas are explored further. It operates on two levels. The first is to describe the computational crisis facing our own civilization, assuming that exponential growth in computing continues. Here the numbers are stark, as we’ll see below, and the options problematic. The second level is the speculation about cloud computing at the astronomical level, which takes us far beyond any solution that would resolve our near-term problem, but offers fodder for thought about the possible behaviors of civilizations other than our own.

Powering Up Advanced Computation

The kind of speculation that puts megastructures on the table is productive because we are the only example of technological civilization that we have to study. We have to ask ourselves what we might do to cope with problems as they scale up to planetary size and beyond. Solar energy is one thing when considered in terms of small-scale panels on buildings, but Freeman Dyson wanted to know how to exploit not just our allotted sliver of the energy being put out by the Sun but all of it. Thus the concept of enclosing a star with a shell of technologies, with the observable result of gradually dimming the star while producing a signature in the infrared.

Laughlin, Adams and colleagues have a new power source that homes in on the drive for computation. It exploits the carbon-rich materials that condense over periods of thousands of years and are pushed outward by AGB winds, offering the potential for computers at astronomical scales. It’s wonderful to see them described here as ‘black clouds,’ a nod the authors acknowledge to Fred Hoyle’s engaging 1957 novel The Black Cloud, in which a huge cloud of gas and dust approaches the Solar System. Like the authors’ cloud, Hoyle’s possesses intelligence, and learning how to deal with it powers the plot of the novel.

Hoyle wasn’t thinking in terms of computation in 1957, but the problem is increasingly apparent today. We can measure the capabilities of our computers and project the resource requirements they will demand if exponential rates of growth continue. The authors work through calculations to a straightforward conclusion: The power we need to support computation will exceed that of the biosphere in roughly a century. This resource bottleneck likewise applies to data storage capabilities.

Just how much computation does the biosphere support? The answer merges artificial computation with what Laughlin and team call “Earth’s 4 Gyr-old information economy.” According to the paper’s calculations, today’s artificial computing uses 2 x 10⁻⁷ of the Earth’s insolation energy budget. The biosphere in these terms can be considered an infrastructure for copying the digital information encoded in strands of DNA. Interestingly, the authors come up with a biological computational efficiency that is a factor of 10 greater than that of today’s artificial computation. They also provide the mathematical framework for the idea that artificial computing efficiencies can be improved by a factor of more than 10⁷.

Artificial computing is, of course, a rapidly moving target. From the paper:

At present, the “computation” performed by Earth’s biosphere exceeds the current burden of artificial computation by a factor of order 10⁶. The biosphere, however, has carried out its computation in a relatively stable manner over geologic time, whereas artificial computation by devices, as well as separately, the computation stemming from human neural processing, are both increasing exponentially – the former though Moore’s Law-driven improvement in devices and increases in the installed base of hardware, and the latter through world population growth. Environmental change due to human activity can, in a sense, be interpreted as a computational “crisis” a situation that will be increasingly augmented by the energy demands of computation.

We get to the computational energy crisis as we approach the point where the exponential growth in the need for power exceeds the total power input to the planet, which should occur in roughly a century. We’re getting 10¹⁷ watts from the Sun here on the surface and in something on the order of 100 years we will need to use every bit of that to support our computational infrastructure. And as mentioned above, the same applies to data storage, even conceding the vast improvements in storage efficiency that continue to occur.
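The arithmetic behind that timescale can be sketched in a few lines of Python. This is only an illustration, not the paper’s own calculation: it assumes smooth exponential growth starting from the quoted fraction of 2 x 10⁻⁷ of the insolation budget, and the function names are hypothetical. It asks how many doublings separate today’s usage from the full budget, and what doubling time would close that gap in a century.

```python
import math

# Figures quoted in the post (attributed to the working paper).
CURRENT_FRACTION = 2e-7   # share of Earth's insolation used by artificial computing today
HORIZON_YEARS = 100.0     # "roughly a century"


def doublings_to_saturate(fraction: float) -> float:
    """Number of doublings needed for `fraction` of the budget to grow to 1."""
    return math.log2(1.0 / fraction)


def implied_doubling_time(fraction: float, years: float) -> float:
    """Doubling time (years) that uses up the whole budget in `years`.

    Assumes pure exponential growth, which is a simplification.
    """
    return years / doublings_to_saturate(fraction)


def years_to_saturate(fraction: float, doubling_time_years: float) -> float:
    """Years until the whole budget is used, for an assumed doubling time."""
    return doublings_to_saturate(fraction) * doubling_time_years


if __name__ == "__main__":
    n = doublings_to_saturate(CURRENT_FRACTION)
    t = implied_doubling_time(CURRENT_FRACTION, HORIZON_YEARS)
    print(f"Doublings needed: {n:.2f}")
    print(f"Implied doubling time for a {HORIZON_YEARS:.0f}-year horizon: {t:.2f} years")
```

Under these assumptions, only about 22 doublings separate today’s usage from the entire insolation budget, so a century corresponds to power demand doubling every four to five years. A slower assumed doubling time pushes the date out proportionally, which `years_to_saturate` lets you explore.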

Civilizations don’t necessarily have to build megastructures of one form or another to meet such challenges, but we face the problem that the growth of the economy is tied to the growth of computation, meaning it would take a transition to a different economic model — abandoning information-driven energy growth — to reverse the trend of exponential growth in computing.

In any case, it’s exceedingly unlikely that we’ll be able to build megastructures within a century, but can we look toward the so-called ‘singularity’ to achieve a solution as artificial intelligence suddenly eclipses its biological precursor? This one is likewise intriguing:

The hypothesis of a future technological singularity is that continued growth in computing capabilities will lead to corresponding progress in the development of artificial intelligence. At some point, after the capabilities of AI far exceed those of humanity, the AI system could drive some type of runaway technological growth, which would in turn lead to drastic changes in civilization… This scenario is not without its critics, but the considerations of this paper highlight one additional difficulty. In order to develop any type of technological singularity, the AI must reach the level of superhuman intelligence, and implement its civilization-changing effects, before the onset of the computational energy crisis discussed herein. In other words, limits on the energy available for computation will place significant limits on the development of AI and its ability to instigate a singularity.

So the issues of computational growth and the energy to supply it are thorny. Let’s look in the next post at the ‘black cloud’ option that a civilization far more advanced than ours might deploy, assuming it figured out a way to get past the computing bottleneck our own civilization faces in the not all that distant future. There we’ll be in an entirely speculative realm that doesn’t offer solutions to the immediate crisis, but makes a leap past that point to consider computational solutions at the scale of the solar system that can sustain a powerful advanced culture.

The paper is Laughlin et al., “On the Energetics of Large-Scale Computation using Astronomical Resources.” Full text. Laughlin also writes about the concept on his oklo.org site.

Do you know if this paper will be hosted anywhere besides on Google Drive, like on ArXiv or ResearchGate or somewhere? I’d like to link to it but I don’t trust Google Drive to keep it available for the long term.

When I discussed this with Dr. Laughlin via email this morning, he told me the paper is going to stay where it is as a working paper for discussion. No plans currently to place it elsewhere. I think you’re safe in linking to it, Doug.

This was certainly entertaining speculation.

The increasing energy demand of computation is well known. Bitcoin miners have even relocated to sites in Canada that offer low electric rates from hydroelectric systems. While newer forms of CPUs will drastically increase computing efficiency, both efficiency gains and the growth of computation will eventually run up against limits. It really doesn’t matter where the energy in the economy is used; growth will eventually reach terrestrial limits, and well before a millennium has passed. Starships as currently conceived will only happen if we have a solar system-wide economy.

Interestingly, Charles Stross’ Accelerando only looked at sources of material for conversion to “computronium”. As far as I recall, the energy required to run computing systems was not a factor in the novel.

So far so good.

Where I part company with the authors is the idea of using AGB stars. It doesn’t do any civilization much good to halt economic growth until their star is in that state. Far better to harvest their planets and turn them into energy and computation devices in a Dyson swarm. We could even start on such a program today, although we would want to use robots to start mining and manufacturing these devices off-world.

Assuming we still want to live on earth, then the distance factor is still a problem whatever method we choose. No amount of parallel computation will solve the problem of sending computational jobs to devices 2 AU to the other side of the sun and receiving results in anything less than 35 minutes, unless batch job computing (ah, the good old days!) is acceptable. Maybe that is OK for massive computational problems, such as training AIs with data or evolving hyper-intelligent artilects.
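The light-lag figure above is easy to check. Here is a minimal sketch in Python, assuming a straight-line path at the speed of light and ignoring any relay or processing overhead; the function names are made up for illustration.

```python
# Exact defined constants (SI and IAU definitions).
AU_METRES = 149_597_870_700        # one astronomical unit, in metres
LIGHT_SPEED_M_PER_S = 299_792_458  # speed of light in vacuum, in m/s


def one_way_light_minutes(distance_au: float) -> float:
    """Light travel time in minutes over `distance_au` astronomical units."""
    return distance_au * AU_METRES / LIGHT_SPEED_M_PER_S / 60.0


def round_trip_light_minutes(distance_au: float) -> float:
    """Time to send a job and get the result back, ignoring compute time."""
    return 2.0 * one_way_light_minutes(distance_au)


if __name__ == "__main__":
    d = 2.0  # device on the far side of the Sun from Earth, roughly 2 AU away
    print(f"One way:    {one_way_light_minutes(d):.1f} minutes")
    print(f"Round trip: {round_trip_light_minutes(d):.1f} minutes")
```

For a device 2 AU away this gives a round trip of a little over 33 minutes before any computing is done, consistent with the rough 35-minute figure.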

Perhaps intelligence becomes “embodied” in a computronium shell[s], either as a single, or many, mind[s], like Hoyle’s Black Cloud, or the planetary mind of Lem’s Solaris. However, I am skeptical of that too. Such a mind[s] would be highly vulnerable to a problem with its star, or an external threat. Individual autonomous agents able to travel the galaxy or universe are far more resilient. [Note the fate of Stapledon’s 18th Men on Neptune, waiting for their end, unable to travel to the safety of another star. (Pluto had only been discovered as the book, Last and First Men was published)]

In summary, the AGB star solution does not seem like a viable way for a technological species to solve its economic growth problem in a time frame suited to its development. Dyson swarms, whether populated or just computational machines, are a better and more flexible solution, IMO.

This topic reminds me of an old cliche: “The trend is your friend. Until it isn’t.”

What are we going to be computing? Why are we going to be computing it? Why is it so important to compute that we’ll convert vast quantities of matter into computronium?

I can imagine a 19th century economist recommending to the government that it is critical to the nation’s future to expand blacksmith apprenticeship positions since the trends for transportation and construction are such that we will need many more of them 25 years hence to satisfy the market demand for horseshoes and nails.

Well, maybe if we’re still using Windows in the year 2500.

More likely is that when we can’t economically do more computing we’ll find alternatives, or perhaps we’ll fire all the economists.

Right on, Ron! You’ve hit the nail on the head. Our societal obsession with computation is based primarily on boosting profits by eliminating employees. And when that revenue source dries up, the suits will go about increasing the efficiency of wool-gathering and navel-gazing about how to improve on the process.

Computers are great for navigating spacecraft and solving systems of differential equations, but we use them to sell and optimize commercial propaganda. That’s not science or technology, it’s marketing. And you know what marketing is, don’t you? It’s the art of selling people things they don’t need and can’t afford, preferably on credit so you can make money on the financing. Everybody knows actually selling hard goods is for the terminally uncool. This is the technology that could take us to the stars, and we use it to peddle porn on the internet.

You make an excellent point: just what are those Matrioshka brains actually thinking about, anyway? You can’t get a haircut on the World-Wide Web, and what do these cosmic macro-intelligences do with the profits they secure by selling each other pizza? Or whatever passes for pizza in Vernor Vinge land.

We couldn’t build the Panama Canal today because the software costs would be far too high.

Isn’t this a good way to boost productivity and individual wealth over the long term? Don’t we want to be wealthy enough to have starships?

That is a very cynical view of the world. Eliminating marketing and advertising is throwing out the baby with the bathwater. Don’t we all use adblockers to reduce the intrusiveness of adverts? Don’t we learn to disregard the siren call of marketers, and won’t computation become another tool to defend our minds against manipulation?

Living in a simulated world you can have simulated haircuts if you desire. I expect most of us would be perfectly coiffed, eat gourmet meals, and never be sick or injured in a simulated world. OTOH, if we are living in a simulation now…

“Listen carefully, because our options have changed….”

Some are already choosing a simulated world or a part thereof

“We couldn’t build the Panama Canal today because the software costs would be far too high.” Then I guess we’d have to get the slide rules out of museums.

Hello Ron.

You got very close to what I was about to say.

In my only sci-fi story the protagonists spend a significant part of their free time in a perfect VR environment with all senses connected and stimulated. They still won’t need to convert a planet’s mass into computer hardware, not even when the personality and attention part of the person’s mind resides in the VR world. (OT: A funny detail is that the virtual out-of-body experience experiments done some years ago did show that my plot device actually might be possible.)

That we need more processing power and more efficient computers now does not mean we will always need them in the future.

We will not have a civilization max out all its resources on computation, nor on creating interstellar spacecraft, though reallocating some military funding to space activities would not be a bad idea. In any case, I view the idea of a matrioshka brain as a paper clip dead end.

There is a just-released documentary “A Glitch in the Matrix” about simulation theory and whether we are living in a simulation. While it got a decent review on rogerebert.com and IMDB gives it a 5.2 viewer rating, I found it a pretty awful attempt to explore this idea and those who espouse it. Even Bostrom seemed a bit uncomfortable when interviewed. I see it as a techno-religion with no hard evidence to support it. It is also “simulations all the way down”.

The concept of living in a simulation is the substratum of Buddhism two and a half millennia ago, and is derived from the Vedic traditions preceding it.

I asked my miss about this, as she’s from a country where Buddhism is the main religion. She protested this notion strongly and replied that:

A. If life was a simulation it would render any action good or bad meaningless. And so the idea is diametrically opposed to Buddhist teachings.

B. The very notion that all life is merely a simulation opens the Pandora’s box of predetermined will, which also opposes the Buddhist idea that we’re supposed to improve ourselves, or try our best to do so.

This is what happens when you have too much computing power at your disposal. It doesn’t end well.

https://en.wikipedia.org/wiki/The_Nine_Billion_Names_of_God

Or Clarke’s later “Dial F for Frankenstein” where the telephone system became aware. But TNBNoG requires that the Tibetans were correct, and the end of the universe happened with just one fast computer that could work in a Tibetan monastery. A cellphone could probably do that today!

SkyNet is the current meme for malevolent AI based on global, connected computing systems. I suspect that Elon Musk deliberately called his comsat swarm StarLink because of the Sky:Star & Net:Link word associations.

I am currently reading Yuval Noah Harari’s Homo Deus where he talks about AI displacing humans and making most of humanity useless. But he sees this as intelligence without consciousness. Peter Watts’ Blindsight also explores intelligence without consciousness or understanding.

Unless there is some sort of Butlerian Jihad, I see increasing computerization as almost inevitable. It may even be the driver of space industrialization and the start of machine civilization. In a few hundred years, human populations might collapse leaving a relatively small population living rather as Asimov’s Solarians do, served by huge numbers of robots, a version of Ancient Greece. Or we may have large populations living in a dystopia, where human servants are the luxury item the wealthy elite has, and everyone else lives a hardscrabble life unable to compete with machines.

If AI does cause the end of humanity, I don’t think it will be by a conscious AI entity, but rather by a host of zombie intelligent machines that make our lives untenable as a byproduct of their actions.

Thanks for the link to wiki commons Blindsight novel. What a good read. Some blur between consciousness, self-awareness/self centredness, and sentience, exploring the grey areas between. Blindsight being somewhere between knowing and perceiving.

Nothing resembling the human along that timeline, better to steer clear.

It always annoys me when they say these stars are dying. An intelligent race would collect the material thrown off to form new planets and structures. Large magnetic fields could collect vast amounts of material to form these new objects.

Here is another potential way to keep stars alive beyond their natural states, quoted from here:

http://www.coseti.org/lemarch1.htm

Reeves (1985) suggested the intervention of the inhabitants that depend on these stars for light and heat. According to Reeves, these inhabitants could have found a way of keeping the stellar cores well-mixed with hydrogen, thus delaying the Main Sequence turn-off and the ultimately destructive, red giant phase.

Beech (1990) made a more detailed analysis of Reeves’ hypothesis and suggested an interesting list of mechanisms for mixing envelope material into the core of the star. Some of them are as follows:

* Creating a “hot spot” between the stellar core and surface through the detonation of a series of hydrogen bombs. This process may alternately be achieved by aiming “a powerful, extremely concentrated laser beam” at the stellar surface.

* Enhanced stellar rotation and/or enhanced magnetic fields. Abt (1985) suggested from his studies of blue stragglers that meridional mixing in rapidly rotating stars may enhance their Main Sequence lifetime.

If some of these processes can be achieved, the Main Sequence lifetime may be greatly extended by factors of ten or more. It is far too early to establish, however, whether all the blue stragglers are the result of astroengineering activities.

My comments:

Rationally, no species so advanced would build a Black Cloud or a Dyson Shell around a star that is unstable or has a naturally short life span, especially if they have the ability to stabilize the star and extend its Main Sequence existence.

Perhaps this is yet another reason why advanced ETI might choose to occupy a globular star cluster: Lots of stars in relatively close proximity to choose from. If one goes dim, pick a new neighbor.

Let the star fall apart and we collect the hydrogen to make red dwarfs, quite a few could be made.

Asimov used the analogy that this is like sheltering under another tree in a rainstorm when your tree starts to let the rain through. Different stellar lifetimes might however solve that problem if the useful stage is relatively transient, allowing continuous occupation of that star by star hopping.

However, this strikes me as analogous to the nomadic way of life. Follow the game herds and fresh grazing. But we learned to obviate that with agriculture and built civilization. I cannot imagine why Kardashev II civilizations couldn’t find similar solutions to maintain their civilization around a star.

Well the question is, then, what other sources of power, heat, and light can we use on a cosmic scale that work as well as a star? Even a small one (red dwarf) has multiple advantages.

A civilization might use geothermal energy from a host world, but how efficient is that really for an interstellar scale society?

Yes you can huddle around a black hole, but how many can do that efficiently?

You can carry your own nuclear power, but again you will need worlds for resources and such, and they in turn will need their stars even to exist in the first place.

Wandering around may be a small price to pay for having such an amazing natural resource as a star. And that is why I have also brought up the idea of globular star clusters, especially those that may have been cultivated by Artilects to serve their energy needs. They won’t have to travel very far living in a GSC.

I think the question is what is the best long term source of lots of energy. Hydrogen fusion certainly seems like the best as far as we know. However, it is a finite resource. To extend your time the best solution is to:

1. burn it as slowly as possible commensurate with your needs.

2. husband and store hydrogen for burning later, and not allow it to be consumed in stellar furnaces.

To me, the clear and obvious solution (assuming the technology is available) is to dismantle as many stars as you can (say a complete globular cluster) and assemble small, slow-burning stars or, better still, fusion reactors, where needed. Then feed the stored hydrogen from the stars into the reactor[s] and/or build small stars as needed.

If this logic is correct, we should be able to observe:

1. “holes” in the galactic arms with just a few, or none, small dim stars in the hole. All other stars apparently absent.

2. A gravitational lens effect in the “hole” as all that dark cold hydrogen is present, but still a massive gravity well.

3. It should be distinguishable from a Dyson sphere if there is no point IR signal with a stellar-sized signal. A single or few M dwarfs as power sources might be enclosed as Dyson spheres/swarms but these can be distinguished by the gravitational lens effect from Dyson megastructures around a few stars whilst most other stars are free of such structures.

Such an approach would provide energy for civilization for an unimaginably long time, and remain viable as the universe turns cold and dark and the last cool stars have burned out and gone dark. [All this assumes no “great rip” from expansion].

So I don’t think we are that far apart from each other, other than in the details.

No we are not far off from each other in this regard.

And look at this new news item:

https://www.space.com/black-holes-globular-cluster-hubble-telescope

Another celestial item that an advanced ETI might want in their “cultivated” globular star cluster.

https://news.columbia.edu/energy-particles-magnetic-fields-black-holes#:~:text=A%20new%20Columbia%20study%20indicates,reconnection%20of%20magnetic%20field%20lines.&text=A%20remarkable%20prediction%20of%20Einstein's,energy%20available%20to%20be%20tapped.

The whole biosphere is “computing” as the paper notes. While some of our computing is truly a waste of resources (I’m looking at you Bitcoin), there is no doubt in my mind that continuing to expand computation is a good thing. It is the best way we know how to understand the world and make it more livable. Just as the Industrial Revolution disrupted the way of life for subsistence farmers, and the development of agriculture disrupted hunter-gatherer life, so will the Information Revolution be a cause of disruption. But would you rather none of these changes happened and you lived a Malthusian life, brutish and short, as hunters or subsistence farmers?

I see vast scope to increase computation, from edge computing making the artificial world seem alive, to vast compute farms offering holodeck or virtual world experiences. In an ideal liberal society, we can choose what levels of technology we want to live with. IMO, making that world is the real challenge.

“But would you rather none of these changes happened and you lived a Malthusian life, brutish and short, as hunters or subsistence farmers?”

That’s a straw man. I said no such thing, nor would I.

No, you didn’t.

However, I see your argument against the increasing use of computerization (automation) as similar to ones that could be voiced by peoples of those eras. There is the obvious historical Ludditism as a reaction against industrial machines replacing crafts and individual handmaking. Hunter-gatherers no doubt railed against crop farming reducing their hunting grounds and excluding them from the farmland. This was partially shown in the western US as the native population was restricted to reservations as the European western expansion continued and is reflected in the depressingly common trope of movie westerns.

What is common is the sense of “this far, but no more”, and even “turn back the clock”. Compared to the environmental damage of industrial-scale farming and manufacturing, computerization seems relatively mild. It has certainly made my life a lot easier. Yes, there are annoyances, like dumb voice menus, but even here AI is likely to replace them with human-like responses. If corporations won’t install AIs to handle calls, then I have no doubt consumers will have AI assistants that will navigate the dumb voice assistants.

I see computation as being similar to autotroph plants. They convert CO2 and H2O into energy-rich carbon compounds that are fed on by heterotrophs. Corporate computation is used to create information-rich products that consumers use. The larger the autotroph base of the food chain, the greater the mass of supported heterotrophs. The larger the base of information-creating computation, the larger the population of information-using and information-converting consumers. For all the faults of social media computation, they do allow us to do things that we were unable to do prior to their creation. And just as in nature with its poisonous plants, poisonous animals, parasites, and diseases, so it is in the information world, with malware, trolls, and a host of other information poisons that we have to adapt to deal with.

If computation hits an energy wall, well we have the whole of the sun’s output to breach that wall. Bitcoin mining satellites (ugh!). Server farms to do massive computer modeling powered by solar power satellites, and even possibly located in space (Jupiter might be a great heat sink).

There are dangers of course, but I tend to think Kevin Kelly is right: as long as technology has a small net positive benefit, then as it accumulates we humans will benefit too.

“I see your argument against the increasing use of computerization (automation)… ”

I…did…not…say…that.

Stop your insidious habit of putting words in my mouth that I did not say and that I would not say. Stop it. Now.

I think Ron S is right to protest. His questions “What are we going to be computing? Why are we going to be computing it? Why is it so important to compute that we’ll convert vast quantities of matter into computronium?” are not advocating Luddism. It’s really about establishing that if there is a limit to computational power, there has to be a prioritisation. Henry Cordova and yourself have each given examples of frivolous or wasteful applications: marketing and bitcoin, respectively.

This article and its comments reminded me of Cory Doctorow and Charles Stross’s 2012 novel “The Rapture of the Nerds” (https://en.wikipedia.org/wiki/The_Rapture_of_the_Nerds). Did no one here read that? In there, you can find an account of what the computing power of a Matrioshka brain is applied to, and yes: it’s indulgent virtual reality. After all, so long as there’s capacity, I guess Maslow’s hierarchy of needs suggests applying such a brain to delivering the pinnacle of needs, self-actualisation, via virtual reality when all others are satisfied, though I suspect most will settle for the easier option: entertainment.

Consider the amount of computing that underlies the conversation taking place in this very thread, or in wider social media. Or the search capabilities used for research expanding and integrating scientific knowledge. All this computing extends, augments, and interconnects global mental culture much like the convolutions and synaptic axons in our physical brain enhance our intellectual capacity. This is a trend, I think, that will certainly continue. It’s not the Internet of Things, it’s the Internet of Us.

Indeed it is. Our civilization is becoming increasingly interconnected. This is good, not bad.

I also want to say that this connection is vastly less environmentally destructive than physical travel to collect in a small space. This pandemic has shown that electronic meetings can work. Schools and universities have managed to teach without crowding students into a room. I have had video meetings with distant physicians obviating the need to spend time and energy traveling. I have been able to attend video meetings that I hope will continue post-pandemic. The SETI meetings now online are attended by interested people all over the world who can now ask questions rather than just watch the recordings. Businesses recognize that workers really can telecommute, effectively, something that has been resisted for decades by [insecure] middle managers. The shortcomings of current video meetings will be increasingly addressed with new technology. And let’s not forget the potential of self-driving vehicles that will increase who is capable of becoming mobile, while reducing the unnecessary burden of vehicle ownership (something Henry C should condone as it should reduce car advertising and marketing) and the cost of road construction and maintenance. [With the recent collapse of another section of California’s HWY 1 wouldn’t it be nice to experience an immersive trip down this scenic coast from an aerial drone, reducing the impact of vehicles, but also allowing more freedom to explore beaches and hillsides impossible today.] While reality is always going to be viscerally superior, I personally would love to have an immersive experience of exploring the Moon and Mars in safety and low cost. On Earth, one could climb sections of Everest, or explore the oceans. More expensive trips could use robots to physically interact with the world as one explores the virtual immersive one. Or perhaps go for implanted memories, as supplied by Rekal Corp. in PK Dick’s “We Can Remember It for You Wholesale”.

I’ve always wanted to see the Northern Lights. However, in considering such trips, I find the probability of success too low relative to the expense of the trip. On the other hand, it won’t be long before I can get a high quality VR experience for a very modest price.

An example of Bitcoin as a computational parasite.

Bitcoin uses more [electric?] energy than Argentina.

Is Satoshi an alien AI that is intent on wrecking human technological civilization with this insidious invention? ;)

Consider this from a thermodynamic point of view… Bitcoin represents a store of low entropy created through a concentration of energy. Much like charging a battery, free energy is then available for use. One wouldn’t consider charging a battery a waste of energy, although one would look for the most efficient waste-less way of charging, and there are schemes for making cryptocurrencies more efficient without losing the ‘proof’ that contributes to their utility.

The Black Cloud – I remember a year or two after its publication obtaining that same paperback from a local midwest pharmacist’s paperback section. A quick check to see if it was still there. The story was quite distinct from the Robert Heinlein and Poul Anderson stories I used to search for in the bookmobile that stopped at grammar school…But in retrospect, it was also interesting to consider the log rolling going on in the story. Fred’s friend the Cloud argued persuasively for the Steady State universe. And the Cloud, being the cosmic roving entity it was, should have known.

In the story the Earth and the Cloud had a period of conversation conducted by radio telescopes adapted after the critter spread out into something of a circumstellar disk that had dropped by to visit our sun.

There was, I suspect, a light lag in the direct communications in the story, but overall the Cloud’s reflexes were near as fast as human.

And that’s what I still don’t get. Granted, you could place in parallel a lot of thumb drives and motherboards in a circumstellar disk, but it seems like there would be some architecture issues and lags.

It looks, though, like a lot of fundamental work has been done on one aspect of Kardashev civilization computer firepower: the extension cord.

Absolutely correct. But note that modularity is how your brain is organized, especially the cerebrum. Computation happens at the column (and mini-column) unit. Then the result is spread out and traverses to other areas of the cerebrum and other parts of the brain. A circumstellar disk of computation would work in a similar fashion: fast computation locally to parallelize, and then a slower spread around the disk if needed. If all that computation needs to be collected in one place, e.g. Earth, then we will have to accept that huge computational jobs that employ the whole disk will take time to complete, just as with supercomputer modeling jobs.
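To put rough numbers on that local-fast, global-slow picture, here is a minimal back-of-the-envelope sketch. It assumes a disk of radius 1 AU, a local compute module about 1 km across, and signals that travel in straight lines (or along the disk) at the speed of light. The radius, the module size, and the function names are illustrative assumptions of mine, not figures from the paper or from the comment above.

```python
import math

C = 299_792_458.0        # speed of light in m/s (exact by definition)
AU = 149_597_870_700.0   # astronomical unit in m (exact by IAU definition)


def light_lag_seconds(distance_m: float) -> float:
    """One-way signal delay over a distance, assuming light-speed links."""
    return distance_m / C


def disk_lags(radius_au: float, module_size_m: float = 1_000.0) -> dict:
    """Compare delay inside one local module with delays across the disk.

    radius_au and module_size_m are hypothetical inputs chosen for illustration.
    """
    r = radius_au * AU
    return {
        # delay across a single local module (the "column" of the analogy)
        "local_module_s": light_lag_seconds(module_size_m),
        # straight-line delay between opposite edges of the disk
        "across_diameter_s": light_lag_seconds(2 * r),
        # delay if the signal must follow the disk halfway around the star
        "half_circumference_s": light_lag_seconds(math.pi * r),
    }


if __name__ == "__main__":
    for name, seconds in disk_lags(1.0).items():
        print(f"{name}: {seconds:.6g} s")
```

Under these assumptions a local module settles in microseconds, while anything that needs the whole disk waits on delays of many minutes, which is the gap the brain analogy is pointing at.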

Achieving artificial general intelligence in a top-down manner may be a hard nut to crack. In the bottom-up mode it may well be self-organizing like the putative primordial soup of organic molecules at the dawn of life (on Earth). It would be mediated by increasing connectivity within and across domains, from one’s refrigerator to a planetary lander.

Such a transition to artificial general intelligence may be so insidious that it could be overlooked and, when later quite obvious, be accepted by common subliminal consensus as being quite natural.

Why just one intelligence? At best it might be a hive mind, just as our brains hold many agents that create the illusion of a single identity. Why not many, intelligent machines lacking consciousness, mimicking life, rather than a mythical deity?

Both the physical brain and the functional mind are now recognized to be composed of disparate but well-integrated components. Each of the components is intelligent within its own domain but nearly non-functional in regard to the functions of other domains.

In an intelligent internet or in a Matrioshka brain, each component might be so extensive and intensive as to require dedicated ancillary capabilities, allowing each component varying degrees of autonomy within the integrated whole.

The late great Robert Bradbury (he said he had no idea if he was related to science fiction author Ray Bradbury, by the way) was an early advocate for Matrioshka Brains as the real reason Dyson Shells would be built…

https://www.gwern.net/docs/ai/1999-bradbury-matrioshkabrains.pdf

https://www.scienceabc.com/nature/universe/matrioshka-brain.html

https://www108.lamp.le.ac.uk/ojs1/index.php/pst/article/view/2559/2394?acceptCookies=1

https://www.orionsarm.com/eg-article/4847361494ea5

One thing he did for me at least was to expand the paradigm of our concepts of the forms alien life may take. The aliens of popular-level science fiction like Star Trek and Star Wars are pedestrian by comparison.

Neither Buddhism, nor Jainism, nor two and a half of the six philosophic schools of Hinduism acknowledge any universal deities. In those systems the deities are different from grasshoppers and bullfrogs in degree, but not in kind.

“If life was a simulation it would render any action good or bad meaningless. And so the idea is diametrically opposed to Buddhist teachings.”

Maybe whoever is watching the simulation rewards us with the simulation of karmic reward, retribution, or the simulation of an afterlife.

There could be a bajillion beings watching us and they vote on what the simulation does next.

Is the soul comprised of dark energy? I dunno.