Let’s take a brief break from research results and observational approaches to consider the broader context of how we do space science. In particular, what can we do to cut across barriers between different disciplines as well as widely differing venues? Working on a highly directed commercial product is a different process than doing academic research within the confines of a publicly supported research lab. And then there is the question of how to incorporate ever more vigorous citizen science.

SpaceML is an online toolbox that tackles these issues with a specific intention of improving the artificial intelligence that drives modern projects, with the aim of boosting interdisciplinary work. The project’s website speaks of “building the Machine Learning (ML) infrastructure needed to streamline and super-charge the intelligent applications, automation and robotics needed to explore deep space and better manage our planetary spaceship.”

I’m interested in the model developing here, which makes useful connections. Both ESA and NASA have taken an active interest in enhancing interdisciplinary research via accessible data and new AI technologies, as a recent presentation on SpaceML notes:

NASA Science Mission Directorate has declared [1] a commitment to open science, with an emphasis on continual monitoring and updating of deployed systems, improved engagement of the scientific community with citizen scientists, and data access to the wider research community for robust validation of published research results.

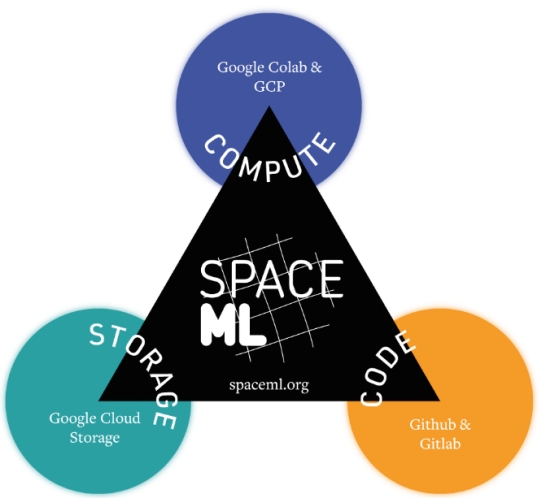

Within this context, SpaceML is being developed in the US by the Frontier Development Lab and hosted by the SETI Institute in California, while the UK presence is via FDL at Oxford University and works in partnership with the European Space Agency. This is a public-private collaboration that melds data storage, code-sharing and data analysis in the cloud. The site includes analysis-ready datasets, space science projects and tools.

Bill Diamond, CEO of the SETI Institute, explains the emergence of the approach:

“The most impactful and useful applications of AI and machine learning techniques require datasets that have been properly prepared, organized and structured for such approaches. Five years of FDL research across a wide range of science domains has enabled the establishment of a number of analysis-ready datasets that we are delighted to now make available to the broader research community.”

The SpaceML.org website includes a number of projects including the calibration of space-based instruments in heliophysics studies, the automation of meteor surveillance platforms in the CAMS network (Cameras for Allsky Meteor Surveillance), and one of particular relevance to Centauri Dreams readers, a project called INARA, which stands for Intelligent ExoplaNET Atmospheric RetrievAl. Its description:

“…a pipeline for atmospheric retrieval based on a synthesized dataset of three million planetary spectra, to detect evidence of possible biological activity in exoplanet atmospheres.”

SpaceML will curate a central repository of project notebooks and datasets generated by projects like these, with introductory material and sample data allowing users to experiment with small amounts of data before plunging into the entire dataset. New datasets growing out of ongoing research will be made available as they emerge.

I think researchers of all stripes are going to find this approach useful as it should boost dialogue among the various sectors in which scientists engage. I mentioned citizen scientists earlier, but the gap between academic research labs, which aim at generating long-term results, and industry laboratories driven by the need to develop commercial products to pay for investment is just as wide. Availability of data and access to experts across a multidisciplinary range creates a promising model.

James Parr is FDL Director and CEO at Trillium Technologies, which runs both the US and the European branches of Frontier Development Lab. Says Parr:

“We were concerned on how to make our AI research more reproducible. We realized that the best way to do this was to make the data easily accessible, but also that we needed to simplify both the on-boarding process, initial experimentation and workflow adaptation process. The problem with AI reproducibility isn’t necessarily, ‘not invented here’ – it’s more, ‘not enough time to even try’. We figured if we could share analysis ready data, enable rapid server-side experimentation and good version control, it would be the best thing to help make these tools get picked up by the community for the benefit of all.”

So SpaceML is an AI accelerator, one distributing open-source data and embracing an open model for the deployment of AI-enhanced space research. The current datasets and projects grow out of five years of applying AI to space topics ranging from lunar exploration to astrobiology, completed by FDL teams working in multidisciplinary areas in partnership with NASA and ESA and commercial partners. The growth of international accelerators could quicken the pace of multidisciplinary research.

What other multidisciplinary efforts will emerge as we streamline our networks? It’s a space I’ll continue to track. For more on SpaceML, a short description can be found in Koul et al., “SpaceML: Distributed Open-source Research with Citizen Scientists for the Advancement of Space Technology for NASA,” COSPAR 2021 Workshop on Cloud Computing for Space Sciences” (preprint).

This reminds me of the same issue in bioinformatics 20 years ago. At that time, there was a lot of algorithm development but a paucity of quality datasets to grind on. At the same time, there was an issue of how to create quality datasets, and ETL tools to make datasets into tables suitable for ML in those days. This was a general problem and a number of companies appeared to provide hosting and analysis of good datasets. We see the issue of building good datasets in the astronomy and astrobiology domains. For a post a few weeks back, I considered building a dataset to test a question but found that the inordinate amount of time needed to create even a small set was not worth the effort starting with published data in a PDF and trying to manually supplement it with data from a catalog.

The INARA dataset sounds very interesting and I will try to peruse it to see just how it can be used. I hope this facilitates “citizen science” for biosignature detection.

This talk from 2018 is a quick overview of what they are attempting.

The full INARA dataset is 55GB. A 150KB dataset is offered for playing with.

Machine learning techniques and newer learning technologies would become the dominant way for interrogating the unthinkably massive data to be captured by tools such as SKA, all sky and all the time. However the ‘photographic plate’ paradigm for capturing static information and limits on the current storage technology hamper the rapid discovery of new science that should be soon occurring. Imagine vast data stores that capture all sky observation in terms of all the spacetime dimensions with AI in train to recognise the features of essentially a digital version of the live universe.

If at the smallest scales that include some semblance of stability, the universe is discontinuous quanta, then in a sense it IS a digital universe – and its own computer, perhaps?

I wasn’t suggesting anything more ambitious than a digital model storing astronomical data, but your idea was explored in the mini series Devs: https://en.wikipedia.org/wiki/Devs

“Scan for life signs on the surface” is becoming a real thing…

http://nccr-planets.ch/blog/2021/06/18/scientists-detect-signatures-of-life-remotely/

The good, old task, that many time has been analyzed by multiple Sci-Fi novels, one example: “ The Hitchhiker’s Guide to the Galaxy”…

Even if we will build our “Deep Thought”, the main problem remains – what will be the question?

It seams to me that the task to “compose the right question” – much more complicated than AI invention.

Let try to explain and define to our self what is a life and an intelligence, before we can try to begin to compose questions to “Deep Thought”.