Looking into Astro2020’s recommendations for ground-based astronomy, I was heartened by the emphasis on ELTs (Extremely Large Telescopes), as found within the US-ELT project to develop the Thirty Meter Telescope and the Giant Magellan Telescope, both now under construction. Such instruments represent our best chance for studying exoplanets from the ground, even rocky worlds that could hold life. An Astro2020 with different priorities could have spelled the end of both these ELT efforts in the US, even as the European Extremely Large Telescope, with its 40-meter mirror, moves ahead toward first light at Cerro Armazones (Chile), projected for 2027.

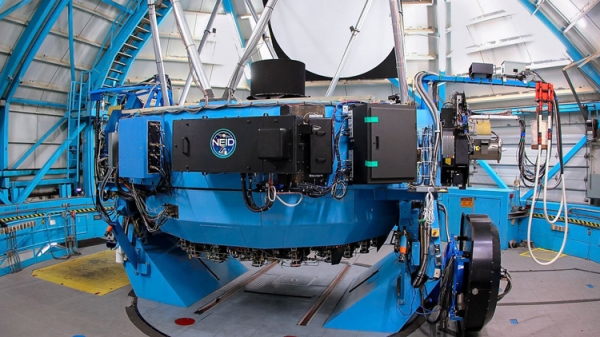

So the ELTs persist in both US and European plans for the future, a context within which to consider how planet detection continues to evolve. So much of what we know about exoplanets has come from radial velocity methods. These in turn rely critically on spectrographs like HARPS (High Accuracy Radial Velocity Planet Searcher), which is installed at the European Southern Observatory’s 3.6m telescope at La Silla in Chile, and its successor ESPRESSO (Echelle Spectrograph for Rocky Exoplanet and Stable Spectroscopic Observations). To the mix we can add the NEID spectrometer on the WIYN 3.5m telescope at Kitt Peak, now operational and hunting for ever tinier Doppler shifts in the light of host stars.

We’re measuring the tug a planet exerts on its star along our line of sight: how is the star pulled toward us, then away, as the planet moves along its orbit? Given that the Earth induces a reflex motion of a mere 9 centimeters per second in the Sun, it’s encouraging to see astronomers closing in on that range right now. NEID demonstrated a precision of better than 25 centimeters per second in the tests leading up to its commissioning, giving us another tool for exoplanet detection and confirmation.
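As a rough check on the 9 cm/s figure, the standard two-body expression for the stellar radial-velocity semi-amplitude, K = (2πG/P)^(1/3) · m_p sin i / (M_* + m_p)^(2/3) / √(1 − e²), can be evaluated directly. The Python sketch below is illustrative only: it assumes a circular (e = 0), edge-on (i = 90°) orbit and rounded textbook values for the constants, and the function name `rv_semi_amplitude` is our own hypothetical helper, not part of any NEID software.

```python
import math

# Rounded physical constants (SI units); assumed textbook values
G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30         # solar mass, kg
M_EARTH = 5.972e24       # Earth mass, kg
YEAR_S = 365.25 * 86400  # one Julian year, s

# "Better than 25 cm/s" precision quoted for NEID, in m/s
NEID_PRECISION = 0.25


def rv_semi_amplitude(m_planet, m_star, period_s, inclination_deg=90.0, ecc=0.0):
    """Hypothetical helper: stellar radial-velocity semi-amplitude K in m/s.

    Uses the standard two-body formula; inclination_deg=90 means edge-on.
    """
    sin_i = math.sin(math.radians(inclination_deg))
    return ((2 * math.pi * G / period_s) ** (1 / 3)
            * m_planet * sin_i
            / (m_star + m_planet) ** (2 / 3)
            / math.sqrt(1 - ecc ** 2))


# Earth tugging on the Sun, assuming a circular, edge-on orbit
k_earth = rv_semi_amplitude(M_EARTH, M_SUN, YEAR_S)
print(f"Earth's pull on the Sun: K = {k_earth * 100:.1f} cm/s")
print(f"NEID precision / Earth signal = {NEID_PRECISION / k_earth:.1f}")
```

Under these assumptions the result lands just under 9 cm/s, so an Earth twin's signal is still a few times smaller than the 25 cm/s figure, which is why stacking many observations and modeling stellar noise matter so much.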

But this is also a story about the vast amount of data generated by such observations, and the methods needed to distribute and analyze it. On an average night, NEID will collect about 150 gigabytes of data, which are sent to Caltech and from there, via a data management network called Globus, to the Texas Advanced Computing Center (TACC) for analysis and processing. TACC, in turn, extracts metadata and returns the data to Caltech for further analysis. The results are made available by the NASA Exoplanet Science Institute via its NEID Archive.
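To put “about 150 gigabytes” per night in perspective, a back-of-the-envelope Python sketch can scale it up. It assumes, purely for illustration, that NEID observes every night of the year and uses decimal units (1 TB = 1,000 GB); real observing schedules, weather losses, and the daytime solar data would change the totals.

```python
# Rough data-volume estimate built on the "about 150 gigabytes" per night figure
GB_PER_NIGHT = 150        # from the text: average nightly NEID data volume
NIGHTS_PER_YEAR = 365     # assumption: the instrument observes every night
SECONDS_PER_DAY = 86_400

# Yearly archive growth in decimal terabytes
tb_per_year = GB_PER_NIGHT * NIGHTS_PER_YEAR / 1000

# Average transfer rate in MB/s if one night's data is moved over a full 24 hours
mb_per_s = GB_PER_NIGHT * 1000 / SECONDS_PER_DAY

print(f"~{tb_per_year:.1f} TB per year")
print(f"~{mb_per_s:.2f} MB/s averaged over 24 hours")
```

Under these assumptions that is roughly 55 TB a year, or about 1.7 MB/s averaged around the clock.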

Image: The NEID instrument is shown mounted on the 3.5-meter WIYN telescope at the Kitt Peak National Observatory. Credit: NSF’s National Optical-Infrared Astronomy Research Laboratory/KPNO/NSF/AURA.

What a contrast with the now ancient image of the astronomer coming down from a mountaintop with photographic plates to be analyzed with instruments like the blink comparator Clyde Tombaugh used to discover Pluto in 1930. The data now arrive as an avalanche, and breakthrough work happens not only on mountaintops but in building data pipelines like these, which can be generalized for analysis on supercomputers. These vast caches of data contain the seeds of future discovery.

Joe Stubbs leads the Cloud & Interactive Computing group at TACC:

“NEID is the first of hopefully many collaborations with the NASA Jet Propulsion Laboratory (JPL) and other institutions where automated data analysis pipelines run with no human-in-the-loop. Tapis Pipelines, a new project that has grown out of this collaboration, generalizes the concepts developed for NEID so that other projects can automate distributed data analysis on TACC’s supercomputers in a secure and reliable way with minimal human supervision.”

NEID also makes a unique contribution to exoplanet detection by turning its attention to activity on our own star. Radial velocity measurements are vulnerable to confusion from starspots and convective motions on the surfaces of host stars, which can be mistaken for planetary signatures. The plan is to use NEID during daylight hours with a smaller solar telescope built for the purpose, tracking this activity on the Sun. Eric Ford (Penn State) is an astrophysicist at the university where NEID was designed and built:

“Thanks to the NEID solar telescope, funded by the Heising-Simons Foundation, NEID won’t sit idle during the day. Instead, it will carry out a second mission, collecting a unique dataset that will enhance the ability of machine learning algorithms to recognize the signals of low-mass planets during the nighttime.”

Image: A new instrument called NEID is helping astronomers scan the skies for alien planets. TACC supports NEID with supercomputers and expertise to automate the data analysis of distant starlight, which holds evidence of new planets waiting to be discovered. WIYN telescope at the Kitt Peak National Observatory. Credit: Mark Hanna/NOAO/AURA/NSF.

Modern astronomy in a nutshell. We’re talking about data pipelines that run without human intervention, and machine-learning algorithms being tuned to pull exoplanet signals out of the noise of starlight. In such ways does a just-commissioned spectrograph contribute to exoplanetary science through an ever-flowing data network that is now indispensable to such work. Supercomputing expertise is part of the package that will one day extract potential biosignatures from newly discovered rocky worlds. Bring on the ELTs.

Every activity that needs to generate a lot of data from many different “experiments” eventually creates a workflow data analysis pipeline.

Think of all those rooms of women scanning photographs for specific cloud chamber tracks from subatomic particle collisions back in the day. Now that computers are dirt cheap, data is streamed directly to processes that automatically do the analysis: finding “needles in the haystack”, creating statistical summaries, and extracting patterns.

Sometimes workflows still need humans in the loop – whether to do spot-check QA, or to detect patterns that machine learning or AI cannot. Humans will also remain to spot interesting anomalies and to help devise new ideas for data analysis. Will that ever be fully automated by computers? Not in what remains of my lifetime, and hopefully not for generations to come.

Possibly starting with weather forecasting, the trend has been toward ever more detailed data collection. (I smile when I think of the statistics we once learned for dealing with small samples to infer population information.) Computers generate their own data exhaust, which now exceeds the primary data, prompting analysis of this streaming exhaust. Inexpensive monitoring (“edge” sensors and computing) is generating huge amounts of data, analyzed for good or ill in our “surveillance” societies. My home security cameras capture and store video clips triggered by motion detection. Our medical appliances and apps can generate more readings than were ever captured by periodic doctor visits. My electrical and gas usage is monitored and available for analysis and comparison both at the utility and by the individual consumer. I don’t doubt that this trend will continue, allowing ever more data collection on ever more things and systems, with automation handling the collection and analysis, and I don’t see it ending while the costs of data collection, storage, and analysis keep falling.

It is science fiction compared to the world I was born into.

7 Dec 2017: AlphaZero AI beats champion chess program after teaching itself in four hours.

AlphaZero, the game-playing AI created by Google sibling DeepMind, has been repurposed from AlphaGo, which has repeatedly beaten the world’s best Go players, and has been generalised so that it can now learn other games.

AlphaZero has in turn been succeeded by a program known as MuZero which learns without being taught the rules.

Vertebrate brains have evolved programs to optimize survival in planetary biological ecosystems. Computer brains are for now constrained by human-fabricated hardware, but their programming is breaking free of the constraints inherited from humans.

Go experts were impressed by the program’s performance and its nonhuman play style; Ke Jie stated that “After humanity spent thousands of years improving our tactics, computers tell us that humans are completely wrong… I would go as far as to say not a single human has touched the edge of the truth of Go.”

Will we even approach the grokking of it when they “think” in ways beyond our ken?

The aim is to get from narrow AI to general AI (AGI) that can truly operate in the world as intelligent animals like us do. Some feel it is mostly a matter of scale, others that the approaches we are taking have fundamental limitations. In any case, there are a lot of valid critiques of supposedly superhuman narrow AI. For example, change the colors of a video game and the trained AI fails. Change a detail of the game and the AI fails. Humans, OTOH, are pretty robust in this regard. It is the same with vision. Change a few key pixels in an image, imperceptibly to human eyes, and you can change the AI’s classification of the object (e.g. from a dog to an ostrich).

Despite decades of data input, Doug Lenat’s Cyc project still cannot give a computer an understanding of the world, or “common sense”.

The military are on the cusp of deploying battlefield robots. What could possibly go wrong? But I think they will prove remarkably prone to being destroyed or captured by humans. Terminators they are not.

But if/when such machines do become truly intelligent, I suspect that we will have a lot of trouble understanding them, especially if they can “evolve” their architectures and ways of thinking. A future Susan Calvin will have her work cut out to understand them.

“…the US-ELT project to develop the Thirty Meter Telescope and the Giant Magellan Telescope, both now under construction.”

I wish the TMT were under construction. It would be the only ELT with a view of the complete northern hemisphere sky. Unfortunately, the quagmire with the “native” protesters continues. The outlook for a solution at the Mauna Kea site isn’t good. And the suboptimal La Palma site has run into hurdles too.

https://www.staradvertiser.com/2021/10/11/hawaii-news/construction-of-tmt-delayed-at-least-2-years/

Why suboptimal? AFAIK, La Palma has seeing as good as Mauna Kea’s.

I’m not aware of a La Palma site problem, but the TMT issues are why I believe that without the Astro2020 endorsement, the project would fail. So I think the Decadal is good news for US ELT development.

There has to be someplace else besides Mauna Kea Observatories in Hawaii or La Palma, Canary Islands to build the TMT…

https://english.elpais.com/spain/2021-11-09/la-palma-eruption-experts-say-deepest-magma-reserves-are-falling.html

The eruption is on the opposite side of the island, and it’s unlikely to continue for a decade.

You might be interested in the fact that there’s a proposal in China to build a telescope on the Tibetan Plateau.

https://www.nature.com/articles/s41586-021-03711-z

“Here we report the results of three years of monitoring of testing an area at a local summit on Saishiteng Mountain near Lenghu Town in Qinghai Province. The altitudes of the potential locations are between 4,200 and 4,500 metres. An area of over 100,000 square kilometres surrounding Lenghu Town has a lower altitude of below 3,000 metres, with an extremely arid climate and unusually clear local sky (day and night). Of the nights at the site, 70 per cent have clear, photometric conditions, with a median seeing of 0.75 arcseconds. The median night temperature variation is only 2.4 degrees Celsius, indicating very stable local surface air. The precipitable water vapour is lower than 2 millimetres for 55 per cent of the night.”

“A comparison of the total seeing at Lenghu with other known best sites in the world is shown in Table 1. The median value at Lenghu is the same as that at Mauna Kea (0.75 arcseconds) and is better than those at Cerro Paranal and La Palma.”

We will see how it goes, but I believe they certainly have the will and means to do it if the decision is made.

https://www.technologyreview.com/2021/08/19/1032320/chinese-astronomers-observatory-tibetan-plateau-lenghu/

“In some ways, this new research is an affirmation of China’s current astronomy plans for the area around Lenghu. Those plans include a 2.5-meter imaging survey telescope that began construction this year, a 1-meter solar infrared telescope that will be part of an international array of eight telescopes, and two others at 1.8 meters and 0.8 meters, for planetary science.

As Deng points out, Tsinghua University and the University of Arizona are working together on building a 6.5-meter telescope to operate on the Saishiteng Mountain summit. And there are nascent plans for a 12-meter telescope to be located there as well. “It will be very crowded at the mountain top,” says Deng.”

Based on what I have seen happening in the last few years, I’d say that TMT doesn’t stand a chance of being built on Mauna Kea. And I’m not referring to the particular situation there, just the general vibe in the US.

The sooner they find a new site, the better.

On this same theme of huge data pipelines and automated analysis in search for planets, there is a new paper on Planet Nine that inspects years of ZTF survey data in search of P9:

https://findplanetnine.blogspot.com/2021/10/the-hunt-is-on.html

I read some time ago that there is a plateau in Antarctica that is the best location anywhere on Earth for an astronomical observatory. The air is extremely dry (good for infrared imaging) and very stable, and the nights are very long. It is a difficult environment, but certainly better than space. It ought to be much less costly to build and operate a large telescope there than in orbit. I wonder how much better (whatever that metric may be) it would be relative to astronomical sites such as those in Chile.

https://www.popularmechanics.com/science/environment/a33472034/best-stargazing-location/