It’s no small matter to add 301 newly validated planets to an exoplanet tally already totalling 4,569. But it’s even more interesting to learn that the new planets are drawn out of previously collected data, as analyzed by a deep neural network. The ‘classifier’ in question is called ExoMiner, describing machine learning methods that learn by examining large amounts of data. With the help of the NASA supercomputer called Pleiades, ExoMiner seems to be a wizard at separating actual planetary signatures from the false positives that plague researchers.

ExoMiner is described in a paper slated for The Astrophysical Journal, where the results of an experimental study are presented, using data from the Kepler and K2 missions. The data give the machine learning tools plenty to work with, considering that Kepler observed 112,046 stars in its 115-degree square search field, identifying over 4000 candidates. More than 2300 of these have been confirmed. The Kepler extended mission K2 detected more than 2300 candidate worlds, with over 400 subsequently confirmed or validated. The latest 301 validated planets indicate that ExoMiner is more accurate than existing transit signal classifiers.

How much more accurate? According to the paper, ExoMiner retrieved 93.6% of all exoplanets in its test run, as compared to a rate of 76.3% for the best existing transit classifier.

We see many more candidate planets than can be readily confirmed or identified as false positives in all our large survey missions. TESS, the Transiting Exoplanet Survey Satellite, for example, working with an area 300 times larger than Kepler’s, has detected 2241 candidates thus far, with about 130 confirmed. Obviously, pulling false positives out of the mix is difficult using our present approaches, which is why the ExoMiner methods are so welcome.

Hamed Valizadegan is ExoMiner project lead and machine learning manager with the Universities Space Research Association at NASA Ames:

“When ExoMiner says something is a planet, you can be sure it’s a planet. ExoMiner is highly accurate and in some ways more reliable than both existing machine classifiers and the human experts it’s meant to emulate because of the biases that come with human labeling…Now that we’ve trained ExoMiner using Kepler data, with a little fine-tuning, we can transfer that learning to other missions, including TESS, which we’re currently working on. There’s room to grow.”

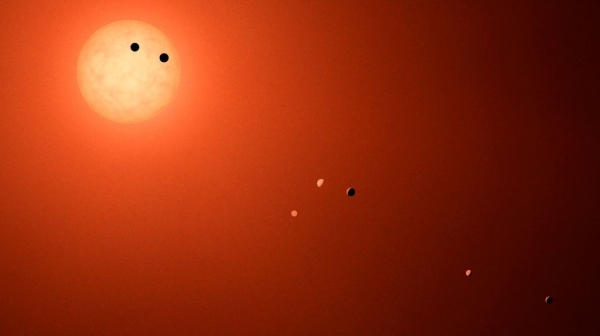

Image: Over 4,500 planets have been found around other stars, but scientists expect that our galaxy contains millions of planets. There are multiple methods for detecting these small, faint bodies around much larger, brighter stars. The challenge then becomes to confirm or validate these new worlds. Credit: NASA/JPL-Caltech.

The paper describes the most common approach to detecting exoplanet candidates and vetting them. Imaging data are processed to identify ‘threshold crossing events’, after which a transit model is fitted to each signal, with diagnostic tests applied to subtract non-exoplanet effects. This produces data validation reports for these crossing events, which in turn are filtered to identify likely exoplanets. The data validation reports for the most likely events are then reviewed by vetting teams and released as objects of interest for follow-up work.

Machine learning (ML) methods speed the process. As described in the paper:

ML methods are ideally suited for probing these massive datasets, relieving experts from the time-consuming task of sifting through the data and interpreting each DV report, or comparable diagnostic material, manually. When utilized properly, ML methods also allow us to train models that potentially reduce the inevitable biases of experts. Among many different ML techniques, Deep Neural Networks (DNNs) have achieved state-of-the-art performance (LeCun et al. 2015) in areas such as computer vision, speech recognition, and text analysis and, in some cases, have even exceeded human performance. DNNs are especially powerful and effective in these domains because of their ability to automatically extract features that may be previously unknown or highly unlikely for human experts in the field to grasp…

The ExoMiner software learns by using data on exoplanets that have been confirmed in the past, and also by examining the false positives thus far generated. Given the sheer numbers of threshold crossing events Kepler and K2 have produced, automated tools to examine these massive datasets greatly facilitate the confirmation process. Remember that two goals are defined here. A ‘confirmed’ planet is one that is detected via other observational techniques, as when radial velocity methods, for example, are applied to identify the same planet.

A planet is ‘validated’ statistically when it can be shown how likely the find is to be a planet based on the data. The 301 new exoplanets are considered machine-validated. They have been in candidate status until ExoMiner went to work on them to rule out false positives. As with the analysis we examined yesterday, refining filtering techniques at Proxima Centauri to screen out flare activity, this work will be applied to future catalogs from TESS and the ESA’s PLATO mission. According to Valizadegan, the team is already at work using ExoMiner with TESS data.

Usefully, ExoMiner offers what the authors call “a simple explainability framework” that provides feedback on the classifications it makes. It isn’t a ‘black box,’ according to exoplanet scientist Jon Jenkins (NASA Ames), who goes on to say: “We can easily explain which features in the data lead ExoMiner to reject or confirm a planet.”

Looking forward, the authors explain the keys to ExoMiner’s performance. The reference to Kepler Objects of Interest (KOIs) below refers to a subset defined within the paper:

[S]ince the general concept behind vetting transit signals is the same for both Kepler and TESS data, and ExoMiner utilizes the same diagnostic metrics as expert vetters do, we expect an adapted version of this model to perform well on TESS data. Our preliminary results on TESS data verify this hypothesis. Using ExoMiner, we also demonstrate that there are hundreds of new exoplanets hidden in the 1922 KOIs that require further follow-up analysis. Out of these, 301 new exoplanets are validated with confidence using ExoMiner.

The paper is Valizadegan et al., “ExoMiner: A Highly Accurate and Explainable Deep Learning Classifier to Mine Exoplanets,” accepted at The Astrophysical Journal (preprint).

This is a good article. It would be interesting to compare the results with human based “citizen scientist” detection, like Planet Hunters (or the ongoing Planet Hunters TESS)

Wondering why the confirm ratio for TESS candidates (130/2241) is so much lower than that for Kepler (2300/4000) ? Is is because scientists have had longer to confirm Kepler candidates or is it something else?

I think it is safe to say that at least in part the difference in number of confirmations is due to the difference in time we have had the two data sets (Kepler versus TESS) Kamal. I am a citizen scientist working on TESS data and we are only in the third year, whereas Kepler data has been available for more than a decade. Once a planet candidate has been found it has to be confirmed by another method (usually radial velocity I think) and with several observation platforms so it takes a long time. TESS will probably eventually provide somewhere around 20,000 confirmed planets according to estimates I have seen.

“It isn’t a ‘black box,’” – yet. When we have enough big computers in numbers and task variety hooked up together, they may unnoticed start exchanging their unorthodox ways of “thinking”, repurposing them to new objectives.

Just like gene/DNA transfer among organisms (including virus => placental mammals).

I haven’t had a chance to do more than skim this paper. However, if they are certain it isn’t a black box (and I am not clear how they can make that claim given that they are using DNNs), it has no bearing on the idea that many neural nets acting together can generate emergent behavior that is not based on their trained task. A simple test is to take a ANN trained for one task, e.g. vision, and test it against another task (e.g. voice recognition). It fails, miserably. A pretrained visual recognition ANN can be retrained for a similar task with fewer learning epochs than an untrained ANN – in some cases.

The black/white box issue is a red herring. Our brains, even the replicated cortical columns, are black boxes. We have only the crudest ideas of how they work in detail. The only analogy that works AFAICS is that human brains trained in different tasks/disciplines can, when organized into social structures, achieve truly remarkable feats, rather like the eusocial insects, but at a far higher level.

Now if you want AI involved doing nefarious things, let me introduce you to social media, especially FB. It is the paperclip maximizer thought experiment, but consuming attention to convert it into advertising $$$.

Within 10 parsecs there are 283 M dwarfs of which 243 may be flaring. With ExoMiner’s ML and Gilberts algorithm for identify flares we could see a surge in nearby exoplanets. Machine learning to subtract flares around these faint stars and removing the interference that they create would be a major task of the supercomputer called Pleiades. It would be great if the GeForce RTX 3090 with 24GBs could be used to mine the datasets for exoplanets… by next year the RTX 4090 should be out.

Early predictions for Nvidia and AMD’s next-gen cards set up a titanic GPU battle.

https://www.pcgamer.com/early-predictions-for-nvidia-and-amds-next-gen-cards-set-up-a-titan-gpu-battle/

Reading the paper more thoroughly indicates to me that the authors have done a good analysis of the ExoMiner ML architecture and learning to detect exoplanets and eliminate false positives. Explainability is achieved by breaking up what could have been a monolithic DNN into components that detect specific features and can be used to explain why a signal was a valid exoplanet or not. I was particularly interested in their explanation for false positives and how they might reduce these in future.

Ongoing work to use the trained model as a basis for analyzing the TESS data was interesting too.

As a general technique, one can see that this model could be coupled with some future survey telescope that looks for the radial velocity data that is used to validate transit data. I can even imagine that a similar approach could be used for astrometry survey data.

With the likely hundreds of billions of planets in the galaxy alone, there will never be time for human attention to be spent looking at the data and following it up with targeted telescope time. (Who would even care by then?). Catalogs will be filled with ML analyzed data, and hence will have many errors – planets that are not actually there, or unsuspected planets that exist but were not detected – the latter ideal for future interstellar travelers to migrate to if they do not want to be found. Just naming the planets with common names to match their characteristics will be a challenge and requiring automation too.

I hope many in their HZs have flags indicating life, and a least a few with flags indicating technological civilizations.

Well, I will care, might even get your name on the planet you confirm, like comets. If the data can be analyzed with this ExoMiner and the advancing PC’s GPU’s that can handle ML and AI, there would be many doing the same. Background and nonuse time on personal computers in the thousands to millions, if your surname could possibly be used to name the exoplanet would be a huge incentive. Plus at little cost to the government or institutions.