Over the past several years we’ve looked at two missions that are being designed to go beyond the heliosphere, much farther than the two Voyagers that are our only operational spacecraft in what we can call the Local Interstellar Medium. Actually, we can be more precise. That part of the Local Interstellar Medium where the Voyagers operate is referred to as the Very Local Interstellar Medium, the region where the LISM is directly affected by the presence of the heliosphere. The Interstellar Probe design from Johns Hopkins Applied Physics Laboratory and the Jet Propulsion Laboratory’s Solar Gravity Lens (SGL) mission would pass through both regions as they conduct their science operations.

Both probes have ultimate targets beyond the VLISM, with Interstellar Probe capable of looking back at the heliosphere as a whole and reaching distances as far as 1000 AU while still operational and returning data to Earth. The SGL mission begins its primary science mission at the Sun’s gravitational lens distance on the order of 550 AU, using the powerful effects of gravity’s curvature of spacetime to build what the most recent paper on the mission calls “a ‘telescope’ of truly gigantic proportions, with a diameter of that of the sun.” The vast amplification of light would allow a planet on the other side of the Sun to be imaged at stunning levels of detail.
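The ~550 AU figure follows from the weak-field light-deflection angle. A minimal Python sketch, assuming the standard deflection formula θ = 4GM/(c²b) and nominal values for the solar radius and gravitational parameter (these constants are not taken from the paper), recovers it for rays that just graze the solar limb:

```python
import math

# Physical constants (SI units); nominal values, not from the paper
GM_SUN = 1.32712440018e20   # solar gravitational parameter, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
R_SUN = 6.957e8             # nominal solar radius, m
AU = 1.495978707e11         # astronomical unit, m


def focal_distance_au(impact_parameter_m: float) -> float:
    """Distance at which rays passing the Sun at impact parameter b cross the
    optical axis, using theta = 4GM / (c^2 b), so z = b / theta = b^2 c^2 / (4GM)."""
    deflection = 4.0 * GM_SUN / (C ** 2 * impact_parameter_m)
    return impact_parameter_m / deflection / AU


# Rays grazing the solar limb define the closest focal point.
z_min = focal_distance_au(R_SUN)
print(f"minimum focal distance ≈ {z_min:.0f} AU")   # prints: minimum focal distance ≈ 548 AU
```

Because the focal distance grows as b², light passing farther from the Sun (for example, outside the brightest part of the corona) comes to a focus beyond 548 AU, so the focal region is a line extending outward rather than a single point.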

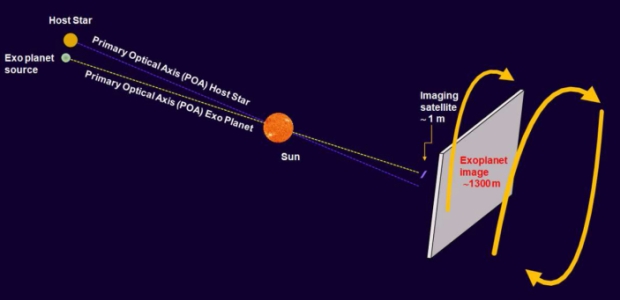

Image: This is Figure 1 from the just released paper on the SGL mission. Caption: A visualization of the key primary optical axes (POA) and the projected image plane of the exoplanet. The imaging spacecraft is the tiny element in front of the exoplanet image plane. Credit: Helvajian et al.

Let’s poke around a bit in “Mission Architecture to Reach and Operate at the Focal Region of the Solar Gravitational Lens,” just out in the Journal of Spacecraft and Rockets, which sets out the basics of how such a mission could be flown. Remember that this work has proceeded through the NASA Innovative Advanced Concepts (NIAC) office, with Phase I, II and now III studies resulting in the refinement of a design that can satisfy the requirements of the heliophysics decadal survey. JHU/APL’s Interstellar Probe takes aim at the same decadal, with both missions designed to return data relevant to our own star and, in SGL’s case, a more distant one.

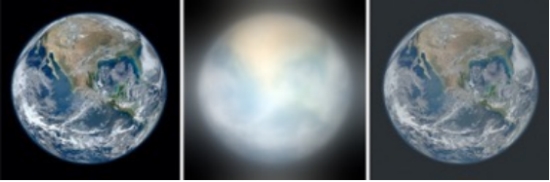

Given that it has taken Voyager 1 well over 40 years to reach 159 AU, getting a payload to the gravitational lens region for operations there and beyond as the craft departs the Sun is a challenge. But the rewards would be great if it can be made to happen. The JPL work and a great deal of theoretical study prior to it have revealed that an optical telescope no larger than meter class, equipped with an internal coronagraph for blocking the Sun’s light, would see light from the target exoplanet appearing in the form of an ‘Einstein ring’ surrounding the solar disk. High-resolution imagery of an exoplanet can be extracted from this data. We can also trade spatial for spectral resolution. From the paper:

The direct high-resolution images of an exoplanet obtained with the SGL could lead to insight on the on-going biological processes on the target exoplanet and find signs of habitability. By combining spatially resolved imaging with spectrally resolved spectroscopy, scientific questions such as the presence of atmospheric gases and its circulation could be addressed. With sufficient SNR and visible to mid-infrared (IR) sensing [26], the inspection of weak biosignatures in the form of secondary metabolic molecules like dimethyl-sulfide, isoprene, and solid-state transitions could also be probed in the atmosphere. Finally, the addition of polarimetry to the spatially and spectrally resolved signals could provide further insight such as atmospheric aerosols, dust, and, on the ground, properties of the regolith (i.e., minerals) and bacteria and fauna (i.e., homochirality)…

I won’t labor the issue, as we’ve discussed gravity lens imaging on many an occasion in these pages, but I did want to make the point about spectroscopy as a way of underlining the huge reward obtainable from a mission that can collect data at these distances. The paper is rich in detailing the progress of our thinking on this, but I turn to the mission architecture for today, offering as it does a remarkable new way to conceive of deep space missions both in terms of configuration and propulsion. For we’re dealing here with spacecraft that are modular, reconfigurable and highly adaptable using clusters of spacecraft that practice self-assembly during cruise.

The SGL mission is based on a constellation of identical craft, the primary components being what the authors call ‘proto-mission capable’ (pMC) spacecraft, with final ‘mission capable’ (MC) craft being built as the mission proceeds. Smaller pMC nanosats, in other words, dock during cruise to build an MC; five or perhaps six of the latter are assumed in the mission description in this paper to allow full capability during the observational period within the focal region of the gravity lens. The pMC craft use solar sails for a close pass by the Sun, all of them launched into a parking orbit before deployment toward the Sun. The sailcraft fly in formation following perihelion, dispose of their thermal shielding, then their sails, and begin assembly into MC spacecraft.

How to separate a final, fully functional MC craft into the constituent units from which it will be assembled in flight is no small issue, and bear in mind the need for extreme adaptability, especially as the craft reach the gravitational lensing region. Near-autonomous operations are demanded. The SGL study used simulations based on current engineering methodology (CEM) tools, modifying them as needed. The need for in-flight assembly stood out against the alternative. From the paper:

Two types of distributed functionality were explored: a fractionated spacecraft system that operates as an “organism” of free-flying units that distribute function (i.e., virtual vehicle) or a configuration that requires reassembly of the apportioned masses. Given that the science phase is the strong driver for power and propellant mass, the trade study also explored both a 7.5-year (to ~800 AU) and 12.5-year (to ~900 AU) science phase using a 20 AU/year exit velocity as the baseline. The distributed functionality approach that produced the lowest functional mass unit is a cluster of free-flying nanosatellites (i.e., pMC) each propelled by a solar sail but then assembled to form an MC spacecraft.

Out of all this what emerges is a pMC design with the capability of a 6U CubeSat nanosatellite, self-contained and three-axis stabilized, each of these units to carry a critical part of the larger MC spacecraft. Power and data are shared as the pMCs dock. The current design for the pMC is a round disk approximately 1 meter in diameter and 10 cm thick, with the assembled MC spacecraft visualized as stacked pMC units. One pMC would carry the primary and secondary mirrors, a second the science package, optical communications package and star tracker sensors, and so on. In-space assembly need not be rushed. The paper mentions a time period of several months as needed to complete the operation.

The 28-year cruise phase ends in the region of 550 AU, with two of the five or six MC spacecraft now maneuvering to track the primary optical axis of the exoplanet host star, which is the line connecting the center of the star to the center of the Sun. The host star is thus a key navigational resource which will be used to determine the precise position of the exoplanet under study. Interestingly, motion in the image plane has to be accounted for – this is due to the effect of the wobble of the Sun caused by gas giants in our Solar System. Such wobbles are hugely helpful for those using radial velocity methods to study planets around other stars. Here they become a complicating factor in extracting the data the mission will need to construct its exoplanet imagery.

The disposition of the spacecraft at 550 AU is likewise interesting. All of the MC spacecraft are, as the acronym makes clear, capable of conducting the mission. It now becomes necessary to subtract the Sun’s coronal light from the incoming data, which is accomplished by having one of the spacecraft follow an inertial path down the center of the spiral trajectory the other craft will follow (the other craft all move in a noninertial frame to make it possible to acquire the SGL photons). Having one craft on an inertial path means it sees no exoplanet photons, and thus its coronal image can be subtracted from the data gathered by the other four craft. The inertial path spacecraft also acts as a local reference frame that can be used for navigation.
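The subtraction step can be illustrated with a toy example. This Python sketch assumes, purely for illustration, that every craft records the same additive coronal leakage in each pixel and that the inertial-path craft records nothing else; the pixel values and the function name `subtract_corona` are hypothetical, and real data would also carry noise and craft-to-craft differences in leakage.

```python
def subtract_corona(science_frames, reference_frame):
    """Remove a shared coronal background from each science frame.

    science_frames: per-pixel readings, one list per non-inertial craft.
    reference_frame: per-pixel readings from the inertial-path craft, assumed
    (hypothetically) to contain only coronal and host-star leakage.
    """
    return [[s - r for s, r in zip(frame, reference_frame)]
            for frame in science_frames]


# Hypothetical per-pixel values in arbitrary units.
corona = [120.0, 118.5, 121.2, 119.8]      # leakage common to all craft
planet = [[2.0, 1.5, 0.0, 0.5],            # Einstein-ring signal seen by
          [1.8, 1.6, 0.1, 0.4],            # each of the four craft on
          [2.1, 1.4, 0.0, 0.6],            # noninertial paths
          [1.9, 1.5, 0.2, 0.5]]

# What the four science craft would record: corona plus planet light.
science = [[c + p for c, p in zip(corona, row)] for row in planet]
recovered = subtract_corona(science, corona)
```

Under these idealized assumptions the recovered frames equal the planet signal alone; in practice the subtraction would leave residual noise that sets the achievable SNR.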

Image: A meter-class telescope with a coronagraph to block solar light, placed in the strong interference region of the solar gravitational lens (SGL), is capable of imaging an exoplanet at a distance of up to 30 parsecs with a resolution of a few tens of kilometers on its surface. The picture shows results of a simulation of the effects of the SGL on an Earth-like exoplanet image. Left: original RGB color image with (1024×1024) pixels; center: image blurred by the SGL, sampled at an SNR of ~10³ per color channel, or overall SNR of 3×10³; right: the result of image deconvolution. Credit: Turyshev et al., “Direct Multipixel Imaging and Spectroscopy of an Exoplanet with a Solar Gravity Lens Mission,” Final Report NASA Innovative Advanced Concepts Phase II.

The spacecraft are moving at more than 20 AU per year and have up to five years between 550 and 650 AU to lock onto the primary optical axis of the exoplanet host star. As the craft reach 650 AU, the optical axis of the host star becomes what the authors call a ‘navigational steppingstone’ toward locating the image of the exoplanet, which, once acquired, begins a science phase lasting on the order of ten years.
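The timeline numbers hang together arithmetically. A rough Python check, assuming a constant coast speed of 20 AU/yr measured from the Sun (ignoring the perihelion phase) and assuming the science phase begins at 650 AU, reproduces the ~28-year cruise, the five-year acquisition window, and the ~800 AU and ~900 AU endpoints of the 7.5- and 12.5-year science phases quoted in the paper:

```python
SPEED_AU_PER_YR = 20.0   # baseline exit velocity used in the trade study


def years_between(start_au: float, end_au: float, speed: float = SPEED_AU_PER_YR) -> float:
    """Coasting time between two heliocentric distances at constant speed."""
    return (end_au - start_au) / speed


def distance_after(start_au: float, years: float, speed: float = SPEED_AU_PER_YR) -> float:
    """Heliocentric distance reached after coasting for the given time."""
    return start_au + years * speed


cruise_yr = years_between(0.0, 550.0)          # 27.5 yr, the "28-year cruise"
acquisition_yr = years_between(550.0, 650.0)   # 5 yr to lock onto the POA
short_phase_end = distance_after(650.0, 7.5)   # 800 AU
long_phase_end = distance_after(650.0, 12.5)   # 900 AU
```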

The details of image acquisition are themselves fascinating and as you would imagine, complex – I send you to the paper for more. My focus today is the novelty of the architecture here. If we can assemble a mission capable spacecraft (and indeed a small fleet of these) out of the smaller pMC units, we reduce the size of sail needed for the perihelion acceleration phase and make it possible to achieve payload sizes for missions far beyond the heliosphere that would not otherwise be possible. We build this out of a known base; in-space assembly and autonomous docking have been demonstrated, and technologies for assembly operations continue to be refined. NASA’s On-Orbit Autonomous Assembly from Nanosatellites and CubeSat Proximity Operations Demonstration missions are examples of this ongoing research.

What a long and winding path it is to extend the human presence via robotic probe ever further from our planet. This paper examines technologies needed to advance this movement, and again I point to the ongoing Interstellar Probe study at JHU/APL as another rich source for current and projected thinking about the needed technologies. In the case of the SGL mission, what is being proposed could have a major impact on the search for life elsewhere in the universe. Imagine a green and blue exoplanet seen with weather patterns, oceans, continents and rich spectral data on its atmosphere.

But I come back to that mission architecture and the idea of self-assembly. As the authors write:

We realize that this architecture fundamentally changes how space exploration could be conducted. One can imagine small- to medium-scale spacecraft on fast-traveling scouting missions on quick cadence cycles that are then followed by flagship-class space vehicles. The proposed mission architecture leverages a global technology base driven by miniaturization and integration, and other technologies that are coming into fruition, including composite materials based on hierarchical structures, edge-computing platforms, small-scale power generation, and storage. These advances have had an effect on the small spacecraft industry with the development of a worldwide CubeSat and nanosat ecosystem that have continually demonstrated increasing functionality in missions…

We’ll continue to track robotic self-assembly and autonomy issues with great interest. I’m convinced the concept opens up mission possibilities we’ve yet to imagine.

The paper is Helvajian, “Mission Architecture to Reach and Operate at the Focal Region of the Solar Gravitational Lens,” Journal of Spacecraft and Rockets. Published online 1 February 2023 (full text). For earlier Centauri Dreams articles on the SGL mission, see JPL Work on a Gravitational Lensing Mission, Good News for a Gravitational Focus Mission and Solar Gravitational Lens: Sailcraft and In-Flight Assembly.

Wouldn’t the planet’s orbital motion quickly move its image out of the imaging “frame”, requiring considerable maneuverability of the imaging platform to track it?

This was my initial assumption when I first saw the concept several years ago, but it turns out the relative motion is minor. I don’t remember the needed velocity for the closest star and don’t trust my math skills enough to share an answer, but it is less than a vehicle’s orbital velocity, meaning the right trajectory will keep the target in view.

FYI–

The proper motion and parallax of a star are usually noted in the appropriate stellar catalogs.

$$v_t = 4.74\,\frac{\mu}{\pi}$$

where $v_t$ is the motion across the line of sight (km/s), $\mu$ is the proper motion (arcsec/yr), and $\pi$ is the parallax (arcsec).

These velocities are on the order of 10–100 km/s for stars orbiting the galactic center along with the Sun in the local standard of rest (LSR). Planetary orbital velocities around the primary star will be about the same.
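The 4.74 factor is just 1 AU/yr expressed in km/s: a star with parallax π (arcsec) lies at 1/π parsecs, where 1 arcsec spans 1 AU, so a proper motion μ (arcsec/yr) corresponds to μ/π AU/yr. A short Python sketch (the example star values are hypothetical):

```python
AU_KM = 1.495978707e8             # astronomical unit, km
JULIAN_YEAR_S = 365.25 * 86400.0  # Julian year, s

# 1 AU per year in km/s; this is the 4.74 in the formula.
K = AU_KM / JULIAN_YEAR_S


def transverse_velocity_km_s(proper_motion_arcsec_yr: float, parallax_arcsec: float) -> float:
    """Velocity across the line of sight: v_t = K * mu / pi."""
    return K * proper_motion_arcsec_yr / parallax_arcsec


# Hypothetical star: 1 arcsec/yr proper motion at 0.1 arcsec parallax (10 pc).
v_example = transverse_velocity_km_s(1.0, 0.1)   # about 47 km/s
```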

But I have no knowledge of the apparent field of view, or the effective magnification, of a gravitationally lensed image. If the image of a planet just fits in the FOV, as the illustration suggests, I have no idea how we could find the planet and track it. OTOH, if the image is on the order of an AU or so, we could easily image an entire system with a mosaic of several “exposures”.

The imaging process is, as you mention, pixel by pixel. At the end of some period, when the planet is not visible, the images are analyzed further and a mosaic image is assembled to be sent back to Earth.

The mission analysis assumes an Earth-like planet that is both orbiting and spinning, so the data acquisition architecture has to account for this, but it turns out the required SNR for an orbiting planet is not hard to achieve. The cross-track delta-V maneuvers are minimal for a 1 m aperture telescope and even smaller for a >1 m aperture imager.

As a 31-year-old, I’m starting to feel my own mortality clawing at the edges of my mind as I wonder whether I have enough time left to see an image of a terraqueous exoplanet from an SGL mission. The timescales of space exploration are staggering.

Will the mission be able to focus on a single planet, or will information from all or some of the other planets have to be parsed out? Also, how likely is it that planets from each star in a binary system could be studied by the same probe?

A slight change to the design might use a two-stage laser sail: one sail to get the craft there, and a second, smaller sail working against the larger one to allow insertion into an orbit around a minor planet at 550 AU, using laser pulses from Earth. Orbiting that object would allow the craft to scan a much greater part of the sky or of the object, and the orbit could perhaps also be changed by intermittent laser pulses from Earth.

Wow. Such an inspiring mission profile. It is wonderful to see how Claudio Maccone’s FOCAL mission has been refined via Turyshev & Toth’s detailed mathematics into this latest design iteration. There can be no doubt that this is all feasible. I merely require the wherewithal to live to 150 years of age so as to be able to gaze upon the surface of an exosolar world! :)

I might have to accept another embodiment under different physical and chemical laws in another universe amongst the multiverses: attractions and aversions carried over might manifest there in forms unrecognizably different from this “here and now”.

Why doesn’t the inertial-path spacecraft see any photons from the planet?

And why must there be separate spacecraft? Can’t a bigger sail simply be manufactured? Why multiply by 6 the rockets, power systems, computers, … that can fail and kill the mission?

The outbound trajectory of the spacecraft during the science phase is a spiral increasing in radius — Turyshev and team discuss this here:

https://journals.aps.org/prd/abstract/10.1103/PhysRevD.105.044012

The craft on the inertial trajectory sees no exoplanet photons, as explained in the paper — it’s up in full text, so I’ll send you there for more. But here’s the gist of it, as stated there:

“Having a spacecraft moving in an inertial frame serves two purposes: i) because the spacecraft is far from the POA of exoplanet, the light on its sensor is only the solar corona leakage light from the coronagraph and the light leakage from the host star, and no exoplanet photons should be present, and, consequently, the image from the inertial traveling frame spacecraft can be subtracted by the four in the noninertial frame; ii) the SGL spacecraft traveling the inertial path serves as a local reference frame and can be used for navigation.”

Self-assembly allows the use of small sails, as sails require a very large surface-area-to-mass ratio. The authors argue that in the near-term, while we develop structural materials for sailcraft of this size, we can still put a large payload at the SGL through self-assembly and smaller craft.

Oh, thanks, now I understand the point of the inertial path spacecraft!

That alone seems a good reason for having separate spacecraft, more so than the size argument: to transport 6 times more mass you certainly need 6 times more sail area, but only 2.45 times more sail radius.
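The scaling in that reply can be checked directly. Assuming the same sail areal density and the same overall loading (total mass per unit sail area), sail area scales linearly with mass and a circular sail's radius with the square root of area; a Python sketch:

```python
import math


def sail_scaling(mass_ratio: float) -> tuple[float, float]:
    """Return (area_ratio, radius_ratio) needed to carry mass_ratio times the mass
    at the same sail loading, for a circular sail."""
    area_ratio = mass_ratio               # constant mass per unit area
    radius_ratio = math.sqrt(area_ratio)  # area grows as radius squared
    return area_ratio, radius_ratio


area_ratio, radius_ratio = sail_scaling(6.0)   # 6.0 and about 2.45
```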

A couple commenters have remarked on the need for high maneuverability to track the target planet. From my perspective that pales in comparison to other problems.

For example, eliminating image distortion caused by chaotic disturbances in the solar atmosphere. This will presumably not be done onboard, but using Earth-based computing power.

Tracking the planet accurately at monstrously high magnification requires an extremely precise knowledge of its orbital parameters.

I’d like to read more about how the practical mechanics of observation and data processing would work. The info I’ve read thus far has been very light on such details.

Am I correct in assuming one would need to commit to observing a single star prior to launch? Could one instead go into an orbit around the Sun at 550 AU, and would that allow the craft to serially observe more than one system? Perhaps it would spend some years observing one system, then move on to the next? What kind of propulsion or trajectory would allow one to achieve orbit at 550 AU?

The telescope needs to stay on the SGL for some time to collect the photons to image the planet. Moving to another SGL for a different star’s exoplanet requires traversing to that SGL while still traveling outward at around 20 AU/yr. If one uses only onboard propellant and travels at orbital velocity to start and stop on the new SGL and track the new star, the time taken to reach that new SGL is quite long. One can shorten that time with more propellant, but that comes at the cost of needing yet more energy to reach the first SGL.

Therefore it appears that unless the second SGL is very close by, i.e., there are two potential exoplanet candidates in nearly the same line of sight, a single star is it. This might make systems with multiple exoplanets, like TRAPPIST-1, more attractive targets, as they offer several potentially interesting candidates.
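Some rough numbers behind the orbit question. Assuming a circular heliocentric orbit at 550 AU and standard values for the solar gravitational parameter (not taken from the paper), this Python sketch gives the orbital speed (about 1.3 km/s), the period (roughly 12,900 years), and the time needed just to drift a hypothetical 5 degrees around that orbit; it also converts the 20 AU/yr cruise speed to m/s for comparison with the local escape speed:

```python
import math

GM_SUN = 1.32712440018e20       # solar gravitational parameter, m^3/s^2
AU = 1.495978707e11             # m
YEAR = 365.25 * 86400.0         # Julian year, s

r = 550.0 * AU
v_circ = math.sqrt(GM_SUN / r)          # circular orbital speed at 550 AU, m/s
v_esc = math.sqrt(2.0 * GM_SUN / r)     # local escape speed, m/s
period_yr = 2.0 * math.pi * r / v_circ / YEAR
v_cruise = 20.0 * AU / YEAR             # 20 AU/yr expressed in m/s


def years_to_drift(angle_deg: float) -> float:
    """Time for a craft on the circular 550 AU orbit to move angle_deg around the Sun."""
    return period_yr * angle_deg / 360.0


drift_5deg_yr = years_to_drift(5.0)     # roughly 180 years
```

With a cruise speed near 95 km/s against a local escape speed under 2 km/s, capture into a 550 AU orbit would mean shedding almost the entire cruise velocity.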

“How to separate a final, fully functional MC craft into the constituent units from which it will be assembled in flight is no small issue, and bear in mind the need for extreme adaptability, especially as the craft reach the gravitational lensing region. Near-autonomous operations are demanded”

I suggest looking into drone technology and solutions (of course space is a whole different environment, but still interesting). There is very rapid progress in that field, which requires autonomous movement and the building of extensive networks of robotic vehicles.

https://www.youtube.com/watch?v=MlFtHuXPbv4

https://www.youtube.com/watch?v=-82LILQKDl0

https://www.youtube.com/watch?v=otGI-i5rVRA

https://www.youtube.com/watch?v=n9tu-L59YqQ


Autonomous self-assembly of components would be interesting for all sorts of missions, from large structures like a space station, to large spaceships the size of terrestrial ships, to multi-component telescopes. Imagine if JWST could have been made of several components, launched separately, and then assembled in orbit. Multiple components that can assemble themselves would conceivably allow for the automated repair of failing components. This, however, comes at a cost: complex systems must assemble correctly in space without failures. I would think that such an assembly might be particularly appropriate for spacecraft with nuclear engines, especially if the nuclear fuel could be launched separately and the ship could install the fuel autonomously, perhaps only in the last phase of assembly and testing.

For super-large telescopes, it might well be preferable for the primary mirror to be constructed in space and the rest of the telescope then launched to join it and to integrate with it.

Thinking somewhat along a different track, what if the components themselves are reconfigured after launch, analogous to larvae pupating and emerging as adult, winged insects? Once the propulsion unit has done its task, it could be disassembled and rebuilt as a functional component of the final probe. Something to consider when such technology matures.

Hordes of CubeSats and nanosats designed for a variety of tasks could be directed to build everything from more of themselves to von Neumann probes, from generation ships to Matrioshka brains. Indeed such a system could be a self-adapting, self-sustaining and self-propagating galaxy-wide infrastructure. The initial outlay could be quite modest.

Suppose a spacecraft fell the distance from the Moon at a constant 1 g: it would be traveling in excess of 70 km/s. Could we not have a system of lenses and reflectors on the falling spacecraft that trap the laser light from Earth? The laser light is concentrated and then trapped between a reflector and highly reflective ‘fuel’ discs stored in a cassette magazine; in essence, multiple bounces put as much laser energy as possible into the velocity of the exhausted discs. These discs, or even graphene reflectors, can be accelerated to millions if not billions of g’s over short distances of, say, 5 meters.
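The speeds in that comment follow from v = √(2ad) for constant acceleration from rest. This Python sketch assumes, as the comment does, a constant 1 g over the full mean Earth-Moon distance (a real unpowered fall from lunar distance, with gravity weakening with altitude, reaches only about 11 km/s), and applies the same formula to discs driven at 10⁶ g and 10⁹ g over 5 m:

```python
import math

G0 = 9.80665               # standard gravity, m/s^2
MOON_DISTANCE_M = 3.844e8  # mean Earth-Moon distance, m


def speed_after(accel_m_s2: float, distance_m: float) -> float:
    """Speed reached from rest under constant acceleration: v = sqrt(2 a d)."""
    return math.sqrt(2.0 * accel_m_s2 * distance_m)


v_fall = speed_after(G0, MOON_DISTANCE_M)      # about 86.8 km/s
v_disc_mega_g = speed_after(1e6 * G0, 5.0)     # about 9.9 km/s
v_disc_giga_g = speed_after(1e9 * G0, 5.0)     # about 313 km/s
```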

Imagine a Fresnel lens with stand-off legs attached to a long tube; the tube has a very small hole at one end and is wide open at the other. The laser light is concentrated into the opening and diverges on the other side of the hole into the tube, where mirror fuel discs are loaded from a side entrance. The tube is highly reflective and allows the laser light inside to be recycled, accelerating the mirror fuel discs out at very high velocities and providing thrust for the main craft. The design would fit very well with Starshot, because we can send paying craft out while the system is built out, and even the fuel discs could be small, simple spacecraft. The idea could be scaled up a lot if the craft is allowed to fall toward the Earth from farther away, limited by the resolution of the laser system. This idea can be tested easily enough in a lab.

Deploying a telescope out into the Oort Cloud somewhere, say, in this case at about 550 AU, seems to be explicable with a combination of propulsion and orbital mechanics taken to a higher state of the art. At least it seems so to a generalist reader over here. But as I have read about this for some time, I have had some difficulty with aspects of the optics. Some evidently have deciphered it; others like me are still struggling.

But here’s a model that might be worth using as a straw man.

If the solar radius is viewed from a minimum gravitational lens distance of 550 AUs, it subtends an angle of 4.8749e-4 degrees or 1.754 arcseconds. That is, it blocks out a 3.5 arc second field of view.

Now if a solar system were ten parsecs away or about 33 light years off, the radial offset of an Earth-like planet from a Sol like star (1 AU) would be 0.1 arc seconds. Hence, the Earth analog would be in the field of view but the field of view would encompass a radius of 17 AUs.
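The angles in this straw man are easy to reproduce. A Python sketch, assuming the nominal solar radius of 695,700 km, gives about 1.74 arcsec for the Sun's angular radius at 550 AU (close to the 1.754 arcsec quoted; the small difference likely reflects the radius adopted) and uses the definition of the parsec (1 AU subtends 1 arcsec at 1 pc) for the rest:

```python
import math

AU_KM = 1.495978707e8
R_SUN_KM = 6.957e5                        # nominal solar radius, km
ARCSEC_PER_RAD = 180.0 * 3600.0 / math.pi


def angular_radius_arcsec(radius_km: float, distance_au: float) -> float:
    """Angular radius of a sphere of radius_km seen from distance_au."""
    return math.atan(radius_km / (distance_au * AU_KM)) * ARCSEC_PER_RAD


def separation_arcsec(separation_au: float, distance_pc: float) -> float:
    """Small-angle separation: 1 AU at 1 pc subtends 1 arcsec."""
    return separation_au / distance_pc


sun_radius = angular_radius_arcsec(R_SUN_KM, 550.0)   # about 1.74 arcsec
planet_offset = separation_arcsec(1.0, 10.0)          # 0.1 arcsec
span_au = sun_radius * 10.0                            # radius covered at 10 pc, about 17 AU
```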

If I am wrong on any of this, let me or the rest of us know.

In this situation, there would have to be some fore-knowledge of where the target planet should be. You would need a tracker observatory probably closer to Terra home. You still need a means to locate a body orbiting an object about a hundredth of an AU in diameter and in turn a planet about 1/10,000 of an AU wide. To relay information from a stellar observatory not experiencing this occultation by the sun to 550 AUs out, the lag would be about 3.174 days based on the speed of light.
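The light-time figure can be checked the same way. A Python sketch using the defined values of the AU and the speed of light gives a one-way delay of about 3.18 days at 550 AU, so any command-and-response cycle takes more than six days:

```python
AU_KM = 1.495978707e8         # km
C_KM_S = 299_792.458          # speed of light, km/s
SECONDS_PER_DAY = 86_400.0


def one_way_delay_days(distance_au: float) -> float:
    """One-way light travel time over distance_au, in days."""
    return distance_au * AU_KM / C_KM_S / SECONDS_PER_DAY


delay_550 = one_way_delay_days(550.0)   # about 3.18 days
round_trip_550 = 2.0 * delay_550        # about 6.35 days
```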

Depending on the nature of the terrestrial analog around the G2V star, orbital elements could be straightforward, or they could be complicated by an incomplete data set (e.g., an elliptic or out-of-plane path, maybe both). In these circumstances I don’t know if a stellar transit is a blessing or not. Probably not for gravity lens observing, unless you are trying to extract distant stellar energy…? But that’s a whole other story or controversy.

Now what about other types of stars and their HZs? Likely mostly dimmer and less massive primaries. These will likely have shorter periods, and depending on the period, the tracking reporting lag could be an orbit or two behind. For closer or farther stars, “your EPA mileage will vary.”

So, I suspect that the FOV will contract on moving out beyond 550 AU, with a corresponding increase in magnification, but without as steep an increase as that obtained when entering “the zone”.

If this is not quite the case, I hope it is analogous enough to be close.

Deploying a telescope out into the Oort Cloud to ~550 AU seems explicable and feasible with a combination of conventional propulsion and orbital mechanics taken to a higher state of the art. At least it seems so to a generalist reader over here.

But as I have read about this for some time, I have had more difficulty following the optics. Some evidently have deciphered it; others like me might still be struggling. But here’s a model that might be worth using as a straw man. Perhaps missing or difficult links will become more apparent.

If the solar radius is viewed from a minimum gravitational lens distance of 550 AUs, it subtends an angle of 4.8749e-4 degrees or 1.754 arcseconds. That is, it blocks out a 3.5 arc second field of view (FOV).

Now if a stellar system were ten parsecs away, or about 33 light years off, the maximum radial offset of an Earth-like planet from a Sol-like star (1 AU) would be 0.1 arc seconds. Hence, the Earth analog would be in the “nominal” field of view (FOV), but the FOV would encompass a radius of 17 AUs, if the center of the nominal FOV can be considered the center of the target star.

The term FOV, used for now, might be misleading in these circumstances, because it is not clear to me how much of the blocked celestial sphere is transferred back via the gravity lens phenomenon. For argument’s sake, the transferred portion of this celestial “blockage” region could range from the infinitesimal to the whole. The image obtained might be treated as a point source, from which we might extract image data somewhat as we extract the spectrum of a similar undimensioned source. So the aspect that confuses me here is how one searches for a point source in this so-called FOV, which is characterized more by the blockage of the Sun’s angular width. The FOV might be described as an area within an FOB, a field of blockage.

In this situation, there would have to be some fore-knowledge of where the target planet should be. You would need a tracker observatory probably closer to Terra home. You still need a means to locate a body orbiting an object about a hundredth of an AU in diameter and in turn a planet about 1/10,000 of an AU wide. To relay information from a stellar observatory not experiencing this occultation by the sun to 550 AUs out, the lag would be about 3.174 days based on the speed of light.

And then, presumably, the observatory would need to slew toward this planetary target from the reference point of the stellar primary – or perhaps even the center of mass in a binary system.

Alpha Centauri could be such an example.

Compared with the earlier draft, the significant new entry here is: what to do about slewing? For the above ten-parsec-distant star with a planetary maximum offset of 0.1 arc seconds, it is assumed here that an equal and opposite offset would be required of the observatory platform at its 550 AU distance. Thus far its drift further outward has been addressed (e.g., distances greater than 550 AU are acceptable), but fixing and continually correcting for planetary offset and orbital motion would be required as well. In terms of a circular 550 AU orbital plane, we can consider a 0.1 arc second circular arc, or 1/129600th of the total path: about 400,000 kilometers in magnitude and sinusoidally oscillating. At these distances orbital mechanics will not provide for halo orbits. Consequently, active propulsion would be necessary to maintain continued tracking. If the target requires a finite time to assimilate a high-definition image, this will govern the control system response as well.
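For the slew numbers, the lateral distance corresponding to a small angle at 550 AU is just r·θ. A Python sketch under the small-angle approximation gives roughly 40,000 km for a 0.1 arcsec offset, and shows that a 0.1 arcsec arc is 1/12,960,000 of a full circle; the same offset follows from scaling the 1 AU star-planet separation by the ratio z/d = 550 AU / 10 pc, so these values are worth setting against the figures in the comment above:

```python
import math

AU_KM = 1.495978707e8
ARCSEC_PER_RAD = 180.0 * 3600.0 / math.pi
ARCSEC_PER_CIRCLE = 360.0 * 3600.0     # 1,296,000 arcsec
AU_PER_PC = ARCSEC_PER_RAD             # 1 pc = 206,265 AU


def lateral_offset_km(angle_arcsec: float, distance_au: float) -> float:
    """Arc length r*theta at distance_au for a small angle (small-angle approximation)."""
    return distance_au * AU_KM * angle_arcsec / ARCSEC_PER_RAD


offset_km = lateral_offset_km(0.1, 550.0)     # about 40,000 km
arc_fraction = 0.1 / ARCSEC_PER_CIRCLE         # 1 / 12,960,000 of a circle

# Cross-check: project the 1 AU star-planet separation through the ratio z/d.
projected_km = 1.0 * AU_KM * 550.0 / (10.0 * AU_PER_PC)
```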

One hopes that candidate subsequent targets after this survey of a year or more might be selected in the same constellation….

Depending on the nature of the terrestrial analog around the G2V star, orbital elements could be straightforward, or they could be complicated by an incomplete data set (e.g., an elliptic or out-of-plane path, maybe both). In these circumstances I don’t know if a stellar transit is a blessing or not. Probably not for gravity lens observing, unless you are trying to extract distant stellar energy…? But that’s a whole other story or controversy.

Now what about other types of stars and their HZs? Likely mostly dimmer and less massive primaries. These will likely have shorter periods, and depending on the period, the tracking reporting lag could be an orbit or two behind. For closer or farther stars, “your EPA mileage will vary.”

So, I suspect as well that the FOV will contract on moving out beyond 550 AU, with a corresponding increase in magnification, but without as steep an increase as that obtained when entering “the zone”.

The 1 m telescope has to traverse the image plane in order to capture the image of the exoplanet.

I would propose a different model. The telescope is mounted on a tether with other parts of the craft as counterweights. The combination is spun and the length of the tether changed rhythmically, so that the telescope traverses the full image plane in a spiral. This would be repeated over the mission duration. No propellant is used, just electrical energy to run the motor that changes the tether length. Keeping the tether taut with the rotation should prevent fouling of the reel. This could be easily tested in orbit, although a 1.3 km tether extension at the maximum extent may be a push.
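A rough sizing of the tether idea. This Python sketch assumes the geometric image scale used in SGL imaging studies, in which a planet of diameter D at distance d images to a diameter of about D·z/d at heliocentric distance z, and a hypothetical Archimedean spiral whose pitch equals the 1 m aperture so successive turns just touch; the 600 s spin period and the function names are illustrative choices, not figures from the paper:

```python
import math

AU_KM = 1.495978707e8
PC_KM = 3.0856775814913673e13


def image_diameter_km(planet_diameter_km: float, z_au: float, distance_pc: float) -> float:
    """Approximate image diameter in the SGL image plane: D * z / d."""
    return planet_diameter_km * (z_au * AU_KM) / (distance_pc * PC_KM)


def spiral_sweep(max_radius_m: float, pitch_m: float, spin_period_s: float):
    """Hypothetical Archimedean spiral: the tether lengthens by one pitch per revolution.

    Returns (revolutions, sweep_time_s, peak_centripetal_accel_m_s2) at max radius.
    """
    revolutions = max_radius_m / pitch_m
    sweep_time_s = revolutions * spin_period_s
    omega = 2.0 * math.pi / spin_period_s      # spin rate, rad/s
    peak_accel = omega ** 2 * max_radius_m     # centripetal acceleration, m/s^2
    return revolutions, sweep_time_s, peak_accel


# Earth-size planet (12,742 km) at 30 pc, imaged at 650 AU: about 1.3 km across.
earth_image_km = image_diameter_km(12_742.0, 650.0, 30.0)

# Hypothetical sweep out to the 1.3 km extension mentioned above.
revs, sweep_s, accel = spiral_sweep(1300.0, 1.0, 600.0)   # 1300 turns, ~9 days, ~0.14 m/s^2
```

One design caveat: reeling the tether out will itself slow the spin (angular momentum is conserved), so a real schedule would have to account for that rather than assume a fixed spin period.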

Alternatively, there could be 2 counterweights, one at each end of the tether, and the telescope traverses the rotating tether. The dynamics would be interesting, but at least the tether would be unrolled just once and would only have to handle the stresses as the telescope traversed the tether from its center to one of the 2 counterweights.

A.T.,

A.T., I am still struggling to get a grasp on this observational program, but the more I think about it, the more I am inclined to think that the pointing needs to center on the genuine target. Whatever the actual field of view for the processed signal (from a 17 AU radius down to the infinitesimal), the best results would likely come when the point source is centered in the field. Hence the large slewing on a round table 550 AU in radius. Unless that’s all wrong somehow, which would be so much the better.

Regarding the tethered operation, over here I’m wondering if the mission should entail more than one 1-meter aperture telescope. Maybe four, to detect seasonal changes over an orbital period. That would allow some to be deployed in advance, north, south, east and west about the stellar target, depending on the planet’s projected orbit.

In the example above, the slewing maneuver at 550 AU for an Earth analog 10 parsecs away around a Sun-like star entails radial motions of about 40,000 km. And the normal of the exoplanet’s orbital plane could be arbitrary with respect to the gravitational lens telescope. At 550 AU, circular orbital speed about the Sun is still a little over 1 km/sec. Then again, it sounds like continuing to increase radial distance past 550 AU is an objective.

After an exoplanet year or two of observation, there might be incentive to move on to another target. And if slewing means tracking a circle some 40,000 km in radius each year for a target whose orbital radius is 0.1 arcsecond, setting up for the next one might take quite a while. For example, with the 4π steradians of the celestial sphere, there might be some 100 high-priority targets near enough, but scattered all over the sky. As a wild guess, five degrees might separate each target. We could pick a speed based on propellant resources, but five degrees is 180,000 times the 0.1-arcsecond slew angle of the 40,000 km case: some 7.2 billion km, or roughly 48 AU.
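The small-angle geometry is easy to check: an Earth analog 1 AU from a star 10 pc away sits 0.1 arcsecond from it, and any angle on the sky maps to a lateral distance of z times that angle at the craft's heliocentric distance z. A back-of-envelope sketch, assuming a face-on circular orbit with a one-year period; the 10 km/s transfer speed for retargeting is an arbitrary placeholder, not a mission figure:

```python
import math

AU_KM = 1.495978707e8
ARCSEC_PER_RAD = 206_264.806
GM_SUN = 1.32712440018e11            # km^3 / s^2
YEAR_S = 365.25 * 86_400


def offset_km(angle_arcsec: float, z_au: float) -> float:
    """Lateral distance spanned by a small angle at heliocentric distance z."""
    return z_au * AU_KM * angle_arcsec / ARCSEC_PER_RAD


Z_AU = 550.0
orbit_arcsec = 1.0 / 10.0                        # 1 AU seen from 10 pc = 0.1 arcsec
r_track_km = offset_km(orbit_arcsec, Z_AU)       # radius of the craft's track
track_speed_m_s = 2 * math.pi * r_track_km / YEAR_S * 1000.0
v_circ_km_s = math.sqrt(GM_SUN / (Z_AU * AU_KM))  # circular speed about the Sun

# Retargeting: chord between two focal lines 5 degrees apart at the same z
sep_rad = math.radians(5.0)
retarget_au = 2.0 * Z_AU * math.sin(sep_rad / 2.0)
angle_ratio = 5.0 * 3600.0 / orbit_arcsec
years_at_10_km_s = retarget_au * AU_KM / 10.0 / YEAR_S   # hypothetical 10 km/s transfer

print(f"track radius ~{r_track_km:,.0f} km, tracking speed ~{track_speed_m_s:.1f} m/s")
print(f"circular speed about the Sun at {Z_AU:.0f} AU ~{v_circ_km_s:.2f} km/s")
print(f"5 deg = {angle_ratio:,.0f} x 0.1 arcsec -> ~{retarget_au:.0f} AU, "
      f"~{years_at_10_km_s:.0f} yr at 10 km/s")
```

Tracking one planet needs only a few m/s on average, while a five-degree retarget means a lateral trip of tens of AU, which is why each SGL craft is usually discussed as dedicated to a single target.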

I am sure that in practice one would want the observatory not to be a one-trick pony. On the other hand…

I wonder if there is an argument for sending a continuous stream of craft that spend overlapping amounts of time as part of the “lens observatory.” Once an operational minimum is in place, you can continuously improve on it and integrate gained knowledge into the stream rather than having decades between upgrades.

As knowledge accumulates, you could perhaps expand the window of usefulness so that older craft that would otherwise be exiting it continue to contribute, and meanwhile newer craft might drag that window inward as well. You could, in other words, “open the eye” further without ever “blinking.”
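How many craft would overlap in such a stream at any moment is simple bookkeeping: in steady state it is roughly the window width divided by (cruise speed × launch interval). A minimal sketch; the 25 AU/yr cruise speed, two-year cadence and the 550 to 1000 AU window are placeholder assumptions, not figures from the paper:

```python
def craft_in_window(t_yr, cadence_yr, n_launched, speed_au_yr, z_in_au, z_out_au):
    """Count craft inside the useful window [z_in, z_out) at time t.

    Hypothetical model: craft k launches at t = k * cadence and cruises outward at a
    constant speed from the Sun (the inner-system transfer time is ignored).
    """
    count = 0
    for k in range(n_launched):
        elapsed = t_yr - k * cadence_yr
        if elapsed < 0:
            continue  # not launched yet
        z = speed_au_yr * elapsed
        if z_in_au <= z < z_out_au:
            count += 1
    return count


# e.g. one launch every 2 years at 25 AU/yr, window from 550 to 1000 AU, 100 years in
print(craft_in_window(100.0, 2.0, 51, 25.0, 550.0, 1000.0))
```

Widening the window (older craft staying useful farther out, newer ones useful closer in) raises the count linearly, which is the “open the eye” effect in numbers.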

This seems conceptually more practical than either figuring out a propulsion scheme to slow down at the other end or being limited to a relatively short time at the focus. It may also loosen individual mass constraints, since you can string things out over time rather than needing bursts of perfection every few decades.

Even so, I don’t think mass will be a major constraint going forward. The idea that you need to be stingy with every milligram is largely a product of expendable rocketry, with which a mission like this would never happen anyway. The solar sails and complex self-assembly on decade timescales are probably unnecessary. Currently operating in-space propulsion technologies seem adequate (as a beginning).

Some topics, of course, one might simply peruse like a news item.

But this one has caught me up in some of the intermediate steps, trying to get an understanding. And a few things are falling into place. A remaining issue here is the following:

Suppose a planet is acquired in the vicinity of a star, say 10 parsecs away. One might then slew the observing telescope, 550 AU from the Sun, onto the direct line of sight to the planet. The fix is thus on a point source of light, acquired through an effective solar coronagraph. The Sun is blotted out by the on-station telescope’s coronagraph, but the light from the planetary source is transmitted through the solar “lens” as a ring “signal” around its radius…

… And then it is reconstructed with some sort of deconvolution, e.g., a Fourier transform or some other inverse. Spectral data can also be extracted from planetary or stellar point sources, but by other transformations.
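The general idea of undoing a known blur can be shown with a generic technique. The sketch below is a standard Wiener (regularized inverse) deconvolution in one dimension using NumPy's FFT routines, with a Gaussian PSF chosen purely for illustration; it is a stand-in, not the SGL team's reconstruction. The real SGL point-spread function is far broader, with an envelope falling off roughly as the inverse of distance from the optical axis, which makes the inversion much more sensitive to noise:

```python
import numpy as np


def wiener_deconvolve(observed: np.ndarray, psf: np.ndarray, k: float) -> np.ndarray:
    """Recover a signal blurred by circular convolution with psf (same length).

    X_hat = Y * conj(H) / (|H|^2 + k); k > 0 regularizes frequencies where the
    PSF carries little power (k -> 0 gives the plain inverse filter).
    """
    H = np.fft.fft(psf)
    Y = np.fft.fft(observed)
    X_hat = Y * np.conj(H) / (np.abs(H) ** 2 + k)
    return np.real(np.fft.ifft(X_hat))


# Toy 1-D "scene" and a Gaussian PSF centered on index 0 (circularly wrapped)
n = 64
x = np.zeros(n)
x[20:28] = 1.0
x[40] = 2.0
idx = np.minimum(np.arange(n), n - np.arange(n))   # circular distance from index 0
psf = np.exp(-0.5 * idx.astype(float) ** 2)
psf /= psf.sum()

blurred = np.real(np.fft.ifft(np.fft.fft(x) * np.fft.fft(psf)))  # circular convolution
rng = np.random.default_rng(0)
noisy = blurred + 1e-3 * rng.standard_normal(n)

clean = wiener_deconvolve(blurred, psf, 1e-9)       # noiseless: near-exact recovery
regularized = wiener_deconvolve(noisy, psf, 1e-3)   # noisy: larger k trades detail for stability
print(np.max(np.abs(clean - x)), np.max(np.abs(regularized - x)))
```

The regularization constant k is the design knob: too small and noise at weakly transmitted frequencies is amplified, too large and fine detail is smoothed away.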

Can anyone throw any “light” on this one?