If space is infused with ‘dark energy,’ as seems to be the case, we have an explanation for the continuing acceleration of the universe’s expansion. Or to speak more accurately, we have a value we can plug into the universe to make this acceleration happen. Exactly what causes that value remains up for grabs, and indeed frustrates current cosmology, for something close to 70 percent of the total mass-energy of the universe needs to be comprised of dark energy to make all this work. Add on the mystery of ‘dark matter’ and we actually see only some 4 percent of the cosmos.

So there’s a lot out there we know very little about, and I’m interested in mission concepts that seek to probe these areas. The conundrum is fundamental, for as a 2017 study from NASA’s Innovative Advanced Concepts office tells me, “…a straightforward argument from quantum field theory suggests that the dark energy density should be tens of orders of magnitude larger than what is observed.” Thus we have what is known as a cosmological constant problem, for the observed values depart from what we know of gravitational theory and may well be pointing to new physics.
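To get a feel for the size of that mismatch, here is a rough back-of-the-envelope sketch. It is not taken from the NIAC study: it assumes the naive vacuum energy estimate is cut off at the Planck scale (so the predicted density is of order the Planck density), a Hubble constant of 70 km/s/Mpc, and a dark energy fraction of 0.7. Numerical prefactors are ignored, so only the order of magnitude means anything.

```python
import math

# Physical constants in SI units
C = 2.99792458e8        # speed of light, m/s
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34  # reduced Planck constant, J s
MPC_IN_M = 3.0857e22    # metres per megaparsec

# Illustrative assumptions (not values from the NIAC report)
H0_KM_S_MPC = 70.0          # assumed Hubble constant
DARK_ENERGY_FRACTION = 0.7  # "close to 70 percent" of the mass-energy budget


def planck_density():
    """Order-of-magnitude vacuum density for a Planck-scale cutoff, kg/m^3."""
    return C**5 / (HBAR * G**2)


def critical_density(h0_km_s_mpc):
    """Critical density of the universe for a given Hubble constant, kg/m^3."""
    h0 = h0_km_s_mpc * 1.0e3 / MPC_IN_M  # convert to 1/s
    return 3.0 * h0**2 / (8.0 * math.pi * G)


observed = DARK_ENERGY_FRACTION * critical_density(H0_KM_S_MPC)
naive = planck_density()
orders_of_magnitude = math.log10(naive / observed)

print(f"observed dark energy density ~ {observed:.2e} kg/m^3")
print(f"naive Planck-cutoff estimate ~ {naive:.2e} kg/m^3")
print(f"mismatch ~ {orders_of_magnitude:.0f} orders of magnitude")
```

A lower cutoff energy shrinks the gap, but under any of the usual choices it stays at tens of orders of magnitude, which is the point of the quoted sentence.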

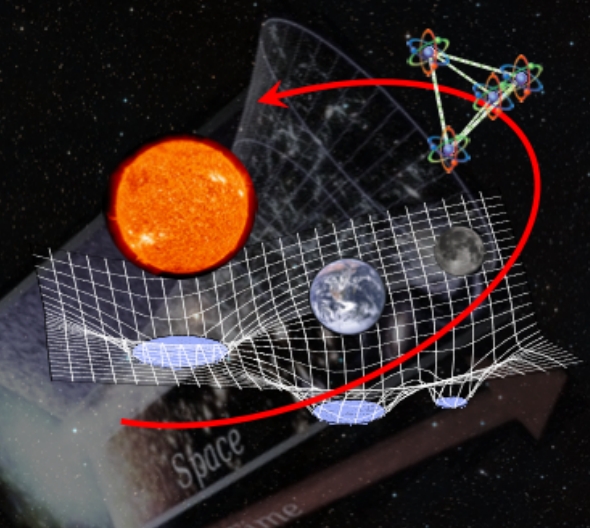

The report in question comes from Nan Yu (Jet Propulsion Laboratory) and collaborators, a Phase I effort titled “Direct probe of dark energy interactions with a Solar System laboratory.” It lays out a concept called the Gravity Probe and Dark Energy Detection Mission (GDEM) that would involve a tetrahedral constellation of four spacecraft, a configuration that allows gravitational force measurements to be taken in four simultaneous directions. These craft would operate about 1 AU from the Sun while looking for traces of a field that could explain the dark energy conundrum.

Now JPL’s Slava Turyshev has published a new paper which is part of a NIAC Phase II study advancing the GDEM concept. I’m drawing on the Turyshev paper as well as the initial NIAC collaboration, which has now proceeded to finalizing its Phase II report. Let’s begin with a quote from the Turyshev paper on where we stand today, one that points to critical technologies for GDEM:

Recent interest in dark matter and dark energy detection has shifted towards the use of experimental search (as opposed to observational), particularly those enabled by atom interferometers (AI), as they offer a complementary approach to conventional methods. Situated in space, these interferometers utilize ultra-cold, falling atoms to measure differential forces along two separate paths, serving both as highly sensitive accelerometers and as potential dark matter detectors.

Thus armed with technology, we face the immense challenge of such a mission. The key assumption is that the cosmological constant problem will be resolved through the detection of light scalar fields that couple to normal matter. Like temperature, a scalar field has no direction but only a magnitude at each point in space. This field, assuming it exists, would have to have a mass low enough that it would be able to couple to the particles of the Standard Model of physics with about the same strength as gravity. Were we to identify such a field, we would move into the realm of so-called ‘fifth forces,’ a force to be added to gravity, the strong nuclear force, the weak nuclear force and electromagnetism.

Can we explain dark energy by attempting to modify General Relativity? Consider that its effects are not detectable with current experiments, meaning that if dark energy is out there, its traces are suppressed on the scale of the Solar System. If they were not, the remarkable success scientists have had at validating GR would not have happened. A workable theory, then, demands a way to make the interaction between a dark energy field and normal matter dependent on where it occurs. The term being used for this is screening.

We’re getting deep into the woods here, and could go further still with an examination of the various screening mechanisms discussed in the Turyshev paper, but the overall implication is that the coupling between matter and dark energy could change in regions where the density of matter is high, accounting for our lack of detection. GDEM is a mission designed to detect that coupling using the Solar System as a laboratory. In Newtonian physics, the gravitational gradient tensor (GGT), which governs how the gravitational force changes in space, would have a zero trace value in regions devoid of mass. That’s a finding consistent with General Relativity.

But in the presence of a dark energy field, the trace of the gravitational gradient tensor would be other than zero. The discovery of such a variance from our conventional view of gravity would be revolutionary, and would support theories deviating from General Relativity.
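A toy calculation makes the zero-versus-nonzero trace distinction concrete. The sketch below treats the gradient tensor as the matrix of second derivatives of the potential. It evaluates that matrix for the Sun's Newtonian potential and then for an added Yukawa-type term, which stands in here for a generic extra scalar force. The Yukawa form, its strength `ALPHA`, and its range `LAMBDA` are illustrative assumptions, not the screened models analyzed in the Turyshev paper.

```python
import math

GM_SUN = 1.32712440018e20  # solar gravitational parameter, m^3/s^2
AU = 1.495978707e11        # astronomical unit, m


def radial_hessian(pos, d1, d2):
    """Hessian of a spherically symmetric potential f(r) at pos,
    given its first (d1) and second (d2) radial derivatives there."""
    r = math.sqrt(sum(x * x for x in pos))
    n = [x / r for x in pos]
    return [[d2 * n[i] * n[j]
             + (d1 / r) * ((1.0 if i == j else 0.0) - n[i] * n[j])
             for j in range(3)] for i in range(3)]


def newtonian_ggt(pos):
    """Gradient tensor of phi = -GM/r (inverse square law)."""
    r = math.sqrt(sum(x * x for x in pos))
    return radial_hessian(pos, GM_SUN / r**2, -2.0 * GM_SUN / r**3)


def yukawa_ggt(pos, alpha, lam):
    """Gradient tensor of the toy term phi = -alpha*GM*exp(-r/lam)/r."""
    r = math.sqrt(sum(x * x for x in pos))
    decay = math.exp(-r / lam)
    d1 = alpha * GM_SUN * decay * (1.0 / r**2 + 1.0 / (lam * r))
    d2 = -alpha * GM_SUN * decay * (
        2.0 / r**3 + 2.0 / (lam * r**2) + 1.0 / (lam**2 * r))
    return radial_hessian(pos, d1, d2)


def trace(m):
    return m[0][0] + m[1][1] + m[2][2]


# Hypothetical sample point and toy field parameters
pos = (0.6 * AU, 0.7 * AU, 0.2 * AU)
ALPHA = 1.0e-3      # assumed coupling strength relative to gravity
LAMBDA = 1.0 * AU   # assumed range of the extra force

r = math.sqrt(sum(x * x for x in pos))
scale = GM_SUN / r**3  # typical size of a single tensor component
newton_trace = trace(newtonian_ggt(pos))
yukawa_trace = trace(yukawa_ggt(pos, ALPHA, LAMBDA))

print(f"component scale : {scale:.3e} s^-2")
print(f"Newtonian trace : {newton_trace:.3e} s^-2")
print(f"toy-field trace : {yukawa_trace:.3e} s^-2")
```

The Newtonian components cancel in the trace down to floating-point rounding, while the toy term leaves a residual equal to `-ALPHA * GM_SUN * exp(-r/LAMBDA) / (LAMBDA**2 * r)`. That residual is the kind of signature a trace measurement is meant to isolate.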

Image: Illustration of the proposed mission concept – a tetrahedral constellation of spacecraft carrying atomic drag-free reference sensors flying in the Solar system through special regions of interest. Differential force measurements are performed among all pairs of spacecraft to detect a non-zero trace value of the local field force gradient tensor. A detection of a non-zero trace, and its modulations through space, signifies the existence of a new force field of dark energy as a scalar field and shines light on the nature of dark energy. Credit: Nan Yu.

The constellation of satellites envisioned for the GDEM experiment would fly in close formation, separated by several thousand kilometers in heliocentric orbits. They would use high-precision laser ranging systems and atom-wave interferometry, measuring tiny changes in motion and gravitational forces, to search for spatial changes in the gravitational gradient, theoretically isolating any new force field signature. The projected use of atom interferometers here is vital, as noted in the Turyshev paper:

GDEM relies on AI to enable drag-free operations for spacecraft within a tetrahedron formation. We assume that AI can effectively measure and compensate non-gravitational forces, such as solar radiation pressure, outgassing, gas leaks, and dynamic disturbances caused by gimbal operations, as these spacecraft navigate their heliocentric orbits. We assume that AI can compensate for local non-gravitational disturbances at an extremely precise level…

From the NIAC Phase I report, I find this:

The trace measurement is insensitive to the much stronger gravity field which satisfies the inverse square law and thus is traceless. Atomic test masses and atom interferometer measurement techniques are used as precise drag-free inertial references while laser ranging interferometers are employed to connect among atom interferometer pairs in spacecraft for the differential gradient force measurements.

In other words, the technology should be able to detect the dark energy signature while nulling out local gravitational influences. The Turyshev paper develops the mathematics of such a constellation of satellites, noting that elliptical orbits offer a sampling of signals at various heliocentric distances, which improves the likelihood of detection. Turyshev’s team developed simulation software that models the tetrahedral spacecraft configuration while calculating the trace value of the gravitational gradient tensor. This modeling, along with the accompanying analysis of spacecraft dynamics, demonstrates that the needed gravitational observations are within range of near-term technology.
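To see why four spacecraft are enough, consider a simplified linear picture. This is my own illustrative sketch, not the team's simulation software. If the acceleration field varies linearly across the formation, the three baselines from one spacecraft to the others give three difference equations, which is exactly enough to solve for the full 3×3 gradient tensor. The tensor values, the common-mode acceleration, and the roughly 2,800 km edge length below are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    d = det3(m)
    if d == 0.0:
        raise ValueError("degenerate (coplanar) formation")
    inv = [[0.0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            rows = [a for a in range(3) if a != i]
            cols = [b for b in range(3) if b != j]
            minor = (m[rows[0]][cols[0]] * m[rows[1]][cols[1]]
                     - m[rows[0]][cols[1]] * m[rows[1]][cols[0]])
            inv[j][i] = (-1) ** (i + j) * minor / d
    return inv


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def estimate_gradient(positions, accelerations):
    """Solve (a_k - a_0) = T (r_k - r_0), k = 1..3, for the tensor T."""
    r0, a0 = positions[0], accelerations[0]
    # Columns of D and A are the three baselines and acceleration differences.
    D = [[positions[k + 1][i] - r0[i] for k in range(3)] for i in range(3)]
    A = [[accelerations[k + 1][i] - a0[i] for k in range(3)] for i in range(3)]
    return matmul(A, inverse3(D))


# Hypothetical regular tetrahedron; edge length 2*sqrt(2)*S, about 2,800 km
S = 1.0e6  # metres
positions = [(S, S, S), (S, -S, -S), (-S, S, -S), (-S, -S, S)]

# Hypothetical "true" tensor in s^-2: a large traceless part plus a tiny trace
T_TRUE = [[-7.9e-14, 1.2e-14, 0.3e-14],
          [1.2e-14, 3.5e-14, -0.6e-14],
          [0.3e-14, -0.6e-14, 4.4e-14 - 1.5e-17]]
A_COMMON = (-5.9e-3, 0.0, 0.0)  # common-mode acceleration, cancels in differences

accelerations = [
    tuple(A_COMMON[i] + sum(T_TRUE[i][j] * p[j] for j in range(3))
          for i in range(3))
    for p in positions
]

T_est = estimate_gradient(positions, accelerations)
trace_est = T_est[0][0] + T_est[1][1] + T_est[2][2]
trace_true = T_TRUE[0][0] + T_TRUE[1][1] + T_TRUE[2][2]
print(f"recovered trace: {trace_est:.3e} s^-2 (input {trace_true:.3e})")
```

The common acceleration drops out of every difference, and the large traceless components cancel when the diagonal is summed. A real formation would also have to contend with sensor noise, non-gravitational forces, and higher-order field terms, none of which this sketch models.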

Turyshev sums up the current state of the GDEM concept this way:

…the Gravity Probe and Dark Energy Detection Mission (GDEM) mission is undeniably ambitious, yet our analysis underscores its feasibility within the scope of present and emerging technologies. In fact, the key technologies required for GDEM, including precision laser ranging systems, atom-wave interferometers, and Sagnac interferometers, either already exist or are in active development, promising a high degree of technical readiness and reliability. A significant scientific driver for the GDEM lies in the potential to unveil non-Einsteinian gravitational physics within our solar system—a discovery that would compel a reassessment of prevailing gravitational paradigms. If realized, this mission would not only shed light on the nature of dark energy but also provide critical data for testing modern relativistic gravity theories.

So we have a mission concept that can detect dark energy within our Solar System by measuring deviations found within the classic Newtonian gravitational field. And GDEM is hardly alone as scientists work to establish the nature of dark energy. This is an area that has fostered astronomical surveys as well as mission concepts, including the Nancy Grace Roman Space Telescope, the European Space Agency’s Euclid, the Vera Rubin Observatory and the DESI collaboration (Dark Energy Spectroscopic Instrument). GDEM extends and complements these efforts as a direct probe of dark energy which could further our understanding after the completion of these surveys.

There is plenty of work here to bring a GDEM mission to fruition. As Turyshev notes in the paper: “Currently, there is no single model, including the cosmological constant, that is consistent with all astrophysical observations and known fundamental physics laws.” So we need continuing work on these dark energy scalar field models. From the standpoint of hardware, the paper cites challenges in laser linking for spacecraft attitude control in formation, maturation of high-sensitivity atom interferometers and laser ranging with one part in 10^14 absolute accuracy. Identifying such technology gaps in light of GDEM requirements is the purpose of the Phase II study.

As I read this, the surveys currently planned should help us home in on dark energy’s effects on the largest scales, but its fundamental nature will need investigation through missions like GDEM, which would open up the next phase of dark energy research. The beauty of the GDEM concept is that it does not depend upon a single model, and the NIAC Phase I report notes that it can be used to test any modified gravity models that could be detected in the Solar System. As to size and cost, this looks like a Large Strategic Science Mission, what NASA used to refer to as a Flagship mission, about what we might expect from an effort to answer a question this fundamental to physics.

The paper is Turyshev et al., “Searching for new physics in the solar system with tetrahedral spacecraft formations,” Phys. Rev. D 109 (25 April 2024), 084059 (abstract / preprint).

It’s great that atom interferometry is being mentioned since it allows exploration of gravitational waves far outside the sensitivity range of LIGO and similar observatories. However, there is much work to be done. There are a number of terrestrial experiments underway to refine the instruments and test their ability to make predicted observations.

Although focused on long baseline interferometry, I found the following summary and survey of atom interferometry very helpful. It’s long and technical but a lot can still be learned from skimming through it.

https://arxiv.org/abs/2310.08183

The z axis (a function of sin i) is in the equations, but I’m not reading where they flag it. They should!

They’re talking a trajectory e=0.59 and perihelion 0.6 AU. Venus orbits a near-circle at 0.723 AU. The satellites will need to run over 3.39° inclination.
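For what it’s worth, the quoted eccentricity and perihelion pin down the rest of the in-plane orbit through the standard two-body relations. A minimal sketch, assuming a simple Keplerian orbit around the Sun, using only the numbers quoted above and ignoring inclination entirely:

```python
def orbit_from_perihelion(e, q_au):
    """Semi-major axis (AU), aphelion (AU) and period (years)
    for a heliocentric ellipse with eccentricity e and perihelion q_au."""
    if not 0.0 <= e < 1.0:
        raise ValueError("eccentricity must satisfy 0 <= e < 1")
    a = q_au / (1.0 - e)      # q = a(1 - e)
    aphelion = a * (1.0 + e)  # Q = a(1 + e)
    period_years = a ** 1.5   # Kepler's third law in AU and years
    return a, aphelion, period_years


E = 0.59            # eccentricity quoted above
PERIHELION_AU = 0.6
VENUS_A_AU = 0.723  # Venus orbital radius quoted above

a, aphelion, period = orbit_from_perihelion(E, PERIHELION_AU)
crosses_venus = PERIHELION_AU < VENUS_A_AU < aphelion

print(f"semi-major axis: {a:.3f} AU")
print(f"aphelion       : {aphelion:.3f} AU")
print(f"period         : {period:.2f} years")
print(f"radial range spans Venus's orbit: {crosses_venus}")
```

So the formation would dip inside Venus’s orbital distance and swing back out past 2 AU on each revolution, which is why the out-of-plane geometry matters.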

During the big bang there must have been enormous amounts of gravitational waves about and surely they would have altered the quantum vacuum energy locally. Perhaps dark energy is merely matter entering into a lower quantum vacuum energy region and therefore accelerating into it, a bit like going downhill but over an enormous distance.

I have never seen any considerations of gravitational effects in the Big Bang theory, in spite of densities well beyond the critical densities for black hole formation. This is one reason I have large doubts that we understand the Big Bang.

There seem to be a number of different phenomena to account for.

1. The Hubble constant seems to have different values using different ways to measure it.

2. There have been suggestions to revive the idea of oscillations as the universe expands and then collapses (from JWST observations of the early universe) which would conflict with the current observations that the universe will expand indefinitely.

3. Some suggestions that String Theory is incompatible with DE.

Is there some explanation that resolves these observations and theories with an elegant theory? Or will the GDEM measurements add further complexity to the phenomena?

I am pleased that these experiments are at the cutting edge of science, rather than filling in knowledge gaps. The LHC is a very costly machine that AFAIK has only confirmed the Higgs particle. At this point governments are looking to fund a far larger machine. If measurements to detect the presence (but not, apparently, the nature) of DE succeed, this would seem to pave the way for the search for the nature of DE. Or these experiments may detect nothing, similar to the status of DM.

Do we need another Newton/Einstein to find a solution, or do we have enough physicists and cosmologists already to solve the problem? Or will an AI prove to be the agent that comes up with the needed theory?

Maybe there is NO all-encompassing “theory of everything” that ties up all the loose ends in one neat set of equations that will fit on a T-shirt. Perhaps the universe can only be partially understood as a set of totally different approximations that only work under certain limited conditions. The Laws of Nature may be like the laws of English grammar, a set of contradictory statements that give semi-consistent results only some of the time, and only under certain circumstances.

We keep on searching for meaning in nature, when perhaps meaning is the most subjective concept of all. Maybe it’s all in our minds after all. There may be a reason for everything, but a purpose to nothing.

https://thirdmindbooks.cdn.bibliopolis.com/pictures/2226.jpg?auto=webp&v=1440450143

There may not be a TOE, but the physical sciences do tend to operate that way as there is no algorithmic driver to create diversity as there is in biology and the social sciences/humanities. Particles do not change their fundamental properties (AFAIK) and the macro structures all the way up to galactic structures seem to follow rules that can be described relatively simply, based on rules from lower levels. Emergent phenomena are based on simpler rules and not new ones. While I can accept constants may not be constants over long periods due to underlying rules, I think major discrepancies in observations of what those constants are and the resulting phenomena imply we are using the wrong rules. Otherwise we end up with rules that are like the platonic structures for each level of the heavens, with no underlying reasons other than the mind of humans.

If the rules seem to change arbitrarily, it might imply agency behind those rules that operate. I would prefer not to be living in some simulation that requires different rules to make it work, for the same reason that I prefer algorithms to explain evolution rather than agency for living organisms. (But what I prefer is irrelevant to the universe.)

The problem I have with the Lambda cold dark matter theory is that there is absolutely no concrete physical evidence for dark matter and dark energy. Like Alex Tolley, I think that these are imaginary constructs until proven. It is rather far-fetched that something can be detected only on the large scales while there is no physical, provable evidence in the laboratory or nearby. How can we differentiate between the gravitational lensing caused by dark matter and that caused by ordinary matter? What we are seeing could just be gravitational lensing caused by ordinary matter, since both are governed by general relativity.

Oh, really? Relativity and quantum? Cause and effect vs probability functions? Particles or waves? And Classical physics works in most cases, but not all the time, or all environments. (And yet, other models reduce to it at our temporal and spatial domain!)

Reality, like the elephant being fondled by the blind men, seems to look different depending on how you study it–or should I say, how you think about it.

Emergent phenomena, the problem of consciousness, fractal mathematics, cellular automata: the entire universe seems to operate simultaneously under contradictory and inconsistent rules–or are we simply too crude to link them all together neatly yet? And can we ever correctly understand any system we are a subset of?

As for biological systems, yes, I believe in Darwinian natural selection; it is self-evident how adaptations to environmental change arise in organisms. It’s easy to see how white-furred creatures tend to survive more in a snowy environment and pass their characteristics preferentially to their offspring. But organisms don’t just possess one characteristic for evolution to work on, they have untold billions in their internal chemistry, anatomy and physiology. How can natural selection work when all those characteristics are competing simultaneously in every life form? In any living thing, the number of items that need to simultaneously evolve to fit the environment far exceeds the number of individuals in that species. How can Darwinian statistics operate in a matrix where the number of evolving traits is far greater than the number of reproducing members of the species? There may be billions of genus Homo around today, but the human form and brain were the result of a species that for most of its existence consisted of only several hundred thousand individuals.

Maybe it will all eventually come together neatly under one grand unified model…but we really don’t know, do we? We are just biased that way. That’s just how we want it to turn out.

If you assume the earth is the center of the universe you can still navigate ships, devise a calendar and predict eclipses. And a perfect understanding of Newtonian mechanics is no advantage to pitching a curve ball.

No, I’m a devout materialist, I do not believe in vitalism, or magic, and I certainly don’t believe in Jesus. But I do believe we are missing something.

“Contemplating the teeming life of the shore, we have an uneasy sense of the communication of some universal truth that lies just beyond our grasp. What is the message signaled by the hordes of diatoms, flashing their microscopic lights in the night sea? What truth is expressed by the legions of barnacles, whitening the rocks with their habitations, each small creature within finding the necessities of its existence in the sweep of the surf? And what is the meaning of so tiny a being as the transparent wisp of protoplasm that is a sea lace, existing for some reason inscrutable to us — a reason that demands its presence by the trillion amid the rocks and weeds of the shore? The meaning haunts and ever eludes us, and in its very pursuit we approach the ultimate mystery of Life itself.”

from ‘The Edge of the Sea’

– Rachel Carson (1955)

Astrophysicists, like physicists, do tend to stick to principles which are based on the physical cosmology and the cause and effect phenomenal world, which by no means is the ultimate truth. Their idea of a theory of everything is limited to physical cosmology, but does not invalidate a religious or non-physical cosmology. If that is what you mean, I completely agree with you. In astrophysics, as in physics, past theories which turn out to be imaginary are discarded without realizing how powerful the imagination is. Many ideas that are physically valid today have come through the imagination first, as in science fiction. Theories such as the Ether theory have a symbolic value which would only be interesting to a depth psychologist or philosopher.

@Henry

Relativity and quantum models both describe physics well. So far using quantum models to align relativity to quantum theory has failed, but that doesn’t mean that it will always fail, or that relativity should be defined at the quantum level.

Where the wave-particle duality once occurred, quantum electrodynamics seems to have resolved this (IMHO – as I am not a physicist.)

Quantum models still suffer from the Copenhagen vs many worlds approaches.

Consciousness is not understood at all, but I really doubt it is something fundamentally different. Now that it is becoming more aligned with Doug Hofstadter’s view as a continuum, simpler organisms may become the model organisms to test theories, rather than more recently evolved mammals.

Rachel Carson was an excellent writer, but she was writing just 2 years after the discovery of the structure of DNA, before the extraordinary work in molecular biology and evolution that has been done since. We can have some “Gaia” sense/worship if we choose, but I don’t see it as necessary. I remain a reductionist rather than a holist. As for the many traits that you seem to imply render “Darwinian statistics” inoperable, there is no reason to assume all traits are equally important. Peppered moth populations did evolve due to the color of their wings in industrializing Britain, regardless of other traits in their genes. Niles Eldredge was resentful of the selfish-gene promoters like Dawkins, but his claims of meta processes governing evolutionary forms have yet to be demonstrated.

We use simplified higher rules to express phenomena because the lower-level rules are too complex to use and are unnecessary, although some supercomputers could do them. Richard Feynman taught the details of QED, but it takes a lot of computation to end up with the same values as the experimental observations. His version is likely closer to reality, but we do not need it for calculations. However, the use of simplified rules is not the same as saying that the universe uses different rules for these phenomena. It only indicates that the math we use is different. If there is one thing that intrigues me about math, it is how disparate areas of math are being shown by theorists to be different views of each other. I see that as a reflection of the apparent disparity between physical descriptions of phenomena.

Interesting thoughts on massive gravity:

https://iai.tv/articles/new-theory-of-gravity-solves-accelerating-universe-claudia-de-rham-auid-2834

Interesting, but I don’t think that we can create any new theories of physics at this late date. In other words, we know too much about our physical world and universe, so we can’t throw away what we knew before and make completely new rules. Consequently, we should always assume that first principles are valid everywhere in the universe and must match observations. If observations don’t match general relativity, then our observations are in error. We just have too much actual, physical evidence to support it. Einstein rules on the large scales.

As someone smart once said “If I had an hour to solve a problem, I’d spend 55 minutes thinking about the problem and 5 minutes thinking about solutions.”

I’d suggest first eliminating attempts to resolve “…a straightforward argument from quantum field theory suggests that the dark energy density should be tens of orders of magnitude larger than what is observed.” There is something wrong with that straightforward argument. But it does identify a theoretical line of thought that should be abandoned. Also abandon String Theory – it has enough problems of its own… but the sunk cost fallacy drives it on.

Let’s get a core problem statement and then some clever minds ponder…

We need to better understand the variance between different efforts to measure the constants (cosmological, Hubble) and plot this variance as well as we can. Perhaps GDEM is intended to help with this measurement.

We need to clarify the assumptions currently being made in our theoretical frameworks and find experimental methods to challenge each of them. Some of the assumptions are likely to be invalid considering the empirical data.

What’s left?

How is this much different from the GRACE satellites, other than being in solar orbit rather than Earth orbit?

https://en.wikipedia.org/wiki/GRACE_and_GRACE-FO

I think it should also do solar weather, so we are not just looking at the near side of the Sun.

Also we should be looking at solar polar regions.

Make a lot of them, and launch a lot of them.

One might be tempted to make something like Starlink satellites for the solar system.

Some links of note:

Gravitational lasers?

https://www.livescience.com/space/black-holes/einsteins-predictions-mean-rare-gravitational-lasers-could-exist-throughout-the-universe-new-paper-claims

This is quite interesting

https://phys.org/news/2024-01-wide-binary-stars-reveals-evidence.amp

Newtonian effects break down about 2000 Astronomical Units out with an acceleration of 1 nanometer per second squared:

I have wondered if there is a way to hitch a ride on the universe…

https://www.trekbbs.com/threads/the-nature-of-the-universe-time-travel-and-more.313265/page-20

Electrogravitics yet?

https://phys.org/news/2023-07-combining-continuum-mechanics-einstein-field.html

https://phys.org/news/2024-01-astrophysicist-theory-gravity-law.html

Dark Energy has a home in the tachocline?

https://phys.org/news/2021-09-dark-energy-scientists-possibility.html

More:

https://phys.org/news/2024-05-cosmic-glitch-gravity-strange-behavior.html

https://phys.org/news/2024-05-reveals-quantumness-gravity.html