Centauri Dreams

Imagining and Planning Interstellar Exploration

Finding a Terraforming Civilization

Searching for biosignatures in the atmospheres of nearby exoplanets invariably opens up the prospect of folding in a search for technosignatures. Biosignatures seem much more likely given the prospect of detecting even the simplest forms of life elsewhere – no technological civilization needed – but ‘piggybacking’ a technosignature search makes sense. We already use this commensal method to do radio astronomy, where a primary task such as observation of a natural radio source produces a range of data that can be investigated for secondary purposes not related to the original search.

So technosignature investigations can be inexpensive, which also means we can stretch our imaginations in figuring out what kind of signatures a prospective civilization might produce. The odds may be long but we do have one thing going for us. Whereas a potential biosignature will have to be screened against all the abiotic ways it could be produced (and this is going to be a long process), I suspect a technosignature is going to offer fewer options for false positives. I’m thinking of the uproar over Boyajian’s Star (KIC 8462852), where the false positive angles took a limited number of forms.

If we’re doing technosignature screening on the cheap, we can also worry less about what seems at first glance to be the elephant in the room, which is the fact that we have no idea how long a technological society might live. The things that mark us as tool-using technology creators to distant observers have not been apparent for long when weighed against the duration of life itself on our planet. Or maybe I’m being pessimistic. Technosignature hunter Jason Wright at Penn State makes the case that we simply don’t know enough to make statements about technology lifespans.

On this point I want to quote Edward Schwieterman (UC-Riverside) and colleagues from a new paper, acknowledging Wright’s view that this argument fails because the premise is untested. We don’t actually know whether non-technological biosignatures are the predominant way life presents itself. Consider:

In contrast to the constraints of simple life, technological life is not necessarily limited to one planetary or stellar system, and moreover, certain technologies could persist over astronomically significant periods of time. We know neither the upper limit nor the average timescale for the longevity of technological societies (not to mention abandoned or automated technology), given our limited perspective of human history. An observational test is therefore necessary before we outright dismiss the possibility that technospheres are sufficiently common to be detectable in the nearby Universe.

So let’s keep looking, which is what Schwieterman and team are advocating in a paper focusing on terraforming. In previous articles on this site we’ve looked at the prospect of detecting pollutants like chlorofluorocarbons (CFCs), which emerge as byproducts of industrial activity, but like nitrogen dioxide (NO₂) these industrial products seem a transitory target, given that even in our time the processes that produce them are under scrutiny for their harmful effect on the environment. What the new paper proposes is that gases that might be produced in efforts to terraform a planet would be longer lived as an expanding civilization produced new homes for its culture.

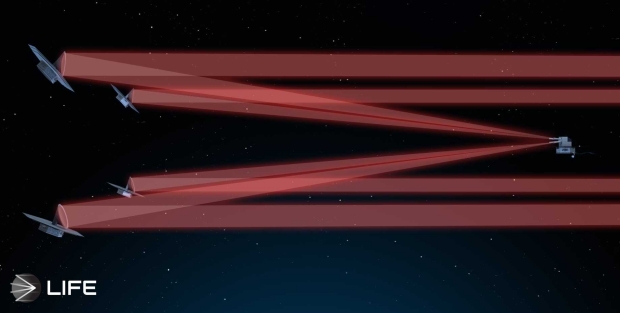

Enter the LIFE mission concept (Large Interferometer for Exoplanets), a proposed European Space Agency observatory designed to study the composition of nearby terrestrial exoplanet atmospheres. LIFE is a nulling interferometer working at mid-infrared wavelengths, one that complements NASA’s Habitable Worlds Observatory, according to its creators, by following “a complementary and more versatile approach that probes the intrinsic thermal emission of exoplanets.”

Image: The Large Interferometer for Exoplanets (LIFE), funded by the Swiss National Centre of Competence in Research, is a mission concept that relies on a formation of flying “collector telescopes” with a “combiner spacecraft” at their center to realize a mid-infrared interferometric nulling procedure. This means that the light signal originating from the host star of an observed terrestrial exoplanet is canceled by destructive interference. Credit: ETH Zurich.

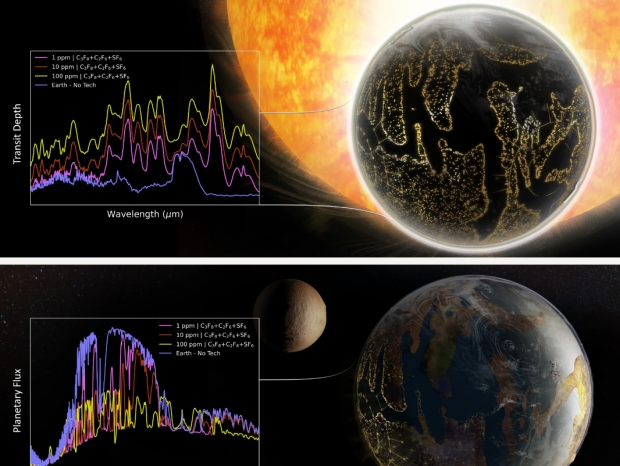

In search of biosignatures, LIFE will collect data that can be screened for artificial greenhouse gases, offering high resolutions for studies in the habitable zones of K- and M-class stars in the mid-infrared. The Schwieterman paper analyzes scenarios in which this instrument could detect fluorinated versions of methane, ethane, and propane, in which one or more hydrogen atoms have been replaced by fluorine atoms, along with other gases. The list includes tetrafluoromethane (CF₄), hexafluoroethane (C₂F₆), octafluoropropane (C₃F₈), sulfur hexafluoride (SF₆) and nitrogen trifluoride (NF₃). These gases would not be the incidental byproducts of other industrial activity but would represent an intentional terraforming effort, a thought that has consequences.

After all, any attempt to transform a planet the way some people talk about terraforming Mars would of necessity be dealing with long-lasting effects, and terraforming gases like these and others would be likely to persist not just for centuries but for the duration of the creator civilization’s lifespan. Adjusting a planetary atmosphere should present a large and discernable spectral signature precisely in the infrared wavelengths LIFE will specialize in, and it’s noteworthy that gases like those studied here have long lifetimes in an atmosphere and could be replenished.

LIFE will work via direct imaging, but the study also takes in detection through transits by calculating the observing time needed with the James Webb Space Telescope’s instruments as applied to TRAPPIST-1 f. The results make the detection of such gases with our current technologies a clear possibility. As Schwieterman notes, “With an atmosphere like Earth’s, only one out of every million molecules could be one of these gases, and it would be potentially detectable. That gas concentration would also be sufficient to modify the climate.”

Indeed, working with transit detections for TRAPPIST-1 f produces positive results with JWST’s MIRI Low Resolution Spectrometer (LRS) and NIRSpec instrumentation (with “surprisingly few transits”). But while transits are feasible, they’re also more scarce, whereas LIFE’s direct imaging in the infrared takes in numerous nearby stars.

From the paper:

We also calculated the MIR [mid infrared] emitted light spectra for an Earth-twin planet with 1, 10, and 100 ppm of CF₄, C₂F₆, C₃F₈, SF₆, and NF₃… and the corresponding detectability of C₂F₆, C₃F₈, and SF₆ with the LIFE concept mission… We find that in every case, the band-integrated S/Ns were >5σ for outer habitable zone Earths orbiting G2V, K6V, or TRAPPIST-1-like (M8V) stars at 5 and 10 pc and with integration times of 10 and 50 days. Importantly, the threshold for detecting these technosignature molecules with LIFE is more favorable than standard biosignatures such as O₃ and CH₄ at modern Earth concentrations, which can be accurately retrieved… indicating meaningfully terraformed atmospheres could be identified through standard biosignatures searches with no additional overhead.
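To get a feel for how the 10- and 50-day integration times in the quoted passage relate to each other, here is a minimal Python sketch. It assumes a purely photon-noise-limited observation, in which S/N grows as the square root of integration time. That scaling is a textbook idealization, not the noise model used in the paper, and the function names and input numbers are invented for illustration.

```python
import math


def scale_snr(snr_ref, t_ref_days, t_new_days):
    """Scale a reference S/N to a new integration time.

    Assumes S/N is proportional to sqrt(time), which holds only for an
    idealized photon-noise-limited measurement (an assumption, not the
    paper's noise model).
    """
    return snr_ref * math.sqrt(t_new_days / t_ref_days)


def days_for_target_snr(snr_ref, t_ref_days, snr_target):
    """Invert the same sqrt(time) scaling to get the time needed for a target S/N."""
    return t_ref_days * (snr_target / snr_ref) ** 2


if __name__ == "__main__":
    # Hypothetical input: a band that reaches S/N = 5 in 10 days.
    print(f"S/N after 50 days: {scale_snr(5.0, 10.0, 50.0):.2f}")
    print(f"Days needed for S/N = 10: {days_for_target_snr(5.0, 10.0, 10.0):.1f}")
```

Under this assumption, going from 10 to 50 days improves S/N by a factor of √5, a bit more than two, which is why doubling the significance of a detection costs four times the observing time.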

Image: Qualitative mid-infrared transmission and emission spectra of a hypothetical Earth-like planet whose climate has been modified with artificial greenhouse gases. Credit: Sohail Wasif/UCR.

The choice of TRAPPIST-1 is sensible, given that the system offers seven rocky planet targets aligned in such a way that transit studies are possible. Indeed, this is one of the most highly studied exoplanetary systems available. But the addition of the LIFE mission’s instrumentation shows that direct imaging in the infrared expands the realm of study well beyond transiting worlds. So whereas CFCs are short lived and might flag transient industrial activity, the fluorinated gases discussed in this paper are chemically inert and represent potentially long-lived signatures for a terraforming civilization.

The paper is Schwieterman et al., “Artificial Greenhouse Gases as Exoplanet Technosignatures,” Astrophysical Journal Vol. 969, No. 1 (25 June 2024), 20 (full text).

Space Exploration and the Transformation of Time

Every now and then I run into a paper that opens up an entirely new perspective on basic aspects of space exploration. When I say ‘new’ I mean new to me; in the case of today’s paper, the relevant work has been ongoing ever since we began lofting payloads into space. But an aspect of our explorations that hadn’t occurred to me was the obvious question of how we coordinate time between Earth’s surface and craft as distant as Voyager, or moving as close to massive objects as Cassini. We are in the realm of ‘time transformations,’ and they’re critical to the operation of our probes.

Somehow considering all this in an interstellar sense was always much easier for me. After all, if we get to the point where we can push a payload up to relativistic speeds, the phenomenon of time dilation is well known and entertainingly depicted in science fiction all the way back to the 1930s. But I remember reading a paper from Roman Kezerashvili (New York City College of Technology) that analyzed the relativistic effects of a close solar pass upon a spacecraft, the so-called ‘sundiver’ maneuver. Kezerashvili and colleague Justin Vazquez-Poritz showed that without calculating the effects of General Relativity induced by the Sun’s mass at perihelion, the craft’s course could be seriously inaccurate, its destination even missed entirely. Let me quote this:

…we consider a number of general relativistic effects on the escape trajectories of solar sails. For missions as far as 2,550 AU, these effects can deflect a sail by as much as one million kilometers. We distinguish between the effects of spacetime curvature and special relativistic kinematic effects. We also find that frame dragging due to the slow rotation of the Sun can deflect a solar sail by more than one thousand kilometers.

Clearly, what seem like tiny effects get magnified as we examine their consequences on spacecraft moving under differing conditions of velocity and gravity. The measurement of time is a key aspect of this. And even the tiniest adjustments are critical if we are to build communication networks that operate accurately even in so close an environment as that between the Earth and the Moon. Thus the occasion for this musing, a paper from the Jet Propulsion Laboratory’s Slava Turyshev and colleagues that discusses how the effects of gravity and motion can be understood between the Earth and the network of assets we’re building around the Moon and on its surface. Exploration in this space will depend upon synchronizing our tools.

The Turyshev paper puts it this way:

As our lunar presence expands, the challenge of synchronizing an extensive network of assets on the moon and in cis-lunar space with Earth-based systems intensifies. To address this, one needs to establish a common system time for all lunar assets. This system would account for the relativistic effects that impact time measurement due to different gravitational and motion conditions, ensuring precise and efficient operations across cislunar space.

And in fact a recent memorandum from the White House Cislunar Technology Strategy Interagency Working Group was released on April 2 of this year noting the “policy to establish time standards at and around celestial bodies other than Earth to advance the National Cislunar S&T [Science and Technology] Strategy.” So here is a significant aspect of our growth into a cislunar culture that is growing organically out of our current explorations, and will be critical as we expand deeper into the system. One day we may go interstellar, but we won’t do it without a Solar System-wide infrastructure.

As an avid space buff, I should have been aware of this all along, especially since gravitational time dilation is easily demonstrated. A clock on the lunar surface, for example, runs a bit faster than a clock on Earth. Because time runs slower closer to a massive object, our GPS satellites have to deal with this effect all the time. Clearly, any spacecraft moving away from Earth experiences time in ways that vary according to its velocity and the gravitational fields it encounters during the course of its mission. These effects, no matter how minute, have to be plugged into operational software adjusting for the variable passage of time.
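The size of the lunar clock offset can be estimated with a short Python sketch. This is a first-order, weak-field approximation worked in a non-rotating Earth-centered frame, not the full treatment in the Turyshev paper: it ignores Earth's rotation, the Moon's orbital eccentricity, and tidal terms from the Sun, and it uses rounded physical constants. The function names are invented for illustration.

```python
C = 299_792_458.0              # speed of light, m/s
GM_EARTH = 3.986004418e14      # Earth's gravitational parameter, m^3/s^2
GM_MOON = 4.9028e12            # Moon's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6              # mean Earth radius, m
R_MOON = 1.7374e6              # mean Moon radius, m
EARTH_MOON_DISTANCE = 3.844e8  # mean Earth-Moon distance, m
MOON_ORBITAL_SPEED = 1.022e3   # mean lunar orbital speed about Earth, m/s
SECONDS_PER_DAY = 86_400.0


def fractional_slowdown(potential_terms, speed=0.0):
    """Fractional rate by which a clock runs slow relative to a distant, resting clock.

    potential_terms is a list of (GM, r) pairs; the result is
    sum(GM / r) / c^2 + v^2 / (2 c^2), the standard first-order expression.
    """
    gravitational = sum(gm / r for gm, r in potential_terms) / C**2
    kinematic = speed**2 / (2.0 * C**2)
    return gravitational + kinematic


def lunar_minus_earth_drift_us_per_day():
    """Approximate gain of a lunar-surface clock over an Earth-surface clock."""
    # Earth-surface clock: deep in Earth's potential (rotation ignored).
    earth_clock = fractional_slowdown([(GM_EARTH, R_EARTH)])
    # Lunar-surface clock: Moon's own potential, Earth's potential at lunar
    # distance, and the Moon's orbital motion around Earth.
    moon_clock = fractional_slowdown(
        [(GM_MOON, R_MOON), (GM_EARTH, EARTH_MOON_DISTANCE)],
        speed=MOON_ORBITAL_SPEED,
    )
    return (earth_clock - moon_clock) * SECONDS_PER_DAY * 1e6


if __name__ == "__main__":
    drift = lunar_minus_earth_drift_us_per_day()
    print(f"Lunar clock gains roughly {drift:.1f} microseconds per day")
```

Even this crude estimate lands at tens of microseconds per day, which is small in everyday terms but far larger than the timing precision that navigation and communication links require.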

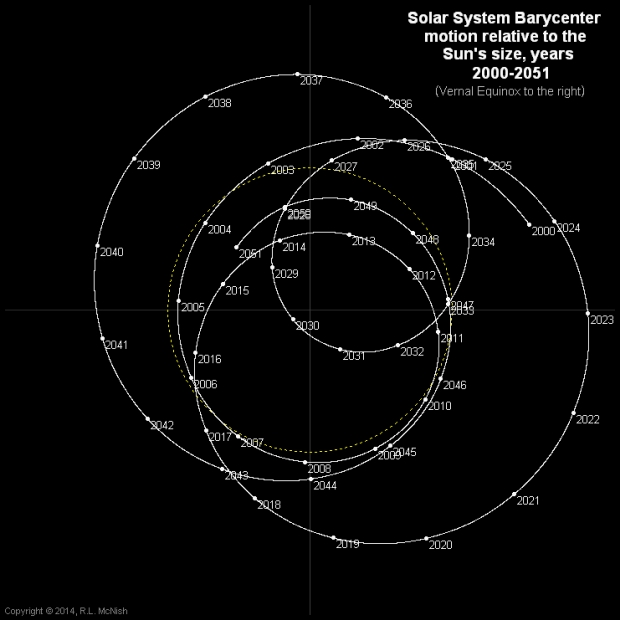

So moving from time and space coordinates in one inertial frame (the Earth’s surface), we need to reckon with their manifestation in another inertial frame, that aboard a spacecraft, to make clocks synchronize accurately and hence enable essential navigation, not to mention communications and scientific measurements. The necessary equations to handle this task are known in the trade as ‘relativistic time transformations,’ and it’s critical to have a reference system like the Solar System Barycentric coordinate frame (SSB) that is built around the center of mass of the Solar System itself. This allows accurate trajectory calculations for space navigation.

Image: The complexity of establishing reference systems for communications and data return is suggested by movements in the Solar System’s barycenter itself, shown here in a file depicting its own motion. Credit: Larry McNish / via Wikimedia Commons.

As you would guess, the SSB coordinate frame has been around for some time, becoming formalized as we began sending spacecraft to other planetary targets. It was a critical part of mission planning for the early Pioneer probes. Synchronization with resources on Earth occurs when data from a spacecraft are time-stamped using SSB time so that they can be converted into Earth-based time systems. Supervising all this is an international organization called the International Earth Rotation and Reference Systems Service (IERS), which maintains time and reference systems, with its central bureau hosted by the Paris Observatory.

‘Systems’ is in the plural not just because we have an Earth-based time and a Solar System Barycentric coordinate frame, but also because there are other time scales. We use Coordinated Universal Time as a global standard, and a familiar one. But there are others. There is, for example, an International Atomic Time (TAI – from the French ‘Temps Atomique International’), a standard that is based on averaging atomic clocks around the world. There is also a Terrestrial Time (TT), which adds to TAI a scale reflecting time on the surface of the Earth without the effect of Earth’s rotation.

But we can’t stop there. Universal Time (UT) adjusts for location, affected by the longitude and the polar motion of the Earth, both of which have relevance to celestial navigation and astronomical observations. Barycentric Dynamical Time (TDB, from ‘Temps Dynamique Barycentrique’) accounts for gravitational time dilation effects, while Barycentric Coordinate Time (TCB, from ‘Temps Coordonné Barycentrique’) is centered, as mentioned before, on the Solar System’s barycenter but excluding gravitational time dilation effects near Earth’s orbit. All of these transformations aim to account for relativistic and gravitational effects to keep observations consistent.
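A few of these relationships are simple enough to write down. The Python sketch below chains UTC to TAI to TT using fixed offsets, then applies a widely used low-precision approximation for the periodic TDB minus TT difference. The 37-second TAI minus UTC offset is the value in force since 1 January 2017 and changes whenever a leap second is added; the two-term sine series is accurate only to a few tens of microseconds and is not the full expression a navigation team would use. Function names are invented for illustration.

```python
import math

TT_MINUS_TAI_S = 32.184   # fixed by definition
TAI_MINUS_UTC_S = 37.0    # leap-second count in force since 2017-01-01
JD_J2000 = 2451545.0      # Julian Date of the J2000.0 epoch


def tt_minus_utc_seconds(tai_minus_utc_s=TAI_MINUS_UTC_S):
    """Offset between Terrestrial Time and UTC for a given leap-second count."""
    return tai_minus_utc_s + TT_MINUS_TAI_S


def tdb_minus_tt_seconds(jd_tt):
    """Low-precision periodic difference TDB - TT, in seconds.

    g is an approximation to Earth's mean anomaly; the dominant term has an
    amplitude of about 1.7 milliseconds and a period of one year.
    """
    g = math.radians(357.53 + 0.98560028 * (jd_tt - JD_J2000))
    return 0.001657 * math.sin(g) + 0.000014 * math.sin(2.0 * g)


if __name__ == "__main__":
    jd = 2460310.5  # 2024-01-01 00:00, treated here as a TT Julian Date
    print(f"TT - UTC  = {tt_minus_utc_seconds():.3f} s")
    print(f"TDB - TT = {tdb_minus_tt_seconds(jd) * 1e3:+.3f} ms")
```

The point of the sketch is the difference in character: TT and TAI differ by a constant, UTC steps by whole seconds, and TDB wanders around TT by less than two milliseconds over the course of a year as Earth's speed and distance from the Sun change.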

These time transformations (i.e., the equations necessary for accounting for these differing and crucial effects) have been a part of our space explorations for a long time, but they hover beneath the surface and don’t usually make it into the news. But consider the complications of a mission like New Horizons, moving into the outer Solar System and needing to account not only for the effects of that motion but the gravitational time dilation effects of an encounter not only with Pluto but the not insignificant mass of Charon, all of this coordinated in such a way that data returning to Earth can be precisely understood and referenced according to Earth’s clocks.

The Turyshev paper focuses on the transformations between Barycentric Dynamical Time and time on the surface of the Moon, and the needed expressions to synchronize Terrestrial Time with Lunar Time (TL). We’re going to be building a Solar System-wide infrastructure one of these days, an effort that is already underway with the gradual push into cislunar space that will demand these kinds of adjustments. These relativistic corrections will be needed to work in this environment with complete coordination between Earth’s surface, the surface of the Moon, and the Solar System’s barycenter.

The paper produces a new Luni-centric Coordinate Reference System (LCRS). We are talking about a lunar presence involving numerous landers and rovers in addition to orbiting craft. It is the common time reference that ensures accurate timing between all these vehicles and also allows autonomous systems to function while maintaining communication and data transmission. Moreover, the LCRS is needed for navigation:

An LCRS is vital for precise navigation on the Moon. Unlike Earth, the lunar surface presents unique challenges, including irregular terrain and the absence of a global magnetic field. A dedicated reference system allows for precise positioning and movement of landers and rovers, ensuring they can target and reach specific, safe landing sites. This is particularly important for resource utilization, such as locating and extracting water ice from the lunar poles, which requires high positional accuracy.

Precise location information through these time and position transformations will be, clearly, a necessary step wherever we go in the Solar System, and a vital part of shaping the activity that will build that system-wide infrastructure so necessary if we are to seriously consider future probes into the Oort Cloud and to other stars. Turyshev and team refer to all this as the establishment of a ‘geospatial context’ within which the placement of instruments can be optimized, but the work also becomes vital for everything from the creation of bases to necessary navigational tools. For the immediate future, we are firming up the steps that will give us a foothold on the Moon.

The paper is Turyshev et al., “Time transformation between the solar system barycenter and the surfaces of the Earth and Moon,” now available as a preprint. If you want to really dig into time transformations, the IERS Conventions document is available online. The Roman Kezerashvili paper cited above is R. Ya. Kezerashvili and J. F. Vazquez-Poritz, “Escape Trajectories of Solar Sails and General Relativity,” Physics Letters B Volume 681, Issue 5 (16 November 2009), pp. 387-390 (abstract).

The Ambiguity of Exoplanet Biosignatures

The search for life on planets beyond our Solar System is too often depicted as a binary process. One day, so the thinking goes, we’ll be able to directly image an Earth-mass exoplanet whose atmosphere we can then analyze for biosignatures. Then we’ll know if there is life there or not. If only the situation were that simple! As Alex Tolley explains in his latest essay, we’re far more likely to run into results that are so ambiguous that the question of life will take decades to resolve. Read on as Alex delves into the intricacies of life detection in the absence of instruments on a planetary surface.

by Alex Tolley

“People tend to believe that their perceptions are veridical representations of the world, but also commonly report perceiving what they want to see or hear.” [17]

Evolution has likely selected us to see dangerous things whether they are there or not. Survival favors avoiding a rustling bush that may hide a saber-toothed cat. We also see what we are told to see, such as gods that become etched into a group of bright stars in the sky. The post-Enlightenment world has not eradicated those motivated perceptions, as the history of astronomy and astrobiology demonstrates.

There have been some famous misperceptions of life and ETI in the past, starting with Giovanni Schiaparelli’s perceptions of channels (canali) on Mars, followed by Lowell’s creation of a Martian civilization from whole cloth based on his interpretation of canali as canals. As a consensus seemed to be building that plants existed on Mars, in 1957 and later in 1959 Sinton claimed that he had detected absorption bands from Mars indicating organic matter probably pointing to plant life. These “Sinton bands” later proved to be the detection of deuterium in the Earth’s atmosphere.

I note in passing that Dr. Wernher von Braun assumed that the Martian atmosphere had a surface pressure 1/12th of Earth’s, based on a few telescopic and spectrographic observations and calculations. This assumption was used in his The Mars Project [23] and Project Mars: A Technical Tale [24], to design the winged landers that were depicted in the movie “The Conquest of Space”. How wrong those assumptions proved!

Briefly, in 1967, regular radio pulses discovered by astrophysicist Jocelyn Bell were thought to be a possible ET beacon, perhaps influenced by the interest in Frank Drake’s initial Project Ozma search for radio signals that was started in 1960. They were quickly identified as emitted by a pulsar, a new degenerate stellar type.

Not to be outdone, in 1978, Fred Hoyle and Chandra Wickramasinghe published their popular science book – Lifecloud: The Origin of Life in the Universe [5]. In the chapter “Planets of Life”, they made the inference that the spectra they observed around stars most closely matched cellulose (a macromolecule of simple hexose sugars, composed of the common elements carbon, hydrogen, and oxygen, and the major material of plants). This became the basis of their claims of the ubiquity of life, panspermia, and cometary delivery of viruses to Earth. Again, this assertion of the ubiquity of life proved to be incorrect based on faulty logical inference, which was in turn based on the incorrect interpretation of cellulose as the molecule identified from the light. It remains a cautionary tale.

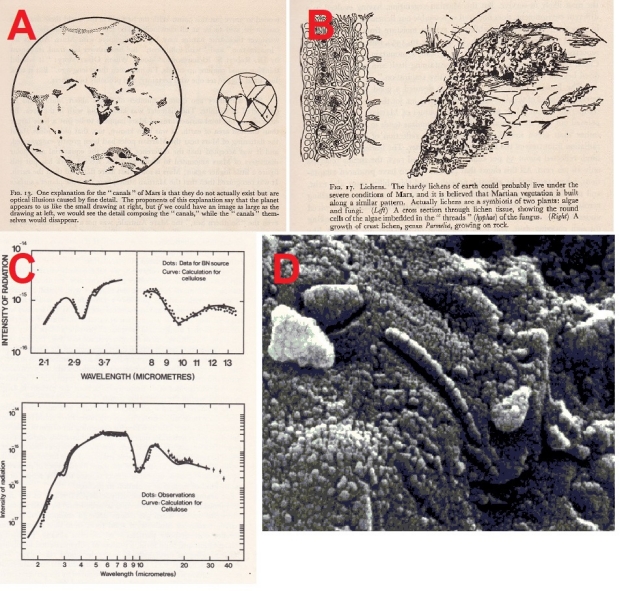

Figure 1. A) Illusory lines between objects are interpreted as canals. – Source [16] B) The assumption that lichen-like plants were abundant and produced the dark areas on Mars. – Source [16] C) The spectral fit that convinced Hoyle and Wickramasinghe that they had detected cellulose around other stars. – Source [5] D) View of a purported “fossil” in the famous Mars meteorite, Allan Hills 84001. Doubters argue that the feature is too small to be a sign of Mars life. (Image credit: NASA).

The latest possible misinterpretation may be the results of the Hephaistos Project that claimed 7 stars had anomalous longer wavelength intensities that might indicate a technosignature of a Dyson sphere or swarm [19]. Almost immediately a natural explanation appeared suggesting a data contamination issue with a distant galaxy in the same line of sight.

These historical observational misinterpretations are worth bearing in mind, as the odds are we’ll find life beyond our Solar System, if only because of the vast number of planets that have conditions that could bear life on their surfaces, perhaps even an “Earth 2.0.” We will be restricted to data from the electromagnetic (em) spectrum with no hope of acquiring the ground truth from probes sent to those systems.

Returning to the early search for life, the limitations of 1960s optical astronomy were highlighted in the US publication of Intelligent Life in the Universe by Iosif Samuilovich Shklovskii, with additional content by Sagan [4], as well as by earlier papers by Sagan [3]. Carl Sagan noted that hypothetical Martian astronomers would not be able to confirm the detection of life and intelligence on Earth using the many terrestrial techniques available at the time, and highlighted some of the issues, including the resolution needed to detect human artifacts, and the ambiguity of spectral data. He asserted that Martian astronomers required ground truth, i.e. a probe in Earth’s orbit or a lander.

From the paper [3]:

Moreover, with Earth at 1 km resolution “no seasonal variations in the contrast of vegetation could be detected…. [I]t is estimated that better than 35 m resolution, with global coverage, would be needed to detect life on a hypothetical Earth with no intelligent life.”

The best telescopic resolution based on a hypothetical solar gravitational lens telescope (SGL) would be far too low to detect life without ambiguity. A simulated image is shown in Figure 2 below.

Figure 2. A 1024×1024 pixel image simulation of an exoplanet at a distance of up to 30 parsecs, imaged with a possible solar gravitational lens telescope, has a surface resolution of a few 10s of km per pixel. Credit: Turyshev et al., “Direct Multipixel Imaging and Spectroscopy of an Exoplanet with a Solar Gravity Lens Mission,” Final Report NASA Innovative Advanced Concepts Phase II. – Source [26]
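The gap between what such an image could deliver and Sagan's 35 m threshold can be made concrete with a little arithmetic. The Python sketch below assumes, purely for illustration, an Earth-diameter planet that exactly spans a 1024-pixel frame, which is a best case; a planet that fills less of the frame would be sampled more coarsely. The function names are invented.

```python
EARTH_DIAMETER_KM = 12_742.0   # mean diameter of Earth
IMAGE_WIDTH_PIXELS = 1024      # frame width quoted for the simulated SGL image
SAGAN_THRESHOLD_M = 35.0       # resolution Sagan estimated for detecting life


def km_per_pixel(diameter_km, pixels_across):
    """Surface sampling if the planet's disk exactly spans the frame (assumed)."""
    return diameter_km / pixels_across


def shortfall_factor(resolution_km, required_m):
    """How many times coarser the achieved resolution is than the requirement."""
    return resolution_km * 1000.0 / required_m


if __name__ == "__main__":
    res = km_per_pixel(EARTH_DIAMETER_KM, IMAGE_WIDTH_PIXELS)
    print(f"Best-case sampling: {res:.1f} km per pixel")
    print(f"Coarser than 35 m by a factor of about {shortfall_factor(res, SAGAN_THRESHOLD_M):.0f}")
```

Even in this optimistic case the sampling is more than ten kilometers per pixel, hundreds of times coarser than the resolution Sagan estimated would be needed.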

Given the limitations outlined by Sagan and Shklovskii, how might we detect exoplanet life in the near term? In an influential 1967 paper, “Life Detection by Atmospheric Analysis”, Hitchcock and Lovelock argued that a living planet would create disequilibria in the atmospheric gases of the planet [1].

“Living systems maintain themselves in a state of relatively low entropy at the expense of their nonliving environments. We may assume that this general property is common to all life in the solar system. On this assumption, evidence of a large chemical free energy gradient between surface matter and the atmosphere in contact with it is evidence of life. Furthermore, any planetary biota which interacts with its atmosphere will drive that atmosphere to a state of disequilibrium which, if recognized, would also constitute direct evidence of life, provided the extent of the disequilibrium is significantly greater than abiological processes would permit. It is shown that the existence of life on Earth can be inferred from knowledge of the major and trace components of the atmosphere, even in the absence of any knowledge of the nature or extent of the dominant life forms. Knowledge of the composition of the Martian atmosphere may similarly reveal the presence of life there.”

This proxy has become the core approach for searching for biosignatures of exoplanets, as we are rapidly evolving the technology to detect gas mixtures via transmission spectroscopy of these worlds.

We have no hope of sending probes to those worlds within the foreseeable future, and the speed of light limits when we could even receive information from a probe that landed on such a planet. Therefore, unlike our system, where samples can be either locally analyzed or returned to Earth, only remote observation is currently possible for exoplanets.

For terrestrial worlds like our contemporary Earth, the signature would be the presence of both oxygen (O2) from oxygenic photosynthesis and methane (CH4) from methanogenic bacteria or archaea. So strong is this idea, that my first post on Centauri Dreams was to review a paper that discussed what the atmospheric biosignature would be for an early Earth before photosynthesis had made O2 the 2nd most common gas in the atmosphere, a period that encompassed most of Earth’s history [2].

However, there are increasing concerns from the astrobiology community about this approach because of possible false positives. For example, O2 could be entirely generated by photolysis of water, and even CH4 might be sufficiently produced by geologic means to maintain that disequilibrium, creating false positives or at best ambiguity of the spectral analysis as a biosignature.

Astrobiologists have continued to explore other possible biosignatures, usually with terrestrial life as the template. For example, Sara Seager published a catalog of small, detectable molecules that included some that are only made by life forms. This included phosphine (AKA phosphane, PH3). In 2021, Greaves reported that PH3 had been discovered in the spectra of Venus’s atmosphere [27]. As PH3 is principally produced by life on Earth, this set off a flurry of observations, experiments, and even a soon-to-be-launched probe to the temperate zone of the Venusian atmosphere. Unfortunately, in this case, confirmation of the signal was not made. It was also suggested that the very similar spectral signature of sulfur dioxide was the culprit. The original observation remains controversial, but at least we will eventually get the ground truth we need. But as we will see later, there is a theoretical abiotic route to PH3 production via Venusian volcanic emissions which adds ambiguity to the finding as a biosignature.

In 2018, Sara Walker published a long paper on the issue of dealing with false positives for any biosignature [7]. Much of the paper relied on the use of Bayesian statistical methods, although a potential flaw was the issue of assigning the prior probabilities. Much of the paper dealt with data that would need some sort of sample, even ground truth, such as a sample taken by an in situ probe, as originally suggested by Sagan and Shklovskii. Purely electromagnetic spectrum (em) data would exclude these sample analyses.
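The sensitivity to priors is easy to see with a toy calculation. The Python sketch below applies Bayes' theorem to a single hypothetical biosignature detection. All the probabilities are invented for illustration and are not taken from Walker's paper; the function name is likewise hypothetical.

```python
def posterior_life(prior, p_signal_given_life, p_signal_given_no_life):
    """Posterior probability of life given that a signal was observed.

    Bayes' theorem: P(life | signal) =
        P(signal | life) * P(life) / P(signal),
    where P(signal) sums over the 'life' and 'no life' hypotheses.
    """
    evidence = (
        p_signal_given_life * prior
        + p_signal_given_no_life * (1.0 - prior)
    )
    return p_signal_given_life * prior / evidence


if __name__ == "__main__":
    # Hypothetical likelihoods: life produces the signal 90% of the time,
    # abiotic processes mimic it 10% of the time.
    for prior in (0.5, 0.1, 0.001):
        post = posterior_life(prior, 0.9, 0.1)
        print(f"prior = {prior:<6} posterior = {post:.4f}")
```

With identical data, an even-odds prior yields a posterior of 0.9, while a one-in-a-thousand prior leaves the posterior below one percent. Since nobody knows what prior to assign to life on a given exoplanet, the same observation can support very different conclusions.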

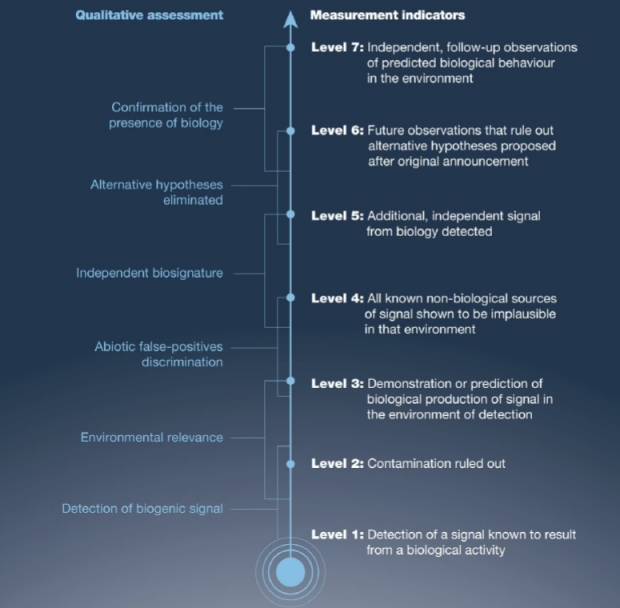

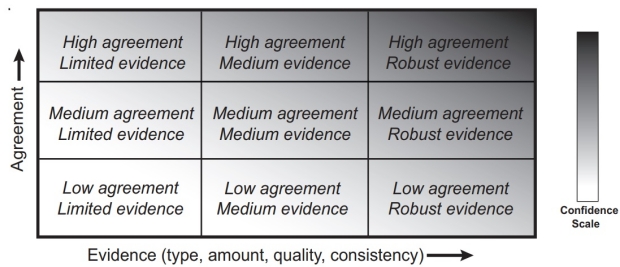

A 2021 paper by Green et al [8] suggested that the detection of life should be viewed on a scale of increasing certainty, rather than a binary true or false determination. In other words, ambiguity was to be encompassed:

“The Community Workshop Report argues (with reasonable grounds) that the first detection of an extraterrestrial biosignature will likely be ambiguous and require significant follow-on work.”

They suggested a new Confidence of Life Detection scale (CoLD), to indicate reliability based on NASA’s Technology Readiness Level (TRL) approach: Confidence is increased with confirmation and as abiotic causes are ruled out. A modified chart is shown in Figure 3.

Figure 3. CoLD scale. Printed with permission in the Vickers et al paper.

At about the same time, NASA sponsored a biosignature workshop [9] to assess and report on life detection to address the recognized problems of reliability of life detection as an immature science. Their effort was especially targeted at communicating a possible life detection. It should be noted that NASA did a very poor job of this in the past, notably the press conferences on the “Martian microbes” from the ALH84001 meteorite in 1996, and the arsenic-based bacteria in 2010, billed as a possible different alien biology that could exist on another world [28]. NASA clearly wanted to avoid such premature announcements and take a more cautious approach. [In an ironic twist, researchers have discovered arsenic metabolism in some deep sea marine microbes [29].]

This has gained importance because of the increasingly attention-grabbing approach of articles on the discovery of exoplanets that resemble Earth, often using the “Earth 2.0” label. It is highly unlikely any exoplanet with similar dimensions and orbits in the habitable zone (HZ) is a terrestrial-type verdant world suitable for eventual colonization if or when our starships can reach them.

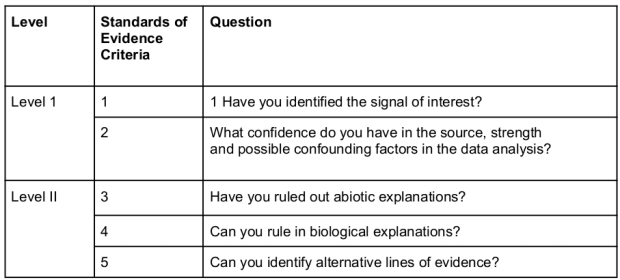

The workshop produced this Standards of Evidence scale published in 2022:

Table 1. Standards of Evidence Life Detection scale, produced by a community-wide effort [9]. Credit: Walker et al [14]

In 2023, Smith, Harrison, and Mathis published an extensive critique of the reliability of biosignatures for life detection. In their essay [10] they state in the abstract that:

“Our limited access to other worlds suggests this observation is more likely to reflect out-of-equilibrium gases than a writhing octopus. Yet, anything short of a writhing octopus will raise skepticism about what has been detected.”

In the introduction, they state that atmospheric gas disequilibria are byproducts of life on Earth but are not unique to life: O2, for example, can be produced abiotically in an atmosphere. The following includes a critique of the Krissansen-Totton et al paper that I sourced in my first Centauri Dreams post:

“Often these models don’t rely on any underlying theory of life, and instead consider specific sources and sinks of chemical species, and rules of their interactions. Astrobiologists label these sources, sinks, or transformations as being due to life or nonlife, tautologically defined by the fluxes they influence. For example, defining life via biotic fluxes of methane from methanogenesis [6,7] and defining abiotic fluxes via rates of serpentinization and impacts.”

And:

“This leads to the conclusion that most exoplanet biosignatures are futile if our goal is to detect life outside the solar system with confidence.”

Figure 4 below shows the 4 approaches they suggest to firm up the strength of biosignatures.

1. Biological research on terrestrial life,

2. Looking for life with probes sent to planets in our system

3. Experimental work on abiogenesis and molecular outcomes

4. SETI via technosignatures

Figure 4. The 4 approaches to explore biosignatures. Credit: Smith & Mathis.

They conclude:

“As astrobiologists we believe the search for life beyond Earth is one of the most pressing scientific questions of our time. But if we as a community can’t decide how to formalize our ideas into testable hypotheses to motivate specific measurements or observational goals, we are taking valuable observational time and resources away from other disciplines and communities that have clearly articulated goals and theories. It’s one thing to grope around in the dark, or explore uncharted territory, but do so at the cost of other scientific endeavors become increasingly difficult to justify. One of the most significant unification of biological phenomena–Darwin’s theory of natural selection–emerged only after Darwin went on exploratory missions around the world and documented observations. It’s possible the data required to develop a theory of life that can make predictions about living worlds simply has not been documented sufficiently. But if that’s the case we should stop aiming to detect something we cannot understand, and instead ask what kinds of exploration are needed to help us formalize such a theory.”

One month later, Peter Vickers et al. published “Confidence of Life Detection: The Problem of Unconceived Alternatives” [11]. This paper aimed to demolish the idea of using Bayesian probabilities, as there is little hope of conceiving of all the novel ways life may arise, or of the abiotic mimics of possible signatures.

From the abstract:

“It is argued that, for most conceivable potential biosignatures, we currently have not explored the relevant possibility space very thoroughly at all. Not only does this severely limit the circumstances in which we could reasonably be confident in our detection of extraterrestrial life, it also poses a significant challenge to any attempt to quantify our degree of (un)certainty.”

From the introduction:

“(…) the problem of unconceived abiotic explanations for phenomena of interest (…) we stress that articulating our uncertainty requires an assessment of the extent to which we have explored the relevant possibility space. It is argued that, for most conceivable potential biosignatures, we currently have not explored the relevant possibility space very thoroughly at all.”

From section 2 – The challenges of known and unknown false positives:

“As Meadows et al. (2022, p. 26) note, “[I]f the scope of possible abiotic explanations is known to be poorly explored, it suggests we cannot adequately reject abiotic mechanisms.” Conversely, if it is known to be thoroughly explored, we probably can reject abiotic mechanisms.”

In effect, they are reiterating the problems of inference. For example, it was thought since Roman times that all swans were white as no examples of differently colored swans had been seen in the European, Asian, and African continents. This remained the case until black swans were discovered in Australia.

Today the more popular phrase that covers the issue of inference is “Absence of Evidence is not Evidence of Absence”.

They critique Green’s CoLD scale and suggest that the Intergovernmental Panel on Climate Change (IPCC) approach using a 2D scale of scientific consensus and strength of evidence may be more suitable.

Figure 5. IPCC framework for climate as a biosignature confidence scale. Credit: Vickers et al.

As Vickers was sowing doubts about biosignature detection reliability and how best to handle the uncertainties, Sara Walker and collaborators published “False Positives and the Challenge of Testing the Alien Hypothesis” [12], repeating their earlier argument for Bayesian methods and the need for definitive biosignatures to avoid false positives. Those definitive biosignatures may be based on the Assembly Theory of the composition of organic molecules [13]. I would include the complementary approach, even though it does allow for false positives [14]. However, these approaches require samples that will not be available for exoplanets. Walker ends by suggesting that ladders of certainty, such as the Confidence of Life Detection (CoLD) scale and the community Standards of Evidence Life Detection scale, should be used because we need more data to understand planetary types and life. That data will sharpen estimates of the probability of life on a specific planet, especially as the number of known exoplanets grows rapidly.

Whatever the pros and cons of different approaches, it appears that continuing research and cataloging of exoplanets will help narrow down the uncertainties of life detection. Ideally, several orthogonal approaches can be used to triangulate the probability that the biosignature is a true positive.
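To make the idea of triangulation concrete, here is a minimal sketch of how independent lines of evidence combine under Bayes' rule in odds form. Every number in it is hypothetical: the prior, the evidence labels, and the likelihood ratios are invented for illustration, and none come from the papers discussed. The critique by Vickers et al. is precisely that these likelihood ratios are hard to know when abiotic alternatives remain unexplored.

```python
from math import prod


def posterior_probability(prior: float, likelihood_ratios: list[float]) -> float:
    """Combine independent evidence with Bayes' rule in odds form.

    Each likelihood ratio is P(evidence | life) / P(evidence | no life).
    Independence between the lines of evidence is an assumption.
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * prod(likelihood_ratios)
    return posterior_odds / (1.0 + posterior_odds)


# Hypothetical values, chosen only to show the arithmetic.
prior = 0.01
evidence = {
    "O2 + CH4 disequilibrium": 5.0,
    "vegetation-like red edge": 3.0,
    "seasonal gas variation": 2.0,
}

p_single = posterior_probability(prior, [evidence["O2 + CH4 disequilibrium"]])
p_all = posterior_probability(prior, list(evidence.values()))

print(f"one line of evidence:    {p_single:.3f}")
print(f"three lines of evidence: {p_all:.3f}")
```

With these made-up inputs, three modest lines of evidence raise the probability well above what any one of them achieves alone, yet it stays far from certainty. A newly conceived abiotic mimic would lower the corresponding likelihood ratio and pull the result back down.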

Sagan was right in that we need the ground truth of close observation and samples to validate electromagnetic data. While ground truth can eventually be acquired for planets in our solar system, we don’t have that for exoplanets, nor will we have that for the foreseeable future, unless that low probability SETI radio or optical signal is detected. In time, with a catalog of exoplanet data, it might be possible to collect enough examples to determine if there are abiotic mimics of different gas disequilibria, or other phenomena like the chlorophyll “red edge”. But we cannot know with certainty, and any abiotic mimic reduces the confidence of biotic interpretations.

Therefore, biosignatures from exoplanets will remain uncertain indications of life. We cannot escape from this. It will be up to the community and the responsible media to make this clear. [And good luck with the media].

As if this issue were not relevant, Payne and Kaltenegger just published a paper indicating that the O2 + CH4 signature was stronger 100–300 million years ago than it is today, principally due to the greater partial pressure of O2 in the atmosphere during that period [15]. It was covered by the press as “Earth Was More Attractive to Aliens Back When Dinosaurs Roamed” [21]. C’est la vie.

References

1. Hitchcock, D. R., and J. E. Lovelock. “Life Detection by Atmospheric Analysis.” Icarus, vol. 7, no. 1–3, Jan. 1967, pp. 149–59. https://doi.org/10.1016/0019-1035(67)90059-0.

2. Tolley, Alex Detecting Early Life on Exoplanets. Centauri Dreams February 23, 2018.

https://www.centauri-dreams.org/2018/02/23/detecting-early-life-on-exoplanets/

3. Kilston, S. D., Drummond, R. R., & Sagan, C. (1966). A search for life on Earth at kilometer resolution. Icarus, 5(1–6), 79–98. https://doi.org/10.1016/0019-1035(66)90010-8

4. Shklovskii, I.S., and Carl Sagan. Intelligent Life in the Universe. San Francisco, CA, United States of America, Holden-Day, Inc., 1966.

5. Hoyle, Fred, and N. Chandra Wickramasinghe. Lifecloud: The Origin of Life in the Universe. HarperCollins Publishers, 1978.

6. Stirone, S., Chang, K., & Overbye, D. (2020). Life on Venus? Astronomers see a signal in its clouds. The New York Times. https://www.nytimes.com/2020/09/14/science/venus-life-clouds.html

7. Walker SI, et al Exoplanet Biosignatures: Future Directions. Astrobiology. 2018 Jun;18(6):779-824. doi: 10.1089/ast.2017.1738. PMID: 29938538; PMCID: PMC6016573.

8. J. Green, T. Hoehler, M. Neveu, S. Domagal-Goldman, D. Scalice, and M. Voytek, 2021, “Call for a Framework for Reporting Evidence for Life Beyond Earth,” Nature 598:575-579, https://doi.org/10.1038/s41586-021-03804-9.

9. “Independent Review of the Community Report From the Biosignature Standards of Evidence Workshop.” National Academies Press eBooks, 2022, https://doi.org/10.17226/26621.

10. Smith, Harrison B., and Cole Mathis. “Life Detection in a Universe of False Positives.” BioEssays, vol. 45, no. 12, Oct. 2023, https://doi.org/10.1002/bies.202300050.

11. Vickers, Peter, et al. “Confidence of Life Detection: The Problem of Unconceived Alternatives.” Astrobiology, vol. 23, no. 11, Nov. 2023, pp. 1202–12. https://doi.org/10.1089/ast.2022.0084.

12. Foote, Searra, Walker, Sara, et al. “False Positives and the Challenge of Testing the Alien Hypothesis.” Astrobiology, vol. 23, no. 11, Nov. 2023, pp. 1189–201. https://doi.org/10.1089/ast.2023.0005.

13. Tolley, A (2024) “Alien Life or Chemistry? A New Approach”, Centauri Dreams https://www.centauri-dreams.org/2024/01/24/alien-life-or-chemistry-a-new-approach/

14. Tolley, A (2018) “Detecting Life On Other Worlds”, Centauri Dreams https://www.centauri-dreams.org/2018/08/10/detecting-life-on-other-worlds/

15. R C Payne, L Kaltenegger, Oxygen bounty for Earth-like exoplanets: spectra of Earth through the Phanerozoic, Monthly Notices of the Royal Astronomical Society: Letters, Volume 527, Issue 1, January 2024, Pages L151–L155, https://doi.org/10.1093/mnrasl/slad147

16. Ley, Willy, and Wernher Von Braun. The Exploration of Mars. 1956

17. Leong, Y.C., Hughes, B.L., Wang, Y. et al. Neurocomputational mechanisms underlying motivated seeing. Nat Hum Behav 3, 962–973 (2019). https://doi.org/10.1038/s41562-019-0637-z

18. BBC News. “Arsenic-loving Bacteria May Help in Hunt for Alien Life.” BBC News, 2 Dec 2010, https://www.bbc.co.uk/news/science-environment-11886943.

19. Sankaran, Vishwam. “Dyson Spheres: Alien Power Plants May Be Drawing Energy From 7 Stars in the Milky Way.” The Independent, 17 May 2024, https://www.independent.co.uk/space/aliens-dyson-spheres-milky-way-power-plants-b2546601.html.

20. “Astrobiology at Ten.” Nature, vol. 440, no. 7084, Mar. 2006, p. 582. https://doi.org/10.1038/440582a.

21. Nield, David. “Earth Was More Attractive to Aliens Back When Dinosaurs Roamed.” ScienceAlert, 10 Nov. 2023 https://www.sciencealert.com/earth-was-more-attractive-to-aliens-back-when-dinosaurs-roamed.

22. Choi, Charles Q. “Mars Life? 20 Years Later, Debate Over Meteorite Continues.” Space.com, 10 Aug. 2016, https://www.space.com/33690-allen-hills-mars-meteorite-alien-life-20-years.html.

23. Von Braun, Wernher. The Mars Project. University of Illinois Press, 1953.

24. Von Braun, Wernher. Project Mars: A Technical Tale. Apogee Books, 2006.

25. Sinton, W M, “Radiometric Observations of Mars,” Astrophysical Journal, vol. 131, p. 459-469 (1960).

26. Turyshev et al., “Direct Multipixel Imaging and Spectroscopy of an Exoplanet with a Solar Gravity Lens Mission,” Final Report NASA Innovative Advanced Concepts Phase II. https://arxiv.org/abs/2002.11871

27. Greaves, J.S., Richards, A.M.S., Bains, W. et al. “Phosphine gas in the cloud decks of Venus.” Nat Astron 5, 655–664 (2021). https://doi.org/10.1038/s41550-020-1174-4

28. NASA (2010) NASA-Funded Research Discovers Life Built With Toxic Chemical URL: https://www.prnewswire.com/news-releases/nasa-funded-research-discovers-life-built-with-toxic-chemical-111207604.html

29. Saunders, J. K., Fuchsman, C. A., McKay, C., & Rocap, G. (2019). “Complete arsenic-based respiratory cycle in the marine microbial communities of pelagic oxygen-deficient zones.” Proceedings of the National Academy of Sciences, 116(20), 9925-9930. https://doi.org/10.1073/pnas.1818349116

The Physics of Starship Catastrophe

Now that gravitational wave astronomy is a viable means of investigating the cosmos, we’re capable of studying extreme events like the merger of black holes and even neutron stars. Anything that generates ripples in spacetime large enough to spot is fair game, and that would include supernova events and individual neutron stars with surface irregularities. If we really want to push the envelope, we could conceivably detect the proposed defects in spacetime called cosmic strings, which may or may not have been formed in the early universe.

The latter is an intriguing thought, a conceivably observable one-dimensional relic of phase transitions from the beginning of the cosmos that would be on the order of the Planck length (about 10⁻³⁵ meters) in width but lengthy enough to encompass light years. Oscillations in these strings, if indeed they exist, would theoretically generate gravitational waves that could be involved in the large-scale structure of the universe. Because new physics could well lurk in any detection, cosmic strings remain a tantalizing subject for speculation in gravitational wave astronomy.

Remember the resources that are coming into play in this field. In addition to LIGO (Laser Interferometer Gravitational-Wave Observatory), we have KAGRA (Kamioka Gravitational Wave Detector) in Japan and Virgo (named for the Virgo galaxy cluster) in Italy. The LISA observatory (Laser Interferometer Space Antenna) is currently scheduled for a launch some time in the 2030s.

For that matter, could a cosmic string be detected in other ways? One possibility is in any signature it might leave in the cosmic microwave background (CMB). Another, and this seems promising, is the potential for gravitational lensing as light from background objects travels through the distorted spacetime produced by the string. That would be an interesting signature to find, and indeed, one of the exciting aspects of gravitational wave astronomy is speculation on what new phenomena it would allow us to detect.

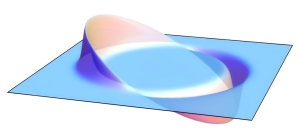

As witness a new paper from Katy Clough (Queen Mary University, London) and colleagues, who ask whether an artificial gravitational event could generate a signal that an observatory like LIGO could detect. Now we nudge comfortably into science fiction, for at issue is what would happen if a starship powered by a warp drive were to experience a malfunction. Given the curvature of spacetime induced by an Alcubierre-style drive, a problem in its operations could be detectable, although not, the team points out, at the frequencies currently observed by LIGO.

An Alcubierre warp drive would produce a spacetime that is truly exotic, but one that can be described within the theory of General Relativity. The speed of light is never exceeded by our starship, thus satisfying Special Relativity, but a craft that can contract spacetime in front of it and expand spacetime behind it would theoretically cross distances faster than the speed of light as witnessed by an outside observer.

Huge problems would be created by such a craft, including some that may be insurmountable. It seems to violate what is known as the Null Energy Condition, for one thing, which demands negative energy seemingly not allowed in standard theories of spacetime. But the authors note that “The requirement that warp drives violate the NEC may be considered a practical rather than fundamental barrier to their construction since NEC violation can be achieved by quantum effects and effective descriptions of modifications to gravity, albeit subject to quantum inequality bounds and other semiclassical considerations that seem likely to prove problematic.”

Image: Two-dimensional visualization of an Alcubierre drive, showing the opposing regions of expanding and contracting spacetime that displace the central region. Credit: AllenMcC., CC BY-SA 3.0

Problematic is a useful word, and it seems appropriate here. It’s also appropriate when we consider that a functioning warp drive raises paradoxical issues with regard to time travel, allowing closed time-like curves (in other words, the possibility of traveling into the past, with all the headaches that causes for causality and our view of reality). That puts us in the realm of rotating black holes and wormholes, powerful gravitational wave generators. The authors also point out that a warp drive would be a difficult thing to control and deactivate, as Miguel Alcubierre himself pointed out in a 2017 paper.

So how would we detect a starship of this variety? The authors note that at constant velocity, an Alcubierre drive spacecraft would not generate gravitational waves, but interesting phenomena would be observed if the drive bubble were to collapse, accelerate or decelerate:

There is (to our knowledge) no known equation of state that would maintain the warp drive metric in a stable configuration over time – therefore, whilst one can require that initially, the warp bubble is constant, it will quickly evolve away from that state and, in most cases, the warp fluid and spacetime deformations will disperse or collapse into a central point….This instability, whilst undesirable for the warp ship’s occupants, gives rise to the possibility of generating gravitational waves.

In other words, a working warp drive craft may well be undetectable, but a prototype that fails could throw an observable signature. The paper homes in on the collapse of a warp drive bubble, which could be created by the breakdown of the containment field that the makers of the starship use to support it. So we have a potential gravitational wave signature for a technological catastrophe as an advanced civilization experiments with the distortion of spacetime for interstellar travel.

Such events are presumably rare. I’m reminded of Greg Benford’s story “Bow Shock,” in which an astronomer studies what he thinks is a runaway neutron star – “a faint finger in maps centered on the plane of the galaxy, just a dim scratch” – that is in fact a technological object. Here’s a clip:

“What you wrote,” she said wonderingly. “It’s a…star ship?”

“Was. It got into trouble of some kind these last few days. That’s why the wake behind it – ” he tapped the Fantis’ image – “got longer. Then, hours later, it got turbulent, and—it exploded.”

She sipped her coffee. “This is…was…light years away?”

“Yes, and headed somewhere else. It was sending out a regular beamed transmission, one that swept around as the ship rotated, every 47 seconds.”

Her eyes widened. “You’re sure?”

“Let’s say it’s a working hypothesis.”

Great scenario for a science fiction story, and there are a number of papers on starship detection from other angles in the scientific literature. In Benford’s case, the starship is thought to be of the Bussard ramjet variety, definitely not moving through warp drive methods. All this reminds me that a survey of starship detection papers is overdue in these pages, and I’ll plan to get to that in coming weeks. But back to warp drives.

Let’s assume things occasionally go wrong at whatever level of technology we’re looking at. We’re witnessing SpaceX actively developing Starship, a craft that gets a little better, and sometimes a lot better, each time it is launched, but development is hard and there are errors along the way. Throw an error into an Alcubierre-style starship and gravitational effects should show up involving nasty tidal outcomes.

To investigate these, Clough and colleagues develop a structured framework to simulate warp bubble collapse and analyze the gravitational wave signatures that would be produced at the point of collapse. Other types of signal may also be produced, but the paper notes: “Since we do not know the type of matter used to construct the warp ship, we do not know whether it would interact (apart from gravitationally) with normal matter as it propagates through the Universe.”

We don’t have equipment tuned to pick up such signals. We have the needed sensitivity in observatories like LIGO, but we would need to tune it to a different range of gravitational waves. The paper continues:

…for a 1km-sized ship, the frequency of the signal is much higher than the range probed by existing detectors, and so current observations cannot constrain the occurrence of such events. However, the amplitude of the strain signal would be significant for any such event within our galaxy and even beyond, and so within the reach of future detectors targeting higher frequencies… We caution that the waveforms obtained are likely to be highly specific to the model employed, which has several known theoretical problems, as discussed in the Introduction. Further work would be required to understand how generic the signatures are, and properly characterise their detectability.
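Why would a kilometer-scale ship fall outside the current band? A rough way to see it is to take one light-crossing time of the bubble as the characteristic timescale of the collapse. The sketch below is an order-of-magnitude estimate under that assumption, not the waveform calculation in the paper. The function name and the detector band limits are illustrative assumptions, with the band taken as roughly 10 Hz to 10 kHz.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum (exact SI value)

# Assumed, approximate band for ground-based interferometers like LIGO.
ASSUMED_DETECTOR_BAND_HZ = (10.0, 10_000.0)


def characteristic_frequency_hz(bubble_size_m: float) -> float:
    """Estimate f ~ c / R, the inverse light-crossing time of the bubble."""
    if bubble_size_m <= 0.0:
        raise ValueError("bubble size must be positive")
    return C_M_PER_S / bubble_size_m


f_1km = characteristic_frequency_hz(1_000.0)
above_band = f_1km > ASSUMED_DETECTOR_BAND_HZ[1]

print(f"1 km bubble: ~{f_1km / 1e3:.0f} kHz, above assumed band: {above_band}")
```

On this estimate a 1 km bubble rings at a few hundred kilohertz, well above the assumed upper edge of the band, which is consistent with the paper's remark that detectors targeting higher frequencies would be needed.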

A funding request to study starships undergoing catastrophic failure is going to be a tough sell. But probing the question produces the formalism developed by the Clough team and gives us further insights into warp drive prospects. Fascinating.

The paper is Clough et al, “What no one has seen before: gravitational waveforms from warp drive collapse” (preprint).

An X-Ray Study of Exoplanet Habitability

Great observatories work together to stretch the boundaries of what is possible for each. Data from the Chandra X-ray Observatory were used in tandem with the James Webb Space Telescope, for example, to observe the death of a star as it was consumed by a black hole. JWST’s infrared look at this Tidal Disruption Event (TDE) helped show the structure of stellar debris in the accretion disk of the black hole, while Chandra charted the high-energy processes at play in the cataclysmic event.

Or have a look at the image below, combining X-ray and infrared data from these two instruments along with the European Space Agency’s XMM-Newton, the Spitzer Space Telescope and optical data from Hubble and the European Southern Observatory’s New Technology Telescope to study a range of targets.

Image: Four composite images deliver dazzling views from NASA’s Chandra X-ray Observatory and James Webb Space Telescope of two galaxies, a nebula, and a star cluster. Each image combines Chandra’s X-rays — a form of high-energy light — with infrared data from previously released Webb images, both of which are invisible to the unaided eye. Data from NASA’s Hubble Space Telescope (optical light) and retired Spitzer Space Telescope (infrared), plus the European Space Agency’s XMM-Newton (X-ray) and the European Southern Observatory’s New Technology Telescope (optical) is also used. These cosmic wonders and details are made available by mapping the data to colors that humans can perceive. Credit: X-ray: Chandra: NASA/CXC/SAO, XMM: ESA/XMM-Newton; IR: JWST: NASA/ESA/CSA/STScI, Spitzer: NASA/JPL/CalTech; Optical: Hubble: NASA/ESA/STScI, ESO; Image Processing: L. Frattare, J. Major, and K. Arcand.

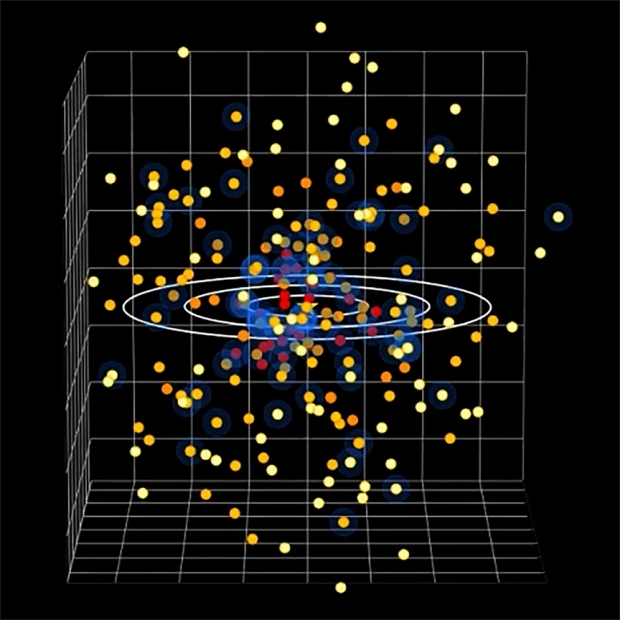

Working at multiple wavelengths obviously pays dividends, and with Chandra in the news because of proposed budget cuts, it’s worth noting that its observations have a role to play in the search for habitable worlds in our own stellar neighborhood. Currently the observatory is being used in conjunction with XMM-Newton in an ongoing exploration of habitability in terms of radiation. Which stars close enough to Earth for us to image planets in their habitable zones are also benign in terms of the radiation bath to which these same planets would be exposed?

High levels of ultraviolet and X-ray radiation can break chemical bonds and damage DNA in biological systems, not to mention their effect in stripping away planetary atmospheres. Thus ionizing radiation becomes a key factor in habitability in the kind of planets whose atmospheres we are first going to be able to study, those orbiting nearby M-dwarfs where the habitable zone is dauntingly close to the parent star.

The new study is led by Breanna Binder (California State Polytechnic University), who told the American Astronomical Society’s recent meeting in Madison, Wisconsin that of some 200 promising target stars, only a third have previously been examined by X-ray telescopes. Of these, many appeared quiet at visible wavelengths but quite active in X-ray emissions. Clearly we need to learn more about this critical variable.

With a generation of Extremely Large Telescopes soon to come online and future space observatories like the Habitable Worlds Observatory in the works, we will be able to take apart light from individual planets in search of biosignatures. Binder’s team will try to identify stars where the X-ray background is not dissimilar to Earth’s, so that the chances of life evolving within a protective atmosphere are enhanced. Ten days of Chandra observations along with 26 days of data from XMM-Newton were collected to home in on the X-ray behavior of some 57 nearby stars, ranging from 4.2 to 62 light years away.

This continuing effort is in a sense a shot in the dark, for while some of the stars are known to have planets, many are not. The necessary geometry of planetary transits means that many stars are likely orbited by habitable zone planets we can’t yet detect, and radial velocity methods are likewise not tuned for planets of this size. Edward Schwieterman (University of California Riverside) points to what comes next:

“We don’t know how many planets similar to Earth will be discovered in images with the next generation of telescopes, but we do know that observing time on them will be precious and extremely difficult to obtain. These X-ray data are helping to refine and prioritize the list of targets and may allow the first image of a planet similar to Earth to be obtained more quickly.”

Image: A three-dimensional map of stars near the Sun that are close enough to Earth for planets in their habitable zones to be directly imaged using future telescopes. Planets in their stars’ habitable zones likely have liquid water on their surfaces. A study with Chandra and XMM-Newton of some of these stars (shown in blue haloes) indicates those that would most likely have habitable exoplanets around them based on a second condition — whether they receive lethal radiation from the stars they orbit. Credit: Cal Poly Pomona/B. Binder; Illustration: NASA/CXC/M.Weiss.

More on this continuing study as results become available. Meanwhile, note in relation to the budgetary woes Chandra is experiencing that a letter to NASA administrator Bill Nelson went out on June 6 from several U.S. senators and members of the House of Representatives urging that restrictions on its funding be rescinded. The letter bears all too few signatures, but it’s at least a step in the right direction. Quoting from it:

Premature termination of the Chandra mission would jeopardize this critical workforce, potentially driving talent to other countries. Chandra should serve as a bridge to a promising future in high energy astrophysics at NASA, including the development of its eventual flagship-scale successor, as recommended by the 2020 Decadal Survey in Astronomy and Astrophysics.

We strongly urge NASA to maintain full FY25 funding for the Chandra mission at the $68.7 million level, as outlined in NASA’s FY24 budget request, and to halt plans for significant reductions in FY25 until Congress determines Chandra’s appropriations. The proposed budget cuts would cause damage to U.S. leadership in high energy astrophysics and prematurely end the mission of a national treasure whose most significant discoveries may still be ahead.

Shutting Down Chandra: Will We Lose Our Best Window into the X-ray Universe?

Our recent discussions of X-ray beaming to propel interstellar lightsails seem a good segue into Don Wilkins’ thoughts on the Chandra mission. Chandra, of course, is not a deep space probe but an observatory, and a revolutionary one at that, with the capability of working at the X-ray wavelengths that allow us to explore supernova remnants, pulsars and black holes, as well as making observations that advance our investigation of dark matter and dark energy. This great instrument swims into focus today because it faces a funding challenge that may result in its shutdown. It’s a good time, then, to take a look at what Chandra has given us since launch, and to consider its significance as efforts to save the mission continue. We should get behind this effort. Let’s save Chandra.

by Don Wilkins

On July 23, 1999, the Chandra X-ray Observatory deployed from Space Shuttle Columbia. Chandra along with the Hubble Space Telescope, Spitzer Space Telescope (decommissioned when its liquid helium ran out) and Compton Gamma Ray Observatory (de-orbited after gyroscope failure), composed NASA’s fleet of ‘Great Observatories,’ so labeled because of their coverage from gamma rays through X-rays, visible light and into the infrared, spanning the electromagnetic spectrum. This scientific fleet expanded – and continues to expand – our understanding of the cosmos.

A two-stage Inertial Upper Stage booster put the observatory into an elliptical orbit. Apogee is approximately 139,000 kilometers (86,500 miles) — more than a third of the distance to the Moon – with perigee at 16,000 kilometers (9,942 miles) and an orbital period of 64 hours and 18 minutes. The numbers are significant: The consequence of this orbit is that about 85 percent of the time, Chandra orbits above the charged particle belts surrounding the Earth, allowing periods of uninterrupted observing time up to 55 hours.
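The orbital figures can be sanity-checked with Kepler's third law. The text does not say whether the apogee and perigee are altitudes above the surface or distances from Earth's center, so this sketch computes the period both ways. The constants (Earth's gravitational parameter and mean radius) are standard values, and the function name is made up for illustration.

```python
from math import pi, sqrt

MU_EARTH_KM3_S2 = 398_600.4418  # Earth's gravitational parameter
R_EARTH_KM = 6_371.0            # mean Earth radius


def orbital_period_hours(apogee_km: float, perigee_km: float,
                         measured_from_surface: bool) -> float:
    """Kepler's third law: T = 2*pi*sqrt(a^3 / mu) for an elliptical orbit."""
    offset = R_EARTH_KM if measured_from_surface else 0.0
    semi_major_axis_km = (apogee_km + perigee_km) / 2.0 + offset
    return 2.0 * pi * sqrt(semi_major_axis_km**3 / MU_EARTH_KM3_S2) / 3600.0


QUOTED_PERIOD_H = 64.0 + 18.0 / 60.0  # 64 hours 18 minutes, from the text

as_altitudes = orbital_period_hours(139_000.0, 16_000.0, True)
as_geocentric = orbital_period_hours(139_000.0, 16_000.0, False)
observing_fraction = 55.0 / QUOTED_PERIOD_H  # 55 h of observing per orbit

print(f"period if figures are altitudes:  {as_altitudes:.1f} h")
print(f"period if figures are geocentric: {as_geocentric:.1f} h")
print(f"55 h as a fraction of the orbit:  {observing_fraction:.1%}")
```

The two readings bracket the quoted period, which suggests the published numbers are rounded or refer to slightly different epochs of an orbit that changes over time. The 55 hours of uninterrupted observing works out to roughly 85 percent of the quoted period, matching the figure given above.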

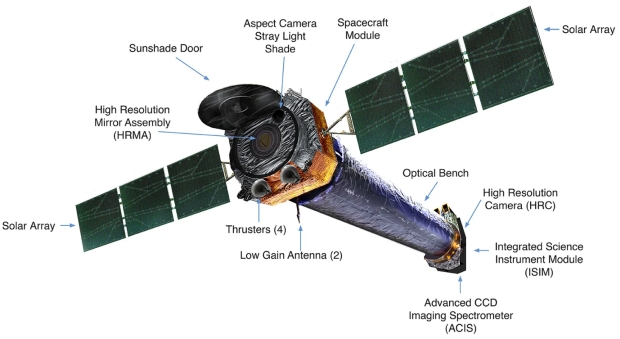

Figure 1. The Chandra Observatory monitors X-rays, a critical and unique window into the hottest and most energetic places in the Universe. Credit: NASA.

A Huge Return on Investment

Chandra’s achievements include:

- The first image of the compact object, possibly a neutron star or black hole, at the center of the supernova remnant Cassiopeia A.

- The detection of jets from and a ring around the Crab Nebula.

- The detection of X-ray emitting loops, rings and filaments encircling Messier 87’s supermassive black hole.

- The discovery, by high school students, of a neutron star in the supernova remnant IC 443.

- The detection of X-ray emissions from Sagittarius A*, the black hole at the center of the Milky Way. Chandra observed a Sagittarius A* X-ray flare 400 times brighter than normal, and found a large halo of hot gas surrounding the Milky Way.

- The X-ray observation of the shock wave from supernova SN 1987A.

- The observation of streams of high-energy particles light years in length flowing from neutron stars.

- Observations of planets within the Solar System found X-rays associated with Uranus that may not be the reflection of solar X-rays. Chandra also found that Jupiter’s X-ray emissions occur at its poles, and it detected X-ray emissions from Pluto.

- Found a dip in X-ray intensity as the planet HD 189733b transited its parent star. This is the first time a transit of an exoplanet was observed using X-rays.

- Detected the shadow of a small galaxy as it is cannibalized by a larger one.

- Found a mid-mass black hole, between stellar-sized black holes and galactic core black holes, in M82.

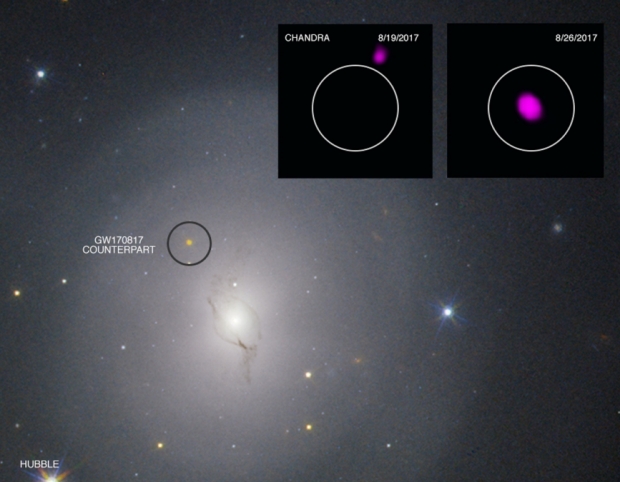

- Associated X-rays with the gamma ray burst GRB 991216 (Beethoven Burst). Chandra observations also associated X-rays with a gravitational wave source (Figure 2).

- Produced controversial data suggesting RX J1856.5-3754 and 3C58 are quark stars.

- Measured the Hubble constant as 76.9 km/s per megaparsec (a megaparsec is equal to 3.26 million light years).

- Found strong evidence dark matter exists by observing super cluster collisions. Observations placed limits on dark matter self-interaction cross-sections.

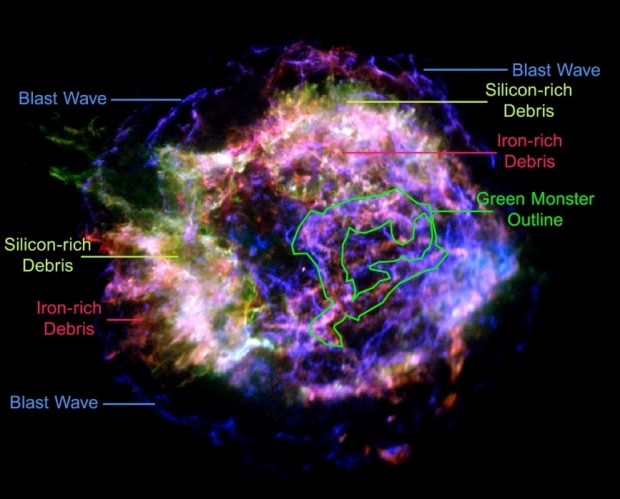

- Combined with the outputs of other telescopes, yielded insights into astronomical phenomena (Figure 3).

Building Chandra was a significant technical challenge. The mirrors are aligned, from one end of the mirror assembly to the other, a distance of 2.7 meters or 9 feet, to within 1.3 micrometers (50 millionths of an inch). X-rays are focused using complex geometrical shapes which must be precisely formed and kept ultraclean. Even today, no X-ray telescope provides the sharpness of image that Chandra does.

Figure 2. Chandra made the first X-ray detection of a gravitational wave source. The inset shows both the Chandra non-detection, or upper limit, of X-rays from GW170817 and its subsequent detection. The main panel is the Hubble image of NGC 4993. Credit: NASA.

Figure 3. The image is a combination of the outputs of three telescopes. Webb highlights infrared emission from dust, Chandra shows the X-ray emission, and Hubble shows stars in the field. Credit: NASA/CXC/SAO

Chandra’s Demise?

This list of discoveries may be ending. Although Chandra is estimated to have another decade of operation left, proposed budget cuts eliminate the $74 million necessary for Chandra operation, maintenance and analysis of data. Unless the funding is restored by way of a budget line item directing NASA to spend the funds on Chandra, the agency’s X-ray observation would be halted for years until a new telescope could be brought online. The skills of highly talented scientists and engineers working on Chandra would be dissipated.

The problem is significant. NASA’s own NuSTAR instrument (Nuclear Spectroscopic Telescope Array) and the joint NASA/JAXA XRISM effort (X-Ray Imaging and Spectroscopy Mission) have their virtues, as does the European Space Agency’s XMM-Newton (X-ray Multi-Mirror Mission). But Chandra’s eyes are sharper, with imagery 10 times crisper than XMM-Newton’s, 30 times sharper than NuSTAR’s, and fully 150 times sharper than XRISM’s.
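To put those ratios in angular terms, here is a minimal sketch that turns them into implied resolutions. It assumes Chandra's on-axis angular resolution is roughly 0.5 arcseconds, a commonly quoted figure that does not appear in this article. The other numbers follow only from the ratios above and are not official instrument specifications, and the names `CHANDRA_ARCSEC`, `SHARPNESS_RATIO` and `implied_resolution_arcsec` are purely illustrative.

```python
# Assumption (not from the article): Chandra resolves about 0.5 arcseconds on-axis.
CHANDRA_ARCSEC = 0.5

# "Times sharper" factors exactly as quoted in the text above.
SHARPNESS_RATIO = {"XMM-Newton": 10, "NuSTAR": 30, "XRISM": 150}


def implied_resolution_arcsec(chandra_arcsec, ratios):
    """Scale Chandra's resolution by each quoted ratio.

    A telescope that is N times less sharp blurs a point source over an
    angle roughly N times larger, so the implied resolution is a product.
    """
    return {name: chandra_arcsec * ratio for name, ratio in ratios.items()}


implied = implied_resolution_arcsec(CHANDRA_ARCSEC, SHARPNESS_RATIO)
for name, arcsec in implied.items():
    print(f"{name}: roughly {arcsec:g} arcseconds")
```

Under that assumption, a source Chandra can pin down to half an arcsecond would be smeared over more than an arcminute by XRISM, which is why the other observatories complement Chandra's imaging rather than replace it.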

A possible successor is in the planning stages. With 100 times Chandra’s resolution, the Lynx X-ray Observatory could study individual stars more than 16,000 light-years away, or 12.5 times the range of Chandra, using an X-ray Mirror Assembly that is the most powerful X-ray optic ever conceived. Even so, it is probable three decades or more would elapse before the new observatory would orbit and it might well involve significant cost overruns. ESA plans the Athena mission (Advanced Telescope for High ENergy Astrophysics), a large-aperture grazing-incidence X-ray telescope, but Athena would not come online until the late 2030s. In the meantime, we have a healthy Chandra.

Although we are dealing with a 25-year-old spacecraft, its efficiency is both high and stable, and enough fuel remains for another decade of operations, while the cost of operations has remained stable for decades. Considered as a bridge to Lynx, Chandra is at the mercy of Congress, largely through the FY25 appropriations process. The particulars of how to support this great observatory are available on the Save Chandra website, which is the voice of a grassroots effort within the astronomical community.

Saving Chandra would avert what some are describing as “an extinction-level-event for X-ray astronomy in the US.” An open community letter with 87 pages of signatures, recently submitted to NASA Science Leadership, strongly supports this statement.