Centauri Dreams

Imagining and Planning Interstellar Exploration

Stitching the Stars: Graphene’s Fractal Leap Toward a Space Elevator

The advantages of a space elevator have been percolating through the aerospace community for quite some time, particularly boosted by Arthur C. Clarke’s novel The Fountains of Paradise (1979). The challenge is to create the kind of material that could make such a structure possible. Today, long-time Centauri Dreams reader Adam Kiil tackles the question with his analysis of a new concept in producing graphene, one which could allow us to create the extraordinarily strong cables needed. Adam is a satellite image analyst located in Perth, Australia. While he has nursed a long-time interest in advanced materials and their applications, he also describes himself as a passionate advocate for space exploration and an amateur astronomer. Today he invites readers to imagine a new era of space travel enabled by technologies that literally reach from Earth to the sky.

by Adam Kiil

In the quiet predawn hours, a spider spins its web, threading together a marvel of biological engineering: strands that are lightweight, elastic, and capable of absorbing tremendous energy before failing. This isn’t just nature’s artistry; it’s a lesson in hierarchical design, where proteins self-assemble into beta-pleated sheets and amorphous regions, creating a material tougher than Kevlar — able to dissipate impacts like a shock absorber — while outperforming steel in strength-to-weight ratio, though falling short of Kevlar’s raw tensile strength.

As we gaze upward toward the stars, dreaming of bridges to orbit, such bio-inspired ingenuity beckons. Could we mimic this to construct a space elevator tether, a ribbon stretching 100,000 kilometers from Earth’s equator to geostationary orbit and beyond? The demands are staggering: a material with a specific strength exceeding 50 GPa·cm³/g to support its own weight against gravity’s pull, all while withstanding radiation, micrometeorites, and immense tensile stresses. [GPa stands for gigapascals, the unit of pressure and stress used here to express tensile strength. GPa·cm³/g is thus strength divided by density, or specific strength.]

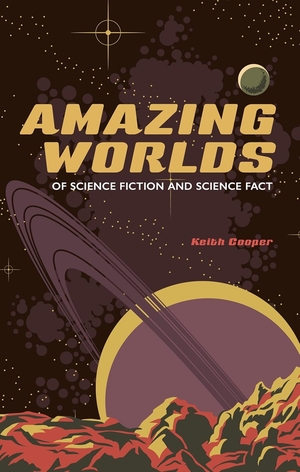

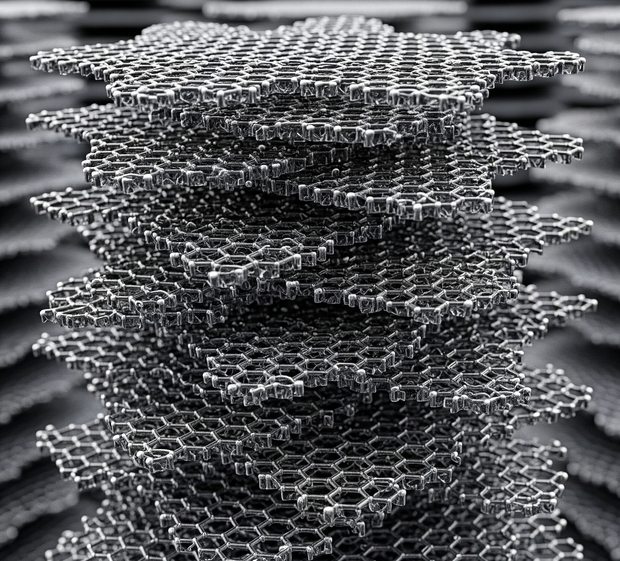

Image: A space elevator is a revolutionary transportation system designed to connect Earth’s surface to geostationary orbit and beyond, utilizing a strong, lightweight cable (potentially made of graphene due to its extraordinary tensile strength and low density) anchored to an equatorial base station and extending tens of thousands of kilometers to a counterweight in space. This megastructure would enable low-cost, efficient transport of payloads and people into orbit, leveraging a climber mechanism that ascends the cable, potentially transforming space access by reducing reliance on traditional rocket launches. Credit: Pat Rawlings/NASA.

Enter a recent breakthrough in graphene production from Professor Chris Sorensen at Kansas State University and Vancouver-based HydroGraph Clean Power, whose detonation synthesis yields pristine, fractal, and reactive graphene, potentially a key ingredient in weaving this cosmic thread.

But this alone may not suffice; we must think from first principles, exploring uncharted solutions to assemble nanoscale wonders into macroscale might.

Graphene’s Promise and Perils: The Historical Context

Graphene, that atomic-thin honeycomb of carbon, tantalizes with its theoretical tensile strength of 130 GPa and density of 2.2 g/cm³, yielding a specific strength around 59 GPa·cm³/g—right on the cusp of space elevator viability.

Yet, production has long been the bottleneck. Chemical vapor deposition churns out high-quality but limited sheets; mechanical exfoliation delivers impure, aggregated flakes. These yield composites where graphene platelets, bound weakly by van der Waals forces (mere 0.1-1 GPa), slip under strain, like loose pages in a book. For a tether, we need seamless load transfer, hierarchical reinforcement, and defect minimization—echoing the energy-dissipating nanocrystals in spider silk’s protein matrix.
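The arithmetic behind the 59 GPa·cm³/g figure is easy to check. The short Python sketch below is illustrative only: it divides the quoted strength (130 GPa) by the density (2.2 g/cm³), notes that 1 GPa·cm³/g is the same as 1 MJ/kg, and adds a "breaking length", the length of uniform cable that would snap under its own weight. The function names are hypothetical, and the constant 1 g gravity is a deliberate simplifying assumption.

```python
"""Specific strength and constant-gravity breaking length (illustrative sketch)."""

G0 = 9.80665  # standard gravity, m/s^2 (assumption: held constant along the cable)


def specific_strength(strength_gpa: float, density_g_cm3: float) -> float:
    """Strength divided by density, in GPa·cm^3/g.

    Because 1 GPa / (1 g/cm^3) = 1e9 Pa / 1e3 kg/m^3 = 1e6 J/kg, the same
    number is also the specific strength in MJ/kg.
    """
    return strength_gpa / density_g_cm3


def breaking_length_km(strength_gpa: float, density_g_cm3: float) -> float:
    """Length of a uniform cable that breaks under its own weight at constant 1 g."""
    spec_j_per_kg = specific_strength(strength_gpa, density_g_cm3) * 1e6
    return spec_j_per_kg / G0 / 1000.0  # metres -> kilometres


if __name__ == "__main__":
    # Figures quoted in the text for defect-free graphene.
    s = specific_strength(130.0, 2.2)
    length = breaking_length_km(130.0, 2.2)
    print(f"specific strength: {s:.1f} GPa·cm^3/g")
    print(f"breaking length at constant 1 g: {length:,.0f} km")
```

Run as a script, it reports about 59.1 GPa·cm³/g and a breaking length of roughly 6,000 km. Because gravity falls off with altitude and Earth's rotation offsets it, that constant-1 g figure is only a yardstick and badly understates how far such a cable could reach in practice.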

Sorensen’s Detonation Concept: Fractal and Reactive Graphene

Chris Sorensen’s innovation at HydroGraph Clean Power flips the script. Using a controlled detonation of acetylene and oxygen in a sealed chamber, his team produces graphene with over 99.8% purity, fractal morphology, and tunable reactivity—all at scale, with zero waste and low emissions.

The fractal form — branched, snowflake-like platelets with 200 m²/g surface area — enhances interlocking, outperforming traditional graphene by 10-100 times in composites, but crucially, these gains shine at ultra-low loadings (0.001%) and under modest stresses, not yet the gigapascal realms of a space elevator.

Reactive variants add edge functional groups like carboxylic acids (COOH), enabling covalent bonding; note, though, that simple condensation reactions here yield strengths akin to polymer chains (1-5 GPa), not the in-plane prowess of graphene’s sp² lattice.

This fractal graphene could form a foundational scaffold, reconfigurable into aligned structures that mimic bone’s porosity or silk’s hierarchy. Earthly spin-offs abound: tougher concrete, sensitive sensors, efficient batteries. But for the stars, we must bridge the gap from nanoplatelets to kilometer-long cables.

Image: Conceptual view of HydroGraph’s turbostratic 50 nm nanoplatelets: 99.8% pure carbon, sp²-bonded graphene. Credit: Adam Kiil.

From First Principles: Many Paths to a Cosmic Thread

To transcend these limits, let’s reason from fundamentals. A space elevator tether must maximize tensile strength while minimizing density and defects, distributing stress across scales like spider silk’s beta-sheets (crystalline strength) embedded in an extensible amorphous matrix.

Graphene’s strength derives from its delocalized electrons in a defect-free lattice; any assembly must preserve this while forging inter-platelet bonds rivaling intra-platelet ones. Current methods fall short, so here are myriad speculative solutions, drawn from physics, chemistry, and biology—some extant, others nascent or hypothetical, demanding innovation:

- Edge-Fusion via Plasma or Laser Annealing: Functionalize edges with hydrogen or halogens, then use plasma arcs or femtosecond lasers to fuse platelets into seamless, extended sheets or ribbons, healing defects to approach single-crystal continuity. This could yield tensile strengths nearing 100 GPa by eliminating weak interfaces.

- Supramolecular Self-Assembly in Liquid Crystals: Disperse fractal graphene in nematic solvents, applying shear or electric fields to align platelets into helical fibrils, stabilized by pi-pi stacking and hydrogen bonding. Inspired by silk’s pH-induced assembly, this bottom-up approach might create defect-tolerant bundles with built-in energy dissipation.

- Bio-Templating with Engineered Proteins: Design peptides (via AI like AlphaFold) that bind graphene edges, mimicking silk spidroins’ repetitive motifs to fold platelets into hierarchical nanocrystals. Extrude through microfluidic spinnerets, acidifying to trigger beta-sheet formation, embedding graphene in a tough, elastic matrix.

- Covalent Cross-Linking with Boron or Nitrogen Dopants: Introduce boron atoms during detonation to create sp³ bridges between platelets, forming diamond-like nodes in a graphene network. This could boost shear strength to 10-20 GPa without sacrificing tensile properties, a prediction that molecular dynamics simulations would need to verify.

- Electrospinning with Magnetic Alignment: Mix reactive graphene in a polymer dope, electrospin under magnetic fields to orient platelets, then pyrolyze the polymer, leaving aligned, sintered graphene fibers. Enhancements: Add ultrasonic waves for dynamic packing, targeting <1 defect per 100 nm².

- Hierarchical Bundling via 3D Printing: Nanoscale print graphene inks layer-by-layer, using click chemistry (e.g., thiol-ene) for instant cross-links. Scale up to micro-bundles, then macro-cables, tapering density like a tree trunk to root.

- Dynamic Compression and Sintering: Apply gigapascal pressures in a diamond anvil cell, combined with heat, to induce partial sp²-to-sp³ transitions at overlaps, creating hybrid structures akin to lonsdaleite—ultra-hard yet flexible.

- Biomineralization Analogs: Introduce calcium or silica ions to reactive groups, mineralizing interfaces like nacre, adding compressive strength and crack deflection.

- AI-Optimized Hybrid Composites: Simulate (via quantum computing) blends of fractal graphene with silk-mimetic polymers or boron nitride, optimizing ratios for 90% tensile efficiency. Fabricate via wet-spinning, testing at centimeter scales.

These aren’t exhaustive; hybrids abound, such as combining bio-templating with laser fusion. All share one aim: moving beyond low-load enhancements and polymer-like bonds to harness graphene’s full lattice strength.

Weaving and Laminating: Practical Steps Forward

Drawing from these, a viable process might start with a high-solids dispersion of reactive fractal graphene, extruded via wet-spinning into aligned fibers, where optimized cross-linkers (not mere condensations) ensure graphene-dominant strength. Stack the fibers into nacre-like laminates, using high-pressure, high-temperature pressing (5-20 GPa) to forge sp³ bonds, elevating shear (and thus overall tensile) resilience to 10-20 GPa. Taper the structure for working stresses of around 7 GPa: thickest at geostationary orbit, where the tether’s tension peaks, and thinning toward both the ground anchor and the counterweight.

Scaling leverages HydroGraph’s modular reactors, producing tonnage graphene for kilometer segments.

Join segments via overlap lamination, braid them for redundancy, and deploy from orbit. Prototypes must demonstrate cohesive failure and >90% load transfer, verified by nanoindentation.
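Why does a specific strength near 50 GPa·cm³/g matter so much? A standard way to see it is the taper ratio of a constant-stress tether. Because net downward pull (gravity minus the centrifugal term) acts everywhere below geostationary orbit, tension peaks at GEO, and the cross-section there must exceed the ground cross-section by a factor that depends exponentially on density divided by working stress. The Python sketch below assumes a uniform material held at one constant working stress and ignores climbers, the counterweight segment above GEO and any safety factor. It uses standard values for Earth's gravitational parameter, equatorial radius and rotation rate; the function names are illustrative, not taken from any particular design study.

```python
"""Taper ratio of a constant-stress space-elevator tether (illustrative sketch).

Assumptions: uniform material at one constant working stress, no climbers,
no safety factor, counterweight segment above GEO ignored.
"""
import math

GM_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.378137e6       # equatorial radius, m
OMEGA = 7.2921159e-5       # sidereal rotation rate, rad/s
R_GEO = (GM_EARTH / OMEGA**2) ** (1.0 / 3.0)  # geostationary radius, about 42,164 km


def effective_potential(r: float) -> float:
    """Gravitational plus centrifugal potential per unit mass (J/kg), rotating frame."""
    return -GM_EARTH / r - 0.5 * OMEGA**2 * r**2


def potential_climb() -> float:
    """Potential difference from the equator to GEO, in J/kg (about 48 MJ/kg)."""
    return effective_potential(R_GEO) - effective_potential(R_EARTH)


def taper_ratio(specific_strength_gpa_cm3_g: float) -> float:
    """Cross-section at GEO divided by cross-section at the ground.

    Force balance sigma * dA/dr = rho * A * (GM/r^2 - omega^2 * r) integrates to
    A(r_geo) / A(R) = exp((rho / sigma) * [Phi(r_geo) - Phi(R)]).
    Specific strength in GPa·cm^3/g equals sigma/rho in MJ/kg.
    """
    sigma_over_rho = specific_strength_gpa_cm3_g * 1e6  # J/kg
    return math.exp(potential_climb() / sigma_over_rho)


if __name__ == "__main__":
    for s in (59.1, 50.0, 29.5):
        print(f"{s:5.1f} GPa·cm^3/g -> taper ratio {taper_ratio(s):.2f}")
```

At 59.1 GPa·cm³/g the ratio comes out a little below 2.3; halving the working stress to about 29.5 GPa·cm³/g for a safety margin raises it to roughly 5. Because the exponent scales as one over specific strength, every loss of strength in assembly compounds quickly, which is why inter-platelet bonding matters so much.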

A Bridge to the Cosmos

Sorensen’s detonation-born graphene, fractal and reactive, ignites possibility. Yet, as spider silk teaches, true mastery lies in hierarchy and adaptation.

Success means a tether with inter-platelet bond strength nearing single-crystal graphene (>100 GPa), verified by nanoindentation or pull-out tests, with >90% tensile transfer efficiency. Centimetre-scale prototypes should show minimal defects (<1 per 100 nm² via TEM), failing cohesively, not delaminating, like a spider’s web holding under a gale. The full tether, massing under 500 tonnes, could be deployed from orbit, a lifeline to the cosmos. This graphene tether embodies our ‘sea-longing,’ a bridge to the stars woven from carbon’s hexagons, inspired by nature’s spinners and builders.

By innovating from first principles—fusing, assembling, templating—we edge closer to stitching the stars. This isn’t mere materials science; it’s the warp and weft of humanity’s interstellar tapestry, a web to catch the dreams of Centauri and beyond.

3I/ATLAS: The Case for an Encounter

The science of interstellar objects is moving swiftly. Now that we have the third ‘interloper’ into our Solar System (3I/ATLAS), we can consider how many more such visitors we’re going to find with new instruments like the Vera Rubin Observatory, with its full-sky images from Cerro Pachón in Chile. As many as 10,000 interstellar objects may pass inside Neptune’s orbit in any given year, according to information from the Southwest Research Institute (SwRI).

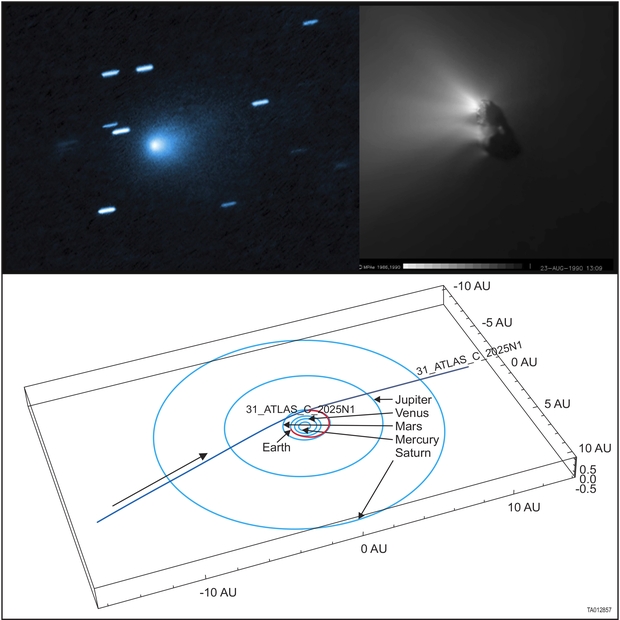

The Gemini South Observatory, likewise at Cerro Pachón, has used its Gemini Multi-Object Spectrograph (GMOS) to produce new images of 3I/ATLAS. The image below was captured during a public outreach session organized by the National Science Foundation’s NOIRLab and the Shadow the Scientists initiative that seeks to connect citizen scientists with high-end observatories.

Image: Astronomers and students working together through a unique educational initiative have obtained a striking new image of the growing tail of interstellar Comet 3I/ATLAS. The observations reveal a prominent tail and glowing coma from this celestial visitor, while also providing new scientific measurements of its colors and composition. Credit: Gemini Observatory/NSF NOIRLab.

Immediately obvious is the growing size of the coma, the cloud of dust and gas enveloping the nucleus as 3I/ATLAS moves closer to the Sun and continues to warm. Analyzing spectroscopic data will allow scientists to understand more about the object’s chemistry. So far we’re seeing cometary dust and ice not dissimilar to comets in our own system. We won’t have this object long, as its orbit is hyperbolic, taking it inside the orbit of Mars and then off again into interstellar space. Perihelion should occur at the end of October. It’s interesting to consider, as Marshall Eubanks and colleagues do in a new paper, whether we already have spacecraft that can learn something further about this particular visitor.
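The hyperbolic orbit is what makes 3I/ATLAS a one-time visitor. For a hyperbola the eccentricity e exceeds 1, and the speed the object keeps far from the Sun (the hyperbolic excess speed) follows from the perihelion distance q via v∞² = μ(e − 1)/q, while the vis-viva equation gives the perihelion speed as v_q² = μ(1 + e)/q. The Python sketch below assumes approximate early orbit-solution values of e ≈ 6.1 and q ≈ 1.36 AU for 3I/ATLAS; those numbers do not come from this post and should be checked against a current orbit solution.

```python
"""Speeds on a hyperbolic (unbound) solar orbit (illustrative sketch)."""
import math

MU_SUN = 1.32712440018e20  # Sun's gravitational parameter, m^3/s^2
AU = 1.495978707e11        # astronomical unit, m


def v_infinity_km_s(e: float, q_au: float) -> float:
    """Hyperbolic excess speed: v_inf^2 = mu * (e - 1) / q."""
    if e <= 1.0:
        raise ValueError("e <= 1 means a bound orbit with no excess speed")
    return math.sqrt(MU_SUN * (e - 1.0) / (q_au * AU)) / 1000.0


def perihelion_speed_km_s(e: float, q_au: float) -> float:
    """Vis-viva at perihelion: v_q^2 = mu * (1 + e) / q (valid for any conic)."""
    return math.sqrt(MU_SUN * (1.0 + e) / (q_au * AU)) / 1000.0


if __name__ == "__main__":
    # Approximate values for 3I/ATLAS, assumed here; check a current orbit solution.
    e, q_au = 6.1, 1.36
    print(f"v_inf ~ {v_infinity_km_s(e, q_au):.1f} km/s")
    print(f"perihelion speed ~ {perihelion_speed_km_s(e, q_au):.1f} km/s")
```

With those assumed inputs the excess speed comes out near 58 km/s and the perihelion speed near 68 km/s, which helps explain why the SwRI study described below envisions a high-speed flyby rather than a rendezvous.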

Note this from the paper (citation below):

Terrestrial observations from Earth will be difficult or impossible roughly from early October through the first week of November, 2025… [T]he observational burden during this period will, to the extent that they can observe, largely fall on the Psyche and Juice spacecraft and the armada of spacecraft on and orbiting Mars. Our recommendation is that attempts should be made to acquire imagery from encounter spacecraft during the entire period of the passage of 3I through the inner solar system, and in particular from the period in October and November of 2025, when observations from Earth and the space telescopes will be limited by 3I’s passage behind the Sun from those vantage points.

As we consider future interstellar encounters, flybys begin to look possible. Such was the conclusion of an internal research study performed at SwRI, which examined costs and design possibilities for a mission that may become a proposal to NASA. SwRI was working with software that could create a large number of simulated interstellar objects, while at the same time calculating a trajectory from Earth to each. Matthew Freeman is project manager for the study. It turns out that the new visitor is itself within the study’s purview:

“The trajectory of 3I/ATLAS is within the interceptable range of the mission we designed, and the scientific observations made during such a flyby would be groundbreaking. The proposed mission would be a high-speed, head-on flyby that would collect a large amount of valuable data and could also serve as a model for future missions to other ISCs [interstellar comets].”

Image: Upper left panel: Comet 3I/ATLAS as observed soon after its discovery. Upper right panel: Halley’s comet’s solid body as viewed up close by ESA’s Giotto spacecraft. Lower panel: The path of comet 3I/ATLAS relative to the planets Mercury through Saturn and the SwRI mission interceptor study trajectory if the mission were to be launched this year. The red arc in the bottom panel is the mission trajectory from Earth to interstellar comet 3I/ATLAS. Courtesy of NASA/ESA/UCLA/MPS.

So we’re beginning to undertake the study of actual objects from other stellar systems, and considering the ways that probes on fast flyby missions could reach them. 3I/ATLAS thus makes the case for further studies of flyby missions. SwRI’s Mark Tapley, an expert in orbital mechanics, is optimistic indeed:

“The very encouraging thing about the appearance of 3I/ATLAS is that it further strengthens the case that our study for an ISC mission made. We demonstrated that it doesn’t take anything harder than the technologies and launch performance like missions that NASA has already flown to encounter these interstellar comets.”

The paper on a fast flyby mission to an interstellar object is Eubanks et al., “3I/ATLAS (C/2025 N1): Direct Spacecraft Exploration of a Possible Relic of Planetary Formation at ‘Cosmic Noon,’” available as a preprint.

Ancient Life on Ceres?

We keep going through revolutions in the way science fiction writers handle asteroids. The discovery of Ceres in 1801 and of Pallas in 1802 spawned the notion that a planet once existed where what came to be thought of as the asteroid belt now lies. Heinrich Olbers was thinking of the Titius-Bode law when he suggested this, pointing to the mathematical regularity of planetary orbits implied by that now-discredited rule. Robert Cromie wrote a novel called The Crack of Doom in 1895 that imagined a fifth planet blown apart by futuristic warfare, a notion picked up by many early science fiction writers.

Nowadays, that notion seems quaint, and asteroids more commonly appear in later SF either as resource stockpiles or terraformed habitats, perhaps hollowed out to become starships. Nonetheless, there was a flurry of interest in asteroids as home to extraterrestrial life in the 1930s (thus Clark Ashton Smith’s “Master of the Asteroid”), and actually none other than Konstantin Tsiolkovsky wrote an even earlier work called “On Vesta” (1896), where he imagines a technological civilization emerging on the asteroid. Before someone writes to scold me for leaving it out, I have to add that Antoine de Saint-Exupéry’s The Little Prince (1943) assumes an asteroid as the character’s home world.

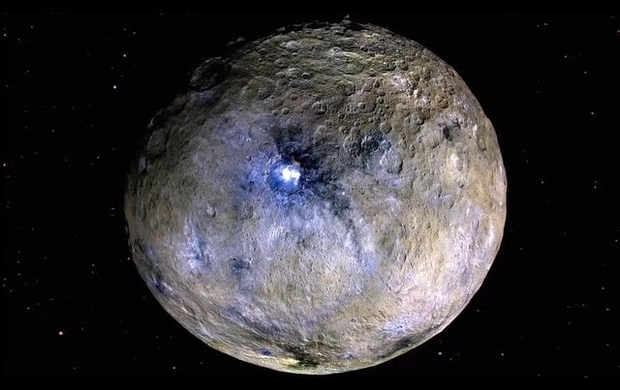

The Dawn mission that did so much to reveal the surface of Ceres made it clear how cold and inimical to life this largest of all asteroids seems to be. But even here, there is new speculation about a warmer past and the possibility of at least primitive life emerging under the frigid surface. The reservoir of interior salty water whose surface residue can be seen in Ceres’ reflective regions now appears, via Dawn data, to contain carbon molecules. So we have water, carbon molecules and a likely source of chemical energy here.

Image: Dwarf planet Ceres is shown in these enhanced-color renderings that use images from NASA’s Dawn mission. New thermal and chemical models that rely on the mission’s data indicate Ceres may have long ago had conditions suitable for life. Credit: NASA/JPL-Caltech/UCLA/MPS/DLR/IDA.

The new work from lead author Samuel Courville (Arizona State University) and team is built around computer models of Ceres, tracing its chemistry and thermal evolution since the early days of the Solar System. The models suggest that as late as 2.5 billion years ago, radioactive decay of elements deep in the interior was still heating the rocky core, and hot water circulating through those rocks carried dissolved gases up into the internal ocean.

That’s a significant marker, because we know that here on Earth, mixing hot water, metamorphic rock and ocean provides everything life needs. Demonstrating that Ceres once had hydrothermal fluid feeding into its ocean would imply that microbes could have formed there, even if today they are long gone. Today heat from radioactive decay should be insufficient to keep Ceres’ water from turning into a concentrated brine, but the situation several billion years ago was similar to that of many Solar System objects which lack any source of tidal heating because they do not orbit a large planet.

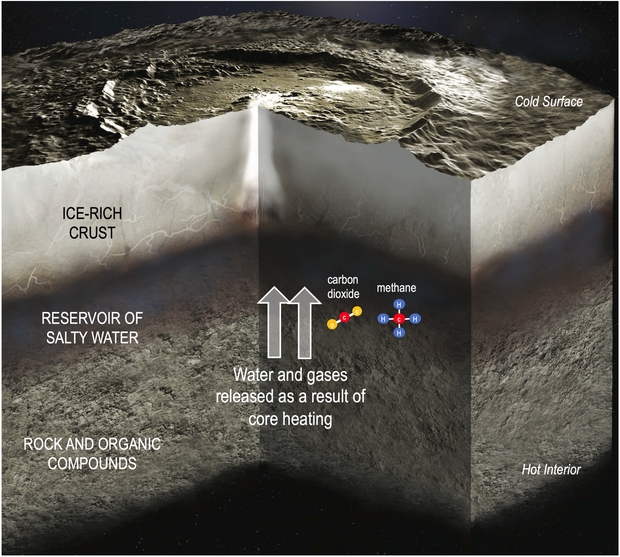

Image: This illustration depicts the interior of dwarf planet Ceres, including the transfer of water and gases from the rocky core to a reservoir of salty water. Carbon dioxide and methane are among the molecules carrying chemical energy beneath Ceres’ surface. Credit: NASA/JPL-Caltech.

From the paper:

Since Ceres is not subject to as many complex evolutionary factors (e.g., tidal heating) as are many other candidate ocean worlds that orbit gas giants, it is an ideal body to study evolutionary pathways relevant to candidate ocean worlds in the ~500- to 1000-km radius range. Being in large numbers, these bodies might represent the most abundant type of habitable environment in the early solar system.

An interesting thought! We can even speculate on how long this window of astrobiological opportunity might have remained open:

From a chemical energy perspective, the most habitable periods for these objects were when the rocky interiors underwent thermal metamorphism. Metamorphism leads to an influx of fluids into the ocean. These fluids could provide a steady source of chemical disequilibrium for several hundred million years. In the case of Ceres, the metamorphic period between ~0.5 and 2 Gyr would have created a potentially habitable environment at the seafloor if the rocky mantle reached temperatures greater than ~700 K. The decreasing temperature of Ceres’s interior over the past ~1 Gyr would likely render it thermodynamically inactive at present.

A sample return mission to Ceres? Such is recommended as a New Frontiers mission in the decadal survey Origins, Worlds, and Life: A Decadal Strategy for Planetary Science and Astrobiology 2023–2032 (The National Academies Press, 2022). The document cites the need for samples “…collected from young carbonate salt deposits, typified by those identified by the Dawn mission at Occator crater, as well as some of Ceres’s typical dark materials.”

The paper is Courville et al., “Core metamorphism controls the dynamic habitability of mid-sized ocean worlds—The case of Ceres,” Science Advances Vol. 11 Issue 34 (20 August 2025). Full text.

Amazing Worlds: A Review

I hardly ever watch a film version of a book I love because my mental images from the book get mangled by the filmmaker’s vision. There’s also the problem of changes to the plot, since film and novels are entirely different kinds of media. The outliers, though, are interesting (and I sure did love Blade Runner). And when I heard that AppleTV would do Asimov’s Foundation books, I resolved to watch because I was satisfied there was no way on Earth my book images would conflict with what a filmmaker might do. How could anyone possibly produce a film version of these books?

Judging from the comments I see online, a lot of people realize how remote the AppleTV series is from the source. But here we get into something interesting about the nature of science fiction, and it’s something I have been thinking about since reading Keith Cooper’s book Amazing Worlds of Science Fiction and Science Fact. For the streaming variant of Foundation is visually gorgeous, and it pulls a lot of taut issues out of what I can only describe as the shell or scaffolding of the Asimov titles. Good science fiction is organic, and can grow into productive new directions.

A case in point: The ‘moonshrikes.’ They may not be in the books, but what a marvelous addition to the story. These winged creatures the size of elephants take advantage of another science fictional setting, a ‘double planet,’ two worlds so tightly bound that their atmospheres mix. You may remember Robert Forward playing around with this idea in his novel Rocheworld (1990), and to my knowledge that is the fictional origin of what appears to be a configuration well within the laws of physics. In the streaming Foundation, a scene where Hari Seldon watches moonshrikes leaping off cliffs to soar into the sky and graze on the sister world is pure magic.

Keith Cooper is all about explaining how this kind of magic works, and he goes at the task in both literary and filmed science fiction. Because the topic is the connection between real worlds and imagined ones, he dwells on that variant of science fiction called ‘hard SF’ to distinguish it from fantasy. As we’ve seen recently in talking about neutron stars and possible life forms there, the key is to imagine something that seems fantastic and demonstrate that it is inherently plausible. Asimov could do this, as could Clarke, as could Heinlein, and of course the genre continues into Benford, Baxter, Vinge, Reynolds, Niven and so on.

Image: The moonshrikes take wing. Credit: AppleTV / Art of VFX.

It’s hard to know where to stop with lists like that (and yes, I should mention Brin and Bear and many more), but the point is, this is the major thrust of science fiction, and while AppleTV’s Foundation takes off on explorations far from the novels, its lush filmography contains within it concepts that have been shrewdly imagined and presented with lavish attention to detail. Other worlds, as Keith Cooper will remind us in his fine book, are inescapably alien, yet they can be (at least to our imaginations, since we can’t directly see most of them yet) astonishingly beautiful. Cooper’s intuitive eye gets all that.

The rich history of science fiction, from the pulp era through to today’s multimedia extravaganzas, gets plenty of attention. I’m pleased to report that Cooper’s knowledge of SF history is deep and he moves with ease through its various eras. His method is to interview and quote numerous writers on the science behind their work, and numerous scientists on the origins of their interest. Thus Alison Sinclair, whose 1996 novel Blueheart takes place on an ocean world. Sinclair, a biochemist with a strong background in neuroscience, knows about the interplay between the real and the imagined.

Sinclair talks about how Blueheart’s ocean, being warmer and less salty than Earth’s oceans on average, is therefore less dense and floats atop a deeper layer of denser water. The aquatic life on Blueheart lives in that top layer, but when that life dies its remains, along with the nutrients those remains contain, would sink right to the bottom of the dense layer. She raises an additional point that on Earth, deep water is mixed with surface water by winds that drive surface water away from coasts, allowing deep water to well up, but with no continents on Blueheart there are no coasts, and with barely any land there’s no source of nutrients to replenish those that have sunk to the bottom.

Here is the science fictional crux, the hinge where an extrapolated problem is resolved through imaginative science. Sinclair, with an assist from author Tad Williams, will come up with a ‘false bottom,’ a layer of floating forests with a root system dense enough to act as a nutrient trap. It’s an ingenious solution if we don’t look too hard, because the question of how these floating thickets form in the first place when nutrients are in the oceanic deep still persists, but the extent to which writers trace their planet building backwards remains highly variable. It’s no small matter imagining an entire ecosystem over time.

The sheer variety of exoplanets we have thus far found and continue to hypothesize points to science fiction’s role in explaining research to the public. Thus Cooper delves deeply into desert worlds including the ultimate dry place, Frank Herbert’s Arrakis, from the universe he created in Dune (1965) and subsequent novels. Here he taps climatologists from the University of Bristol, where Alexander Farnsworth and team have modeled Arrakis, with Farnsworth noting that world-building creates huge ‘blue-sky’ questions. As he puts it, SF “…asks questions that probably wouldn’t be asked scientifically by anyone else.”

Solid point. Large, predatory creatures don’t work on desert worlds like Arrakis (there go the sand worms), but Arrakis does force us to consider how adaptation to extremely dry environments plays out. Added into the team’s simulations were author Herbert’s own maps of Arrakis, with seas of dunes at the equator and highlands in the mid-latitudes and polar regions, and the composition of its atmosphere. Herbert posits high levels of ozone, much of it produced by sand worms. Huge storms of the kind found in the novel do fit the Bristol model and lead Cooper into a discussion of Martian dust storms, factoring in surface heating and differences in albedo. All told, Dune is an example of a science fiction novel that compels study because of the effort that went into its world building, and recent work helps us see when its details go awry.

Image: Judging from the comments of many scientists I’ve known, Frank Herbert’s Dune inspired more than a few careers that have led to exoplanet research. The publishing history is lengthy, but here’s the first appearance of the planet Arrakis in “Dune World,” the first half of the original novel, as serialized beginning with the December, 1963 issue of Analog.

The explosion of data on exoplanets, of which there were close to 6000 confirmed as Cooper was wrapping up his manuscript, has induced subtle shifts in science fiction that are acknowledged by writers as well as scientists (and the two not infrequently overlap). I think Cooper is on target as he points out that in the pre-exoplanet discovery era, Earth-like worlds were a bit easier to imagine and use as settings. But we still search for a true Earth analogue in vain.

…it’s probably fair to say that SF before the exoplanet discoveries of the 1990s was biased towards imagining worlds that were like something much closer to home. Alas, comfortably habitable worlds like Earth are, so far, in short supply. Instead, at best, we might be looking at habitable niches rather than whole welcoming worlds. Increasingly, more modern SF reflects this; think of the yin-yang world of unbearable heat and deathly cold from Charlie Jane Anders’s Locus award-winning 2019 novel The City in the Middle of the Night or the dark, cloud-smothered moon LV-426 in Alien (1979) and Aliens (1986) that has to be terraformed to be rendered habitable (although that example actually pre-dates the discovery of exoplanets).

Changes in the background ‘universe’ of a science fiction tale are hardly new. It was in 1928 that Edward E. ‘Doc’ Smith published The Skylark of Space, an award-winning tale which broached the idea that science fiction need not be confined to the Solar System. In the TV era, Star Trek reminded us of this when we suddenly had a show where the Earth was seldom mentioned. Both had some precursors, but the point is that SF adapts to known science but then can make startling imaginative jumps.

Thus novelist Stephen Baxter, a prolific writer with a background in mathematics and engineering:

“Now that we know planets are out there, it’s different because as a writer you’re exploring something that’s already defined to some extent scientifically, but it’s still very interesting…You know the science and might have some data, so you can use all that as opposed to either deriving it or just imagining it.”

What a terrific nexus for discovery and imagination. If you’ve been reading science fiction for as long as I have, you’ll enjoy how famous fictional worlds map up against the discoveries we’re making with TESS and JWST. I found particular satisfaction in Cooper’s explorations of Larry Niven’s work, which clearly delights any number of scientists because of its imaginative forays within known physics and the sheer range of planetary settings he deploys.

No wonder fellow SF writers like Alastair Reynolds and Paul McAuley cite him within these pages as an influence on their subsequent work. Niven, as McAuley points out, can meld Earth-like features with profound differences that breed utterly exotic locales. This is a man who has, after all, written (like Clement and Forward) about extreme environments for astrobiology (think of his The Integral Trees, for example, with hot Jupiters and neutron star life).

And then there’s Ringworld, with its star-encircling band of technology, and the race known as Pierson’s Puppeteers, developed across a range of stories and novels, who engineer a ‘Klemperer Rosette’ out of five worlds, one of them their home world. Each sits at a vertex of a pentagon, and all orbit their common center of mass with the same angular velocity. That home world, Hearth, is an ‘ecumenopolis,’ a world-spanning city on the order of Asimov’s Trantor. Here again the fiction pushes the science to come up with explanations. Exoplanet scientist and blogger Alex Howe (NASA GSFC) explains his own interest:

“The Puppeteer’s Hearth is one of the things that keyed me in to the waste heat problem,” says Howe, who is a big fan of Niven: “I describe Larry Niven as re-inventing hard science fiction… not as SF that conforms strictly to known physics, but as SF that invents new physics or perhaps extrapolates from what we currently know, but applies it rigorously.”

Howe is an interesting example of a scientist engaged with science fiction. A writer himself, he maintains his own blog devoted to the subject and has been working his way through all the classic work in the field. I’ve focused on SF in this review, but need to point out that Cooper’s work is equally strong coming from the non-fictional direction, with productive interviews with leading exoplanetologists. Now that we’re actually studying real planets around other stars, worlds like TOI-1452b, a habitable zone super-Earth in a binary star system, show how fictional some of these real planets can seem.

So with known planets as a steadily growing database, we can compare and contrast the two approaches. Thus we meet Amaury Triaud (University of Birmingham), a co-discoverer of the exotic TRAPPIST-1 system and its seven small, rocky worlds. The scientist worked with Nature to coax Swiss SF writer Laurence Suhner into setting a story in that system.

Says Triaud: “If you were in your back garden with a telescope on one of these planets, you’d be able to actually see a city on one of the other planets.” Elsewhere, the snowball planet Gethen from Ursula K. Le Guin’s The Left Hand of Darkness (1969) is analyzed by planetary scientist Adiv Paradise (University of Toronto). Thus we nudge into studies of Earth’s own history extrapolated into fictional planets that invoke entirely new questions.

Here’s Paradise on snowball planets and their fate. Must they one day thaw?

“If you have a planet that doesn’t have plate tectonics, and doesn’t have much volcanism, can the carbon dioxide still escape from the outside?… You might end up with a planet where all the carbon dioxide gets locked into the mantle, and volcanism shuts off and you end up with a runaway snowball that might suppress volcanism – we don’t fully understand the feedback between surface temperature and volcanism all that well. In that case, the snowball would become permanent, at least until the star becomes brighter and melts it.”

Cooper’s prose is supple, and it allows him to explain complicated concepts in terms that newcomers to the field will appreciate. Beyond the ‘snowball’ process, the carbonate-silicate cycle so critical to maintaining planetary climates gets a thorough workout, as does the significance of plate tectonics and the consequences if a world does not have this process. Through desert worlds to water worlds to star-hugging M-dwarf planets, we learn about how atmospheres evolve and the methods scientists are using to parse out their composition.

Image: NASA’s playful poster of the TRAPPIST-1 system as a travel destination. Credit: NASA.

Each world is its own story. I hope I’ve suggested the scope of this book and the excitement it conveys even to someone who has been immersed in both science fiction and exoplanetary science for decades. Amazing Worlds of Science Fiction and Science Fact would make a great primer for anyone looking to brush up on knowledge of this or that aspect of exoplanet discovery, and a useful entry point for those just wanting to explore where we are right now.

I also chuckle at the title. Amazing Stories was by consensus the first true science fiction magazine (1926). Analog, once Astounding with its various subtitles, used ‘Science Fiction – Science Fact’ on its cover (I remember taking heat from my brother-in-law about this, as he didn’t see much ‘fact’ in what I was reading. But then, he wasn’t an SF fan). As a collector of old science fiction magazines, I appreciate Keith Cooper’s nod in their direction.

Claudio Maccone (1948-2025)

In all too many ways, I wasn’t really surprised to learn that Claudio Maccone had passed away. I had heard the physicist and mathematician had been in ill health, and because he was a poor correspondent in even the best of times, I was left to more or less assume the worst. His death, though, seems to have been the result of an accident (I’m reminded of the fall that took Freeman Dyson’s life). Claudio and I spent many hours together, mostly at various conferences, where we would have lengthy meals discussing his recent work.

Image: I took this photo of Claudio in Austin, TX in 2009. More on that gathering below.

With degrees in both physics and mathematics from the University of Turin, Claudio received his PhD at King’s College London in 1980. His work on spacecraft design began in 1985, when he joined the Space Systems Group of Alenia Spazio, now Thales Alenia Space Italia, which is where he began to develop ideas ranging from scientific uses for the lunar farside, SETI detections and signal processing, space missions involving sail concepts and, most significantly, a mission to the solar gravitational lens, which is how he and I first connected in 2003.

Coming into the community of deep space scientists as an outsider, a writer whose academic expertise was in far different subjects, I always appreciated the help I received early on from people who were exploring how we might overcome the vast distances between the stars. I had written about Claudio’s ideas on gravitational lensing and the kind of mission that might use it for observation of exoplanets, but was startled to find him waiting at breakfast one morning in Princeton, where Greg Matloff had invited me up for a conference.

Image: At one of the Breakthrough Starshot meetings not long after the project was announced. I took this in the lobby of our hotel in Palo Alto.

Ever the gentleman, Claudio wanted to thank me for my discussion of his work in my Centauri Dreams book, and that breakfast with Greg, his wife C, and Claudio remains a bright memory, as does the conference, chaired by Ed Belbruno, where I made many contacts talking to scientists about their work. I was already writing this site, which began in 2004, and over the years that followed, I would run into Claudio again and again, and not always at major conferences. He appeared, for example, at a founding session of what would grow into the Interstellar Research Group in Oak Ridge, quite a hike from Italy, but when it came to interstellar ideas, Claudio always wanted to be there.

One memorable trip was to Aosta in the Italian Alps, a meeting I particularly cherish because of our meals discussing local history as much as spaceflight. Several of the participants at the Aosta conference had brought their families, and one young boy was fascinated with something Claudio said one night at dinner about Italian history. I’ll never forget his asking Claudio if he could explain what had happened in the Thirty Years’ War. Claudio didn’t miss a beat. He began talking and in about fifteen minutes had laid out the causes of the conflict between Protestants and Catholics in 17th Century Europe within the context of the Holy Roman Empire, complete with names, dates and details.

I asked him afterwards if he had ever considered a career in history, and it turned out that we shared a particular interest in Greek and Latin, and that yes, the subject appealed to him, whether it was 17th Century political evolution or the fine points of Pericles’ Funeral Oration. But here he voiced a caution. “You can’t do everything. You just can’t. You have to give so many things up to do your work.” True, of course, and yet somehow his knowledge was that of a polymath. He schooled me on Leibniz over wine and beef Wellington one night in Dallas, a conversation that went on until late in the evening. In the Italian Alps, he took me into the history of Anselm of Canterbury, who had been born in Aosta, and explained the significance of his philosophy.

Mostly, of course, we talked about space. His fascination with SETI was obvious, and his work on the mathematics of first contact brought the Kosambi–Karhunen–Loève transform (KLT) into play as a signal processing solution. His work on gravitational lensing and the mission he called FOCAL put that concept in the spotlight, paving the way for later approaches that could solve some of the problems he had identified. A look through the archives here will reveal dozens of articles I’ve written on this and other aspects of his work.


I won’t go into the technical details here – this is a time for recollection more than analysis. But I need to mention that Claudio served as Technical Director for Scientific Space Exploration at the International Academy of Astronautics, a post of which he was rightly proud. Over 100 scientific papers bear his name, as do a number of books, the most influential being Mathematical SETI (2012) and Deep Space Flight and Communications (2009). His ongoing efforts to preserve the lunar farside for astronomical observations remind us of how precious this resource should be in our thinking. Toward the end of his life, he put his mathematical skills to work on the evolution of cultures on Earth and asked whether what he called Evo-SETI could be developed as a way to predict the likelihood that civilizations, including our own, will survive.

Claudio’s death caused a ripple of comment from people who had worked with him over the years. Greg Matloff, a close friend and colleague, wrote this:

I met Claudio during a Milan solar sail symposium in 1990. We collaborated for years on sails to the solar gravity focus. He was instrumental in my guest professorship in Siena in summer 1994 and my election to IAA. I knew that his health had become a challenge. Apparently, a book shelf had collapsed and he was pinned overnight until his student found him the following morning. I will miss Claudio forever but am glad that I saw him in Luxembourg last December.

I only wish I could have seen Claudio one more time, but it was not to be. I will always honor this good man and thank him for his generosity of spirit, his engaging humor and his willingness to bring me up to speed on concepts that, early on, I found quite a stretch. Now that the concept of a gravitational lensing mission is widespread in the literature and superb work continues at the Jet Propulsion Laboratory on completely new technologies to make this happen, I think Claudio’s place in the history of the interstellar idea is guaranteed.

Image: Claudio Maccone speaking before the United Nations Committee on the Peaceful Uses of Outer Space in Vienna in 2010. He was proposing a radio-quiet zone on the farside that would guarantee radio astronomy and SETI a defined area in which human radio interference is impossible. It’s an idea with a pedigree, going back to 1994, when the French radio astronomer Jean Heidmann first proposed a SETI observatory in the farside Saha Crater with a link to the nearside Mare Smythii plain and thence to Earth. Credit: COPUOS.

A final happy memory. Claudio and I had met in Austin, Texas and were headed to the Institute for Advanced Studies at Austin, to meet with Marc Millis, Eric Davis and Hal Puthoff, among others. Claudio had just bought a new laptop so powerful that it could handle the kind of equations he threw at it. On the way, he bragged about it and showed it to me with delight all over his face.

But the joke was that the laptop’s facial recognition refused to recognize him despite his protracted efforts to train the gadget. I can still hear his normally calm voice gradually growing in volume (the meeting had already started and everyone was taking notes), until finally he burst out with “It’s me, damn you!” We all guffawed, and a few seconds later, amazingly, the computer let him in.

Claudio loved Latin, so here’s a bit from Seneca:

Non est ad astra mollis e terris via.

Which means “There is no easy way from the earth to the stars.”

He certainly would have agreed with that statement, but Claudio Maccone did everything in his power to tackle the problem and show us the possibilities that lay beyond our current technologies. His work was truly a gift to our future.

Generation Ships and their Consequences

Our ongoing discussion of the Project Hyperion generation ship contest continues to spark a wide range of ideas. For my part, the interest in this concept is deeply rooted, as Brian Aldiss’ Non-Stop (1958 in Britain, and then 1959 in the U.S. under the title Starship) was one of my first forays into novel-length science fiction. Before that, I had been reading the science fiction magazines, mostly short stories with the occasional serial, and I can remember being captivated by the cover of a Starship paperback in a Chicago bookstore’s science fiction section.

Of course, what was striking about Criterion Books’ re-naming of the novel is that it immediately gave away the central idea, which readers would otherwise have had to piece together as they absorbed Aldiss’ plot twists. Yes, this was a starship, and indeed a craft where entire generations would play out their lives. Alex Tolley and I were kicking the Chrysalis concept around and I was reminded how, having been raised in Britain, Alex had been surprised to learn of the American renaming of the book. But in a recent email, he reminded me of something else, and I’ll pass that along to further seed the discussion.

What follows is from Alex, with an occasional interjection by me. I’ll label my contributions and set them in italics to avoid confusion. Alex begins:

I should mention that in Aldiss’ novel Non-Stop, the twist was that the starship was no longer in transit, but was in Earth’s orbit. The crew could not be removed from the ship as it slowly degenerated. The Earthers were the ‘giants’ visiting the ship to monitor it and study the occupants.

PG: Exactly so. To recapitulate, the starship had traveled to a planet around Procyon, and in a previous generation had experienced a pandemic evidently caused by human incompatibility with the amino acids found in its water. On the return trip, order breaks down and the crew loses knowledge of their circumstances, although we learn that there are other beings who sometimes appear and interact in mysterious ways with the crew. The twenty-three generations that have passed are far more than was needed to reach their destination, but now, in Earth orbit, their mutated biology draws scrutiny from scientists who restrict their movement while continuing to study them.

PG: The generation ship always raises questions like this, not to mention questions about the ethics of controlling populations for the good of the whole. I commented to Alex about the Chrysalis plan to have multiple generations of prospective crew members live in Antarctica to ensure their suitability for an interstellar voyage and its myriad social and ethical demands. He mentions J.G. Ballard’s story “Thirteen to Centaurus” below, a short story discussed at some length in these pages by Christopher Phoenix in 2016.

Image: The original appearance of “Thirteen to Centaurus,” in the July 1962 issue of Amazing Fact and Science Fiction Stories. Rather than having to scan this out of my collection, I’m thankful to the Classics of Science Fiction site for having done the scanning for me.

I missed the multiple generations in Antarctica bit, probably because I knew the UK placed Antarctic hopefuls in a similar environment for at least several weeks to evaluate suitability. The 500-day Martian voyage simulation would be like a prison sentence for the very motivated. But several generations in some enclosed environment would perhaps be like the simulated starship in “Thirteen to Centaurus” or the 2014 US TV series Ascension. Note that Antarctica is just a way of suggesting an isolated environment, which the authors indicate is TBD. Like the 500-day Mars simulation, all the authors want is a way to test for psychological suitability.

To do this over a span of multiple generations seems very unethical, to say the least. How are they going to weed out the “unsuitable”, especially after the first generation? I also think that there is a flaw in the reasoning. Genetics is not deterministic, especially as the authors expect normal human partnering on the ship. The sexual reproduction of the genes will constantly create genetically different children. This implies that the nurture component of socialization will be very important. How will that be maintained in the simulation, let alone the starship? Will the simulation inhabitants have to resolve all problems and any anti-social behavior by themselves? What if it becomes a “Lord of the Flies” situation? Is the simulation ended and a new one started when a breakdown occurs? It is a pity that the starship cannot be composed of an isolated tribe that has presumably already managed to maintain multi-generational stability.

If we’re going to simulate an interstellar voyage, we could build the starship, park it in an orbit within the solar system, and monitor it for the needed time. This would test everything for reliability and stability, yet ensure that the population could be rescued if it all goes pear-shaped. The ethics are still an issue, but if the accommodation is very attractive, it is perhaps not too different from living on a small island in the early industrial period, isolated from the world. The Hebrides until the mid-20th century might be an example, although the adventurous could leave, which is not a possibility on the starship.

Ethics aside, I suspect that the Antarctica idea is more hopeful than viable. In my view, it will take a very different kind of society to maintain a 100+ year simulation. But there are advantages to doing this in Earth orbit. It could be that the crew becomes a separate basket of eggs to repopulate the Earth after a devastating war, as Moon or Mars colonies are sometimes depicted.

PG: I’ve always thought that rather than building a generation ship, such vessels would evolve naturally. As we learn how to exploit the resources of the Solar System, we’ll surely become adept at creating large habitats for scientists and workers. A natural progression would be for some crew, no longer particularly interested in living on a planet, to ‘cast off’ and set off on a generational journey.

Slow boating to star systems will probably require something larger, more like an O’Neill Island 3 design. Such colonies will be mature, and the remaining issue of propulsion “solved” by strapping on whatever is the most appropriate – fusion, antimatter, etc. The ethics problem is presumably moot in such colonies, as long as the colony votes to leave the solar system, and anyone preferring to stay is allowed to leave.

This is certainly what Heppenheimer and O’Leary were advocating when the space colony idea was new and shiny. On the other hand, maybe the energy is best used to propel a much smaller ship at high fractional c to achieve time dilation. If it fails, only the first-generation explorer crew dies. In extremis, this is Anderson’s Tau Zero situation.

PG: With your background in biology, Alex, what’s your take on food production in a generation ship? I realize that we have to get past the huge question of closed loop life support first, but if we do manage that, what is the most efficient way to produce the food the crew will need?

I think that by the time a Chrysalis ship can be built, they won’t be farming field crops as we do today. The time allocated to agricultural activities might be better spent on some other activity. Food production will be whatever passes for vertical farms and food factory culture, with 3-D printing of foods for variety.

The only value I can see for traditional crop farming is that it may be the only way to expand the population on the destination planet, and that means maintaining basic farming skills. The Chrysalis design did not allow animal husbandry, which means that the crew would be vegan or vegetarian only. In that future, that may even be the norm, and eating animal flesh a repellent idea.

In any case, space colonies should be the first to develop the technology for very long-duration missions, then generation starships if that is the only way to reach the stars, and assuming it is deemed a worthwhile idea. Even Peter Thiel, that archetypal techbro, cannot get seasteading going. I do wonder whether human crewed starships for colonization make much sense.

But multi-year exploration ships evoking the golden age of exploration in sailing ships might be a viable idea. Exciting opportunities to travel, discover new worlds (“new life, and new civilizations…”), yet returning to the solar system after the tour is over. It would need fast ships or some sort of suspended animation to reduce the subjective time during the long cruise phase, so that most of the subjective time would be the exploration of each world.

PG: I’ll add to that the idea that crews on generation-class ships and their counterparts on this kind of faster mission may well represent the beginning of an evolutionary fork in our species. Plenty of interesting science fiction to be written playing with the idea that there is a segment of any population that would prefer to experience life within a huge, living habitat, and thus eventually become untethered to planting colonies or exploiting a planetary surface for anything more than scientific data-gathering.

Think of the university-crewed, habitat-based starship in Vonda McIntyre’s Starfarers tetralogy. The ship is based on O’Neill’s space colony technology, but it can travel at FTL velocities and is mostly about exploring new worlds. It is very Star Trek in vibes, but more exploratory, with fewer phasers and photon torpedoes.

PG: So the wave of outward expansion could consist of the fast ships Alex mentions followed by a much slower and different kind of expansion through ships like Chrysalis. I’ll bring this exchange to a close here, but we’ll keep pondering interstellar expansion in coming months, including the elephant-in-the-room question Alex mentioned above. Will we come to assume that crewed starships are a worthwhile idea? Is the future outbound population most likely to consist of machine intelligence?