Centauri Dreams

Imagining and Planning Interstellar Exploration

Links for IRG Interstellar Symposium in Montreal

The preliminary program for the Interstellar Research Group’s 8th Interstellar Symposium in Montreal is now available. For those of you heading to the event, I want to add that the early bird registration period for attending at a discount ends May 31. Registration fees go up after that date. Registering at the conference hotel can be handled here. Registration before the 31st is recommended to get a room within the block reserved for IRG.

First Contact: A Global Simulation

Now and again scientists think of interesting ways to use our space missions in contexts for which they were not designed. I’m thinking, for example, of the ‘pale blue dot’ image snapped by Voyager 1 in 1990, an iconic view that forcibly speaks to the immensity of the universe and the smallness of the place we inhabit. Voyager’s cameras, we might recall, were added only after a debate among mission designers, some of whom argued that the mission could proceed without any cameras aboard.

Fortunately, the camera advocates won, with results we’re all familiar with. Now we have a project out of The SETI Institute that would use a European Space Agency mission in a novel way, one that also challenges our thinking about our place in the cosmos. Daniela de Paulis, who serves as artist in residence at the institute, is working across numerous disciplines with researchers involved in SETI and astronautics to create A Sign in Space, a project built around an ‘extraterrestrial’ message. This is not a message beamed to another star, but a message beamed back at us.

The plan is this: On May 24, 2023, tomorrow as I write this on the US east coast, ESA’s ExoMars Trace Gas Orbiter, in orbit around Mars, will transmit an encoded message to Earth that will act as a simulation of a message from another civilization. The message will be detected by the Allen Telescope Array (ATA) in California, the Green Bank Telescope (GBT) in West Virginia and the Medicina Radio Astronomical Observatory in Italy. The content of the message is known only to de Paulis and her team, and the public will be in on the attempt to decode and interpret it. The message will be sent at 1900 UTC on May 24 and discussed in a live stream event beginning at 1815 UTC online.

The signal should reach Earth some 16 minutes after transmission, hence the timing of the live stream event. This should be an enjoyable online gathering. According to The SETI Institute, the live stream, hosted by Franck Marchis and the Green Bank Observatory’s Victoria Catlett, will feature key team members – scientists, engineers, artists and more – and will include control rooms from the ATA, the GBT, and Medicina.
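As a quick sanity check on the 16-minute figure, the light-travel time can be turned into a distance. This is only a sketch: the function name is hypothetical, and the 16 minutes is taken from the text above rather than from an ephemeris.

```python
# Light-travel-time sketch: how far away is a transmitter whose signal
# takes about 16 minutes to arrive?
C_KM_PER_S = 299_792.458   # speed of light in km/s (exact by definition)
AU_KM = 149_597_870.7      # astronomical unit in km (exact by definition)


def light_minutes_to_km(minutes: float) -> float:
    """Distance a radio signal covers in the given number of minutes."""
    return C_KM_PER_S * minutes * 60.0


distance_km = light_minutes_to_km(16.0)   # 16 minutes is the figure quoted in the text
distance_au = distance_km / AU_KM
print(f"{distance_km:.3e} km, about {distance_au:.2f} AU")
```

A delay of 16 minutes corresponds to a separation of a little under 2 AU, which is plausible for Earth and Mars when the two planets are on roughly opposite sides of the Sun.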

Daniela de Paulis points to the purpose of the project:

“Throughout history, humanity has searched for meaning in powerful and transformative phenomena. Receiving a message from an extraterrestrial civilization would be a profoundly transformational experience for all humankind. A Sign in Space offers the unprecedented opportunity to tangibly rehearse and prepare for this scenario through global collaboration, fostering an open-ended search for meaning across all cultures and disciplines.”

The data are to be stored in collaboration with Breakthrough Listen’s Open Data Archive and the storage network Filecoin, the idea being to make the signal available to anyone who wants to have a crack at decoding it. A Sign in Space offers a Discord server for discussion of the project, while findings may be submitted through a dedicated form on the project’s website. For a number of weeks after the signal transmission, the A Sign in Space team will host Zoom discussions on the issues involved in reception of an extraterrestrial signal, with the events listed here.

Remembering Jim Early (1943-2023)

I was saddened to learn of the recent death of James Early, author of a key paper on interstellar sail missions and a frequent attendee at IRG events (or TVIW, as the organization was known when I first met him). Jim passed away on April 28 in Saint George, UT at the age of 80, a well-liked figure in the interstellar community and a fine scientist. I wish I had known him better.

I ran into him for the first time in a slightly awkward way, which Jim, ever the gentleman, quickly made light of. What happened was this. In 2012 I was researching damage that an interstellar sail mission might experience in the boost phase of its journey. Somewhere I had seen what I recall as a color image in a magazine (OMNI?) showing a battered, torn sail docked in what looked to be a repair facility at the end of an interstellar crossing. It raised the obvious question: If we did get a sail up to, say, 5% of the speed of light, wouldn’t even the tiniest particles along the way create significant damage to the structure?

The image was telling and to this day I haven’t found its source. I think of the image as ‘lightsail on arrival,’ and if this triggers a memory with anyone, please let me know. Anyway, although our paths crossed at the first 100 Year Starship symposium in Orlando in 2011, I didn’t know Jim’s work and didn’t realize he had analyzed the sail damage question extensively. When I wrote about the matter on Centauri Dreams a year later, he popped up in the comments:

I presented a very low mass solution to the dust problem at the 100 Year Starship Symposium in a talk titled “Dust Grain Damage to Interstellar Vehicles and Lightsails”. An earlier published paper contains most of the important physics: Early, J.T., and London, R.A., “Dust Grain Damage to Interstellar Laser-Pushed Lightsail”, Journal of Spacecraft and Rockets, July-Aug. 2000, Vol. 37, No. 4, pp. 526-531.

I was caught by surprise by the reference. How did I miss it? Researching my 2005 Centauri Dreams book, I had been through the literature backwards and forwards, and JSR was one of the journals I combed for deep space papers. Later, at a TVIW meeting in Oak Ridge, we talked, had dinner and Jim kidded me about my research methods. As I saw it, his paper was a major contribution, and I should have known about it. Yesterday I asked Andrew Higgins (McGill University) about the paper and he had this to say in an email:

Jim Early’s paper (written with Richard London in 1999) on dust grain impacts addressed one of the bogeys of interstellar flight: The dust grain impact problem when traveling at relativistic speeds. Their analysis showed—counterintuitively—that the damage caused by a dust grain on an interstellar lightsail actually decreases as the sail exceeds a few percent of the speed of light. While the grain turns into an expanding fireball of plasma as it passes through the sail, the amount of thermal radiation deposited on the sail decreases as the fireball is receding more quickly from the sail. This was a welcome result suggesting sails might survive the interstellar transit, and their study remains the seminal work on dust grain interactions with thin structures at relativistic speeds.

Image: Dinner after the first day’s last plenary session in Oak Ridge in 2014. That’s Jim Benford at far left, then James Early, Sandy Montgomery and Michael Lynch.

The family has set up a website honoring Jim and offering photos and an obituary. He got his bachelor’s degree in Aeronautics at MIT, following it with a master’s degree in mechanical engineering at Caltech, and a PhD in aeronautics and physics at Stanford University. He was involved with development activities for the Delta launch vehicle while obtaining his bachelor’s degree by working at NASA Goddard Space Flight Center in the summers and then at McDonnell-Douglas after finishing his master’s degree. He joined Lockheed and Hughes aircraft for a time before finally ending up at the Lawrence Livermore National Laboratory working on laser physics until he retired.

Sail in Flight

So let’s look at Jim’s paper on sails, a subject he continued to work on for the next two decades. Although Robert Forward came up with sail ideas that pushed as high as 30 percent of the speed of light (and in the case of Starwisp, even higher), Jim and his co-author Richard London chose 0.1 c for cruise velocity in their paper, which provides technical challenges aplenty but at least diminishes the enormous energy costs of still faster missions, and certainly mitigates the problem of damage from dust and gas along the way. Depending on the methods used, the sail as analyzed in this paper may take a tenth of a light year to get up to cruise velocity. It’s worth mentioning that the sail does not have to remain deployed during cruise itself, but deceleration at the target star, depending on the methods used, may demand redeployment. Breakthrough Starshot envisions stowing the sail in cruise after its sudden acceleration to 20 percent of c. Early and London use beryllium sails as their reference point, these being the best characterized design at this stage of sail study, and assume a sail 20 nm thick. In terms of the interstellar medium the sail will encounter, the authors say this:

Local interstellar dust properties can be estimated from dust impact rates on spacecraft in the outer solar system and by dust interaction with starlight. The mean particle masses seen by the Galileo and Ulysses spacecraft were 2×10⁻¹² and 1×10⁻¹² g, respectively. A 10⁻¹² g dust grain has a diameter of approximately 1 µm. The median grain size is smaller because the mean is dominated by larger grains. The Ulysses saw a mass density of 7.5×10⁻²⁷ g cm⁻³. A sail accelerating over a distance of 0.1 light years would encounter 700 dust grains/cm² at this density. The surface of any vehicle that traveled 10 light years would encounter 700 dust grains/mm². If a significant fraction of the particle energy is deposited in the impacted surface in either case, the result would be catastrophic.
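The grain counts in this passage follow from simple arithmetic, which can be sketched as below. The assumptions are mine rather than the paper's: every grain is given the 10⁻¹² g mean mass, the density is taken as uniform along the path, and the function name is hypothetical.

```python
# Dust fluence sketch: grains swept up per unit area over a path length.
LIGHT_YEAR_CM = 9.4607e17   # one light year in centimetres


def grains_per_cm2(path_ly: float,
                   mass_density_g_cm3: float = 7.5e-27,   # Ulysses value quoted above
                   grain_mass_g: float = 1e-12) -> float:  # assumed mass of every grain
    """Number of grains crossing each square centimetre of a surface
    that travels `path_ly` light years through a uniform dust field."""
    number_density = mass_density_g_cm3 / grain_mass_g    # grains per cm^3
    return number_density * path_ly * LIGHT_YEAR_CM


boost_phase = grains_per_cm2(0.1)       # acceleration over 0.1 light years
full_cruise = grains_per_cm2(10.0)      # a 10 light year crossing
print(f"boost phase: {boost_phase:.0f} grains per cm^2")
print(f"10 ly cruise: {full_cruise / 100:.0f} grains per mm^2")  # 100 mm^2 per cm^2
```

Both results come out a little above 700, matching the rounded figures in the quotation: 0.1 light years gives roughly 700 grains per square centimetre, and a path 100 times longer gives the same number per square millimetre.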

The question then becomes, just how much of the particle’s energy will be deposited on the sail? The unknowns are all too obvious, but the paper adds that neither of the Voyagers saw dust grains larger than 1 µm at distances beyond 50 AU, while a 1999 study on interstellar dust grain distributions found a flat distribution from 10⁻¹⁴ to 10⁻¹² g with some grains as large as 10⁻¹¹ g. Noting that a 10⁻¹² g dust grain has a diameter of about 1 µm, the authors use a 1-µm diameter grain for their impact calculations. The results are intriguing because they show little damage to the sail. Catastrophe averted:

At the high velocities of interstellar travel, dust grains and atoms of interstellar gas will pass through thin foils with very little loss of energy. There will be negligible damage from collisions between the nuclei of atoms. In the case of dust particles, most of the damage will be due to heating of the electrons in the thin foil. Even this damage will be limited to an area approximately the size of the dust particle due to the extremely fast, one-dimensional ambipolar diffusion explosion of the heated section of the foil. The total fraction of the sail surface damaged by dust collisions will be negligible.

The torn and battered lightsail in its dock, as seen in my remembered illustration, may then be unlikely, depending on cruise speed and, of course, on the local medium it passes through. Sail materials also turn out to offer excellent shielding for the critical payload behind the sail:

Interstellar vehicles require protection from impacts by dust and interstellar gas on the deep structures of the vehicle. The deployment of a thin foil in front of the vehicle provides a low mass, effective system for conversion of dust grains or neutral gas atoms into free electrons and ions. These charged particles can then be easily deflected away from the vehicle with electrostatic shields.

And because the topic has come up in a number of past discussions here, let me add this bit about interstellar gas and its effects on the lightsail:

The mass density of interstellar gas is approximately one hundred times that of interstellar dust particles though this ratio varies significantly in different regions of space. The impact of this gas on interstellar vehicles can cause local material damage and generate more penetrating photon radiation. If this gas is ionized, it can be easily deflected before it strikes the vehicle’s surface. Any neutral atom striking even the thin foil discussed in this paper will pass through the foil and emerge as an ion and free electron. Electrostatic or magnetic shields can then deflect these charged particles away from the vehicle.

Consequences for Sail Design

All of these findings have a bearing on the kind of sail we use. The thin beryllium sail appears effective as a shield for the payload, with a high melting point and, the authors conclude, the ability to be increased in thickness if necessary without increasing the area damaged by dust grains. Ultra-thin foils of tantalum or niobium offer higher temperature possibilities, allowing us to increase the laser power applied to the sail and thus the acceleration. But Early and London believe that the higher atomic mass of these sails would make them more susceptible to damage.

Even so, “…the level of damage to thin laser lightsails appears to be quite small; therefore the design of these sails should not be strongly influenced by dust collision concerns.” Dielectric sails would be more problematic, suffering more damage from heated dust grains because of their greater thickness, and the authors argue that these sail materials need to be subjected to a more complete analysis of the blast wave dynamics they will experience.

All in all, though, velocities of 0.1 c yield little damage to a thin beryllium sail, and thin shields of similar materials can ionize dust as well as neutral interstellar gas atoms, allowing the ions to be deflected and the interstellar vehicle protected. These are encouraging results, but the size of the problem is daunting, and given the apparent cost of the classically conceived interstellar probe, the prospect of impact damage calls for continued analysis of the medium through which the probe would pass.

This is one of the advantages of sending not one large craft but a multitude of smaller ‘chipsat’ style vehicles in the Breakthrough Starshot model. Send enough of these and you can afford to lose a certain percentage along the way. I can only wish I could sit down with Jim Early again to kick around chipsat concepts, but what a fine memorial to know that your paper continues to influence evolving interstellar ideas.

The paper is Early, J.T., and London, R.A., “Dust Grain Damage to Interstellar Laser-Pushed Lightsail,” Journal of Spacecraft and Rockets, July-Aug. 2000, Vol. 37, No. 4, pp. 526-531.

Assembly theory (AT) – A New Approach to Detecting Extraterrestrial Life Unrecognizable by Present Technologies

With landers on places like Enceladus conceivable in the not too distant future, how we might recognize extraterrestrial life if and when we run into it is no small matter. But maybe we can draw conclusions by addressing the complexity of an object, calculating what it would take to produce it. Don Wilkins considers this approach in today’s essay as he lays out the background of Assembly Theory. A retired aerospace engineer with thirty-five years’ experience in designing, developing, testing, manufacturing and deploying avionics, Don tells me he has been an avid supporter of space flight and exploration all the way back to the days of Project Mercury. Based in St. Louis, where he is an adjunct instructor of electronics at Washington University, Don holds twelve patents and is involved with the university’s efforts at increasing participation in science, technology, engineering, and math. Have a look at how we might deploy AT methods not only in our system but around other stars.

by Don Wilkins

A continuing concern within the astrobiology community is the possibility that alien life is detected, then misclassified as the product of non-organic processes. Extraterrestrial life, if it exists, might be so alien, employing chemistries radically different from those used by terrestrial life, as to be unrecognizable by present technologies. No definitive signature unambiguously distinguishes life from inorganic processes. [1]

Two contentious results from the search for life on Mars are examples of this uncertainty. Lack of knowledge of the environments producing the results prevented elimination of abiotic origins for the molecules under evaluation. The Viking landers’ metabolic experiments produced debatable results because the properties of Martian soil were unknown. An exciting announcement of life detection in the ALH 84001 meteorite was challenged because the criteria used to make the decision were ambiguous and not quantitative.

Terrestrial living systems employ processes such as photosynthesis, whose outputs are potential biosignatures. While these signals are relatively simple to identify on Earth, the unknown context of these signals in alien environments makes distinguishing between organic and inorganic origins difficult if not impossible.

The central problem arises in an apparent disconnect between physics and biology. In accounting for life, traditional physics provides the laws of nature, and assumes specific outcomes are the result of specific initial conditions. Life, in the standard interpretation, is encoded in the initial period immediately after the Big Bang. Life is, in other words, an emergent property of the Universe.

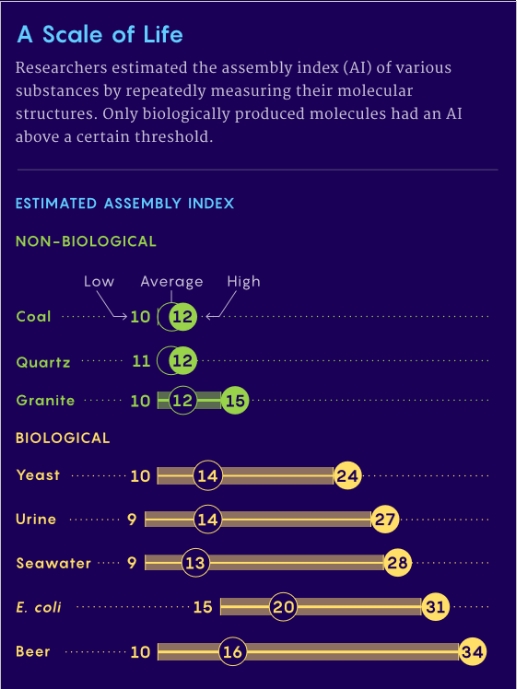

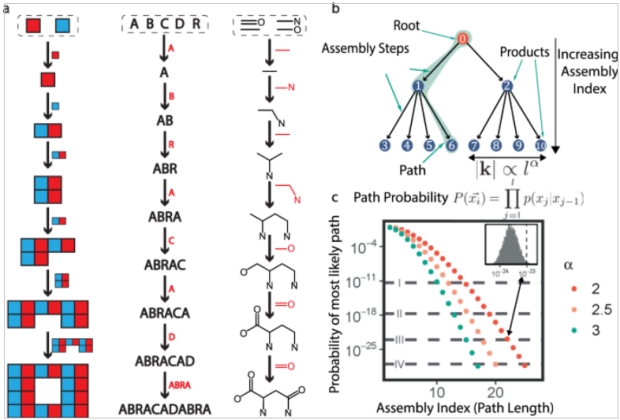

Assembly theory (AT) offers a possible solution to the ambiguity. AT posits a numerical value, based on the complexity of a molecule, that can be assigned to a chemical, the Assembly Index (AI), Figure 1. This parameter measures the histories of an object, essentially the complexity of the processes which formed the molecule. Assembly pathways are sequences of joining operations, from basic building blocks to final product. In these sequences, sub-units generated within the sequence combine with other basic or compound sub-units later in the sequence, to recursively generate larger structures. [2]

The theory purports to objectively measure an object’s complexity by considering how it was made. The assembly index (AI) is produced by calculating the minimum number of steps needed to make the object from its ingredients. The results showed that, for relatively small molecules (mass < ~250 Daltons), AI is approximately proportional to molecular weight. The relationship with molecular weight does not hold for molecules larger than 250 Daltons. Note: One Dalton, or atomic mass unit, is equal to one twelfth of the mass of a free carbon-12 atom at rest.

Figure 1. A Comparison of Assembly Indices for Biological and Abiotic Molecules.

Analyzing a molecule begins with basic building blocks, a shared set of objects, Figure 2. AI measures the smallest number of joining operations required to create the object. The assembly process is a random walk on weighted trees where the number of outgoing edges (leaves) grows as a function of the depth of the tree. Estimating the probability that an object forms by chance requires producing several million trees and calculating the likelihood of the most likely path through the “forest”. Probability is related to the number of joining operations required, or the path length traversed, to produce the molecule. As an example, the probability of Taxol forming by chance ranges between 1:10³⁵ and 1:10⁶⁰ with a path length of 30. In Figure 2, alpha biasing controls how quickly the number of joining operations grows with the depth of the tree.

Figure 2. Calculating Complexity
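The idea of counting joining operations can be illustrated with strings rather than molecules. The sketch below is not the algorithm used by the AT researchers. It is a brute-force toy in which the letters of a word stand in for basic building blocks, concatenation is the only joining operation, and anything already built can be reused. The function name `assembly_index` is hypothetical, and the search is only practical for short strings.

```python
from itertools import product


def assembly_index(target: str) -> int:
    """Smallest number of joining (concatenation) operations needed to build
    `target` from its single characters, reusing anything already built."""
    if len(target) <= 1:
        return 0
    basics = frozenset(target)  # the basic building blocks

    def reachable(built: frozenset, steps_left: int) -> bool:
        # Try every ordered pair of available pieces as the next join.
        pool = basics | built
        for left, right in product(pool, repeat=2):
            joined = left + right
            if joined == target:
                return True
            # Only pieces that occur inside the target can ever be useful.
            if steps_left > 1 and joined in target and joined not in pool:
                if reachable(built | {joined}, steps_left - 1):
                    return True
        return False

    # Iterative deepening: the first step budget that succeeds is the minimum.
    limit = 1
    while not reachable(frozenset(), limit):
        limit += 1
    return limit


print(assembly_index("ABABAB"))  # AB, then ABAB, then ABAB + AB: 3 joins
print(assembly_index("ABCDEF"))  # nothing can be reused: 5 joins
```

The two examples have the same length, but the repetitive string needs fewer joins because earlier products are reused. That reuse is what makes the index a measure of construction history rather than of size alone.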

AT does not require the extremely fine-tuned initial conditions demanded in the physics-based origins of life. Information to build specific objects accumulates over time. A highly improbable fine-tuned Big Bang is no longer needed. AT takes advantage of concepts borrowed from graph theory, the study of networks of interlinked nodes. [3] According to Sara Walker of Arizona State University, a lead AT researcher, information “is in the path, not the initial conditions.”

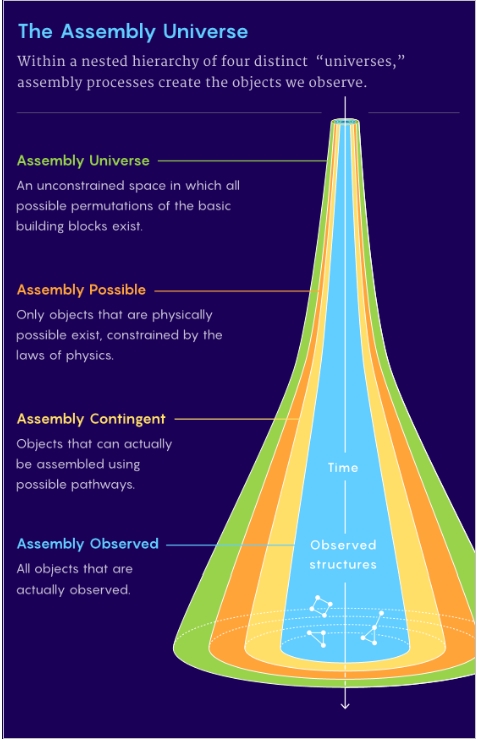

To explain why some objects appear but others do not, AT posits four distinct classifications, Figure 3. All possible variations of the basic building blocks are allowed in the Assembly Universe. In the Assembly Possible, physical constraints (temperature and catalysis are examples) limit the combinations, eliminating constructs which are not physical. Only objects that can be assembled comprise the Assembly Contingent level. Observable objects are grouped in the Assembly Observed.

Figure 3. The four “universes” of Assembly Theory

Chiara Marletto, a theoretical physicist at the University of Oxford, and David Deutsch, a physicist also at Oxford, are developing a theory resembling AT, constructor theory (CT). Mimicking the thermodynamic Carnot cycle, CT uses machines, or constructors, operating in a cyclic fashion: starting at an original state, they proceed through a pattern until the process returns to the original state, in order to explain a non-probabilistic Universe.

A team headed by Lee Cronin of the University of Glasgow and Sara Walker proposes AT as a tool to distinguish between molecules produced by terrestrial or extraterrestrial life and those built by abiotic processes. [4] AT analysis is susceptible to false negatives but current work produces no false positives. After completing a series of demonstrations, the researchers believe an AT life detection experiment deployable to extraterrestrial locations is possible.

Researchers believe AI estimates can be made using mass or infrared spectrometry. [5-6] While mass spectrometry requires physical access to samples, Cronin and colleagues showed a combination of AT and infrared spectrometry sensors similar to those on the James Webb Space Telescope could analyze the chemical environment of an exoplanet, possibly detecting alien life.

References

[1] Philip Ball, A New Idea for How to Assemble Life, Quanta, 4 May 2023,

https://www.quantamagazine.org/a-new-theory-for-the-assembly-of-life-in-the-universe-20230504?mc_cid=088ea6be73&mc_eid=34716a7dd8

[2] Abhishek Sharma, Dániel Czégel, Michael Lachmann, Christopher P. Kempes, Sara I. Walker, Leroy Cronin, “Assembly Theory Explains and Quantifies the Emergence of Selection and Evolution,”

https://arxiv.org/abs/2206.02279

[3] Stuart M. Marshall, Douglas G. Moore, Alastair R. G. Murray, Sara I. Walker, and Leroy Cronin, Formalising the Pathways to Life Using Assembly Spaces, Entropy 2022, 24(7), 884, 27 June 2022, https://doi.org/10.3390/e24070884

[4] Yu Liu, Cole Mathis, Michał Dariusz Bajczyk, Stuart M. Marshall, Liam Wilbraham, Leroy Cronin, “Exploring and mapping chemical space with molecular assembly trees,” Science Advances, Vol. 7, No. 39

https://www.science.org/doi/10.1126/sciadv.abj2465

[5] Stuart M. Marshall, Cole Mathis, Emma Carrick, Graham Keenan, Geoffrey J. T. Cooper, Heather Graham, Matthew Craven, Piotr S. Gromski, Douglas G. Moore, Sara I. Walker, Leroy Cronin, “Identifying molecules as biosignatures with assembly theory and mass spectrometry,” Nature Communications volume 12, article number: 3033 (2021)

https://www.nature.com/articles/s41467-021-23258-x

[6] Michael Jirasek, Abhishek Sharma, Jessica R. Bame, Nicola Bell, Stuart M. Marshall, Cole Mathis, Alasdair Macleod, Geoffrey J. T. Cooper, Marcel Swart, Rosa Mollfulleda, Leroy Cronin, “Multimodal Techniques for Detecting Alien Life using Assembly Theory and Spectroscopy,” https://arxiv.org/ftp/arxiv/papers/2302/2302.13753.pdf

Game Changer: Exploring the New Paradigm for Deep Space

The game changer for space exploration in coming decades will be self-assembly, enabling the growth of a new and invigorating paradigm in which multiple smallsat sailcraft launched as ‘rideshare’ payloads augment huge ‘flagship’ missions. Self-assembly allows formation-flying smallsats to emerge en route as larger, fully capable craft carrying complex payloads to target. The case for this grows out of Slava Turyshev and team’s work at JPL as they refine the conceptual design for a mission to the solar gravitational lens at 550 AU and beyond. The advantages are patent, including lower cost, fast transit times and full capability at destination.

Aspects of this paradigm are beginning to be explored in the literature, as I’ve been reminded by Alex Tolley, who forwarded an interesting paper out of the University of Padua (Italy). Drawing on an international team, lead author Giovanni Santi explores the use of CubeSat-scale spacecraft driven by sail technologies, in this case ‘lightsails’ pushed by a laser array. Self-assembly does not figure into the discussion in this paper, but the focus on smallsats and sails fits nicely with the concept, and extends the discussion of how to maximize data return from distant targets in the Solar System.

The key to the Santi paper is swarm technologies, numerous small sailcraft placed into orbits that allow planetary exploration as well as observations of the heliosphere. We’re talking about payloads in the range of 1 kg each, and the intent of the paper is to explore onboard systems (telecommunications receives particular attention), the fabrication of the sail and its stability, and the applications such systems can offer. As you would imagine, the work draws for its laser concepts on the Starlight program pursued for NASA by Philip Lubin and the ongoing Breakthrough Starshot project.

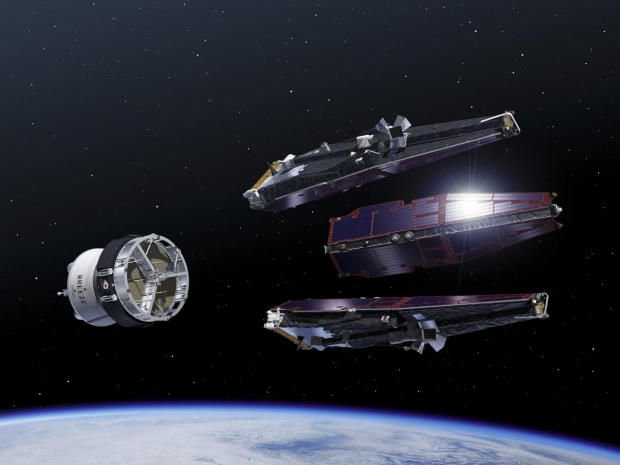

Image: NASA’s Starling mission is one early step toward developing swarm capabilities. The mission will demonstrate technologies to enable multipoint science data collection by several small spacecraft flying in swarms. The six-month mission will use four CubeSats in low-Earth orbit to test four technologies that let spacecraft operate in a synchronized manner without resources from the ground. Credit: NASA Ames.

The authors argue that ground-based directed energy laser propulsion, with its benefits in terms of modularity and scalability, is the baseline technology needed to make small sailcraft exploration of the Solar System a reality. Thus there is a line of development which extends from early missions to targets like Mars, with accompanying reductions in the power needed (as opposed to interstellar missions like Breakthrough Starshot), and correspondingly, fewer demands on the laser array.

The paper specifically does not analyze close-pass perihelion maneuvers at the Sun of the sort examined by the JPL team, which assumes no need for a ground-based array. I think the ‘Sundiver’ maneuver is the missing piece in the puzzle, and will come back to it in a moment.

Breakthrough Starshot envisions a flyby of a planetary system like Proxima Centauri, but the missions contemplated here, much closer to home, must find a way to brake at destination in cases where extended planetary science is going to be performed. Thus we lose the benefit of purely sail-based propulsion (no propellant aboard) in favor of carrying enough propulsive mass to make the needed maneuvers at, say, Mars:

…the spacecraft could be ballistically captured in a highly irregular orbit, which requires at least an high thrust maneuver to stabilize the orbit itself and to reduce the eccentricity…The velocity budget has been estimated using GMAT suite to be Δv ≈ 900–1400 m s⁻¹, depending on the desired final orbit eccentricity and altitude. A chemical thruster with about 3 N thrust would allow to perform a sufficiently fast maneuver. In this scenario, the mass of the nanosatellite is estimated to be increased by a wet mass of 5 kg; moreover, an increase of the mass of reaction wheels needs to be taken into account given the total mass increment.
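To get a feel for what a velocity budget of this size implies, the ideal rocket equation gives a rough propellant estimate. The sketch below is not the paper's calculation. The 6 kg initial mass (a 1 kg nanosatellite plus the 5 kg increase) and the 220 s specific impulse are illustrative assumptions of mine, and only the Δv range and the 3 N thrust come from the quotation.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2, used to convert Isp to exhaust velocity


def propellant_mass_kg(initial_mass_kg: float, delta_v_m_s: float, isp_s: float) -> float:
    """Propellant burned for a given delta-v (ideal Tsiolkovsky rocket equation)."""
    return initial_mass_kg * (1.0 - math.exp(-delta_v_m_s / (isp_s * G0)))


def burn_time_s(propellant_kg: float, thrust_n: float, isp_s: float) -> float:
    """Time to burn the propellant at constant thrust."""
    mass_flow_kg_s = thrust_n / (isp_s * G0)
    return propellant_kg / mass_flow_kg_s


ASSUMED_INITIAL_MASS_KG = 6.0  # assumption: 1 kg nanosatellite plus 5 kg increase
ASSUMED_ISP_S = 220.0          # assumption: not a value given in the paper
THRUST_N = 3.0                 # thrust level quoted above

for delta_v in (900.0, 1400.0):  # ends of the quoted velocity budget
    prop = propellant_mass_kg(ASSUMED_INITIAL_MASS_KG, delta_v, ASSUMED_ISP_S)
    burn = burn_time_s(prop, THRUST_N, ASSUMED_ISP_S)
    print(f"delta-v {delta_v:.0f} m/s: {prop:.2f} kg propellant, {burn / 60:.0f} min burn")
```

Under these assumptions, somewhere between a third and a half of the spacecraft's mass at arrival would be propellant, which shows why the braking requirement erodes the mass advantage of a pure sailcraft.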

Even so, swarms of nanosatellites allow a reduction of the payload mass of each individual spacecraft, with the added benefit of redundancy and the use of off-the-shelf components. The authors dwell on the lightsail itself, noting the basic requirement that it be thermally and mechanically stable during acceleration, no small matter when propelling a sail out of Earth orbit through a high-power laser beam. Although layered sails and sails using nanostructures, metamaterials that can optimize heat dissipation and promote stability, are an area of active research, this paper works with a thin film design that reduces complexity and offers lower costs.

We wind up with simulations involving a sail made of titanium dioxide with a radius of 1.8 m (i.e. a total area of 10 m²) and a thickness of 1 µm. The issue of turbulence in the atmosphere, a concern for Breakthrough Starshot’s ground-based laser array, is not considered in this paper, but the authors note the need to analyze the problem in the next iteration of their work along with close attention to laser alignment, which can cause problems of sail drifting and spinning or even destroy the sail.
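The sail geometry can be checked with a few lines of arithmetic, and the same numbers give a rough film mass. This is a sketch under assumptions of my own: a flat circular sail, and a density of 4,230 kg/m³, which is the bulk value for rutile titanium dioxide and may differ for a deposited thin film. The function names are hypothetical.

```python
import math


def sail_area_m2(radius_m: float) -> float:
    """Area of a flat circular sail."""
    return math.pi * radius_m ** 2


def sail_mass_kg(radius_m: float, thickness_m: float, density_kg_m3: float) -> float:
    """Mass of a uniform film of the given thickness and density."""
    return sail_area_m2(radius_m) * thickness_m * density_kg_m3


ASSUMED_TIO2_DENSITY = 4230.0  # assumption: bulk rutile TiO2, kg/m^3

area = sail_area_m2(1.8)                              # radius from the text
mass = sail_mass_kg(1.8, 1e-6, ASSUMED_TIO2_DENSITY)  # 1 µm thickness from the text
print(f"area {area:.2f} m^2, film mass about {mass * 1000:.0f} g")
```

The area comes out just over 10 m², matching the stated figure. Under the density assumption the film itself would weigh a few tens of grams, small next to a payload of about 1 kg.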

But does the laser have to be on the Earth’s surface? We’ve had this discussion before, noting the political problem of a high-power laser installation in Earth orbit, but the paper notes a third possibility, the surface of the Moon. A long-term prospect, to be sure, but one having the advantage of lack of atmosphere, and perhaps placement on the Moon’s far side could one day offer a politically acceptable solution. It’s an intriguing thought, but if we’re thinking of the near term, the fastest solution seems to be the Breakthrough Starshot choice of a ground-based facility on Earth.

What we have here, then, could be described as a scaled-down laser concept, a kind of Breakthrough Starshot ‘lite’ that focuses on lower levels of laser power, larger payloads (even though still in the nanosatellite range), and targets as close as Mars, where swarms of sail-driven spacecraft might construct the communications network for a colony on the surface. A larger target would be exploration of the heliosphere:

…in this last mission scenario the nanosatellites would be radially propelled without the need of further orbital maneuvers. To date, the interplanetary environment, and in particular the heliospheric plasma, is only partially known due to the few existing opportunities for carrying out in-situ measurements, basically linked to scientific exploration missions [76]. The composition and characteristics of the heliospheric plasma remain defined mainly through theoretical models only partially verified. Therefore, there is an urgent need to perform a more detailed mapping of the heliospheric environment especially due to the growth of the human activities in space.

Image: An artist’s concept of ESA’s Swarm mission being deployed. This image was taken from a 2015 workshop on formation flying satellites held at Technische Universiteit Delft in the Netherlands. Extending the swarm paradigm to smallsats and nanosatellites is one step toward future robotic self-assembly. Credit: TU Delft.

Spacecraft operating in swarms optimized for the study of the heliosphere offer tantalizing possibilities in terms of data return. But I think the point that emerges here is flexibility, the notion that coupling a beamed propulsion system to smallsats and nanosats offers a less expensive, modular way to explore targets previously reachable only by expensive flagship missions. I’ll also argue that a large, ground-based laser array is aspirational but not essential to push this paradigm forward.

Issues of self-assembly and sail design are under active study, as is the question of thermal survival for operations close to the Sun. We should continue to explore close solar passes and ‘sundiver’ maneuvers to shorten transit times to targets both relatively near and as far away as the Kuiper Belt. We need test missions to firm up sail materials and operations, even as we experiment with self-assembly of smallsats into larger craft capable of complex operations at target. The result is a modular fleet that can make fast flybys of distant targets or assemble for orbital operations where needed.

The paper is Santi et al., “Swarm of lightsail nanosatellites for Solar System exploration,” available as a preprint.

An Ice Giant’s Possible Oceans

Further fueling my interest in reaching the ice giants is a study in the Journal of Geophysical Research: Planets that investigates the possibility of oceans on the major moons of Uranus. Imaged by Voyager 2, Uranus is otherwise unvisited by our spacecraft, but Miranda, Ariel, Titania, Oberon and Umbriel hold considerable interest given what we are learning about oceans beneath the surface of icy moons. Hence the need to examine the Voyager 2 data in light of updated computer modeling.

Julie Castillo-Rogez (JPL) is lead author of the paper:

“When it comes to small bodies – dwarf planets and moons – planetary scientists previously have found evidence of oceans in several unlikely places, including the dwarf planets Ceres and Pluto, and Saturn’s moon Mimas. So there are mechanisms at play that we don’t fully understand. This paper investigates what those could be and how they are relevant to the many bodies in the solar system that could be rich in water but have limited internal heat.”

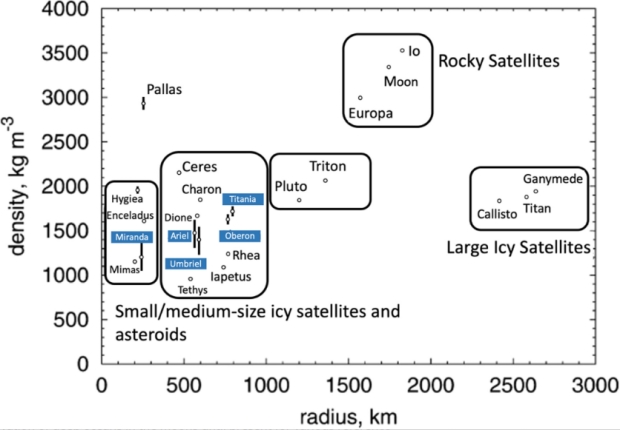

Image: This is Figure 1 from the paper. Caption: Densities and mean radii of the Uranian moons compared to those of other large moons and dwarf planets. Miranda has a low density similar to Saturn’s moon Mimas, whereas the densities of the other Uranian moons are more similar to Saturn’s moons Dione and Rhea. After Hussmann et al. (2006).

The interest is more than theoretical, for as we’ve recently discussed, the Planetary Science and Astrobiology Decadal Survey for 2023–2032 has put a Uranus Orbiter and Probe mission on its short list of priorities. A mission to Uranus would open up the prospect of confirming oceans, or the lack of same, within the five large moons. Recent work explored in the Castillo-Rogez paper has made the case that magnetic fields induced by such oceans should be detectable by a Uranus orbiter’s flybys.

Much has happened to call for new modeling of this system. The paper notes recent advances in surface chemistry and geology, revised models of system dynamics, and the knowledge gained on icy bodies in the size range of the Uranian moons as studies have continued on Enceladus and the moons of Saturn as well as Pluto and Charon, not to mention the availability of data from the Dawn mission at Ceres. The team’s modeling produces likely interior structures that are promising for four of the moons.

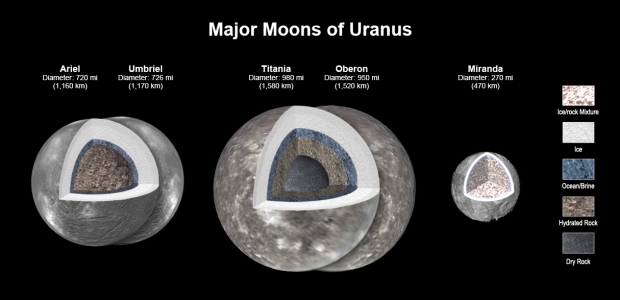

These moons are indeed small objects, and while Uranus has 27 moons, it is only when we reach the size of Ariel (1160 kilometers) that we can start talking realistically about interior oceans. Titania is the largest of these at 1580 kilometers. The paper argues that of the five largest moons, we can exclude Miranda (470 kilometers) as being too small to sustain the heat to support an internal ocean. But the other four appear promising, revising and contradicting earlier work that had focused primarily on Titania and Oberon in the belief that Ariel, Umbriel, and Miranda would be frozen at present.

Image: New modeling shows that there likely is an ocean layer in four of Uranus’ major moons: Ariel, Umbriel, Titania, and Oberon. Salty – or briny – oceans lie under the ice and atop layers of water-rich rock and dry rock. Miranda is too small to retain enough heat for an ocean layer. Credit: NASA/JPL-Caltech.

Of the large Uranian moons, Ariel may emerge as the best possibility. From the paper:

Ariel is particularly interesting as a future mission target because of the potential detection of NH3-bearing species on its surface (Cartwright et al., 2021) that could be evidence of recent cryovolcanic activity, considering these species should degrade on a geologically short timescale. Geologic features, visible in Voyager 2 Imaging Science Subsystem images of Ariel, show some evidence for cryovolcanism in the form of double ridges and lobate features that may represent emplaced cryolava (Beddingfield & Cartwright, 2021).

But oceans tens of kilometers deep at Titania and Oberon may yet excite astrobiological interest, depending on what we learn about heat sources here.

Based on current understanding, we conclude that the Uranian moons are more likely to host residual or “relict” oceans than thick oceans. As such, they may be representative of many icy bodies, including Ceres, Callisto, Pluto, and Charon (De Sanctis et al., 2020). The detection and characterization (depth and thickness) of deep oceans inside the Uranian moons… and refined constraint on surface ages would help assess whether these bodies still hold residual heat from a recent resonance crossing event and/or are undergoing tidal heating due to dynamical circumstances that are currently unknown (as was the case at Enceladus before the Cassini mission).

The Uranus Orbiter and Probe mission holds great allure for answering some of these questions. The issue of detection by a spacecraft is still charged, however. The authors note from the outset that an ocean maintained primarily by ammonia would be well below the water freezing point, in which case its electrical conductivity might be too low to register on the UOP’s sensors. In other words, ammonia essentially acts as an antifreeze, with electrical conductivity near zero. Temperatures below ~245 K would mean an ocean would have to be detected by the exposure of deep ocean material, in which case we come back to Ariel as the most likely target for the closest scrutiny.

The paper is Castillo-Rogez et al., “Compositions and Interior Structures of the Large Moons of Uranus and Implications for Future Spacecraft Observations,” JGR Planets Vol. 128, Issue 1 (January 2023). Abstract.