Centauri Dreams

Imagining and Planning Interstellar Exploration

Re-thinking the Early Universe?

I hadn’t intended to return so quickly to the issue of high-redshift galaxies, but SPT0418-47 jibes nicely with last week’s piece on 13.5 billion year old galaxies as studied by Penn State’s Joel Leja and colleagues. In that case, the issue was the apparent maturity of these objects at such an early age in the universe.

Today’s work, reported in a paper in The Astrophysical Journal Letters, comes from a team led by Bo Peng at Cornell University. It too uses JWST data, in this case targeting a previously unseen galaxy the instrument picked out of the foreground light of galaxy SPT0418-47. In both cases, we’re seeing data that challenge conventional understanding of conditions in this remote era. This is evidence, but of what? Are we wrong about the basics of galaxy formation? Do we need to recalibrate the models we use to understand astrophysics at high-redshift?

SPT0418-47 is the galaxy JWST was being used to study, an intriguing subject in its own right. This is an infant galaxy still forming stars in the early universe, observable through the bending of its light by a foreground galaxy to form an Einstein ring. In other words, we’re seeing gravitational lensing at work here, magnifying the young galaxy’s light, out of which information can be extracted about the primordial object. And within that light, astronomers have now found a second galaxy which manifested itself in two places in the ring.

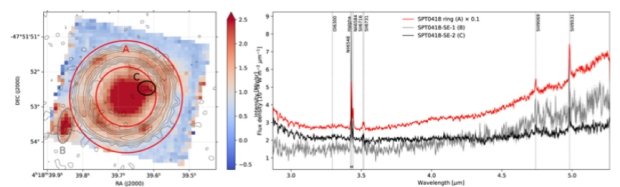

Image: This is Figure 1 from the paper. Caption: Figure 1. Left: Hα pseudo-narrowband image of the SPT0418 system, averaged over the channels including the Hα emission in the original spectral cube. The strongly lensed ring and the two newly discovered sources (SE-1 and SE-2) are highlighted by a red annulus and gray and black ellipses, marked as “A,” “B,” and “C,” respectively. The lensing galaxy is shown as the central bright source. The 835 μm continuum is plotted as the thin black contours, with the levels 2, 4, 8, 16, 32 × σ where σ = 56.7 μJy beam⁻¹. Right: the spectra of the three sources integrated over the regions highlighted in the left panel, using the same color scheme. The spectrum for the ring is scaled by a factor of 0.1 for clarity. The small black bar below the Hα line marks the wavelength coverage of the pseudo-narrowband image. The potentially detected lines are marked by vertical dotted lines. Credit: The Astrophysical Journal Letters (2023). DOI: 10.3847/2041-8213/acb59c.

ALMA (the Atacama Large Millimeter/submillimeter Array) data could do no more than hint at the background galaxy’s existence, but working with spectral data from JWST’s NIRSpec instrument, Peng discovered the new light source within the Einstein ring. The unexpected find was a galaxy being gravitationally lensed by the same foreground galaxy that had made SPT0418-47 available for study, though considerably fainter.

What stands out here is the analysis of the chemical composition of the new galaxy’s light, which shows strong emission lines from hydrogen, nitrogen and sulfur atoms. Their redshift indicates that we are seeing the object as it was when the universe was about 10 percent of its current age. The new galaxy, dubbed SPT0418-SE, appears to be close enough to SPT0418-47 that the two galaxies will interact with each other, making the duo a case study for galactic mergers. All of which is helpful, but here again we run into a fascinating problem. The newly discovered galaxy shows levels of metallicity comparable to our Sun.

It’s a conundrum. The Sun drew on earlier stellar generations to build up elements heavier than helium and hydrogen, and the Sun is roughly 4.6 billion years old. Amit Vishwas (Cornell Center for Astrophysics and Planetary Sciences) is second author on the paper:

“We are seeing the leftovers of at least a couple of generations of stars having lived and died within the first billion years of the universe’s existence, which is not what we typically see. We speculate that the process of forming stars in these galaxies must have been very efficient and started very early in the universe, particularly to explain the measured abundance of nitrogen relative to oxygen, as this ratio is a reliable measure of how many generations of stars have lived and died.”

But let’s turn back a minute, for we’re looking at two early galaxies, and it’s intriguing that SPT0418-47, the first of these, shows its own anomalies. Data from ALMA allow astronomers to see that although we observe it as it was some 12 billion years ago, this object has a more mature structure than would be expected. No spiral arms are apparent, but a rotating disk and bulge are found, with stars packed tightly around the galactic center. Simona Vegetti (Max Planck Institute for Astrophysics), co-author on the 2020 paper on SPT0418-47 (citation below), had this to say three years ago:

“What we found was quite puzzling; despite forming stars at a high rate, and therefore being the site of highly energetic processes, SPT0418-47 is the most well-ordered galaxy disc ever observed in the early Universe. This result is quite unexpected and has important implications for how we think galaxies evolve.”

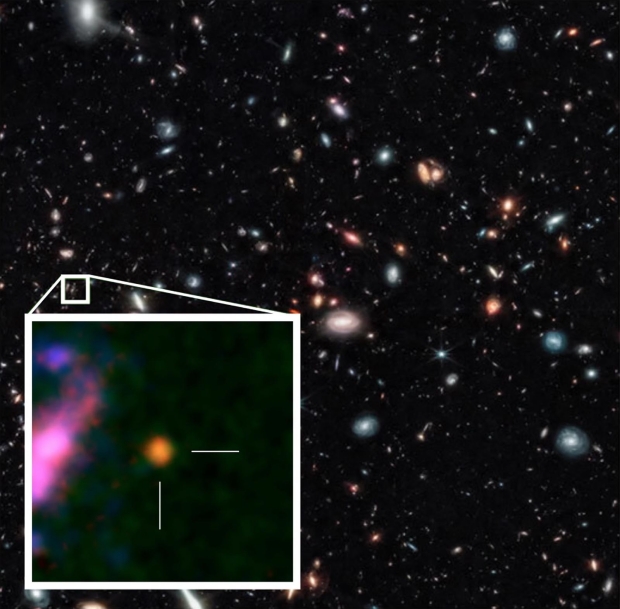

Image: Astronomers using the Atacama Large Millimeter/submillimeter Array (ALMA), in which the European Southern Observatory (ESO) is a partner, have revealed an extremely distant and therefore very young galaxy that looks surprisingly like our Milky Way. The galaxy is so far away its light has taken more than 12 billion years to reach us: we see it as it was when the Universe was just 1.4 billion years old. It is also surprisingly unchaotic, contradicting theories that all galaxies in the early Universe were turbulent and unstable. This unexpected discovery challenges our understanding of how galaxies form, giving new insights into the past of our Universe. Credit: Rizzo et al./European Southern Observatory.
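The link between redshift and cosmic age that runs through this post can be checked with a few lines of arithmetic. What follows is a minimal sketch, not the method used in either paper: it assumes a flat universe containing only matter and a cosmological constant, and the parameter values (H0 = 67.7 km/s/Mpc, matter density 0.31) are illustrative choices of mine rather than figures from the post. The function name `age_at_redshift` is likewise hypothetical.

```python
import math

# Assumed flat Lambda-CDM parameters (illustrative, not taken from the papers).
H0_KM_S_MPC = 67.7          # Hubble constant in km/s/Mpc
OMEGA_M = 0.31              # matter density parameter
OMEGA_L = 1.0 - OMEGA_M     # dark energy density parameter (flat universe)

KM_PER_MPC = 3.0857e19      # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16


def age_at_redshift(z):
    """Age of the universe in Gyr at redshift z, neglecting radiation."""
    hubble_time_gyr = KM_PER_MPC / H0_KM_S_MPC / SECONDS_PER_GYR
    x = math.sqrt(OMEGA_L / OMEGA_M) * (1.0 + z) ** -1.5
    return (2.0 / (3.0 * math.sqrt(OMEGA_L))) * hubble_time_gyr * math.asinh(x)


if __name__ == "__main__":
    today = age_at_redshift(0.0)
    for z in (4.22, 12.0):
        age = age_at_redshift(z)
        print(f"z = {z}: {age:.2f} Gyr ({100.0 * age / today:.0f}% of today's age)")
```

Under these assumptions a redshift slightly above 4 corresponds to an age of about 1.4 billion years, roughly a tenth of the present age, which is consistent with the figures quoted in the caption above. A redshift near 12 lands within a few million years of the 367 million years cited for GHZ2/GLASS-z12.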

So the new galaxy, SPT0418-SE, adds to earlier evidence that the early universe was considerably less chaotic than we once thought. The new paper summarizes the issue with reference to the unexpectedly strong emission lines found in the data:

This spectroscopic study of a z > 4 galaxy opens up many questions, including the spatial arrangement and stellar/gas/metallicity distribution of the companion; the merging hypothesis of SPT0418-47; the dark-matter halo of the system; the overdensity of this potentially crowded field; reconciling the relatively high chemical abundances with the short formation time and the moderate stellar mass for the whole system; and interpreting the small [N ii] 122 and 205 μm luminosities in the context of either a soft radiation field and/or a high N/O.

But again that note of high-redshift caution that I mentioned last week:

We attempt to reconcile the high metallicity in this system by invoking early onset of star formation with continuous high star-forming efficiency or by suggesting that optical strong line diagnostics need revision at high redshift. We suggest that SPT0418-47 resides in a massive dark-matter halo with yet-to-be-discovered neighbors.

Clearly scientists will be looking hard at how high-redshift targets are interpreted even as they continue to hypothesize about astrophysical mechanisms and star formation efficiency to explain seemingly mature objects at this early era. The game is afoot, as Sherlock Holmes used to say, and we’re a long way from reaching firm conclusions. The data are going to start coming fast and furious as we keep mining JWST and using ALMA to examine the universe in this early stage, as witness the image below, which I found just this morning. It shows us another remarkable object.

Image: The radio telescope array ALMA has pin-pointed the exact cosmic age of a distant JWST-identified galaxy, GHZ2/GLASS-z12, at 367 million years after the Big Bang. ALMA’s deep spectroscopic observations revealed a spectral emission line associated with ionized Oxygen near the galaxy, which has been shifted in its observed frequency due to the expansion of the Universe since the line was emitted. This observation confirms that the JWST is able to look out to record distances, and heralds a leap in our ability to understand the formation of the earliest galaxies in the Universe. Credit: NASA / ESA / CSA / T. Treu, UCLA / NAOJ / T. Bakx, Nagoya U.

The paper is Bo Peng et al., “Discovery of a Dusty, Chemically Mature Companion to a z ∼ 4 Starburst Galaxy in JWST ERS Data,” The Astrophysical Journal Letters 944 No. 2 L36 (17 February 2023). Full text. The paper on SPT0418-47 is Rizzo et al., “A dynamically cold disk galaxy in the early Universe,” Nature 584 (12 August 2020), pp. 201–204. Abstract. The GHZ2/GLASS-z12 paper is Bakx et al., “Deep ALMA redshift search of a z ∼ 12 GLASS-JWST galaxy candidate,” Monthly Notices of the Royal Astronomical Society Volume 519, Issue 4 (March 2023), pp. 5076–5085 (abstract).

High Redshift Caution

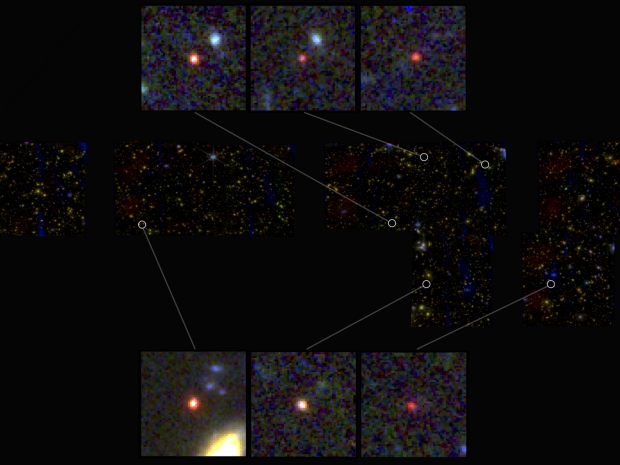

When something turns up in astronomical data that contradicts long accepted theory, the way forward is to proceed with caution, keep taking data and try to resolve the tension with older models. That would of course include considering the possibilities of error somewhere in the observations. All that is obvious enough, but a new paper on JWST data on high-redshift galaxies is striking in its implications. Researchers examining this primordial era have found six galaxies, from no more than 500 to 700 million years after the Big Bang, that give the appearance of being massive.

We’re looking at light from objects 13.5 billion years old that should be anything but mature, if compact, galaxies. That’s a surprise, and it’s fascinating to see the scrutiny to which these findings have been exposed. The editors of Nature have helpfully made available a peer review file containing back and forth comments between the authors and reviewers that give a jeweler’s eye look at how intricate the taking of high-redshift measurements can be. Reading this material offers an inside look at how the scientific community tests and refines its results en route to what may need to be a modification of previous models.

It’s the availability of that peer review file that, as much as the findings themselves, occasions this post, as it offers laymen like myself a chance to see the scientific publication process at work. That cannot be anything but salutary in an era when complicated ideas are routinely pared into often misleading news headlines.

Image: Images of six candidate massive galaxies, seen 500-700 million years after the Big Bang. One of the sources (bottom left) could contain as many stars as our present-day Milky Way, according to researchers, but it is 30 times more compact. Credit: NASA, ESA, CSA, I. Labbe (Swinburne University of Technology). Image processing: G. Brammer (Niels Bohr Institute’s Cosmic Dawn Center at the University of Copenhagen). All Rights Reserved.

One note of caution emerges in the abstract to this work: “If verified with spectroscopy, the stellar mass density in massive galaxies would be much higher than anticipated from previous studies based on rest-frame ultraviolet-selected samples.”

That’s a pointer to what seems to be needed next. Penn State’s Joel Leja modeled the light from these objects, and I like the openness to alternative explanations that he injects here:

“This is our first glimpse back this far, so it’s important that we keep an open mind about what we are seeing. While the data indicates they are likely galaxies, I think there is a real possibility that a few of these objects turn out to be obscured supermassive black holes. Regardless, the amount of mass we discovered means that the known mass in stars at this period of our universe is up to 100 times greater than we had previously thought. Even if we cut the sample in half, this is still an astounding change.”

So the question of mass looms just as large as the formation process even if these do not turn out to be galaxies. No wonder Leja says the research team has been calling the six objects ‘universe breakers.’ On the one hand, the question of mass gets into fundamental issues of cosmology and the models that have long served astronomers. If galaxies actually form at this level at such an early time in the universe, then the mechanisms of galaxy formation demand renewed scrutiny. Leja is suggesting that a spectrum be produced for each of the new objects that can confirm the accuracy of our distance measurements, and also demonstrate what these ‘galaxies’ are made up of.

The paper on this remarkable finding itself continues to evolve. It’s Labbé et al., “A population of red candidate massive galaxies ~600 Myr after the Big Bang.” Nature 22 February 2023 (abstract). Note this editorial comment from the abstract page: “We are providing an unedited version of this manuscript to give early access to its findings. Before final publication, the manuscript will undergo further editing.” I’d like to read that final edit before commenting any further.

How Common Are Planets Around Red Dwarf Stars?

We’re beginning to learn how common planets are around stars of various types, but M-dwarfs get special attention given their role in future astrobiological studies. As I’ve just been talking about CARMENES, the Calar Alto high-Resolution search for M dwarfs with Exoearths with Near-infrared and optical Échelle Spectrographs program, I’ll fold in today’s news about their release of 20,000 observations covering more than 300 stars, for we can mine some data here about planet occurrence rates.

59 new planets turn up in the spectroscopic data gathered at the Calar Alto Observatory in Spain, with about 12 thought to be in the habitable zone of their star. I’ll await with interest our friend Andrew LePage’s assessment. His habitable zone examinations serve as a highly useful reality check.

I mentioned spectrographic data above. The CARMENES instruments are built for optical as well as near-infrared studies, and have been used to explore nearby red dwarfs and their possible planets since 2015. The original project ended at the end of 2020, covering the data released here, although later observations are continuing, so we can expect further releases. Remember, this is radial velocity rather than transit work. CARMENES offers an accuracy of about 1 meter per second, meaning astronomers can measure the tiny motions induced by an orbiting planet with that degree of sensitivity. At this level, low-mass stars yield up Earth-sized planets.

The new CARMENES planets appear in the graphic below, drawn from publication of the project’s first large dataset as published in Astronomy & Astrophysics. 19,633 spectra for a sample of 362 targets were collected. The current paper covers only the visible-light data, with the infrared data to be made public when processing is complete. CARMENES has now observed about half of all nearby red dwarfs, limited of course by the fact that its location in Spain precludes southern hemisphere observations. A bit more on the original 362 target stars:

The sample was designed to be as complete as possible by including M dwarfs observable from the Calar Alto Observatory with no selection criteria other than brightness limits and visual binarity restrictions. To best exploit the capabilities of the instrument, variable brightness cuts were applied as a function of spectral type to increase the presence of late-type targets. This effectively leads to a sample that does not deviate significantly from a volume-limited one for each spectral type. The global completeness of the sample is 15% of all known M dwarfs out to a distance of 20 pc and 48% at 10 pc.

Image: An animated illustration of the CARMENES planets. All planets discovered with the same method as CARMENES, but with other instruments, are shown as grey dots. With the data collected in the period 2016-2020, CARMENES has discovered and confirmed 6 Jupiter-like planets (with masses more than 50 times that of the Earth), 10 Neptunes (10 to 50 Earth masses) and 43 Earths and super-Earths (up to 10 Earth masses). The vertical axis indicates what star type the planets orbit around, from the coolest and smallest red dwarfs to brighter and hotter stars (the Sun would correspond to the second from the top). The horizontal axis depicts the distance from the planet to the star by showing the time it takes to complete the orbit. Planets in the habitable zone (blue-shaded area) can harbor liquid water on their surface. Credit: © Institut d’Estudis Espacials de Catalunya (IEEC).

As to the title of today’s post, the authors have calculated new planet occurrence rates from a subset of the overall sample, with low-mass planets tagged at 1.06 planets per star in periods of 1 day to 100 days, “and an overabundance of short-period planets around the lowest-mass stars of our sample compared to stars with higher masses.” Nearly every M-dwarf, in other words, appears to host at least one planet. The long-period giant planet occurrence rate comes in at 3%.

CARMENES Legacy Plus, which continues the original project, began in 2021 and continues to observe the same stars at least until the end of this year. Juan Carlos Morales is a researcher at IEEC (Institut d’Estudis Espacials de Catalunya):

“In order to determine the existence of planets around a star, we observe it a minimum of 50 times. Although the first round of data have already been published to grant access to the scientific community, the observations are still ongoing.”

The paper is Ribas et al., “The CARMENES search for exoplanets around M dwarfs Guaranteed time observations Data Release 1 (2016-2020),” Astronomy & Astrophysics Vol. 670, A139 (22 February 2023). Full text.

Uranus Orbiter and Probe: Implications for Icy Moons

What do you get if you shake ice in a container with centimeter-wide stainless steel balls at a temperature of –200 °C? The answer is a kind of ice with implications for the outer Solar System. I just ran across an article in Science (citation below) that describes the resulting powder, a form of ‘amorphous ice,’ meaning ice that lacks the familiar crystalline arrangement of regular ice. There is no regularity here, no ordered structure. The two previously discovered types of amorphous ice – varying by their density – are uncommon on Earth but an apparently standard constituent of comets.

The new medium-density amorphous ice may well be produced on outer system moons, created through the shearing process that the researchers, led by Alexander Rosu-Finsen at University College London, produced in their lab work. There is a good overview of this water ‘frozen in time’ in a recent issue of Nature. The article quotes Christoph Salzmann (UCL), a co-author on the Science paper:

The team used a ball mill, a tool normally used to grind or blend materials in mineral processing, to grind down crystallized ice. Using a container with metal balls inside, they shook a small amount of ice about 20 times per second. The metal balls produced a ‘shear force’ on the ice, says Salzmann, breaking it down into a white powder.

Firing X-rays at the powder and measuring them as they bounced off — a process known as X-ray diffraction — allowed the team to work out its structure. The ice had a molecular density similar to that of liquid water, with no apparent ordered structure to the molecules — meaning that crystallinity was “destroyed”, says Salzmann. “You’re looking at a very disordered material.”

Disruptions in icy surfaces caused by the process would have implications for the interface between ice and liquid water that is presumed to exist on moons like Europa and Enceladus. The surface might be given to disruptions that would expose ocean beneath.

What goes on in the icy moons of the outer system is always of interest, especially given the astrobiological possibilities, and it was probably the thought of an ice giant orbiter at Uranus that triggered my interest in the amorphous ice issue. Kathleen Mandt (Johns Hopkins University Applied Physics Laboratory) just wrote the former up in Science as well, noting how little we’ve learned since the solitary Voyager flyby of the planet in 1986. In addition to planetary structure and atmosphere, we could do with a lot more information about its moons and their possible liquid water oceans.

Image: This 2006 image taken by the Hubble Space Telescope shows bands and a new dark spot in Uranus’ atmosphere. Credit: NASA/Space Telescope Science Institute.

The planetary science decadal survey released in 2022, called Origins, Worlds, and Life, which reviewed over 500 white papers and 300 presentations over the course of its 176 meetings, flagged the need for such a mission in the coming decade, a planetary flagship mission as a next step forward after Europa Clipper. I don’t want to downplay the role of such a mission in deepening our understanding of ice giant formation and migration, not to mention the Uranian atmosphere, but the moon system here has proven an extreme challenge for observers. Its study becomes a major driver for the Uranus Orbiter and Probe (UOP) mission:

The system’s extreme obliquity…limits visibility of the moons to one hemisphere during southern and northern summers. Voyager 2 could only image the moons’ southern hemispheres, but what was seen was unexpected. The five largest moons, predicted to be cold dead worlds, all showed evidence of recent resurfacing, suggesting that geologic activity might be ongoing. One or more of these moons could have potentially habitable liquid water oceans under an ice shell, making them “ocean worlds.” Ariel, the most extensively resurfaced moon, is a strong ocean worlds candidate along with the two largest moons, Titania and Oberon… UOP will image and measure the composition of the full surfaces of the moons to search for ongoing geologic activity, and measure whether magnetic fields vary in their interiors owing to the presence of liquid water.

Miranda, Ariel, Umbriel, Titania and Oberon, the five largest moons of Uranus, could all contain subsurface oceans (and I don’t want to leave out of the UOP story the fact that it would carry a Uranus atmospheric probe designed to reach a depth of at least 1 bar in pressure, a fascinating investigation in itself). The decadal survey has recommended a launch by 2032 to take advantage of a Jupiter gravity assist that would allow arrival before the northern autumn equinox in 2050 for better study of the moon system. “The space science community has waited more than 30 years to explore the ice giants,” writes Mandt, “and missions to them will benefit many generations to come.”

The first of today’s papers is Rosu-Finsen et al., “Medium-density amorphous ice,” Science Vol. 379, No. 6631 (2 February 2023), pp. 474-478 (abstract). The Mandt article is “The first dedicated ice giants mission,” Science Vol. 379, No. 6633 (16 February 2023), pp. 640-642 (full text).

Wolf 1069b: Why System Architecture Matters

Let’s look at a second red dwarf planet in this small series on such, this one being Wolf 1069b. I want to mention it partly because of the prior post on K2-415b, where we had the good fortune to be dealing with a transiting world around an M-dwarf that should be useful in future atmospheric characterization efforts. Wolf 1069b, by contrast, was found by radial velocity methods, and I’m less interested in whether or not it’s in a ‘habitable’ orbit than in the system architecture here, which raises questions.

This work, recounted in a recent paper in Astronomy & Astrophysics, describes a planet that is not just Earth-sized, as is K2-415b, but roughly equivalent to Earth in mass, making a future search for biosignatures interesting once we have the capability of collecting photons directly from the planet. If the planet has an atmosphere, argue the authors of the paper, its surface temperature could reach 13 degrees Celsius, certainly a comfortable temperature for liquid water. A putative atmosphere would also shield the world from harmful radiation from the host star, although Wolf 1069 appears so far to be an unusually quiescent M-dwarf.

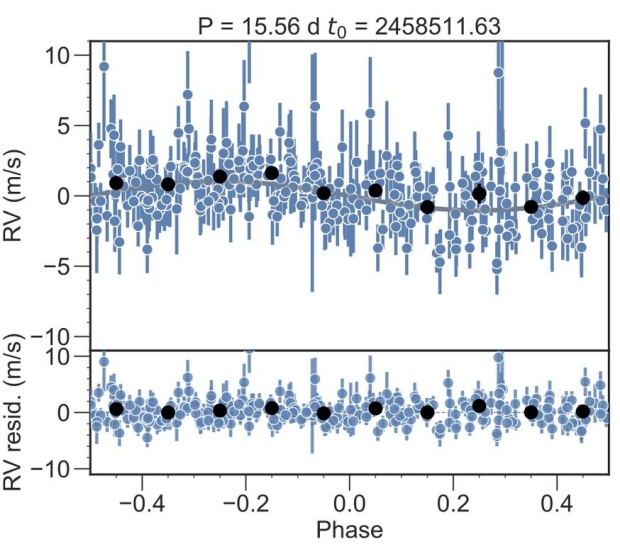

In fact, the lack of distorting surface activity on the star makes possible a high degree of accuracy in the radial velocity measurements here. The data, pulled in by one of the two CARMENES spectrographs, were taken by Diana Kossakowski (Max Planck Institute for Astronomy in Heidelberg), who is lead author of the paper on this work, and colleagues. The CARMENES instruments operate with the 3.5-metre telescope of the Calar Alto Observatory near Almería in southern Spain, and Kossakowski and team have been working the numbers on Wolf 1069 for the past four years.

Image: The figure shows measurements of the velocities at which the star Wolf 1069 moves towards or away from us, relative to the mean. The measuring points were arranged in such a way that they depict the orbital period of the planet. This shows the tiny but significant variation in motion caused by the planet 1.3 times the mass of Earth orbiting in 15.56 days, and is illustrated by the gray line with the black dots. Credit: © D. Kossakowski et al. (A&A 2023).
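To get a feel for why a quiet, very low-mass star matters so much for this kind of detection, the size of the stellar wobble can be estimated from the standard radial velocity semi-amplitude relation. This is a rough sketch rather than the authors' analysis: it assumes a circular orbit seen edge-on (sin i = 1), takes the 1.3 Earth-mass and 15.56 day figures from the caption above and the 0.167 solar-mass stellar value quoted from the paper further on, and uses a hypothetical function name, `rv_semi_amplitude`.

```python
import math

G = 6.67430e-11       # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.98847e30    # solar mass, kg
M_EARTH = 5.9722e24   # Earth mass, kg
DAY = 86400.0         # seconds per day


def rv_semi_amplitude(m_planet_earth, m_star_sun, period_days, ecc=0.0, sin_i=1.0):
    """Stellar radial velocity semi-amplitude K in m/s.

    Assumption: sin_i defaults to 1 (edge-on orbit), so the result
    corresponds to the minimum planet mass.
    """
    m_p = m_planet_earth * M_EARTH
    m_s = m_star_sun * M_SUN
    period = period_days * DAY
    return ((2.0 * math.pi * G / period) ** (1.0 / 3.0)
            * m_p * sin_i
            / (m_s + m_p) ** (2.0 / 3.0)
            / math.sqrt(1.0 - ecc ** 2))


if __name__ == "__main__":
    # Earth around the Sun, for comparison.
    print(f"Earth-Sun:   {rv_semi_amplitude(1.0, 1.0, 365.25):.3f} m/s")
    # Wolf 1069b-like case using the values quoted in the post.
    print(f"Wolf 1069b:  {rv_semi_amplitude(1.3, 0.167, 15.56):.2f} m/s")
```

With these inputs the Wolf 1069b signal comes out at around 1 meter per second, right at the sensitivity the CARMENES spectrographs are credited with, while an Earth twin around a Sun-like star would produce a wobble roughly ten times smaller.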

CARMENES is itself a research consortium (the Calar Alto high-Resolution search for M dwarfs with Exoearths with Near-infrared and optical Échelle Spectrographs program). The eleven German and Spanish institutions involved are focusing on Earth-like exoplanets near M-dwarfs, in other words, and I think we can expect the first doubtlessly controversial findings related to biomarkers will emerge on such worlds.

Wolf 1069b is on a 15.6 day orbit around an M-dwarf about 30 light years away in Cygnus. That distance is, of course, intriguing as we build the catalog for nearby worlds for future study of biomarkers or, one day, probes; the planet counts as the sixth-closest Earth mass world in a habitable zone orbit (the others are Proxima Centauri b, GJ 1061 d, Teegarden’s Star c, and GJ 1002 b and c). Keep in mind that only 1.5 percent of all the more than 5000 exoplanets yet detected have masses below two Earth masses – K2-415b, for all its interest, evidently weighs in at three.

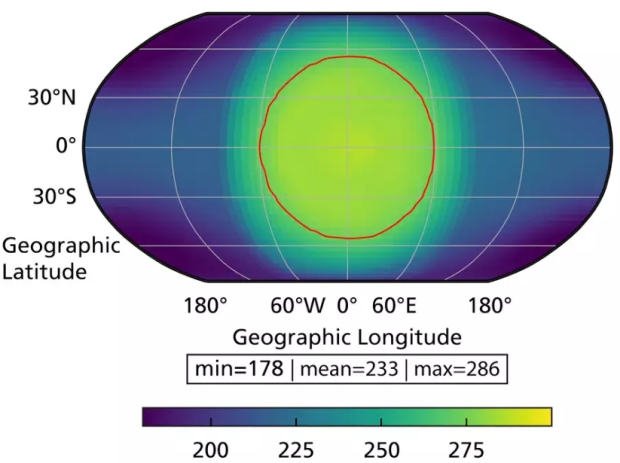

Tidal lock is likely, though perhaps not a show-stopper for life, especially if the early indications of Wolf 1069’s low levels of activity are borne out by future observation, and if an atmosphere is indeed present (without one, the authors estimate, the surface temperature would be 250 K, or -23 °C, as opposed to the +13 °C mentioned above). So that interesting scenario of daylight (or night) that goes on forever emerges here.

Image: Simulated surface temperature map of Wolf 1069 b, assuming a modern Earth-like atmosphere. The map is centered at a point that always faces the central star. The temperatures are given in Kelvin (K). 273.15 K corresponds to 0 °C. Liquid water would be possible on the planet’s surface inside the red line. Credit: © Kossakowski et al. (2023) / MPIA.

But it’s something that Max Planck Institute for Astronomy scientist Remo Burn said that catches my eye:

“Our computer simulations show that about 5% of all evolving planetary systems around low-mass stars, such as Wolf 1069, end up with a single detectable planet. The simulations also reveal a stage of violent encounters with planetary embryos during the construction of the planetary system, leading to occasional catastrophic impacts.”

That’s a noteworthy thought, for such impacts could generate a planetary core that remains liquid today, resulting in a global magnetic field that would offer further shielding effects from stellar activity. The question would be whether Wolf 1069b really is alone, and on this the results are simply not in. What the researchers have been able to do is to exclude additional planets of Earth mass or more and orbital periods of less than 10 days. What they cannot do yet is rule out planets on wider orbits.

If alone around its star, Wolf 1069b is the only one of the six Earth-mass planets in habitable zones nearest to Earth that is found without an inner planet keeping it company. Note that the mass of Wolf 1069 is 0.167±0.011 solar masses. And now let’s turn to the paper:

This notion is supported by the works of Burn et al. (2021), Mulders et al. (2021), and Schlecker et al. (2021), where we expect a lower planet occurrence rate for stars with M* < 0.2 M☉ than for stars with 0.2 M☉ < M* < 0.5 M☉ for both the pebble and core accretion scenarios.

The authors run this out on a rather lengthy speculative thread:

Granted, these are theoretical predictions as more observation-based evidence is required to confirm this, and Wolf 1069b could still be accompanied by closer-in and outer planets. Nevertheless, the concept that only one planet survives is predicted by formation models if there were at least one giant impact at the late stage. This would enhance the chance of having a massive moon similar to the Earth and might also stir up the interior of the planet to prevent stratification and sustain a magnetic field (e.g., Jacobson et al. 2017). As remote as this appears, the search for exo-moons is no longer so far-fetched in recent times (e.g., Martínez-Rodríguez et al. 2019; Dobos et al. 2022).

In the absence of data on these matters, speculation is welcome, but I can only imagine that when we get the right instrumentation online to make direct observations of planets like Wolf 1069b, we’re going to find more than our share of surprises. Whether or not an exo-moon hinting at an impact hinting at a magnetic field is one of them remains to be seen. A lot of ‘ifs’ creep into discussions of ‘habitable’ worlds. Would a tidally locked red dwarf planet look something like the speculation we see below?

Image: Artist’s conception of a rocky Earth-mass exoplanet like Wolf 1069 b orbiting a red dwarf star. If the planet had retained its atmosphere, chances are high that it would feature liquid water and habitable conditions over a wide area of its dayside. Credit: © NASA/Ames Research Center/Daniel Rutter.

The paper is Kossakowski et al., “The CARMENES search for exoplanets around M dwarfs Wolf 1069 b: Earth-mass planet in the habitable zone of a nearby, very low-mass star,” Astronomy & Astrophysics Vol. 670, A84 (10 February 2023). Full text.

The Relevance of K2-415b

I want to mention the recent confirmation of K2-415b because this world falls into an interesting category: Planets with major implications for studying their atmospheres. Orbiting an M5V M-dwarf every 4.018 days at a distance of 0.027 AU, this is not a planet with any likelihood for life. Far from it, given an equilibrium temperature expected to be in the range of 400 K (the equivalent figure for Earth is 255 K). And although it’s roughly Earth-sized, K2-415b turns out to be at least three times more massive.
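The equilibrium temperatures quoted here follow from a simple balance between absorbed starlight and emitted thermal radiation. As a hedged illustration, the sketch below reproduces the 255 K figure for Earth and asks what insolation a 400 K equilibrium temperature implies. The solar constant (1361 W/m²), the Bond albedo of 0.3, and the function names are my assumptions; the post does not say what albedo the paper adopts for K2-415b.

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_CONSTANT = 1361.0  # assumed flux at Earth's orbit, W m^-2


def equilibrium_temperature(insolation_earth, bond_albedo=0.3):
    """Equilibrium temperature in K for a planet receiving the given
    insolation (in units of Earth's), assuming uniform heat redistribution."""
    absorbed = insolation_earth * SOLAR_CONSTANT * (1.0 - bond_albedo) / 4.0
    return (absorbed / SIGMA) ** 0.25


def insolation_for_temperature(t_eq, bond_albedo=0.3):
    """Insolation (in units of Earth's) needed to reach a given
    equilibrium temperature, the inverse of the function above."""
    return 4.0 * SIGMA * t_eq ** 4 / (SOLAR_CONSTANT * (1.0 - bond_albedo))


if __name__ == "__main__":
    print(f"Earth: {equilibrium_temperature(1.0):.0f} K")
    for albedo in (0.3, 0.0):
        s = insolation_for_temperature(400.0, bond_albedo=albedo)
        print(f"400 K with albedo {albedo}: {s:.1f} times Earth's insolation")
```

Because temperature scales only as the fourth root of the incoming flux, a 400 K world needs several times the starlight Earth receives: about six times under an Earth-like albedo, or a little over four times if the planet reflects nothing.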

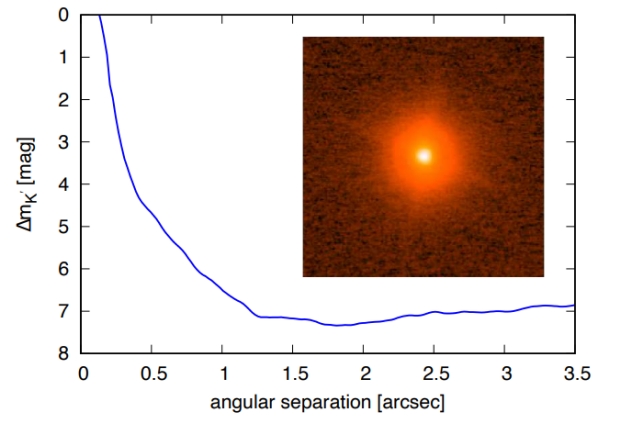

What this planet has going for it, though, is that it transits a low mass star, and at 70 light years, it’s close. Consider: If we want to take advantage of transmission spectroscopy to study light being filtered through the planetary atmosphere during ingress and egress from the transit, nearby M-dwarf systems make ideal targets. Their habitable zones are close in, so we get frequent transits around small stars. But the number of Earth-sized transiting worlds around nearby examples of such stars is small, totalling 14 in eight different systems (limiting the range to 30 pc, or roughly 100 light years). That includes the high-priority seven around TRAPPIST-1.

Image: This is Figure 3 from the paper. Caption: Sensitivity plot (5σ contrast curve) for K2-415 in the K′ band based on the combined IRCS image. The inset shows the zoomed image of the target with a FoV of 4″ × 4″. Credit: Hirano et al.

As we move into ever deepening searches for atmospheric biomarkers, worlds like these are going to be in the vanguard, fodder for the emerging class of 30-meter telescopes and, of course, space-based observatories like the James Webb Space Telescope. We’re going to get to know this small group of worlds well as this decade continues, which will also greatly assist us in understanding how M-dwarf planets evolve. When it comes to atmospheres around small red stars, there are no guarantees.

After all, these stars especially in their youth are known to be intense emitters of extreme ultraviolet and X-ray radiation, with striking levels of flare activity. Can an atmosphere survive this bombardment, or an intense stellar wind? Will these planets evolve secondary atmospheres through surface outgassing from volcanoes and interactions with magma? Doubtless we’ll find examples of various scenarios, which should deepen our knowledge of how the atmospheres of habitable worlds emerge.

It will also be interesting to see if the apparent and recently noted (in TESS data) lack of detected planets around the lowest mass stars will be supported by later datasets. K2-415b forces that question, in that it orbits one of the lowest mass stars hosting an Earth-sized planet. Counting planets of any size, only ten transiting planet-hosting stars are cooler than K2-415. One of these is TRAPPIST-1.

K2, the extended Kepler mission, observed K2-415b, an unusually close system at 70 light years given that of the original Kepler target stars, fewer than one percent were closer than 600 light years (we have much to thank K2 for as it moved out of the original Kepler field, a classic case of making a virtue out of necessity). The follow-up campaign described in today’s paper validates the planet. And the authors note that future radial velocity studies will be on the lookout for additional planets in this system.

This too could get interesting. Lead author Teruyuki Hirano (Graduate University for Advanced Studies, Japan) and colleagues explain:

K2-415b is located slightly inner of the classical habitable zone (Kopparapu et al. 2016) based on the insolation flux onto the planet, but an outer planet, if any, in the system with a slightly longer period (e.g., 10–15 days) could sit inside the habitable zone. Little is known on the properties of multi-planet systems around the lowest mass stars (< 0.3 M☉), but assuming that their properties are similar to those in the “Kepler-multi” systems, the planets could have a typical spacing of ∼20 mutual Hill radii (Weiss et al. 2018). Recently, Hoshino & Kokubo (2023) also showed that the typical orbital spacing of planets formed by giant impacts is ∼20 mutual Hill radii independently of stellar masses through N-body simulations. Thus, it is quite possible that a secondary planet having ∼1 M⊕ has an orbital period of 10–15 days.
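The spacing argument in that passage can be turned into a quick estimate. The sketch below is my own back-of-the-envelope version, not the authors' calculation. It takes the 4.018 day period and 0.027 AU distance given earlier in the post, infers the stellar mass from Kepler's third law, and then asks where a neighbor would sit if separated by 20 mutual Hill radii. The inner planet mass of 3 Earth masses (the post's "at least three times more massive") and the 1 Earth-mass outer planet are assumed inputs, and all function names are hypothetical.

```python
M_EARTH_IN_SUN = 3.0035e-6   # Earth mass in solar masses
DAYS_PER_YEAR = 365.25


def stellar_mass_from_orbit(a_au, period_days):
    """Stellar mass in solar masses from Kepler's third law
    (planet mass neglected)."""
    period_yr = period_days / DAYS_PER_YEAR
    return a_au ** 3 / period_yr ** 2


def outer_planet_at_spacing(a_inner_au, period_inner_days,
                            m_inner_earth, m_outer_earth, spacing_hill=20.0):
    """Semi-major axis (AU) and period (days) of an outer planet separated
    from the inner one by `spacing_hill` mutual Hill radii.

    Mutual Hill radius: ((m1 + m2) / (3 M*))^(1/3) * (a1 + a2) / 2.
    Setting a2 - a1 equal to spacing_hill times that radius and solving
    for a2 gives a2 = a1 * (1 + x) / (1 - x).
    """
    m_star = stellar_mass_from_orbit(a_inner_au, period_inner_days)
    mu = (m_inner_earth + m_outer_earth) * M_EARTH_IN_SUN / (3.0 * m_star)
    x = 0.5 * spacing_hill * mu ** (1.0 / 3.0)
    if x >= 1.0:
        raise ValueError("spacing too wide for these masses")
    a_outer = a_inner_au * (1.0 + x) / (1.0 - x)
    period_outer = period_inner_days * (a_outer / a_inner_au) ** 1.5
    return a_outer, period_outer


if __name__ == "__main__":
    a2, p2 = outer_planet_at_spacing(0.027, 4.018, 3.0, 1.0)
    print(f"outer planet: a = {a2:.3f} AU, P = {p2:.1f} days")
```

With these assumed masses the hypothetical neighbor lands at a period close to 10 days, the lower end of the range the authors mention. Heavier planets widen the mutual Hill radius and push the period further out.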

The paper is Hirano et al., “An Earth-sized Planet around an M5 Dwarf Star at 22 pc,” accepted for publication at the Astrophysical Journal and available as a preprint.