Centauri Dreams

Imagining and Planning Interstellar Exploration

A Nod to ‘Stapledon Thinking’

Taking a long walk in the early morning hours (and I do mean ‘early,’ as I usually walk around 4 AM – Orion is gorgeously high in the sky this time of year in the northern hemisphere), I found myself musing on terminology. The case in point: the Fermi Paradox. That phrase frames the issue starkly. If there are other civilizations in the galaxy, why don’t we have evidence for them? Much ink, both physical and digital, has been spilled over the issue, but I will argue that we should soften the term ‘paradox.’ I prefer to call the ‘where are they’ formulation the Fermi Question.

I prefer ‘question’ rather than ‘paradox’ because I don’t think we have enough data to declare what we do or do not see about other civilizations a paradox. A paradox is a seemingly self-contradictory statement that demands explanation. But is anything actually demanded here? There are too many imponderables in this case to even frame the contradiction. How can we have a paradox when we are fully aware of our own limitations at data gathering, to the point that we have no consensus on what or where to target in our searches? Possibilities for technosignatures abound but which are the most likely to be productive? Should we truly be surprised that we do not see a clear signature of another civilization?

We make our best choices based on the technologies we have. On the galactic scale, that means looking for anomalies that might flag a widespread Kardashev Type III civilization. We can assume that such a civilization’s technologies would far surpass our own and that its communications might involve methods – the use of gravitational waves, for example – that are far beyond our capabilities to detect. The Fermi Question tells us about our limitations but doesn’t point to a genuine conundrum. We should also keep in mind that although all the debates on this matter have elevated its status, Fermi’s original remark may have been little more than light lunchtime banter.

On the scale of the Solar System, it would be better to say that we do indeed lack evidence of extraterrestrial visitation, but also that we really have only begun to look for it. A lengthy book could be written on where or why another civilization might plant a ‘lurker’ probe in our Solar System, conceivably one that could have arrived millions of years ago and could even today be quietly returning data on what it sees. We have yet to examine our own Moon in the kind of detail now available to us through the Lunar Reconnaissance Orbiter, and as my friend Jim Benford never tires of pointing out, we know almost nothing about other nearby objects such as Earth’s co-orbitals. Until we sift through actual data, we’d better use caution about these matters.

So while I don’t think it rises to the level of a paradox, the Fermi Question is an incentive to continue searching for data whose anomalous nature may be useful. And while we do this, working the borders between SETI and philosophy seems inevitable. When we discuss extraterrestrial civilizations, after all, we’re talking about behaviors that might be detectable without having the slightest notion of what those behaviors might be. What we call ‘human nature’ is hard enough to pin down; how truly alien cultures would address the issues in their realm is purely imaginative conjecture.

I’m reminded of something Milan Ćirković said in The Great Silence: Science and Philosophy of Fermi’s Paradox (Oxford University Press, 2018):

As we learn more, the shore of the ‘ocean of unknown’ lengthens, to paraphrase Newton’s famous sentence and, while we may imagine (on some highly abstract level) that it will eventually contract, this era is not yet in sight.

Thus, we have another prediction: there will be many new explanatory hypotheses for Fermi’s paradox in the near future, as the astrobiological revolution progresses and exploratory engineering goes farther and farther.

Pondering Smaller Stars

That said, I note with interest a paper from Jacob Haqq-Misra (Blue Marble Space Institute of Science, Seattle) and Thomas Fauchez (American University) that makes the case for low-mass stars as the logical venue for expanding civilizations. If we wonder where alien civilizations are, the question might be resolved by the idea that if such cultures expanded into the cosmos, they would select K- or M-dwarf stars as their destinations. Here we’re squarely up against issues of philosophy and sociology, because we’re asking about what another species would consider its goals.

But let’s go with this, because the authors are perfectly aware of all that we don’t know, and also aware of the need for shaping our questions through broad theorizing.

Why smaller stars? Here we’re dealing with an interesting hypothesis: that G-class stars are the most likely place for life to develop in the first place. Haqq-Misra has written about this before (as have a number of other scientists referenced in the paper), and here makes the statement “We first assume that technological civilizations only arise on habitable planets orbiting G-dwarf stars (with ~10 Gyr main sequence lifetimes) because either biogenesis or complex life is more favored in such systems.” The point is that stars like our Sun have lifetimes far shorter than the trillions of years available to M-dwarfs or the 17 to 70 billion years for K-dwarfs.
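A common textbook rule of thumb shows why lifetimes climb so steeply as stellar mass drops: main-sequence lifetime scales roughly as 10 Gyr × (M/M☉)^-2.5. The sketch below applies that scaling; it is an assumption for illustration, not how Haqq-Misra and Fauchez derive their figures, and the sample masses are illustrative choices.

```python
# Rough main-sequence lifetime scaling, t ~ 10 Gyr * (M / M_sun)^-2.5.
# This power law is a textbook approximation (an assumption here), not the
# paper's model; it is especially unreliable for the lowest-mass M dwarfs.

SUN_LIFETIME_GYR = 10.0  # approximate main-sequence lifetime of a Sun-like star


def main_sequence_lifetime_gyr(mass_solar: float, exponent: float = 2.5) -> float:
    """Approximate main-sequence lifetime in Gyr for a star of mass_solar solar masses."""
    if mass_solar <= 0:
        raise ValueError("mass must be positive")
    return SUN_LIFETIME_GYR * mass_solar ** (-exponent)


if __name__ == "__main__":
    # Illustrative masses (assumptions): the Sun, an early K dwarf, a late K dwarf.
    for label, mass in [("Sun-like G", 1.0), ("early K", 0.8), ("late K", 0.45)]:
        print(f"{label:10s} {mass:4.2f} M_sun -> ~{main_sequence_lifetime_gyr(mass):.0f} Gyr")
```

With these inputs the scaling gives about 17 Gyr at 0.8 M☉ and about 74 Gyr at 0.45 M☉, close to the 17 to 70 billion years quoted for K-dwarfs.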

That’s a big assumption, but it leads to a conjectured motivation: A civilization will choose to maximize its longevity, just as an individual human will try to stay alive as long as possible. A culture that manages to become long-lived will have to cope with the eventual loss of its home G-class star and will look for longer-lived destinations that can serve as more lasting home worlds. And while I’ve mentioned M-dwarfs, the K-class seems to hit the sweet spot, so that the authors title a section of their paper “The K-dwarf Galactic Club.”

K-dwarfs are plentiful compared to G-class stars like the Sun, accounting for about 13 percent of the galactic stellar inventory (G-class stars comprise about 6%). M-dwarfs are the most common star, at about 73 percent, according to the authors’ figures. We’ve already seen that K-dwarfs offer significantly longer lifespans than G-class stars, and given their size and the distance of their habitable zones, they can host potentially habitable planets that are not tidally locked.
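The percentages quoted above translate into simple abundance ratios. A quick sketch using only those figures:

```python
# Galactic stellar inventory fractions (percent), as quoted from Haqq-Misra & Fauchez.
FRACTIONS_PERCENT = {"M": 73.0, "K": 13.0, "G": 6.0}


def abundance_ratio(numerator: str, denominator: str) -> float:
    """Number of stars of one spectral class per star of another class."""
    return FRACTIONS_PERCENT[numerator] / FRACTIONS_PERCENT[denominator]


if __name__ == "__main__":
    print(f"K dwarfs per G dwarf: {abundance_ratio('K', 'G'):.1f}")
    print(f"M dwarfs per G dwarf: {abundance_ratio('M', 'G'):.1f}")
```

That works out to a little more than two K-dwarfs and roughly a dozen M-dwarfs for every G-class star.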

If the authors are correct that life is more likely to arise around G-dwarfs, then it could be said that a K-class star offers a living experience closer to that of the homeworld for any civilization that chooses to migrate to it. This is because the spectra of these stars are much closer to G-star spectra than to those of M-dwarfs. With M-dwarfs, as well, we have to contend with higher stellar activity than on the more quiescent K-dwarfs. Planetary environments around K-dwarfs should thus be much closer to G-dwarf norms than those around M-dwarfs.

The problem of what we might call exo-sociology looms large in this discussion, and it’s one the authors frankly acknowledge. Whether or not life exists anywhere else remains an open question, and we have no knowledge of what extraterrestrial civilizations, if out there, would consider important or desirable as guides to their activity. But if we’re asking philosophical questions, we can ponder what any living intelligence would consider its primary goal, as the authors do in this excerpt from their paper:

Newman & Sagan (1981) performed a detailed mathematical analysis of population diffusion in the galaxy and concluded that only long-lived civilizations could have established a Galactic Club; however, such a possibility was excluded because the authors “believe that their motivations for colonization may have altered utterly.” Although it remains possible that long-lived technological civilizations do not expand, it also remains possible that such civilizations pursue galactic settlement in order to ensure their longevity. The numerical simulations by Carroll-Nellenback et al. (2019) found solutions where “our current circumstances are consistent with an otherwise settled, steady-state galaxy.”

Image: M31, the Andromeda Galaxy. What might drive the expansion of a civilization outward from its native system, and how can we, with no knowledge of other civilizations, plausibly come up with motivations for such activity? Credit & Copyright: Robert Gendler.

We have a scenario, then, of long-lived civilizations preferentially expanding to particular categories of stars to ensure the survival of their species, but otherwise not expanding exponentially into the galaxy. Such a scenario would fit what we see, since this level of activity would not necessarily announce itself through contact with other civilizations. Cultures like these would be content to mind their own business as long as their survival could be ensured, but we might or might not expect them to actively probe stellar systems as relatively short-lived as those around G-class stars. We should look in our own system, say the authors, but a failure to find evidence of extraterrestrial travelers might simply reflect a preference to visit other types of star.

This makes identifying these cultures a tricky matter indeed:

We can exclude scenarios in which all G-dwarf stars would have been settled by now, but the possibility remains open that a Galactic Club exists across all K-dwarf or M-dwarf stars. The search for technosignatures in low-mass systems provides one way to constrain the presence of such a Galactic Club (e.g., Lingam & Loeb 2021; Socas-Navarro et al. 2021; Wright et al. 2022; Haqq-Misra et al. 2022). Existing searches to-date have placed some limits on radio transmissions (e.g., Harp et al. 2016; Enriquez et al. 2017; Price et al. 2020; Zhang et al. 2020) and optical signals (e.g., Howard et al. 2007; Tellis & Marcy 2015; Schuetz et al. 2016) that might be associated with technological activity, but such limits can only weakly constrain the Galactic Club hypothesis. Further research into understanding the breadth of possibilities for detecting extraterrestrial technology will become increasingly important as observing facilities become more adept at characterizing terrestrial planets in low-mass exoplanetary systems.

This is the value of the Fermi Question: it highlights the staggering depth of our ignorance about what is actually out there. I enjoy creative solutions to conjectural problems and am all for applying what I might call ‘Stapledon thinking’ (after the brilliant British philosopher and science fiction writer) as forays into the darkness. We can expect many more ‘solutions’ to the Fermi Question as our capabilities increase. The outcome we can hope for is that one of these days a present or pending astronomical instrument will deliver data on a phenomenon that will resolve itself into a true technosignature (or even an attempt to communicate). Until then, these stunningly interesting questions will drive thinking both philosophic and scientific.

The paper is Haqq-Misra & Fauchez, “Galactic settlement of low-mass stars as a resolution to the Fermi paradox,” accepted at the Astronomical Journal and available as a preprint. Be aware as well of Michaël Gillon, Artem Burdanov and Jason Wright’s paper “Search for an Alien Message to a Nearby Star,” Astronomical Journal Vol. 164, No. 5 (27 October 2022). Abstract. And for all things Fermi, see Milan Ćirković, The Great Silence: Science and Philosophy of Fermi’s Paradox (Oxford University Press, 2018).

Biosignatures: The Case for Nitrous Oxide

Are we overlooking a potential biosignature? A new study makes the case that nitrous oxide could be a valuable indicator of life on other worlds, and one that can be detected with current and future instrumentation. In today’s essay, Don Wilkins takes a close look at the paper. A retired aerospace engineer with thirty-five years of experience in designing, developing, testing, manufacturing and deploying avionics, Don tells me he has been an avid supporter of space flight and exploration all the way back to the days of Project Mercury. Based in St. Louis, where he is an adjunct instructor of electronics at Washington University, Don holds twelve patents and is involved with the university’s efforts at increasing participation in science, technology, engineering, and math.

by Don Wilkins

Biosignatures, specific signals produced by life, are the focus of intense study within the astronomical community. Gases such as nitrogen (N2), oxygen and methane are sought in planetary atmospheres because their large-scale production is linked to life, although abiotic sources can produce false positives. The James Webb Space Telescope (JWST) provides a powerful new tool to explore the atmospheres of temperate terrestrial worlds among the approximately 5,000 known exoplanets.

A paper by a UC Riverside, University of Maryland and NASA team led by Dr. Edward Schwieterman proposes expanding the biosignature list to include nitrous oxide (N2O), the so-called laughing gas long used by anesthesiologists. Nitrous oxide is excluded from the current list of biosignatures because, although it is produced in quantity by biological processes, it would be difficult to detect across interstellar distances at the concentrations found in Earth’s atmosphere today.

Dr. Schwieterman and his team believe the exclusion of nitrous oxide is not merited and identify several conditions under which N2O could accumulate in quantities detectable by current instruments. The paper notes that large quantities of atmospheric N2O can accumulate if:

1. Ocean conditions allow significant biological release of N2O.

2. The target exoplanet orbits a late K-dwarf or an inactive early M-dwarf, both comparatively low-level UV emitters. The rate at which N2O is destroyed, photolyzed into N2 and nitrogen oxides, is proportional to its exposure to UV.

3. Metal enzyme cofactors such as copper are scarce enough that the reduction process stops partway. During the Proterozoic (approximately 2500 to 540 million years ago), high concentrations of biologically produced N2O may have been present in the atmosphere because copper was not readily available to catalyze the gas’s reduction.

4. Nitrous oxide reductase, the enzyme that performs the last step of denitrification by reducing N2O to N2, never evolves.

5. Phosphorus in a form suitable for biological use is limited, although such a shortage would also adversely affect the prospects for life.
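Condition 2 reflects a simple balance. If N2O is supplied at a surface flux F and destroyed mainly by photolysis at a first-order rate J that scales with the star’s UV output, the inventory N obeys dN/dt = F − JN and settles at F/J. The toy sketch below assumes exactly that; it ignores shielding, other sinks, and atmospheric structure, is not the photochemical model Schwieterman et al. used, and its rate values are hypothetical.

```python
# Toy steady-state model (an assumption, not the paper's photochemistry):
#   dN/dt = F - J * N  ->  N_ss = F / J
# F: N2O source flux; J: first-order photolysis loss rate, taken to scale with stellar UV.


def steady_state_abundance(source_flux: float, loss_rate: float) -> float:
    """Equilibrium inventory where production balances first-order loss."""
    if loss_rate <= 0:
        raise ValueError("loss rate must be positive")
    return source_flux / loss_rate


def integrate(source_flux: float, loss_rate: float, dt: float, steps: int) -> float:
    """Forward-Euler integration from N = 0, showing the approach to F / J."""
    n = 0.0
    for _ in range(steps):
        n += (source_flux - loss_rate * n) * dt
    return n


if __name__ == "__main__":
    flux = 1.0  # arbitrary units
    # Hypothetical relative loss rates: Sun-like UV vs. a star with 10x less near-UV.
    for label, j in [("Sun-like UV", 1.0), ("low-UV star", 0.1)]:
        print(label, steady_state_abundance(flux, j), round(integrate(flux, j, 0.01, 20000), 3))
```

Cutting the loss rate tenfold raises the equilibrium inventory tenfold for the same biological flux, which is the intuition behind quiet, UV-faint stars sustaining the highest N2O levels.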

Dr. Schwieterman and his team examined contributors to false positives. A small amount of nitrous oxide, along with nitrogen dioxide (NO2), is created by lightning. The presence of NO2 is a strong indicator that the N2O is produced by an abiotic source.

Another source of false positives is chemodenitrification: N2O production through the abiotic reduction of nitrogen oxides (NOx) by ferrous iron.

Stellar activity can also drive abiotic N2O production, a false positive associated with young, magnetically active stars.

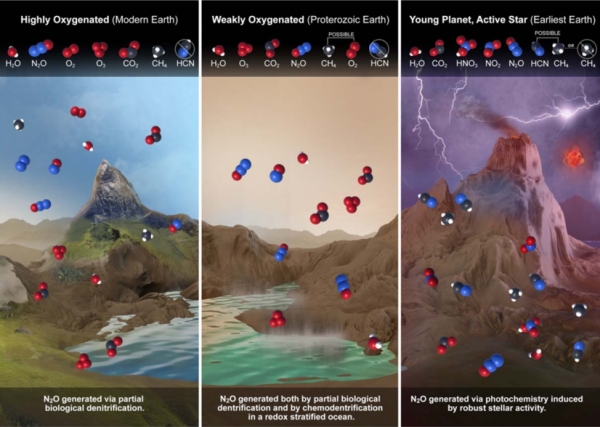

Image: This is Figure 13 from the paper. Caption: A concept image illustrating the interpretability of N2O as a biosignature in the context of the planetary environment. The left panel illustrates a scenario like the modern Earth, with a high-oxygen atmosphere and N2O generated overwhelmingly via partial biological denitrification. In this case, the simultaneous presence of O2, O3, N2O, and CH4 indicates a strong chemical disequilibrium. The middle panel illustrates a weakly oxygenated planetary environment like the Proterozoic Earth, with N2O generated both by partial biological denitrification and by chemodenitrification of nitrogenous intermediates (likely substantially biogenic) in a redox stratified ocean. In this case, molecular oxygen (O2) and methane may have concentrations that are too low to detect directly, but detectable N2O and O3 would be a strong biosignature. A false positive is unlikely, because an abiotic O2 atmosphere would be unstable in combination with a reducing ocean. The right panel illustrates the most likely false-positive scenario, where an active star splits N2 via SPEs [solar proton events], resulting in photochemically produced N2O. This scenario would predict additional photochemical products, such as HCN, that would be indicative of abiotic origins. Stellar characterization would confirm the magnitude of the stellar activity. Vigorous atmospheric production of NOx species could be inferred from spectrally active NO2. Credit: Schwieterman et al.

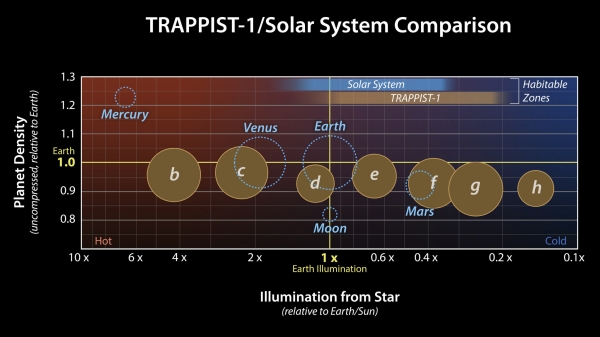

Several candidates for an N2O search tightly orbit TRAPPIST-1 (the name derives from the Transiting Planets and Planetesimals Small Telescope, a Belgian robotic telescope at the European Southern Observatory’s installation at La Silla, Chile). An ultracool red dwarf older than the Sun, TRAPPIST-1 is an M-type star 40 light years from Earth. Its seven rocky planets are likely made of materials similar to Earth’s (iron, oxygen, magnesium, and silicon), but these worlds are 8% less dense than Earth, implying that the ratio between those constituent materials differs from the ratio on Earth.

It is possible that each of the four outer, cooler planets has a large core, a mantle and a planet-girdling ocean. Finding N2O in the atmosphere of these worlds would be a significant clue pointing to the existence of life.

Image: A planet’s density is determined by its composition as well as its size: Gravity compresses the material a planet is made of, increasing the planet’s density. Uncompressed density adjusts for the effect of gravity and can reveal how the composition of various planets compare. Credit: NASA/JPL-Caltech.

The conditions around the four outer planets of TRAPPIST-1 appear ideal for a search for biological nitrous oxide. The authors use TRAPPIST-1e as a test case. From the paper:

We used the biogeochemical model cGENIE to inform the maximum plausible N2O fluxes for an Earthlike biosphere, which could be 1–2 orders of magnitude larger than those on present-day Earth, assuming nutrient-rich oceans and evolutionary or environmental conditions that limit the last step in the denitrification process. Even for maximal biospheric N2O fluxes of 100 Tmol yr⁻¹, an Earthlike atmosphere will never enter an N2O runaway, but would attain much larger concentrations than those found on Earth today. We find that late K-dwarf and inactive early M-dwarf stars can maintain the highest N2O levels at any given surface flux, potentially exceeding 1000 ppm. We show that for N2O fluxes of 10–100 Tmol yr⁻¹, JWST could detect N2O at 2.9 μm on TRAPPIST-1e within its mission lifetime.
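For a sense of scale, a flux in teramoles per year converts to mass using N2O’s molar mass (about 44.0 g/mol): one teramole is 10^12 mol, so the product comes out directly in teragrams. A minimal sketch:

```python
# Convert an N2O flux from teramoles per year to teragrams per year.
N2O_MOLAR_MASS_G_PER_MOL = 44.013  # 2 x 14.007 (N) + 15.999 (O)


def tmol_to_tg_per_year(flux_tmol_per_yr: float) -> float:
    """1 Tmol = 1e12 mol; times g/mol gives units of 1e12 g, i.e. Tg."""
    return flux_tmol_per_yr * N2O_MOLAR_MASS_G_PER_MOL


if __name__ == "__main__":
    for flux in (10.0, 100.0):
        print(f"{flux:6.1f} Tmol/yr -> {tmol_to_tg_per_year(flux):8.1f} Tg N2O/yr")
```

The 100 Tmol yr⁻¹ upper case therefore corresponds to roughly 4,400 Tg of N2O per year.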

As we look toward future instrumentation, K-dwarfs turn out to be particularly susceptible to this analysis:

Terrestrial planets orbiting K-dwarf stars are particularly appealing targets for N2O searches with future MIR [mid-IR] missions, due to favorable planet–star angular separations and because N2O fluxes of only 2 to 3 times those of Earth’s modern global average can produce N2O signatures comparable to those of O3.
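The angular-separation advantage is largely geometry. A common rule of thumb places the Earth-equivalent insolation distance at about sqrt(L/L☉) AU, and the separation in arcseconds is that distance in AU divided by the star’s distance in parsecs. The luminosities and the 10 pc distance below are illustrative assumptions, not values from the paper.

```python
import math

# Two rough assumptions (not from the paper):
#   1) Earth-equivalent insolation distance a ~ sqrt(L / L_sun) AU;
#   2) small-angle relation: separation [arcsec] = a [AU] / d [pc].


def hz_distance_au(luminosity_solar: float) -> float:
    """Distance (AU) at which a planet receives Earth's insolation."""
    return math.sqrt(luminosity_solar)


def separation_mas(a_au: float, distance_pc: float) -> float:
    """Planet-star angular separation in milliarcseconds."""
    return 1000.0 * a_au / distance_pc


if __name__ == "__main__":
    # Illustrative luminosities (assumptions), each star placed at 10 pc.
    for label, lum in [("G dwarf", 1.0), ("K dwarf", 0.2), ("M dwarf", 0.01)]:
        a = hz_distance_au(lum)
        print(f"{label}: a ~ {a:.2f} AU, separation ~ {separation_mas(a, 10.0):.0f} mas")
```

At the same distance, a K-dwarf’s temperate planet sits several times farther from its star, in angle, than an M-dwarf’s, while planet-to-star contrast is generally better than around a brighter G star; that combination is what makes K-dwarfs attractive.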

However, more research is needed to strengthen the case for N2O as a biosignature. Evaluating the biological, geological and chemical combinations that produce large quantities of N2O can identify suitable exoplanet candidates, where a detection of nitrous oxide would support a case for life.

The paper is Edward W. Schwieterman et al., “Evaluating the Plausible Range of N2O Biosignatures on Exo-Earths: An Integrated Biogeochemical, Photochemical, and Spectral Modeling Approach,” The Astrophysical Journal 937:109 (22pp), 2022 October 1 (full text).

Mapping Black Holes in (and out of) the Milky Way

Some years back, I reminisced in these pages about reading Poul Anderson’s World Without Stars, an intriguing tale first published in 1966 about a starship in intergalactic space that was studying a civilization for whom the word ‘isolation’ must have taken on utterly new meaning. Imagine a star system tens of thousands of light years away from the Milky Way, a place where an entire galaxy is but a rather dim feature in the night sky. Poul Anderson discussed this with Analog editor John Campbell:

One point came up which may interest you. Though the galaxy would be a huge object in the sky, covering some 20° of arc, it would not be bright. In fact, I make its luminosity, as far as this planet is concerned, somewhere between 1% and 0.1% of the total sky-glow (stars, zodiacal light, and permanent aurora) on a clear moonless Earth night. Sure, there are a lot of stars there — but they’re an awfully long ways off!

For more on galactic brightness, see The Milky Way from a Distance. The Anderson tale was originally serialized as The Ancient Gods in the June and July, 1966 issues of Campbell’s magazine. Long-time readers will remember its cover, which I ran back in 2012, along with a discussion of how artist Chesley Bonestell approached the cover art, which shows the distant galaxy as far brighter than it would actually appear. Bonestell knew the galaxy would really appear dim but brightened it anyway, making the cover interesting while still suggesting just how far away the vast ‘city of stars’ actually was in the story.

Where Black Holes Roam

Intergalactic space is, I would assume, about as empty a place as could be. Yet new work out of the University of Sydney delves into just what we might find if we could see what’s out there. And it turns out to be quite a lot. The university’s David Sweeney is lead author on a paper in Monthly Notices of the Royal Astronomical Society. The researchers discuss what they describe as the ‘galactic underworld,’ which consists of the compact remnants of massive stars: the neutron stars and black holes left behind when such stars collapse.

Remember that black holes and neutron stars form from stars more than eight times the mass of our Sun. If the progenitor is less than about 25 times the mass of the Sun, it forms a neutron star, a tiny sphere jammed with neutrons and prevented from collapsing further by neutron degeneracy pressure. Sweeney and team say that thirty percent of the black holes and neutron stars out there have been completely ejected from the galaxy. Over the galaxy’s more than 13 billion years, a vast number of such objects must have formed, and about 30 percent of them were ejected by the ‘kick’ imparted in the supernova that created them.
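The mass thresholds in this paragraph amount to a simple classification rule, sketched below. The ~8 and ~25 M☉ cutoffs are the approximate values quoted in the text; real outcomes also depend on metallicity, rotation, binarity, and mass loss, so the boundaries are not sharp.

```python
def remnant_type(initial_mass_solar: float) -> str:
    """Rough end state of a single star by initial mass, using the approximate
    ~8 and ~25 M_sun thresholds quoted in the text (real boundaries are fuzzy)."""
    if initial_mass_solar < 8.0:
        return "white dwarf"   # below the core-collapse threshold
    if initial_mass_solar < 25.0:
        return "neutron star"  # held up by neutron degeneracy pressure
    return "black hole"


if __name__ == "__main__":
    for m in (1.0, 12.0, 40.0):
        print(f"{m:5.1f} M_sun -> {remnant_type(m)}")
```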

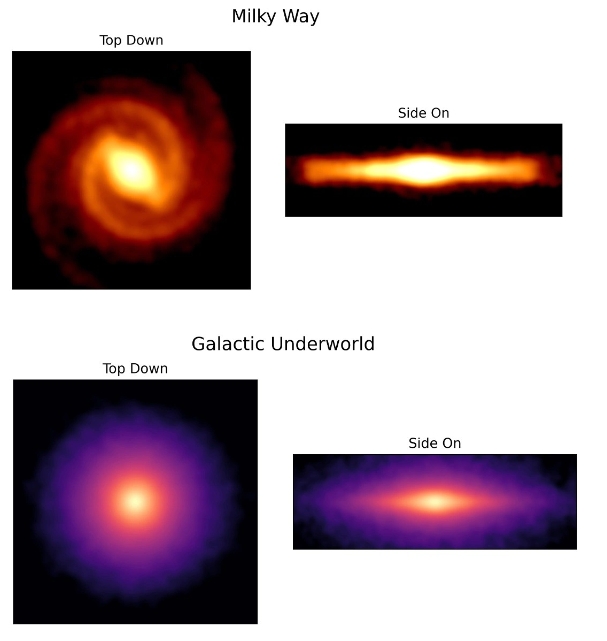

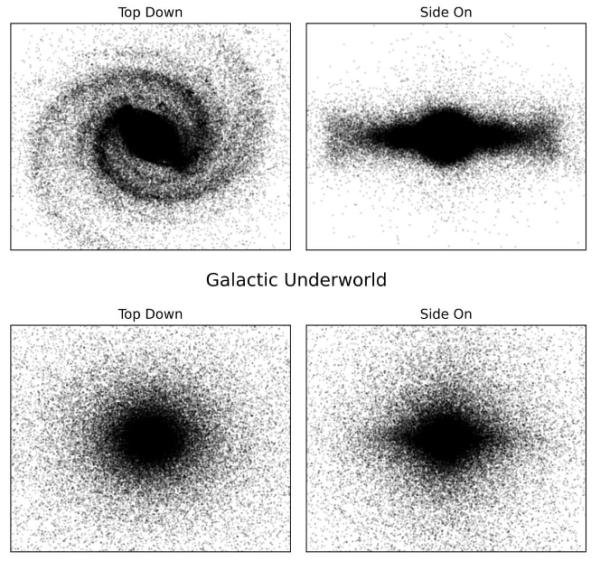

Image: A colour rendition of the visible Milky Way galaxy (top) compared with the range of the galactic underworld (bottom). Credit: Sydney University.

As you can see in the image, the galaxy’s underworld turns out to stretch well beyond the visible limits of the disk. Peter Tuthill (Sydney Institute for Astronomy) notes the challenges involved in creating this first chart of an unseen population:

“One of the problems for finding these ancient objects is that, until now, we had no idea where to look. The oldest neutron stars and black holes were created when the galaxy was younger and shaped differently, and then subjected to complex changes spanning billions of years. It has been a major task to model all of this to find them. Newly-formed neutron stars and black holes conform to today’s galaxy, so astronomers know where to look. It was like trying to find the mythical elephant’s graveyard.”

The researchers used a stellar population synthesis computer code called GALAXIA, modifying it to include stars that have exhausted their nuclear fusion life cycle, leaving behind a remnant black hole or neutron star, and excluding stars below 8 solar masses. Additional custom code then captured the velocity change imparted to each remnant by its supernova explosion (the so-called ‘natal kick’). The kick was added to each remnant’s velocity and transformed to galactocentric coordinates, and further custom code traced how the remnants’ paths evolved over time.
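The natal-kick step can be illustrated with a toy Monte Carlo: give each remnant born on a circular orbit an isotropic kick drawn from a Maxwellian distribution and count how many end up faster than the local escape speed. This is not the Sweeney et al. pipeline, which used GALAXIA and followed orbits over time; the circular speed, escape speed, 265 km/s kick dispersion, and momentum-scaled black hole kicks below are all assumptions chosen for illustration.

```python
import math
import random

# Toy natal-kick model. All numbers are illustrative assumptions,
# not the parameters used by Sweeney et al.
V_CIRC_KMS = 220.0    # assumed circular speed near the Sun
V_ESC_KMS = 550.0     # assumed local Galactic escape speed
SIGMA_NS_KMS = 265.0  # assumed Maxwellian kick dispersion for neutron stars
NS_MASS, BH_MASS = 1.4, 10.0  # solar masses; BH kick scaled by momentum (assumption)


def maxwellian_kick(sigma: float, rng: random.Random) -> tuple:
    """Isotropic kick: three Gaussian components give a Maxwellian speed distribution."""
    return (rng.gauss(0.0, sigma), rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))


def escape_fraction(sigma: float, n: int = 20000, seed: int = 1) -> float:
    """Fraction of remnants whose post-kick speed exceeds V_ESC_KMS."""
    rng = random.Random(seed)
    escaped = 0
    for _ in range(n):
        kx, ky, kz = maxwellian_kick(sigma, rng)
        # Pre-kick velocity: purely circular motion along y in a local frame.
        speed = math.sqrt(kx**2 + (V_CIRC_KMS + ky) ** 2 + kz**2)
        if speed > V_ESC_KMS:
            escaped += 1
    return escaped / n


if __name__ == "__main__":
    sigma_bh = SIGMA_NS_KMS * NS_MASS / BH_MASS  # same momentum, heavier remnant
    print(f"NS escape fraction ~ {escape_fraction(SIGMA_NS_KMS):.2f}")
    print(f"BH escape fraction ~ {escape_fraction(sigma_bh):.3f}")
```

Scaling the black hole kick down by the mass ratio is one common assumption, and it is enough to make black hole escape rare in this toy, echoing the paper’s point that black holes receive smaller kicks. The sketch omits birth locations, progenitor motions, and orbital evolution, so its escape fractions should not be compared directly with the paper’s figures.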

The resulting distribution map reflects a galaxy, and thus a population of remnants, that has changed over time, so the Milky Way’s present shape does not predict where its neutron stars and black holes are found. In place of the relatively thin, flattened disk of visible stars, the remnants have a scale height about three times larger.

Image: Point-cloud chart of the visible Milky Way galaxy (top) versus the galactic underworld. Credit: Sydney University.

As the paper notes:

The spatial distribution of compact remnants is different from that of visible stars. The remnants are more dispersed in the vertical direction with the scale height being about 3 times larger than that of the visible stars. This is mainly due to the significant velocity kicks received by the remnants at the time of their birth.

Also interesting are these two points:

The spatial distribution of BHs is more centrally concentrated as compared to the NSs due to the smaller velocity kick they receive.

For some remnants the kick is so large that their total velocity becomes greater than their escape velocity (40% of NS and 2% of BHs). We are able to estimate a Galactic mass loss in ejected compact remnants as 2.1×10⁸ M☉ or ~0.4% of the stellar mass of the Galaxy.
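The two numbers in that last sentence imply the stellar mass the authors adopt for the Galaxy. Writing M_ej for the ejected remnant mass and f_ej for its fraction of the Galaxy’s stellar mass, dividing one by the other is a quick consistency check (approximate, since 0.4% is rounded):

```latex
M_{\star} \approx \frac{M_{\mathrm{ej}}}{f_{\mathrm{ej}}}
  = \frac{2.1\times10^{8}\,M_\odot}{0.004}
  \approx 5.3\times10^{10}\,M_\odot
```

That is in line with commonly quoted estimates of the Milky Way’s stellar mass, a few times 10^10 M☉.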

If 30 percent of the stellar remnants formed over the course of the galaxy’s evolution have been ejected into intergalactic space, the remaining 70 percent are still bound to the galaxy, so neutron stars and black holes from its earliest days still drift through stellar neighborhoods like our own, unattached to any nearby star.

Black Holes and Their Neighbors in Space

In addition to these ‘free floating’ black holes, there are those in gravitational dance with nearby stars, leaving traces that are detectable. Making that point is the recent discovery of a black hole about 12 times the mass of the Sun at roughly 1650 light years from the Solar System, one that appears to be orbited by a visible star. This is “closer to the Sun than any black hole X-ray binaries with known distances…or any of the black holes identified through other techniques.”

The work, led by Sukanya Chakrabarti (University of Alabama, Huntsville), likewise highlights the role these remnants can play in the disk we see today. Says Chakrabarti:

“In some cases, like for supermassive black holes at the centers of galaxies, [black holes] can drive galaxy formation and evolution. It is not yet clear how these non-interacting black holes affect galactic dynamics in the Milky Way. If they are numerous, they may well affect the formation of our galaxy and its internal dynamics.”

Note the term ‘non-interacting,’ which Chakrabarti uses to distinguish this kind of black hole from those surrounded by an accretion disk of material drawn from a companion. As you might imagine, interacting black holes, or rather the hot, X-ray-bright accretion flows they produce, are far easier to detect.

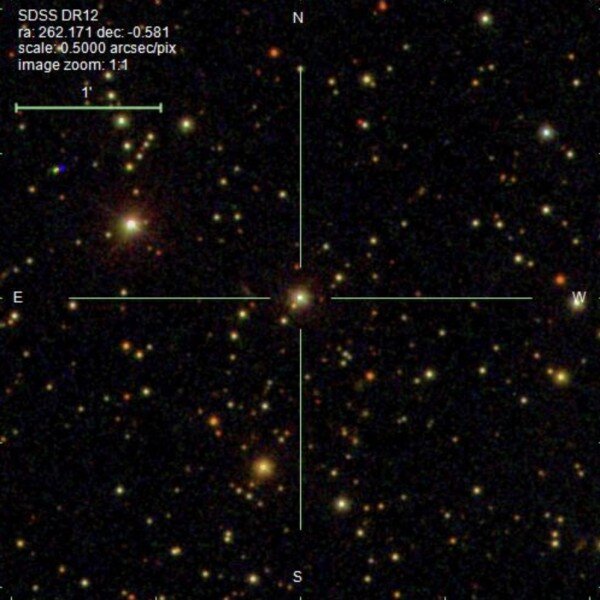

Finding the black hole in this work involved analyzing data on almost 200,000 binary stars from the European Space Agency’s Gaia mission. The aim was to find visible stars that appear to have a massive dark companion, betrayed by its gravitational effect on the star. The most interesting sources were followed up with the Automated Planet Finder in California, the Magellan telescopes in Chile and the W.M. Keck Observatory in Hawaii. Spectroscopic measurements confirmed that the binary system contains a visible star cataloged as Gaia DR3 4373465352415301632 orbiting a dark, massive object.

Image: The cross-hairs mark the location of the newly discovered black hole. Credit: Sloan Digital Sky Survey / S. Chakrabarti et al.

As to how this system of star and black hole originally formed, this interesting speculation:

Given the combination of the large mass of the dark companion and a semi-major axis of Gaia DR3 4373465352415301632 that is neither very large nor very small, the formation channel for this system is not immediately clear. However, the most natural scenario may be that the visible G star was originally the outer tertiary component orbiting a close inner binary with two massive stars.

So here we have a search for black holes bound to visible stars, with the authors estimating that perhaps a million stars in the galaxy have black hole companions. That’s an early estimate for one population of black holes, but this object, which its companion star orbits every 185 days, does not represent the class of black holes and neutron stars that may move through the galaxy untethered to any visible object, as modeled by the Sydney team. Just how many black holes may be peppered through the several hundred billion stars of the Milky Way, and how widely spaced are they likely to be?
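The 185-day period also fixes the size of the orbit through Kepler’s third law, once a total mass is assumed. A sketch in solar units, taking the ~12 M☉ dark companion from the text and assuming roughly 1 M☉ for the G star (an assumption, not the paper’s fitted value):

```python
# Kepler's third law in solar units: a^3 [AU^3] = (M1 + M2) [M_sun] * P^2 [yr^2].
DAYS_PER_YEAR = 365.25


def semi_major_axis_au(period_days: float, total_mass_solar: float) -> float:
    """Semi-major axis of the relative orbit for a given period and total mass."""
    period_yr = period_days / DAYS_PER_YEAR
    return (total_mass_solar * period_yr**2) ** (1.0 / 3.0)


if __name__ == "__main__":
    # ~12 M_sun dark companion (from the text) plus an assumed ~1 M_sun G star.
    print(f"a ~ {semi_major_axis_au(185.0, 13.0):.2f} AU")
```

Under these assumptions the separation is roughly 1.5 AU, comparable to Mars’s distance from the Sun.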

Finding untethered black holes, whether within or outside the galactic disk, is not work for the faint-hearted. Surely microlensing studies are our best way to proceed?
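Microlensing is a natural tool here because a lens needs no light of its own. The standard point-lens Einstein radius, θ_E = sqrt(4GM/c² × (D_S − D_L)/(D_L D_S)), sets the angular scale, and dividing the corresponding physical scale by the lens’s transverse speed gives the event duration. The sketch assumes a 10 M☉ lens at 1 kpc, a source at 8 kpc, and a 50 km/s relative speed; all are illustrative choices.

```python
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8        # speed of light, m/s
M_SUN = 1.989e30   # solar mass, kg
KPC = 3.0857e19    # kiloparsec, m
RAD_TO_MAS = 180.0 / math.pi * 3600.0 * 1000.0


def einstein_radius_rad(lens_mass_solar: float, d_lens_kpc: float, d_source_kpc: float) -> float:
    """Angular Einstein radius of a point lens: sqrt(4GM/c^2 * (Ds - Dl) / (Dl * Ds))."""
    d_l, d_s = d_lens_kpc * KPC, d_source_kpc * KPC
    return math.sqrt(4.0 * G * lens_mass_solar * M_SUN / C**2 * (d_s - d_l) / (d_l * d_s))


def einstein_crossing_days(theta_e_rad: float, d_lens_kpc: float, v_perp_kms: float) -> float:
    """Time for the lens to move one Einstein radius relative to the line of sight."""
    return theta_e_rad * d_lens_kpc * KPC / (v_perp_kms * 1000.0) / 86400.0


if __name__ == "__main__":
    theta = einstein_radius_rad(10.0, 1.0, 8.0)
    print(f"theta_E ~ {theta * RAD_TO_MAS:.1f} mas")
    print(f"t_E ~ {einstein_crossing_days(theta, 1.0, 50.0):.0f} days")
```

With these assumptions the Einstein radius is about 8 milliarcseconds and the event lasts roughly 290 days. Because the timescale grows as the square root of lens mass, unusually long events are where black-hole lenses are sought.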

The paper is Sweeney et al., “The Galactic underworld: the spatial distribution of compact remnants,” Monthly Notices of the Royal Astronomical Society, Volume 516, Issue 4 (November 2022), pp. 4971–4979 (abstract / preprint). The black hole discovery paper is Chakrabarti et al., “A non-interacting Galactic black hole candidate in a binary system with a main-sequence star,” in process at the Astrophysical Journal (preprint).

Ion Propulsion for the Nearby Interstellar Medium

When scientists began seriously looking at beaming concepts for interstellar missions, sails were the primary focus. The obvious advantage was that a large sail need carry no propellant. Here I’m thinking about the early work on laser beaming by Robert Forward, and shortly thereafter George Marx. Forward’s first published work on laser sails came during his tenure at Hughes Aircraft Company, having begun as an internal memo within the firm, and later appearing in Missiles and Rockets. Theodore Maiman was working on lasers at Hughes Research Laboratories back then, and the concept of wedding laser beaming with a sail fired Forward’s imagination.

The rest is history, and we’ve looked at many of Forward’s sail concepts over the years on Centauri Dreams. But notice how beaming weaves its way through the scientific literature on interstellar flight, later being applied in situations that wed it with technologies other than sails.

Consider Al Jackson and Daniel Whitmire, who in 1977 looked at laser beaming in terms of Robert Bussard’s famous interstellar ramjet concept. A key problem, igniting proton-proton fusion at the low speeds of early acceleration, could be solved by beaming energy to the departing craft by laser.

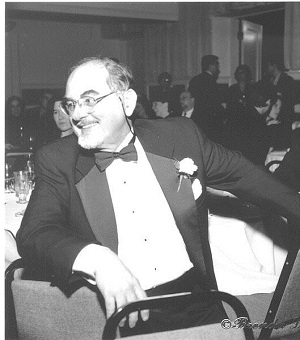

Image: Physicist A. A. Jackson, originator of the laser-powered ramjet concept.

In other words, a laser beam originating in the Solar System powers up reaction mass until the ramjet reaches about 0.14 c. The Bussard craft then switches over to full interstellar mode as it climbs toward relativistic velocities. Jackson and Whitmire would go on in the following year to confront the problem that a ramscoop produced enough drag to nullify the concept. A second design emerged, using a space-based laser to power up a starship that used no ramscoop but carried its own reaction mass onboard.
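To put the 0.14 c handover speed in perspective, the relativistic kinetic energy per kilogram of vehicle is (γ − 1)c². This sketch ignores the reaction mass and all conversion losses, so the beam energy actually required would be larger.

```python
import math

C = 2.998e8  # speed of light, m/s


def kinetic_energy_per_kg(beta: float) -> float:
    """Relativistic kinetic energy (J) per kilogram of rest mass at speed beta * c."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return (gamma - 1.0) * C**2


if __name__ == "__main__":
    e = kinetic_energy_per_kg(0.14)
    newtonian = 0.5 * (0.14 * C) ** 2
    print(f"{e:.2e} J/kg (Newtonian estimate {newtonian:.2e} J/kg)")
```

The answer is about 9 × 10^14 J per kilogram, only about 1.5 percent above the Newtonian ½v² estimate at this speed.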

The beauty of the laser-powered rocket is that it can accelerate into the laser beam as well as away from it, since the beam provides energy rather than imparting momentum, as it does in Forward’s sail concepts. The lasers in that paper are huge, up to 10 kilometers in diameter, with a diffraction-limited range of 500 AU.

But note this: as far back as 1967, John Bloomer had proposed beaming energy from an external source to a departing spacecraft, focusing the beam not on a fusion rocket but on one carrying an electric propulsion system bound for Alpha Centauri. So we have been considering electric propulsion wedded to lasers as far back as the Apollo era.

Now we can swing our focus back around to the paper by Angelo Genovese and Nadim Maraqten that was presented at the recent IAC meeting in Paris. Here we are looking not at full-scale missions to another star, but the necessary precursors that we’ll want to fly in the relatively near-term to explore the interstellar medium just outside the Solar System. The problem is getting there in a reasonable amount of time.

As we saw in the last post, electric propulsion has a rich history, but taking it into deep space involves concepts that are, in comparison with laser sail proposals, largely unexplored. A brief moment of appreciation, though, for the ever prescient Konstantin Tsiolkovsky, who sometimes seems to have pondered almost every notion we discuss here a century ago. Genovese and Maraqten found this quote from 1922:

“We may have a case when, in addition to the energy of ejected material, we also have an influx of energy from the outside. This influx may be supplied from Earth during motion of the craft in the form of radiant energy of some wavelength.”

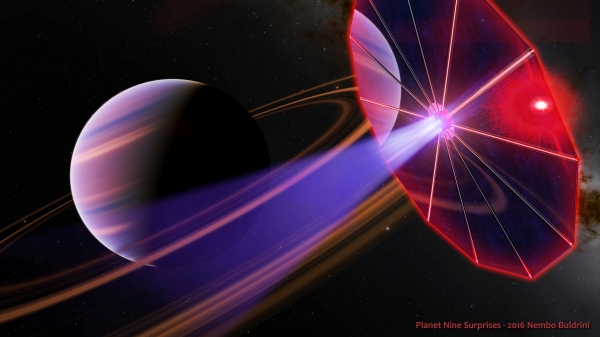

Tsiolkovsky wouldn’t have known about lasers, of course, but the gist of the case is here. Angelo Genovese took the laser-powered electric propulsion concept to Chattanooga in 2016 when the Interstellar Research Group (then called the Tennessee Valley Interstellar Workshop) met there for a symposium. Out of this talk emerged EPIC, the Electric Propulsion Interstellar Clipper, shown in the image below, which is Figure 9 in the current paper. Here a monochromatic PV collector converts incoming laser photons into the electric power needed by ion thrusters with a specific impulse of 50,000 s.

Image: An imaginative look at laser electric propulsion for a near-term mission, in this case a journey to the hypothesized Planet 9. Credit: Angelo Genovese/Nembo Buldrini.

Do notice that by ‘interstellar’ we are referring to a mission to the nearby interstellar medium rather than a mission to another star. Stepping stones are important.

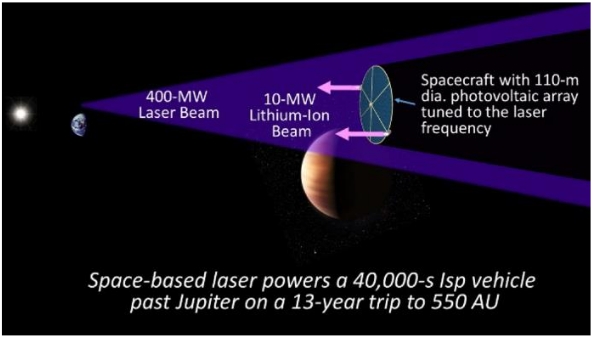

Genovese and Maraqten also note John Brophy’s work at NASA’s Innovative Advanced Concepts office that delves into what Brophy considers “A Breakthrough Propulsion Architecture for Interstellar Precursor Missions.” Here Brophy works with a 2-kilometer-diameter laser array beaming power across the Solar System to a 110-meter-diameter photovoltaic array that feeds an ion propulsion system with an ISP of 40,000 seconds. That gets a payload to 550 AU in a scant 13 years, an interesting distance because this is where the Sun’s gravitational lens becomes exploitable. Can we go faster and farther?

Image: John Brophy’s work at NIAC examines laser electric propulsion as a means of moving far beyond the heliosphere, all the way out to where the Sun’s gravitational lens begins to produce useful scientific results. Credit: John Brophy.
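The 13-year figure for Brophy’s 550 AU mission implies a demanding average speed. A quick sketch of the arithmetic, using only the distance and trip time quoted above; because this is a trip average that includes the acceleration phase, the peak cruise speed must be somewhat higher.

```python
AU_KM = 149_597_870.7            # kilometers per astronomical unit
JULIAN_YEAR_S = 365.25 * 86_400  # seconds per Julian year

def au_per_year_to_km_s(au_per_year: float) -> float:
    """Convert a speed in AU/yr to km/s."""
    return au_per_year * AU_KM / JULIAN_YEAR_S

distance_au = 550.0
trip_years = 13.0
avg_au_yr = distance_au / trip_years
avg_km_s = au_per_year_to_km_s(avg_au_yr)
print(f"Average speed: {avg_au_yr:.1f} AU/yr = {avg_km_s:.0f} km/s")
```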

An advanced mission to 1000 AU emerged in a study Genovese performed for the Initiative for Interstellar Studies back in 2014. Here the author had considered nuclear methods for powering the craft, with a reactor specific mass of 5 kg/kWe. Genovese’s calculations showed that such a craft could reach this distance in 35 years, moving at 150 km/s. This saddles us, of course, with the nuclear reactor needed for power aboard the spacecraft. In the current paper, he and co-author Maraqten ramp up the concept:

The TAU mission could greatly profit from the LEP concept. Instead of a huge nuclear reactor with a mass of 12.5 tons (1-MWe class with a specific mass of 12.5 kg/kWe), we could have a large monochromatic PV collector with 50% efficiency and a specific mass of just 1 kg/kWe… This allows us to use a more advanced ion propulsion system based on 50,000s ion thrusters. The much higher specific impulse allows a substantial reduction in propellant mass from 40 tons to 10 tons, leading to a TAU initial mass of just 23 tons instead of 62 tons. The final burnout speed is 240 km/s (50 AU/yr), 1000 AU are reached in just 25 years (Genovese, 2016 [26]).
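The quoted figures hang together arithmetically. A minimal sketch using only numbers from the passage: the power-system mass is electrical output times specific mass, and the laser power the collector must intercept is the electrical demand divided by the PV efficiency. The sketch ignores beam losses, which the paper may treat differently.

```python
AU_KM = 149_597_870.7
JULIAN_YEAR_S = 365.25 * 86_400

def power_system_mass_kg(power_kwe: float, specific_mass_kg_per_kwe: float) -> float:
    """Mass of a power source given its electrical output and specific mass."""
    return power_kwe * specific_mass_kg_per_kwe

power_kwe = 1_000.0                                  # 1-MWe class, per the quote
reactor_kg = power_system_mass_kg(power_kwe, 12.5)   # nuclear reactor option
collector_kg = power_system_mass_kg(power_kwe, 1.0)  # laser PV collector option

pv_efficiency = 0.5                                  # quoted PV conversion efficiency
beam_kw_needed = power_kwe / pv_efficiency           # laser power the collector must intercept

burnout_au_yr = 240.0 * JULIAN_YEAR_S / AU_KM        # quoted 240 km/s expressed in AU/yr

print(f"Reactor: {reactor_kg / 1000:.1f} t, PV collector: {collector_kg / 1000:.1f} t")
print(f"Intercepted laser power: {beam_kw_needed / 1000:.1f} MW")
print(f"240 km/s = {burnout_au_yr:.1f} AU/yr")
```

The 11.5-ton saving in power-system mass is only part of the story; the quote attributes much of the drop in initial mass to the higher specific impulse, which cuts propellant from 40 to 10 tons.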

In fact, the authors rank electric propulsion possibilities this way:

- Present EP performance involves ISP in the range of 7000 s, which can deliver a fairly near-term 200 AU mission with a cruise time in the range of 25 years.

- Advanced EP concepts with ISP of 28,000 s draw on an onboard nuclear reactor and produce a mission to 1000 AU with a trip time of 35 years. The authors consider this ‘mid-term development.’

- In terms of long-term possibilities, very advanced EP concepts with ISP of 40,000 s can be powered by a 400 MW space laser array, giving us a 1000 AU mission with a trip time of 25 years.
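One way to read these tiers is to set each specific impulse, converted to an effective exhaust velocity v_e = Isp × g0, beside the average speed the mission needs. The sketch below does only that; it is not a trajectory model, ignores the Sun’s gravity and the acceleration phase, and takes the tier values directly from the list.

```python
G0 = 9.80665                     # standard gravity, m/s^2
AU_KM = 149_597_870.7
JULIAN_YEAR_S = 365.25 * 86_400

# (label, specific impulse in s, distance in AU, trip time in years) from the list above
tiers = [
    ("present", 7_000, 200, 25),
    ("mid-term", 28_000, 1_000, 35),
    ("long-term", 40_000, 1_000, 25),
]

def exhaust_velocity_km_s(isp_s: float) -> float:
    """Effective exhaust velocity v_e = Isp * g0, in km/s."""
    return isp_s * G0 / 1000.0

def average_speed_km_s(distance_au: float, years: float) -> float:
    """Trip-averaged speed in km/s for a given distance and duration."""
    return distance_au * AU_KM / (years * JULIAN_YEAR_S)

results = {}
for label, isp, dist, yrs in tiers:
    ve = exhaust_velocity_km_s(isp)
    vavg = average_speed_km_s(dist, yrs)
    results[label] = (ve, vavg)
    print(f"{label:>9}: v_e = {ve:6.1f} km/s, average speed = {vavg:6.1f} km/s")
```

In each tier the required average speed comes out at roughly half the exhaust velocity, a reminder that these missions ask the propulsion system for a delta-v on the order of its own exhaust speed.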

So here we have a way to cluster technologies in the service of an interstellar precursor mission that operates well within the lifetime of the scientists and engineers who are working on the project. I mention this latter fact because it always comes up in discussions, although I don’t really see why. Many of the team currently working on Breakthrough Starshot, for example, would not see the launch of the first probes toward a target like Proxima Centauri even if the most optimistic scenarios for the project were realized. We don’t do these things for ourselves. We do them for the future.

The Maraqten & Genovese paper is “Advanced Electric Propulsion Concepts for Fast Missions to the Outer Solar System and Beyond,” 73rd International Astronautical Congress (IAC), Paris, France, 18-22 September 2022 (available here). The laser rocket paper is Jackson and Whitmire, “Laser Powered Interstellar Rocket,” Journal of the British Interplanetary Society, Vol. 31 (1978), pp. 335-337. The Bloomer paper is “The Alpha Centauri Probe,” in Proceedings of the 17th International Astronautical Congress (Propulsion and Re-entry), Gordon and Breach, Philadelphia (1967), pp. 225-232.

Ion Propulsion: The Stuhlinger Factor

How helpful can electric propulsion become as we plan missions into the local interstellar medium? We can think about this in terms of the Voyager probes, which remain our only operational craft beyond the heliosphere. Voyager 1 moved beyond the heliopause in 2012, which means 35 years between launch and heliosphere exit. But as Nadim Maraqten (Universität Stuttgart) noted in a presentation at the recent International Astronautical Congress, reaching truly unperturbed interstellar space involves getting to 200 AU. We’d like to move faster than Voyager, but how?

Working with Angelo Genovese (Initiative for Interstellar Studies), Maraqten offers up a useful analysis of electric propulsion, calling it one of the most promising existing propulsion technologies, along with various sail concepts. In fact, the two modes have been coupled in some recent studies, about which more as we proceed. The authors believe that the specific impulse of an EP spacecraft must exceed 5000 seconds to make interstellar precursor missions viable in a timeframe of 25-30 years, acknowledging that this ramps up the power needed to reach the desired delta-v.
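The power penalty follows from the jet-power relation: at fixed thrust T, the power carried by the exhaust is P = T v_e / 2 with v_e = Isp × g0, so doubling the specific impulse doubles the power required. A minimal sketch; the 1-newton thrust level, the 3000 s comparison point and the ideal 100% efficiency are illustrative assumptions, not values from the paper.

```python
G0 = 9.80665  # standard gravity, m/s^2

def electric_power_w(thrust_n: float, isp_s: float, efficiency: float = 1.0) -> float:
    """Electrical power needed for a given thrust and specific impulse.

    Jet power is 0.5 * mdot * v_e**2 = 0.5 * thrust * v_e, with v_e = isp * g0;
    dividing by the thruster efficiency gives the electrical input.
    """
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    exhaust_velocity = isp_s * G0
    return 0.5 * thrust_n * exhaust_velocity / efficiency

for isp in (3_000, 5_000, 50_000):
    p = electric_power_w(1.0, isp)  # 1 N of thrust, ideal (100%) efficiency
    print(f"Isp {isp:>6} s -> {p / 1000:7.1f} kW per newton")
```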

Electric propulsion is a method of ionizing a propellant and subsequently accelerating it via electric or magnetic fields or a combination of the two. The promise of these technologies is great, for we can achieve higher exhaust velocities by far with electric methods than through any form of conventional chemical propulsion. We’ve seen that promise fulfilled in missions like DAWN, which in 2015 became the first spacecraft to orbit two destinations beyond Earth, having reached Ceres after previously exploring Vesta. We can use electric methods to reduce propellant mass or achieve, over time, higher velocities. [Addendum: Thanks to several readers who noticed that I had reversed the order of Vesta and Ceres in the DAWN mission above. I’ve fixed the mistake.]
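The trade between propellant mass and velocity is the Tsiolkovsky rocket equation, Δv = v_e ln(m0/mf). A short sketch under assumed round numbers (a 10 km/s requirement, about 450 s for a high-performance chemical engine and about 3000 s for an ion thruster; none of these values come from the paper) shows how sharply a higher exhaust velocity cuts the propellant fraction.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2

def propellant_fraction(delta_v_m_s: float, isp_s: float) -> float:
    """Fraction of initial mass that must be propellant (Tsiolkovsky equation).

    delta_v = v_e * ln(m0 / mf)  =>  1 - mf/m0 = 1 - exp(-delta_v / v_e)
    """
    return 1.0 - math.exp(-delta_v_m_s / (isp_s * G0))

delta_v = 10_000.0  # 10 km/s, an illustrative mission requirement
chemical = propellant_fraction(delta_v, 450)  # assumed high-performance chemical engine
ion = propellant_fraction(delta_v, 3_000)     # assumed gridded ion thruster
print(f"Chemical: {chemical:.0%} of launch mass is propellant")
print(f"Ion:      {ion:.0%} of launch mass is propellant")
```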

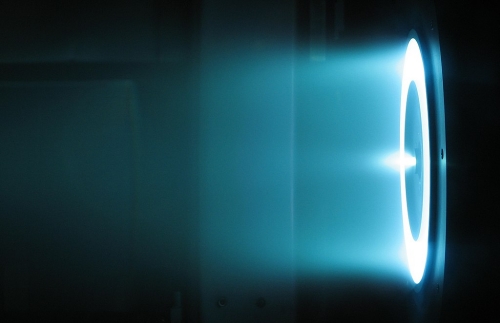

Image: 6 kW Hall thruster in operation at the NASA Jet Propulsion Laboratory

Electric propulsion is far younger than chemical propulsion, but its history runs back further than one might expect: the idea appears in Robert Goddard’s famous notebooks as early as 1906. In fact, Goddard’s 1917 patent shows us the first example of an electrostatic ion accelerator useful for propulsion, even though he worked at a time when the understanding of ions was incomplete, so that he framed the problem as one of moving electrons instead. Konstantin Tsiolkovsky had also conceived the idea and wrote about it in 1911, this from the man who produced the Tsiolkovsky rocket equation in 1903 (although Robert Goddard would independently derive it in 1912, and so would Hermann Oberth about a decade later).

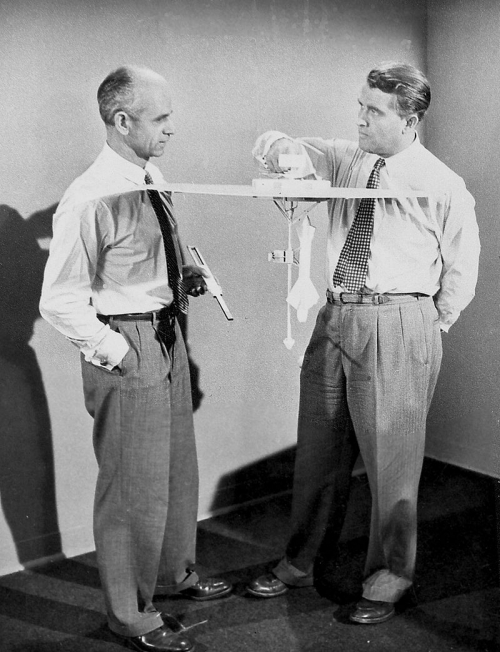

As Maraqten and Genovese point out, Hermann Oberth wound up devoting an entire chapter (and indeed, the final one) of his 1929 book Wege zur Raumschiffahrt (Ways to Spaceflight) to what he describes as an ‘electric spaceship.’ That caught the attention of Wernher von Braun, and via him Ernst Stuhlinger, who conceived of using these methods rather than chemical propulsion to make von Braun’s idea of an expedition to Mars a reality. It had been von Braun’s idea to use chemical propulsion with a nitric acid/hydrazine propellant, as depicted in a famous series on space exploration that ran in Collier’s from 1952-1954.

But Stuhlinger thought he could bring the mass of the spacecraft down by two-thirds while expelling ions and electrons to achieve far higher exhaust velocity. It was he who introduced the idea of nuclear-electric propulsion, by replacing a power system based on solar energy with a nuclear reactor, thus moving us from SEP (Solar Electric Propulsion) to NEP (Nuclear Electric Propulsion). Let me quote Maraqten and Genovese on this:

Stuhlinger immersed himself in electric propulsion theory, and in 1954 he presented a paper at the 5th International Astronautical Congress in Vienna entitled, “Possibilities of Electrical Space Ship Propulsion”, where he conceived the first Mars expedition using solar-electric propulsion [4]. The spacecraft design he proposed, which he nicknamed the “Sun Ship”, had a cluster of 2000 ion thrusters using caesium or rubidium as propellant. He calculated that the total mass of the “Sun Ship” would be just 280 tons instead of the 820 tons necessary for a chemical-propulsion spaceship for the same Mars mission. In 1955 he published: “Electrical Propulsion System for Space Ships with Nuclear Source” in the Journal of Astronautics, where he replaced the solar-electric power system with a nuclear reactor (Nuclear Electric Propulsion – NEP). In 1964 Stuhlinger published the first systematic analysis of electric propulsion systems: “Ion Propulsion for Space Flight” [3], while the physics of electric propulsion thrusters was first described comprehensively in a book by Robert Jahn in 1968 [5].

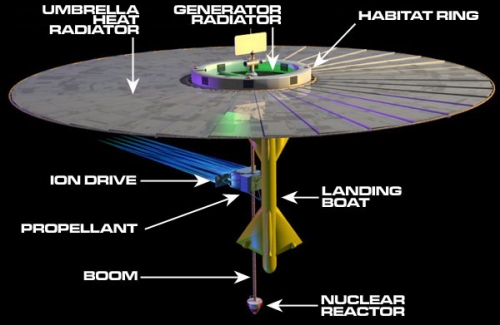

In 1957, the Walt Disney television program ‘Mars and Beyond’ (shown in the series ‘Tomorrowland’) featured the fleet of ten nuclear-electric powered spacecraft that Stuhlinger envisioned for the journey. As you can see in the image below, this is an unusual design, a vehicle that became known as an ‘umbrella ship.’ I’ve quoted him before on this, but let me run the passage again. It’s from Stuhlinger’s 1955 paper “Electrical Propulsion System for Space Ships with Nuclear Power Source”:

A propulsion system for space ships is described which produces thrust by expelling ions and electrons instead of combustion gases. Equations are derived from the optimum mass ratio, power, and driving voltage of a ship with given payload, travel time, and initial acceleration. A nuclear reactor provides the primary power for a turbo-electric generator; the electric power then accelerates the ions. Cesium is the best propellant available because of its high atomic mass and its low ionization energy. A space ship with 150 tons payload and an initial acceleration of 0.67 × 10⁻⁴ G, traveling to Mars and back in a total travel time of about 2 years, would have a takeoff mass of 730 tons.

Image: Ernst Stuhlinger’s Umbrella Ship, built around ion propulsion. Notice the size of the radiator, which disperses heat from the reactor at the end of the boom. The source for this concept was a Stuhlinger paper called “Electrical Propulsion System for Space Ships with Nuclear Power Source,” which ran in the Journal of the Astronautical Sciences 2, no. Pt. 1 in 1955, pp. 149-152. Credit: Winchell Chung.
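Two quick checks on the numbers in Stuhlinger’s abstract. The thrust implied by a 730-ton ship accelerating at 0.67 × 10⁻⁴ g is just mass times acceleration, and the exhaust speed of a cesium ion follows from the energy it gains falling through the accelerating voltage. A minimal sketch; the 1 kV accelerating voltage is an assumption for illustration, not Stuhlinger’s design value, and real thrusters lose some energy to beam divergence and other inefficiencies.

```python
import math

G0 = 9.80665                # standard gravity, m/s^2
E_CHARGE = 1.602176634e-19  # elementary charge, C
AMU_KG = 1.66053906660e-27  # atomic mass unit, kg
CS_MASS_U = 132.905         # cesium-133 atomic mass, u

# Thrust implied by the quoted takeoff mass and initial acceleration.
takeoff_mass_kg = 730_000.0
initial_accel = 0.67e-4 * G0
thrust_n = takeoff_mass_kg * initial_accel

def ion_exhaust_velocity(voltage_v: float, mass_u: float) -> float:
    """Speed of a singly charged ion accelerated from rest through voltage_v.

    Energy balance q*V = 0.5*m*v**2 gives v = sqrt(2*q*V/m).
    """
    return math.sqrt(2.0 * E_CHARGE * voltage_v / (mass_u * AMU_KG))

v_cs = ion_exhaust_velocity(1_000.0, CS_MASS_U)  # 1 kV is an assumed, illustrative voltage
print(f"Implied initial thrust: {thrust_n:.0f} N")
print(f"Cs+ at 1 kV: {v_cs / 1000:.1f} km/s (Isp about {v_cs / G0:.0f} s)")
```

A few hundred newtons pushing several hundred tons is why a ship like this relies on long thrusting periods rather than the short burns of a chemical vehicle.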

While I’ve only talked about Stuhlinger’s work on electric propulsion here, his contribution to space sciences was extensive, ranging from a staging system crucial to Explorer 1 (this involved his pushing a button at the precise time required, hence his nickname, ‘the man with the golden finger’), to his work as director of the Marshall Space Flight Center Science Laboratory, which involved an active role in plans for lunar exploration.

For his contributions to electric propulsion, the Electric Rocket Propulsion Society renamed its award for outstanding achievement as the Stuhlinger Medal after his death. In terms of his visibility to the public, those interested in space advocacy will know about his letter to Sister Mary Jucunda, a nun based in Zambia, which laid out to a profound skeptic the rationale for pursuing missions to far destinations at a time of global crisis.

Image: In the above photo, taken at the Walt Disney Studios in California, Wernher von Braun (right) and Ernst Stuhlinger are shown discussing the technology behind nuclear-electric spaceships designed to undertake the mission to the planet Mars. As a part of the Disney ‘Tomorrowland’ series on the exploration of space, the nuclear-electric vehicles were shown in the program “Mars and Beyond,” which first aired in December 1957. Credit: NASA MSFC.

In the next post, I want to look at the deep space applications that Maraqten and Genovese considered in their IAC presentation.

For more details on Stuhlinger’s Mars ship, see Adam Crowl’s Stuhlinger Mars Ship Paper, and the follow-up I wrote in these pages back in 2015, Ernst Stuhlinger: Ion Propulsion to Mars. The Maraqten & Genovese paper is “Advanced Electric Propulsion Concepts for Fast Missions to the Outer Solar System and Beyond,” 73rd International Astronautical Congress (IAC), Paris, France, 18-22 September 2022 (available here). Ernst Stuhlinger’s paper on nuclear-electric propulsion is “Electrical Propulsion System for Space Ships with Nuclear Source,” appearing in the Journal of Astronautics Vol. 2, June 1955, p. 149, and available in manuscript form here. For more background on electric propulsion, see Choueiri, E. Y., “A Critical History of Electric Propulsion: The First 50 Years (1906-1956),” Journal of Propulsion and Power, vol. 20, pp. 193-203, 2004.

M-Dwarfs: The Asteroid Problem

I hadn’t intended to return to habitability around red dwarf stars quite this soon, but on Saturday I read a new paper from Anna Childs (Northwestern University) and Mario Livio (STScI), the gist of which is that a potential challenge to life on such worlds is the lack of stable asteroid belts. This would affect the ability to deliver asteroids to a planetary surface in the late stages of planet formation. I’m interested in this because it points to different planetary system architectures around M-dwarfs than we’re likely to find around other classes of star. What do observations show so far?

You’ll recall that last week we looked at M-dwarf planet habitability in the context of water delivery, again involving the question of early impacts. In that paper, Tadahiro Kimura and Masahiro Ikoma found a separate mechanism to produce the needed water enrichment, while Childs and Livio, working with Rebecca Martin (UNLV), ponder a different question. Their concern is that red dwarf planets would lack the kind of late impacts that produced a reducing atmosphere on Earth. On our planet, the iron cores of impactors would have reacted with water in the oceans, releasing hydrogen as the iron oxidized and producing an atmosphere in which simple organic molecules could emerge.
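The chemistry can be illustrated with a simplified reaction. Assuming impactor iron reacts with water as Fe + H₂O → FeO + H₂ (a common textbook simplification, not necessarily the exact chemistry the paper models), each mole of iron releases one mole of hydrogen gas. A minimal sketch of the stoichiometry:

```python
FE_MOLAR_MASS = 55.845  # g/mol
H2_MOLAR_MASS = 2.016   # g/mol

def hydrogen_released_kg(iron_kg: float) -> float:
    """Mass of H2 from oxidizing iron via the assumed reaction Fe + H2O -> FeO + H2."""
    moles_fe = iron_kg * 1000.0 / FE_MOLAR_MASS
    moles_h2 = moles_fe  # 1:1 stoichiometry in the assumed reaction
    return moles_h2 * H2_MOLAR_MASS / 1000.0

print(f"{hydrogen_released_kg(1.0) * 1000:.1f} g of H2 per kg of iron")
```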

If we do need this kind of impact to shape the atmosphere in a way that enables life (and this is a big ‘if’), we have a problem with M-dwarfs, for delivering asteroids seems to require a giant planet outside the snowline radius to produce a stable asteroid belt.

Depending on the size of the M-dwarf, the snowline radius is found from roughly 0.2 to 1.2 AU, close enough that radial velocity surveys are likely to detect giant planets near but outside this distance. The transit method around such small stars is likewise productive, but we find no such giant planets in those M-dwarf systems where we have so far discovered probable habitable zone planets:

The Kepler detection limit is at orbital periods near 200 days due to the criterion that three transits need to be observed in order for a planet to be confirmed (Bryson et al. 2020). However, in the case of low signal-to-noise observations, two observed transits may suffice, which allows longer-period orbits to be detected. This was the case for Kepler-421 b, which has an orbital period of 704 days (Kipping et al. 2014). Furthermore, any undetected exterior giant planets would likely raise a detectable transit timing variation (TTV) signal on the inner planets (Agol et al. 2004). For these reasons, while the observations could be missing long-period giant planets, the lack of giant planets around low-mass stars that are not too far from the snow line is likely real.
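Kepler’s third law makes the detection problem concrete. In units of AU, years and solar masses, P² = a³/M. The sketch below pairs the two ends of the quoted snowline range with assumed stellar masses of 0.2 and 0.6 solar masses (illustrative, hypothetical pairings, not values from the paper) and compares the resulting periods with the roughly 200-day Kepler limit.

```python
import math

DAYS_PER_YEAR = 365.25
KEPLER_LIMIT_DAYS = 200.0  # approximate three-transit limit quoted above

def orbital_period_days(a_au: float, star_mass_msun: float) -> float:
    """Orbital period from Kepler's third law (planet mass neglected)."""
    return math.sqrt(a_au ** 3 / star_mass_msun) * DAYS_PER_YEAR

# Hypothetical pairings of stellar mass with the 0.2 and 1.2 AU snowline radii.
for mass, snowline in ((0.2, 0.2), (0.6, 1.2)):
    period = orbital_period_days(snowline, mass)
    status = "inside" if period <= KEPLER_LIMIT_DAYS else "beyond"
    print(f"M = {mass} Msun, a = {snowline} AU: P = {period:.0f} d ({status} the limit)")
```

Under these assumptions a giant planet just outside the snowline of a very small M-dwarf falls well within Kepler’s reach, while one beyond the snowline of a more massive M-dwarf can exceed it, which is where the two-transit cases and TTV constraints mentioned in the quote come in.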

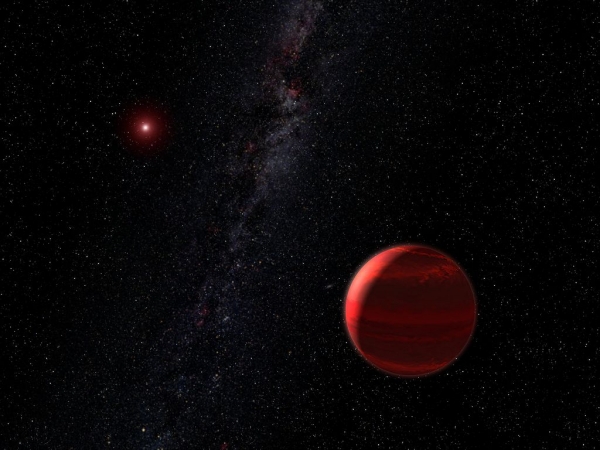

Image: A gas giant in orbit around a red dwarf star. How common is this scenario? We know that such planets can exist, but so far we have never detected a gas giant outside the snowline in a system with a planet in the habitable zone. Credit: NASA, ESA and G. Bacon (STScI).

In the search for stable asteroid belts, what we are looking for is a giant planet beyond the snowline, with the asteroid belt inside its orbit, as well as an inner terrestrial system of planets. None of the M-dwarf systems with currently observed habitable zone planets shows a giant planet in the right position to produce an asteroid belt. That is not to say that such planets do not exist around M-dwarfs, only that we do not yet find any in systems where habitable zone planets occur. Let me quote the paper again:

By analyzing data from the Exoplanet Archive, we found that there are observed giant planets outside of the snow line radius around M dwarfs, and in fact the distribution peaks there. This, combined with observations of warm dust belts, suggests that asteroid belt formation may still be possible around M dwarfs. However, we found that in addition to a lower occurrence rate of giant planets around M dwarf stars, multiplanet systems that contain a giant planet are also less common around M dwarfs than around G-type stars. Lastly, we found a lack of hot and warm Jupiters around M dwarfs, relative to the K-, G-, and F-type stars, potentially indicating that giant planet formation and/or evolution does take separate pathways around M dwarfs.

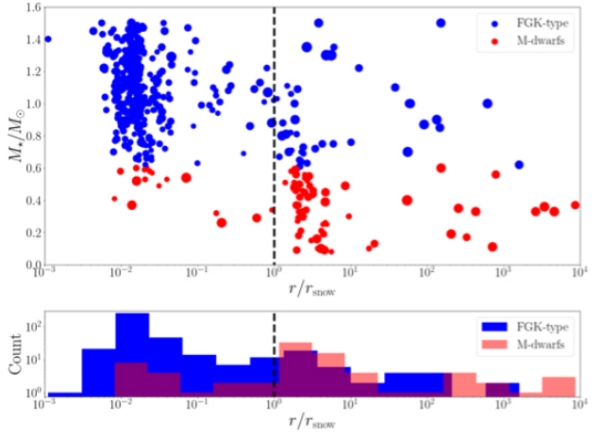

Image: This is Figure 2 from the paper. Caption: Locations of the giant planets, r, normalized by the snow-line radius in the system, vs. the stellar mass, M⋆. The point sizes in the top plot are proportional to the planet mass. Red dots indicate planets around M dwarf stars and blue dots indicate planets around FGK-type stars. The point sizes in the legend correspond to Jupiter-mass planets. The bottom plot shows normalized histograms of the giant planet locations for both single planet and multiplanet systems. The location of the snow line is marked by a black dashed vertical line. Credit: Childs et al.

The issues raised in this paper all point to how little we can say with confidence at this point. Are asteroid impacts really necessary for life to emerge? The question would quickly be resolved by finding biosignatures on an M-dwarf planet without a gas giant in the system, presuming no asteroid belt had formed by other methods. As one with a deep curiosity about M-dwarf planetary possibilities, I find this work intriguing because it points to different architectures around red dwarfs than other stars. It’s a difference we’ll explore as we begin to fill in the blanks by evaluating M-dwarf planets for early biosignature searches.

The paper is Childs et al., “Life on Exoplanets in the Habitable Zone of M Dwarfs?,” Astrophysical Journal Letters Vol. 937, No. 2 (4 October 2022), L42 (full text).