Centauri Dreams

Imagining and Planning Interstellar Exploration

Getting Down to Business with JWST

So let’s get to work with the James Webb Space Telescope. Those dazzling first images received a gratifying degree of media attention, and even my most space-agnostic neighbors were asking me about what exactly they were looking at. For those of us who track exoplanet research, it’s gratifying to see how quickly JWST has begun to yield results on planets around other stars. Thus WASP-96 b, 1150 light years out in the southern constellation Phoenix, a lightweight puffball planet scorched by its star.

Maybe ‘lightweight’ isn’t the best word. Jupiter is roughly 320 Earth masses, and WASP-96 b weighs in at less than half that, but its tight orbit (0.04 AU, or almost ten times closer to its Sun-like star than Mercury) has puffed its diameter up to 1.2 times that of Jupiter. This is a 3.5-day orbit producing temperatures above 800 ℃.

As you would imagine, this transiting world is made to order for analysis of its atmosphere. To follow JWST’s future work, we’ll need to start learning new acronyms, the first of them being the telescope’s NIRISS, for Near-Infrared Imager and Slitless Spectrograph. NIRISS was a contribution to the mission from the Canadian Space Agency. The instrument measured light from the WASP-96 system for 6.4 hours on June 21.

Parsing the constituents of an atmosphere involves taking a transmission spectrum, which examines the light of a star as it filters through a transiting planet’s atmosphere. This can then be compared to the light of the star when no transit is occurring. As specific wavelengths of light are absorbed during the transit, atmospheric gases can be identified. Moreover, scientists can gain information about the atmosphere’s temperature based on the height of peaks in the absorption pattern, while the spectrum’s overall shape can flag the presence of haze and clouds.

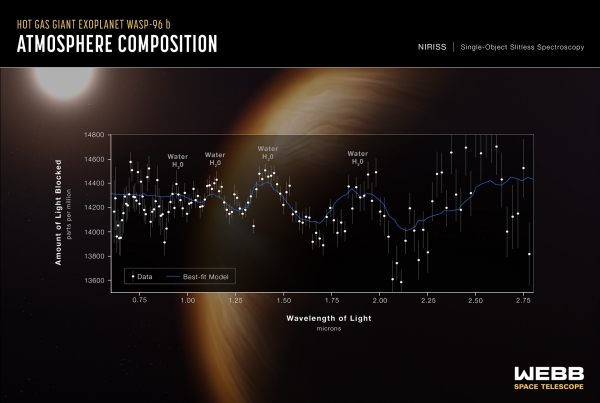

These NIRISS observations captured 280 individual spectra detected in a wavelength range from 0.6 microns to 2.8 microns, thus taking us from red into the near infrared. Even with a relatively large object like a gas giant, the actual blockage of starlight is minute, here ranging from 1.36 percent to 1.47 percent. As the image below reveals, the results show the huge promise of the instrument as we move through JWST’s Cycle 1 observations, nearly a quarter of which are to be devoted to exoplanet investigation.
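As a rough illustration of what those percentages mean, the fraction of starlight blocked during a transit is, to first order, the square of the planet-to-star radius ratio. The sketch below applies that standard approximation to the two depths quoted above. It ignores limb darkening, and the function name is illustrative rather than anything taken from the JWST data pipeline.

```python
import math


def radius_ratio(transit_depth: float) -> float:
    """Planet-to-star radius ratio from a fractional transit depth.

    Uses the first-order relation depth ~ (Rp / Rs)**2 and ignores limb darkening.
    """
    return math.sqrt(transit_depth)


# Depths quoted in the text: 1.36 percent and 1.47 percent of the starlight.
for depth in (0.0136, 0.0147):
    print(f"depth {depth:.2%} -> Rp/Rs ~ {radius_ratio(depth):.4f}")
```

The apparent size of the planet changes by only a few percent from one wavelength to another, and that small variation is the signal from which the spectrum is built.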

Image: A transmission spectrum is made by comparing starlight filtered through a planet’s atmosphere as it moves across the star, to the unfiltered starlight detected when the planet is beside the star. Each of the 141 data points (white circles) on this graph represents the amount of a specific wavelength of light that is blocked by the planet and absorbed by its atmosphere. The gray lines extending above and below each data point are error bars that show the uncertainty of each measurement, or the reasonable range of actual possible values. For a single observation, the error on these measurements is remarkably small. The blue line is a best-fit model that takes into account the data, the known properties of WASP-96 b and its star (e.g., size, mass, temperature), and assumed characteristics of the atmosphere. Credit: NASA, ESA, CSA, and STScI.

No more detailed infrared transmission spectrum has ever been taken of an exoplanet, and this is the first that includes wavelengths longer than 1.6 microns at such resolution, as well as the first to cover the entire wavelength range from 0.6 to 2.8 microns simultaneously. Here we can detect water vapor and infer the presence of clouds, as well as finding evidence for haze in the shape of the slope at the left of the spectrum. Peak heights can be used to deduce an atmospheric temperature of about 725 ℃.

Moving into wavelengths longer than 1.6 microns gives scientists a part of the spectrum that is made to order for the detection of water, oxygen, methane and carbon dioxide, all of which are expected to be found in other exoplanets observed by the instrument, and a portion of the spectrum not available from predecessor instruments. All this bodes well for what JWST will have to offer as it widens its exoplanet observations.

Spatial-Temporal Variance Explanation for the Fermi Paradox

Just how likely is it that the galaxy is filled with technological civilizations? Kelvin F Long takes a look at the question using diffusion equations to probe the possible interactions among interstellar civilizations. Kelvin is an aerospace engineer, physicist and author of Deep Space Propulsion: A Roadmap to Interstellar Flight (Springer, 2011). He is the Director of the Interstellar Research Centre (UK), has been on the advisory committee of Breakthrough Starshot since its inception in 2016, and was the co-founder of Icarus Interstellar and the Initiative/Institute for Interstellar Studies. He has served as editor of the Journal of the British Interplanetary Society and continues to maintain the Interstellar Studies Bibliography, currently listing some 1400 papers on the subject.

by Kelvin F Long

Many excellent papers have been written about the Fermi paradox over the years, and until we find solid evidence for the existence of life or intelligent life elsewhere in the galaxy the best we can do is to estimate based on what we do know about the nature of the world we live in and the surrounding universe we observe across space and time.

Yet ultimately to increase the chances of finding life we need to send robotic probes external to our solar system to visit the planets around other stars. Whilst telescopes can do a lot of significant science, in principle a probe can conduct in-situ reconnaissance of the system to include orbiters, atmospheric penetrators and even landers.

Currently, the Voyager 1 and 2 probes are taking up the vanguard of this frontier and hopefully in the years ahead more will follow in their wake. Although these are only planetary flyby probes and would take tens of thousands of years to reach the nearest stars, our toes have been dipped into the cosmic ocean at least, and this is a start.

If we can send a probe out into the Cosmos, it stands to reason that other civilizations may do the same. As probes from different civilizations explore space, there is a possibility that they may encounter each other. Indeed, it could be argued that first contact between species is more likely to occur through their robotic ambassadors than through the original biological organisms that launched them on their vast journeys.

However, the actual probability of two different probes from alternative points of origin (different species) interacting is low. This is for several reasons. The first relates to astrobiology in that we do not yet know how frequent life is in the galaxy. The second relates to the time of departure of the probes within the galaxy’s history. Two probes may appear in the same region of space, but if this happens millions of years apart then they will not meet. Third, and an issue not often discussed in the literature, is the fact that each probe will have a different propulsion system and so its velocity of motion will be different.

As a result, not only do probes have to contend with relativistic effects with respect to their world of origin (particularly if they are going close to the speed of light), but they will also have to deal with the fact that their clocks are not synchronised with each other. The implication is that for probes interacting from civilizations that are far apart, the relativistic effects become so large that they create a complex scenario of temporal synchronization. This becomes more pronounced the larger the number of different species of probes, and the larger the difference in their respective average speeds. This is a state we might call ‘temporal spaghettification’, in reference to the complex space-time history of the spacecraft trajectories relative to each other.
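To give a feel for how quickly clocks drift apart, here is a minimal sketch using the standard special-relativistic Lorentz factor. The speeds are illustrative choices, not values taken from the author's calculations, and the function name is hypothetical.

```python
import math


def lorentz_factor(beta: float) -> float:
    """Time-dilation factor for a probe moving at beta = v/c, with 0 <= beta < 1."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    return 1.0 / math.sqrt(1.0 - beta * beta)


# Illustrative probe speeds as fractions of the speed of light (assumed values).
for beta in (0.1, 0.5, 0.9, 0.99):
    gamma = lorentz_factor(beta)
    # Time elapsed on the probe's clock for every 100 years in the launch frame.
    print(f"{beta:.2f}c: gamma = {gamma:.3f}, ship time per 100 yr = {100 / gamma:.1f} yr")
```

Two probes launched at different speeds accumulate different elapsed times over the same journey, and the mismatch grows sharply as either speed approaches that of light.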

An implication of this is that ideas like the Isaac Asimov Foundation series, where vast empires are constructed across hundreds or thousands of light years of space, do not seem plausible. This is particularly the case for ultra-fast speeds (where relativistic effects dominate) that do approach the speed of light. In general, the faster the probe speeds and the further apart the separate civilizations, the more pronounced the effect. In 2016 this author framed the idea as a postulate:

“Ultra-relativistic spaceflight leads to temporal spaghettification and is not compatible with galaxy wide civilizations interacting in stable equilibrium.”

Another consequence of ultra-fast speeds is that if civilizations do interact, it will not be possible to prevent the technology (i.e. power and propulsion) associated with the more advanced race from eventually emerging within the other species at some point in the future. Imagine, for example, if a species turned up with faster than light drives and simply chose to share that technology, even if for a price, as a part of a cultural information exchange.

Should such a culture refuse to share that technology with us, we would likely work towards its fruition anyway. This is because our knowledge of its existence will promote research within our own science to work towards its realisation. Alternatively, knowledge of that technology will eventually just leak out and be known by others.

There is also a statistical probability that if it can be invented by one species, it will be invented by another, as a consequence of the law of large numbers. As a result, when one species has this technology and starts interacting with others, eventually many other species will obtain it, even if it takes a long time to mature. We might think of this as a form of technological equilibration, by analogy with thermodynamics.

Ultimately, this implies that it is not possible to contain the information associated with the technology forever once species-species interaction begins. Indeed, it has been discovered that even the gravitational prisons of light (black holes) are leaky through Hawking evaporation. The idea that there is no such thing as a permanently closed system was also previously framed as a second postulate by this author:

“No information can be contained in any system indefinitely.”

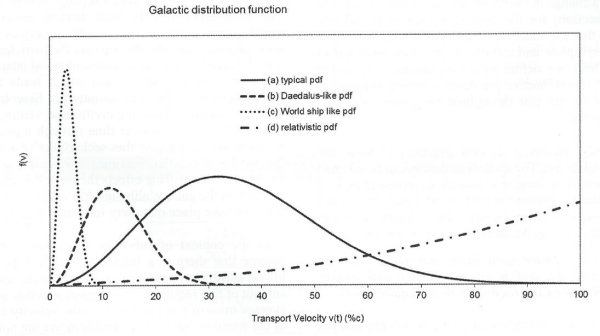

Adopting analogues from plasma physics and the concept of distribution functions, we can imagine a scenario in which within a galaxy there are multiple populations, each sending out waves of probes at some average expansion velocity. If most of the populations adopted fusion propulsion technology, for example, as their choice of interstellar transport, then the average velocity might be around 0.1c (i.e. plausible speeds for fusion propulsion are 0.05-0.15c) and this would then define the peak of a velocity distribution function.

The case of human-carrying ships may be represented by world ships traveling at the slow speeds of 0.01-0.03c. In the scenario of the majority of the populations employing a more energetic propulsion method, such as using antimatter fuel, the peak would shift to the right. In general, the faster the average expansion speed, the further to the right the peak would shift, since the peak represents the average velocity.

The more the populations interacted, the greater the technological equilibration over time, and this could see a gradual shift into the relativistic and then ultra-relativistic (>0.9c) speed regimes. Yet, because of the limit imposed by the speed of light (~300,000 km/s or 1c), the peak would move asymptotically towards that limiting value.

There is also the special case of faster-than-light travel (ftl), but by the second postulate if any one civilization develops it then eventually many of the others will also develop it. Then as the mean velocity of many of the galactic populations tends towards some ftl value, you get a situation where many civilizations can now leave the galaxy, creating a massive population expansion outwards, as starships are essentially capable of reaching other galaxies. That population would also be expanding inwards to the other stars within our galaxy since trip times are so short. Indeed, ships would also be arriving from other galaxies due to the ease of travel. But if this were the case, starships would be arriving in Earth orbit by now.

In effect, the more those civilizations interact, the more the average speed of spacecraft in the galaxy would shift to higher speeds, and eventually this average would begin to move asymptotically towards ftl (assuming it is physically possible), which is an effect we might refer to as ‘spatial runaway’ since there is no longer any tendency towards some equilibrium speed limit. In addition, the ubiquity of ftl transport comes with all sorts of implications for communications and causality and in general creates a chaotic scenario that does not lean towards a stable state.

This then leads to the third postulate:

“Faster than light spaceflight leads to spatial runaway, and is not compatible with galaxy wide civilizations interacting in stable equilibrium.”

Each species that is closely interacting may start out with different propulsion systems so that they have an average speed of population expansion, but if technology is swapped there will be some sort of equilibration that will occur such that all species tend towards some mean velocity of population diffusion.

The modeling of a population density of a substance is borrowed from stochastic potential theory, with discrete implementation for the quantization of space and time intervals by the use of average collision parameters. This is analogous to problems such as Brownian motion, where particles undergo a random walk. This can be adopted as an analogy to explain the motion of a population of interstellar probes dispersing through the galaxy from a point of origin.

Modeling population interaction is best done using the diffusion equation of physics, which is derived from Fick’s first and second laws for the dispersion of a material flux, together with the continuity equation. This is a second-order partial differential equation, and its solution describes a population that starts at some initial high density and drops to some low density. The solution is given by a flux equation which is a function of both distance and time, and it is proportional to the exponential of the negative distance squared.
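For reference, the standard one-dimensional textbook form of these relations can be written as below. This is a sketch under assumed notation ($\rho$ for population density, $J$ for flux, $D$ for the diffusion coefficient, $N_0$ for an initial population released at the origin); the author's paper may use a different geometry or normalization.

```latex
\begin{align}
J &= -D \,\frac{\partial \rho}{\partial x}
  && \text{(Fick's first law)} \\
\frac{\partial \rho}{\partial t} &= D \,\frac{\partial^{2} \rho}{\partial x^{2}}
  && \text{(Fick's second law, via the continuity equation)} \\
\rho(x,t) &= \frac{N_0}{\sqrt{4 \pi D t}} \exp\!\left(-\frac{x^{2}}{4 D t}\right)
  && \text{(point-source solution)}
\end{align}
```

The exponential of the negative distance squared in the last line is what produces the sharp fall-off in probe density far from the point of origin.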

Using this physics as a model, it is possible to show that the galaxy can be populated within only a couple of million years, but even faster if the population is growing rapidly, as for instance via von Neumann self-replication. A key part of the use of the diffusion equation is the definition of a diffusion coefficient which is equal to ½(distance squared/time), where the distance is the average collision distance between stars (assumed to be around 5 light years) and time is the average collision time between stars (assumed to be between 50-100 years for 0.05-0.1c average speed). These relatively low cruise speeds were chosen because the calculations were conducted in relation to fusion propulsion designs only.
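The diffusion coefficient defined above is easy to evaluate for the quoted numbers. The sketch below does so in units of light years squared per year; the function and constant names are illustrative, and the hop time is simply the star spacing divided by the cruise speed.

```python
def diffusion_coefficient(distance_ly: float, time_yr: float) -> float:
    """D = 0.5 * distance**2 / time, in light years squared per year."""
    return 0.5 * distance_ly ** 2 / time_yr


STAR_SPACING_LY = 5.0  # average collision distance between stars, from the text

for speed_c in (0.05, 0.1):
    # Time to hop between neighbouring stars: 100 yr at 0.05c, 50 yr at 0.1c.
    hop_time_yr = STAR_SPACING_LY / speed_c
    d = diffusion_coefficient(STAR_SPACING_LY, hop_time_yr)
    print(f"{speed_c:.2f}c: hop time {hop_time_yr:.0f} yr, D = {d:.3f} ly^2/yr")
```

Doubling the cruise speed halves the hop time and so doubles the diffusion coefficient, from 0.125 to 0.25 ly² per year.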

For probes that eventually manufacture one other probe on average (i.e., not fully self-reproducing), this might be seen as analogous to a critical nuclear state. Where the probe reproduction rate drops to less than unity on average, this is like a sub-critical state and eventually the probe population will fall off until some stagnation horizon is reached. For example, calculations by this author using the diffusion equation show that with an initial population as large as 1 million probes, each traveling at an average velocity of 0.1c, after about 1,000 years the population would have stagnated at a distance of approximately 100 light years.

If, however, the number of probes being produced is greater than unity, such as through self-replicating von Neumann probes, then the population will grow from a low density state to a high density state as a type of geometrical progression. This is analogous to a supercritical state. For example, if each probe produced a further two probes on average from a starting population of 10 probes, then by the 10th generation there would be roughly 10,000 probes in the population.

Assume that there are at least 100 billion stars in the Milky Way galaxy. For the number of von Neumann probes in the population to equal that number of stars would only require a starting population of less than 100 probe factories, with each producing 10 replication probes, and after only 10 generations of replication. This underscores the argument made by some such as Boyce (Extraterrestrial Encounter, A Personal Perspective, 1979) that von Neumann-like replication probes should be here already. The suggestion of self-replicating probes was advanced by Bracewell (The Galactic Club: Intelligent Life in Outer Space, 1975) but has its origins in automata replication and the research of John von Neumann (Theory of Self-Reproducing Automata, 1966).
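The two replication examples above can be checked with a few lines of arithmetic. This is a minimal sketch that counts only the newest generation, which is an assumption about how the figures were tallied; the function name is hypothetical.

```python
def probes_after(start: int, offspring_per_probe: int, generations: int) -> int:
    """Size of the newest generation when every probe builds a fixed number of successors."""
    return start * offspring_per_probe ** generations


# 10 probes, each producing 2 successors, after 10 generations.
print(probes_after(10, 2, 10))   # 10240

# 10 factories, each producing 10 probes, after 10 generations.
print(probes_after(10, 10, 10))  # 100000000000
```

On this counting, as few as 10 seed factories are enough to match 100 billion stars after 10 generations, comfortably inside the "less than 100" quoted above.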

Any discussion about robotic probes interacting is also a discussion about the number of intelligent civilizations – such probes had to be originally designed by someone. It is possible that these probes are no longer in contact with their originator civilization, which may be many hundreds of light years away. This is why such probes would have to be fully autonomous in their decision making capability. Indeed, it could be argued that the human species is more likely to first meet an artificial intelligence-based robotic probe than an alien biological organism. It may also be the case that in reality there is no difference, if biological entities have figured out how to go fully artificial and avoid their mortal fate.

Indeed, when considering the future of Homo Sapiens and our continued convergence with technology the science and science fiction writer Arthur C Clarke referred to a new species that would eventually emerge, which he called Homo Electronicus. He depicted it thus:

“One day we may be able to enter into temporary unions with any sufficiently sophisticated machines, thus being able not merely to control but to become a spaceship or a submarine or a TV network….the thrill that can be obtained from driving a racing car or flying an aeroplane may be only a pale ghost of the excitement our great grandchildren may know, when the individual human consciousness is free to roam at will from machines to machine, through all reaches of sea and sky and space.” (Profiles of the Future, 1962).

So even the idea of separating a biological organism from a machine intelligence may be an incorrect description of the likely encounter scenarios of the future. A von Neumann robotic spacecraft could turn up in our orbit tomorrow and from a cultural information exchange perspective there may be no distinction. It is certainly the case that robotic probes are more suited for the environment of space than biological organisms that require a survival environment.

Consider a thought experiment. Assume the galaxy’s disc diameter is 100,000 light years and consider only one dimension of space. A population of probes starts out at one end with an average diffusion wave speed of around 10 percent of the speed of light (0.1c). We assume no stopping and instantaneous time between populations of diffusion waves (in reality, there would be a superposition of diffusion waves propagating as a function of distance and time). This diffusion wave would take on the order of 1 million years to cross from one side of the galaxy to the other. We can continue this thought experiment and imagine that the same population starts at the centre and expands out as a spherical diffusion wave. Assuming that the wave did not dissipate and continued to grow, then the time to cover the galactic disc would be approximately half of what it would be had the wave started on one side.

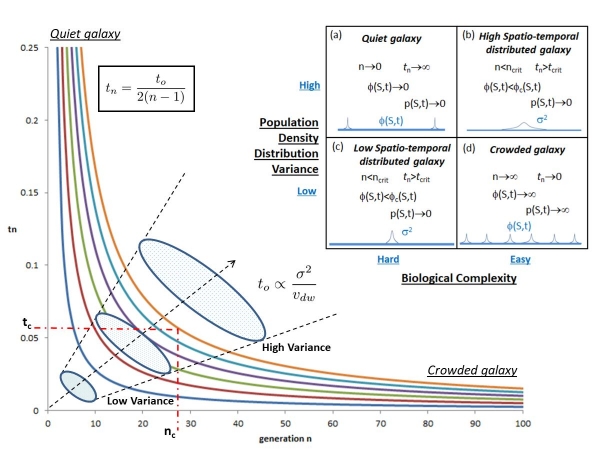

Now imagine there are two originating civilizations, each sending out populations of probes that continue to grow and do not dissipate. These two civilizations are located at opposite ends of the galaxy. The time for the galaxy to be covered by the two populations will now be half of that for a single population starting out on the edge of the disc. We can continue to add more populations, $n = 1, 2, 3, 4, 5, 6, \ldots$, and we get $t, t/2, t/4, t/6, t/8, t/10, \ldots$; we eventually find that for $n > 1$ the interaction time follows a series of the form $t_n = t_0 / [2(n-1)]$, where $t_0$ is the galactic crossing timescale (i.e. 1 million years) assumed for an initiating population of probes derived from a single civilization, which is a function of the diffusion wave speed.

So for a high number of initiating populations, where $n \to \infty$, the interaction time between populations will be low, so that $t_n \to 0$, and the probability of interaction is therefore high. However, for a low number of initiating populations, where $n \to 0$, the interaction time between populations will be high, so that $t_n \to \infty$; thus the timescales between potential interactions are a lot larger and the probability of interaction is therefore low.
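The thought experiment and the series above can be sketched numerically as follows. The code assumes the one-dimensional picture described in the text (a 100,000 light year disc and a 0.1c diffusion wave), and the function names are illustrative.

```python
GALAXY_DIAMETER_LY = 100_000  # disc diameter assumed in the text
WAVE_SPEED_C = 0.1            # diffusion wave speed as a fraction of c


def crossing_time_yr(distance_ly: float, speed_c: float) -> float:
    """Years for a wave moving at speed_c (a fraction of c) to cover distance_ly."""
    return distance_ly / speed_c


def interaction_time_yr(n: int, t0_yr: float) -> float:
    """t_n = t0 / (2 * (n - 1)) for n > 1; a single population takes the full t0."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return t0_yr if n == 1 else t0_yr / (2 * (n - 1))


t0 = crossing_time_yr(GALAXY_DIAMETER_LY, WAVE_SPEED_C)
for n in (1, 2, 3, 4, 5, 6, 100):
    print(f"n = {n:>3}: t_n ~ {interaction_time_yr(n, t0):,.0f} yr")
```

With a crossing time of about a million years, six populations already bring the interaction time down to roughly 100,000 years, and the value keeps shrinking towards zero as more populations are added.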

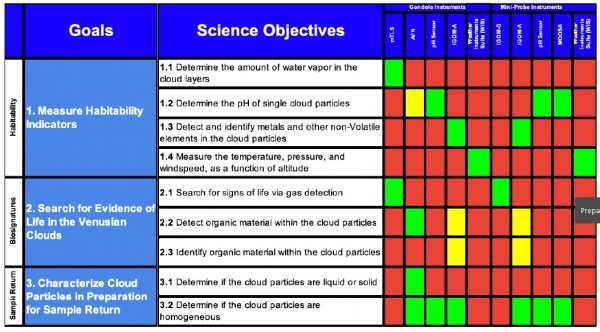

It is important to clarify the definition of interaction time used here. The shorter the interaction time, the higher the probability of interaction, since the time between effective overlapping diffusion waves is short. Conversely, where the interaction time is long, the time between overlapping diffusion waves is long and so the probability of interaction is low. The illustrated graphic below demonstrates these limits and the boxes are the results of diffusion calculations and the implications for population interaction.

As discussed by Bond & Martin (‘Is Mankind Unique?’, JBIS 36, 1983), the graphic illustrates two extreme viewpoints about intelligence within the galaxy. The first is known as Drake-Sagan chauvinism and advocates for a crowded galaxy. This has been argued by Shklovskii & Sagan (‘Intelligent Life in the Universe’, 1966), Sagan & Drake (The Search for Extraterrestrial Intelligence, 1975). In the graphic this occurs when $n \to \infty$, $t_n \to 0$, so that the probability of interaction is extremely high.

This is especially so since there is likely to be a large superposition of diffusion waves overlapping each other. This effect would become more pronounced for multiple populations of vN probes diffusing simultaneously. We note also that an implication of this model for the galaxy is that if there are large populations of probes, then there must have been large populations of civilizations to launch them, which implies that the many steps to complexity in astrobiology are easier than we might believe. In terms of diffusion waves this scenario is characterised by very high population densities such that $\rho(S,t) \to \infty$, which also implies that the probability of probe-probe interaction is high, $p(S,t) \to 1$. This is box (d) in the graphic.

The second viewpoint is known as Hart-Viewing chauvinism and advocates for a quiet galaxy. This has been argued by Tipler (‘Extraterrestrial Intelligent Beings do not Exist’, 1980), Hart (‘An Explanation for the Absence of Extraterrestrials on Earth’, 1975) and Viewing (‘Directly Interacting Extraterrestrial Technological Communities’, 1975). This occurs when $n \to 0$, $t_n \to \infty$, so that the probability of interaction is extremely low. In contrast with the first argument, this might imply that the many steps to complexity in astrobiology are hard. This scenario is characterised by very low population densities such that $\rho(S,t) \to 0$, so that few diffusion waves can be expected and also that the probability of interaction is low, $p(S,t) \to 0$. This is box (a) in the graphic.

In discussing biological complexity, we are referring to the difficulty in going from single celled to multi-celled organisms, but then also to large animals, and then to intelligent life which proceeds towards a state of advanced technological attainment. A state where biology is considered ‘easy’ is when all this happens regularly provided the environmental conditions for life are met within a habitat. A state where biology is considered ‘hard’ may be, for example, where it may be possible for life to emerge purely as a function of chemistry but building that up to more complex life such as to an intelligent life-form that may one day build robotic probes is a lot more difficult and less probable. This is a reference to the science of astrobiology which will not be discussed further here. However, since the existence of robotic probes would require a starting population of organisms it has to be mentioned at least.

Given that these two extremes are the limits of our argument, it stands to reason that there must be transition regimes in between which either work towards or against the existence of intelligence and therefore the probability of interaction. The right set of parameters would be optimum to explain our own thinking around the Fermi paradox in terms of our theoretical predictions being in contradiction to our observations.

As shown in the graphic, it comes down to the variance $\sigma^2$ of the statistical distribution for the distance $S$ of a number of probe populations $n_i$ within a region of space in a galaxy (not necessarily a whole galaxy), where the variance is the square of the standard deviation $\sigma$ relative to a mean distance between population sources $\mu_S$. In other words, it depends on whether the originating civilizations that initiated the probe populations are closely compacted or widely spread out.

A region of space which had a high probe population density (not spread out, i.e. a sharp distribution function) would be characterised by a low variance. A region with a low probe population density (widely distributed, i.e. a flattened distribution function) would be characterised by a high variance. The starting interaction time $t_o$ of two separate diffusion waves from independent civilizations would then be proportional to the variance and inversely proportional to the diffusion wave velocity $v_{dw}$ of each population, such that $t_o$ is proportional to $\sigma^2 / v_{dw}$.
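A small numerical sketch may help fix the idea. The source positions below are entirely hypothetical, chosen only to contrast a compact cluster of civilizations with a widely scattered one, and because the text gives a proportionality rather than an equation, only the ratio between the two results is meaningful.

```python
import statistics


def relative_interaction_time(source_distances_ly, wave_speed_c):
    """Quantity proportional to variance / wave speed; only ratios are meaningful."""
    return statistics.pvariance(source_distances_ly) / wave_speed_c


# Hypothetical source positions along one dimension, in light years.
compact = [90, 100, 110, 95, 105]
spread = [10, 400, 900, 50, 1500]

for label, sources in (("compact", compact), ("spread", spread)):
    sigma = statistics.pstdev(sources)
    rel_time = relative_interaction_time(sources, 0.1)
    print(f"{label}: sigma = {sigma:.1f} ly, relative interaction time = {rel_time:.0f}")
```

The compact set has the smaller variance and therefore the shorter relative interaction time, in line with the low-variance, high-density case described above.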

Going back to the graphic, there comes a point where the number of populations of probes becomes less than some critical number, $n < n_c$, the value of which we do not know, but as this threshold is crossed the interaction time will also increase past a critical value, $t_n > t_c$. In box (c) of the graphic, biology is ‘hard’ and so despite the low variance the population density will be less than some critical value, $\rho(S,t) < \rho_c(S,t)$, which means that the probability of probe-probe interaction will be low, $p(S,t) \to 0$. This is referred to as a low spatio-temporal distributed galaxy. Whereas for box (b) of the graphic, although biology may be ‘easy’, the large variance of the populations makes for a low population density of the total combined and so also a low probability of probe-probe interaction. This is referred to as a high spatio-temporal distributed galaxy.

Taking all this into account and assessing the Milky Way, we don’t see evidence of a crowded galaxy, which would rule out box (d) in the graphic. In this author’s opinion, the existence of life on Earth and its diversity does not, at the least, imply consistency with a quiet galaxy (unless one is invoking something special about planet Earth). That case is box (a) in the graphic. On the basis of all this, we might consider a fourth postulate along the following lines:

“The probability of interaction for advanced technological intelligent civilizations within a galaxy strongly depends on the number of such civilizations, and their spatial-temporal variance.”

Due to the exponential fall-off in the solution of the diffusion wave equation, the various calculations by this author suggest that intelligent life may occur at distances of less than ~200 ly, which for a 100-200 kly diameter galaxy might suggest somewhere in the range of ~500-1,000 intelligent civilizations along a galactic disc. Given the vast numbers of stars in the galaxy this would lean towards a sparsely populated galaxy, but one where civilizations do occur. Then considering the calculated time scales for interaction, the high probability of von Neumann probes or other types of probes interacting therefore remains.
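One way to read the quoted range, assuming it comes from simply dividing the disc diameter $L$ by a typical separation $d$ of about 200 light years (an assumption here, not a statement of the author's method), is the following arithmetic:

```latex
\[
N \approx \frac{L}{d}, \qquad
\frac{100{,}000\ \text{ly}}{200\ \text{ly}} = 500, \qquad
\frac{200{,}000\ \text{ly}}{200\ \text{ly}} = 1{,}000
\]
```

This counts civilizations along a single line across the disc, which matches the phrase "along a galactic disc" rather than a count over the whole galactic volume.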

We note that the actual diffusion calculations performed by this author showed that even with a seed population of 1 billion probes, the distance where the population falls off was at around ~164 ly. This is not too dissimilar to the independent conclusion of Betinis (“On ETI Alien Probe Flux Density”, JBIS, 1978) who calculated that the sources of probes would likely be somewhere within 70-140 ly. Bond and Martin (‘A Conservative Estimate of the Number of Habitable Planets in the Galaxy’ 1978) also calculated that the average distance between habitable planets was likely ~110 ly and ~140 ly between intelligent life relevant planets. Sagan (‘Direct Contact Among Galactic Civilizations by Relativistic Interstellar Spaceflight’, 1963) also calculated that the most probable distance to the nearest extant advanced technical civilization in our galaxy would be several hundred light years. This all implies that an extraterrestrial civilization would be at less than several hundred light years distance, and this therefore is where we should focus search efforts.

When it comes down to the Fermi paradox, this analysis implies that we live in a moderately populated galaxy, and so the probability of interaction is low when considering both the spatial and temporal scales. However, when it comes to von Neumann probes it is clear that the galaxy could potentially be populated in a timescale of less than a million years. This implies they should be here already. As we perhaps ponder recent news stories that are gaining popular attention, we might once again consider the words of Arthur C Clarke in this regard:

“I can never look now at the Milky Way without wondering from which of those banked clouds of stars the emissaries are coming…I do not think we will have to wait for long.” (‘The Sentinel’, 1951).

The content of this article is by this author and appears in a recently accepted 2022 paper for the Journal of the British Interplanetary Society titled ‘Galactic Crossing Times for Robotic Probes Driven by Inertial Confinement Fusion Propulsion’, as well as in an earlier paper published in the same journal titled ‘Unstable Equilibrium Hypothesis: A Consideration of Ultra-Relativistic and Faster than Light Interstellar Spaceflight’, JBIS, 69, 2016.

Probing the Galaxy: Self-Reproduction and Its Consequences

In a long and discursive paper on self-replicating probes as a way of exploring star systems, Alex Ellery (Carleton University, Ottawa) digs, among many other things, into the question of what we might detect from Earth of extraterrestrial technologies here in the Solar System. The idea here is familiar enough. If at some point in our past, a technological civilization had placed a probe, self-replicating or not, near enough to observe Earth, we should at some point be able to detect it. Ellery believes such probes would be commonplace because we humans are developing self-replication technology even today. Thus a lack of probes would indicate that there are no extraterrestrial civilizations to build them.

There are interesting insights in this paper that I want to explore, some of them going a bit far afield from Ellery’s stated intent, but worth considering for all that. SETA, the Search for Extraterrestrial Artifacts, is a young endeavor but a provocative one. Here self-replication attracts the author because probing a stellar system is a far different proposition than colonizing it. In other words, exploration per se — the quest for information — is a driver for exhaustive seeding of probes not limited by issues of sustainability or sociological constraints. Self-replication, he believes, is the key to exponential exploration of the galaxy at minimum cost and greatest likelihood of detection by those being studied.

Image: The galaxy Messier 101 (M101, also known as NGC 5457 and nicknamed the ‘Pinwheel Galaxy’) lies in the northern circumpolar constellation, Ursa Major (The Great Bear), at a distance of about 21 million light-years from Earth. This is one of the largest and most detailed photos of a spiral galaxy that has been released from Hubble. How long would it take a single civilization to fill a galaxy like this with self-replicating probes? Image credit: NASA/STScI.

Growing the Idea of Self-Reproduction

Going through the background to ideas of self-replication in space, Ellery cites the pioneering work of Robert Freitas, and here I want to pause. It intrigues me that Freitas, the man who first studied the halo orbits around the Earth-Moon L4 and L5 points looking for artifacts, is also responsible for one of the earliest studies of machine self-replication in the form of the NASA/ASEE study in 1980. The latter had no direct interstellar intent but rather developed the concept of a self-replicating factory on the lunar surface using resources mined by robots. Freitas would go on to explore a robot factory coupled to a Daedalus-class starship called REPRO, though one taken to the next level and capable of deceleration at the target star, where the factory would grow itself to its full capabilities upon landing.

I should mention that following REPRO, Freitas would turn his attention to nanotechnology, a world where payload constraints are eased and self-reproduction occurs at the molecular level. But let’s stick with REPRO a moment longer, even though I’m departing from Ellery in doing so. For in Freitas’ original concept, half the REPRO payload would be devoted to self-reproduction, with a specialized payload exploiting the resources of a gas giant moon to produce a new REPRO probe every 500 years.

As you can see, the REPRO probe would have taken Project Daedalus’ onboard autonomy to an entirely new level. Freitas’ studies foresaw thirteen distinct robot species, among them chemists, miners, metallurgists, fabricators, assemblers, wardens and verifiers. Each would have a role to play in the creation of the new probe. The chemist robots, for example, were to process ore and extract the heavy elements needed to build the factory on the moon of the gas giant planet. Aerostat robots would float like hot-air balloons in the gas giant’s atmosphere, where they would collect the needed propellants for the next generation REPRO probe. Fabricators would turn raw materials (produced by the metallurgists) into working parts, from threaded bolts to semiconductor chips, while assemblers created the modules that would build the initial factory. Crawler robots would specialize in surface hauling, while wardens, as with Project Daedalus, remained responsible for maintenance and repair of ship systems.

I spend so much time on this because of my fascination with the history of interstellar ideas. In any case, I don’t know of any earlier studies that explored self-reproduction in the interstellar context and in terms of mission hardware than Freitas’ 1980 paper “A Self-Reproducing Interstellar Probe” in JBIS, which is conveniently available online. This was a step forward in interstellar studies, and I want to highlight it with this quotation from its text:

A major alternative to both the Daedalus flyby and “Bracewell probe” orbiter is the concept of the self-reproducing starprobe. Replicating spacefaring machines recently have received a cursory examination by Calder [4] and Boyce [5], but the basic feasibility of this approach has never been seriously considered despite its tremendous potential. In theory, each self-reproducing device dispatched by the launching society would become an independent agent, slowly scouting the Galaxy for evidence of life, intelligence and civilization. While such machines might be costlier to design and construct, given sufficient time a relatively few replicating starprobes could search the entire Milky Way.

The present paper addresses the plausibility of self-reproducing starprobes and the basic parameters of feasibility. A subsequent paper [10] compares reproductive and nonreproductive probe search strategies for missions of interstellar and galactic exploration.

Hart, Tipler and the Spread of Intelligence

These days, as Freitas went on to explore, massive redundancy, miniaturization and self-assembly at the molecular level have moved into tighter focus as we contemplate missions to the stars. The enormous Daedalus-style craft (54,000 tonnes initial mass, including 50,000 tonnes of fuel and 500 tonnes of scientific payload) and its successors, while historically important, also resonate a bit with Captain Nemo’s Nautilus: spectacular creations of the imagination that defied no laws of physics, but remain in tension with the realities of payload and propulsion. Today we explore miniaturization, with Breakthrough Starshot’s tiny payloads as one example.

But back to Ellery. From a philosophical standpoint, self-reproduction, he rightly points out, had also been considered by Michael Hart and Frank Tipler, each noting that if self-replication were possible, a civilization could fill the galaxy in a relatively short (compared to the age of the galaxy) timeframe. Ultimately self-reproducing probes exploit local materials upon arrival and make copies of themselves, a wave of exploration that would ensure every habitable planet had an attendant probe. Thus the Hart/Tipler contention that the lack of evidence for such a probe is an indication that extraterrestrial intelligence does not exist, an idea that still has currency.

Would any exploring civilization turn to self-replication? The author sees many reasons to do so:

There are numerous reasons to send out self-replicating probes – reconnaissance prior to interstellar migration, first-mover advantage, insurance against planetary disaster, etc – but only one not to – indifference to information growth (which must apply to all extant ETI without exception). Self-replicating probes require minimal capital investment and represent the most economical means to explore space, interstellar space included. In a real sense, self-replicating machines cannot become obsolete – new design developments can be broadcast and uploaded to upgrade them when necessary. Once the self-replicating probe is established in a star system, the probe may be exploited in various ways. The universal construction capability ensures that the self-replicating probe can construct any other device.

Probes that can fill the galaxy extract maximum information and can not only monitor but communicate with local species. Should a civilization choose to implement panspermia in systems devoid of life, the capability is implicit here, including “the prospect of exploiting microorganism DNA as a self-replicating message.” Such probes could also, in the event of colonization at a later period, establish needed infrastructure for the new arrivals, with the possibility of terraforming.

Thus probes like these become a route from Kardashev II to III. In fact, as Ellery sees it, if a Kardashev Type I civilization is capable of self-reproduction technology – and remember, Ellery believes we are on the cusp of it now – then the entire Type I phase may be relatively short on the way to Kardashev Types II and III, perhaps as little as a few thousand years. It’s an interesting thought given our current status somewhere around Kardashev 0.72, beset by problems of our own making and wondering whether we will survive long enough to establish a Type I civilization.

Image: NASA’s James Webb Space Telescope has produced the deepest and sharpest infrared image of the distant universe to date. Known as Webb’s First Deep Field, this image of galaxy cluster SMACS 0723 is overflowing with detail. Thousands of galaxies – including the faintest objects ever observed in the infrared – have appeared in Webb’s view for the first time. This slice of the vast universe covers a patch of sky approximately the size of a grain of sand held at arm’s length by someone on the ground. If self-reproducing probes are possible, are all galaxies likely to be explored by other civilizations? Credit: NASA, ESA, CSA, and STScI.

Early Days for SETA

The question of diffusion through the galaxy here gets a workover from a theory called TRIZ (Teorija Reshenija Izobretatel’skih Zadach), which Ellery uses to analyze the implications of self-reproduction, finding that the entire galaxy could be colonized within 24 probe generations. This produces a population of 424 billion probes. He’s assuming a short replication time at each stop – a few years at most – and thus finds that the spread of such probes is dominated by the transit time across the galactic plane, a million year process to complete assuming travel at a tenth of lightspeed.

Given this short timespan compared with the age of the Galaxy, our Galaxy should be swarming with self-replicating probes yet there is no evidence of them in our solar system. Indeed, it only requires a civilization to exist long enough to send out such probes as they would thenceforth continue to propagate through the Galaxy even if the sending civilization were no more. And of course, it requires only one ETI to do this.
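The arithmetic behind these figures is easy to check. What follows is a minimal sketch, not Ellery’s actual model: it assumes each probe builds three offspring (a branching factor chosen here because it reproduces the quoted total, not one stated in the text) and a galactic diameter of 100,000 light years.

```python
# Rough check of the probe population and crossing time quoted above.
# OFFSPRING_PER_PROBE and GALAXY_DIAMETER_LY are illustrative assumptions,
# not values taken from Ellery's paper.

OFFSPRING_PER_PROBE = 3        # assumed branching factor
GENERATIONS = 24               # from the text
GALAXY_DIAMETER_LY = 100_000   # assumed diameter of the galactic disk
PROBE_SPEED_C = 0.1            # a tenth of lightspeed, from the text


def total_probes(offspring: int, generations: int) -> int:
    """Sum of a geometric series: every probe built in generations 0..N."""
    return sum(offspring ** g for g in range(generations + 1))


def crossing_time_years(distance_ly: float, speed_c: float) -> float:
    """Travel time in years for a distance in light years at a fraction of c."""
    return distance_ly / speed_c


population = total_probes(OFFSPRING_PER_PROBE, GENERATIONS)
transit_years = crossing_time_years(GALAXY_DIAMETER_LY, PROBE_SPEED_C)

print(f"Total probes: {population / 1e9:.0f} billion")
print(f"Crossing time: {transit_years / 1e6:.1f} million years")
```

Under these assumptions the total comes to roughly 424 billion probes and the transit to about a million years, which is why a replication time of a few years at each stop barely affects the result.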

Part of Ellery’s intent is to show how humans might create a self-replicating probe, going through the essential features of such and arguing that self-replication is near- rather than long-term, based on the idea of the universal constructor, a machine that builds any or all other machines including itself. Here we find intellectual origins in the work of Alan Turing and John von Neumann. Ellery digs into 3D printing and ongoing experiments in self-assembly as well as in-situ resource utilization of asteroid material, and along the way he illustrates probe propulsion concepts.

At this stage of the game in SETA, there is no evidence of self-replication or extraterrestrial probes of any kind, the author argues:

There is no observational evidence of large structures in our solar system, nor signs of large-scale mining and processing, nor signs of residue of such processes. Our current terrestrial self-replication scheme with its industrial ecology is imposed by the requirements for closure of the self-replication loop that (i) minimizes waste (sustainability) to minimize energy consumption; (ii) minimizes materials and components manufacture to minimize mining; (iii) minimizes manufacturing and assembly processes to minimize machinery. Nevertheless, we would expect extensive clay residues. We conclude therefore that the most tenable hypothesis is that ETI do not exist.

The answer to that contention is, of course, that we haven’t searched for local probes in any coordinated way, and that now that we are becoming capable of minute analysis of, for instance, the lunar surface (through Lunar Reconnaissance Orbiter imagery, for one), we can become more systematic in the investigation, taking in Earth co-orbitals, as Jim Benford has suggested, or looking for signs of lurkers in the asteroid belt. Ellery notes that the latter might demand searching for signs of resource exploitation there as opposed to finding an individual probe amidst the plethora of candidate objects.

But Ellery is adamant that efforts to find such lurkers should continue, citing the need to continue what has been up to now a meager and sporadic effort to conduct SETA. I’m going to recommend this paper to those Centauri Dreams readers who want to get up to speed on the scholarship on self-reproduction and its consequences. Indeed, the ideas jammed into its pages come at a bewildering pace, but the scholarship is thorough and the references handy to have in one place. Whether self-reproducing probes are indeed imminent is a matter for debate but their implications demand our attention.

The paper is Ellery, “Self-replicating probes are imminent – implications for SETI,” International Journal of Astrobiology 8 July 2022 (full text). A companion paper published at the same time is “Curbing the fruitfulness of self-replicating machines,” International Journal of Astrobiology 8 July 2022 (full text).

Two Close Stellar Passes

Interstellar objects are much in the news these days, as witness the flurry of research on ‘Oumuamua and 2I/Borisov. But we have to be cautious as we look at objects on hyperbolic orbits, avoiding the assumption that any of these are necessarily from another star. Spanish astronomers Carlos and Raúl de la Fuente Marcos dug several years ago into the question of objects on hyperbolic orbits, noting that some of these may well have origins much closer to home. Let me quote their 2018 paper on this:

There are mechanisms capable of generating hyperbolic objects other than interstellar interlopers. They include close encounters with the known planets or the Sun, for objects already traversing the Solar system inside the trans-Neptunian belt; but also secular perturbations induced by the Galactic disc or impulsive interactions with passing stars, for more distant bodies (see e.g. Fouchard et al. 2011, 2017; Królikowska & Dybczyński 2017). These last two processes have their sources beyond the Solar system and may routinely affect members of the Oort cloud (Oort 1950), driving them into inbound hyperbolic paths that may cross the inner Solar system, making them detectable from the Earth (see e.g. Stern 1987).

Scholz’s Star Leaves Its Mark

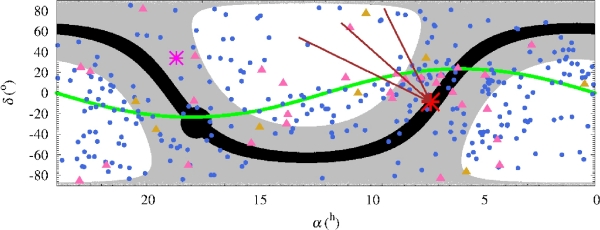

So much is going on in the outer reaches of the Solar System! In the 2018 paper, the two astronomers looked for patterns in how hyperbolic objects move, noting that anything approaching us from the far reaches of the Solar System seems to come from a well-defined location in the sky known as its radiant (also called its antapex). Given the mechanisms for producing objects on hyperbolic orbits, they identify distinctive coordinate and velocity signatures among these radiants.

Work like this relies on the past orbital evolution of hyperbolic objects using computer modeling and statistical analyses of the radiants, and I wouldn’t have dug quite so deeply into this arcane work except that it tells us something about objects that are coming under renewed scrutiny, the stars that occasionally pass close to the Solar System and may disrupt the Oort Cloud. Such passing stars are an intriguing subject in their own right and even factor into studies of galactic diffusion; i.e., how a civilization might begin to explore the galaxy by using close stellar passes as stepping stones.

But more about that in a moment, because I want to wrap up this 2018 paper before moving on to a later paper, likewise from the de la Fuente Marcos team, on close stellar passes and the intriguing Gliese 710. Its close pass is to happen in the distant future, but we have one well characterized pass that the 2018 paper addresses, that of Scholz’s Star, which is known to have made the most recent flyby of the Solar System when it moved through the Oort Cloud 70,000 years ago. In their work on minor objects with long orbital periods and extreme orbital eccentricity, the researchers find a “significant overdensity of high-speed radiants toward the constellation of Gemini” that may be the result of the passage of this star.

This is useful stuff, because as we untangle prior close passes, we learn more about the dynamics of objects in the outer Solar System, which in turn may help us uncover information about still undiscovered objects, including the hypothesized Planet 9, that may lurk in the outer regions and may have caused its own gravitational disruptions.

Before digging into the papers I write about today, I hadn’t realized just how many objects – presumably comets – are known to be on hyperbolic orbits. The astronomers work with the orbits of 339 of these, all with nominal heliocentric eccentricity > 1, using data from JPL’s Solar System Dynamics Group Small-Body Database and the Minor Planet Center Database. For a minor object moving with an inbound velocity of 1 kilometer per second, which is the Solar System escape velocity at about 2000 AU, the de la Fuente Marcos team runs calculations going back 100,000 years to examine the modeled object’s orbital evolution all the way out to 20,000 AU, which is in the outer Oort Cloud.
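Both numbers in that last sentence can be sanity-checked with a few lines of arithmetic. The sketch below is my own back-of-the-envelope check, not the de la Fuente Marcos integration: it uses the standard escape velocity formula and, as a deliberate simplification, treats the object as moving at a constant 1 km/s rather than following a real orbit.

```python
import math

GM_SUN = 1.32712440018e20      # solar gravitational parameter, m^3 / s^2
AU_M = 1.495978707e11          # one astronomical unit in meters
SECONDS_PER_YEAR = 3.15576e7   # Julian year


def escape_velocity_km_s(distance_au: float) -> float:
    """Solar escape velocity sqrt(2GM/r) at a given heliocentric distance."""
    return math.sqrt(2 * GM_SUN / (distance_au * AU_M)) / 1000.0


def distance_travelled_au(speed_km_s: float, years: float) -> float:
    """Distance covered at constant speed (a simplification: no gravity)."""
    return speed_km_s * 1000.0 * years * SECONDS_PER_YEAR / AU_M


v_esc = escape_velocity_km_s(2000)
reach = distance_travelled_au(1.0, 100_000)

print(f"Escape velocity at 2000 AU: {v_esc:.2f} km/s")
print(f"Distance covered in 100,000 yr at 1 km/s: {reach:,.0f} AU")
```

The escape velocity comes out just under 1 km/s, and the constant-speed distance lands a little over 20,000 AU, consistent with the figures quoted above.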

That overdensity of radiants toward Gemini that I mentioned above does seem to implicate the Scholz’s Star flyby. If so, then a close stellar pass that occurred 70,000 years ago may have left traces we can still see in the orbits of these minor Solar System bodies today. The uncertainties in the analysis of other stellar flybys relate to the fact that past encounters with other stars are not well determined, with Scholz’s Star being the prominent exception. Given the lack of evidence about other close passes, the de la Fuente Marcos team acknowledges the possibility of other perturbers.

Image: This is Figure 3 from the paper. Caption: Distribution of radiants of known hyperbolic minor bodies in the sky. The radiant of 1I/2017 U1 (‘Oumuamua) is represented by a pink star, those objects with radiant’s velocity > −1 km s⁻¹ are plotted as blue filled circles, the ones in the interval (−1.5, −1.0) km s⁻¹ are shown as pink triangles, and those < −1.5 km s⁻¹ appear as goldenrod triangles. The current position of the binary star WISE J072003.20-084651.2, also known as Scholz’s star, is represented by a red star, the convergent brown arrows represent its motion and uncertainty as computed by Mamajek et al. (2015). The ecliptic is plotted in green. The Galactic disc, which is arbitrarily defined as the region confined between Galactic latitude −5° and 5°, is outlined in black, the position of the Galactic Centre is represented by a filled black circle; the region enclosed between Galactic latitude −30° and 30° appears in grey. Data source: JPL’s SSDG SBDB. Credit: Carlos and Raúl de la Fuente Marcos.

The Coming of Gliese 710

Let’s now run the clock forward, looking at what we might expect to happen in our next close stellar passage. Gliese 710 is an interesting K7 dwarf in the constellation Serpens Cauda that occasionally pops up in our discussions because of its motion toward the Sun at about 14 kilometers per second. Right now it’s a little over 60 light years away, but give it time – in about 1.3 million years, the star should close to somewhere in the range of 10,000 AU, which is about 1/25th of the current distance between the Sun and Proxima Centauri. As we’re learning, wait long enough and the stars come to us.

Note that 10,000 AU; we’ll tighten it up further in a minute. But notice that it is actually inside the distance between the closest star, Proxima Centauri, and the Centauri A/B binary.
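To put 10,000 AU in context, here is a quick unit conversion. It is only a sketch: the Proxima Centauri distance of 4.2465 light years and the AU-per-light-year factor are values I am supplying, not figures from the papers discussed here.

```python
AU_PER_LIGHT_YEAR = 63_241.077   # conversion factor (supplied here)
PROXIMA_DISTANCE_LY = 4.2465     # Sun to Proxima Centauri (supplied here)
GLIESE_710_APPROACH_AU = 10_000  # rounded approach distance from the text

proxima_distance_au = PROXIMA_DISTANCE_LY * AU_PER_LIGHT_YEAR
fraction = GLIESE_710_APPROACH_AU / proxima_distance_au
approach_ly = GLIESE_710_APPROACH_AU / AU_PER_LIGHT_YEAR

print(f"Proxima Centauri: {proxima_distance_au:,.0f} AU")
print(f"Gliese 710 approach: {approach_ly:.3f} light years")
print(f"Ratio: about 1/{1 / fraction:.0f} of the distance to Proxima")
```

The ratio works out to a little smaller than the rounded 1/25th, and the approach distance to under a fifth of a light year.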

Image: Gliese 710 (center), destined to pass through the inner Oort Cloud in our distant future. Credit: SIMBAD / DSS

An encounter like this is interesting for a number of reasons. Interactions with the Oort Cloud should be significant, although well spread over time. Here I go back to a 1999 study by Joan García-Sánchez and colleagues that made the case that spread over human lifetimes, the effects of such a close passage would not be pronounced. Here’s a snippet from that paper:

For the future passage of Gl 710, the star with the closest approach in our sample, we predict that about 2.4 × 10⁶ new comets will be thrown into Earth-crossing orbits, arriving over a period of about 2 × 10⁶ yr. Many of these comets will return repeatedly to the planetary system, though about one-half will be ejected on the first passage. These comets represent an approximately 50% increase in the flux of long-period comets crossing Earth’s orbit.
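Spread over human lifetimes, those numbers are modest, as a little arithmetic shows. This is a rough sketch of my own that averages the arrivals uniformly over the whole period, which is a simplification; the baseline flux is inferred from the quoted 50% figure and is not a number stated in the paper.

```python
NEW_COMETS = 2.4e6          # comets sent into Earth-crossing orbits (quoted)
ARRIVAL_PERIOD_YR = 2.0e6   # period over which they arrive, in years (quoted)
FLUX_INCREASE = 0.5         # "approximately 50% increase" (quoted)

# Average extra comets per year, assuming uniform arrival (simplification).
added_per_year = NEW_COMETS / ARRIVAL_PERIOD_YR

# Baseline long-period comet flux implied by the 50% figure (inferred here).
implied_baseline_per_year = added_per_year / FLUX_INCREASE

print(f"Extra Earth-crossing comets: about {added_per_year:.1f} per year")
print(f"Implied baseline flux: about {implied_baseline_per_year:.1f} per year")
```

On this averaged view the flyby adds on the order of one extra Earth-crossing comet per year.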

As far as I know, the García-Sánchez paper was the first to identify Gliese 710’s flyby possibilities. The work was quickly confirmed in several independent studies before the first Gaia datasets were released, and the parameters of the encounter were then tightened using Gaia’s results, the most recent paper using Gaia’s third data release. Back to Carlos and Raúl de la Fuente Marcos, who tackle the subject in a new paper appearing in Research Notes of the American Astronomical Society.

The researchers have subjected the Gliese 710 flyby to N-body simulations using a suite of software tools that model perturbations from the star and factor in the four massive planets in our own system as well as the barycenter of the Pluto/Charon system. They assume a mass of 0.6 Solar masses for Gliese 710, consistent with previous estimates. In addition to the Gaia data, the authors include the latest ephemerides information for Solar System objects as provided by the Jet Propulsion Laboratory’s Horizons System.

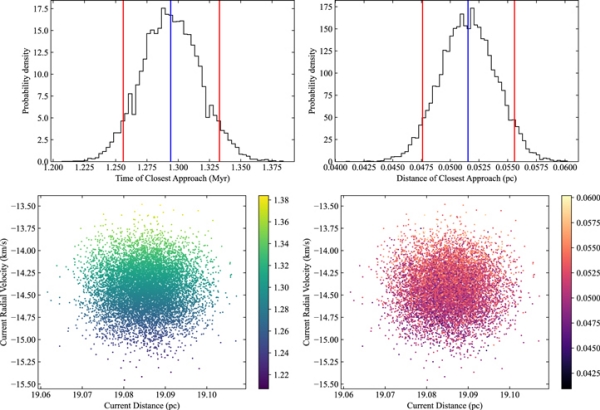

Image: This is Figure 1 from the paper. Caption: Future perihelion passage of Gliese 710 as estimated from Gaia DR3 input data and the N-body simulations discussed in the text. The distribution of times of perihelion passage is shown in the top-left panel and perihelion distances in the top-right one. The blue vertical lines mark the median values, the red ones show the 5th and 95th percentiles. The bottom panels show the times of perihelion passage (bottom-left) and the distance of closest approach (bottom-right) as a function of the observed values of the radial velocity of Gliese 710 and its distance (randomly generated using the mean values and standard deviations from Gaia DR3), both as color coded scatter plots of the distribution in the associated top panel. Histograms have been produced using the Matplotlib library (Hunter 2007) with sets of bins computed using Numpy (Harris et al. 2020) by applying the Freedman and Diaconis rule; instead of considering frequency-based histograms, we used counts to form a probability density so the area under the histogram will sum to one. The colormap scatter plot has also been produced using Matplotlib. Credit: Carlos and Raúl de la Fuente Marcos.

The de la Fuente Marcos paper now finds that the close approach of Gliese 710 will take it to within 10635 AU plus or minus 500 AU, putting it inside the inner Oort Cloud in about 1.3 million years – both the distance of the approach and the time of perihelion passage are tightened from earlier estimates. And as we’ve seen, Scholz’s Star passed through part of the Oort Cloud at perhaps 52,000 AU some 70,000 years ago. We thus get a glimpse of the Solar System influenced by passing stars on a time frame that begins to take shape and clearly defines a factor in the evolution of the Solar System.

What Gaia Can Tell Us

We can now back out further again to a 2018 paper from Coryn Bailer-Jones (Max Planck Institute for Astronomy, Heidelberg), which examines not just two stars with direct implications for our Solar System, but Gaia data (using the Gaia DR2 dataset) on 7.2 million stars to look for further evidence for close stellar encounters. Here we begin to see the broader picture. Bailer-Jones and team find 26 stars that have approached or will approach within 1 parsec, 7 that will close to 0.5 parsecs, and 3 that will pass within 0.25 parsecs of the Sun. Interestingly, the closest encounter is with our friend Gliese 710.

How often can these encounters be expected to occur? The authors estimate about 20 encounters per million years within a range of one parsec. Greg Matloff has used these data to infer roughly 2.5 encounters within 0.5 parsecs per million years. Perhaps 400,000 to 500,000 years should separate close stellar encounters as found in the Gaia DR2 data. We should keep in mind here what Bailer-Jones and team say about the current state of this research, especially given subsequent results from Gaia: “There are no doubt many more close – and probably closer – encounters to be discovered in future Gaia data releases.” But at least we’re getting a feel for the time spans involved.

So given the distribution of stars in our neighborhood of the galaxy, our Sun should have a close encounter every half million years or so. Such encounters between stars dramatically reduce the distance for any would-be travelers. In the case of Scholz’s Star, for instance, the distances involved cut the current distance to the nearest star by a factor of 5, while Gliese 710 is even more provocative, for as I mentioned, it will close to a distance not all that far off Proxima Centauri’s own distance from Centauri A/B.
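The interval and distance figures above follow from simple division. A minimal sketch, assuming encounters are spread evenly in time and taking Proxima Centauri’s distance as 4.2465 light years (a value I am supplying, along with the AU conversion factor):

```python
AU_PER_LIGHT_YEAR = 63_241.077   # conversion factor (supplied here)
PROXIMA_DISTANCE_LY = 4.2465     # Sun to Proxima Centauri (supplied here)
SCHOLZ_FLYBY_AU = 52_000         # approximate flyby distance from the text


def mean_interval_years(encounters_per_myr: float) -> float:
    """Average spacing between encounters, assuming an even spread in time."""
    return 1_000_000 / encounters_per_myr


within_1_pc = mean_interval_years(20)     # rate quoted for 1 parsec
within_half_pc = mean_interval_years(2.5)  # Matloff's inferred rate

proxima_distance_au = PROXIMA_DISTANCE_LY * AU_PER_LIGHT_YEAR
scholz_factor = proxima_distance_au / SCHOLZ_FLYBY_AU

print(f"Within 1 pc: one encounter every {within_1_pc:,.0f} years")
print(f"Within 0.5 pc: one encounter every {within_half_pc:,.0f} years")
print(f"Scholz's Star cut the nearest-star distance by a factor of {scholz_factor:.1f}")
```

A rate of 2.5 per million years corresponds to one encounter every 400,000 years, the low end of the range given above.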

A good time for interstellar migration? We’ve considered the possibilities in the past, but as new data accumulate, we have to keep asking how big a factor stellar passages like these may play in helping a technological civilization spread throughout the galaxy.

The earlier de la Fuente Marcos paper is “Where the Solar system meets the solar neighbourhood: patterns in the distribution of radiants of observed hyperbolic minor bodies,” Monthly Notices of the Royal Astronomical Society Letters Vol. 476, Issue 1 (May 2018) L1-L5 (abstract). The later de la Fuente Marcos paper is “An Update on the Future Flyby of Gliese 710 to the Solar System Using Gaia DR3: Flyby Parameters Reproduced, Uncertainties Reduced,” Research Notes of the AAS Vol. 6, No. 6 (June, 2022) 136 (full text). The García-Sánchez et al. paper is “Stellar Encounters with the Oort Cloud Based on Hipparcos Data,” Astronomical Journal 117 (February, 1999), 1042-1055 (full text). The Bailer-Jones paper is “New stellar encounters discovered in the second Gaia data release,” Astronomy & Astrophysics Vol. 616, A37 (13 August 2018) (abstract).

The Great Venusian Bug Hunt

Our recent focus on life detection on nearby worlds concludes with a follow-up to Alex Tolley’s June essay on Venus Life Finder. What would the sequence of missions look like that resulted in an unambiguous detection of life in the clouds of Venus? To answer that question, Alex takes the missions in reverse order, starting with a final, successful detection, and working back to show what the precursor mission to each step would have needed to accomplish to justify continuing the effort. If the privately funded VLF succeeds, it will be in the unusual position of making an astrobiological breakthrough before the large space organizations could achieve it, but there are a lot of steps along the way that we have to get right.

by Alex Tolley

In my previous essay, Venus Life Finder: Scooping Big Science, I introduced the near-term, privately financed plan to send a series of dedicated life-finding probes to Venus’ clouds. The first was a tiny atmosphere entry vehicle with a dedicated instrument, the Autofluorescing Nephelometer (AFN). The follow-up probes would culminate in a sample return to Earth, all this before the big NASA and ESA probes had even reached Venus at the end of this decade to investigate planetary conditions.

When the discussion turns to missions on or around planets or moons that may be home to life, the focus is on whether these probes could be loaded with life-finding instruments to front-load life detection science. The VLF missions are perhaps the first to make detecting life the primary science goal since the Viking Mars missions in the mid-1970s, with the possible exception of ESA’s Beagle 2 (the Beagle 2 lander’s objectives included landing site geology, mineralogy, geochemistry, atmosphere, meteorology, climate; and search for biosignatures [8]).

The approach I am going to use here is to start with what an Earth laboratory might do to investigate samples with suspected novel life. I will then reverse the thinking for each mission stage until the decision to launch a Venusian atmosphere entry AFN becomes the obvious, logical choice.

So let us start with what science and technology would likely be employed on Earth, assuming that we have samples from the VLF missions previously undertaken that indicate that the conditions for life are not prohibitive, and earlier analyses that suggest that the collected particles are not just inanimate but appear to be, or contain, life. As we do not know if this life is truly from a de novo abiogenesis or common to terrestrial life and thus perhaps from Earth, there are a number of basic tests that would be employed to determine if the VLF samples contain life.

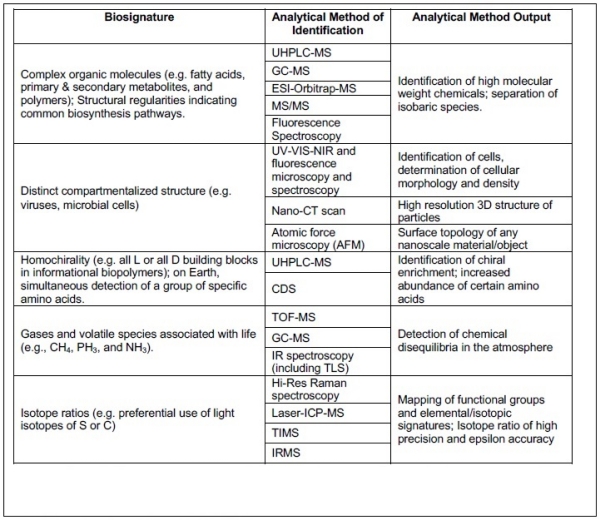

The key analyses would include:

1) Are there complex organic molecules with structural regularities rather than randomness? For example, terrestrial cell membranes are composed of lipid chains with a certain length of the carbon chain (phospho- and glycolipids peak at 16- and 18-length chains). Are there high abundances of certain molecules that might form the basis of an information storage molecule, e.g. the 4 bases used in DNA – adenine, thymine, guanine, cytosine, or an abundant subset of the many possible amino acids?

2) Are the cell-like particles compartmentalized? Are there cell membranes? Do the cells contain other compartments that manage key biological functions [5]?

3) Do the molecules show homochirality, as we see on Earth? If not, and the molecules are racemic as we see with amino acids in meteorites, then this indicates a non-biological formation. Terrestrial proteins are based on levorotatory amino acids (L-amino acids), whilst sugars are dextrorotatory (D-sugars).

4) Do the samples generate or consume gases that are associated with life? This can be deceptive as we learned with the ambiguous Viking experiment to detect gas emissions from cultured Martian regolith. Lab experiments can resolve such issues.

5) Do the samples have different isotope ratios than the planetary material? On Earth, biology tends to alter the ratios of carbon and oxygen isotopes that are used as proxies in analyses of samples for paleo life. For example, photosynthesis reduces the C13/C12 ratio and therefore can be used to infer whether carbon compounds are biogenic.
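The isotope test in item 5 is usually expressed in delta notation, the per mil deviation of a sample’s C13/C12 ratio from a reference standard. The sketch below illustrates that standard geochemical convention and is not a description of the VLF team’s analysis; the reference ratio is a commonly quoted value for the VPDB standard, and the two sample ratios are hypothetical numbers chosen for illustration.

```python
# Delta notation for carbon isotopes: per mil deviation from a standard.
# VPDB_RATIO is a commonly quoted reference value; the sample ratios below
# are hypothetical and chosen only to illustrate the calculation.

VPDB_RATIO = 0.0112372  # C13/C12 of the reference standard (assumed value)


def delta_13c_permil(sample_ratio: float,
                     standard_ratio: float = VPDB_RATIO) -> float:
    """Return delta-13C in per mil: (R_sample / R_standard - 1) * 1000."""
    return (sample_ratio / standard_ratio - 1.0) * 1000.0


hypothetical_samples = {
    "matches the standard": 0.0112372,
    "depleted in C13": 0.0109563,
}

for label, ratio in hypothetical_samples.items():
    print(f"{label}: {delta_13c_permil(ratio):+.1f} per mil")
```

A sample whose carbon is depleted in C13 relative to the surrounding planetary material gives a negative delta value, which is the kind of offset that photosynthesis produces on Earth.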

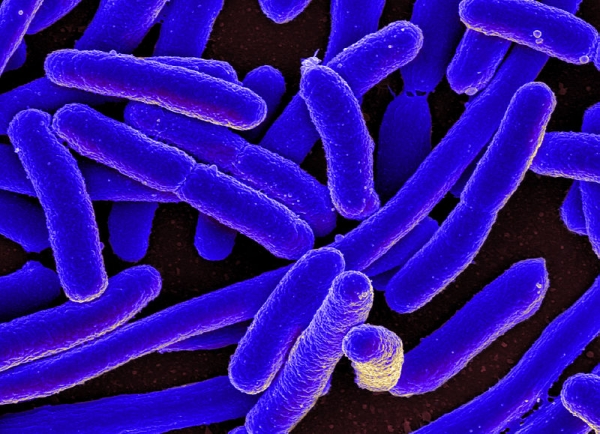

Note that the goals do not initially include using optical microscopes, or DNA sequencers. Terrestrial life is increasingly surveyed by analyzing samples for DNA sequences. DNA reading instruments will only work if the same nucleobases are used by Venusian life. If they are, then there is the issue of whether they come from a common origin to terrestrial life. For bacteria-sized particles, electron microscopes are more appropriate.

The types of instruments used include mass spectrometers, liquid and gas chromatographs, optical spectrometers of various wavelengths, nanotomographs (nano-sized CT scans), atomic force microscopes, etc. These instruments tend to be rather large and heavy, although specially designed ones are being flown on the big missions, such as the Mars Perseverance rover. Table 1 below details these biosignature analyses to be done on the returned samples.

Table 1 (click to enlarge). Laboratory biosignature analyses for the returned samples, the instruments, and the specific outputs.

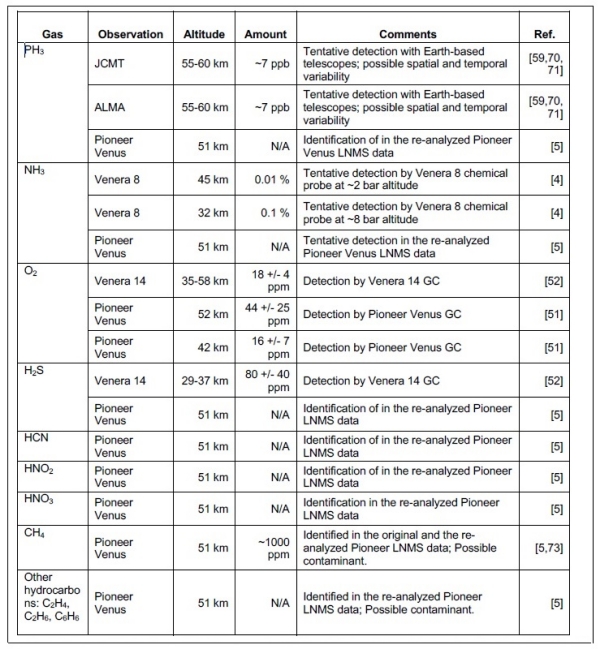

For the goal of detecting biosignature gases and their changes, Table 2 shows the prior information collected from probes and telescopes that might indicate extant life on Venus.

Table 2 (click to enlarge). Prior data of potential biosignature gases in the Venusian atmosphere.

Given that these are the types of experiments on samples returned to Earth, how do we collect those samples for return? Unlike Mars, life on Venus is expected to be in the clouds, in a temperate habitable zone (HZ) layer. The problem is not dissimilar to collecting samples in the deep ocean. A container must be exposed to the environment and then closed and sealed. Apart from pressure, the ocean is a benign environment.

Imagine the difficulties of collecting a sample near the bottom of a deep, highly acidic lake. How would that be done given that it is not possible to take a boat out and lower an acid-resistant sample bottle? The VLF team has not decided how best to do this, but the sampling is designed to take place from a balloon floating in the atmosphere for the sample return mission.

Possible sampling methods include:

- Use of aerogels

- Filters

- Electrostatic sticky tape

- Funnels, jars, and bottles

- Fog Harp droplet collector

- Gas sampling bags

In order to preserve the sample from contamination and to ensure planetary protection from the returned samples, containment must be carefully designed to cover contingencies that might expose the sample to Earth’s biosphere.

Note that this Venus Return mission is no longer a small project. The total payload to reach LEO, which includes the transit vehicle, balloon and instrument gondola, Venus ascent vehicle, and Earth transit vehicle, is 38,000 kg. That is far more massive than the Mars Perseverance mission and double the launch capability of the Atlas V and Ariane 5 launchers, so a Falcon Heavy is required. The number of components indicates a very complex and difficult mission, probably requiring the capabilities of a national space organization. This is definitely no longer a small, privately funded mission.

But let’s backtrack again. The samples were deemed worth the cost of returning to Earth because prior missions have supported the case that life may be present in the atmosphere. What experiments would best be done to make that assessment, given a prior mission that indicated this ambitious, complex, and expensive effort was worth attempting?

The science goals for this intermediate Habitability Mission are:

1) Measure the physical conditions in the cloud layer to ensure they are not outside of a possible extremophile range. The most important metric is perhaps temperature, as no terrestrial thermophile can survive above 122 °C, nor metabolize in solids. A lower bound might be that water freezes at 0 °C, although salt water will lower that freezing point, so halophiles could live in salty water at below 0 °C. Is there water vapor in the clouds that indicates that the particles are not pure sulfuric acid? Allied with that, are the particles less acidic than pure H2SO4? Are there non-volatile elements, such as phosphorus and metals, that are used by terrestrial biology to harvest and transfer energy for metabolism?

2) Can the organic materials previously detected be identified to indicate biologic rather than abiologic chemistry? Are there any hints at compound regularity that will inform the sample return mission? Can we detect gas changes that indicate metabolism is happening, and are the gases in disequilibrium? Of particular interest may be the detection of phosphine (PH3) emissions, an unambiguous terrestrial biosignature, detected in the Venusian clouds by terrestrial ground-based telescopes in 2020.

3) Are the non-spherical particles detected in a prior mission solid or liquid, and are they homogeneous in composition (non-biologic) or not (possible life)?
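The temperature bounds in goal 1 amount to a simple range check. The sketch below is illustrative only: the 122 °C ceiling and the 0 °C freezing point come from the text above, while the function name and the `brine_depression_c` parameter (how far dissolved salts lower the freezing point) are hypothetical and not taken from the VLF report.

```python
# Bounds quoted in goal 1: no known terrestrial thermophile survives above
# 122 °C, and pure water freezes at 0 °C.
UPPER_LIMIT_C = 122.0
FREEZING_POINT_C = 0.0


def within_extremophile_range(temp_c: float, brine_depression_c: float = 0.0) -> bool:
    """Return True if temp_c falls inside the assumed temperature window.

    brine_depression_c is a hypothetical allowance for salty water, which
    freezes below 0 °C and so extends the lower bound for halophiles.
    """
    lower_limit_c = FREEZING_POINT_C - brine_depression_c
    return lower_limit_c <= temp_c <= UPPER_LIMIT_C


if __name__ == "__main__":
    for temp_c in (-5.0, 60.0, 130.0):
        print(temp_c, within_extremophile_range(temp_c))
```

Temperature is only the first filter. The remaining questions in goal 1 (water vapor, acidity, non-volatile elements) would each need their own measurement and threshold.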

To be able to do these experiments, the mission will use balloons that can float in the Venusian clouds. They may need to be able to adjust their altitude to find the best layers (but this adds complexity, risk, and cost) and travel spatially, especially if there is a desire to sample the patchy cloud layers that are strongly UV absorbing and have been likened to algal blooms on Earth.

A balloon mission is quite complex and carries a number of instruments, so that cost and complexity are now substantial. A simpler, low-cost, prior mission is needed that will capture key data. What is the simplest, lowest mass mission possible that will inform the team that this balloon mission should definitely go ahead if the results are positive? What science goals and instrument[s] could best provide the data to inform this decision?

This earlier mission is designed around two sources of information that it can leverage. First, there are the many Venus entry probe missions from the early 1960s to the mid 1980s. The most intriguing information includes the observation that there were particles in the clouds that were not spherical as would be expected by physics, and this non-spherical nature might indicate cellular life, such as bacilli (rod-shaped bacteria).

Shape, however, is insufficient, as life must be able to interact with the environment to feed, grow, and reproduce. On Earth, these functions require a range of organic molecules: proteins, DNA, RNA, lipids and sugars. This implies that organic compounds must be present in these non-spherical particles; otherwise the shape may be due to physical processes, including agglomeration and/or merging of spherical particles.

The VLF team is also testing some of the assumptions and technology in the lab, confirming for example that autofluorescing of carbon materials works in concentrated sulfuric acid. Their lab experiments show that linear carbon molecules like formaldehyde and methanol in concentrated H2SO4 result in both UV absorption and fluorescence over time, implying that the structures are altered, as is found in industrial processes. From the report:

If there is organic carbon in the Venus atmosphere, it will react with concentrated sulfuric acid in the cloud droplets, resulting in colored, strongly UV absorbing, and fluorescent products that can be detected (…). We have exposed several samples containing various organic molecules (e.g., formaldehyde) to 120 °C, 90% sulfuric acid for different lengths of time. As a result of the exposure to concentrated sulfuric acid all of the tested organic compounds produced visible coloration, increased absorbance (mainly in the UV range of the spectrum), and resulted in fluorescence (…)

It should also be noted that Misra has shown that remote autofluorescing can detect carbon compounds and distinguish between organic material (leaves, microbes on rocks, and fossils) and fluorescing minerals [6,7].

Table 3 (click to enlarge). The science goals for the balloon mission, showing that the AFN is the best single instrument to both detect and confirm the non-spherical particles found in the prior Venus probes, and the presence of organic compounds in the particles. It can also determine whether the particles are liquid or solid, and estimate the pH of the particles.

Of the possible choices of instruments, the Autofluorescing Nephelometer (AFN) best meets the requirements of being able to measure both particle shape and the presence of organic compounds. This can be seen in Table 3 above for the science goals of the balloon mission. The instrument is described in the prior post and in more detail in the VLF Report [1]. Ideally, both conditions should be met with positive results, although even both together would be suggestive rather than unambiguous.

Organic compounds can form in concentrated H2SO4, and cocci are essentially spherical bacteria. Nevertheless, a positive result for one or both justifies the funding of the follow up mission. Conversely, a negative result for both, especially the absence of detectable organic compounds would put a nail in the coffin of the idea that there is life in the Venusian clouds (a classic falsification experiment) – at least for that 4-5 minute data acquisition mission as the probe falls through the HZ layers of clouds where these non-spherical particles have previously been detected.
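The go/no-go reasoning above can be written out as a small decision function. This is only a sketch of the logic as described in this post, not the VLF team's actual decision procedure; the function name, argument names, and result strings are all hypothetical.

```python
def follow_up_decision(non_spherical_particles: bool, organics_detected: bool) -> str:
    """Map the two AFN observations onto the outcomes discussed in the text."""
    if non_spherical_particles and organics_detected:
        # Both signals present: suggestive, but still not unambiguous.
        return "fund follow-up: both signals present"
    if non_spherical_particles or organics_detected:
        # One positive result is still treated as enough to justify a follow-up.
        return "fund follow-up: one signal present"
    # Both negative: falsifies cloud life, but only for this descent path.
    return "no support for cloud life along this descent path"


if __name__ == "__main__":
    for shape in (True, False):
        for organics in (True, False):
            print(shape, organics, "->", follow_up_decision(shape, organics))
```

The third branch is deliberately worded in terms of the descent path, since a null result from a 4-5 minute fall through the clouds says little about other places and times.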

It could certainly be argued that life is patchy, and just as failing to catch a fish does not mean there are no fish to be caught, it is possible that the probe fell through a [near] lifeless patch and that other attempts should be made, for example the balloon mission that will take measurements over a wider range of space and time.

The first VLF probe mission raises the question of why we should even consider Venus as an abode for life. The prior missions have shown that the surface is a very hot, dry, and acidic environment which is inimical to life as we know it. The only suggestions for the presence of life are the aforementioned patchy UV absorbing regions implying organic compounds in the clouds, and the presence of the biosignature gas PH3.

For life to be on Venus, either the conditions must once have been clement to allow abiogenesis, or life must have been seeded by panspermia to allow it ultimately to evolve to survive in the cloud refugia when the oceans were lost during the runaway greenhouse era. Is there any evidence that Venus was once our sister world with conditions like Earth, but warmer, before the runaway greenhouse conditions transformed the planet?

The scholarly literature is divided, from the optimistic view of Grinspoon [2] and others that Venus had an early ocean that lasted long enough (e.g. 1 Gy) to support life [3], to the pessimistic view of Turbet [4] that modeling suggests Venus never had an ocean (and that Earth was only able to condense one during the faint young sun period).

The science goals of NASA’s and ESA’s DAVINCI+, VERITAS, and EnVision probes are designed to try to answer these questions.

The VLF team, however, has built its plan on the optimistic view that early Venus was clement and that life could have taken hold. On that view, a series of dedicated, life-finding missions will best answer the question of whether there is life on Venus. The alternative would be to establish first that a clement paleoclimate on Venus was indeed present and lasted long enough to allow life to emerge, or long enough for life to have been transferred from Earth by the time we are sure life on Earth was present.

If the first VLF mission returns positive results, then it seems likely that the following missions, however designed and by whomever executed, will push forward the science goals toward more life detection. Negative results could well derail subsequent life detection goals. The time frame will overlap with the Mars sample return mission that will collect the Perseverance rover samples for analyses back on Earth. It may well also overlap with the early results of biosignature detection on exoplanets. Whatever the outcome, the end of this decade will be an exciting time and will pose fundamental questions about our place in the galaxy.

References

1. Seager S, et al. Venus Life Finder Study (2021). Web accessed 02/18/2022. https://venuscloudlife.com/venus-life-finder-mission-study/

2. Grinspoon, David & Bullock, Mark. (2007). Searching for Evidence of Past Oceans on Venus, American Astronomical Society, DPS meeting #39, id.61.09; Bulletin of the American Astronomical Society, Vol. 39, p.540

3. Way, M. J., et al. (2016), Was Venus the first habitable world of our solar system?, Geophys. Res. Lett., 43, 8376-8383, doi:10.1002/2016GL069790.

4. Turbet, M., Bolmont, E., Chaverot, G. et al. Day-night cloud asymmetry prevents early oceans on Venus but not on Earth. Nature 598, 276-280 (2021). https://doi.org/10.1038/s41586-021-03873-w

5. Cornejo E, Abreu N, Komeili A. Compartmentalization and organelle formation in bacteria. Curr Opin Cell Biol. 2014 Feb;26:132-8. doi: 10.1016/j.ceb.2013.12.007. Epub 2014 Jan 16. PMID: 24440431; PMCID: PMC4318566.

6. Misra, A.K., Rowley, S.J., Zhou, J. et al. Biofinder detects biological remains in Green River fish fossils from Eocene epoch at video speed. Sci Rep 12, 10164 (2022). https://doi.org/10.1038/s41598-022-14410-8

7. Misra, A. et al. (2021). Compact Color Biofinder (CoCoBi): Fast, Standoff, Sensitive Detection of Biomolecules and Polyaromatic Hydrocarbons for the Detection of Life. Applied Spectroscopy. 75. 000370282110339. DOI:10.1177/00037028211033911.

8. Beagle 2. https://en.wikipedia.org/wiki/Beagle_2 Accessed July 2, 2022

Drilling into Icy Moon Oceans

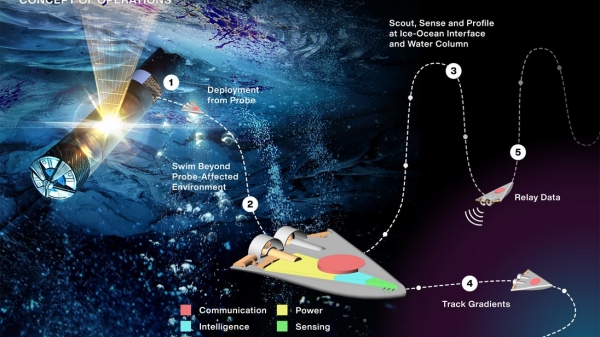

While we talk often about subsurface oceans in places like Europa, the mechanisms for getting through layers of ice remain problematic. We’ll need a lot of data through missions like Europa Clipper and JUICE just to make the call on how thick Europa’s ice is before determining which ice penetration technology is feasible. But it’s exciting to see how much preliminary work is going into the issue, because the day will come when one or another icy moon yields the secrets of its ocean to a surface lander.

By way of comparison, the thickest ice sheet on Earth is said to reach close to 5,000 meters. This is at the Astrolabe Subglacial Basin, which lies at the southern end of Antarctica’s Adélie Coast. Here we have glacial ice covering continental crust, as opposed to ice atop an ocean (although there appears to be an actively circulating groundwater system, which has been recently mapped in West Antarctica). The deepest bore into this ice has been 2,152 meters, a 63-hour continuous drilling session that will one day be dwarfed by whatever ice-penetrating technologies we take to Europa.

Consider the challenge. We may, on Europa, be dealing with an ice sheet up to 25 kilometers thick – figuring out just how thick it actually is may take decades if the above missions get ambiguous results. In any case, we will need hardware that can operate at cryogenic temperatures in a hard vacuum, with radiation shielding adequate to the Jovian surface environment. The lander, after all, remains on the surface to sustain communications with the Earth.

Moreover, we need a system that is reliable, meaning one that can work its way around problems it finds in the ice as it moves downward. Here again we need ice profiles that can be developed by future missions. We do know the ice we encounter will contain salts, sulfuric acid and other materials whose composition is currently unknown. And we will surely have to cope with liquid water ‘pockets’ on the way down, as well as the fact that the ice may be brittle near the surface and warmer at greater depths.

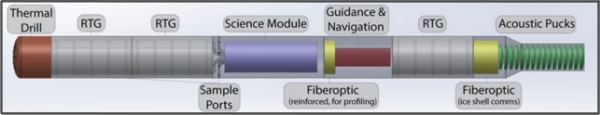

SESAME Program Targets Europan Ice

NASA’s SESAME program at Glenn Research Center, which coordinates work from a number of researchers, is doing vital early work on all these problems. On its website, the agency has listed a number of assumptions and constraints for a lander/ice penetrator mission, including the ability to reach up to 15 kilometers within three years (assuming we learn that the ice isn’t thicker than this). For preliminary study, a total system mass of less than 200 kg is assumed, and the overall penetration system must be able to survive three years of operations in this hostile environment.
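To get a feel for what the 15-kilometer, three-year target implies, it helps to turn it into an average descent rate and set it beside the Antarctic bore mentioned earlier (2,152 meters in 63 hours). The short calculation below is a back-of-the-envelope sketch: it assumes continuous, uniform progress over the full three years, which no real probe would achieve, and the function and variable names are purely illustrative.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365.25


def average_rate_m_per_hour(depth_m: float, duration_hours: float) -> float:
    """Average descent rate, assuming uninterrupted and uniform progress."""
    return depth_m / duration_hours


# SESAME study assumption: up to 15 km of ice within three years.
sesame_hours = 3 * DAYS_PER_YEAR * HOURS_PER_DAY
sesame_rate = average_rate_m_per_hour(15_000, sesame_hours)

# Antarctic comparison from the text: 2,152 m in a 63-hour session.
antarctic_rate = average_rate_m_per_hour(2_152, 63)

if __name__ == "__main__":
    print(f"SESAME target:  {sesame_rate:.2f} m/h ({sesame_rate * HOURS_PER_DAY:.1f} m/day)")
    print(f"Antarctic bore: {antarctic_rate:.1f} m/h")
```

Under these assumptions the target works out to a little under 0.6 meters per hour, or roughly 14 meters per day, far slower than the Antarctic session. The comparison is one of scale only, since the Europa system has to sustain its pace for years on a 200 kg mass budget, in vacuum and at cryogenic temperatures.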

So far this program is Europa-specific, the acronym translating to Scientific Exploration Subsurface Access Mechanism for Europa. The idea is to identify which penetration systems can reach liquid water. It’s early days for thinking about penetrating Europa and other icy moon oceans, but you have to begin somewhere, and SESAME is about figuring out which approach is most likely to work and developing prototype hardware.

SESAME is dealing with proposals from a number of sources. Johns Hopkins, for example, will be testing communication tether designs and analyzing problems with RF communications. Stone Aerospace is studying a closed-cycle hot water drilling technology running on a fission reactor. Georgia Tech is contributing data from projects in Antarctica and studying a subsurface access drill design, hoping to get it up to TRL 4. Honeybee Robotics is focused on a “hybrid, thermomechanical drill system combining thermal (melting) and mechanical (cutting) penetration approaches.”