Centauri Dreams

Imagining and Planning Interstellar Exploration

Planet Population around Orange Dwarfs

Last Friday’s post on K-dwarfs as home to what researchers have taken to calling ‘superhabitable’ worlds has caught the eye of Dave Moore, a long-time Centauri Dreams correspondent and author. Readers will recall his deep dives into habitability concepts in such essays as The “Habitability” of Worlds and Super Earths/Hycean Worlds, not to mention his work on SETI (see If Loud Aliens Explain Human Earliness, Quiet Aliens Are Also Rare). Dave sent this in as a comment, but I asked him to post it at the top because it so directly addresses the topic of habitability prospects around K-dwarfs, based on a quick survey of known planetary systems. It’s a back-of-the-envelope overview, but one that implies habitable planets around stars like these may be more difficult to find than we think.

by Dave Moore

To see whether K dwarfs make good targets for habitable planets, I decided to look into the prevalence and type of planets around them and got carried away looking at the specs for 500 systems with dwarfs between 0.6 and 0.88 solar masses.

Some points:

i) This was a quick and dirty survey.

ii) Our sampling of planets is horribly skewed towards the massive and close, but that being said, we can tell if certain types of planets are not in a system. For instance, Jupiter- and Neptune-sized planets at approximately 1 au do show up, so if a system doesn’t show them after a thorough examination, it won’t have them.

iii) I had trouble finding a planet list that was configurable to my needs. I finally settled on the Exoplanets Data Explorer configured in reverse order of stellar mass. This list is not as comprehensive as the Extrasolar Planets Encyclopaedia.

iv) I concocted a rough table of the inner and outer HZ for the various classes of K dwarfs. Their HZs vary considerably. A K8 star’s HZ is between 0.26 au and 0.38 au while a K0’s HZ is between 0.72 au and 1.04 au. This means that you can have two planets orbiting at the same distance around a star and one I will classify as outside the HZ and the other inside the HZ.

v) Planets below 9 Earth masses I classified as Super-Earth/Sub-Neptune. Planets between 9 and 30 Earth masses are classified as Neptunes. Planets above 30 Earth masses are classified as Jupiters.
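To make the bookkeeping in items iv) and v) concrete, here is a minimal Python sketch of the two rules. It assumes only the K0 and K8 habitable-zone limits quoted above (the rest of Dave's rough table isn't given here), and the function and table names are hypothetical, not part of his survey. It shows how a single orbital distance can sit in the HZ of a K8 star yet well inside the HZ of a K0.

```python
# Hypothetical helpers (not from the survey itself) encoding its rough rules.
# Only the K0 and K8 habitable-zone limits quoted in the text are included.
HZ_LIMITS_AU = {
    "K0": (0.72, 1.04),  # (inner edge, outer edge) in au
    "K8": (0.26, 0.38),
}


def mass_class(mass_earth: float) -> str:
    """Bin a planet by mass in Earth masses, using the 9 and 30 EM cutoffs."""
    if mass_earth < 9:
        return "Super-Earth/Sub-Neptune"
    if mass_earth <= 30:
        return "Neptune"
    return "Jupiter"


def hz_position(a_au: float, spectral_type: str) -> str:
    """Place an orbit's semi-major axis (au) relative to the host's HZ."""
    inner, outer = HZ_LIMITS_AU[spectral_type]
    if a_au < inner:
        return "interior to HZ"
    if a_au > outer:
        return "beyond HZ"
    return "in HZ"


if __name__ == "__main__":
    # The same 0.3 au orbit gets different verdicts around the two stars.
    for star in ("K8", "K0"):
        print(star, hz_position(0.3, star))  # K8: in HZ, K0: interior to HZ
    print(mass_class(5), mass_class(20), mass_class(300))
```

Extending the sketch to other subtypes would just mean adding rows to the table, once real HZ limits are in hand.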

Image: An array of planets that could support life are shown in this artist’s impression. How many such worlds orbit K-dwarf stars, and are any of them likely to be ‘superhabitable’? Credit: NASA, ESA and G. Bacon (STScI).

What did I find:

By far the most common planets are hot Super-Earths/Sub-Neptunes (SE/SNs). These are planets between 3 EM (Earth masses) and 6 EM, and the consistency of their size is remarkable. They are mostly in close (sub-10-day) orbits. There also appears to be a subtype of sub-2 EM planets in very tight orbits (some with periods quoted in hours), and given that some of these were in multi-planet systems of SE/SNs, I would say they are SE/SNs that have been evaporated down to their cores.

I also found 7 SE/SNs in the HZ and 2 outside it.

I found 52 hot Jupiters and what I classified as 43 elliptical orbit Jupiters. These were Jupiter-sized planets in elliptical orbits under 3 au.

There were also 10 Jupiter-class planets in circular orbits under 3 au, and 3 beyond that limit in what could be thought of as a rough analog of our system.

There were also 46 hot Neptunes and 14 in circular orbits further out, only one of them outside the habitable zone.

Trends:

At the lower mass end of the scale, K dwarf systems start off looking very much like M dwarf systems, except that everything, even in multi-planet systems, lies interior to the habitable zone.

As you work your way up the mass scale, there is a slight increase in the average mass of the SE/SNs with 7-8 EM planets becoming more prevalent. More and more Jupiters appear, and Neptune-sized planets appear and become much more frequent. Also, you get the occasional monster system of tightly packed Jupiters and Neptunes like 55 Cancri.

An interesting development begins at about the middle of the mass range. You start getting SE/SNs in nice circular, longer-period orbits that are still interior to the HZ (28 in 20- to 100-day orbits).

Conclusions:

If we look at the TRAPPIST-1 system around an M-dwarf, its high percentage of volatiles (20% water/ice) implies that there is a lot of migration in from the outer system. If a planet has migrated in from outside the snow line, then there’s a good chance that even if it’s in the habitable zone, it will be a deep ocean planet.

Signs of migration are not hard to find. Turning back to the K-dwarfs, if we look at the Jupiters, only three show signs of little migration (analogs of our system). Ten migrated in smoothly but sit at a distance likely to have disrupted a habitable planet. Forty-three are in elliptical orbits, which are considered signs of planet-planet scattering.

Hot Jupiters can be accounted for by either extreme scattering or migration. As to inward migration, Martin Fogg did a series of papers showing that as Jupiter-mass planets march inwards they scatter protoplanets, but these can re-form behind the giant, and so Earth-like planets may occur outside the hot Jupiter’s orbit.

Neptunes in longer period circular orbits and the longer period SE/SNs all point to migration. These last groups are intriguing as they point to a stable system with the possibility of smaller planets further out. I would include the 7 planets in the habitable zone in this group. But if these planets all migrated inwards they may well be ocean planets.

K dwarfs have an interesting variety of systems, so they’d be useful to study, but I don’t see them as the major source of Earth analogs—at least not until we learn more.

Superhabitability around K-class Stars

We think of Earth as our standard for habitability, and thus the goal of finding an ‘Earth 2.0’ is to identify living worlds like ours orbiting similar Sun-like stars. But maybe Earth isn’t the best standard. Are there ways planets can be more habitable than our own, and if so where would we find them? That’s the tantalizing question posed in a paper by Iva Vilović (Technische Universität Berlin), René Heller (Max-Planck-Institut für Sonnensystemforschung) and colleagues in Germany and India. Heller has previously worked this issue in a significant paper with John Armstrong (citation below); see as well The Best of All Possible Worlds, which ran here in 2020.

The term for the kind of world we are looking for is ‘superhabitable,’ and the aim of this study is to extend the discussion of K-class stars as hosts by modeling the atmospheres we may find on planets there. While much attention has focused on M-class red dwarfs, their high degree of flare activity and long pre-main sequence lifetimes make K-class stars the more attractive choice, although K-dwarf planets are less amenable to near-term evaluation, as the paper shows in its sections on observability. It’s intriguing, for example, to realize that K-class stars are expected to live significantly longer than the Sun, as much as 100 billion years, and because they are cooler and less luminous than G-class stars, their habitable zone planets transit more frequently.

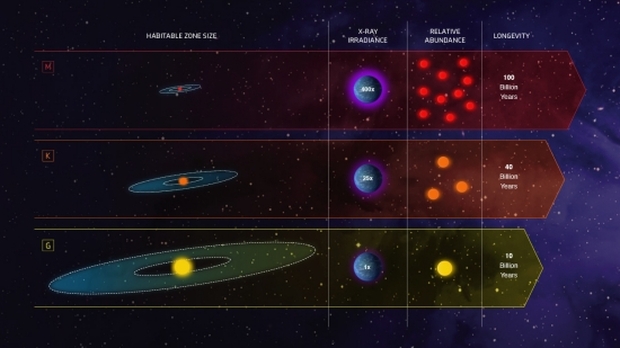

Image: This infographic compares the characteristics of three classes of stars in our galaxy: Sunlike stars are classified as G-stars; stars less massive and cooler than our Sun are K-dwarfs; and even fainter and cooler stars are the reddish M-dwarfs. The graphic compares the stars in terms of several important variables. The habitable zones, potentially capable of hosting life-bearing planets, are wider for hotter stars. The longevity for red dwarf M-stars can exceed 100 billion years. K-dwarf ages can range from 50 to 100 billion years. And, our Sun only lasts for 10 billion years. The relative amount of harmful radiation (to life as we know it) that stars emit can be 80 to 500 times more intense for M-dwarfs relative to our Sun, but only 5 to 25 times more intense for the orange K-dwarfs. Red dwarfs make up the bulk of the Milky Way’s population, about 73%. Sunlike stars are merely 8% of the population, and K-dwarfs are at 13%. When these four variables are balanced, the most suitable stars for potentially hosting advanced life forms are K-dwarfs. Image credit: NASA, ESA, and Z. Levy (STScI).

Let’s dig into this a little further. The contrast in brightness between star and planet is enhanced around K-dwarfs, and spectroscopic studies are aided by lower levels of stellar activity, which also enhances the habitability of planets. While an M-dwarf may be in a pre-main sequence phase for up to a billion years, K stars take about a tenth of this. They emit lower levels of X-rays than G-type stars and are also more abundant, making up about 13 percent of the galactic population as opposed to 8 percent for G-stars. With luminosity as low as one-tenth that of a star like the Sun, they offer better conditions for direct imaging, and their planets are far enough from the host to avoid tidal lock.

So we have an interesting area for investigation, as earlier studies have shown that photosynthesis works well under simulated K-dwarf radiation conditions. The authors go so far as to call these ‘Goldilocks stars’ for life-bearing planets, and there are about 1,000 such stars within 100 light years of the Sun. Thus modeling superhabitable atmospheres to support future observations stands as a valuable contribution.

The authors model these atmospheres by drawing on Earth’s own history as well as astrophysical parameters, finding that a superhabitable planet would be somewhat more massive than Earth so as to retain a thicker atmosphere to support a more extensive biosphere. Plate tectonics and a strong magnetic field are assumed, as are elevated oxygen levels that would “enable more extensive metabolic networks and support larger organisms.” Surface temperatures are some 5 degrees C warmer than present day Earth and increased atmospheric humidity supports the ecosystem.

The paper continues:

In terms of the atmospheric composition, key organisms and biological sources affecting Earth’s biosphere and their atmospheric signatures are considered. A superhabitable atmosphere would have increased levels of methane (CH4) and nitrous oxide (N2O) due to heightened production by methanogenic microbes, as well as denitrifying bacteria and fungi, respectively (Averill and Tiedje 1982, Wen et al. 2017). Furthermore, it would have decreased levels of molecular hydrogen (H2) due to higher enzyme consumption (Lane et al. 2010, Greening and Boyd 2020). Lastly…molecular oxygen (O2) levels could increase from present-day 21% by volume on Earth to 25% to reflect a thriving photosynthetic biosphere (Schirrmeister et al. 2015).

Given these factors, the authors run simulations using three different modeling tools (Atmos, POSEIDON and PandExo, the latter two to examine the observability of transiting planets). Using Atmos, they simulate three pairs of superhabitable planets at differing locations in K-dwarf habitable zones, varying stellar radii, masses and ages. They focused on the atmospheric signatures of organisms and biological sources that had influenced Earth’s biosphere, including O2, H2, CH4, N2O and CO2, at a variety of surface temperatures.

The results offer what the authors consider the first simulated data on superhabitable atmospheres and assessments of the observability of such life. What stands out here is the optimum positioning of a superhabitable world around its star. Note this:

We find that planets positioned at the midpoint between the inner edge and center of the habitable zone, where they receive 80% of Earth’s solar flux, are more conducive to life. This contrasts with previous suggestions that planets at the center of the habitable zone—where our study shows they receive about 60% of Earth’s solar flux—are the most favorable for life (Heller and Armstrong 2014). Planets at the midpoint between the center and the inner edge need less CO2 for temperate climates and are more observable due to their warmer atmospheric temperatures and larger atmospheric scale heights. We conclude that a superhabitable planet orbiting a 4300K star with 80% of the solar flux offers the best balance of observability and habitability.

Image: An artist’s concept of a planet orbiting in the habitable zone of a K-type star. Image credit: NASA Ames/JPL-Caltech/Tim Pyle.

Observability presents a major challenge. With the James Webb Space Telescope, detecting a biosignature at 30 parsecs requires 150 transits (43 years of observation time), compared with 1700 transits (1699 years) for an Earth-like planet around a G-class star. That would be a mark in favor of K-stars, but it also underlines the fact that studies of that length are impractical even with the anticipated Habitable Worlds Observatory. The JWST is working wonders, but clearly we are talking about next-generation telescopes – or the generation after that – when it comes to biosignature detection on potential superhabitable planets.
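The transit figures follow a simple pattern if we assume every transit is observed: N transits span N - 1 orbital periods. For an Earth twin on a one-year orbit, 1700 transits take 1699 years, just as quoted. The Python sketch below runs the same relation backward on the K-dwarf numbers and lands on an orbital period of roughly 105 days. That period is an inference under the every-transit assumption, not a value from the paper, and a real JWST schedule would not catch every transit.

```python
DAYS_PER_YEAR = 365.25


def span_years(n_transits: int, period_days: float) -> float:
    """Years from the first to the last of n consecutive transits (none missed)."""
    return (n_transits - 1) * period_days / DAYS_PER_YEAR


def implied_period_days(n_transits: int, span_yr: float) -> float:
    """Orbital period implied if n consecutive transits fill span_yr years."""
    return span_yr * DAYS_PER_YEAR / (n_transits - 1)


if __name__ == "__main__":
    # Earth twin around a G star: one-year period.
    print(f"Earth twin: {span_years(1700, DAYS_PER_YEAR):.0f} years")  # 1699
    # K-dwarf case: back out the period from 150 transits in 43 years.
    print(f"K-dwarf case: P ~ {implied_period_days(150, 43):.0f} days")  # 105
```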

So what we have is encouraging in terms of the chances for life around K-class stars but a clear notice that observing the biosignatures of these planets is going to be a much harder task than doing the same for nearby M-class dwarfs, where extremely close habitable zones also give us a much larger number of transits over time.

The paper is Vilović et al., “Superhabitable Planets Around Mid-Type K Dwarf Stars Enhance Simulated JWST Observability and Surface Habitability,” accepted at Astronomical Notes and now available as a preprint. The earlier Heller and Armstrong paper is “Superhabitable Worlds,” Astrobiology Vol. 14, No. 1 (2014). Abstract. Another key text is Schulze-Makuch, Heller & Guinan, “In Search for a Planet Better than Earth: Top Contenders for a Superhabitable World,” Astrobiology 18 September 2020 (full text), which looks at candidates. Cuntz & Guinan, “About Exobiology: The Case for Dwarf K Stars,” Astrophysical Journal Vol. 827, No. 1 10 August 2016 (full text) should also be in your quiver.

A ‘Manhole Cover’ Beyond the Solar System?

Let’s start the year with a look back in time to 1957, a time when nuclear bombs were being tested underground for the first time at the Nevada test site some 105 kilometers northwest of Las Vegas. If this seems an unusual place to launch a discussion on interstellar matters, consider the story of an object that some argue became the fastest manmade artifact in history, an object moving so fast that it would have passed the orbit of Pluto four years after ‘launch,’ in the days of Yuri Gagarin and Project Mercury.

I’m bringing it up because the tale of the nuclear test known as Plumbob Pascal B is again active on the Internet, and it’s a rousing tale. Operation Plumbob involved a series of 29 nuclear tests that fed the development of missile warheads both intercontinental and intermediate. The history of such underground nuclear testing would make for an interesting book and indeed it has, in the form of Caging the Dragon (Defense Nuclear Agency, 1995), by one James Carothers.

But let’s narrow our focus to the nuclear devices known as Pascal A and B, the former used in the first nuclear test below ground. This would have been the first such test in history, as the Soviet Union did not begin its underground program until 1961.

Image: The scene following the detonation of Rainier, an underground nuclear test similar to Pascal B. Credit: Plane Encyclopedia.

The key player here was Robert Brownlee (Los Alamos National Laboratory), who supervised the detonation of Pascal A and duly noted the fact that the yield was much greater than anticipated, so that a column of flame shot into the sky. The blast was not remotely contained. Pascal B was partially an attempt to fix that problem by lowering a 900-kilogram, 4-inch thick iron lid over the borehole. It seemed sensible to at least some at the time, but Brownlee himself evidently did not believe it would work to contain the blast, as indeed it did not.

The detonation of Pascal B caused the blast, like its predecessor, to climb straight up the borehole and escape. The interesting part is that the lid was never found. The only camera footage of the event caught the iron plate in just one frame, and that fact seems to be the source of the current interest. Brownlee, extrapolating from the speed of the filming (one frame per millisecond), attempted a calculation of the object’s speed. He wound up with something on the order of six times Earth’s escape velocity, which would be 241,920 kilometers per hour, or 67.2 kilometers per second.

That’s an interesting figure! Voyager 1 is moving at about 17.1 kilometers per second and is more or less the yardstick for our thinking about where we are today in achieving deep space velocities. So what is commonly being described as a ‘manhole cover,’ which is pretty much what this object was, is conceivably the fastest moving object humans have ever produced.
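The velocity arithmetic is easy to check. This short Python sketch assumes Earth’s surface escape velocity of about 11.2 kilometers per second (a standard value that the text doesn’t state). Six times that is 67.2 kilometers per second, or 241,920 kilometers per hour, which is roughly four times Voyager 1’s 17.1 kilometers per second. It reproduces only the unit conversion and says nothing about whether the cap survived.

```python
EARTH_ESCAPE_KM_S = 11.2   # Earth's surface escape velocity (standard value, rounded)
VOYAGER1_KM_S = 17.1       # Voyager 1 speed quoted in the text

cap_km_s = 6 * EARTH_ESCAPE_KM_S   # Brownlee's "six times the escape velocity"
cap_km_h = cap_km_s * 3600         # 3600 seconds per hour

print(f"Cap: {cap_km_s:.1f} km/s = {cap_km_h:,.0f} km/h")      # 67.2 km/s = 241,920 km/h
print(f"About {cap_km_s / VOYAGER1_KM_S:.1f} times Voyager 1")  # about 3.9
```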

Brownlee, recalling these events in 2002, described the iron cap as requiring a lot of ‘man-handling’ to get it into place. And he goes on to say this:

For Pascal B, my calculations were designed to calculate the time and specifics of the shock wave as it reached the cap. I used yields both expected and exaggerated in my calculations, but significant ones. When I described my results to Bill Ogle [deputy division leader on the project], the conversation went something like this.

Ogle: “What time does the shock arrive at the top of the pipe?”

RRB: “Thirty one milliseconds.”

Ogle: “And what happens?”

RRB: “The shock reflects back down the hole, but the pressures and temperatures are such that the welded cap is bound to come off the hole.”

Ogle: “How fast does it go?”

RRB: “My calculations are irrelevant on this point. They are only valid in speaking of the shock reflection.”

Ogle: “How fast did it go?”

RRB: “Those numbers are meaningless. I have only a vacuum above the cap. No air, no gravity, no real material strengths in the iron cap. Effectively the cap is just loose, traveling through meaningless space.”

Ogle: “And how fast is it going?” This last question was more of a shout. Bill liked to have a direct answer to each one of his questions.

RRB: “Six times the escape velocity from the earth.”

Image: Los Alamos’ Robert Brownlee (1924-2018). Credit: American Astronomical Society.

According to Brownlee, the answer delighted Ogle, who had never heard of a velocity given in terms of escape velocity from the Earth. Brownlee himself notes that because the object was only caught in one camera frame, there was no direct velocity measurement. He could only summarize the situation by saying that the ‘manhole cover’ was “going like a bat!” But he also notes that neither he nor Ogle believed that the cap would actually have made it into space. And the story doesn’t end just yet.

My ever-reliable buddy Al Jackson (Centauri Dreams readers will know of Al as astronaut trainer on the Lunar Module Simulator during the Apollo era, and as the author of numerous papers on interstellar propulsion) has passed along a set of calculations by one R. Finden titled “The Fastest Object Ever: The Manhole Cover,” evidently written in response to a 2016 article in Business Insider. The note appeared originally on a Reddit thread. Finden notes that his or her work should be considered a rough estimate because “flight at a mach number upwards of 200 has not been studied and may never be.” Good point.

Finden’s calculations show that the cover would have reached temperatures five times its melting point before it could ever escape the atmosphere. And then this:

If the steel plate were magical and did not burn in the atmosphere, it would have escaped the upper stratosphere (50km) at 53 km/s just 934ms from launch. This not only means it would have made it to space, but it would have eventually escaped our solar system (depending on the time of day at launch). What likely happened was the plate was initially launched parallel to the ground and rotated with oscillation into the upright position, and by that time the drag from the first second of flight decreased its speed enough to prevent it from entering the upper stratosphere.

Conclusion: No manhole cover in space. It’s worth recalling, the Finden note adds, that the Chelyabinsk meteor was moving at only one-third the speed and had 13,900 times the weight of the flying cover, and even this mass was unable to survive Earth’s atmosphere intact. I dislike this result, as the idea of an object ‘launched’ in 1957 escaping the Solar System while we were still trying to get to the Moon is utterly delightful. And because R. Finden’s math skills are well beyond my pay grade, I can’t reach a definitive conclusion about the result. So maybe we can still dream of flying manhole covers even if the odds seem long indeed.

Interstellar Reprise: Voyager to a Star

As I write, Voyager 1 is almost 166 AU from the Sun, moving at 17 kilometers per second. With its Voyager 2 counterpart, the mission fields the first spacecraft to operate in interstellar space, and both continue to send data thanks to skillful juggling of onboard systems, with those deemed non-essential switched off. Despite communications glitches, the mission continues, and it seems a good time to reprise a piece I wrote on the future of these doughty explorers back in 2015. Is there still time to do something new with the two probes before the decline of their plutonium power sources makes further communication impossible? The idea is hardly mine, and goes back to the Sagan era, as the article below explains. It’s also a notion that is purely symbolic, and for those immune to symbolism (the more practical-minded among us) it may seem trivial. But those with a poet’s eye may see the value of an act that can offer a futuristic finish to a mission that surpassed all expectations and will inspire generations yet unborn.

After Voyager 2 flew past Neptune in 1989, much of the world assumed that the story was over, for there were no further planetary encounters possible. But science was not through with the Voyagers then, and it is not through with the Voyagers now. In one sense, they have become a testbed for showing us how long a spacecraft can continue to operate. In a richer sense, they illustrate how an adaptive and curious species can offer future generations the gift of ‘deep time,’ taking its instruments forward into multi-generational missions of interstellar scope.

Now nearly 25 billion kilometers from Earth, Voyager 1, which took a much different trajectory than its counterpart by leaving the ecliptic after its encounter with Saturn’s moon Titan, is 166 times as far from the Sun as the Earth (166 AU). Round-trip radio time is over 46 hours. The craft has left the heliosphere, a ‘bubble’ that is puffed up and shaped by the stream of particles from the Sun called the ‘solar wind.’ Voyager 1 has become our first interstellar spacecraft, and it will keep transmitting until about 2025, perhaps longer. Voyager 2, its twin, is currently 138 AU out (20.7 billion kilometers from the Sun), with a round-trip radio time of 38 hours.
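The distances and radio times hang together. This quick Python check assumes the IAU value of the astronomical unit and the vacuum speed of light, and it treats each craft’s distance from the Sun as its distance from Earth (an approximation good to about 1 AU). It converts the quoted AU figures into kilometers and round-trip light time, giving about 46 hours for Voyager 1 and about 38 hours for Voyager 2.

```python
AU_KM = 149_597_870.7     # astronomical unit in km (IAU 2012 value)
C_KM_S = 299_792.458      # speed of light in km/s


def round_trip_hours(distance_au: float) -> float:
    """Round-trip light time, in hours, to a craft distance_au away."""
    return 2 * distance_au * AU_KM / C_KM_S / 3600


if __name__ == "__main__":
    for name, au in (("Voyager 1", 166), ("Voyager 2", 138)):
        km_billions = au * AU_KM / 1e9
        print(f"{name}: {km_billions:.1f} billion km, "
              f"round trip {round_trip_hours(au):.1f} h")
```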

Throughout history we have filled in the dark places in our knowledge with the products of our imagination, gradually ceding these visions to reality as expeditions crossed oceans and new lands came into view. The Greek historian Plutarch comments that “geographers… crowd into the edges of their maps parts of the world which they do not know about, adding notes in the margin to the effect, that beyond this lies nothing but sandy deserts full of wild beasts, unapproachable bogs, Scythian ice, or a frozen sea…” But deserts get crossed, first by individuals, then by caravans, and frozen seas yield to the explorer with dog-sled and ice-axe.

Voyager and the Long Result

Space is stuffed with our imaginings, and despite our telescopes, what we find as we explore continues to surprise us. Voyager showed us unexpectedly active volcanoes on Jupiter’s moon Io and the billiard-ball-smooth surface of Europa, one that seems to conceal an internal ocean. We saw an icy Enceladus, now known to spew geysers, and a smog-shrouded Titan. We found ice volcanoes on Neptune’s moon Triton and a Uranian moon, Miranda, with a geologically tortured surface and a cliff that is the highest known in the Solar System.

But the Voyagers are likewise an encounter with time. The issue raises its head because we are still communicating with spacecraft launched almost forty years ago. I doubt many would have placed a wager on the survival of electronics and internal mechanisms to this point, but these are the very issues raised by our explorations, for we still have trouble pushing any payload up to speeds equalling Voyager 1’s 17.1 kilometers per second. To explore the outer Solar System, and indeed to travel beyond it, is to create journeys measured in decades. With the Voyagers as an example, we may one day learn to harden and upgrade our craft for millennial journeys.

New Horizons took nine years to reach Pluto and its large moon Charon. To reach another star? An unthinkable 70,000 years-plus at Voyager 1 speeds, which is why the propulsion problem looms large as we think about dedicated missions beyond the Solar System. If light itself takes over 23 hours to reach Voyager 1, the nearest star, Proxima Centauri, is a numbing 4.2 light years away. To travel at even a paltry one percent of lightspeed, far beyond our capabilities today, would mean a journey to Proxima Centauri lasting well over four centuries.
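These travel times are simple division. The Python sketch below assumes a constant cruise speed with no acceleration or deceleration phases and uses the text’s 4.2 light years for Proxima Centauri. It gives roughly 73,600 years at Voyager 1’s 17.1 kilometers per second and 420 years at one percent of lightspeed. Any real mission would need extra time to get up to speed.

```python
C_KM_S = 299_792.458                  # speed of light, km/s
JULIAN_YEAR_S = 365.25 * 86_400       # seconds per Julian year
LY_KM = C_KM_S * JULIAN_YEAR_S        # light year in km (by definition)


def cruise_years(distance_ly: float, speed_km_s: float) -> float:
    """Years to cover distance_ly at a constant speed, ignoring acceleration."""
    return distance_ly * LY_KM / speed_km_s / JULIAN_YEAR_S


if __name__ == "__main__":
    print(f"Voyager 1 speed: {cruise_years(4.2, 17.1):,.0f} years")
    print(f"1% of lightspeed: {cruise_years(4.2, 0.01 * C_KM_S):,.0f} years")
```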

What is possible near-term? Ralph McNutt, a veteran aerospace designer at the Johns Hopkins Applied Physics Laboratory, has proposed systems that could take a probe to 1000 AU in less than fifty years, giving us the chance to study the Oort Cloud of comets at what may be its inner edge. Now imagine that system ramped up ten times faster, perhaps boosted by a close pass by the Sun and a coordinated shove from a next-generation engine. Now we can anticipate a probe that could reach the Alpha Centauri stars in about 1400 years. Time begins to curl back on itself — we are talking trip times as great as the distance between the fall of Rome and today.

The interesting star Epsilon Eridani, some 10.5 light years out, would be within our reach in something over three thousand years. Go back that far in human history and you would see Sumerian ziggurats whose star maps faced the sky, as our ancestors confronted the unknown with imagined constellations and traced their destinies through star-based prognostications. The human impulse to explain seems universal, as is the pushing back of frontiers. And if these travel times seem preposterous, they’re worth dwelling on, because they help us see where we are with space technology today, and where we’ll need to be to reach the stars.
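The 1400-year and three-thousand-year figures can be reproduced by straightforward scaling. This Python sketch rests on three assumptions, none of which come from McNutt. It takes his probe as covering 1000 AU in 50 years, or 20 AU per year (the text says “less than fifty,” so this is a conservative rate). It applies a tenfold speedup to 200 AU per year. And it uses a distance of about 4.37 light years for Alpha Centauri A and B, which the text doesn’t state. The results come out near 1,380 and 3,320 years.

```python
AU_PER_LY = 63_241.077           # astronomical units per light year

BASELINE_AU_PER_YR = 1_000 / 50  # assumed: 1,000 AU in 50 years
BOOSTED_AU_PER_YR = 10 * BASELINE_AU_PER_YR  # "ten times faster": 200 AU/yr


def trip_years(distance_ly: float) -> float:
    """Cruise time at the boosted speed, ignoring acceleration phases."""
    return distance_ly * AU_PER_LY / BOOSTED_AU_PER_YR


if __name__ == "__main__":
    for star, ly in (("Alpha Centauri A/B", 4.37), ("Epsilon Eridani", 10.5)):
        print(f"{star}: about {trip_years(ly):,.0f} years")
```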

A certain humility settles in. While we work to improve propulsion systems, ever mindful that breakthroughs can happen in ways that no one expects, we also have to look at the practicalities of long-haul spaceflight. Both Voyagers have become early test cases in how long a spacecraft can last. They also force us to consider how things last in our own civilization. We have buildings on Earth — the Hagia Sophia in Constantinople, the Pantheon in Rome — that have been maintained for longer than the above Alpha Centauri flight time. A so-called ‘generation ship,’ with crew living and dying aboard the craft, may one day make the journey.

Engagement with deep time is not solely a matter of technology. In the world of business and commerce, our planet boasts abundant examples of companies that have been handed down for centuries within the same family. Construction firm Kongo Gumi, for example, was founded in Osaka in 578, and ended business activity only in 2007, being operated at the end by the 40th generation of the family involved. The Buddhist Shitennoji Temple and many other well known buildings in Japanese history owe much to this ancient firm.

The Japanese experience is instructive. Hoshi Ryokan is an innkeeping company founded in Komatsu in 718 and now operated by the family’s 46th generation. If you’re ever in Komatsu, you can go to a hotel that has been doing business on the site ever since. Nor do we have to stay in Japan. Fonderia Pontificia Marinelli has been making bells in Agnone, Italy since the year 1000, while the firm of Richard de Bas, founded in 1326, continues to make paper in Ambert, in the Auvergne, providing its products for the likes of Braque and Picasso.

Making Missions that Last

We have long-term thinking in our genes, as the planners of the Pyramids must have assumed. The Long Now Foundation, which studies issues relating to trans-generational thinking and the long-term survival of artifacts, has pointed out that computer code has its own kind of longevity. Enduring like the Sphinx, deeply planted software tools like the Unix kernel may well be operational a thousand years from now. Jon Lomberg and the team behind the One Earth Message — an attempt to transmit a kind of digital ‘Golden Record’ to the New Horizons spacecraft as a catalog of the human condition — estimate that the encoded data will survive at least one hundred thousand years, and perhaps up to a million if given sufficient redundancy.

‘Deep time’ takes us well beyond quarterly stock reports, and even beyond generational boundaries, an odd place to be for a culture that thrives on the slickly fashionable. It’s energizing to know that there is a superstructure that persists. The Voyagers are uniquely capable of keeping this fact in front of us because we see them defying the odds and surviving. Stamatios “Tom” Krimigis (JHU/APL) is on record as saying of the Voyager mission “I suspect it’s going to outlast me.”

Krimigis is one of the principal investigators on the Voyager mission and the only remaining original member of the instrument team. His work involves instruments that can measure the flow of charged particles. Such instruments — low-energy charged particle (LECP) detectors — report on the flow of ions, electrons and other charged particles from the solar wind, but because they demanded a 360-degree view, they posed a problem. Voyager had to keep its antenna pointed at the Earth at all times, so the spacecraft couldn’t turn. This meant that the tools needed included an electric motor and a swivel mechanism that could swing back and forth for decades without seizing up in the cold vacuum of space.

The solution was offered by a California company called Schaeffer Magnetics. Krimigis’ team tested the contractor’s four-pound motor, ball bearings and dry lubricant. The company ran the motorized system through half a million ‘steps’ without failure. The instruments are still working, still detecting a particle flow that is evidently a mix of solar and interstellar particles, one that is moving in a flow perpendicular to the spacecraft’s direction of travel, so that it appears we’re just over the edge into interstellar space, a place where the medium is roiled and frothy, like ocean currents meeting each other and rebounding.

One Last Burn

Although the spacecraft are expected to keep transmitting for several more years, we’ll continue to see both Voyagers suffering from power issues. But there is a way to keep them alive, if not in equipment then as a part of our lore and our philosophy. They will take about 30,000 years to reach the outer edge of the Oort Cloud (the inner edge, according to current estimates, is maybe 300 years away). Add another 10,000 years and Voyager 1 passes some 100,000 AU from the red dwarf Gliese 445, which happens to be moving toward the Sun and will, by this remote date, be one of the closest stars to the Solar System. As to Voyager 2, it will pass 111,000 AU from Ross 248 in roughly the same timeframe, at which point the red dwarf will actually be the closest star to the Sun.

Carl Sagan and the team working on the Voyager Golden Record wondered whether something could be done about the fact that neither Voyager was headed for another solar system. Is it possible that toward the end of the Voyagers’ active lifetimes (somewhere in the 2020s), we could set up a trajectory change that would eventually bring each Voyager as close as possible to one of these stars? Enough hydrazine is available on each craft that, just before we lose radio contact with them forever, we could give them a final, tank-emptying burn. Tens of thousands of years later, the ancient craft, blind, mute but still more or less intact, would drift in the general vicinity of a star whose inhabitants, if any, might find them and wonder.
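How much could a final burn actually change where a Voyager ends up? The back-of-the-envelope Python sketch below applies the Tsiolkovsky rocket equation, then assumes the resulting velocity change is applied perpendicular to the direction of travel and simply accumulates over the coast, ignoring the gravity of the Sun and of the target star. Every input (specific impulse, spacecraft mass, propellant remaining) is a hypothetical placeholder chosen for illustration, not a mission figure.

```python
import math

G0 = 9.80665                      # standard gravity, m/s^2
SECONDS_PER_YEAR = 365.25 * 86_400
AU_M = 149_597_870_700            # astronomical unit in metres


def rocket_delta_v(isp_s: float, wet_kg: float, dry_kg: float) -> float:
    """Tsiolkovsky rocket equation: velocity change in m/s."""
    return isp_s * G0 * math.log(wet_kg / dry_kg)


def lateral_drift_au(delta_v: float, years: float) -> float:
    """Sideways displacement after coasting in a straight line for `years`,
    with the burn applied perpendicular to the direction of travel.
    Gravity from the Sun and the target star is ignored entirely."""
    return delta_v * years * SECONDS_PER_YEAR / AU_M


# HYPOTHETICAL inputs, for illustration only (not Voyager mission data):
ISP_S = 220.0         # assumed specific impulse of the hydrazine thrusters, s
DRY_KG = 720.0        # assumed spacecraft mass with empty tanks, kg
PROPELLANT_KG = 20.0  # assumed hydrazine left for the final burn, kg
COAST_YEARS = 40_000  # roughly the flyby time frame quoted in the text

dv = rocket_delta_v(ISP_S, DRY_KG + PROPELLANT_KG, DRY_KG)
shift = lateral_drift_au(dv, COAST_YEARS)
print(f"delta-v ~ {dv:.0f} m/s, sideways shift ~ {shift:,.0f} AU")
```

With these made-up inputs the burn yields tens of metres per second and a sideways shift of a few hundred AU over 40,000 years, which is small next to a miss distance of some 100,000 AU. Different assumptions change the numbers, but the scale helps explain why such a burn would be largely symbolic.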

A trajectory change would increase only infinitesimally the faint chance that one of these spacecraft would someday be intercepted by another civilization, and neither could return data. But there is something grand in symbolic gestures, magic in the idea that these venerable machines might one day be warmed, however faintly, by the light of another sun. Our spacecraft are our emissaries and the manifestations of our dreams. How we conceive of them through the information they carry helps us gain perspective on ourselves, and shapes the context of our future explorations. Giving the Voyagers one last, hard shove toward a star would speak volumes about our values as a questioning species determined to confront the unknown.

An Oddity in the Small Magellanic Cloud

Let’s make a quick return to the Magellanics after our recent look at WOH G64, a dying star imaged in the Large Magellanic Cloud (see Close-up of an Extragalactic Star). These satellite galaxies of the Milky Way have long proven useful in helping astronomers study the gravitational interactions that shape them, leading to further understanding of galactic structure. But today I want to focus on the star-forming cluster NGC 346, which presents us with something of a conundrum.

Located in the Small Magellanic Cloud some 200,000 light years away, the cluster is massive and particularly lacking in the heavier elements beyond hydrogen and helium. Intensively studied by the Hubble Space Telescope in the mid-2000s, it has become a proxy for much more distant galaxies in the ancient universe, where metals were harder to find. Why, then, did the Hubble data show that while stars in NGC 346 were between 20 and 30 million years old, they were accompanied by planet-forming disks? A new study puts the James Webb Space Telescope onto the question, with intriguing results.

Such disks should have dissipated after a scant 2 to 3 million years. With few heavy elements in the gas surrounding the star, it should be relatively easy for the star’s outflow to blow the disk away. That view is hard to reconcile with planets still forming in a 20-million-year-old disk that shouldn’t be there in the first place.

Image: This is a James Webb Space Telescope image of NGC 346, a massive star cluster in the Small Magellanic Cloud, a dwarf galaxy that is one of the Milky Way’s nearest neighbors. With its relative lack of elements heavier than helium and hydrogen, the NGC 346 cluster serves as a nearby proxy for studying stellar environments with similar conditions in the early, distant Universe. Ten small yellow circles overlaid on the image indicate the positions of the ten stars surveyed in a new study in The Astrophysical Journal. Credit: NASA, ESA, CSA, STScI, O. C. Jones (UK ATC), G. De Marchi (ESTEC), M. Meixner (USRA).

Findings that upset current models are red meat for researchers hunting new theories or adjustments to the old, but the question was whether the disks found in the cluster were actually evidence of accretion, or perhaps some other process that needed investigation. The good news is that JWST has obtained spectra from some of these stars, the first taken of pre-main-sequence stars in a nearby galaxy. The leader of the work, Guido De Marchi (ESA’s European Space Research and Technology Centre, Noordwijk, Netherlands), says the Webb data strongly confirm what Hubble revealed. The conclusion he draws is striking: “…we must rethink how we model planet formation and early evolution in the young Universe.”

That’s a tall order, of course. But with only ten percent of the heavier elements present in the chemical composition of our Sun, NGC 346 forces the question of whether our understanding of how some stars disperse their disks is correct. Perhaps light pressure from the star is more effective when the disk is laden with more metals. Or perhaps, in the absence of heavier elements, the star would have to form from a larger cloud of gas in the first place, producing a disk that is more massive and harder to dissipate. Elena Sabbi (Gemini Observatory at NOIRLab in Tucson) puts the matter this way:

“With more matter around the stars, the accretion lasts for a longer time. The discs take ten times longer to disappear. This has implications for how you form a planet, and the type of system architecture that you can have in these different environments.”
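Why does a factor of ten matter so much? One way to see it, assuming purely for illustration that the fraction of stars still holding a disk decays exponentially with age, is to compare an e-folding time of 2.5 million years (the midpoint of the 2 to 3 million year lifetime mentioned earlier) with one ten times longer. The exponential model and the function name are assumptions for this sketch, not the method used in the paper.

```python
import math


def disk_fraction(age_myr: float, tau_myr: float) -> float:
    """Fraction of stars still hosting a disk at a given age, assuming
    (for illustration only) simple exponential decay with e-folding
    time tau_myr."""
    return math.exp(-age_myr / tau_myr)


TAU_LOCAL = 2.5             # midpoint of the 2-3 Myr lifetime in the text
TAU_LOW_Z = 10 * TAU_LOCAL  # "ten times longer", per Sabbi's remark

for age in (2.5, 10, 20, 30):
    short = disk_fraction(age, TAU_LOCAL)
    long_ = disk_fraction(age, TAU_LOW_Z)
    print(f"{age:>5} Myr: {short:7.2%} (tau=2.5 Myr) vs {long_:7.2%} (tau=25 Myr)")
```

Under these assumptions, only about 0.03 percent of stars would retain disks at 20 million years with the short lifetime, against roughly 45 percent with the longer one, which is the kind of gap that makes the NGC 346 disks so surprising.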

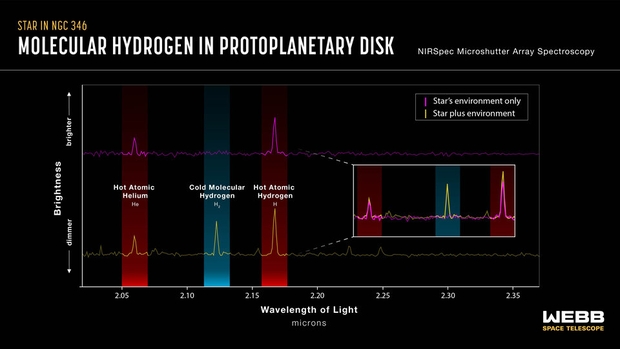

Image: This graph shows, on the bottom left in yellow, a spectrum of one of the 10 target stars in this study (and accompanying light from the immediate background environment). Spectral fingerprints of hot atomic helium, cold molecular hydrogen, and hot atomic hydrogen are highlighted. On the top left in magenta is a spectrum slightly offset from the star that includes only light from the background environment. This second spectrum lacks a spectral line of cold molecular hydrogen. On the right is a comparison of the top and bottom spectra. This comparison shows a large peak in the cold molecular hydrogen coming from the star but not its nebular environment. Also, atomic hydrogen shows a larger peak from the star. This indicates the presence of a protoplanetary disc immediately surrounding the star. The data were taken with the microshutter array on the James Webb Space Telescope’s NIRSpec (Near-Infrared Spectrometer) instrument. Credit: NASA/ESA.
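The on-star versus off-star comparison described in the caption amounts to subtracting the background spectrum and looking at what remains. The Python sketch below illustrates that logic on entirely synthetic data; the wavelength grid, line centre, line width and continuum levels are all hypothetical, and real NIRSpec reductions involve far more (calibration, noise, extinction) than is shown here.

```python
import math
import statistics


def gaussian(x: float, centre: float, width: float, amp: float) -> float:
    """Gaussian emission-line profile."""
    return amp * math.exp(-0.5 * ((x - centre) / width) ** 2)


LINE_UM = 2.12  # hypothetical line centre in microns, illustration only

# Synthetic wavelength grid (microns) and two made-up spectra.
wavelengths = [2.10 + i * 0.0005 for i in range(81)]
# Offset (background-only) spectrum: flat nebular continuum, no line.
off_star = [1.0 for _ in wavelengths]
# On-star spectrum: the same background, a stellar continuum, and a line
# that we pretend arises in a disc around the star.
on_star = [1.0 + 0.5 + gaussian(w, LINE_UM, 0.001, 0.8) for w in wavelengths]

# Subtract the background, then remove the remaining continuum level
# (estimated with a median) to isolate line emission tied to the star.
residual = [on - off for on, off in zip(on_star, off_star)]
continuum = statistics.median(residual)
line_only = [r - continuum for r in residual]

peak_index = max(range(len(line_only)), key=line_only.__getitem__)
print(f"excess peaks at {wavelengths[peak_index]:.4f} um "
      f"with height {line_only[peak_index]:.2f}")
```

Because the offset spectrum contains no line, the excess left after subtraction peaks at the assumed line centre, which is the signature that points to gas tied to the star rather than to the surrounding nebula.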

Because the study confirms that the spectral signatures of active accretion and the presence of molecular dust in the material around these stars can be detected, it becomes clear that these narrowband imaging methods can now be applied not only to the Magellanics but to galaxies farther away. That’s helpful in itself, and raises the prospect of further investigation into whether the core-accretion model for planet formation is fully understood around low-metallicity stars. From the paper:

If disk dissipation in low-metallicity stars were as quick as initially reported (C. Yasui et al. 2009), only very small rocky planets close to the star could form (J. L. Johnson & H. Li 2012).

And this in conclusion, relating the findings to the era known as ‘cosmic noon,’ when star formation in the visible universe would have been at its peak, some 10 to 11 billion years ago:

…our results indicate that, at the low metallicities typical of the early Universe, the disk lifetimes may be longer than what is observed in nearby star-forming regions, thus allowing more time for giant planets to form and grow than in higher-metallicity environments. This may have significant implications for our understanding of the formation of planetary systems in environments similar to those in place at Cosmic Noon.

The paper is De Marchi et al., “Protoplanetary Disks around Sun-like Stars Appear to Live Longer When the Metallicity is Low,” The Astrophysical Journal Vol. 977, No. 2 (16 December 2024), 214 (full text).

Charting the Diaspora: Human Migration Outward

It’s not often that I highlight the work of anthropologists on Centauri Dreams. But it’s telling that the need to do so is increasing as we continue to populate the Solar System with human artifacts, which are, after all, the province of this discipline. I’ve often wondered about the fate of the Apollo landing sites, a question first prompted by Steven Howe’s novel Honor Bound Honor Born and by conversations with the author on topics ranging from lunar settlement to antimatter. Long affiliated with Los Alamos National Laboratory, Howe is deeply involved in antimatter research through his work as CEO and co-founder of Hbar Technologies.

In my conversations with Howe almost twenty years ago, while I was writing my original Centauri Dreams book, he asked what would happen if commercial interests decided to exploit historical sites from the early days of space exploration. The question is still pertinent. Imagine Armstrong and Aldrin’s Eagle subjected to near-future tourists prying off souvenirs or trampling the original tracks left by the astronauts. Some form of protection is needed before cheap access to the lunar surface becomes available, and that may be a matter of decades rather than centuries. The point is to get the policies in place before the contamination can occur.

The Outer Space Treaty of 1967 has been ratified by over 100 nations, but while it opens exploration to all nations, it doesn’t address the preservation of historical sites. UNESCO’s World Heritage Convention does not apply to sites off-planet, and NASA’s 2011 protection guidelines offer useful ‘best practice’ approaches for protecting sites, but these are entirely voluntary. The Artemis Accords are bilateral agreements that include language on the protection of historical sites, but they too are non-binding. So the Moon stands as an obvious example of a resource about to be exploited for which we lack any mechanism to protect the places future historians will want to examine.

Or think about Mars, as Kansas anthropologist Justin Holcomb does in a paper just published in Nature Astronomy. As we put more and more technology on the surface, we are building up yet another set of historical sites that will interest future historians. This matters even if the equipment is quickly rendered inert and soon obsolete, and it’s worth taking an anthropologist’s view here: we’ve learned priceless things about the past by studying what a civilization considered fit only for the garbage dumps of the time. What will future scholars learn about the great era of Mars exploration that is now well underway?

“These are the first material records of our presence, and that’s important to us,” says Holcomb. “I’ve seen a lot of scientists referring to this material as space trash, galactic litter. Our argument is that it’s not trash; it’s actually really important. It’s critical to shift that narrative towards heritage because the solution to trash is removal, but the solution to heritage is preservation. There’s a big difference.”

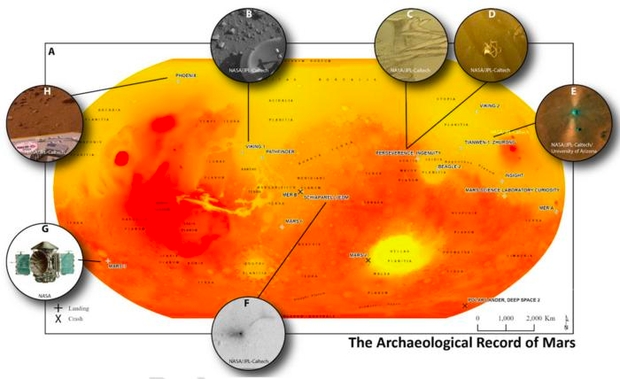

Image: Map of Mars illustrating the fourteen missions to Mars, key sites, and examples of artifacts contributing to the development of the archaeological record: (B) Viking-1 lander; (C) trackways created by NASA’s Perseverance rover; (D) Dacron netting used in thermal blankets, photographed by NASA’s Perseverance rover using its onboard Front Left Hazard Avoidance Camera A; (E) China’s Tianwen-1 lander and Zhurong rover in southern Utopia Planitia photographed by HiRISE; (F) the ExoMars Schiaparelli Lander crash site in Meridiani Planum; (G) Illustration of the Soviet Mars Program’s Mars 3 space probe; (H) NASA’s Phoenix lander with DVD in foreground. Credit: Justin Holcomb.

In August of 2022, the Mars rover Perseverance encountered debris scattered during its landing some eighteen months earlier. Our Mars rovers have repeatedly come across heat shields and other materials from their arrival, while the wheels of the Curiosity rover have left small bits of aluminum behind, the result of damage during routine operations. In 2021, Perseverance dropped an old drill bit onto the surface when it replaced the unit with a new one. We can add entire landers and rovers, intact but defunct craft like the Mars 3 lander, the Mars 6 lander, the two Viking landers, the Sojourner rover, the Beagle 2 lander, the Phoenix lander, and the Spirit and Opportunity rovers. A 2022 estimate puts the total spacecraft mass sent to Mars at almost 10,000 kilograms (roughly 22,000 pounds), of which 7,119 kilograms (15,694 pounds) is now considered debris because it is no longer operational.
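The mass figures above can be cross-checked with a couple of lines of arithmetic. This Python sketch uses the exact definition of the international pound; the totals are the 2022 estimates quoted in the text, and the variable and function names are illustrative.

```python
KG_PER_LB = 0.45359237  # exact, by definition of the international pound


def kg_to_lb(kg: float) -> float:
    """Convert kilograms to pounds."""
    return kg / KG_PER_LB


TOTAL_SENT_KG = 10_000  # "almost 10,000 kilograms" (2022 estimate in the text)
DEBRIS_KG = 7_119       # non-operational mass quoted in the text

print(f"total  ~ {kg_to_lb(TOTAL_SENT_KG):,.0f} lb")
print(f"debris ~ {kg_to_lb(DEBRIS_KG):,.0f} lb "
      f"({DEBRIS_KG / TOTAL_SENT_KG:.0%} of the total)")
```

The conversions agree with the quoted figures to within rounding, and the debris share comes out at a little over 70 percent, since the total is ‘almost’ rather than exactly 10,000 kilograms.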

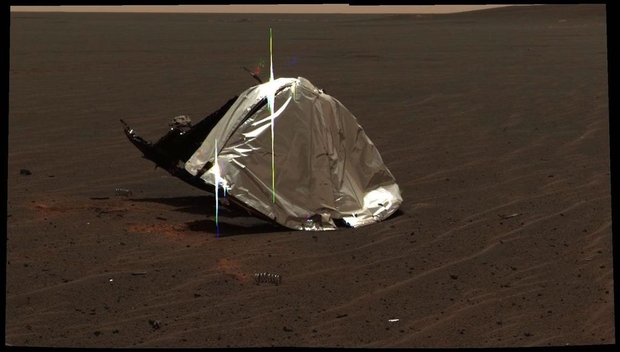

Image: This image from Opportunity’s panoramic camera features the remains of the heat shield that protected the rover from temperatures of up to 2,000 degrees Fahrenheit as it made its way through the martian atmosphere. This two-frame mosaic was taken on the rover’s 335th martian day, or sol, (Jan. 2, 2005). The view is of the main heat shield debris seen from approximately 10 meters away from it. Many rover-team engineers were taken aback when they realized the heat shield had inverted, or turned itself inside out. The height of the pictured debris is about 1.3 meters. The original diameter was 2.65 meters, though it has obviously been deformed. The Sun reflecting off of the aluminum structure accounts for the vertical blurs in the picture. The image provides a unique opportunity to look at how the thermal protection system material survived the actual Mars entry. Team members used this information to compare their predictions to what really happened. Credit: NASA.
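Caption dates like this one can be sanity-checked by counting sols from landing. The Python sketch below assumes Opportunity’s landing date of 25 January 2004 (UTC), treats that day as sol 1, and uses the mean Mars solar day of 24 hours 39 minutes 35.244 seconds. Mission sols actually follow local solar time at the landing site, so the result is approximate to within a day or so.

```python
from datetime import datetime, timedelta, timezone

SOL_SECONDS = 88_775.244  # mean Mars solar day: 24 h 39 m 35.244 s

# Assumed reference: Opportunity's landing date (UTC), counted as sol 1.
# Real mission sols follow local solar time, so this is only approximate.
LANDING_UTC = datetime(2004, 1, 25, tzinfo=timezone.utc)


def approx_earth_date(sol: int) -> datetime:
    """Approximate UTC date at the start of a given mission sol."""
    return LANDING_UTC + timedelta(seconds=(sol - 1) * SOL_SECONDS)


print(approx_earth_date(335).date())
```

For sol 335 the estimate lands in early January 2005, about eleven months after the landing.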

The human archaeological record on Mars began in 1971 with the crash of the Soviet Mars 2 lander. The processes that act on human artifacts in this environment determine how they degrade over time, and thus how future investigators might study them. We’re now in the territory of what is known as geoarchaeology, which has ripened on Earth into a sub-discipline that analyzes geological effects at various sites. Holcomb points out that Mars has a cryosphere in the northern and southern latitudes where ice action would alter materials even faster than Martian sands. But don’t discount those global dust storms and dune fields like the one that will likely bury the Spirit rover.

So we have many sites to protect from future human activities as well as a need to catalog the locations of debris that will disappear to view through natural processes over time. This is satisfyingly long-term thinking, and I like the way Holcomb describes extraterrestrial artifacts (left behind by us) in terms of our own past:

“If this material is heritage, we can create databases that track where it’s preserved, all the way down to a broken wheel on a rover or a helicopter blade, which represents the first helicopter on another planet. These artifacts are very much like hand axes in East Africa or Clovis points in America. They represent the first presence, and from an archaeological perspective, they are key points in our historical timeline of migration.”

Migration. Think of humans as a species on the move. Outward.

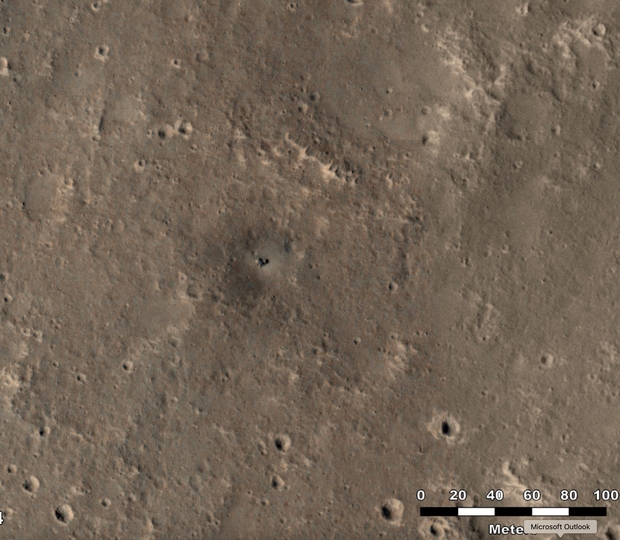

Image: Impact and its aftermath. What would a future anthropologist make of the materials found at this site? And how would it affect a future history of human exploration of another world? Credit: NASA.

In terms of mission planning, there is a pathway here. It would be best to avoid operating at sites where earlier hardware was deployed, much as we wrestle with the prospect of those tourists on the Moon. Holcomb argues that we need to develop methods for cataloging human materials on other planetary surfaces, and we clearly want to do that before such materials proliferate. So perhaps we should start seeing our space ‘debris’ on Mars as the equivalent of those Clovis points he mentioned above, which are markers for the Clovis paleo-American culture.
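Holcomb’s suggestion of a heritage database, combined with the idea of keeping new operations away from earlier hardware, can be sketched as a small catalog with a proximity check. The Python below is a hypothetical illustration: the record fields, the buffer distance and all coordinates are placeholders rather than real site locations, and it treats Mars as a sphere with a mean radius of 3,389.5 km.

```python
import math
from dataclasses import dataclass

MARS_MEAN_RADIUS_KM = 3389.5


@dataclass
class Artifact:
    name: str
    lat_deg: float
    lon_deg: float  # east longitude, degrees


def surface_distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance on a spherical Mars (haversine formula)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlam / 2) ** 2
    return 2 * MARS_MEAN_RADIUS_KM * math.asin(math.sqrt(a))


def sites_near(catalog, lat: float, lon: float, buffer_km: float):
    """Return catalogued artifacts within buffer_km of a planned operation."""
    return [a for a in catalog
            if surface_distance_km(lat, lon, a.lat_deg, a.lon_deg) <= buffer_km]


# HYPOTHETICAL entries with placeholder coordinates, not real site locations.
catalog = [
    Artifact("example lander", 10.0, 100.0),
    Artifact("example heat shield", 10.1, 100.0),
    Artifact("example parachute", -30.0, 200.0),
]

for artifact in sites_near(catalog, 10.05, 100.0, buffer_km=5.0):
    print("within buffer:", artifact.name)
```

Here a planned operation at the placeholder point flags the two nearby example artifacts and ignores the distant one; a real catalog would need surveyed positions and likely per-site protection zones.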

It always seems unnecessary to do analysis like this until the need surfaces, by which point much of the necessary spadework has been left undone. But on a broader level, I’m going to argue that thinking about the long-term stratigraphy of space exploration is another way we should engage with the reality of our species as multi-planetary. For that is exactly where we are headed: a species that begins to explore its neighbors and perhaps establishes colonies either on them or in nearby orbits. That this process has already begun is obvious from the deluge of data we have already accumulated from the Moon all the way out into the Kuiper Belt.

Image: A historical site in context. Seen at the center of this image, NASA’s retired InSight Mars lander was captured by the agency’s Mars Reconnaissance Orbiter using its High-Resolution Imaging Science Experiment (HiRISE) camera on Oct. 23, 2024. The images show how the dust field around InSight, and on its solar panels, is changing over time. Sites like this will inevitably be obscured by such a rapidly changing planetary surface. Credit: NASA/JPL-Caltech/University of Arizona.

We need to stop being so parochial about what we are engaged in. Let’s be optimistic, and assume that humanity finds a way to keep migration going. The ancient middens that are nothing more than garbage dumps for early cultures on Earth have shown how valuable all traces of long vanished cultures can be. We need to preserve existing sites on other worlds and carefully ponder how we handle explorations that can, as they did in the American West, turn into stampedes. That we are talking here about preservation over future centuries is just another reminder that a culture that survives will necessarily be one that pays respect not only to its growth but to its history.

The paper is Holcomb et al., “Emerging Archaeological Record of Mars,” Nature Astronomy (16 December 2024). Abstract.