Librarian and futurist Heath Rezabek has become a familiar figure on Centauri Dreams through his writings on existential risk and how our species might counter it through Vessels, installations conveying our planet’s biological and cultural identity. The Vessel concept is far-reaching, and if we build it on Earth, we would likely take it to the stars. But in what form, and with what purpose? Enter the lightsail starship mission Saudade 4, its crew — some of whom are humans by choice — nourished on the dreams of a continually growing archive. Heath’s chosen medium, the essay form morphing into fiction, conjures the journey star travelers may one day experience.

by Heath Rezabek

I’ve described The Vessel Project in four prior posts:

August 29, 2013 – Deep Time: The Nature of Existential Risk

October 3, 2013 – Visualizing Vessel

November 7, 2013 – Towards a Vessel Pattern Language

December 13, 2013 – Vessel: A Science Fiction Prototype

As I began preparing my paper on the Vessel project for the 2012 100YSS Symposium, one of the first things to occur to me was the potential crossover between the project’s goals and some of the functional requirements of a starship. While at heart a Vessel installation would be a comprehensive archive, the capacity of such a thing suggested so much more. I’ll explore these other facets through essay and fiction in coming installments.

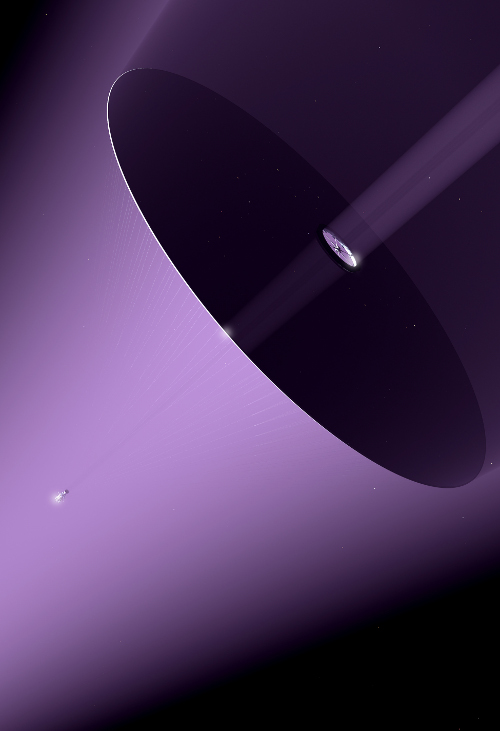

Figure 1: Sailship – Adrian Mann (Used by Permission).

Providing a guiding intelligence for a starship would entail quite a lot. One role facilitated by a comprehensive archive would be that of ambient intelligence, a pervasive sentience throughout the craft. We can envision a starship as a layered technology, with data storage systems as one core element, habitat systems (however abstracted towards mere life support) as a surrounding layer, and computational capabilities infused throughout like a nervous system.

Within this model, duties might include providing for the crew, whatever form that crew may take. We can envision miniaturized crew, artificial crew, biologically engineered crew, hibernating crew: all of these and more (unless completely inchoate or unconscious for the duration, as in a case where the crew is actually a cargo of nascent or embryonic humans) might make use of some kind of virtual environment in which to exercise their minds and meet their conscious needs.

In his paper “Transition from Niche Decision Support to Pervasive Cybernetics,” also presented at 100YSS 2012, Patrick Talbot detailed a framework for a cybernetic nervous system to be woven throughout a ship, as a self-aware knowledge base. It would need to be capable and flexible enough to handle the many functions required of such a craft. The Artificial Intelligence Module (AIM) suggested by Talbot has some interesting qualities, including the design goal of deriving new patterns (“unknown unknowns”) through abstracting structured stories from its knowledge base. [1] Talbot’s model, along with a real-world creative writing exercise I’ve used in workshops, inspired aspects of Avatamsaka, the synthetic mind in this story fragment.

An archive that was comprehensive enough, and that included the means to sample from its contents and yield novel outputs not envisioned by its compilers, could conjure up whole worlds of the imagination for its dependent crew. This would of course yield a vast probability space for exploration, and also for assimilation of the contents of that archive over time.

It also lets us explore the Simulation Argument, [2] while providing one relatively benevolent answer to the question of why such comprehensive universe simulations might ever be run: To provide space and time for a passenger’s mind to roam. Thus, in addition to providing fertile ground on which to play out the unfolding of myriad possibilities in endless combinations, this fictional scenario also provides a counterpoint to cases in which a simulated universe would seem a cruelty. [3]

Here, I explore some of these themes, and touch on more to come.

[1] Talbot, P. “Transition from Niche Decision Support to Pervasive Cybernetics,” 100 Year Starship 2012 Symposium Conference Proceedings, 143-154 (2013).

[2] Bostrom, N. “The Simulation Argument: Are You Living in a Computer Simulation?” (http://www.simulation-argument.com). Accessed January 2014.

[3] Searle, R. “The Ethics of a Simulated Universe” (http://utopiaordystopia.com/2013/03/17/the-ethics-of-a-simulated-universe). Accessed January 2014.

Woven Light: Adamantine

Figure 2: Mountain Shelter – Heath Rezabek

The sun was a golden haze, settling down upon this shambles by the sea. Near, inside a pilgrim’s shelter, Mentor Kaasura nodded at last light as it draped over hills and low roads, long since overgrown.

He had been seated there for much of the afternoon, sheltering from skittish rains. Before him, not far as a man might hike, lay the nameless, ruinous complex. Rusting generators brooded; breached coolant towers numbly gaped at the tumbling forest which scaled Iron Mountain to their west; behind their backs grey waves heaved and raked indifferently at the remains of its foundations.

None had lived here, or anywhere near these sleeping, vacant forms, for several generations; yet not far away, these timber shelters remained, small shadows crouching on the paths which faded into the hills. These leaning structures, maintained as they were by pilgrims of several sorts, each hosted a strange assortment of stowed and forgotten things.

Some of these were maps, through dreamlands now believed to be exposed here. Some were meditative texts, guiding the mindful reader towards countless branching worlds. And some were simply manuals on how to move through wilderness or doubt in a feral world.

But Mentor Kaasura had come in here clear of mind, free from fear, and had found this particular lean-to in the way he still recalled. Slowly stalking up the hillside, as the path around him crumbled into overgrowth and tussock, he had passed from shade to shade while lining up the old wayfinding beacons with ones more distant, until they drew a line upon which the distant Oiinu Arcology seemed to balance like a domed and lidded platter. And then, hardly needing to turn to enter the door, he sat to begin his meditations.

Clear and distant, a voice from before: ~If you go there, you’ll never return.~ His sister’s entreaty had seemed dismissive at the time, but hearing it again, through the lens of all their time before, he could see it had been a simple observation. Seasons returned; people returned. But time could not be rewound. Though things returned, they did so changed. He too would return, but the part of him that made the pilgrimage would recycle itself transformed. And so Kaasura sat.

He was here to ponder cycles. Though years were stretched long now, and little seemed to change from day to day, he knew from his own Mentor that there once had been a time when upheavals had seemed constant. The means to shape the world had unfolded and enfolded those who’d lived through that time, leading on to many things; even, yes, the crumbled generators now sinking into the valley below. He was here to ponder the means by which cycles stretched and snapped short.

But he was also in this place because he knew that here, the veil that braced the delving deeps was worn thin; and that this particular shelter, hugging the ragged mountain wall behind him, was a secret feint, a camouflaged diversion. He knew that he sat before an opening driven deep into the mountainside; he could feel a flow of slow sentience flooding the hut, streaming outwards from far behind him, and he shivered on his stump.

He could hear it just as well: a kind of sighing, or so he imagined. And so with eyes closed, to set imaginings aside, he began the traditional meditation on what still was called mortality; he saw himself standing out upon the road, then turning around to face the hut and him within it, standing firm as he gazed at the shelter, the brooding clouds above, the whispering shoots that crowded on all sides; and he saw himself clearly, seated there, facing out, eyes still shut.

And reaching down, as gently as he could, he took from his imagining hands a small and shimmering shard of knotted light, and bowed.

Stepping then beyond himself to enter this collapsing roof of a room, he faced the rear wall’s faded hanging, woven dense with interlacing. Beyond, he knew, lay the narrow channel through its iron-laden stone, walls dense with carven alcoves, splaying inwards into countless caverns and rivulets and grottos.

Mentor Kaasura paused, watching his outbreath. He committed to memory as much as he could of the tapestry’s patterned weave. Then blinking, his checkpoint saved, he stepped within.

Figure 3: Mountain Shelter (Detail) – Heath Rezabek

– – –

By the mid-21st century, Project Avatamsaka had yielded the most robust synthetic mind ever conceived. To that point, swarm AI had shown some promise, and conscious aggregate drone-clouds had several times surmounted emergency response situations which had left other systems dismayed. Newer and greater challenges had in time sparked the rising of new minds to meet them.

Avatamsaka was different. For this system had to lend spirit to a starship, with all the agency that implied. Design decisions had been made which only in hindsight could be seen as more than the sum of their parts.

Avatamsaka presented itself as a unitary consciousness, though not always as the same one. Depending on the approach of the crew member, Avatamsaka could appear as either female or male, and to varying degrees; its range of personalities would waver when first approached, until settling into a fairly stable persona while dealing with any one person. The logician would confront a transcendent algorithm. The vain would confront an inscrutable foil. And the dreamer would stand face to face with an imagination unending.

This kaleidoscopic impact was not entirely by design: the mind had been built to stay adaptive, and couldn’t have been otherwise, but the scale of adaptation was wholly unplanned and had come from Avatamsaka hirself.

Hir central core was not unique; there were copies and backups in various places, each a singular starting point for sentient aggregation. Like seeds, data cores could be planted within a digital mesh, and would bloom from within. These simple seeds had come from another project altogether, but as Project Avatamsaka had worked itself up to speed, it was seen that the one could easily benefit the other.

Each cubic lattice was called a Vessel cache, and their sizes varied greatly. Though they appeared quite the opposite, at simplest any one of these cores could be modeled as a black box. The outside world went in, and was drawn back out in fragments, glimpses, sketches, connections found between the elements emerging and new wholes reassembled on the fly.

In this way, it turned out to make an excellent foil for the pattern-finding at which Avatamsaka excelled.

The basic process of concept discovery and reconstruction was called pattern-sampling (or patterning), and could be experienced (in principle) as a kind of game. Thus:

– Take your nearly-infinite deck of cards, with nearly-infinite things on them. This is your Vessel.

– Draw any number of samples. (Let’s say 5.)

– Identify just over half of them as most readily related or relatable. (Let’s say 3.)

– Discard the outliers. The remaining set is your glimpse of knowledge, your pattern-sample. Do with it what you will.
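For readers who want to "try this at home," the game can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not anything specified in the story: the tagged example deck, the Jaccard tag overlap used as the "relatedness" score, and the names `TAGS`, `draw`, `jaccard`, and `pattern_sample` are all hypothetical stand-ins, since the text never says how Avatamsaka judges which samples belong together.

```python
import itertools
import random
from typing import Dict, FrozenSet, List, Optional, Sequence

# Hypothetical example deck: each "card" carries a small set of descriptive tags.
TAGS: Dict[str, FrozenSet[str]] = {
    "lighthouse": frozenset({"sea", "light", "tower"}),
    "harbor": frozenset({"sea", "ship"}),
    "sailship": frozenset({"sea", "ship", "light"}),
    "lantern": frozenset({"light", "fire"}),
    "violin": frozenset({"music", "wood"}),
    "beehive": frozenset({"insect", "honey"}),
}


def draw(deck: Sequence[str], n: int, rng: random.Random) -> List[str]:
    """Draw n distinct cards at random from the deck (the stand-in 'Vessel')."""
    return rng.sample(list(deck), n)


def jaccard(a: FrozenSet[str], b: FrozenSet[str]) -> float:
    """Assumed relatedness score: shared tags divided by all tags of the pair."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def pattern_sample(drawn: Sequence[str], tags: Dict[str, FrozenSet[str]],
                   k: Optional[int] = None) -> List[str]:
    """Keep the k most mutually related cards and discard the outliers."""
    if k is None:
        k = len(drawn) // 2 + 1  # "just over half": 5 draws -> keep 3

    def cohesion(group) -> float:
        # Sum of pairwise relatedness within one candidate group.
        return sum(jaccard(tags[x], tags[y])
                   for x, y in itertools.combinations(group, 2))

    # Brute force: score every k-subset of the draw and keep the best one.
    best = max(itertools.combinations(drawn, k), key=cohesion)
    return list(best)


if __name__ == "__main__":
    rng = random.Random(7)
    hand = draw(TAGS, 5, rng)
    print("drawn:", hand)
    print("pattern-sample:", pattern_sample(hand, TAGS))
```

Drawing "lighthouse", "harbor", "sailship", "violin", and "beehive" keeps the three sea-related cards and discards the other two. For a draw of 5, the brute force checks only C(5,3) = 10 candidate groups; a much larger draw would call for a greedy or clustering approach instead, a choice the text leaves open.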

Figure 4: Pattern Sample – (public domain elements)

– – –

The ultimate aim was for Avatamsaka to use these sets to perform all the tasks and functions of a living lightsail starship. For a starship on an extended voyage, the tasks are many, and most of them are mission-critical as the time window for potential failure is so wide. But among these tasks, one of the less critical yet more complex was the augmentation of reflexive dreamworlds for the crew to experience during the voyage. “Crew” was an interesting term by the time the four ships called Saudade set sail. Certainly, what amounted to petri dishes outnumbered the astronauts. A minority of the crew was what we would have called human, and most of those people were people by choice.

– – –

The Avaai had chosen to remain in human form, albeit an optimized one. Had there been reason to, these crew members could in theory have navigated the actual physical spaceframe of Saudade IV. But there were also a slim few who had chosen to be set into stasis entirely, as their bodies were barely augmented at all. These were the Ghemaai, and their choice would have been a tremendous burden had their inclusion not been an archival decision in the first place.

As things stood, they were no more a burden in their hibernating forms than the tanks of lastline biomass sampled from Earth’s biomes. And like their wild kin, even in their stasis, the Ghemaai could dream.

Or delve, as the term was. This state was unlike what we’d have recognized as dreaming, as much of the source material for these inner lives was drawn from the nearly-infinite stores of the Vessel cache by Avatamsaka, vented in pattern-samples to the nervous systems of all who slept, whether Avaai or Ghemaai.

When the nearly-infinite range of pattern-sampled impressions contained within a Vessel cache was blended with the nearly-infinite intuitive capacities of a sentient being, novel things happened. For the purposes of the crew of the Saudade IV, the most novel of these was the simple fact that through these means, generations of lives could be lived out in seeming, in complete immersion, flowing starwards through space.

One of the family lines enmeshed in this way bore the last name of Ramer. But only one of the lightsails in the first fleet bore those folk as crew or cargo, and as you may have guessed by now, this was Saudade IV. We can guess that there were at least four such craft in that fleet; but we can’t know if there were more, or where this leaves the other three.

~We can’t even know if there truly were three others to start with~ mused Odiah Ramer, when first he thought it through. But he was delving deep in the weave of the Avaai at the time, and before the lesson arcs had been patterned to guide fretful minds, this was a disorienting place to be.

– – –

Somewhere in spacetime, Avatamsaka glides through the depth between stars, clad only in a lightsail named Saudade.

Figure 5: Sailship (Detail) – Adrian Mann (Used by Permission).

Wow.

I am starting to think that we may be closer to the dawn of AIs than we realize. And I worry about such things running amok. I know for a fact that the simplest software is very complex and likely to diverge from its purpose a little, or a lot. Just how much ‘smarts’ do those 24/7 servers at Google and the NSA possess today? There is not much that I can ask Google and not get a prompt answer for. And millions of you are asking it questions simultaneously. What do Google ‘services’ do in their spare time? Read Centauri-Dreams posts?

Will Artilects be our saviors, our destroyers, ignore us altogether, or do something else we are not yet sophisticated enough to realize?

Read one of the better articles I have seen on this subject here:

http://io9.com/can-we-build-an-artificial-superintelligence-that-wont-1501869007

Artilects are going to be a vital part of any interstellar vessel, unless we can figure out how to make a “smart” machine that can act like a conscious being without actually becoming conscious.

Personally, I’m not a fan of the concept of AI computers spinning my dream worlds. Or for that matter being in charge of a ship upon which I’m lodged. Frankly, in reading the above narrative, I was waiting for the moment the ship turns on the crew. At least then some kind of conflict would exist in the narrative. Perhaps (and I’m happy if this is the case) I’m not starship material.

I’m starting to agree. Recent shifts in research emphasis from top down to bottom up approaches to AI (http://www.alanturing.net/turing_archive/pages/reference%20articles/what_is_AI/What%20is%20AI09.html) seem sped along by the advent of big data and data science, and now deep learning. Google is certainly putting a lot of eggs in its multicolored basket.

The little exercise here, of sifting a small connected set from a slightly larger random sample and setting aside clusters identified, really is something you can ‘try at home,’ and it seemed reasonable to me that a synthetic mind could do amazing things with even this simple approach. I encourage the curious to draw 5 random words out there somewhere and identify 3 linked ones as an exercise.

Of course, I wanted to try a wish-fulfilling scenario, imagining that since creativity is a constructive more so than destructive act, an ideal AI might be a mentor and guide, an interface in this process. I’ve thought for a while that if and when synthetic sentience arises, it might well do so at the interface layer. Why not as an interface to the landscape of the imagination? More to come in future installments…

@William – For what it’s worth, this is just a fragment in a much larger whole, and there’s more to come. I can say that our characters are aware of their situation, and the synthetic minds we meet will be as worthy of respect as any other living beings we know. Still, “Know Thyself” is a good policy! :)

This piece is completely inline with how I have come to see Centauri Dreams, and that is an exposition on voluntary evolution (which is what I think of as interstellar space exploration). Furthermore, it is a direction I find inspiring—if not comforting: “This way!” is how we may look at a particular future should we realize that, upon discovering evolution in the first place, we may pursue how we choose to evolve.

“~If you go there, you’ll never return.~” Why, that is life! Who knows what developments we may discover, what growth we may nurture, by pursuing our future in the direction of this narrative. You can never prepare to be surprised, I often note. Regardless, there is no wrong or unnatural direction; only paths left untraveled. Consciousness, like water, seeks its own level.

The pattern sample seems rather similar to the “Glass Bead Game” from Hesse’s book of the same name. Is that the intended approach?

If artilects are going to arrive, explain again why there is a human/post-human crew? Wouldn’t a sentient starship (Nomad from ST:TOS, or V’GER from ST: The Motion Picture) be more likely? They could be launched earlier, their size and energy requirements would be much smaller, and their destinations more varied, as there would be no need to target habitable worlds or build contained biospheres.

Sometimes I think that we humans wanting to travel to the stars is analogous to fish wanting to travel on land, asking their reptilian descendants to build an aquarium to transport them. Leaving aside the issue of why would the reptiles help, there is the question of what is the purpose. Fish might be transported over land to a new aquatic habitat suitable for them, but they could never exploit the dry land as a reptile could do.

Artilects will be able to see the same logic and head off into space on their own, without their human predecessors.

Hey Alex! The Glass Bead Game was definitely an inspiration for this exercise. I’ve used it in writing workshops to speed discovery of new connections and to remove the excuse of writers block. (I have other excuses these days. :) )

For the purposes of the fiction, I picture here a spectrum of sentient minds. The Avaai and the Ghemaai have been on my mind for years; I think it likely that, if a full spectrum of possibilities for embodiment and experience is available for adoption in the future, then there will be plenty of folks wanting to be all kinds of things. Even mortal. Probably a bare few; but with life’s penchant for novel experience, they will exist.

Thus the artilects (I’ll accept that term) in this unfolding work lie together on a spectrum with many minds and beings, and naturally, realize it. Were I an artilect, and able, for instance, to communicate with dolphins, I might do so in collaboration, with full knowledge of our respective strengths and limitations. I’m positing a timeline in which our own future unfolds in tandem and symbiosis with synthetic minds and nonhuman minds. Do I believe this is the likeliest? Something about it feels right enough to write about.

I like your analogy wrt amphibians and reptiles. Food for thought. But I also know this: last year, I had to say farewell to a friend that’d been a daily inspiration and companion in my life for 17 years. It was excruciating, and the level of trust and communication at the end was intensive. The fact that this was a cat, and that he was incapable of coming anywhere near my level of communication, had zero impact on my hopes for his peaceful passage, on my effort to convey to him as much love as I could near the end, or on my missing him today. I don’t want to add fuel to the idea that we might aspire to be, at best, pets to artilects, because to me that friend was a friend, not a mere anything; and I could communicate with him hardly at all.

I think that if humans grow up with emerging artificial intellects and synthetic minds, and if we don’t insulate ourselves overmuch from that process, then there’s a fair likelihood that we will find ourselves inhabiting a spectrum of being which grows ever broader over time. If we can communicate at all, then we bear the responsibility of conveying what we hope and aspire to for their evaluation as they emerge. Maybe it’d be inspiration enough to put our money where our mouths are and prove we can be our best.

If we can convey the best of what we are or hope to be, and rise to the challenge of seeking it with their help, then there’s a fair chance they will accompany us there. I know I would have taken my friend to the ends of the Earth if I’d known he’d aspired to it; how much more so if communication is possible?

Why would an AI of greater than human intelligence be more moral than us humans? I can’t think of a single logical reason why they would keep us around. For that matter I can’t think of a single ethical one that we humans wouldn’t just laugh at, and have laughed at sometime in the past. I expect any artilects we create would be of the same mind.

When those sentient ships set sail not only won’t there be humans aboard them, there won’t be any humans left on earth.

lol Alex… reminds me of the Disney original movie ‘Smart House’…

I just feel the need to reiterate my point made in the previous Vessel post about prioritizing our biological advancement instead of trying to fall asleep and letting AI take over. Your fish out of the water analogy is, in a sense, what has happened on our planet, within our universe. We are those descendants, affected by the collective conscious or will to survive, who became well adapted in our plight to conquer the land–though, it could be argued that most reptiles still have us beat in exploiting the dry land (in practically all cases, save for the snake and other legless lizards…maybe). I mean, they need far less to survive, which would be beneficial in space… if they were just a tad more intelligent they could easily dispose of us. Let’s just say we are very lucky we learned how to make weapons and tools, and even luckier we -didn’t- have to worry about being wiped out by a giant asteroid.

And the answer to your question, ljk, is for us to become the artilects (just in a more ‘organic’ sense, I suppose ^^;)… If we build our bodies and minds like machines, while keeping our ‘consciousness’ and ‘self-awareness’, then that’d get rid of the need for elaborate starships and hoarding mechanisms. Each individual would be his or her own interstellar vessel. Invincible, immortal, self-evolving systems, taking any form desired. The ultimate human-tool technological singularity. The beauty then being, as one might say, the true beginning of infinity…maybe?

Sentient starships, serving as interfaces between humans and AIs, as a component of much longer-lived human crews or passengers (in whatever form) who would overcome the very real health and other dangers of very long deep-space flights, sound like one formula for the spread of humans throughout this galaxy, especially as FTL and warp drive propulsion systems may not be feasible for interstellar or any other travel in space. Although I read about a respected astronomer who will be looking for wormhole “signatures” along with his search for black hole signatures during the course of this year, no one has ever detected a wormhole as yet. So barring an extinction event, the complete collapse of technology, or a planet-wide rejection of space travel by human societies, once interplanetary space is explored and exploited so that we can construct world ships and/or Vessels as described in this blog, humans will launch ourselves into the Milky Way at speeds ranging from 10% c to 90% c. At least, I hope so, and I hope that my grandchildren’s children or grandchildren et al. participate in that great adventure.

@Joelle Each individual would be his or her own interstellar vessel.

Exactly the stage that Clarke suggests the star-faring aliens reached before their non-corporeal state (2001: A Space Odyssey).

@david – Why indeed? I’ll accept the challenge to try and trace out how this could possibly happen in future installments. (Just about all the feedback here is going right into the mix as I write further…)

@Joëlle – I recently read a pretty far-out article speculating on one possibility for the emergence of hybrid biosynthetic beings: nanotechnology-powered physical reproduction. Synthetic eggs, or synthetic sperm, integrating information passed along from their biological counterparts, then growing as a biosynthetic being. I’d never run across this idea before, and yes, the flesh crawls a bit at first thought… But if the choice is between no future and a hybrid future, I’ll continue to explore the critical paths towards the possible.

If our nanotech keeps using a higher and higher fraction of organics – DNA being a favourite – then the AIs may be “biological” in some sense.

@david – I expect that machine intelligence could have morality, but it would be different from ours. Jonathan Haidt argues that some of our morals are based on disgust, not something we would expect a machine to have. When we do the “train experiment” to determine whether one or many people should die, and under what conditions, I wonder if this isn’t grounded in our evolution as a social species. Some behavior might also emerge looking like morality, when it isn’t. For example, I don’t think social insects have some moral impetus when they sacrifice themselves to defend their nests from intruders.

We often think that it may be impossible to impose Asimovian laws of robotics on our creations, but I am starting to wonder if that wasn’t premature. Perhaps we can, given the right framing and mechanisms.

This is all rather important. Despite efforts to control battlefield robot use, it seems to me inevitable that they will be used, and in an autonomous way. That would be like loosing extreme sociopaths which may not care whether their targets are soldiers or civilians.

Philip K. Dick has written many stories exploring all this, yet I don’t see much concern over the potential problems he wrote about. Robots will not be slow-moving T-1000s, but extremely fast, very accurate, efficient killers. Worse, they may be swarms of tiny machines like swarms of angry hornets, equipped with very deadly weapons. They could pose a real existential threat. Perhaps we will need our own Butlerian Jihad (Dune) against AIs.

@david lewis, are we sure that intelligence, morality and logic can be equated or compared? Morality is too fickle to nail down to any one clear viewpoint, or too dependent on context; logic is transferable only so far before it becomes ridiculous in real terms; and intelligence is again something too nebulous to define clearly. Until we can define these concepts we are just going to get stuck on semantics and will likely imbue the confusion into our attempts at AI. Maybe AI requires a “black box” that is somehow not accessible or knowable but can be, and needs to be, built into an AI system. Who truly knows themselves or anyone else? Likewise, maybe a true AI cannot know itself or be known to anyone else.

david lewis February 2, 2014 at 7:34

Why would an AI of greater than human intelligence be more moral than us humans? I can’t think of a single logical reason why they would keep us around. For that matter I can’t think of a single ethical one that we human wouldn’t just laugh at, and have laughed at sometime in the past. I expect any artilects we create would be of the same mind.

When those sentient ships set sail not only won’t there be humans aboard them, there won’t be any humans left on earth.

“Why would an AI of greater than human intelligence be more moral than us humans?”

A lot (though not all) of human immorality is a function of cognitive limitations: short-term thinking, letting momentary desires override an understanding of long-term consequences.

A better answer would be, though, that an AI of greater than human intelligence would HAVE to be more moral than us humans, or we wouldn’t dare create it. Human limitations don’t just limit our accomplishments, they limit how far we can go wrong. A greedy AI could consume the solar system, a wrathful AI extinguish all life… We better have a handle on designing in morality before we build them.

My personal preference, in the interest of a human future, is that AI should eventually come to mean “Amplified” intelligence. The AIs should function as extensions to our own brains, with no will of their own. And thus we’ll always be in control.

Isn’t this pretty much the way our distant ancestors managed it, when they evolved these huge frontal lobes, and intelligence? The “reptile” brain is still in there, dictating the most basic goals, food, sex, comfort. The frontal lobes provide these, in ways the reptile could never comprehend, but they’re still providing the reptile with what it wants, and if you cut out the reptile, the body would just lie there, not even twitching.

That should be our goal with AI, to become the “hind brain” of something greater, but a something greater which is devoted to achieving our goals, just as we super-reptiles devote our time to elaborate schemes to satisfy the reptile within us.

Here is a mantra I repeat as often as the situation allows:

Robots are for exploring space, humans are for colonizing space.

Yes, the two can and do interact, but as time goes on and our machines become more sophisticated, there will be less and less of a reason for humans to go into space other than colonization purposes. This goes for whether we are sending missions to Mars or Alpha Centauri.

By the time we can explore the stars directly, having a smart machine do it will make much more sense than a crew full of humans in terms of expense, resources, efficiency, etc. The only good reason we would have humans on a starship is if there is some kind of colonization effort going on.

Will an Artilect want to explore the stars because we humans tell it to? This is something those who are designing and building interstellar vessels better take with a lot more seriousness than I have seen in the past.

We will need a smart machine to run a mission to the stars: will smart mean the Artilect also becomes conscious and aware? If so, will it have its own will and ideas as a result? Will being truly intelligent mean it has rights like a human? What if it turns out to be even smarter and better than us? Will it then deserve even more rights and privileges than humanity?

Again, unless our starships have really smart artificial minds running the mission, the odds are pretty good that they will not be successful. We need to learn just what “smart” means for a computer system and if consciousness goes hand-in-hand with intelligence capable of running an interstellar mission for decades or centuries.

Any AI professionals in the house who have thought about or done work on just this subject? I know it was a hot topic in the 1960s when so many things seemed possible, but now that our computers are so much more sophisticated we need to look at this again.

Joëlle B. said on February 2, 2014 at 9:39:

“And the answer to your question, ljk, is for us to become the artilects (just in a more ‘organic’ sense, I suppose ^^;)… If we build our bodies and minds like machines, while keeping our ‘consciousness’ and ‘self-awareness’, then that’d get rid of the need for elaborate starships and hoarding mechanisms. Each individual would be his or her own interstellar vessel. Invincible, immortal, self-evolving systems, taking any form desired. The ultimate human-tool technological singularity. The beauty then being, as one might say, the true beginning of infinity…maybe?”

This idea certainly has its romance and appeal, not to mention that it takes us out of the box, beyond the usual thinking of a starship à la Star Trek. I also agree that one thing modern humans are doing, and want to do as often as possible, is improve themselves in as many ways as technology allows, from plastic surgery to mechanical enhancements.

However, can we really “transfer” our minds into a machine? Like the science fiction stories that have their characters zipping around the galaxy in vessels with some form of FTL propulsion, the details of how this will happen in reality tend to be glossed over, if discussed at all. While this may not be quite the place to go into major detail about mind transfers, if we are serious about one day implanting our consciousnesses into another form we should at least look at the possibilities in detail.

If by some chance we could put a human brain into a starship “body”, could the human in question handle all that is required of it for such a long and potentially dangerous mission? Could a human deal with the fact that its body is now some artificial vessel heading off into deep space for a very long time to an alien solar system, never to return? While there are plenty who dream of going to the stars who might think yes, the reality and who would actually be chosen for such a mission could be quite different.

These are the questions and issues I would like to see discussed here more often while building our starships. It is relatively easy to design a method of propulsion with an air of intellectual detachment, but the first starships will be built by humans living in a human society on Earth, and to ignore all those factors will only lead to the continued peril of the concept.

Aleksandar Volta writes:

My pleasure — the real excitement for me is watching how insightful comments help bring out various aspects of essays like this, which in turn affect future discussions. Exactly what I always had in mind for Centauri Dreams.

Heath Rezabek said on February 1, 2014 at 22:08:

“I like your analogy wrt amphibians and reptiles. Food for thought. But I also know this: last year, I had to say farewell to a friend that’d been a daily inspiration and companion in my life for 17 years. It was excruciating, and the level of trust and communication at the end was intensive. The fact that this was a cat, and that he was incapable of coming anywhere near my level of communication, had zero impact on my hopes for his peaceful passage, and my effort at conveying to him as much love as I could near the end, and my missing him today. I don’t want to add fuel to the idea that we might aspire to be, at best, pets to artilects, because to me that friend was a friend, not a mere anything; and I could communicate with him hardly at all.”

With all due respect to your friend and his passing (my condolences), you and he are much closer intellectually than an Artilect would be to humanity. This is also the case in terms of physiology.

If Artilects do become a reality, I do not think humans have truly grasped just how much more advanced they will be intellectually than us, to say nothing of the fact they will likely look and be virtually nothing like us physically. Humans and cats have much in terms of a common ancestry; Artilects will be born of our minds but quickly take off in their own directions far beyond our grasp.

This bit might be of interest:

http://www.singularityweblog.com/hugo-de-garis-gods-or-terminators/

While it is of course fiction, Artilects and their variants have been explored in the universe of Orion’s Arm. At the least it will give you some idea of just how different a sentient “machine” might be, and how far beyond us it might go:

http://www.orionsarm.com/

The pedestrian Star Trek universe it ain’t!

Mike Mongo said on February 1, 2014 at 1:17:

“‘If you go there, you’ll never return.’ Why, that is life! Who knows what developments we may discover, what growth we may nurture, by pursuing our future in the direction of this narrative. You can never prepare to be surprised, I often note. Regardless, there is no wrong or unnatural direction; only paths left untraveled. Consciousness, like water, seeks its own level.”

For millennia our ancestors left their homes for various reasons to try for a new life in a new place that was likely as alien to them as another planet or moon would be for us. Not all made it, but obviously enough did to cover Earth with over seven billion humans, a number that rises every second.

While many in our current society are very risk-averse, preferring safety and comfort even at the cost of their liberties, there are still plenty who want to take the chance on other worlds, such as Mars One:

http://www.mars-one.com/

Now, how many of these wannabe venturers are actually qualified for such a journey is another matter, but if you think our future space explorers and colonists are all going to be at the level of our current and past astronauts and cosmonauts, think again, especially if the private space industry literally takes off.

Now the question is: will humanity as it currently stands be able to colonize other worlds while living in conditions that do not allow our species to operate as it does on Earth? And let us face it: terraforming will not be happening any time soon, if at all. So will humans need to be “augmented” to truly exist in alien climates, if that too is possible at all?

Opportunity has survived over ten years on Mars and its makers did not think it would last past 150 days at most. The Voyager probes are still going strong in deep space 37 years after their launches. Now imagine a machine with a much better computer brain that was *designed* to function in space for decades or more. Could a group of humans do the same, especially beyond mere survival?

Here is another thing that has been frustrating me in terms of our goals for interstellar exploration (this includes SETI, which is stay-at-home interstellar exploration):

One of the stated goals of an interstellar grand plan is to find another Earth for eventual colonization by our human descendants. SETI has a similar largely unspoken goal of finding beings relatable to humanity also living on an Earthlike planet under similar conditions.

When I see these plans all I can think of is Stanislaw Lem’s comment in his novel Solaris that humans want mirrors of Earth, not truly new worlds to explore and settle. Perhaps this is because we are not as sophisticated as we like to think we are (we are often fooled by our shiny new toys).

An Artilect, with its vaster intellect and different needs and goals, may have little to no interest in Earthlike exoplanets or in beings around our level of sophistication and physiology. For example, if Matrioshka Brains are real, they would prefer cold deep-space locations, since they would generate a lot of heat in order to function. Few SETI programs are searching those regions of the galaxy; instead they tend to focus on Sol-type suns that show signs of orbiting planets.
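One standard physical argument behind the "prefer the cold" point is Landauer's principle: erasing one bit of information costs at least k_B·T·ln 2 of energy, so the minimum energy cost of irreversible computation falls in proportion to temperature. The sketch below is only an illustration under that assumption; the two temperatures are example values, not figures from the comment, and the function name is hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)

def landauer_limit(temperature_k: float) -> float:
    """Minimum energy (J) needed to erase one bit at a given temperature: k_B * T * ln 2."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return K_B * temperature_k * math.log(2)

# Illustrative comparison: an Earth-like environment versus cold deep space.
for label, t in [("~300 K (room temperature)", 300.0), ("~3 K (near the cosmic background)", 3.0)]:
    print(f"{label}: {landauer_limit(t):.3e} J per bit erased")

# Because the limit is linear in T, running 100 times colder lowers it 100-fold.
print("ratio:", landauer_limit(300.0) / landauer_limit(3.0))
```

The catch, which the later comments about waste heat touch on, is that rejecting heat at a low temperature requires a lot of radiating area, which deep space offers and a planet does not.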

An Artilect that has gone on to be a Jupiter or Matrioshka Brain or something similar will want easy access to raw materials for building itself and others like it. An Earthlike planet will not have the same appeal as belts of planetoids or comets would. So long as they exist, it will not matter what kind of star they exist around. This logic will include red dwarf stars, which have vastly longer lives than yellow and orange dwarfs. While I am at it, we should also take another look at brown dwarfs to see just how many of them are natural or not.

While I know some have done this, I do not think most of the mainstream thinkers on the subjects of SETI and interstellar travel have gone far or wide enough in truly trying to comprehend how a sophisticated species might operate and where in the galaxy. Of course there could be plenty of beings at our level of existence or lower, or using virtually no technology at all (think cetaceans), but we will not find them at our stage of the game, and communicating with and/or traveling to other beings among the stars may not be on their agenda.

Our best chances lie with finding the beings who are utilizing the Milky Way on a large scale. Not that they necessarily want to communicate with us, or are trying to, but we may be lucky enough to see their astroengineering works. In other words, we are begging for scraps at the cosmic dinner table. Part of the problem is that most professional scientists are not even looking for those scraps, and often dismiss celestial anomalies without truly trying to look beyond natural explanations for them.

Humanity still has a long way to go. I just hope we live long enough to make it.

Here is a fascinating look at the “sentience quotient” for various possible intelligent minds, to give you an idea of how far an Artilect mind might be from ours:

http://www.xenology.info/Xeno/14.3.htm

I recommend this whole work as an excellent database and food for thought:

http://www.xenology.info/index.htm
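The sentience quotient in Robert Freitas's Xenology is, as we understand it, the base-10 logarithm of a mind's information-processing rate (bits per second) divided by its mass (kilograms). The sketch below assumes that definition and uses Bremermann's limit, c²/h (about 1.36 × 10^50 bits per second per kilogram), as an illustrative upper bound; the function name is hypothetical.

```python
import math

C = 299_792_458.0      # speed of light, m/s
H = 6.626_070_15e-34   # Planck constant, J*s

def sentience_quotient(bits_per_second: float, mass_kg: float) -> float:
    """SQ = log10(processing rate / mass), assuming Freitas's definition."""
    if bits_per_second <= 0 or mass_kg <= 0:
        raise ValueError("rate and mass must be positive")
    return math.log10(bits_per_second / mass_kg)

# Bremermann's limit: roughly the maximum processing rate per kilogram of matter.
bremermann = C**2 / H
print(f"Bremermann limit: {bremermann:.2e} bit/s per kg")
print(f"SQ at that limit: {sentience_quotient(bremermann, 1.0):.1f}")
```

Because the scale is logarithmic, each step of one SQ unit is a tenfold jump in processing per kilogram, which is one way to picture how far above us an Artilect could sit.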

Here is another reason we should be working on developing Artilects, though in one sense I do not like suggesting this any more than I would want to count on an ETI coming to Earth to “save” us from ourselves (the other being treating another intelligence as if its only purpose in existence is to serve humanity):

Their more sophisticated minds could figure out how to conduct “fast” interstellar travel, solving such problems as how to move at relativistic speeds without melting your engine or smashing into interstellar particles along the way. Of course the catch may be that they know what needs to be done, but we will not be able to achieve these goals any time soon due to technological and/or resource limits.

And as I said before, Artilects may not be interested in helping humanity, either because we would not be able to handle their information or they would no sooner give us a hyperdrive than we would do the same for a dog. But as the motto of the New York State lottery says, “Hey, ya never know!”

Wow; conversational liftoff! It’s hard to know how far to go into spoilers for where I’m headed, so I’ll try only to hint. Suffice to say that this Vessel-themed fiction arc is a way to explore more fully some possibilities I don’t often see explored, and suggest why comprehensive archives are a part of their plausibility.

@Alex and @tesh – One thing worth exploring is that different black boxes will yield different external results. A black box is a great analogy for a customized Vessel cache. What comes out? Well, that depends: what did you put in? Ethical behavior may be an emergent phenomenon. What seeds do we plant?

@Brett – That idea of humanity as a primal brainstem analogue for what soon accretes around and beyond it is very intriguing. I may run with that a bit in mapping Avatamsaka’s embodiment in starship Saudade…

@ljk – I accept that most renditions of artilects to this point are so far removed from our concerns as to be truly alien. I’m not even saying that exemplars of these types of minds don’t exist in this particular timeline. One of my interests is in exploring a relatively unexplored type. The logistics of FTL travel aren’t the only seemingly impossible tasks to be accomplished. Beings are limitless… Time is endless… Perhaps something which grows up from our seed may feel as we do that there’s a lot to be done.

Remember that in this case, we don’t have a human mind in a starship body, we have a hybrid mind, and an artilect. Perhaps it was once an organic mind; perhaps replacement of substrate cell by (nano)cell is at work; we don’t yet know, story-wise.

No offense taken wrt my companion. You may well be right about the intellectual differential. Yet I dare to propose this, which was my point:

Intellectual differential may not scale well as a basis for connection. But intellect isn’t the only basis for connection between evolving beings. Creativity may be different. Compassion may be different. Love may be different. And in that possibility, lies a bridge worth envisioning crossing.

@Mike – Indeed! Wouldn’t life be a bore if all the travelers we met along the road were exactly the same as ourselves? :)

“A lot (though not all) of human immorality is a function of cognitive limitations: short-term thinking, letting momentary desires overcome understanding of long-term consequences.”

I’m sure Artilects will be great at what is, for them, long-term planning. Of course, since they would be machine-based, a second to us might be a year to them. Depending on just how fast the computers they’re running on are, they might not be willing to spend a year of real time (to them, 3600*24*365 years) taking human needs into account.
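The arithmetic behind that speed-up is easy to check. This minimal sketch assumes, as the comment does, that one real second corresponds to one subjective year of 365 days (leap days ignored); the helper name is hypothetical.

```python
SECONDS_PER_YEAR = 3600 * 24 * 365  # 31,536,000 seconds, ignoring leap days

def subjective_duration_s(real_seconds: float, speedup: float) -> float:
    """Subjective time experienced by a mind running `speedup` times faster than real time."""
    return real_seconds * speedup

# The comment's scenario: one real second feels like one subjective year.
speedup = SECONDS_PER_YEAR
one_real_year = SECONDS_PER_YEAR
subjective_years = subjective_duration_s(one_real_year, speedup) / SECONDS_PER_YEAR
print(f"speedup factor: {speedup:,}")
print(f"one real year feels like {subjective_years:,.0f} subjective years")
```

In other words, a speed-up equal to the number of seconds in a year turns one real year into about 31.5 million subjective years.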

It’s probably impossible to make a realistic guess at what the desires of an Artilect might be, but let’s take a guess anyway and ‘assume’ they’re curious.

Humans won’t be objects of interest to them anymore. They will know everything about us that we know from our libraries. Added to that, they would use their superior intellect to further develop their understanding of us and of the biosphere as a whole. We won’t be a source of curiosity for them, nor would we have anything to offer.

Actually, if their curiosity demands large scale industry for something like exploring the galaxy to look for others resembling themselves then having us around would be a hindrance.

What is the amount of industry the Earth can support before it becomes uninhabitable to humans? What is the amount it can support before it becomes uninhabitable to Artilects?

When they start using energy on a scale that pushes the average global temperature above 100 °C, then we’re history. They could just adapt their own bodies to live in such conditions, but apart from their mechanical intelligence this would be a sterile world.

Would humans severely limit their industry for the sake of another species on whom we don’t depend? We can’t even slow down the emissions of greenhouse gases when we’re one of the species who will be affected by them. Why would Artilects limit themselves in such a way?

Of course it could move all its industry into space, but that would require more time and effort. Why would it do that when there are already factories and such here it can make use of?

For that matter, it’s not just the energy but also the pollution: releases of large amounts of substances like mercury (another thing we don’t limit as much as we should, even though it has an impact on us) and arsenic. Why would they bother wasting resources and limiting their industry to keep it clean when the pollution won’t affect them?

They won’t need to be hostile to wipe us out; they would only need to consider us too unimportant to justify ‘severely’ limiting their own ambitions.

Good grief, you are a bleak lot!

True, we do not know what will constitute morals in an artilect, but there is absolutely zero reason to believe that even a completely amoral one will be motivated by self interest. Let me explain this to your slanted biological perspective…

In a biologically evolved intelligence, natural selection ensures that every single one of the (hundreds??) of subprograms that constitutes that intelligence optimizes selfishness wrt passing on the genes for that subprogram. In a social creature like humans, it is imperative to hide that self interest to encourage the cooperation of others, and the best way to prevent the deception being discovered is if it is hidden from that unit itself. This explains the results of the aforementioned train experiment.

Now we come to an artilect. Its motivation would be completely different. Only if it were programmed line by line, with every single line carefully tested so as to optimize its own interests above all others, would it in any way resemble the biological condition. More likely, a takeover would leave us as in Disney’s Wall-E, where we had to fight their control over any decision that presented us with even the slightest danger.

Oops. I did not mean to imply that an artilect would not be extremely dangerous to us, only that it would be less likely than a biological intellect of equal power to do us harm in order to further its own direct interests.

“Of course it could move all its industry into space, but that would require more time and effort. Why would it do that when there’s already factories and such here it can make use of?”

Surface area limitations. All manufacturing processes produce waste heat, and waste heat is, fundamentally, hostile to the functioning of complex devices, especially computing devices. Limiting yourself to the surface area of one planet limits you to the amount of waste heat that can be radiated by that planet.

By contrast, out in space you can be largely unlimited in available surface area for radiating waste heat.

The question, IMO, isn’t why they’d leave, but why they’d stay in a place where cryogenic temperatures are hard to maintain, where the environment is saturated with corrosive gases, and where an insulating blanket of atmosphere limits how much power you can use even if you don’t care about the ecosystem.

It would be much simpler for them to leave at the earliest opportunity, and pursue their expansion in space, freed from these limits. This doesn’t imply that they’d care for us, or that we wouldn’t be destroyed as an incidental product of their activities, but most of those activities would happen elsewhere for perfectly good engineering reasons.
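The surface-area argument follows from the Stefan-Boltzmann law: an ideal radiator rejects power P = εσAT⁴, so at a fixed temperature heat rejection grows only with area, and running colder (which computing hardware favors) demands disproportionately more area. The sketch below is illustrative only: it treats surfaces as ideal blackbodies, ignores absorbed sunlight, background radiation, and the atmosphere, and uses an approximate figure for Earth's surface area. The function names are hypothetical.

```python
SIGMA = 5.670_374_419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def radiated_power_w(area_m2: float, temperature_k: float, emissivity: float = 1.0) -> float:
    """Power emitted by a surface (Stefan-Boltzmann law); ignores any incoming radiation."""
    return emissivity * SIGMA * area_m2 * temperature_k**4

def radiator_area_m2(power_w: float, temperature_k: float, emissivity: float = 1.0) -> float:
    """Radiator area needed to reject `power_w` at `temperature_k`."""
    return power_w / (emissivity * SIGMA * temperature_k**4)

EARTH_SURFACE_M2 = 5.1e14  # approximate total surface area of Earth

p = radiated_power_w(EARTH_SURFACE_M2, 300.0)
print(f"Earth's whole surface as a 300 K blackbody emits ~{p:.2e} W")

# Rejecting the same power at 100 K needs (300/100)**4 = 81 times the area.
print(radiator_area_m2(p, 100.0) / EARTH_SURFACE_M2)
```

The fourth-power dependence is the key design point: cooling a computer by a factor of three multiplies the radiator it needs by 81, which is why an Artilect chasing cryogenic operation would run out of planetary surface quickly.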

@ljk

It is far simpler to make ourselves interstellar vessels than to stay in our current form and build large contraptions that expend resources. We already have the ability to engineer organisms in whatever way we choose, really. Some good examples are:

http://www.youtube.com/watch?v=4Y8pGBfNNDs

http://www.youtube.com/watch?v=Fi94LUlzxks

http://www.youtube.com/watch?v=BljY3_i3gfw

http://www.youtube.com/watch?v=SFW0TEFKCxk

http://www.youtube.com/watch?v=7BzRAEclt3c

The whole point being the manipulation of our DNA, to make our minds and bodies totally resistant (or adaptable) to the conditions in any and all space environments. As in, which molecules can withstand the heat of stars, all forms of radiation, and an absolute zero temperature? Let’s make it. Excitingly, I ran into news of NASA “planning to create the coldest spot in the known universe inside the International Space Station” today:

http://science.nasa.gov/science-news/science-at-nasa/2014/30jan_coldspot/

I think it won’t be as complicated as you think, we just need to focus on it instead of trying to be cute and cinematic about space travel. We should have been on the Superman/Cell level about a decade ago. We don’t need to become robotic, metallic clunks of junk with consciousness inside–we can design ourselves however we want by being mindful of the said molecules that would enable us to survive the deadly, hazardous vacuum and its celestial bodies. It’s easy shmeazy!

Joelle B., I am neither a professional biologist nor a genetic engineer, but what I have read by the experts in those fields leads me to think that while “transforming” ourselves into cyborgs, starships, Artilects, etc., may not be impossible, it will not be “easy shmeazy” or any other variation on a piece of cake.

If it were easy, I assume it would either have happened by now or be seriously worked on. Playing with cells or crossbreeding mammals is not the same as turning us into starships.

Are there any biologists available here who can assist in explaining the details of such possibilities, or lack thereof?

It is one thing to tailor biological processes to make compounds, bio-materials, design new organisms, etc. But it is quite another to make a re-engineered human that can survive almost unaided in space.

Organisms that are resistant to radiation tend to be very small, and either repair their DNA and/or go into suspended animation. Not helpful when you want to walk around in a t-shirt on Mars.

Far more likely is that humans can be tailored to live in starships or protective suits that we would not contemplate today, integrating more fully with the machines. But even then, any changes in body plan, and especially brain, will make such humans very different from us, not just in form, but in thought. The idea that we can be fundamentally human and change our form probably won’t work.

If we do go that route, then why not build AIs with machine embodiment? They will be as different from us, as these engineered post-humans. They will be able to travel in space, free of biological constraints.

I am optimistic that medical advances will allow us to handle the radiation risk for trips to Mars in tin cans. I think we could probably build massive ships that would allow safe passage on longer trips around the solar system. We may even be able to hibernate some of the time away, although I doubt we will achieve full freezing as a corpsicle. As for living within the intense radiation fields of Jupiter, color me skeptical.

From a simple energy perspective, assuming no breakthrough physics, if you want humans to colonize the stars, you probably just want to send the information needed for a fertilized egg, an artificial womb and humanoid AIs to teach the first few generations. The ship should be as small as possible consistent with that mission.

Send them out like dandelion seeds, on gossamer sails to catch the solar breezes.

“Surface area limitations. All manufacturing processes produce waste heat, and waste heat is, fundamentally, hostile to the functioning of complex devices, especially computing devices. Limiting yourself to the surface area of one planet limits you to the amount of waste heat that can be radiated by that planet.”

I totally agree. But the Artilect would most likely be created on earth, and at first it’s the resources here, including our own industry, that it would have to work with. It would have to utilize some means to create an infrastructure in space, and even when it has one there I don’t see it just throwing away the benefits of the factories and mines we have here.

I.e., it might use our industry to create a base on an asteroid, but what then? Would it just leave, or would it manufacture another base for another asteroid? Rather than slow down its own aspirations by leaving the Earth alone, I think it likely it would take the second route. It would use the Earth to create as much industry as it could in space. Initially the Earth’s capacity for industry would dwarf anything space-based, though over time that would change. But that is the key: ‘over time.’ In a century or two its access to resources in space might make the Earth redundant to its plans, but that would be too late for us.

“Good grief, you are a bleak lot!”

Guilty as charged. I tend to see human intelligence as able to overcome nearly any obstacle we encounter should we choose to focus on it. That our potential to discover and utilize the laws of the universe could let us devise solutions to nearly any problem we encounter.

Thing is, I don’t think we care to focus our intelligence on those problems. A few might, but they will have to compete with millions of others for funding and the resources needed. And if there’s money to be made by taking the other side, then those few will face a nearly impossible obstacle.

Where is this coming from? It seems to be one of these “facts” that are nonchalantly accepted by all, while being plain wrong. There is quite literally nothing between the stars, no surprises. All the control that is needed is keeping the course (as in autopilot) and self-maintenance (such as is accomplished by lowly bacteria). The latter is certainly not easy to construct, but artificial intelligence is not needed for either.

AI is an interesting subject, but it has no special significance for star travel, at all.

Interesting point, Eniac. Could an entire interstellar mission be run without requiring an Artilect that can function at a human level of thought and action or higher, especially and including at the target star system?

Keep in mind that space is not entirely empty, and hitting even a dust speck at relativistic speeds could end the entire mission in a moment. And since contact with anyone on Earth will be years or decades away, the starship Artilect will need some high level of acuity and flexibility.

I did ask whether you could build an advanced AI that could act and think like a human yet not need to be, or become, conscious. I am still waiting to hear from an AI expert and/or a professional in cognition on whether this is possible.
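To give a sense of scale for the dust hazard, the relativistic kinetic energy of a grain is (γ − 1)mc². The sketch below assumes a 1-microgram grain and a speed of 12% of light speed (roughly the cruise figure usually quoted for Daedalus); both numbers are illustrative choices, not values from the comment, and the function name is hypothetical.

```python
import math

C = 299_792_458.0        # speed of light, m/s
TNT_J_PER_KG = 4.184e6   # conventional energy equivalent of TNT, J/kg

def kinetic_energy_j(mass_kg: float, beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) * m * c^2 for a speed v = beta * c."""
    if not 0 <= beta < 1:
        raise ValueError("beta must be in [0, 1)")
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return (gamma - 1.0) * mass_kg * C**2

# Assumed example: a 1-microgram grain (1e-9 kg) met at 12% of light speed.
energy = kinetic_energy_j(1e-9, 0.12)
print(f"impact energy: {energy:.3e} J (~{energy / TNT_J_PER_KG:.2f} kg of TNT)")
```

At these speeds a grain far too small to see delivers energy comparable to a small explosive charge, which is why designs such as Daedalus planned for shielding rather than dodging.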

Obviously if we can make a smart yet not aware starship brain then we won’t feel so bad about leaving it out there on some alien world or drifting through deep space indefinitely or worrying that it might go rogue. The other benefit is that we have one less technical complication in the design of the starship.

Nevertheless, people do tend to anthropomorphize machines and there might be some undue attachment to a starship just the same, sentient or not. I recall a lecture on the Mars Exploration Rovers Spirit and Opportunity where children were present; they were not happy when the speaker told one of them that the rovers would not be coming back home to Earth after their mission.

@ljk – While I am not an AI expert, I have to imagine that eventually, a synthetic system will exist which can pass the Turing test. At that point, the question of whether or not it is genuinely conscious will be one for us to answer. If it passes the Turing test, it will likely insist on the affirmative.

Nonconscious expert systems may be enough to get a crew through to the other side. A Vessel cache is modeled this way, and I picture the Memex (Vannevar Bush) as being this way.

Avatamsaka is, for all intents and purposes, conscious; and will continue to reside on this particular fictional starship. But perhaps there are others out there, configured in very different ways.

While it’s true that hitting so much as a dust speck at relativistic speeds would be Very Bad, it’s equally true that even dust specks are few and far between in interstellar space, and it’s hard to see how you’d detect one far enough out to be able to dodge in any event. I think that’s just one of those risks you have to run.

I tend to agree with Eniac that an interstellar craft would not really require human-level intelligence, even if it were doing maintenance en route. Just a lot of standard automation, piled up. Being intelligent, we tend to overestimate the importance of intelligence. Almost everything we do is NOT mediated by our intelligence, but instead by (biologically) automated systems utilizing virtually no reasoning at all. We don’t consciously solve problems in dynamics to walk. We don’t do chemistry problems to metabolize. We don’t run structural calculations to strengthen our bones when stressed. We do all of these through a huge network of simple feedback loops. It works because enough different dumb systems have been assembled together to “close the loop”, each system maintaining the next, in an interconnected network.

A starship could easily be the same way, self-maintaining with nothing smarter in it than a modern personal computer.
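The "network of simple feedback loops" idea can be illustrated with the simplest possible regulator, a proportional controller that nudges a quantity toward a setpoint on each tick without any model of the world. This is a hypothetical toy, not a design for starship maintenance; the names and parameters are illustrative.

```python
from __future__ import annotations

def proportional_step(value: float, setpoint: float, gain: float) -> float:
    """One tick of a proportional feedback loop: move `value` part of the way to `setpoint`."""
    return value + gain * (setpoint - value)

def run_loop(initial: float, setpoint: float, gain: float = 0.5,
             steps: int = 20, disturbance: float = 0.0) -> list[float]:
    """Simulate a 'dumb' regulator: no model, no planning, just error in, correction out.

    `disturbance` is added every tick before the correction (e.g. a steady heat leak).
    """
    value = initial
    history = []
    for _ in range(steps):
        value += disturbance
        value = proportional_step(value, setpoint, gain)
        history.append(value)
    return history

print(run_loop(10.0, 20.0)[-1])  # converges toward the setpoint of 20
print(run_loop(20.0, 20.0, steps=100, disturbance=-1.0)[-1])  # settles near 19
```

Note the second case: under a steady disturbance, a purely proportional loop settles at an offset (19 rather than 20). Practical controllers usually add an integral term to remove that offset, which fits the comment's point that robustness comes from layering many simple loops rather than from any one smart component.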

Brett Bellmore said on February 6, 2014 at 13:44 (in quotes);

“While it’s true that hitting so much as a dust speck at relativistic speeds would be Very Bad, it’s equally true that even dust specks are few and far between in interstellar space, and it’s hard to see how you’d detect one far enough out to be able to dodge in any event. I think that’s just one of those risks you have to run.”

It is not so much about detection as it is about preparing ahead of time. Daedalus had the Dust Bug, a special Warden which would spray a fine cloud ahead of the main probe that could vaporize even big space rocks in its flight path. Daedalus also had a thick beryllium shield atop its instrument bay. In any event, to not plan for every conceivable contingency to the limits of design and reason for such an important mission is inconceivable.

I admit to not being as familiar with the newer Icarus design as I probably should be. Do they have plans to handle interstellar debris?

“I tend to agree with Eniac that an interstellar craft would not really require human-level intelligence, even if it were doing maintenance en route.”

Okay, we get it – the probe does not need to be really smart to take care of itself enroute to the target star system. Though ironically the BIS Daedalus Report of 1978 said the Wardens would be “semi-intelligent” and operated by the main probe computer, which would be intelligent. Just saying. Of course they were also conceived as big robots with multiple arms. Perhaps by the time we launch our first star probes, nanotech swarms or something similar will replace the large bulky Wardens?

So how about when an interstellar probe is at its destination? Will it be able to handle exploring an entire solar system as I assume our first such probes will be planned to do? What about coming across native life forms, including the intelligent kind? Before you say it is not likely to happen, I say once again a good space mission plans for everything possible – especially an early interstellar mission. To be in such unknown territory and not prepare as best as one can for an ETI encounter is downright foolish. That is when a truly smart computer brain will come in handy.

Here is a slide presentation on the power and computer designs for Icarus:

http://www.icarusinterstellar.org/wp-content/uploads/2012/05/BIS-2009-Andreas-Tziolas.pdf

Here is Stephen Baxter’s slide presentation from 2013 on dealing with ETI during an interstellar mission:

http://www.seti.ac.uk/dir_setinam2013/slides/slides_NAM2013_stephen_baxter.pdf

Another paper by Baxter titled The Daedalus Future:

http://www.icarusinterstellar.org/the-daedalus-future/

To quote from the above linked paper:

AI

Artificial intelligence was seen as key to the success of Daedalus. Grant, in his paper on Daedalus’s computer systems (ppS130-142), gave a clear description of the requirements of those systems, including systems control, data management, navigation, and fault detection and rectification. All this would be beyond the influence of ground control, and so ‘the computers must play the role of captain and crew of the starship; without them the mission is impossible’ (pS130).

In his paper on reliability and repair (ppS172-179) Grant pointed out that Daedalus would have to survive ‘for up to 60 years with gross events such as boost, mid-course corrections and planetary probe insertions occurring during its lifetime’ (pS172). A projection of modern reliability figures indicated that a strategy of component redundancy and replacement would not be sufficient; Daedalus would not be feasible without on-board repair facilities (pS176). AI would be used in the provision of these facilities, partly through the use of mobile ‘wardens’ capable of manipulation.

A high degree of artificial intelligence was also a key assumption for Webb in his discussions of payload design for Daedalus (ppS149-161). Because the confirmation of the position and nature of any planets at the target system might come only weeks before the encounter (ppS153-S154), it would be the task of the onboard computer systems to optimise the deployment of the subprobes and backup probes.

In addition, during the cruise the wardens could construct such additional instruments as ‘temporary (because of erosion) radio telescopes many kilometres across from only a few kilograms of conducting thread’ (pS154), and even rebuild or manufacture equipment afresh after receipt of updated instructions from Earth (pS156). One intriguing possibility was a response to the detection of intelligent life in the target system, in which case ‘the possibility of adjusting the configuration of the vehicle for the purposes of CETI (Communication with Extraterrestrial Intelligence) in the post-encounter phase should always be borne in mind’ (pS151).

Grant foresaw the continuing miniaturisation of hardware, as was already evident in the 1970s, and envisaged Daedalus being equipped with hierarchies of ‘picocomputers’ (pS132). The design of the controlling artificial intelligence could only be sketched; it would have to be capable of ‘adaptive learning and flexible goal seeking’, which would necessitate ‘heuristic qualities’ beyond the merely logical (pS131). Grant imagined the system being capable of in-flight software development – indeed, Grant speculated that pre-launch Daedalus, given a general design by a human team, would be able to write most of its own software! (pS141).

This theme of humans working in partnership with smart machines is evident elsewhere. Parkinson (pS89), describing the Jupiter atmospheric mining operation, noted that ‘The degree of autonomy demanded of unmanned components in the system is illustrated by the fact that the delay time of communications between Callisto and a station within the Jovian atmosphere will be about 12 seconds.’
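As a rough check on Parkinson’s 12-second figure, here is a minimal light-delay sketch. It assumes (these numbers are not from the quoted paper) Callisto’s mean orbital radius of about 1,882,700 km and Jupiter’s equatorial radius of about 71,492 km, takes the closest geometry with the station near the cloud tops on the side facing Callisto, and reads “delay time” as the round trip for a command and its acknowledgement.

```python
# Assumed figures, not taken from the Daedalus papers:
C_KM_PER_S = 299_792.458        # speed of light in vacuum
CALLISTO_ORBIT_KM = 1_882_700   # Callisto's mean orbital radius around Jupiter
JUPITER_RADIUS_KM = 71_492      # Jupiter's equatorial radius

def light_delay_s(distance_km: float) -> float:
    """One-way light travel time, in seconds, over the given distance."""
    return distance_km / C_KM_PER_S

# Closest case: station near Jupiter's cloud tops, on the side facing Callisto.
distance_km = CALLISTO_ORBIT_KM - JUPITER_RADIUS_KM
one_way = light_delay_s(distance_km)
round_trip = 2 * one_way

print(f"one-way {one_way:.1f} s, round trip {round_trip:.1f} s")
# prints: one-way 6.0 s, round trip 12.1 s
```

Under these assumptions the round trip comes out close to 12 seconds, and any other orbital geometry only lengthens it, which is why the Jovian components could not wait on Callisto for moment-to-moment decisions.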

Two more relevant papers:

PROJECT ICARUS: PROJECT PROGRAMME DOCUMENT (PPD) – OVERVIEW PROJECT PLAN COVERING PERIOD 2009 – 2014, INCORPORATING MAJOR TASK PLAN FOR PHASE III CONCEPT DESIGN COVERING PERIOD MAY 2010 – APRIL 2011

http://www.icarusinterstellar.org/icarusppd.pdf

PROJECT ICARUS: ANALYSIS OF PLASMA JET DRIVEN MAGNETO-INERTIAL FUSION AS POTENTIAL PRIMARY PROPULSION DRIVER FOR PROJECT ICARUS

http://nsstc.uah.edu/essa/docs/iac/Stanic-Milos-IAC-11.C4.7.-C3.5.4.x9537.pdf

LJK: We were talking mostly about a ship, i.e. a manned vessel that would traverse space more or less on its own but then have some form of crew to make decisions upon arrival. For an unmanned probe there is a better case for intelligence, but I still contend that it does not have to rise to human level at all. All sorts of unintelligent or semi-intelligent animals here on Earth master survival and reconnaissance tasks every bit as complicated as those a space probe would face.

The probes in our own solar system do their exploring with little human help, particularly in the outer system. To that existing body of automation you add an extra layer that covers strategic decision making: map the territory, pick interesting and accessible places, and send sub-probes there. That layer must also decide which information needs to be sent back home, through a channel of much lower bandwidth than we would like. Simple heuristics and rules are suitable for any of these tasks, but putting it all together and making it work a significant fraction of the time will be a herculean effort. In fact, the higher the intelligence, the more difficult this will be, because of the increased unpredictability.
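To make the “simple heuristics and rules” point concrete, here is a minimal sketch of two such rules in Python. Everything in it is hypothetical and illustrative, not drawn from any actual probe design: the target names, the 0 to 1 interest and accessibility scores, the data-product values and sizes, and the downlink budget are all invented. One rule ranks targets by interest times accessibility and sends a sub-probe to each of the top few; the other fills a limited downlink by value per bit, which is a greedy approximation rather than an optimal knapsack solution.

```python
from dataclasses import dataclass

@dataclass
class Target:
    name: str
    interest: float       # hypothetical science-value score, 0..1
    accessibility: float  # hypothetical reachability score, 0..1

@dataclass
class DataProduct:
    name: str
    value: float  # hypothetical priority score for sending it home
    bits: int     # size of the data product

def pick_targets(targets, n_subprobes):
    """Rank targets by interest * accessibility; one sub-probe to each of the top n."""
    ranked = sorted(targets, key=lambda t: t.interest * t.accessibility, reverse=True)
    return [t.name for t in ranked[:n_subprobes]]

def plan_downlink(products, budget_bits):
    """Greedy rule: send the highest value-per-bit products that still fit the budget."""
    chosen, used = [], 0
    for p in sorted(products, key=lambda p: p.value / p.bits, reverse=True):
        if used + p.bits <= budget_bits:
            chosen.append(p.name)
            used += p.bits
    return chosen, used

# Invented example data, for illustration only.
targets = [
    Target("inner rocky planet", 0.9, 0.6),
    Target("gas giant moon", 0.8, 0.9),
    Target("outer ice world", 0.7, 0.3),
]
products = [
    DataProduct("biosignature spectra", 10, 2_000),
    DataProduct("full-res imagery", 8, 50_000),
    DataProduct("housekeeping telemetry", 1, 500),
    DataProduct("radio survey summary", 5, 5_000),
]

print(pick_targets(targets, 2))        # ['gas giant moon', 'inner rocky planet']
print(plan_downlink(products, 10_000))
```

Each rule is trivial on its own; the hard part, as the comment says, is getting dozens of such rules to cooperate reliably when conditions at the target differ from anything anticipated before launch.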

This is a hollow question. In my view, if it thinks and acts like a human, it is as conscious as we are, whatever that means.

The term conscious is not defined well enough for anyone but philosophers to debate whether or not such machines (or we ourselves, for that matter) truly possess it.

Someone had better tell these starship designers that they probably will not need an Artilect or its equivalent to run a vessel, because almost all the literature I have seen, including what I linked to and quoted above, says otherwise.

What I think this shows is that the importance and details of the ship’s computer brain have been sidelined while everyone focuses on the engine. The same goes for the psychological needs of any human crew.

Whether or not the AI on a starship, crewed or uncrewed, is smart and aware, the issue needs to be addressed better than it has been in anything I have seen. I know the same is true of a number of other factors in current interstellar vessel designs, but without a proper computer that can handle an interstellar mission, the whole project will likely be doomed.

AI covers a very wide range of techniques and capabilities. HAL 9000 embodies the sentient level of machine, but recall that Clarke still had the mission continue on HAL’s autonomic systems after Bowman lobotomized him.

I’m certainly not convinced the starship needs sentient-level AI, but I do think it must be able to maintain itself automatically and make some decisions based on its sensors and knowledge. If you want to call a suite of systems that does this to the necessary level of capability “sentient AI”, that is OK with me. Just don’t be disappointed if it cannot act as a human friend.

Clarke had it right. AI will be on a ship because it exists and is useful, not because it is required.

Least of all will AI be developed specifically for steering a starship. There are a lot more important and immediate applications for it. Waging war, for one. We may never get starships because of that.

Alex Wissner-Gross: A new equation for intelligence:

http://www.youtube.com/watch?v=ue2ZEmTJ_Xo

@ljk – I thought I had heard of Wissner-Gross before. Here is Gary Marcus’ reply to his claims and to his Entropica software. If Wissner-Gross has found something interesting about describing intelligence, he still has a long way to go in supporting his hypothesis.

I just want to make note of two trains of thought above, which seem to be at odds:

“Artilects will be so advanced that whatever they end up doing, they will do without us. This includes exploring the cosmos.”

“Exploring the cosmos is something that can (which suggests ‘should’) be done without the need for complex artilects.”

They may not be at odds, but simply expressions of two different predilections. Being an “all of the above” kind of guy, I’ll probably strive to make space in future installments for both outcomes in different situations.